D07.1 V&V report #1: Security Requirements Definition, Target Selection, Methodology Definition, First Security Testing and First Formal Verification of the Target

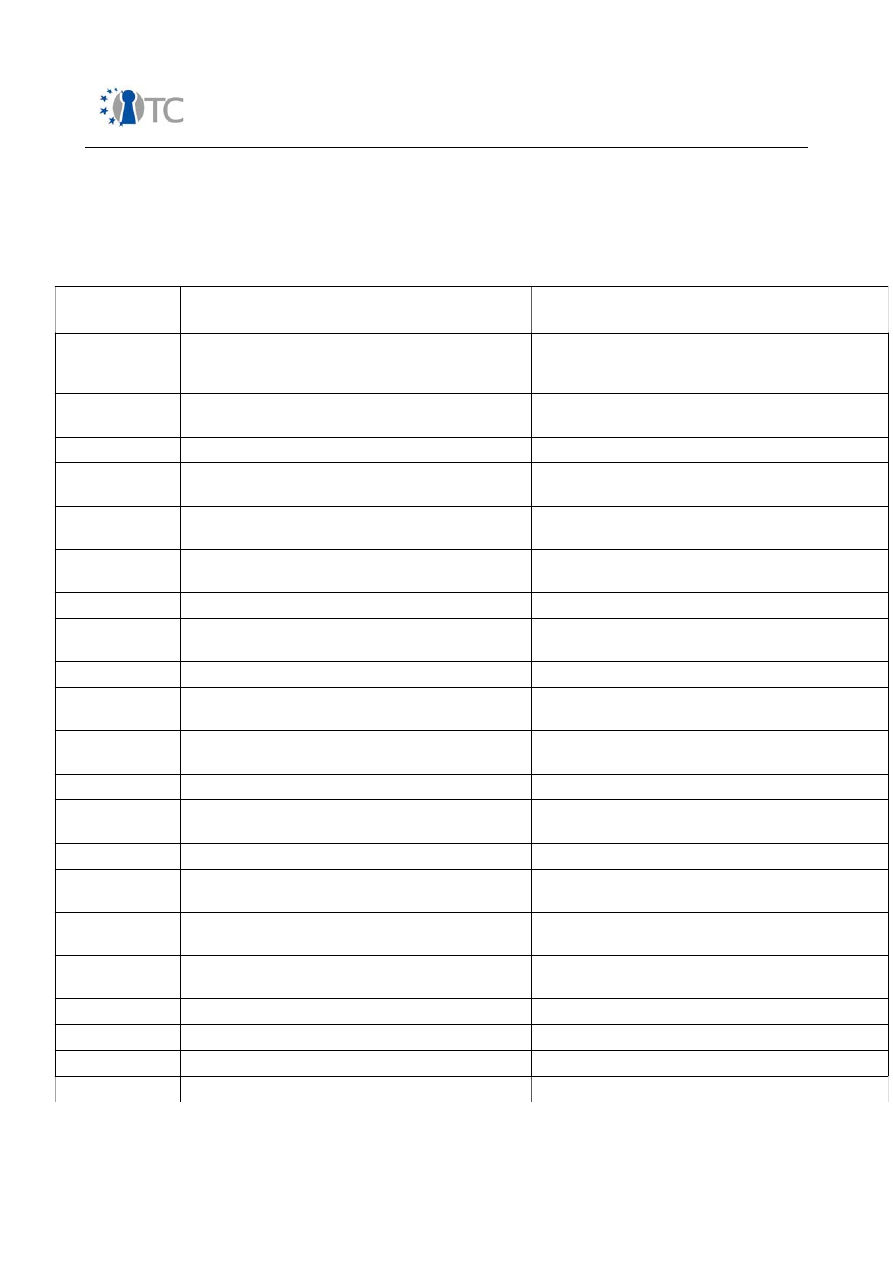

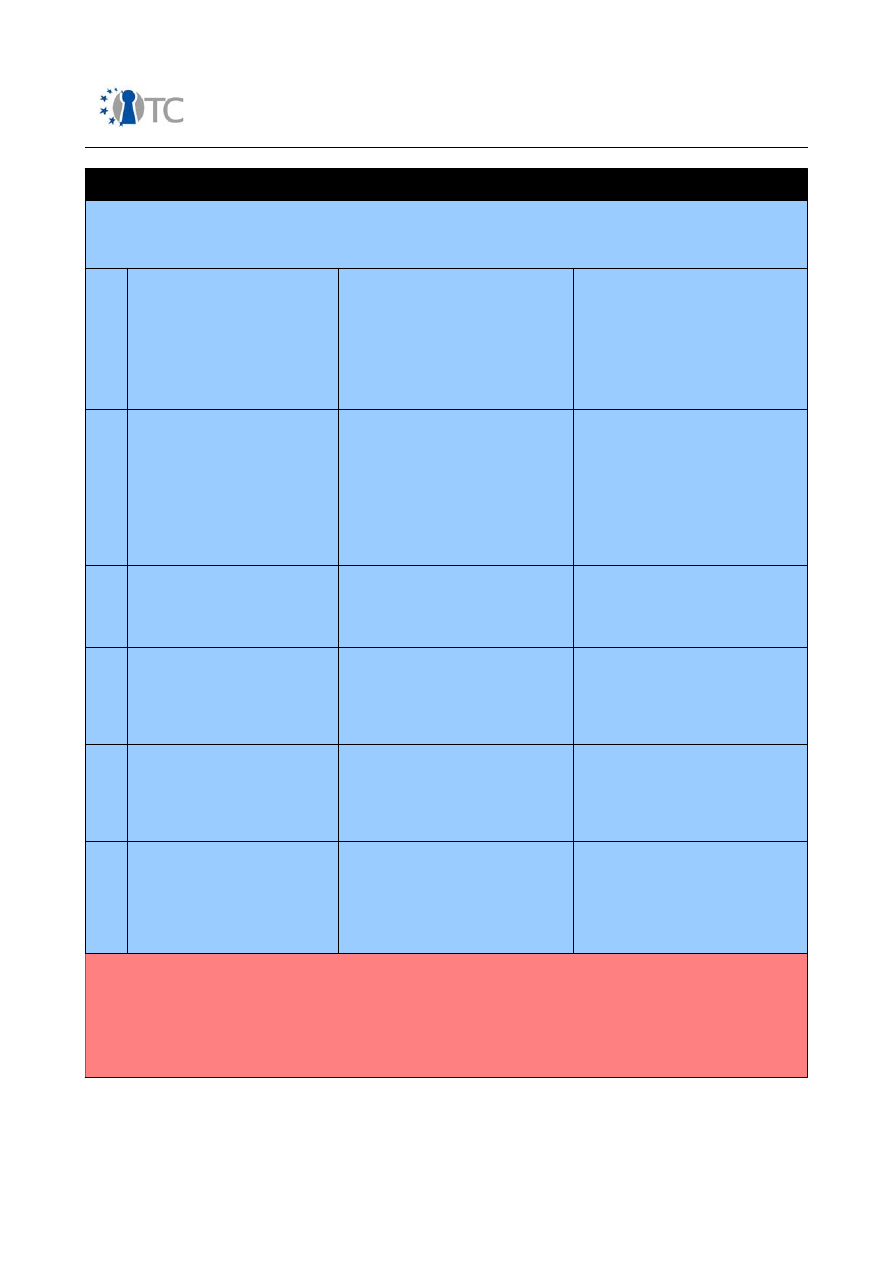

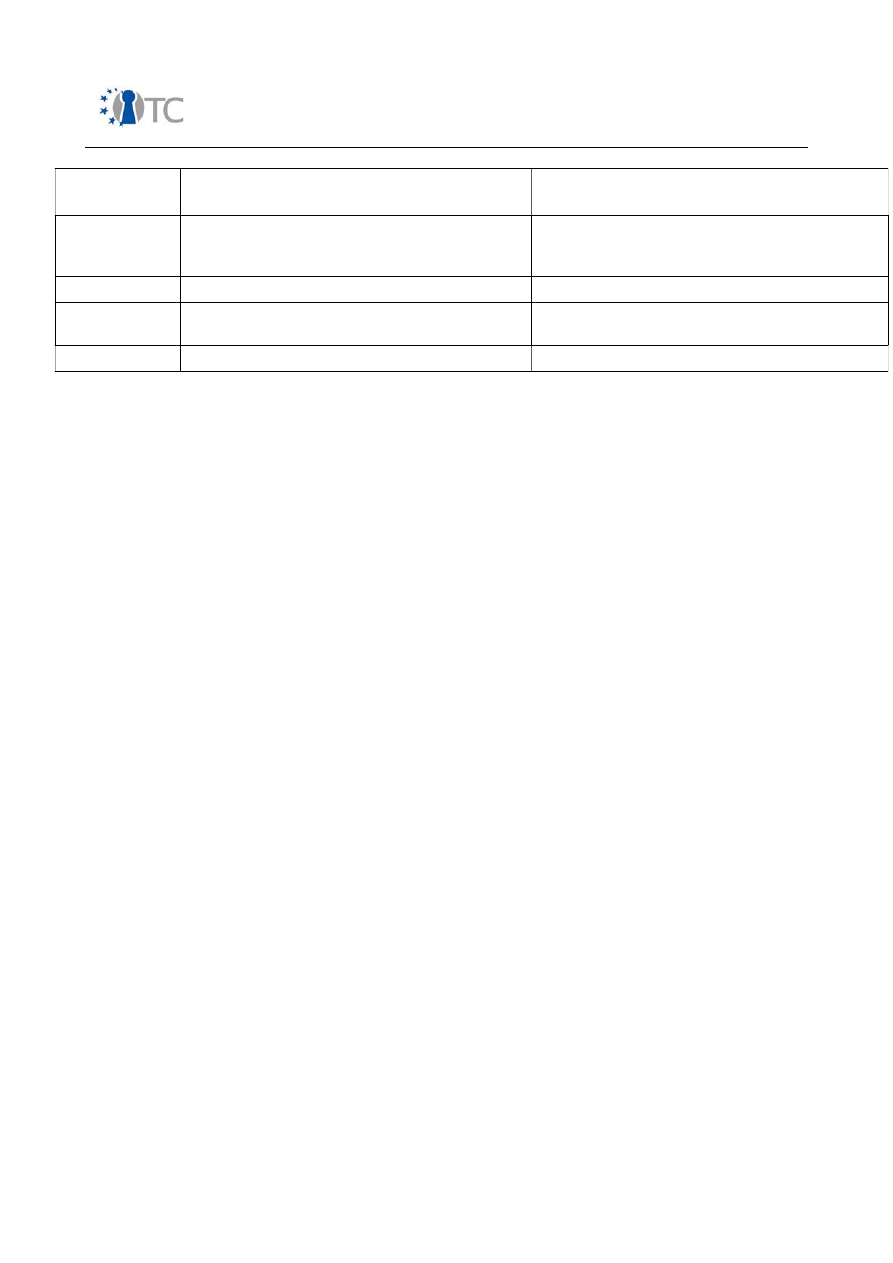

Project number: IST-027635

Project acronym: Open_TC

Project title: Open Trusted Computing

Deliverable type: Report

Deliverable reference number: IST-027635/D07.1/1.1

Deliverable title: V&V report #1: Security Requirements Definition, Target Selection, Methodology Definition, First Security Testing and First Formal Verification of the Target

WP contributing to the deliverable: WP07

Due date: Oct 2006 - M12

Actual submission date: 29 November 2006

Responsible Organisation: CEA

Authors: Pascal Cuoq, Roman Drahtmüller, Ivan Evgeniev, Pete Herzog, Zoltan Hornak, Virgile Prevosto, Armand Puccetti (eds.), Gergely Toth, Ventcislav Trifonov.

Abstract: This deliverable is the main output of WP07 for the first yearly period, i.e. from November 2005 to October 2006. It describes the main results and work in progress of all partners of WP07, i.e. ISECOM, TUS, CEA, BME and SUSE.

Keywords: Security Requirements definition, Target selection, Methodology Definition, First security testing, First formal verification of the target

V&V report #1: Security Requirements definition, Target Selection, Methodology

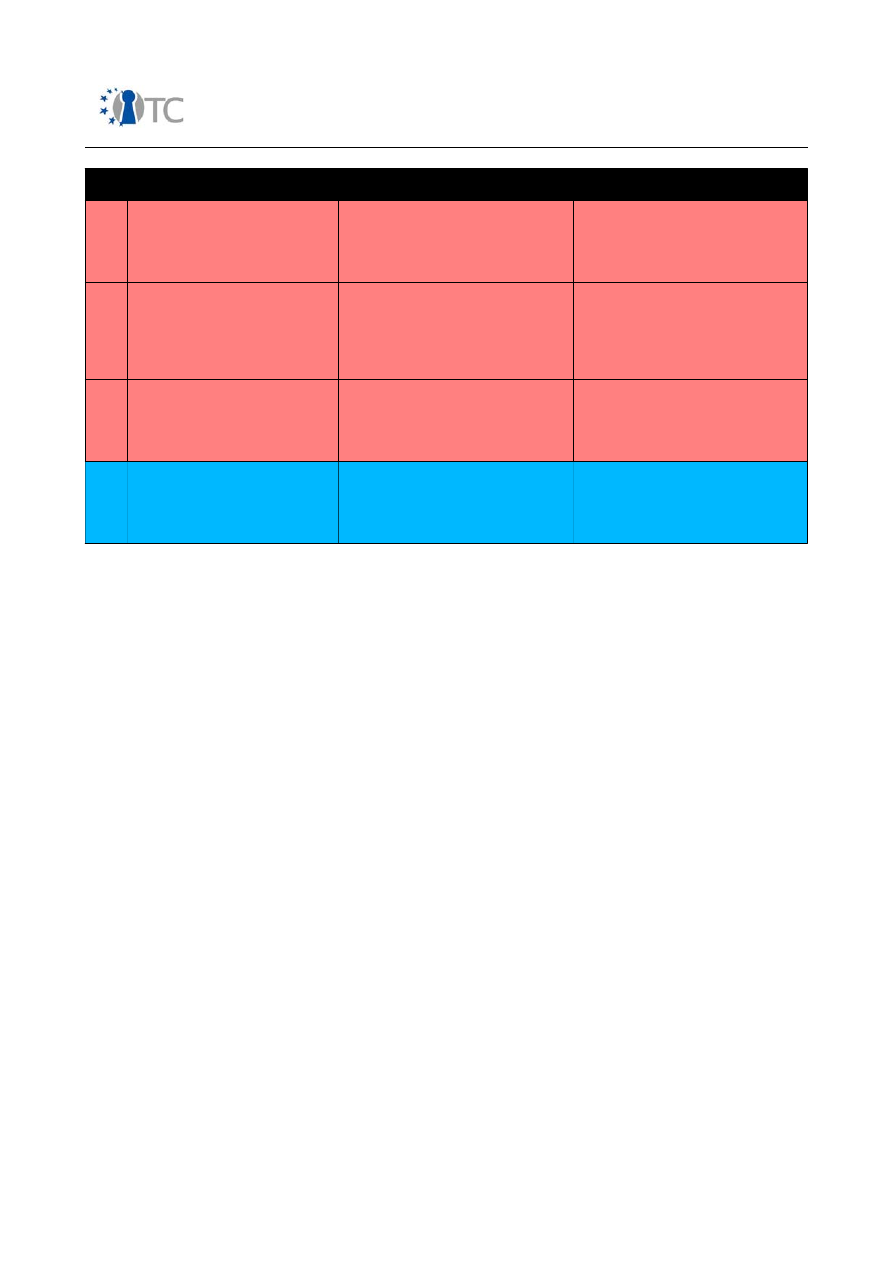

Definition, First Security Testing and First Formal Verification of the Target

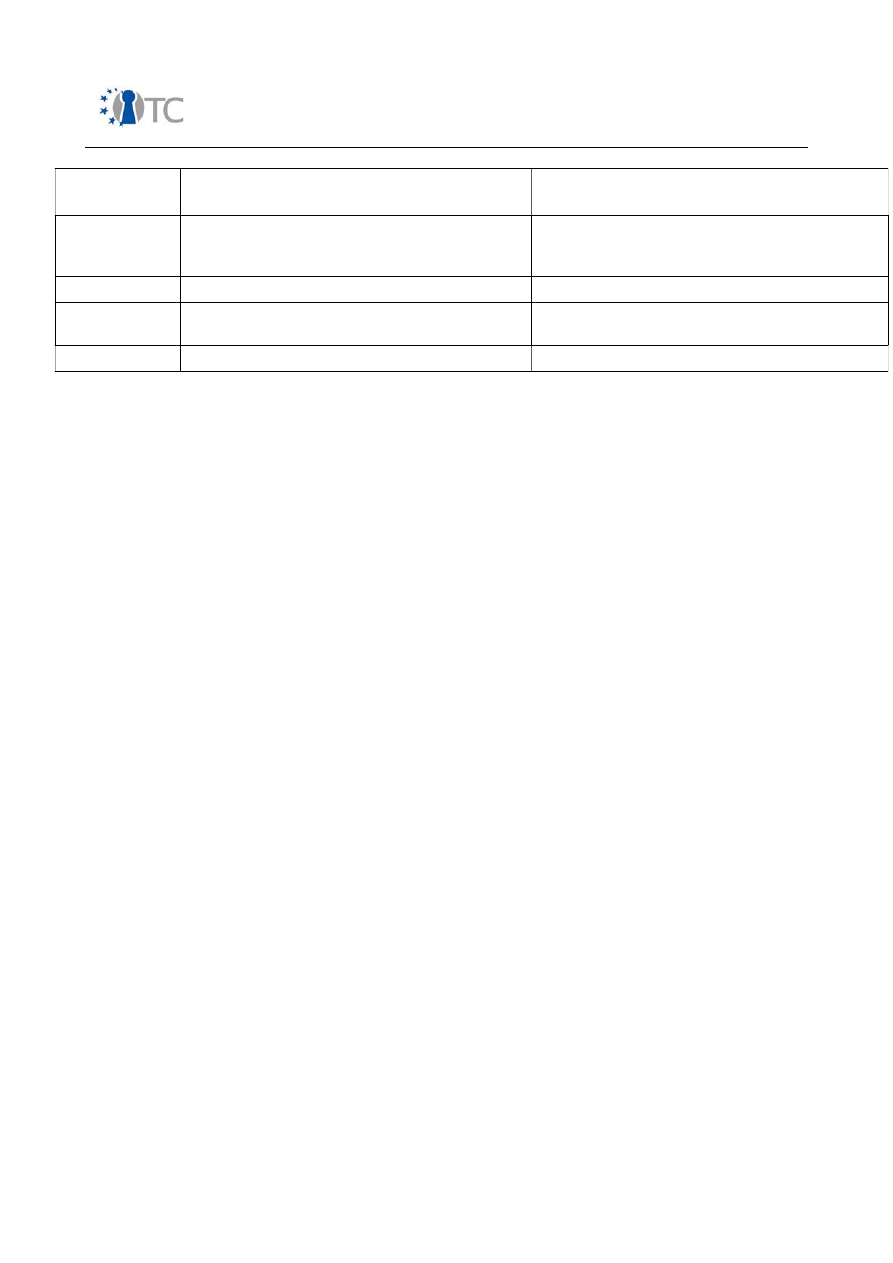

1.1

Dissemination level: Public

Revision: 1.1

Instrument: IP

Start date of the project: 1st November 2005

Thematic Priority: IST

Duration: 42 months

If you need further information, please visit our website www.opentc.net or contact the coordinator:

Technikon Forschungs-und Planungsgesellschaft mbH, Richard-Wagner-Strasse 7, 9500 Villach, AUSTRIA

Tel. +43 4242 23355-0

Fax. +43 4242 23355-77

Email: coordination@opentc.net

The information in this document is provided “as is”, and no guarantee or warranty is given that the information is fit for any particular purpose. The user thereof uses the information at its sole risk and liability.

OpenTC Deliverable 07.1


Table of Contents

1 Summary.....................................................................................................................8

2 Introduction.................................................................................................................9

2.1 Outline....................................................................................................................9

2.2 Structure of this report.........................................................................................11

3 Definition of targets...................................................................................................12

3.1 Selecting Targets..................................................................................................12

3.2 Future directions...................................................................................................12

4 Development of a security testing methodology.......................................................14

4.1 Overview...............................................................................................................14

4.2 Technical background...........................................................................................16

Security Testing Process...........................................................................................16

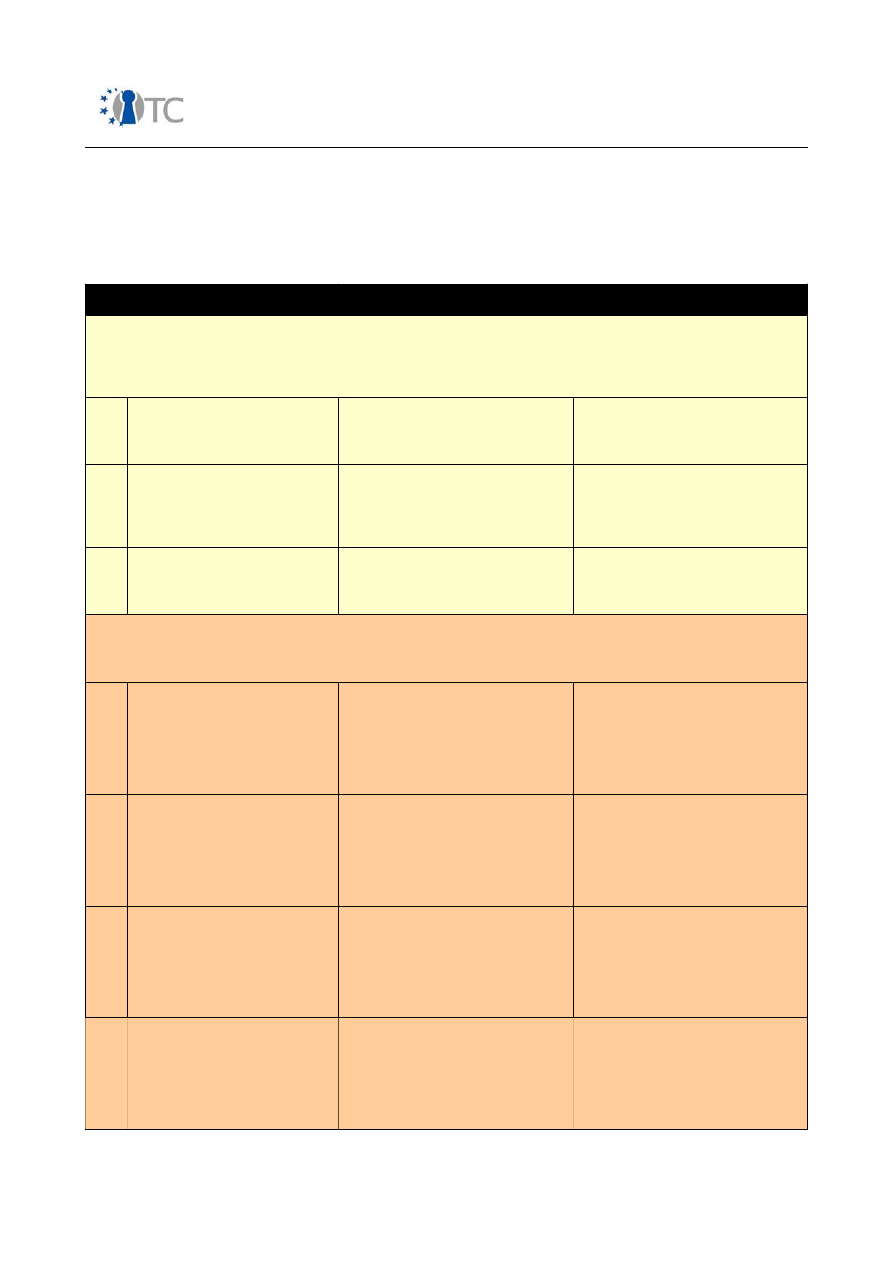

Table 1: Error Types..................................................................................................17

Operational Security Testing.......................................................................................19

Four Point Process.....................................................................................................19

Applying the Methodology..........................................................................................20

Auditor's Trifecta.......................................................................................................21

4.3 Process view.........................................................................................................23

4.4 Main results achieved...........................................................................................28

Security Metrics..........................................................................................................28

Applying Risk Assessment Values...............................................................................28

Operational Security...................................................................................................29

Controls.......................................................................................................................30

Security Limitations....................................................................................................31

Actual Security............................................................................................................33

4.5 On-going work and future directions.....................................................................33

5 Automated black-box and white box security testing................................................35

5.1 Overview...............................................................................................................35

5.2 Technical background...........................................................................................35

5.3 Process view.........................................................................................................37

5.4 Main results achieved...........................................................................................39

5.4.1 Automated test tool selection..........................................................................39

5.4.2 Automated test tool methodology adaptation for OpenTC...............................40

5.4.3 Training on OpenTC products...........................................................................45

5.4.4 Common Criteria training.................................................................................45

5.5 On-going work and future directions.....................................................................47

5.5.1 Testing of OpenTC software components.........................................................47

5.5.2 Research on test algorithms.............................................................................47

6 Development of a C code static analyser using AI.....................................................49

6.1 Overview...............................................................................................................49

6.2 Technical background...........................................................................................49

6.2.1 Static analysis versus testing...........................................................................49

6.2.2 State of the art.................................................................................................50

6.3 Process view.........................................................................................................52

6.3.1 Roadmap..........................................................................................................52

6.3.2 Achievements...................................................................................................53

6.4 Main results achieved...........................................................................................53


6.4.1 Treatment of endianness and data representation issues................................53

6.4.2 Treatment of aliases.........................................................................................54

6.4.3 Localisation of the origin of over-approximations............................................55

6.5 On-going work and future directions.....................................................................55

7 Formal analysis of the XEN target.............................................................................56

7.1 Overview...............................................................................................................56

7.2 Technical background...........................................................................................56

7.3 Process view.........................................................................................................57

7.4 Main results achieved...........................................................................................61

7.5 On-going work and future directions.....................................................................66

8 Formal analysis of the TCP/IP package of the Linux kernel........................................67

8.1 Overview...............................................................................................................67

8.2 Technical background...........................................................................................67

8.3 Process view.........................................................................................................68

8.4 Main results achieved...........................................................................................72

8.5 On-going work and future directions.....................................................................72

9 Survey and state of the art of quality analysis tools .................................................73

9.1 Overview...............................................................................................................73

9.2 Technical background...........................................................................................74

9.3 Process view.........................................................................................................75

9.4 Main results achieved ..........................................................................................75

9.5 On-going work and future directions ....................................................................76

10 Statistics of Linux kernel bugs ................................................................................77

10.1 Overview ............................................................................................................77

10.2 Technical background ........................................................................................77

10.3 Process view ......................................................................................................77

10.4 Main results achieved ........................................................................................77

10.5 On-going work and future directions ..................................................................78

11 CC EAL5+ certification study..................................................................................79

11.1 Overview.............................................................................................................79

11.2 The Open Source Software development model and Common Criteria

Evaluations.................................................................................................................79

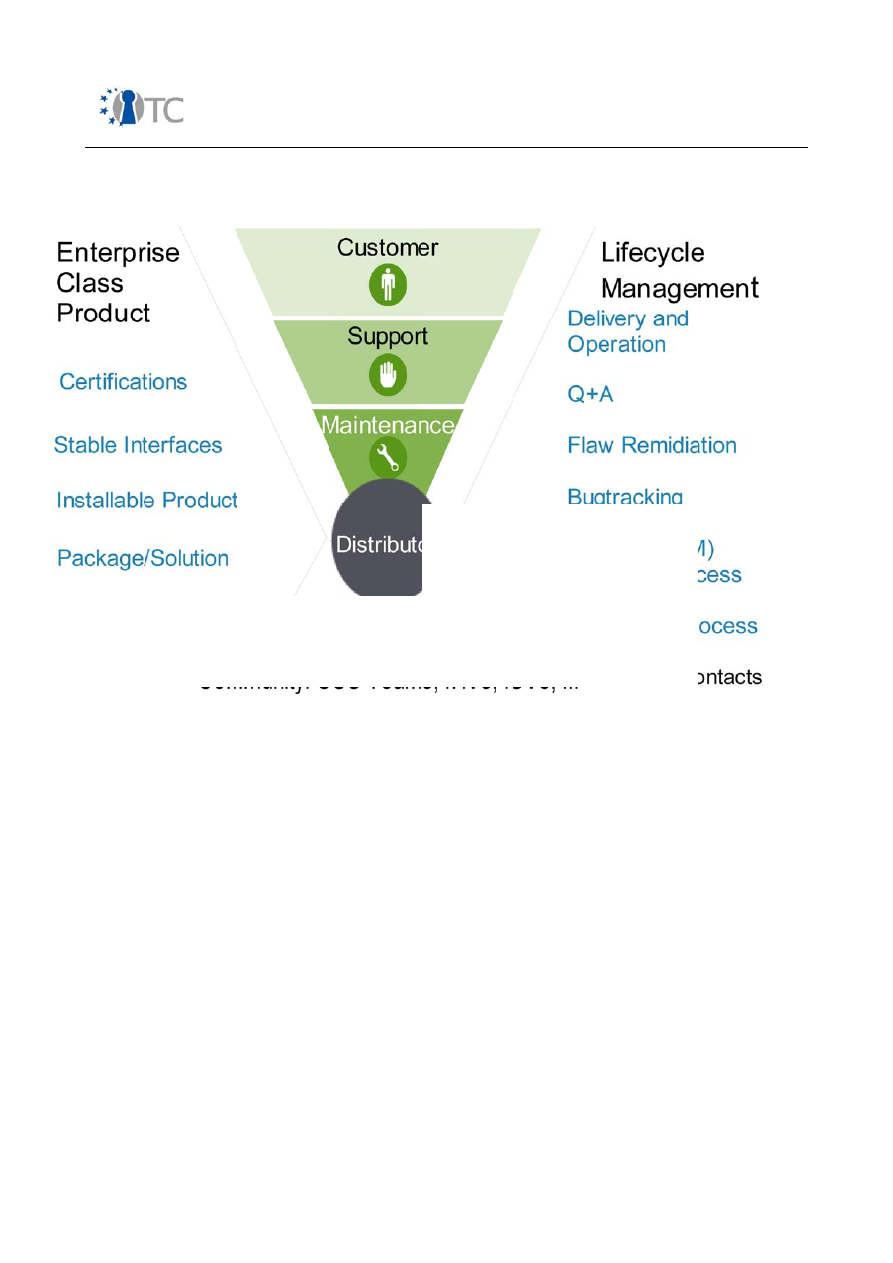

11.3 Derived consequences for the OpenTC consortium............................................82

11.4 Challenges..........................................................................................................84

11.5 Scope of the Evaluation, TOE and ST specification.............................................86

11.6 Caveats, Recommendations...............................................................................88

11.7 On-going work and future directions...................................................................89

12 References...............................................................................................................90

13 List of Abbreviations................................................................................................91

1 Appendix. Survey of code quality analysis and static analysis tools.........................94

1.1 Source Code Analysis Tools.................................................................................94

1.1.1 CodeSurfer from GrammaTech. (http://www.grammatech.com)......................94

1.1.2 CC Rider from Western Wares Llc. (http://www.westernwares.com)................94

1.1.3 Cleanscape C++Lint from Cleanscape

(http://www.cleanscape.net/products/cpp/index.html)..............................................94

1.1.4 Sourceaudit from FrontEndArt Ltd.

(http://www.frontendart.com/sourceaudit_notes.php)...............................................95

1.1.5 Telelogic Tau Logiscope from Telelogic (http://www.telelogic.com)................95


1.1.6 Splint from Splint Co. (http://www.splint.org/)

1.1.7 Understand for C++ from Scientific Toolworks Inc. (http://www.scitools.com/)

1.1.8 CTC++: Test Coverage Analyzer for C++ from Verifysoft GmbH. (http://www.verifysoft.com/en.html)

1.1.9 QA C++ from QA Systems. (http://www.qa-systems.com/)

1.1.10 UNO from Spinroot (http://www.spinroot.com/uno)

1.1.11 Surveyor from Lexient Corp. (http://www.lexientcorp.com/)

1.1.12 CodeWizard from Parasoft (http://www.parasoft.com/)

1.2 Static Source Code Analyzers

1.2.1 Coverity Prevent from Coverity Inc. (http://www.coverity.com/)

1.2.2 K7 from Klocwork Inc. (http://www.klocwork.com/)

1.2.3 Polyspace from Polyspace technologies (http://www.polyspace.com/)

2 Appendix. Linux errors

2.1 References: documents and specifications

2.2 Forums and mailing lists

2.3 Errors list

3 Appendix. DoS SCN Sequence Diagrams


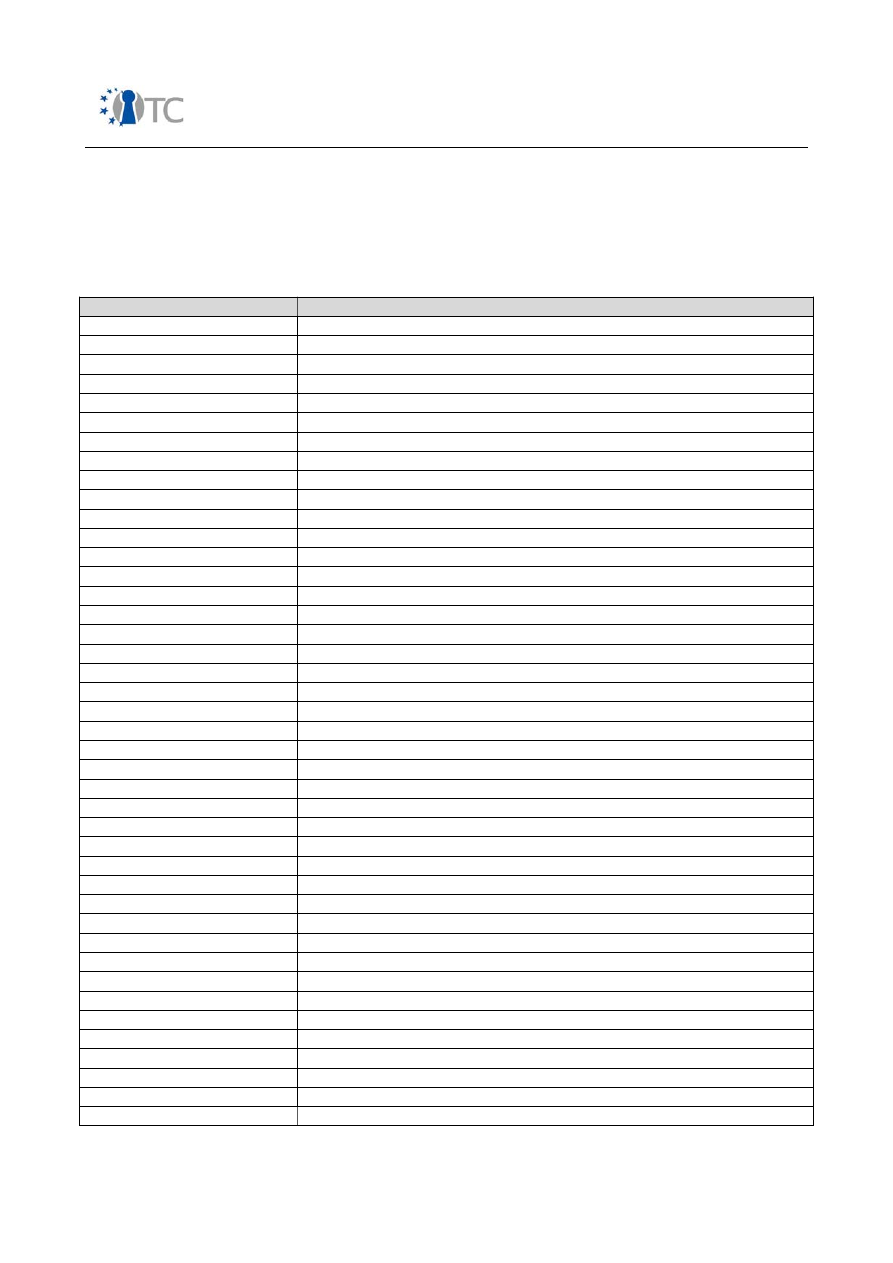

List of figures

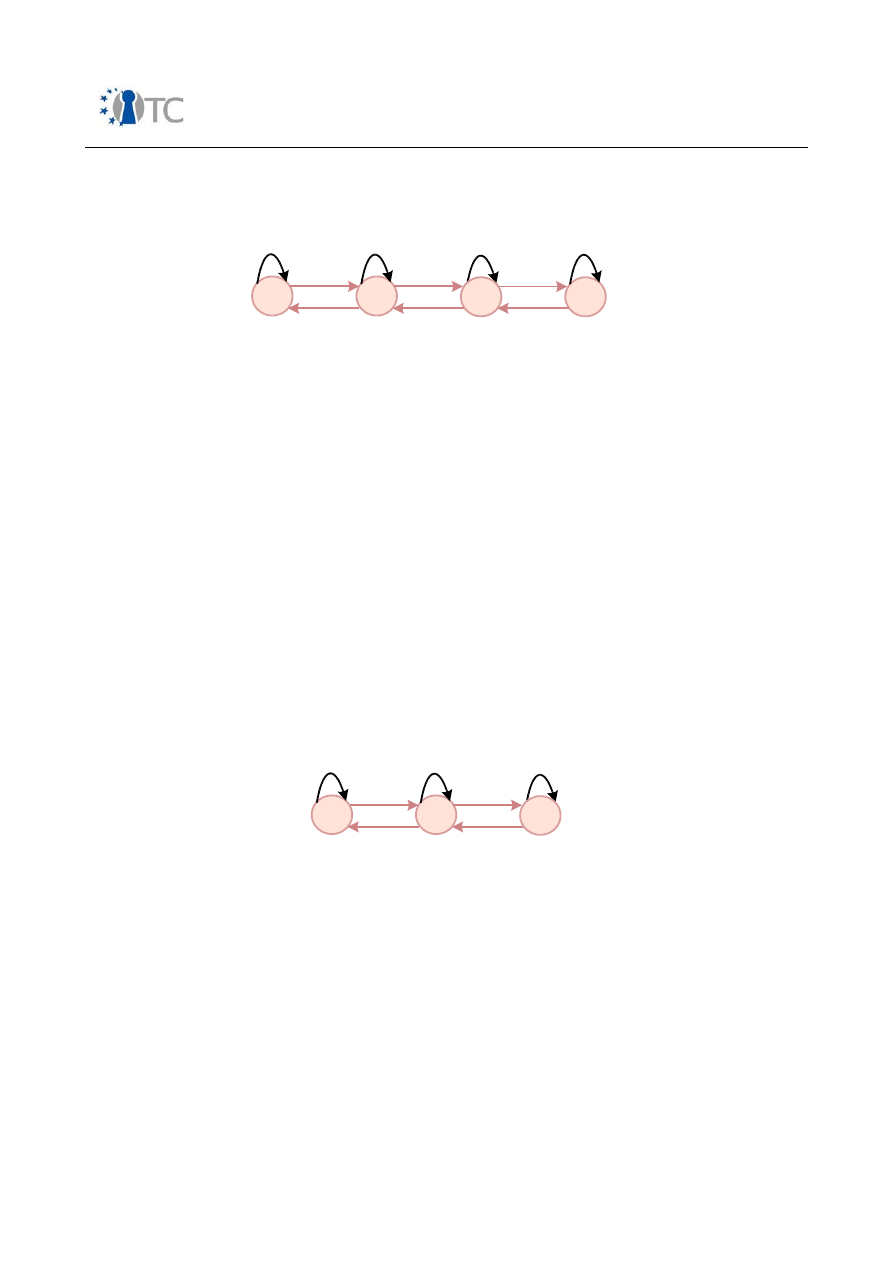

1. Figure: Methodology Application..............................................................................20

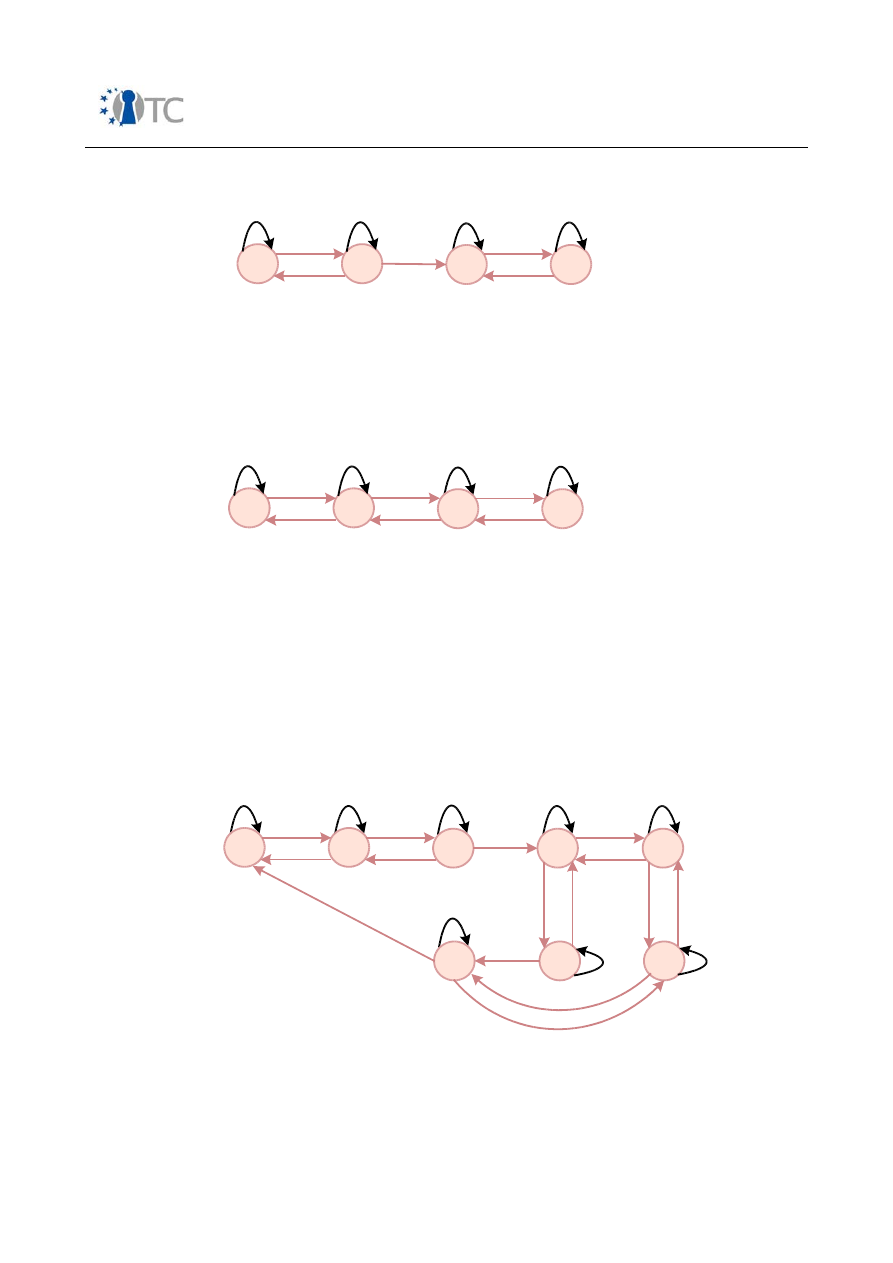

2. Figure: Test Modules................................................................................................24

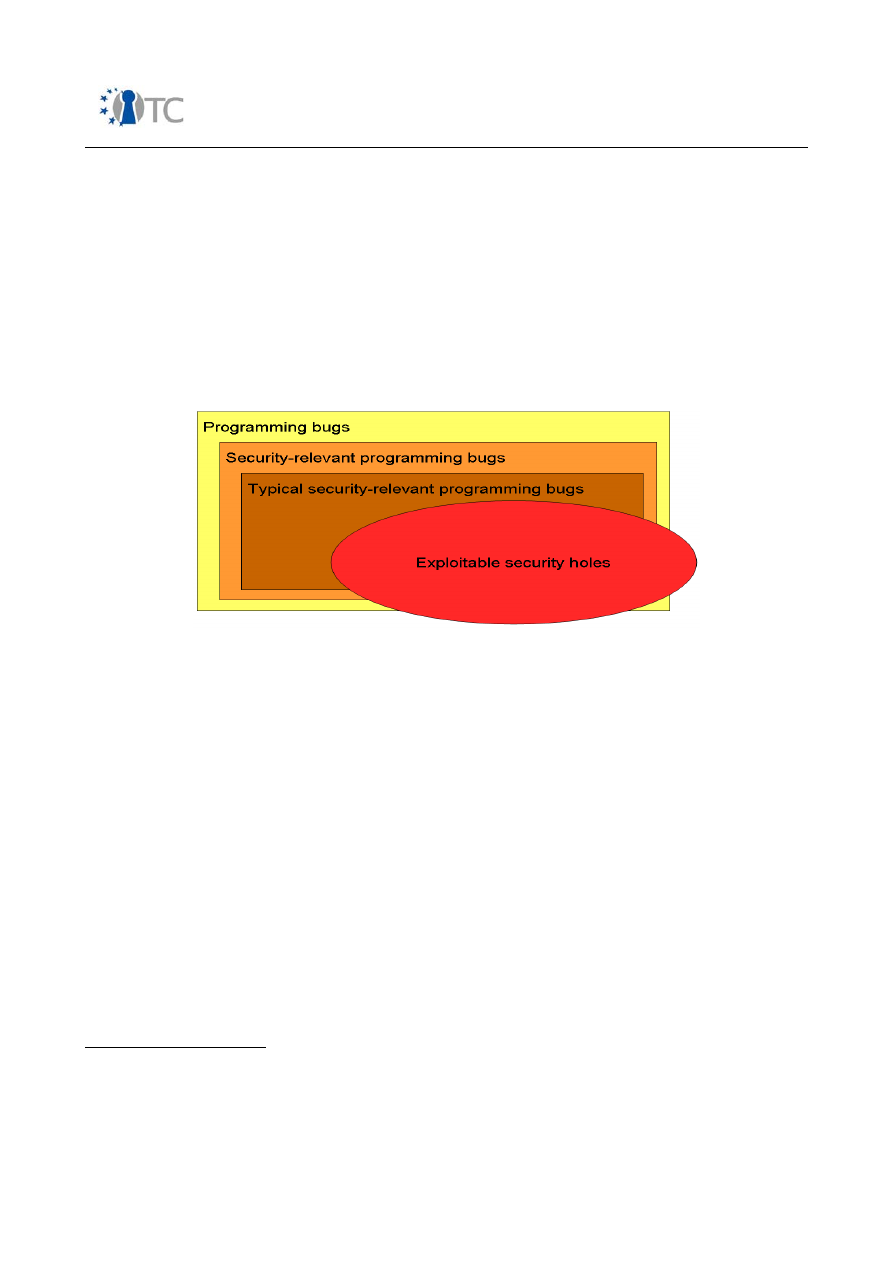

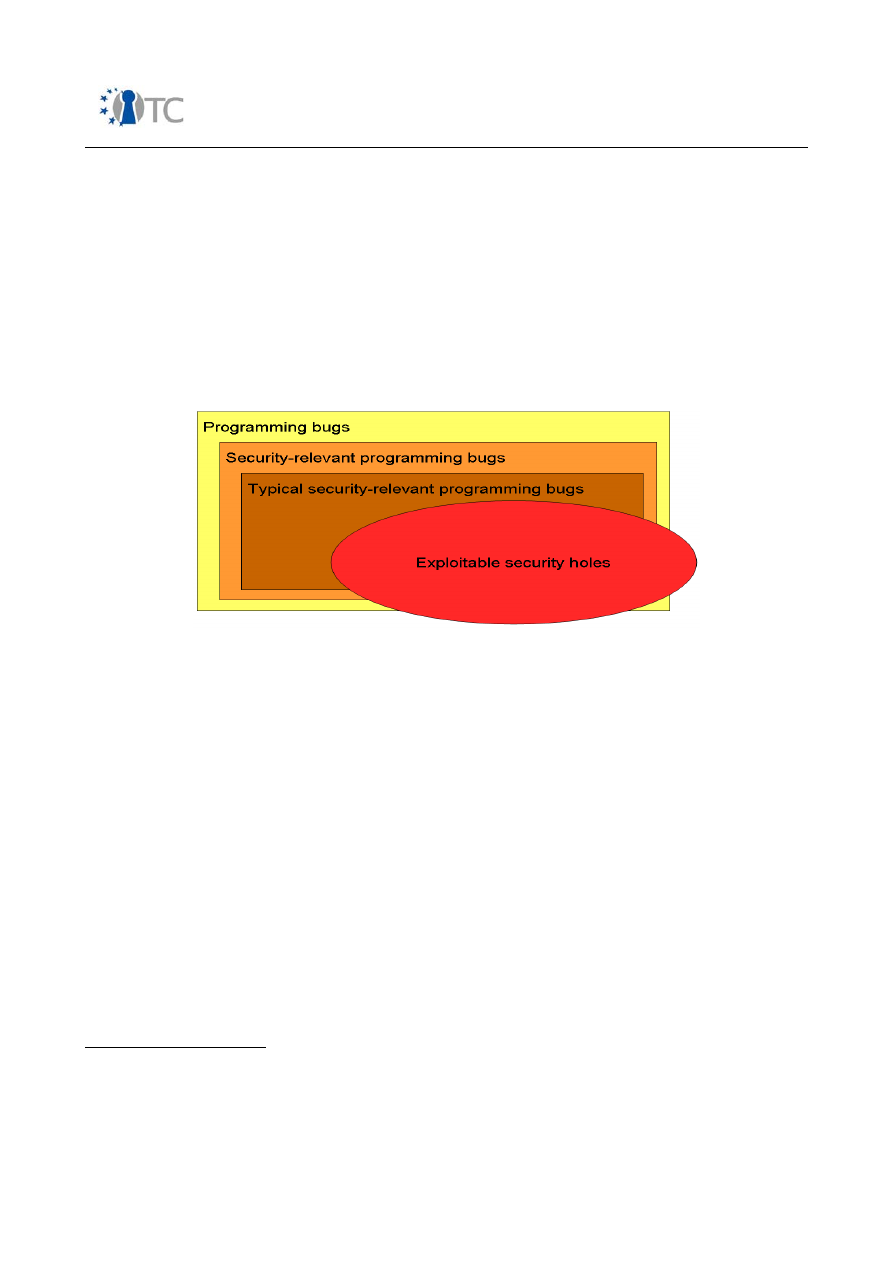

3. Figure: Classification of programming bugs.............................................................36

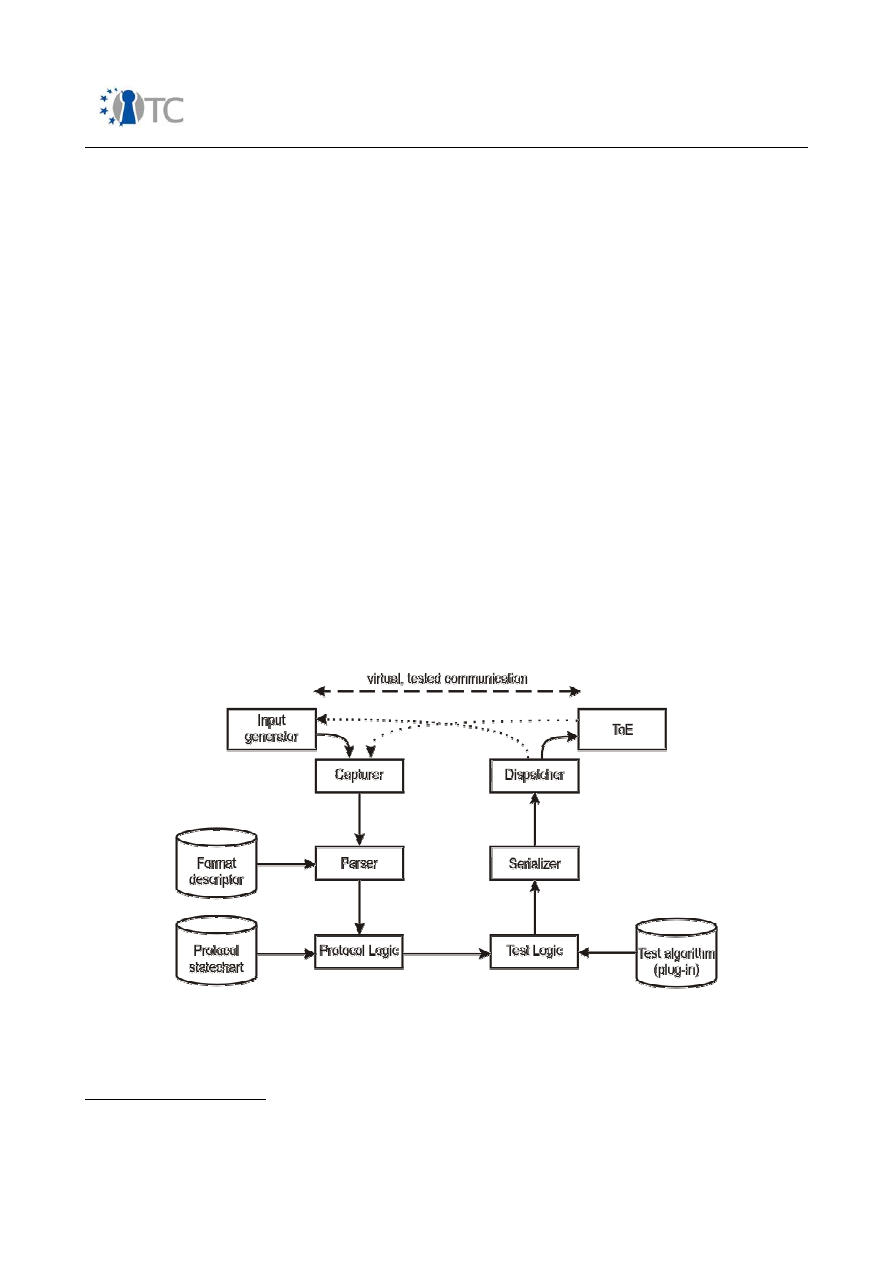

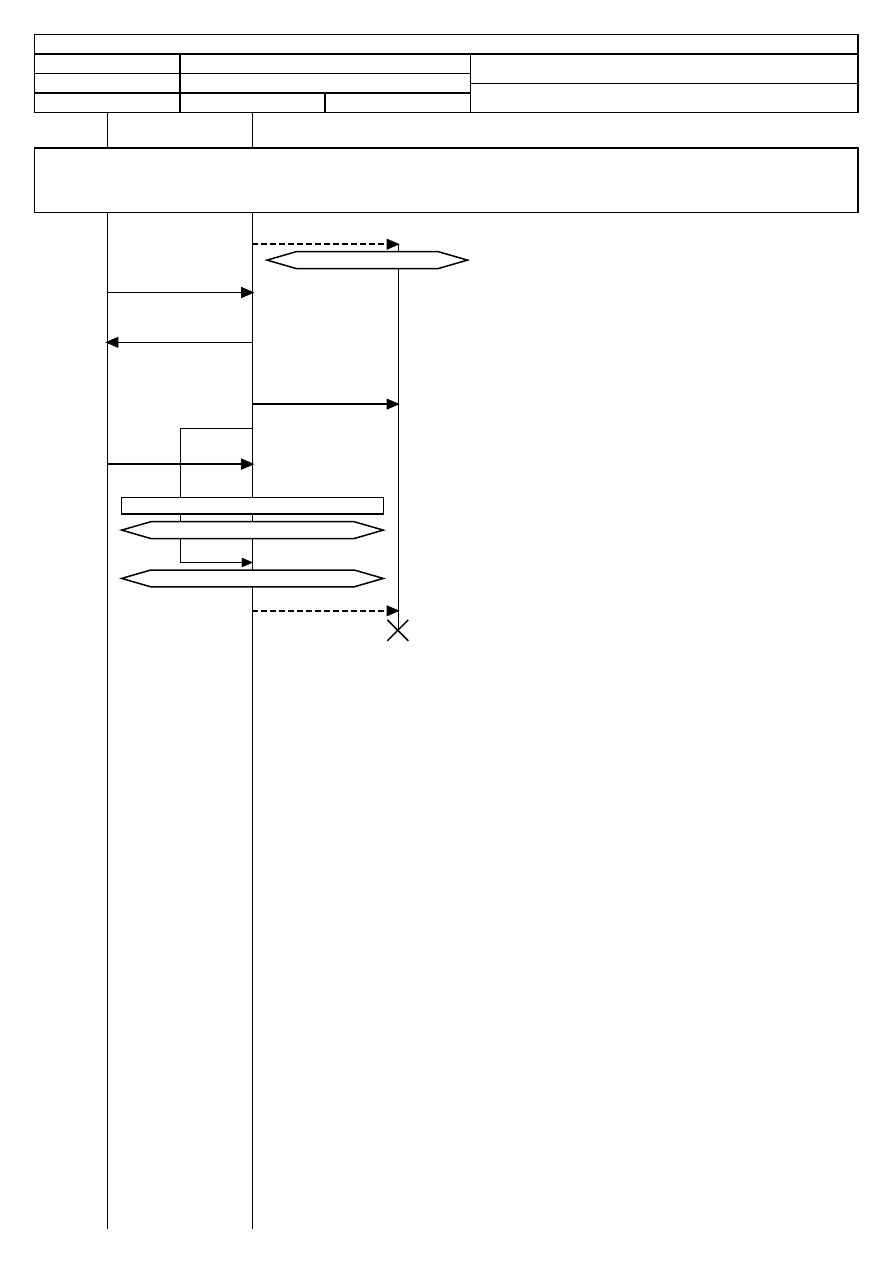

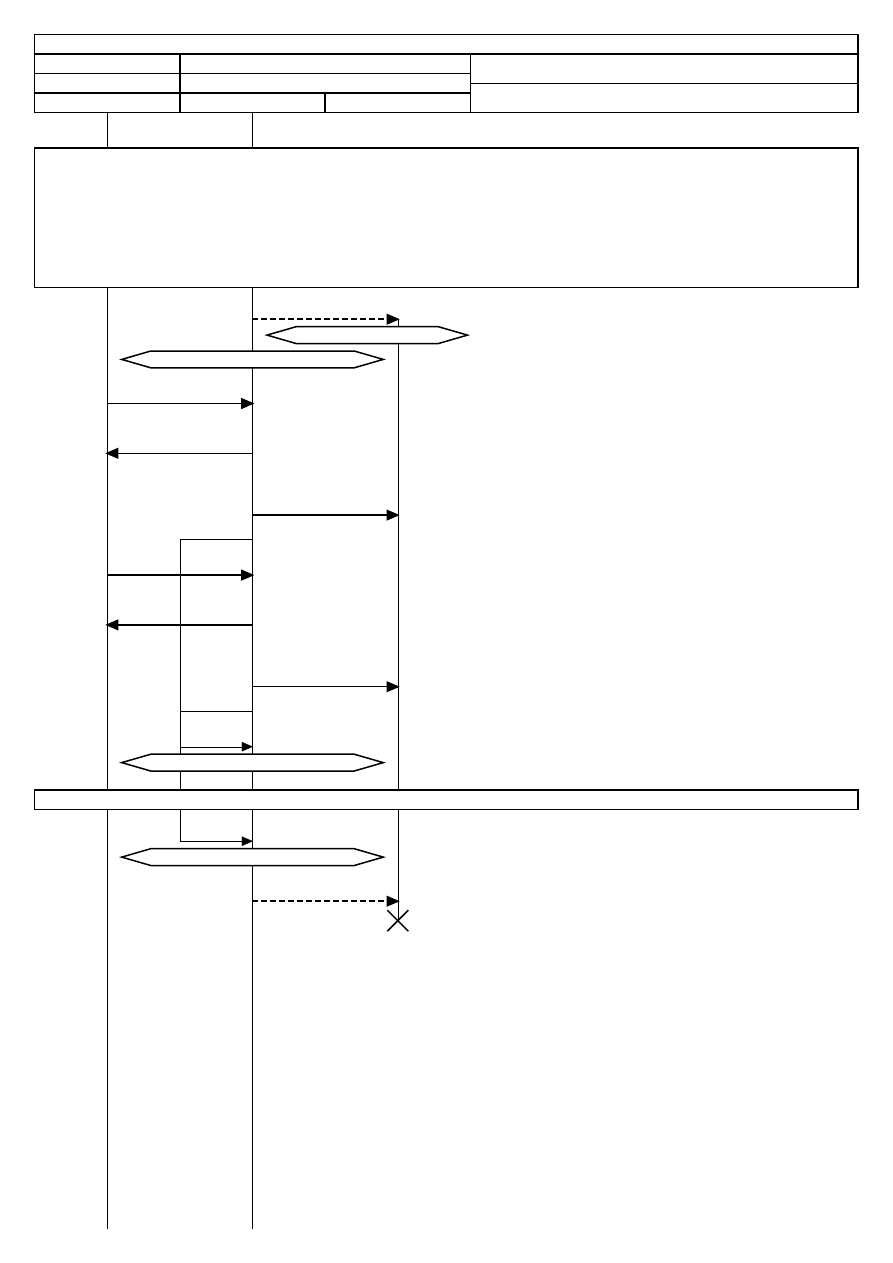

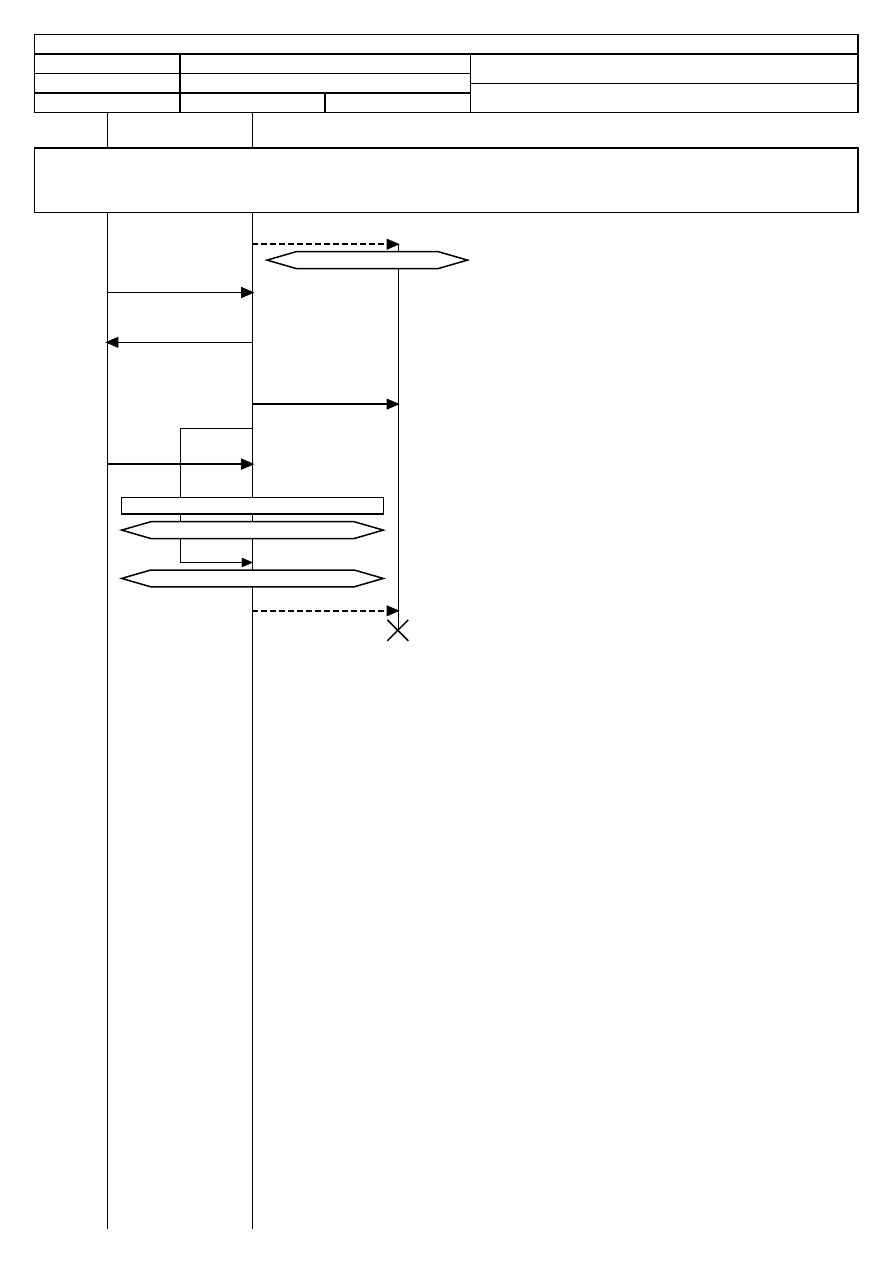

4. Figure: Black-box scenario........................................................................................42

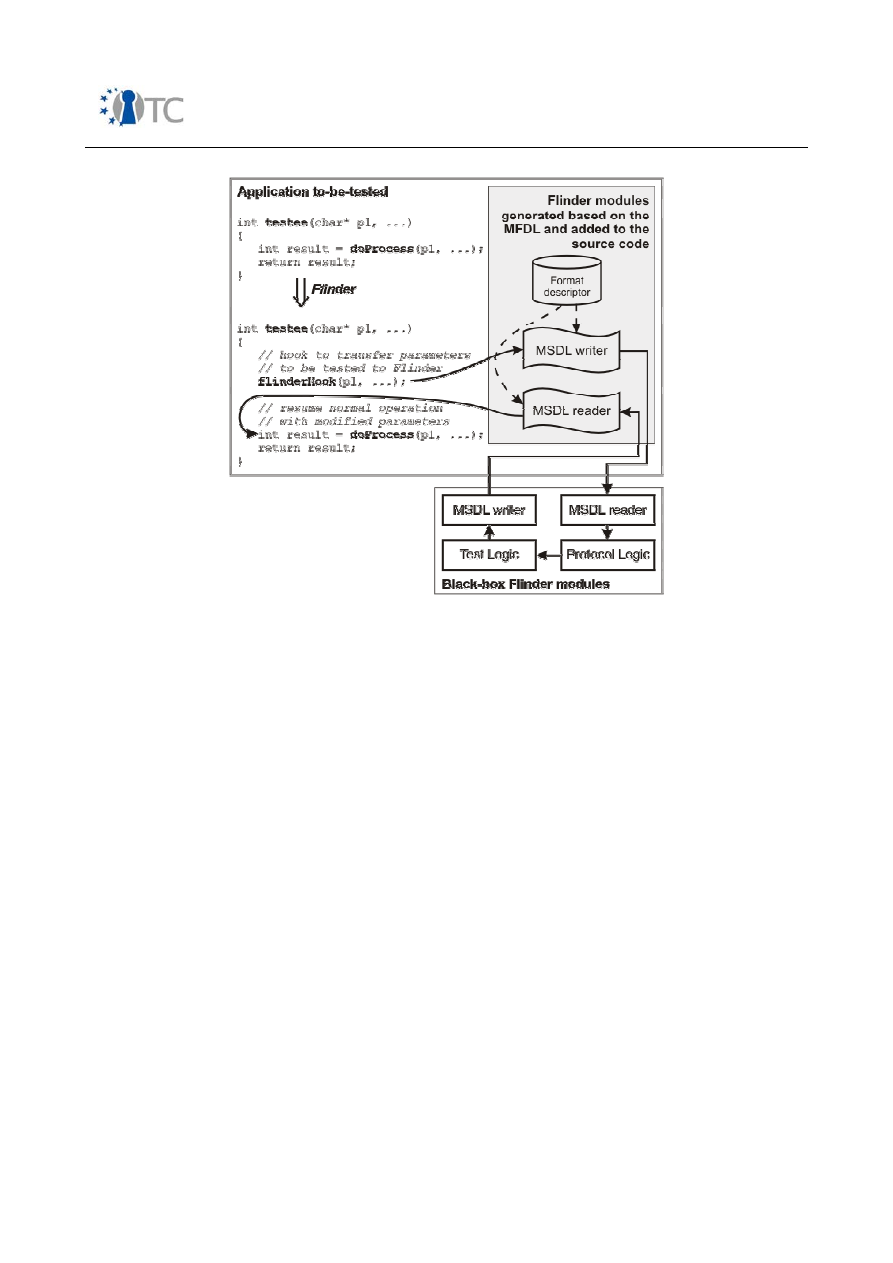

5. Figure: White-box scenario.......................................................................................44

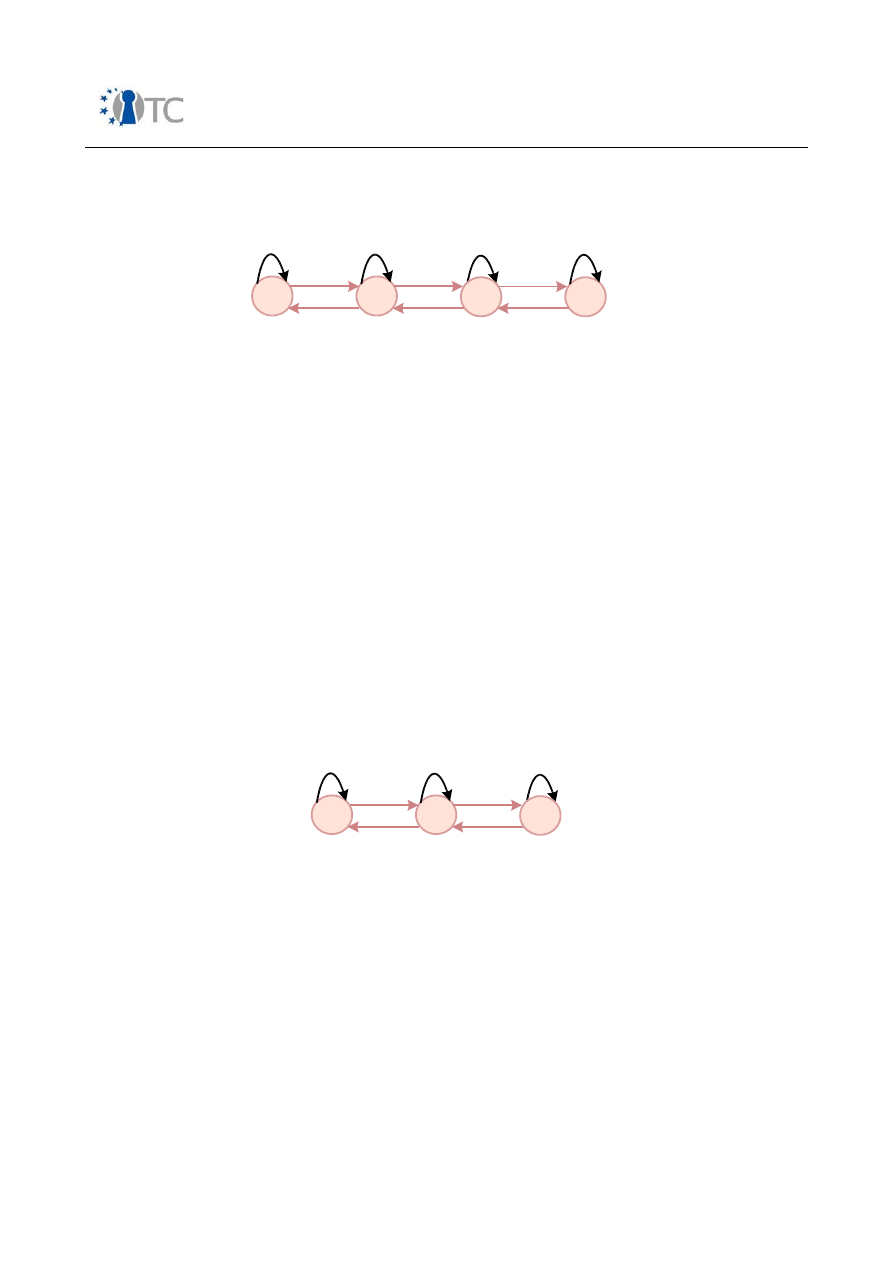

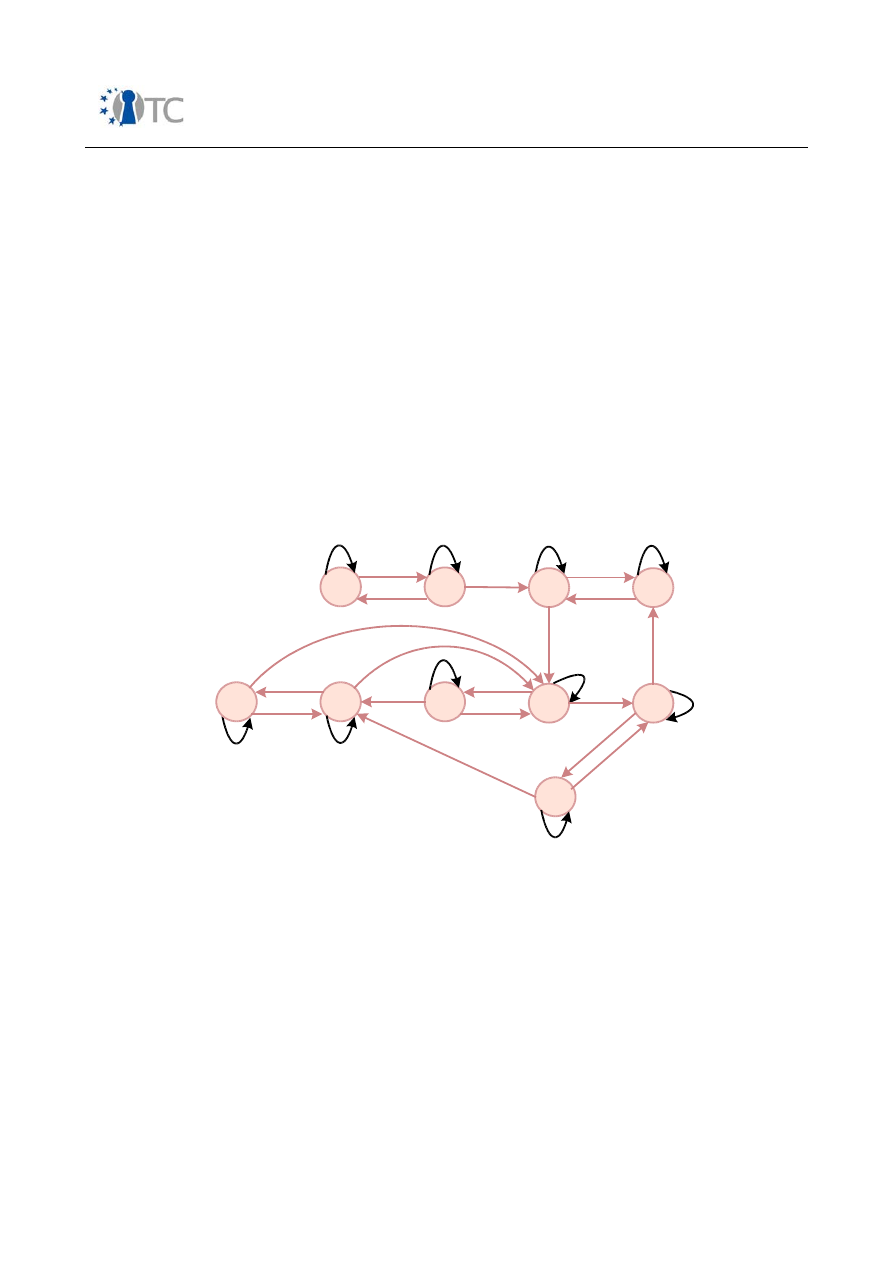

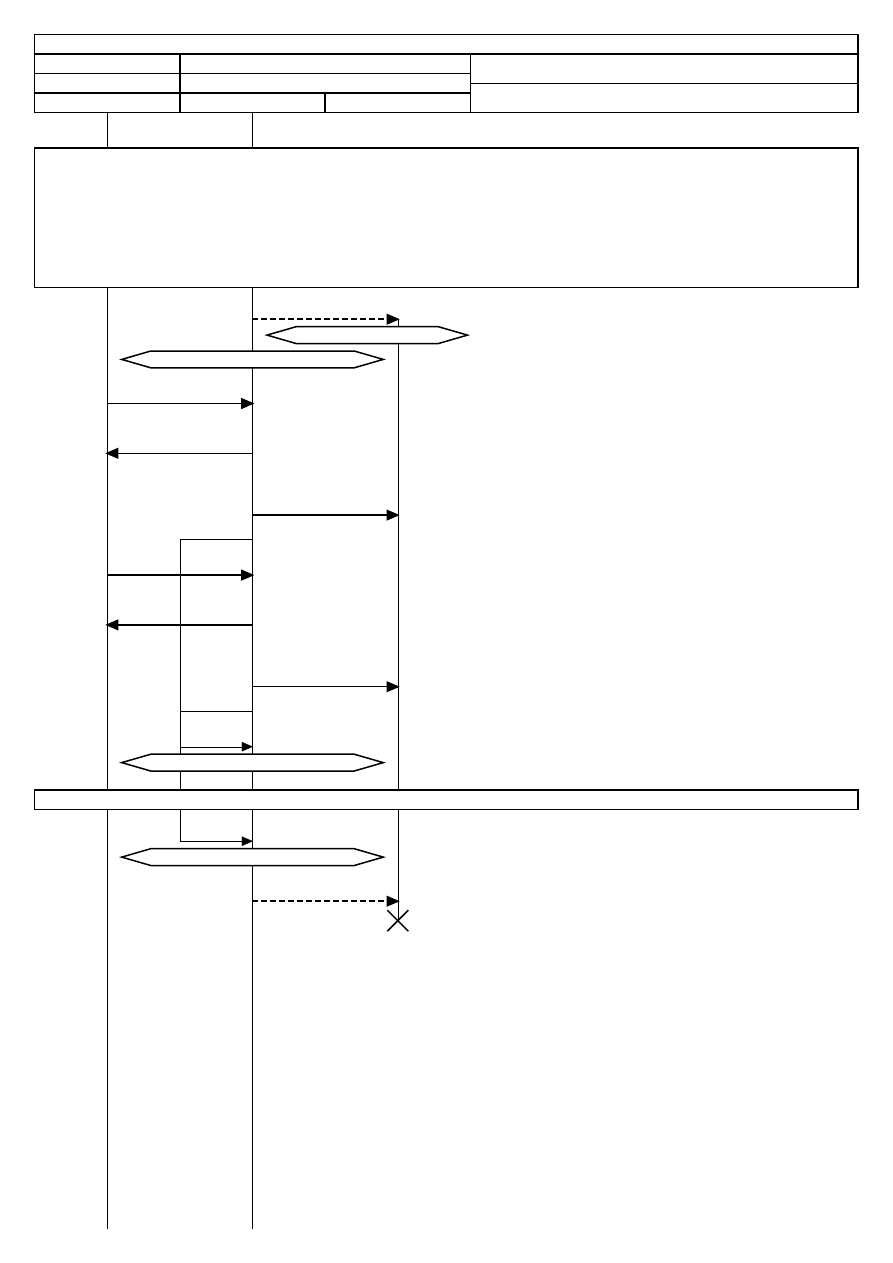

6. Figure: Automaton A1...............................................................................................69

7. Figure: Automaton A2...............................................................................................69

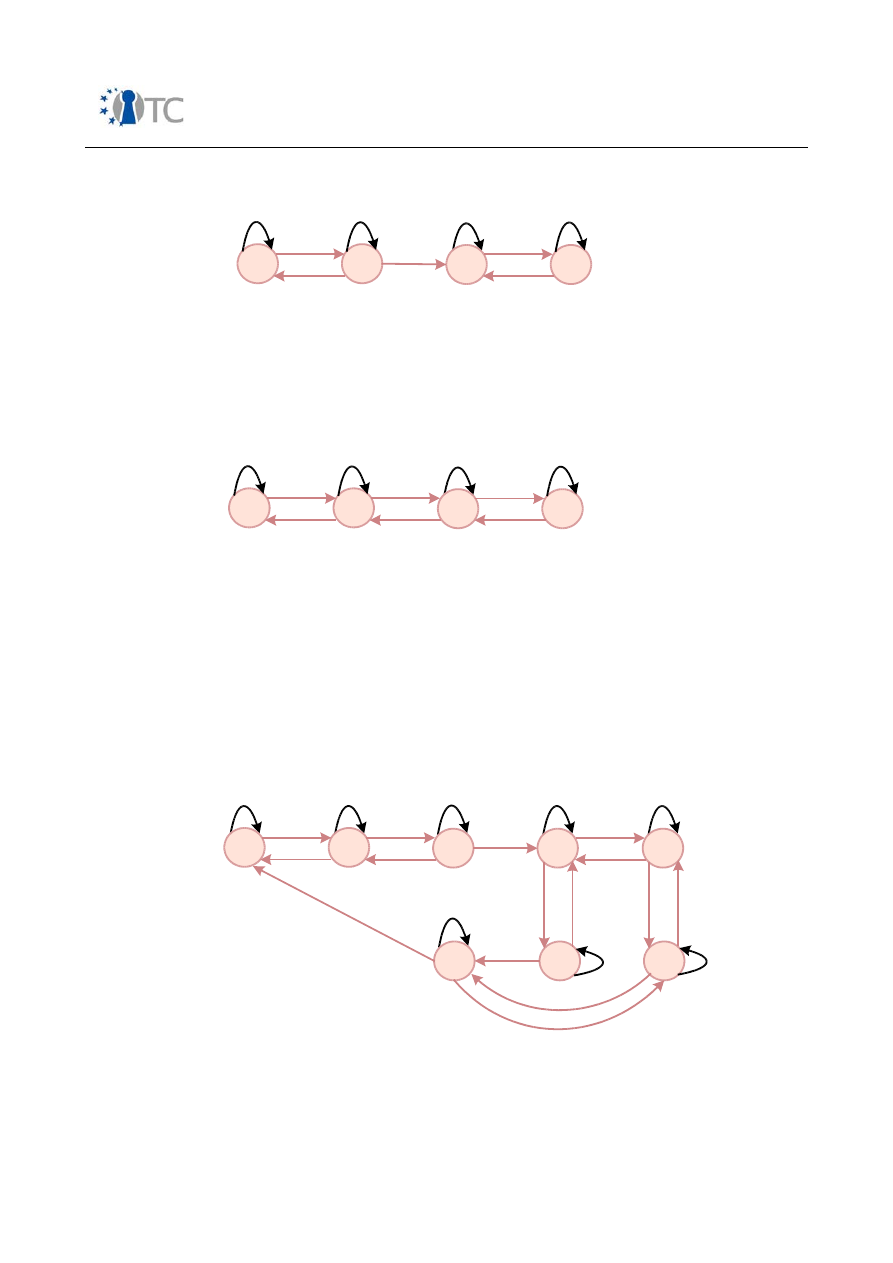

8. Figure: Automaton A3...............................................................................................70

9. Figure: Automaton A4...............................................................................................70

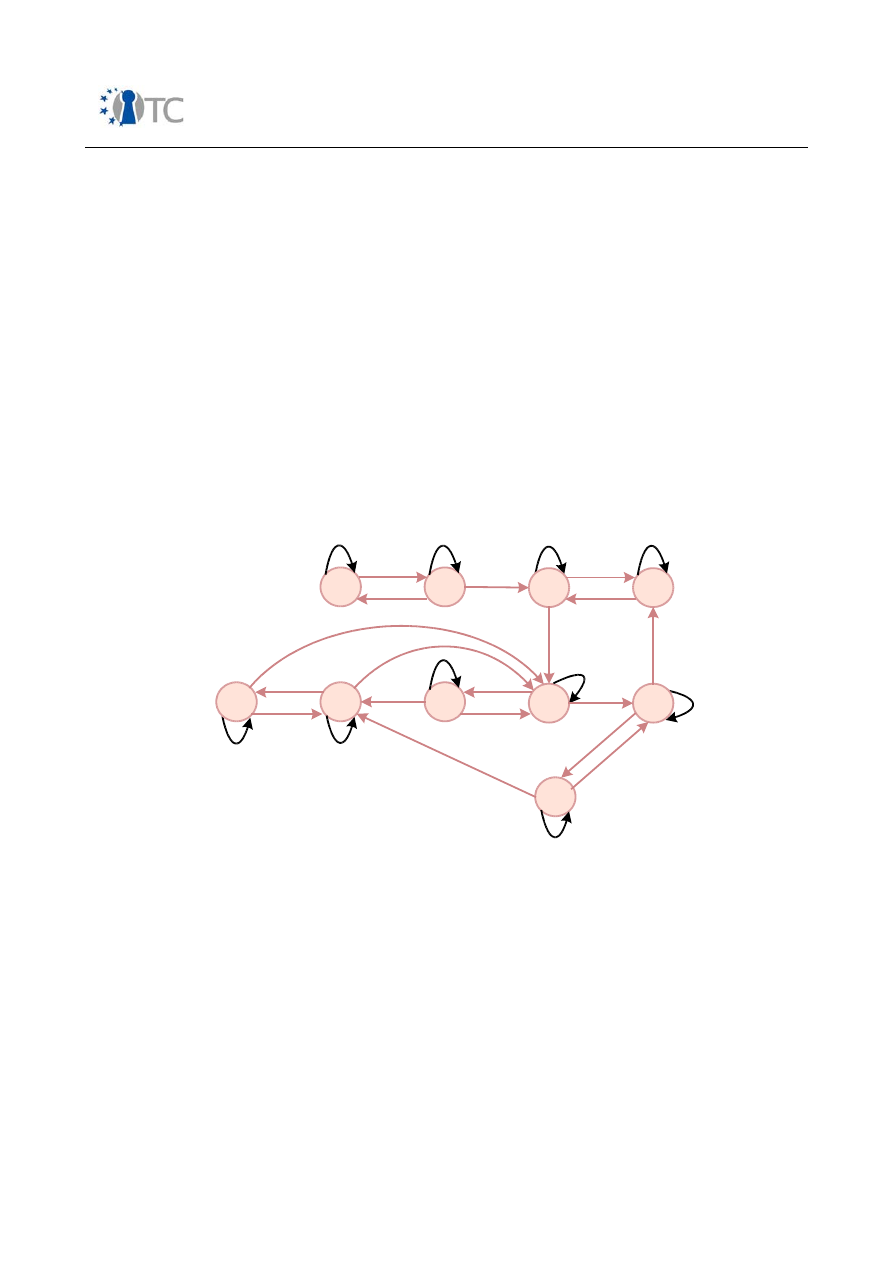

10. Figure: Automaton A5.............................................................................................70

11. Figure: Automaton A6.............................................................................................71

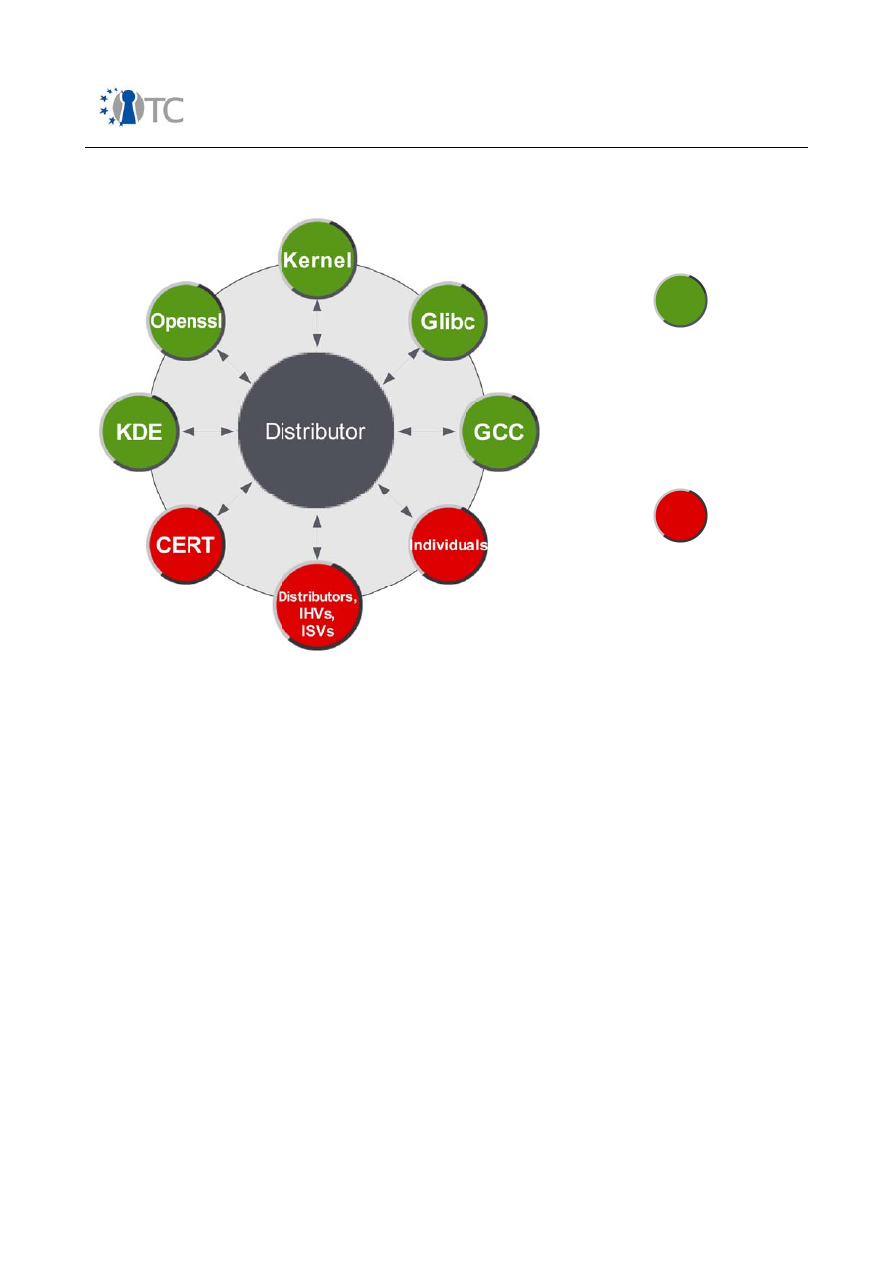

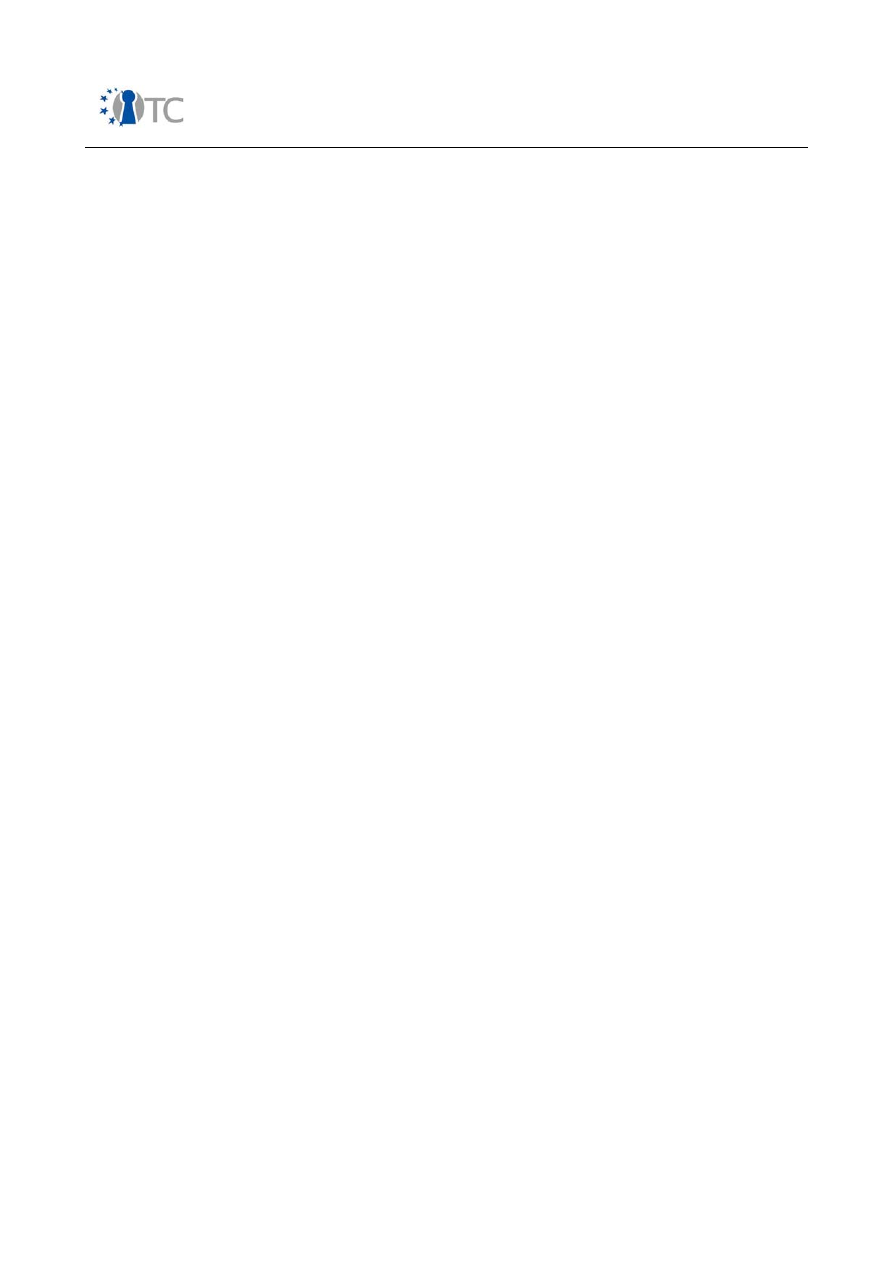

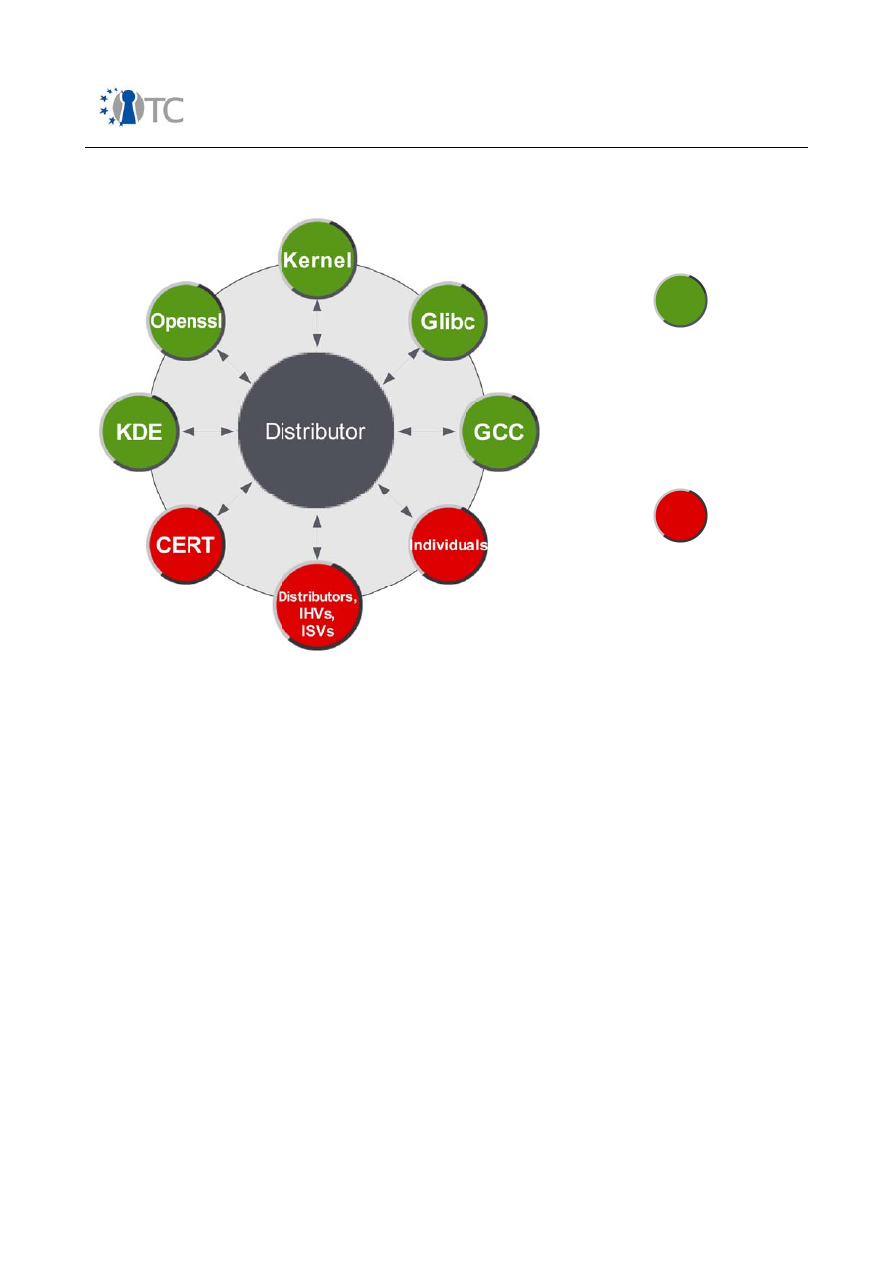

12. Figure: Open Source Software (OSS) development process ..................................81

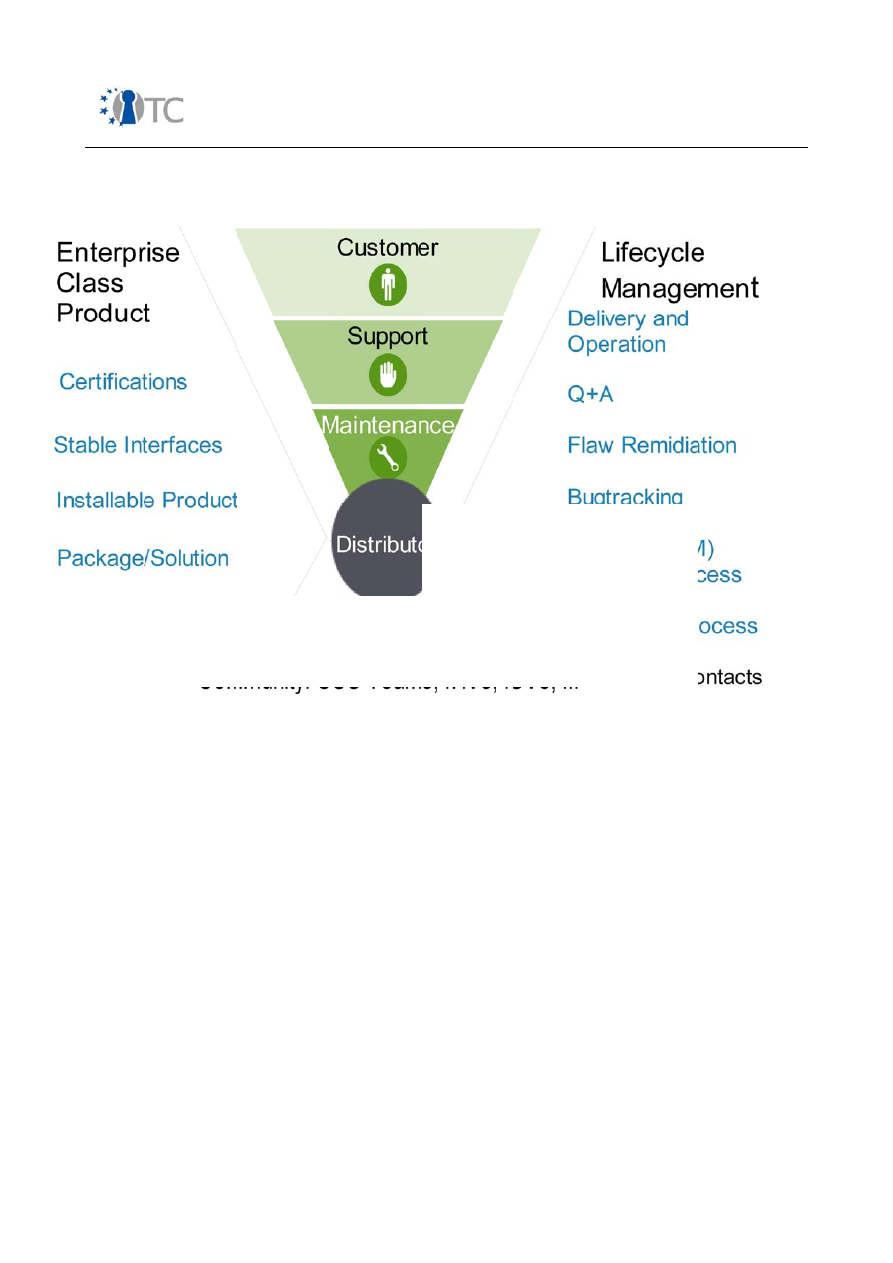

13. Figure: Development Funnel .................................................................................83


List of Tables

Table 1: Error Types

Table 2: Methodology Phases

Table 3: Actual Security

Table 4: Calculating OPSEC

Table 5: Calculating Controls

Table 6: Calculating Security Limitations

Table 7: Security Limitations Categories

Table 8: Formal Approaches using EventStudio

Table 9: Comparison between CC EAL4 and EAL5


1 Summary

OpenTC sets out to develop trusted and secure computing systems based on Trusted Computing hardware and Open Source Software. This deliverable presents the main results of workpackage 07, which covers support activities, i.e. methodology, testing, verification and certification preparation. These results stem from various research directions and are directly related to the OS developments and their building blocks. The main results include the development and selection of testing and verification tools and the definition of an Open Source Security Testing Methodology.


2 Introduction

This deliverable is the main output of WP07 for the first yearly period, i.e. from November 2005 to October 2006. It describes the main results of that period as well as the work in progress of all partners of WP07, i.e. BME, CEA, ISECOM, SUSE and TUS. This report presents only the research and development results for that period and does not address project management issues; for those, the reader is referred to the partners' activity reports.

2.1 Outline

The first year of OpenTC included nine tasks, some of which are completed while others are on-going.

The following is the list of all tasks included in WP07:

1. Definition of targets,

2. Development of a methodology for operational security and of security metrics,

3. Black-box and white-box security testing: tools development and testing methodology,

4. Development of the PPC C code static analyser using AI (abstract interpretation),

5. Formal analysis of the XEN target,

6. Formal analysis of the TCP/IP package of the Linux kernel,

7. Survey of state-of-the-art quality analysis and static analysis tools for C,

8. Statistics of Linux kernel bugs,

9. Study on CC EAL5+ certification feasibility.

WP07 has multiple objectives, which can be classified as follows:

● To support the developments done on the OpenTC OS (including several variants and versions): this includes classical testing, verification and the elaboration of a methodology.

● Research on several fundamental topics related to security and reliability: white-box and black-box testing and static analysis of large targets such as XEN or the Linux kernel, certifiability of open-source software at levels EAL5 and above, etc.

The project started with the usual initialisation phases (hardware and software setup, understanding of the project goals and of the roles of the partners, positioning of the partners with respect to the WP and SWP goals described in Annex 1 of the contract, survey of existing material, set-up of tasks and of the roles of each team member, etc.).


The definition of targets was envisaged as an early mandatory task, because it shapes most other tasks, but it remained incomplete during the first project year, as the OS developers did not yet have a precise idea of what the future OS would be. They could therefore only suggest components for WP07 to address: the L4-compatible hypervisor Fiasco developed by TUD, the XEN hypervisor developed by XENSOURCE and, naturally, the latest Linux kernels, 2.6.15 and 2.6.16. Given the very large size of these components, the partners concentrated on subsets of some of them.

A methodology for ensuring proper development and establishing trust in the resulting product was another prerequisite. This task was entrusted to ISECOM, which specialises in this area and is deeply involved in open-source products. ISECOM first formalised a set of security definitions and then set up a methodology for operational security, together with a means of calculating security metrics. Since trust is an ephemeral notion with technical, human and organisational aspects, this metric gives a value to the actual trust that can be placed in a system. It shows not only how well one is prepared for threats but also how effective that preparation is against them.

Testing and verification are standard support activities in the development process of any critical software product; BME, TUS and CEA therefore tackle them using advanced testing and static analysis methods.

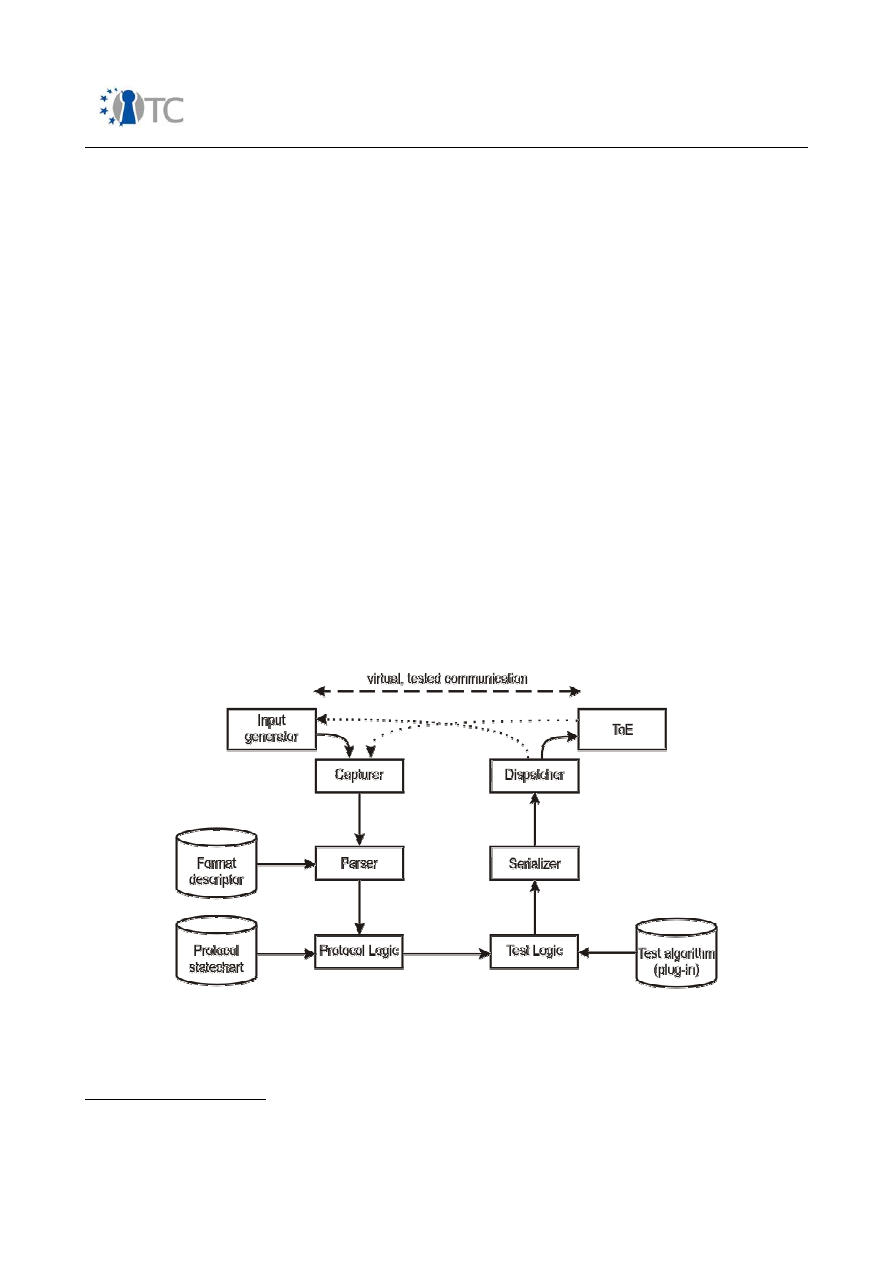

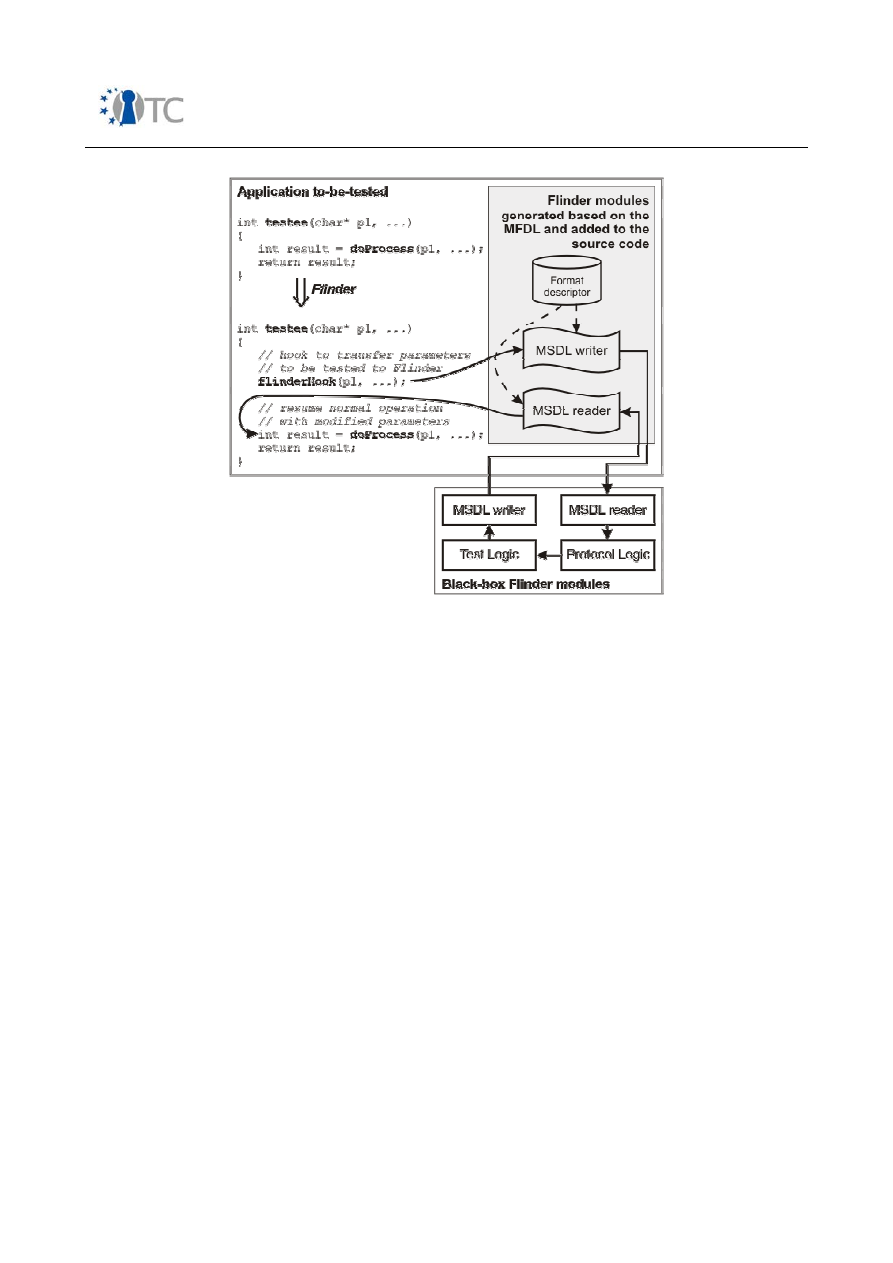

After a survey of existing automated testing tools, which led to the selection of the Flinder tool, BME defined a testing framework and an associated methodology compliant with the CC. The security testing method covers both black-box and white-box testing. Its novelty is to consider that the ToE and the input generator communicate via messages (possibly over a network), with Flinder sitting as a man in the middle between the two. Flinder is given the format of the messages (or of the protocol) so that it can modify their content for black-box testing purposes. The internal representation of the messages, sometimes grouped into packets, is used (and modified) by the internal test logic (a Statechart) to drive the ToE. White-box testing naturally requires the source code, which is annotated to define test locations where Flinder connects to inject and recover data. After recompilation, testing proceeds as for black-box testing.
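The message-level mutation idea described above can be illustrated with a minimal Python sketch. It assumes a hypothetical fixed-layout message (a 1-byte type, a 2-byte length and a 4-byte value, big-endian) and replaces one field at a time with boundary values. It is not Flinder's actual interface or algorithm; it only shows how knowing the message format lets a man-in-the-middle tester produce well-formed but hostile variants of an intercepted message.

```python
import struct
from typing import Iterator, List, Tuple

# Hypothetical message layout (field name, struct code); not a real OpenTC protocol.
FORMAT: List[Tuple[str, str]] = [("type", "B"), ("length", "H"), ("value", "I")]
WIRE = ">" + "".join(code for _, code in FORMAT)  # big-endian, no padding


def boundary_values(code: str) -> List[int]:
    """Classic boundary values for an unsigned integer field of the given size."""
    bits = struct.calcsize(">" + code) * 8
    top = (1 << bits) - 1
    return [0, 1, top // 2, top // 2 + 1, top - 1, top]


def parse(msg: bytes) -> List[int]:
    """Decode an intercepted message into its field values."""
    return list(struct.unpack(WIRE, msg))


def build(fields: List[int]) -> bytes:
    """Re-encode field values into a well-formed message."""
    return struct.pack(WIRE, *fields)


def mutate(msg: bytes) -> Iterator[Tuple[str, bytes]]:
    """Yield (field name, mutated message) pairs, changing one field at a time."""
    original = parse(msg)
    for i, (name, code) in enumerate(FORMAT):
        for value in boundary_values(code):
            if value == original[i]:
                continue  # skip values identical to the original field
            fields = list(original)
            fields[i] = value
            yield name, build(fields)
```

For example, `list(mutate(build([1, 4, 42])))` yields 17 variants of the same 7-byte message, each differing from the original in exactly one field. A harness in the man-in-the-middle position would forward each variant to the ToE in place of the original and watch for crashes or protocol violations.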

Static analysis differs from testing in that it analyses the target code without actually running it, by building a model of the code and proving properties on that model. We distinguish two classes of code: code amenable to a finite-state model, and code that can be turned into a flow-chart model to which deductive techniques are applied. After a survey of existing static analysis tools, TUS selected the Coverity tool for application to the target (see below). The survey was limited to commercial tools capable of analysing C code at either the syntactic or the semantic level. Among the many static analysis techniques, abstract interpretation, sometimes combined with older techniques, appears to be the most promising one for large applications, mainly because it builds and reasons on a simplified model of the code (unlike Hoare logics) to extract simple properties and errors. CEA is developing the PPC C code interpreter, which has a new memory model and a modular architecture able to accept and combine different analyses (and domains), including Hoare analysers. As a first case study, PPC has been applied to the XEN core; this case study iteratively improves PPC as the XEN core analysis progresses.
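To make the idea of reasoning on a simplified model concrete, the following Python sketch runs a textbook interval analysis (one classic abstract domain) on the loop `i = 0; while (i < limit) i = i + 1;`. Widening forces the iteration to terminate on a post-fixpoint, and one extra application of the transfer function recovers precision. It is a generic illustration under simplifying assumptions (a single integer variable, empty intervals not treated specially), not a description of PPC's memory model or domains.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Interval:
    """Abstract value [lo, hi] over-approximating the possible values of a variable."""
    lo: float
    hi: float

    def join(self, other: "Interval") -> "Interval":
        # Least upper bound: smallest interval containing both.
        return Interval(min(self.lo, other.lo), max(self.hi, other.hi))

    def widen(self, other: "Interval") -> "Interval":
        # Push any unstable bound to infinity so that iteration terminates.
        lo = self.lo if other.lo >= self.lo else -math.inf
        hi = self.hi if other.hi <= self.hi else math.inf
        return Interval(lo, hi)

    def add(self, c: int) -> "Interval":
        return Interval(self.lo + c, self.hi + c)

    def assume_lt(self, bound: int) -> "Interval":
        # Refine with the integer guard x < bound, i.e. x <= bound - 1.
        return Interval(self.lo, min(self.hi, bound - 1))


def analyse_counter_loop(limit: int) -> Tuple[Interval, Interval]:
    """Return (loop-head, loop-exit) intervals for i in:
       i = 0; while (i < limit) i = i + 1;"""
    entry = Interval(0, 0)

    def transfer(head: Interval) -> Interval:
        # Values reaching the loop head: the initial value, or one more iteration.
        return entry.join(head.assume_lt(limit).add(1))

    head = entry
    while True:  # increasing iteration with widening
        new_head = head.widen(transfer(head))
        if new_head == head:
            break
        head = new_head
    head = transfer(head)  # one decreasing (narrowing) step from the post-fixpoint
    exit_state = Interval(max(head.lo, limit), head.hi)  # guard i >= limit
    return head, exit_state
```

With `limit = 10` the analysis returns `[0, 10]` at the loop head and `[10, 10]` at the exit. Inside the body `i` therefore ranges over `[0, 9]`, which shows, without executing the code, that an access `a[i]` in the body stays within a 10-element array.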

Certifying software such as the targets envisaged here is not a trivial job, especially when it depends on the habits of human developers or on organisational or economic criteria. When the target is OSS, certification may also get stuck because of the anarchic development process. In fact, OSS development produces high-quality code through an under-deterministic process, i.e. one that is deterministic from the inside but appears non-deterministic from the outside. SUSE has examined the relationship between CC certification and OSS, and evaluated the feasibility of meeting the CC assurance criteria for level EAL5, comparing them with the criteria of the already achieved level EAL4. Drawing on its knowledge of Linux, SUSE refocuses the certifiability problem of Linux on its core, namely the certifiability of the virtualisation layer (domain 0).

2.2 Structure of this report

This report is structured along the tasks listed above, presenting each in detail and giving the reader insight into each technique, its results and its application to OpenTC.

Whenever possible, each task is described using the same structure, as follows:

– Nature of the task and description of its aims and relationship with the original plans of WP07 and its SWP: this introduces the task and binds it to the first workplan (see Annex 1 of the OpenTC contract).

– Background: this contains the basic technical elements the reader needs to understand the results.

– Process view: this explains how the task was performed.

– Main results achieved: this is the core part, highlighting the technical results.

– On-going work during the second period of the project: this gives some perspectives on what will be done during the next project period and which research directions will be taken in that time frame.


3 Definition of targets

3.1 Selecting Targets

The understanding and definition of the analysis targets was one of the first tasks that arose during the project. The definition of the targets is critical because

● it constrains the tools used to analyse them,

● it is constrained by the effort and time planned to analyse them,

● its nature constrains the CC evaluation preparation,

● its development process might differ and influence the previous items, and

● it binds WP07 to other project partners and their development plans.

Discussions therefore took place with the WP04 partners in order to understand what the OpenTC OS would be and which parts WP07 should consider. Indeed, the developers are in charge and in control of the development process, and they understand the criticality of the modules being developed well enough to take the right decision on the nature of the targets. As TUD and CUCL are the authors of the first two OpenTC OS variants including a virtualisation layer, they were consulted first. It appeared too early to decide which precise modules must be analysed; instead it seemed wise to consider the most critical existing components: the core of XEN 3.0 final, L4/Fiasco V1.2 and the Linux 2.6.15/16 kernel. This broad definition of the targets has the disadvantage of leaving a large quantity of code for analysis, and the advantage of leaving WP07 members the choice of sub-components to analyse.

3.2 Future directions

During the project meeting in Zurich, 13 to 15 September 2006, the subject of target definition was raised again. Discussions with WP03 and WP04 partners led to a narrower definition of the targets:

– With IFX: a first version of the TSS stack has been released by IFX for testing purposes. It is located at https://svn.opentc.net/Workpackage%2003/IFX_TSS_Stack/. According to IFX, this is a preliminary version, to be followed by a complete release at the end of October. IFX proposes to provide its testing environment. One restriction applies: security deficits found shall NOT be made public.

This is clearly a testing target for BME, as it is quite self-contained, and testing it would increase the other partners' trust in the TSS.

– With TUD: the possibility of analysing C++ parts of the OS developed in the Robin project has been discussed, but, as C++ verification techniques still need to mature, this will be re-discussed at the end of 2007. For instance, research on the static analysis of C++ code is under way at CEA (not reported in this deliverable) and may become applicable to L4.

– With CUCL: several targets, all part of XEN, were proposed by Steven Hand, namely

1. Main initialisation function, __start_xen, for verification and testing purposes: this function shall not produce any error. It outputs an initial state, to be stored and used below.

2. Hypercalls: the system interface of a running XEN dom0 consists of hypercalls (see the XEN interface manual), which are called in a fairly random order at run time. These functions are tested via a (CUCL-internal) main function that invokes them randomly. Verification shall explore these hypercalls simultaneously. CUCL has to assign a priority to each hypercall (HC).

3. Mini-OS: a minimal OS that allows a dom0 to be run and tested. It is part of the XEN distribution (directory ../xen-3.0.x/extras/mini-os). It is suggested to compile it and become familiar with it now, but to test and verify it at a later stage of the project.

XEN 3.0.3, to be released during week 38 or 39 of 2006, is considered the target version. CUCL expects no patches to the C code during the year after its release, so this version can be considered stable for WP07.

This is a verification target for TUS & CEA and a second testing target for BME.
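The randomized hyper-call testing described for the CUCL target can be illustrated with a minimal sketch. It assumes that each hyper-call is wrapped by a test stub returning 0 on success and a negative error code otherwise, and that the priority CUCL assigns to each HC is used as a selection weight. The stubs and the `random_hypercall_driver` helper are hypothetical and do not call into XEN; the dictionary keys are only labels. The CUCL-internal main function itself is not reproduced here.

```python
import random
from collections import Counter
from typing import Callable, Dict, Tuple

# Hypothetical stub type: takes the driver's random generator (to draw
# arguments) and returns 0 on success or a negative error code.
Stub = Callable[[random.Random], int]


def random_hypercall_driver(table: Dict[str, Tuple[float, Stub]],
                            iterations: int, seed: int = 0) -> Counter:
    """Invoke stubs in a random order weighted by priority; count calls and errors."""
    rng = random.Random(seed)                  # fixed seed: a failing run can be replayed
    names = list(table)
    weights = [table[n][0] for n in names]     # priority assigned to each hyper-call
    stats: Counter = Counter()
    for _ in range(iterations):
        name = rng.choices(names, weights=weights, k=1)[0]
        rc = table[name][1](rng)
        stats[name] += 1
        if rc != 0:
            stats[name + ":error"] += 1
    return stats


# Hypothetical stubs standing in for real hyper-call wrappers.
def fake_memory_op(rng: random.Random) -> int:
    return 0


def fake_sched_op(rng: random.Random) -> int:
    return 0 if rng.random() < 0.9 else -22    # simulate an occasional -EINVAL


if __name__ == "__main__":
    table = {"memory_op": (3.0, fake_memory_op), "sched_op": (1.0, fake_sched_op)}
    print(random_hypercall_driver(table, iterations=1000, seed=42))
```

Seeding the generator is a deliberate choice: a random call sequence that exposes a fault is only useful for verification if it can be replayed exactly.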

The second project period will therefore concentrate on these updated targets. An action list was recorded in the meeting minutes to remind partners of their contributions and deadlines.


4 Development of a security testing methodology

4.1 Overview

Determining whether and how trust, as understood in Trusted Computing, can be tested and measured means exploring security in depth, both philosophically and scientifically. Quantifying trust in anything, Trusted Computing included, therefore means applying verification methods for integrity and for all the other components that make up trust. A methodology is required. The Applied Verification for Integrity and Trust (AVIT) is that methodology: a means to understand, and relate in a scientific manner to, the ephemeral concept of trust, something we do innately but have not yet been able to measure without bias. As a consequence, it has not been possible to define a rule set for determining trust without human input (i.e. machine-generated trust has been no better than random unless built to follow the rules of the entirely human trait of prejudice).

AVIT has its roots in security testing. Security testing itself, however, is only a cousin of trust testing. With security, one can define the components of protection and control and measure whether those components are in place. Measuring the components requires tests, and those tests also determine the reality and verity of the protection and controls, including their limitations.

Many of the traits we currently apply to measurements, whether of the length of a table or the cubic volume of a house, allow us to determine the validity of the object being measured with regard to its function. For example, when we measure the length of a table, we also determine (1) that it is a table, because it performs the function of a table, and (2) that no part of that function is untrue, such as a bowed or round surface that would make it non-functional as a table. Therefore, security testing will measure the verity of the function so as to allow the measurement of trust.

As the objects to be measured, or the type of measurement required, become more complex, their function and its limitations become more obscure and more difficult to define realistically. For example, measuring the cubic volume of a house appears simple enough because we can grasp the concept of a house, how it functions, and when it does not function as a house. Or can we? Is a house without a roof still a house? Does its volume then include the air above it, and how far up? What needs to be missing from a house for it to no longer be a house? It is not uncommon for a living space that one person calls a house to be, for another, a cabin, a tent, or a hole. Therefore we look at its operations: how is it being used, and how does it work? Under the operations premise, an object which performs the known functions of a house is a house. Once we know it is a house, we can measure its cubic volume using the known method of measuring the volume of a house in cubic meters.

Reality, however, still shows that even measuring the cubic meters of a house is not an exact science, and there is no universally accepted method for measuring such a thing (for example, the flexible term “living space” is measured differently from region to region), although the figure is commonly stated in the sale of houses everywhere.


A complex object such as a computer absolutely requires testing and evaluation of its operations to determine its security or trustworthiness. Before the tests and measurements can be done, we need to know something about a secure computer. We need to define what it does and how it does it: its operations. For this we rely on the security test and security metrics.

Defining trust in order to develop tests for Trusted Computing is more difficult. Since true trusted computing does not yet exist, we cannot say how a trusted computer operates. We cannot say what is required to operate as a trusted computer. We cannot say how trust is measured without bias. We can only approach this theoretically. However, theory does not test or measure well except in theory. Yet, despite the obstacles, this is what we intend to standardize.

The obstacles to trust begin with fears and uncertainties. If there is a chip, how will it be used? If there is software, will it take control? If everything is in place, how do I know it is doing only what it is expected and intended to do if I cannot verify it for myself?

The three main obstacles are:

1. fears behind trustworthiness

2. the human problem with trustworthiness

3. the technical problem with trustworthiness

The next obstacle is public acceptance, the human problem with trust, and even more so individual preference. Public acceptance may be bought and manipulated, but individual preference for trust is much more delicate and requires convincing.

The third obstacle is the technical one. If we have the appropriate hardware in place

for trust, how can we be sure it is appropriate? If we need software for trust, can we

be assured the software operates correctly? Who determines how the hardware and

software interact and that what they accomplish can lead to trust as the conclusion?

Therefore, the only appropriate solution is a public and open methodology based on the questions we need answered to establish trust, rather than on current research and implementations.

The AVIT methodology is based on what a person can control in order for trust, first, to exist and, second, to be beneficial. Of the three main obstacles to trustworthiness, the first two are the easiest for an individual to gain control over. The third, technical design and implementation, will require more, as not even the average technically-inclined individual will be able to ascertain trustworthiness without support. For this reason, the AVIT methodology will focus on tests for the technical problem of trustworthiness, in order to overcome both the fears and the human problem:

● Choice and finality of controls,
● Openness of design and implementation,
● Transparency of communication and action, and
● Clarity and usability of operations.


4.2 Technical background

Achieving the goal of AVIT requires first exploring trusted computing theory and defining everything we need to know in order to measure trust and the operations of trusted computing. It also requires a means of unbiased testing and measurement. To do this, we needed to review what has already been done for security testing and for trusted computing. We also needed to consider the components of both security and trust and how they are developed in a rational and logical manner.

The process consists of the security audit and the components of opacity to create a

chain of trust according to the rules of trust which have been defined.

Security Testing Process

The security testing process is a discrete event test of a dynamic, stochastic system. The target is a system, a collection of interacting and co-dependent processes, which is also influenced by the stochastic environment it exists in. Being stochastic means that the behavior of events in a system cannot be determined, because the next environmental state can only be partially, not fully, determined by the previous state. The system contains a finite, possibly extremely large, number of variables, and each change in a variable constitutes an event and a change in state. Since the environment is stochastic, there is an element of randomness, and there is no means of predetermining with certainty how all the variables will affect the system state. A discrete test examines these states within the dynamic system at particular time intervals. Monitoring operations continuously, as opposed to discretely, would provide far too much information to analyze, and may not even be possible. Even continuous tests, however, require tracking each state with reference to time in order to be analyzed correctly.
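As a toy illustration of a discrete test of a stochastic system, the sketch below models the target state as a first-order autoregressive process, an assumption chosen only because each state is then partly, but not fully, determined by the previous one. The test examines the system at fixed intervals and keeps each sampled state together with its time index. The model, coefficients, and function names are hypothetical.

```python
import random
from typing import List, Tuple


def simulate_system(steps: int, seed: int = 0) -> List[float]:
    """Toy stochastic system: next state = 0.8 * previous state + random noise."""
    rng = random.Random(seed)
    state = 0.0
    history = []
    for _ in range(steps):
        # The previous state only partially determines the next one.
        state = 0.8 * state + rng.gauss(0.0, 1.0)
        history.append(state)
    return history


def discrete_samples(history: List[float], interval: int) -> List[Tuple[int, float]]:
    """Examine the system only every `interval` steps, tracking each state against time."""
    return [(t, history[t]) for t in range(0, len(history), interval)]


if __name__ == "__main__":
    history = simulate_system(steps=100, seed=1)
    for t, value in discrete_samples(history, interval=25):
        print(f"t={t:3d} state={value:+.3f}")
```

With an interval of 25, the test sees 4 of the 100 states: far less data to analyze, at the price of missing whatever happens between samples.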

A point of note is the extensive research available on change control for processes, which aims to limit the number of indeterminable events in a stochastic system. The auditor will often attempt to exceed the constraints of change control and present “what if” scenarios which the change control implementers may not have considered. A thorough understanding of change control is essential for any auditor.

Unfortunately, auditors often assume security testing is simple and audit under what is known as the “echo process”, which consists of agitating the target and then monitoring its emanations for indicators of a particular state (secure or insecure, vulnerable or protected, on or off, left or right). The echo process is of the cause and effect type: the auditor makes the cause and analyzes the effect from the target. This means of testing is very fast but is also highly prone to errors, some of which may be devastating to the target. While the Rules of Engagement can help minimize damage to the target in the echo process, they cannot help minimize the errors. We categorized these errors as:


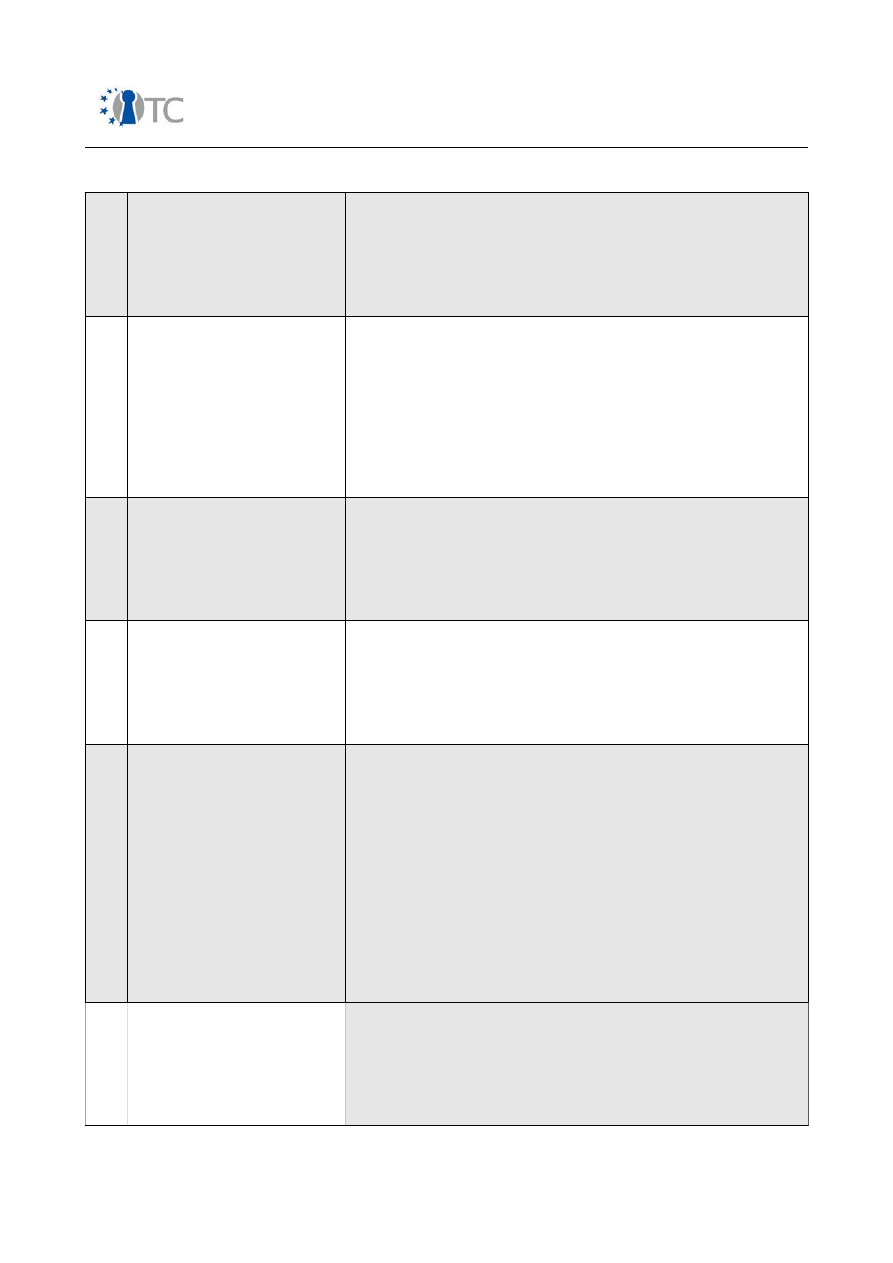

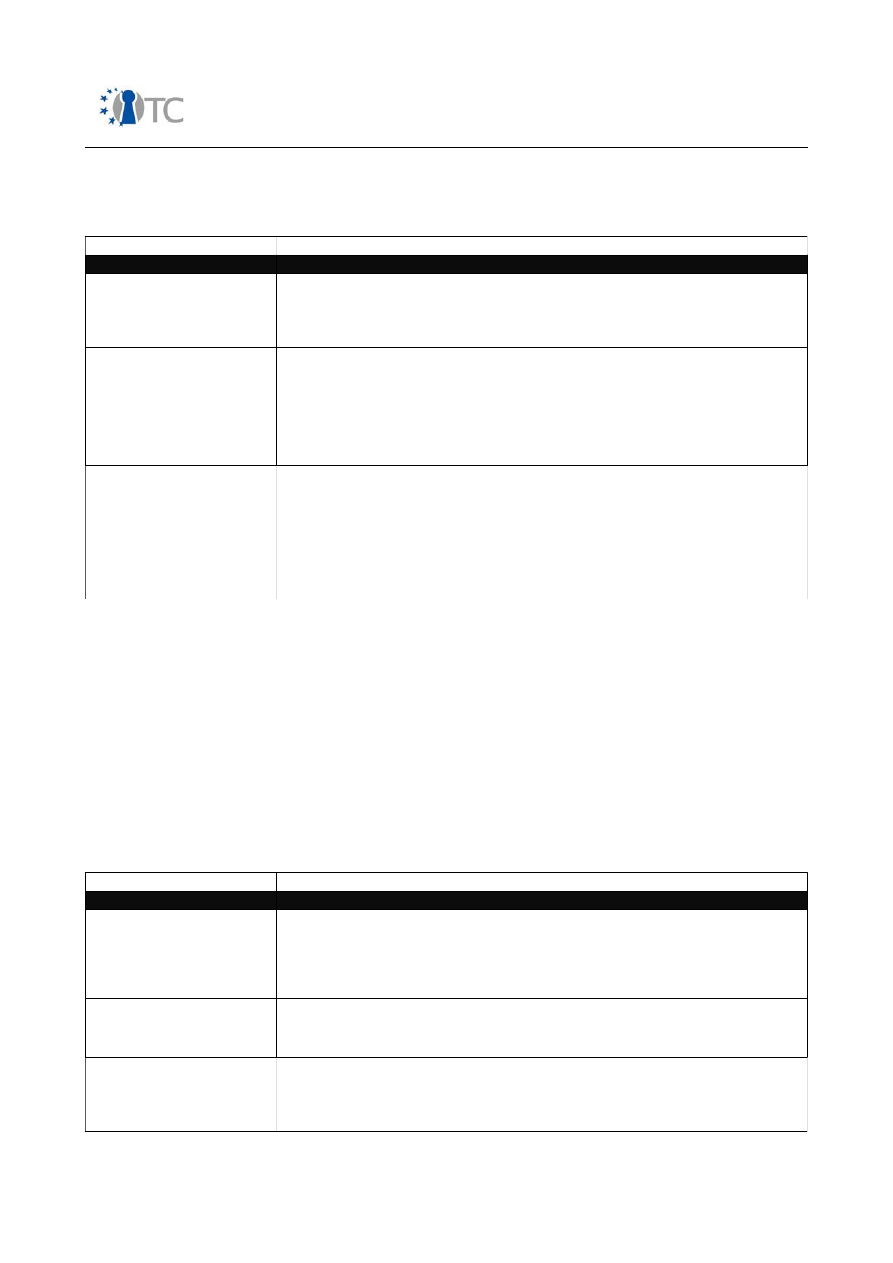

Table 1: Error Types

1. False Positive: The target response indicates a particular state as true although in reality the state is not true. A false positive often occurs when the auditor's expectations or assumptions of what indicates a particular state do not hold to real-world conditions, which are rarely black and white.

2. False Negative: The target response indicates a particular state as not true although in reality the state is true. A false negative often occurs when the auditor's expectations or assumptions about the target do not hold to real-world conditions, the tools are the wrong type for the test, the tools are misused, or the auditor lacks experience. A false negative can be dangerous as it is a misdiagnosis of a secure state when it does not exist.

3. Gray Positive: The target response indicates a particular state as true; however, the target is designed to respond to any cause with this state whether or not the state holds. This type of security through obscurity may be dangerous as the illusion cannot be guaranteed to work the same for all stimuli.

4. Gray Negative: The target response indicates a particular state as not true; however, the target is designed to respond to any cause with this state whether or not the state holds. This type of security through obscurity may be dangerous as the illusion cannot be guaranteed to work the same for all stimuli.

5. Specter: The target response indicates a particular state as either true or false although in reality the state cannot be known. A specter often occurs when the auditor receives a response from an external stimulus that is perceived to be from the target. A specter may be intentional on the part of the target, an anomaly from within the channel, or the result of carelessness and/or inexperience on the part of the auditor. One of the most common problems in the echo process is the assumption that the response is a result of the test. Cause and effect testing in the real world cannot achieve consistently reliable results since neither the cause nor the effect can be properly isolated.

6. Indiscretion: The target response indicates a particular state as either true or false but only during a particular time. That time may or may not follow a pattern, and if the state cannot be verified at a time when it changes, the auditor may fail to comprehend the other state. An auditor may also decide that this is an anomaly or a problem with the testing equipment, especially if the auditor failed to calibrate the equipment prior to the test and to perform appropriate logistics and controls. An indiscretion can be dangerous as it may lead to false reporting of the state of security.

7. Entropy Error: The target response cannot accurately indicate a particular state as either true or false due to a high noise-to-signal ratio. Akin to the idea of losing a flashlight beam in the sunlight, the auditor cannot properly determine the state until the noise is reduced. This type of environmentally caused error rarely exists in the lab but is a normal occurrence outside of the lab, in an uncontrolled environment. Entropy can be dangerous if its effects cannot be countered.

8. Falsification: The target response indicates a particular state as either true or false although in reality the state is dependent upon largely unknown variables due to target bias. This type of security through obscurity may be dangerous as the bias will shift when tests come from different vectors or employ different techniques. It is also likely that the target is not aware of the bias.

9. Sampling Error: The target is a biased sample of a larger system or a larger number of possible states. This error normally occurs when an authority influences the operational state of the target for the duration of the test. This may be through specific time constraints on the test or a bias towards testing only that which is designated as “important” within a system. This type of error will cause a misrepresentation of the overall operational security.

10. Constraint: The limitations of human senses or equipment capabilities indicate a particular state as either true or false although the actual state is unknown. This error is not caused by poor judgment or wrong equipment choices; rather, it is a failure to recognize imposed constraints or limitations.


11. Propagation: The auditor does not make a particular test, or has a bias to ignore a particular result, due to a presumed outcome. This is often a blinding from experience or a confirmation bias. The test may be repeated many times, or the tools and equipment may be modified, to obtain the desired outcome. As the name implies, a process which receives no feedback, and in which the errors remain unknown or ignored, will propagate further errors as the testing continues. Propagation errors may be dangerous because the errors propagated from early in testing may not be visible during an analysis of conclusions. Furthermore, a study of the entire test process is required to discover propagation errors.

12. Human Error: The errors caused by lack of ability, experience, or comprehension are not ones of bias; they are always a factor and always present regardless of methodology or technique. Where an experienced auditor may make propagation errors, one without experience is more likely not to recognize human error, something which experience teaches us to recognize and compensate for. Statistically, there is an inverse relationship between experience and human error: the less experience an auditor has, the greater the amount of human error an audit will contain.
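Some of these error types can be made concrete in a lab setting, where the actual state is known. The sketch below, with hypothetical names, compares the state a response indicates with the true state (false positives and negatives) and applies a simple heuristic for gray positives and negatives: a target that gives the same answer to stimuli that ought to produce different states may be designed to answer that way regardless. Outside the lab the actual state is usually unknown, so this is only a calibration aid, not a detector for real-world audits.

```python
from enum import Enum
from typing import Callable, Sequence


class Finding(Enum):
    TRUE_POSITIVE = "true positive"
    TRUE_NEGATIVE = "true negative"
    FALSE_POSITIVE = "false positive"
    FALSE_NEGATIVE = "false negative"


def classify(reported: bool, actual: bool) -> Finding:
    """Compare the state a response indicates with the state known from ground truth."""
    if reported == actual:
        return Finding.TRUE_POSITIVE if reported else Finding.TRUE_NEGATIVE
    return Finding.FALSE_POSITIVE if reported else Finding.FALSE_NEGATIVE


def looks_gray(target: Callable[[str], bool], stimuli: Sequence[str]) -> bool:
    """Heuristic: identical answers to deliberately varied stimuli suggest a target
    designed to report that state regardless (gray positive or gray negative).
    The stimuli must be chosen so that an honest target would answer differently."""
    responses = {target(s) for s in stimuli}
    return len(stimuli) > 1 and len(responses) == 1


if __name__ == "__main__":
    def always_open(stimulus: str) -> bool:
        return True  # hypothetical target that claims "open" to everything

    print(classify(reported=True, actual=False))
    print(looks_gray(always_open, ["valid", "malformed", "random"]))
```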

Operational Security Testing

Operational security testing requires applying the four point process of testing, choosing the correct type of test, recognizing the test channels and vectors, defining the scope according to the correct index, and applying the methodology properly.

Four Point Process

The security test process in this methodology does not recommend the echo process for reliable results. While the echo process may be used for certain, particular tests where the error margin is small and the increased efficiency frees time for other, more time-intensive techniques, it is not recommended for tests outside of a deterministic environment. In any case, the auditor must choose carefully when and under what conditions to apply the echo process.

While many testing processes exist, the recommended security test process is one designed for optimum efficiency, accuracy, and thoroughness to assure test validity and minimize errors in uncontrolled and stochastic environments. It is optimized for real-world test scenarios outside of the lab. While it also uses agitation, it differs from the echo process in that it allows for determining more than one cause per effect and more than one effect per cause. This test process has four phases, which is why it is referred to as the Four Point Process.


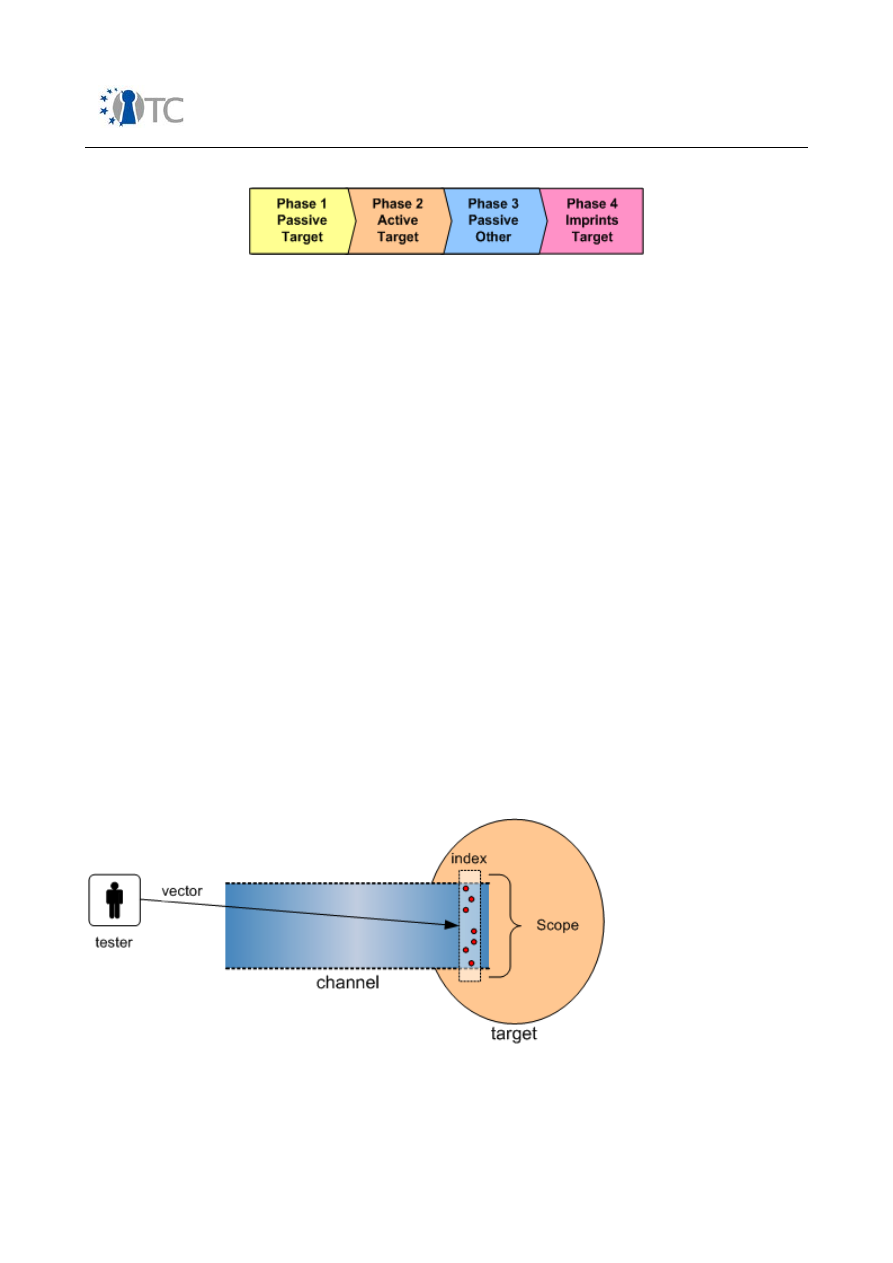

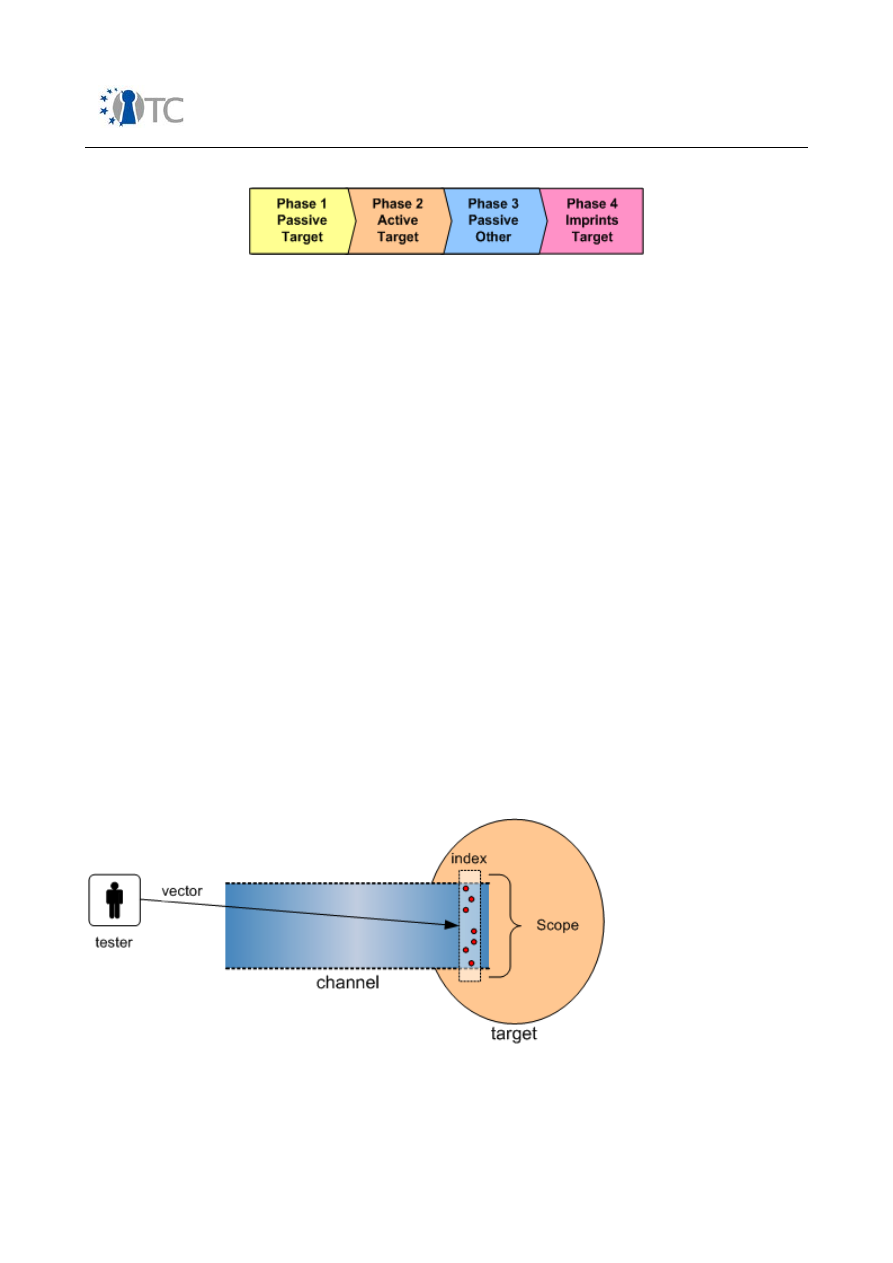

Phase 1

Passively collect data of normal operations to comprehend the target. This data does not need to come from the target alone. The data can be collected from anywhere and will be used to understand the interaction between the target and its environment. This data is referred to as emanations.

Phase 2

Actively test operations by agitating them. The auditor interacts directly with the operations from different vectors and in varying degrees of frequency and intensity. The frequency and intensity should both be within the normal baseline, as a means of discovery, and also far beyond it, as a means of stress.

Phase 3

Passively collect data from other sources interacting with the target as a result of the test. Indirect data sources include any type of resources used, such as fuel, energy, materials, and man hours, and the affected operators of the process or of a particular state, such as workers or programs.

Phase 4

Collect imprints of the test from the target. The test itself will leave a trail within the target. This trail may be in the form of messages, logs, emotions, thoughts, or tracks. These imprints will show the trails which did not return to the tester and emanated beyond the tester's monitoring or reach.
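The four phases can be sketched as a small test harness against a simulated target. Everything here is an assumption for illustration: `SimulatedTarget` stands in for a real system, resource use is modelled as a counter, and imprints as log entries. The point is only the separation of the four kinds of data the process collects: emanations, direct responses, indirect data, and imprints.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SimulatedTarget:
    """Hypothetical target: consumes 'cpu units' per request and logs each request."""
    log: List[str] = field(default_factory=list)
    cpu_units: int = 0

    def handle(self, request: str) -> str:
        self.cpu_units += len(request)
        self.log.append(f"handled {len(request)} bytes")
        return "ok" if len(request) < 64 else "error"


def four_point_test(target: SimulatedTarget, normal_traffic: List[str],
                    probes: List[str]) -> Dict[str, object]:
    # Phase 1: passively record emanations of normal operations. In this
    # simulation the traffic stands for other users; the auditor only observes.
    emanations = [target.handle(r) for r in normal_traffic]
    cpu_before, log_before = target.cpu_units, len(target.log)

    # Phase 2: actively agitate operations, within and far beyond the baseline.
    direct = [(len(p), target.handle(p)) for p in probes]

    # Phase 3: collect indirect data, here the resources consumed by the test.
    indirect = {"cpu_units_used": target.cpu_units - cpu_before}

    # Phase 4: collect imprints the test left inside the target.
    imprints = target.log[log_before:]

    return {"emanations": emanations, "direct": direct,
            "indirect": indirect, "imprints": imprints}


if __name__ == "__main__":
    result = four_point_test(SimulatedTarget(), ["GET /"] * 3, ["x" * 10, "x" * 1000])
    print(result["direct"], result["indirect"], len(result["imprints"]))
```

Keeping the four result sets separate is what allows several causes per effect (and several effects per cause) to be correlated afterwards, instead of reading a single echo.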

Applying the Methodology

1. Figure: Methodology Application

This security testing methodology has a solid base which may seem quite involved, but it is actually simple in practice. It is designed as a flowchart; however, unlike a standard flowchart, the flow, represented by the arrows, may go back as well as forward. In this way the flow is more integrated, and while the beginning and the end are clear, the audit has greater flexibility. The auditor creates a unique path through the methodology based on the target, the type of test, the time allotted for the audit, and the resources applied to the test. Since the path through the methodology may differ between auditors, it is important that the final audit report states the restrictions for use in result comparisons. The main reason for requiring this level of flexibility is that no methodology can accurately presume the justifications for the operations of channel gateways in a target and their adequate level of security. More directly, this methodology cannot presume a best practice for conducting all audits, as best practice is based on a specific configuration of operations.

Best practice, or similar, is only best for some auditors, generally the originator of the practice. Operations dictate how services should be offered, and those services dictate the requirements for operational security. Therefore, a methodology that is invoked differently for each audit and by each auditor can still have the same end result if the auditor completes the methodology. This is also why one of the foundations of this methodology is to record precisely what was not tested. By comparing what was tested, and the depth of the testing, with other tests, it is possible to measure operational security (OPSEC) based on the test results.
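Recording what was not tested is what makes two audits comparable. A minimal sketch, assuming each audit is recorded as the depth reached per tested module (a hypothetical 0 to 3 scale, keyed by the module identifiers used in Table 2) plus the set of modules explicitly recorded as not tested. `AuditRecord` and `compare` are illustrative names, not part of the methodology.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class AuditRecord:
    auditor: str
    depth: Dict[str, int]                               # tested module -> depth reached (hypothetical scale)
    not_tested: Set[str] = field(default_factory=set)  # modules recorded as not tested


def compare(a: AuditRecord, b: AuditRecord) -> Dict[str, object]:
    """Modules tested by both audits (with each depth) and modules skipped by at least one."""
    shared = sorted(a.depth.keys() & b.depth.keys())
    return {
        "shared": {m: (a.depth[m], b.depth[m]) for m in shared},
        "untested_in_at_least_one": sorted(a.not_tested | b.not_tested),
    }


if __name__ == "__main__":
    a = AuditRecord("auditor 1", {"B.4": 2, "C.9": 1}, {"D.17"})
    b = AuditRecord("auditor 2", {"B.4": 3}, {"C.9"})
    print(compare(a, b))
```

Only the shared modules support a like-for-like comparison; the untested list states the restrictions that the final report should carry.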

Applying this methodology will therefore meet the auditor's goal to answer: (1) how do current security operations work, and (2) how do they work differently from how those responsible for the target think they work? Appropriate answers to these questions will also put a third question to those responsible for the target: (3) how do they need to work? This is known as the Auditor's Trifecta.

Auditor's Trifecta

1. How do current operations work?

The derived metrics can be applied to determine the problem areas within the scope and which problems must be addressed. The metrics in this methodology are designed to map the problems in different ways so as to show whether a problem is a general one or more specific, such as an oversight or a mistake.

2. How do they work differently from how management thinks they work?

Access to policies or a risk assessment will map back to the different categories of the metrics. The categories provide the current state values, against which a comparison can be made with both an optimum state according to the policies and one according to assessed threats.

3. How do they need to work?

Where the metrics show no gap relative to the optimum values of the policy or risk assessment, but the security test shows that there is indeed a protection problem regardless of the controls as implemented in policy, it is possible to clearly denote a problem. Often, even without mapping to policy, a discrepancy between the implemented controls and the loss of protection is simply evident.


The auditor's trifecta combined with the four point process provides a substantially thorough application of this methodology:

1. Passively collect data of normal operations to comprehend the target.

2. Actively test operations by agitating operations beyond the normal baseline.

3. Analyze data received directly from the operations tested.

4. Analyze indirect data from resources and operators (i.e. workers, programs).

5. Correlate and reconcile intelligence from direct and indirect data test results.

6. Determine and reconcile errors.

7. Derive metrics from both normal and agitated operations.

8. Correlate and reconcile intelligence between normal and agitated operations to

determine an optimal level of protection and control.

9. Map the optimal state of operations to processes.

10. Create a gap analysis to determine what enhancements are needed for processes governing necessary protection and controls to achieve the optimal operational state from the current one.
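Step 10 can be sketched as a comparison between current and optimal values per metric category. A second check covers the case discussed under the third trifecta question, where testing reveals a protection failure even though the metrics show no gap. The category names (borrowed from the loss controls listed in Table 2) and the 0 to 1 value scale are assumptions for illustration only.

```python
from typing import Dict, Iterable, List


def gap_analysis(current: Dict[str, float], optimal: Dict[str, float]) -> Dict[str, float]:
    """Per category, how far the current value falls short of the optimal one.
    Categories at or above the optimum are omitted; a missing current value counts as 0."""
    gaps = {}
    for category, target_value in optimal.items():
        value = current.get(category, 0.0)
        if value < target_value:
            gaps[category] = target_value - value
    return gaps


def evident_problems(gaps: Dict[str, float], observed_failures: Iterable[str]) -> List[str]:
    """Categories where testing showed a failure although the metrics show no gap."""
    return sorted(c for c in set(observed_failures) if c not in gaps)


if __name__ == "__main__":
    current = {"integrity": 0.6, "alarm": 0.9, "privacy": 1.0}
    optimal = {"integrity": 0.8, "alarm": 0.9, "privacy": 0.9}
    gaps = gap_analysis(current, optimal)
    print(gaps)
    print(evident_problems(gaps, ["alarm"]))
```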


4.3 Process view

The development process for the methodology required both research into current work in Trusted Computing and new developments, specifically gap research into what has not yet been done.

The initial assessment required defining how operations explain function, through questions such as: How is it being used? How does it work? We know that trusted computing is complex, with both a complex technology and a complex philosophy. What we don't know is whether trust is rooted in risk assessment. Is trust just a matter of weighing risks? Is it threat probability over possibility? We discovered that, to most people, trust is about threat possibility. Numbers and logic are obscured by the potential intensity of disaster, and the process needs to consider the human factor along with the logic.

The methodology will begin as a set of questions to be answered in the form of an outline. The outline will be provided openly at ISECOM's website, and a public call for volunteers and reviewers will be made. The outline will begin under the Open Methodology License, which protects a person's Trade Secrets (a methodology is considered a Trade Secret by law) in an open manner to foster development and use, in much the same way that the GPL protects copyright. Furthermore, the content itself will be provided under a Creative Commons copyleft license to facilitate research and open dialog. Contributions are added to the outline, pass through an editorial review board, and then go to the open document. Credit is not given per submission but for the document as a whole. In this manner, we allow researchers to provide information which may not be mainstream thought without having to fear repercussions. Where corporate reprisals are a concern, researchers may remain anonymous as long as the information is not protected by the company, since some companies require extensive paperwork for an employee to assist such projects, even off-hours.

As researchers contribute their expertise on the various questions, the methodology will grow. Verification will take place at ISECOM and with the partners of OpenTC to assure facts are correct. Further public participation will be invited as the document takes form, and the transparent process is necessary to garner general public support, if not participation. The goal is a set of tests and tasks to assure trust, not just for OpenTC but as a living standard for all trustworthiness, open and closed. Therefore, any individual or organization should be able to apply the tests and determine not only whether a system is trustworthy but also how much so. To do this, we defined the security test modules with appropriate definitions.


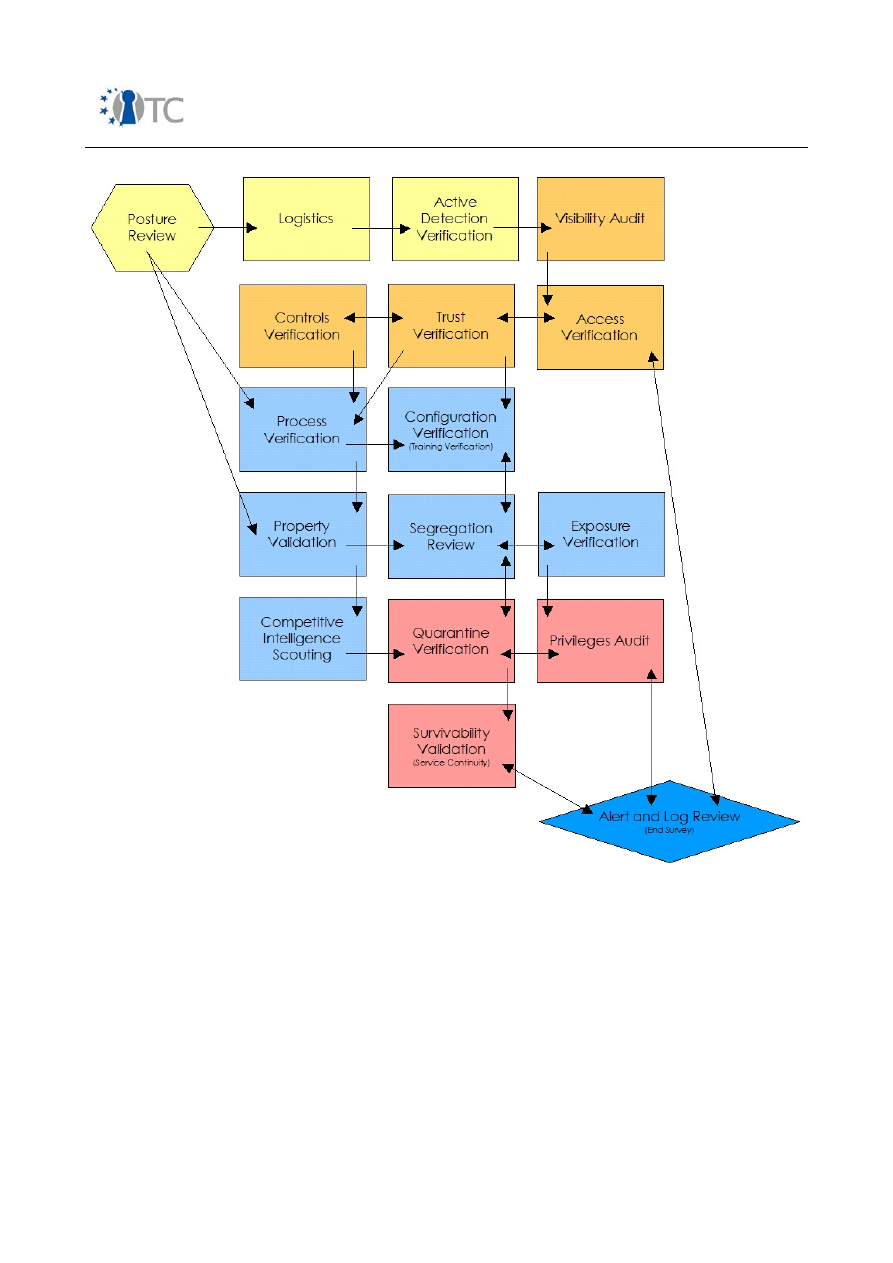

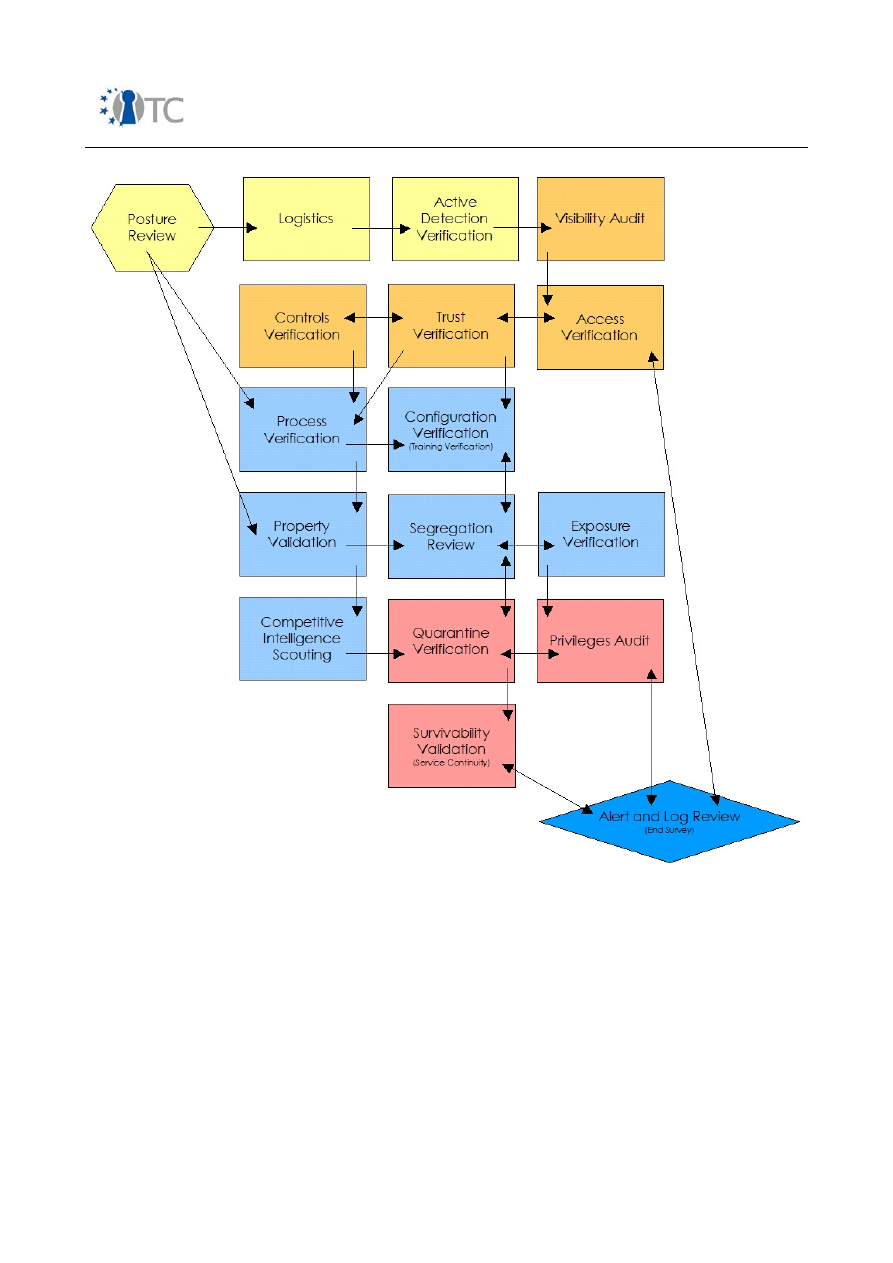

2. Figure: Test Modules

To choose the appropriate test type, it is best to first understand how the modules are designed to work. Depending on the thoroughness, business, time allotment, and requirements of the audit, the auditor may want to schedule the details of the audit by phase.

There are four phases in the execution of this methodology:

A. Regulatory Phase

B. Definitions Phase

C. Information Phase

D. Interactive Controls Test Phase
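When scheduling the audit by phase, the ordering constraints stated in the Explanation column of Table 2 (A.1 is required for the Information Phase, A.3 for Phases B and D, and Phase D is often run last) can be captured as a dependency graph. The sketch below uses Python's standard `graphlib` module (Python 3.9+). Reading these constraints as a strict phase-level ordering is an assumption, since the methodology also allows the flow to move back as well as forward.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Phase -> phases that should precede it (one interpretation of Table 2).
PHASE_PREREQUISITES: Dict[str, Set[str]] = {
    "A. Regulatory": set(),
    "B. Definitions": {"A. Regulatory"},   # A.3 is required for Phase B
    "C. Information": {"A. Regulatory"},   # A.1 is required for Phase C
    # A.3 is required for Phase D, which is often the final phase.
    "D. Interactive Controls Test": {"A. Regulatory", "B. Definitions", "C. Information"},
}


def schedule(prerequisites: Dict[str, Set[str]]) -> List[str]:
    """Return one phase order that respects every prerequisite (raises CycleError on cycles)."""
    return list(TopologicalSorter(prerequisites).static_order())


if __name__ == "__main__":
    print(schedule(PHASE_PREREQUISITES))
```

The relative order of Phases B and C is left open by these constraints, so any order the sorter returns for them is acceptable.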


Each phase contributes a different depth to the audit, but no one phase is less important than another in terms of Actual Security.

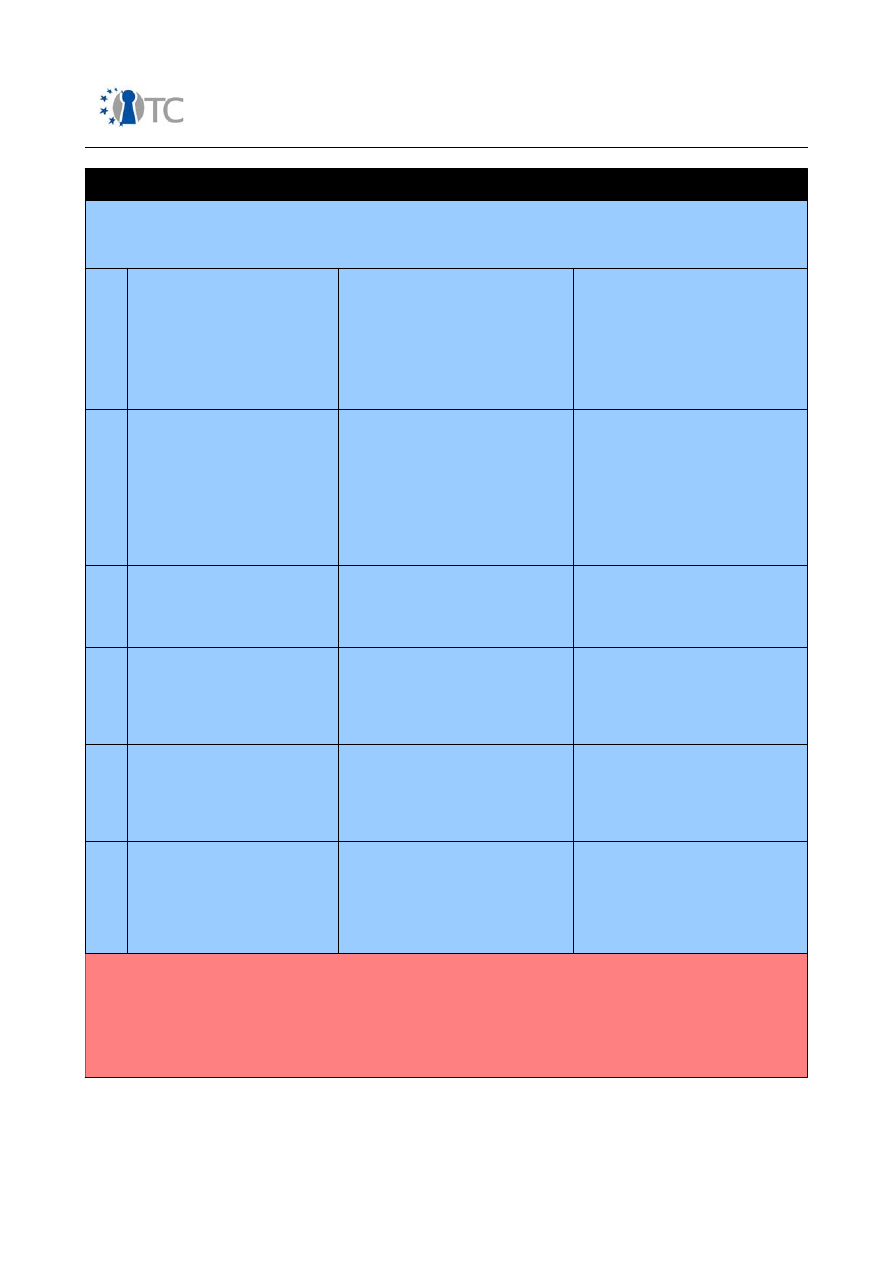

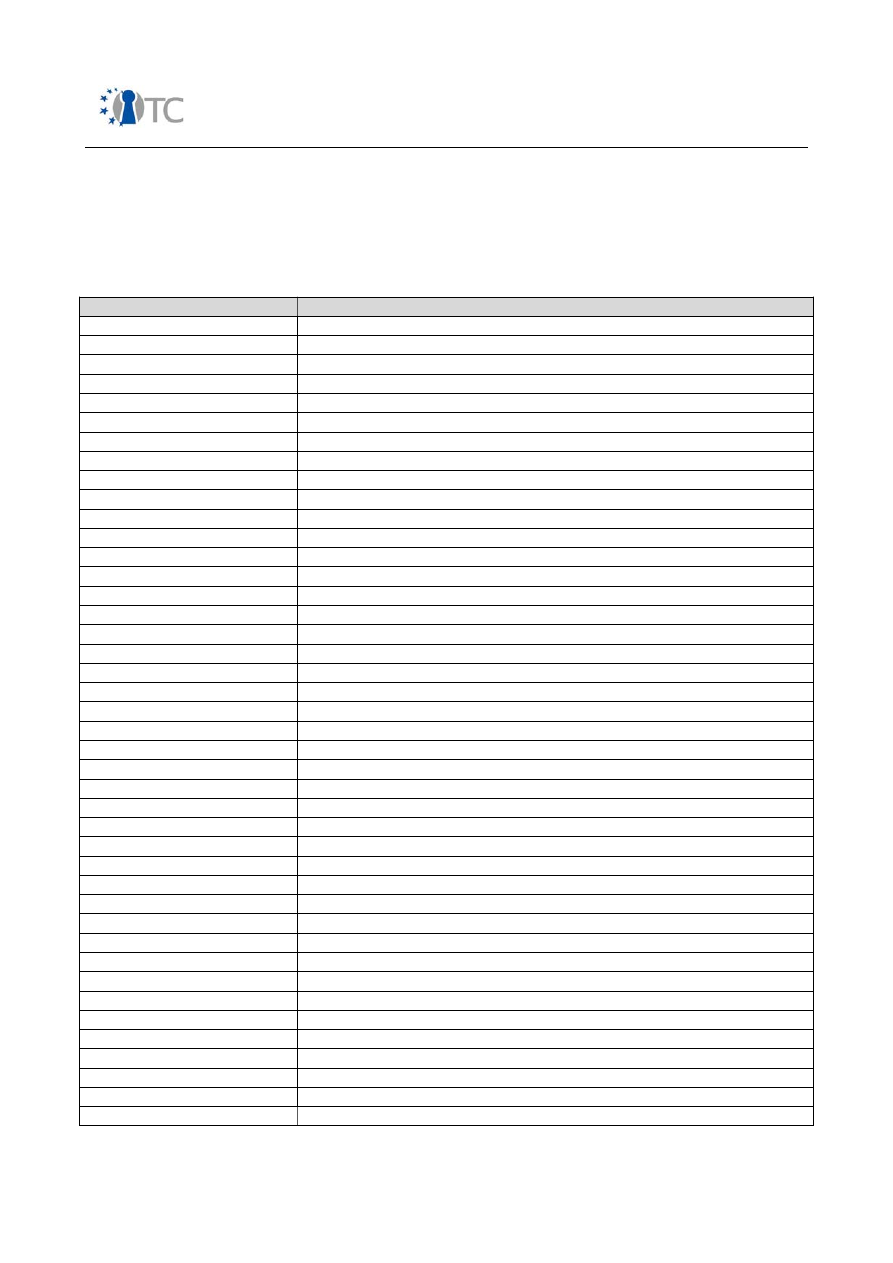

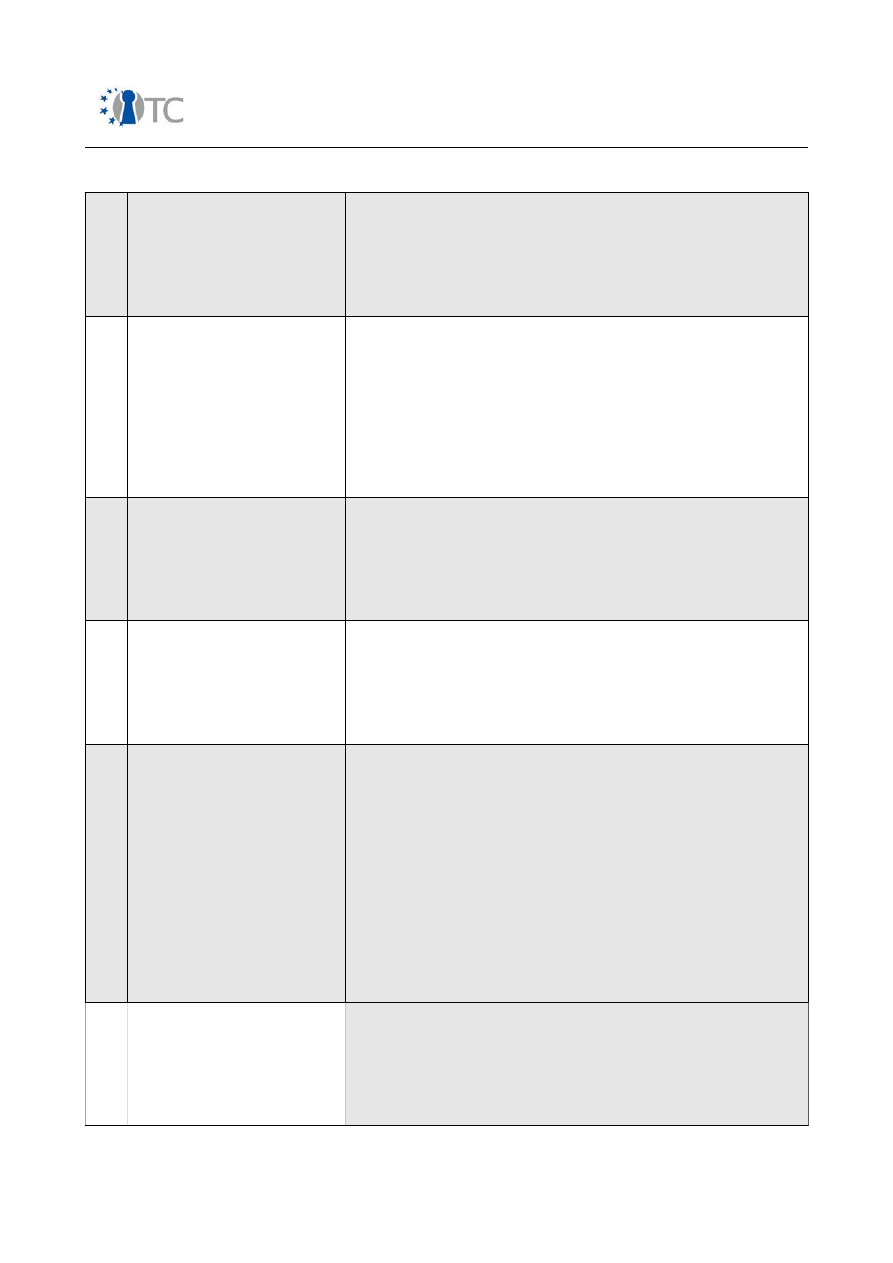

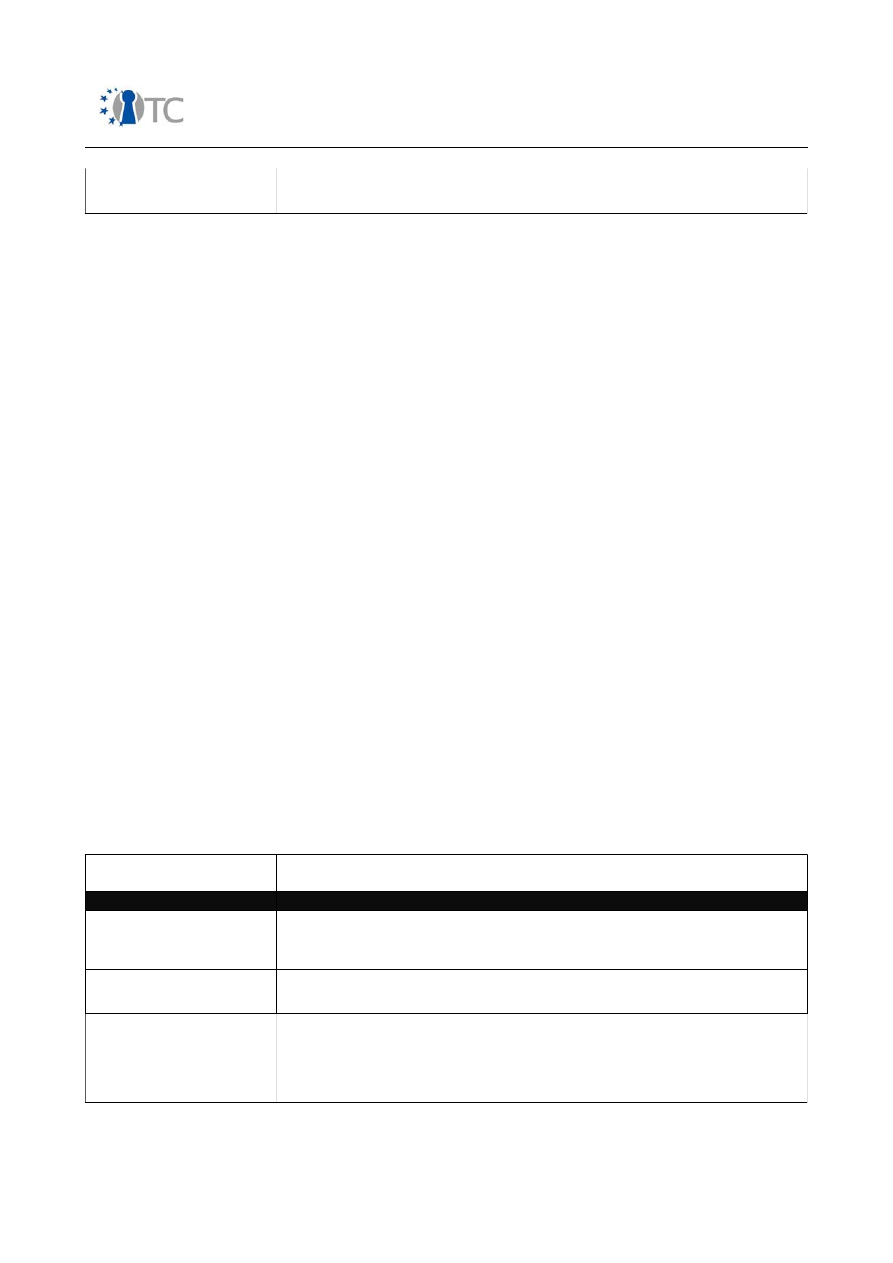

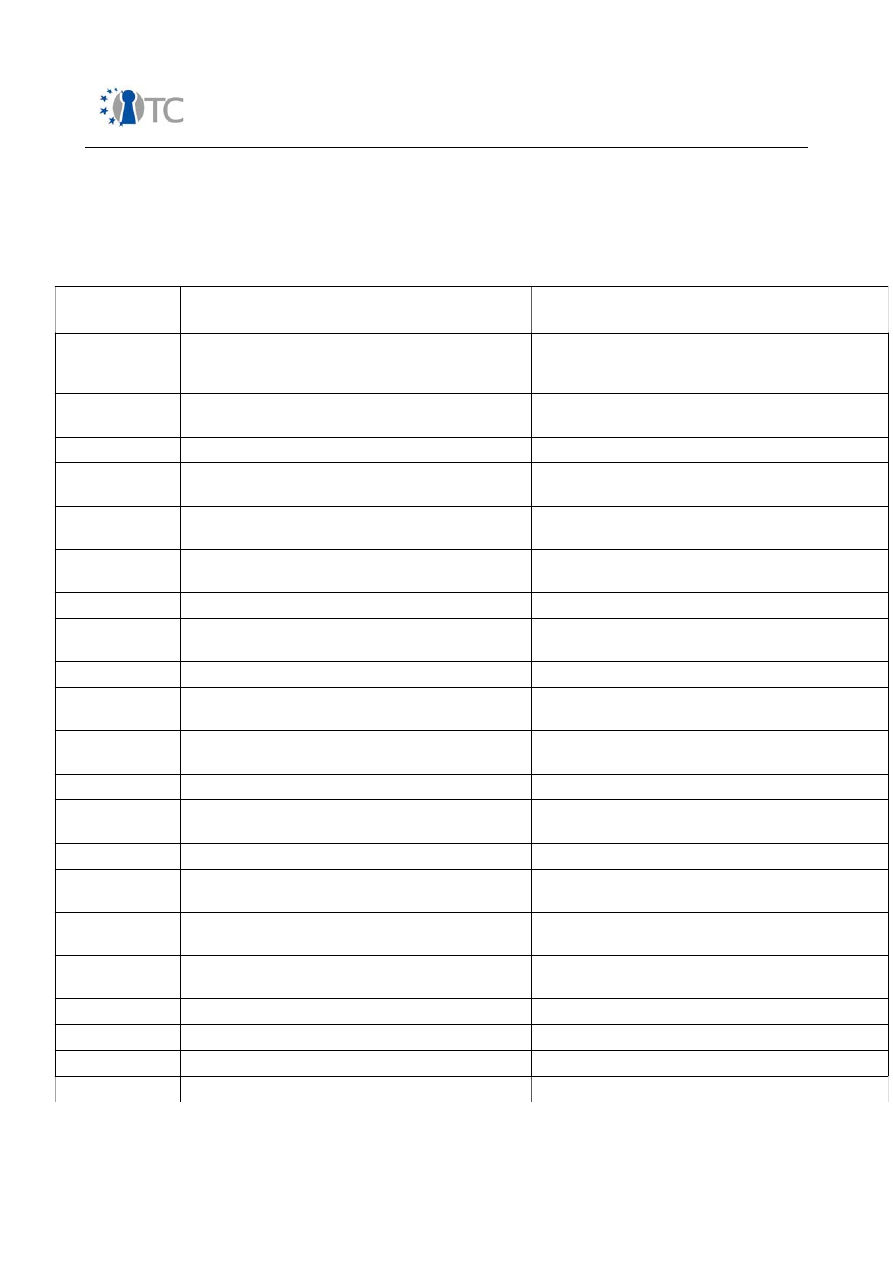

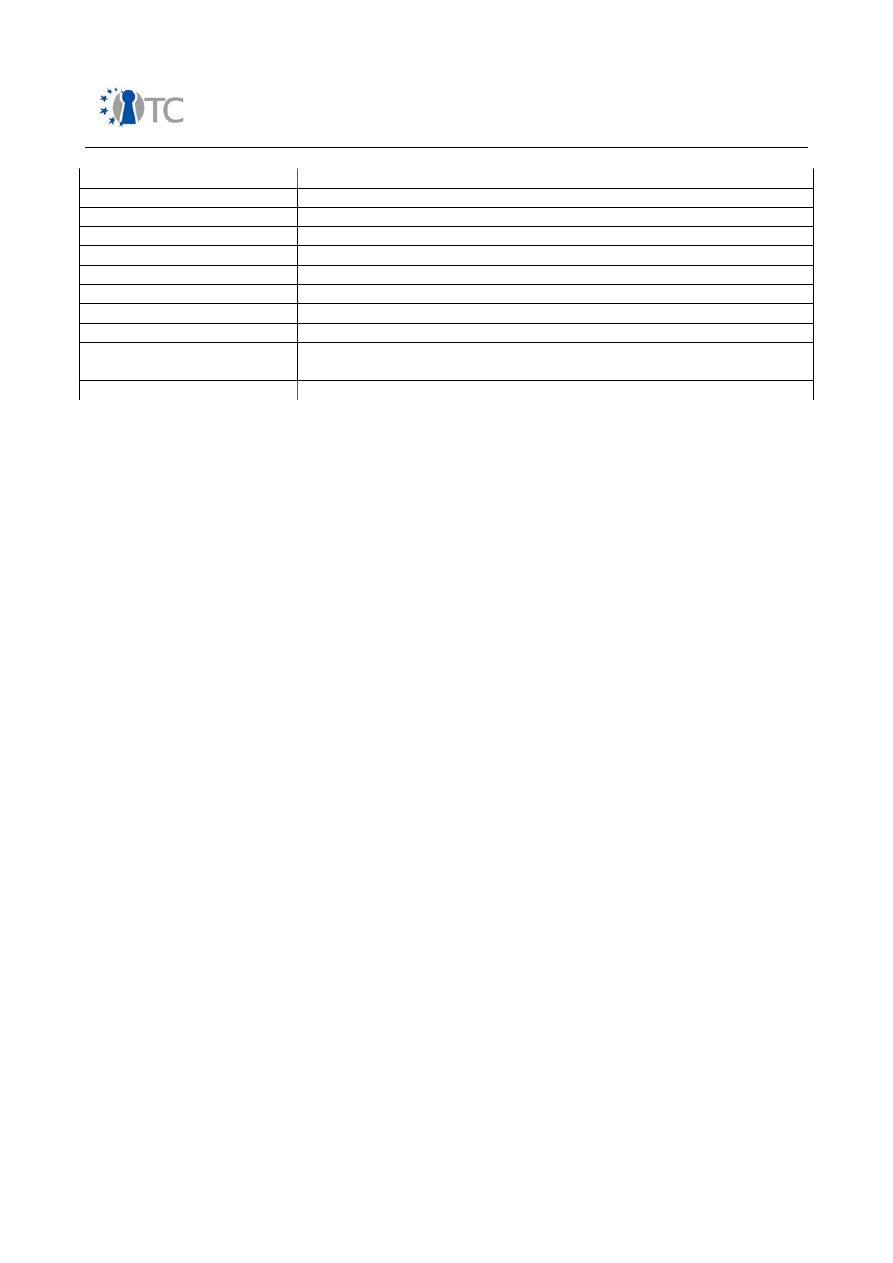

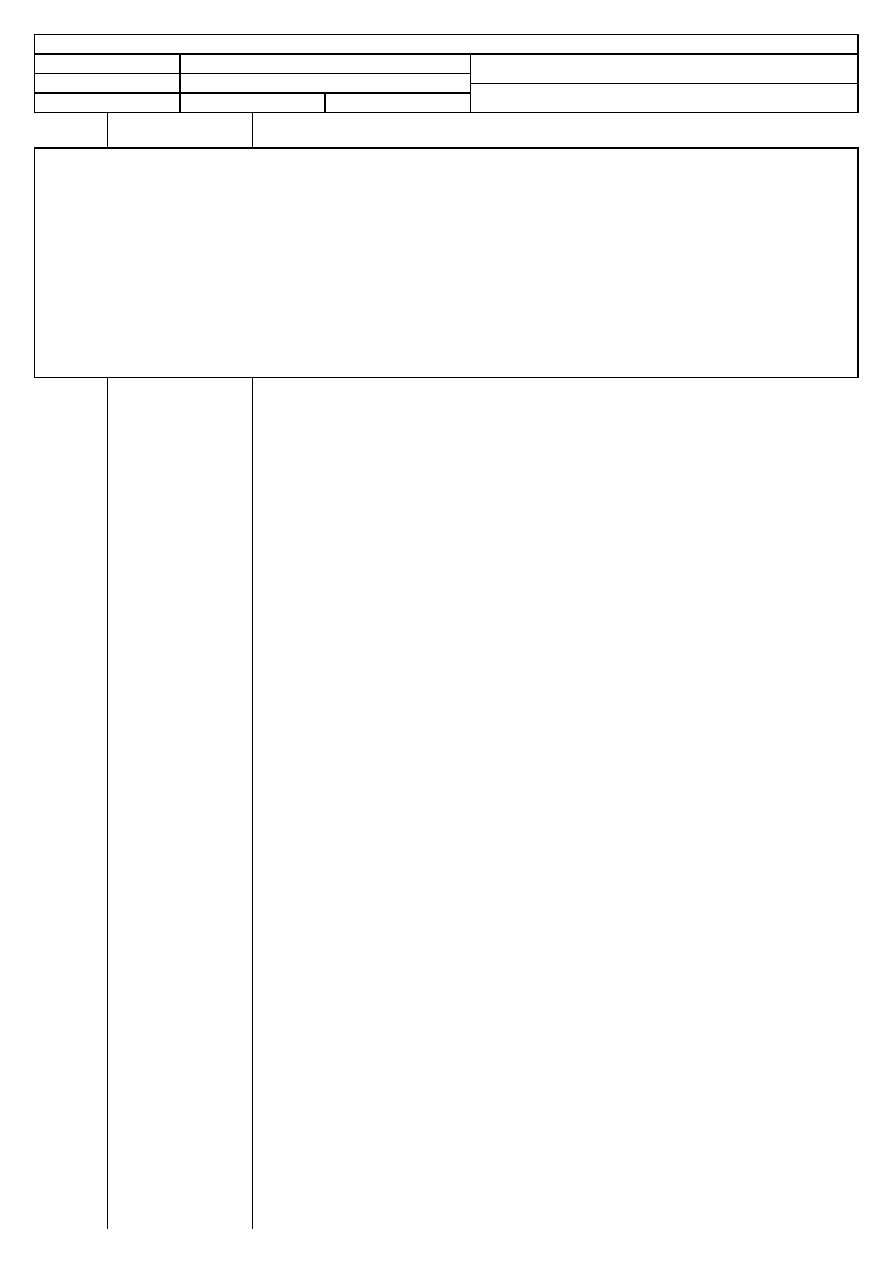

Table 2: Methodology Phases

Module

Description

Explanation

A. Regulatory Phase

Every trip begins with a direction. In the regulatory phase, the auditor begins the audit with an understanding of the audit requirements, the scope, and the constraints on auditing this scope. Often, the test type is best determined after this phase.

A.1 Posture Review

The review of the culture, rules, norms, regulations, legislation, and policies applicable to the target.

Know the scope and what tests must be done. Required if the Information Phase is to be properly conducted.

A.2 Logistics

The measurement of interaction constraints such as distance, speed, and fallibility to determine margins of accuracy within the results.

Know the limitations of the audit itself. This will minimize error and improve efficiency.

A.3 Active Detection Verification

The verification of the practice and breadth of interaction detection, response, and response predictability.

Know the restrictions imposed on interactive tests. This is required to properly conduct Phases B and D.

B. Definitions Phase

The core of the basic security test requires knowing the security presence in relation to the scope and how interactions with the targets translate into interactions with assets. This phase will define the scope.

B.4 Visibility Audit

The determination of the applicable targets to be tested within the scope. Visibility is regarded as “presence” and not limited to human sight.

Know what targets are there and how they interact with the scope, if at all. A dead or missing target is also an unresponsive target. However, an unresponsive target is not necessarily a missing target.

B.5 Controls Verification

The measurement of the use and effectiveness of the process-based (Class B) loss controls: non-repudiation, confidentiality, privacy, integrity, and alarm.

Most processes are defined in response to a necessary interaction and some remain long after that interaction stops or has changed. Knowing what process controls are in place is a type of security archeology.

B.6 Trust Verification

The determination of trust relationships from and between the targets. A trust relationship exists wherever the target accepts interaction freely and without credentials.

Trusts for new processes are often very limited, whereas older processes have an evolution that seems chaotic to the outsider. Knowing the trust relationships between targets will show the age or value of the interaction.

B.7 Access Verification

The measurement of the breadth and depth of interactive access points within the target.

The access point is the main point of any asset interaction. Verifying that an access point exists is one part of determining its purpose. Full verification requires knowing all there is to know about the access point.


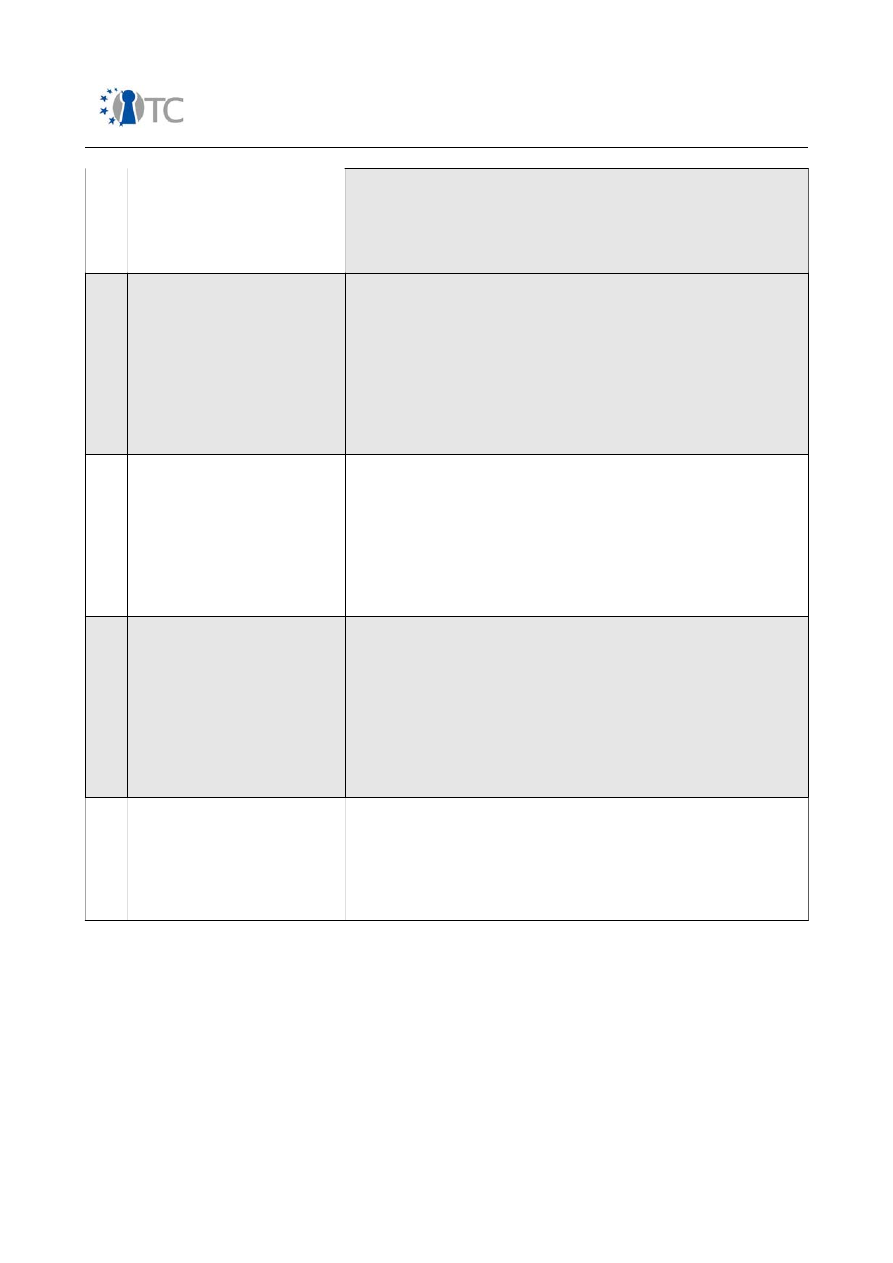

C. Information Phase

Much of security auditing is about the information that the auditor uncovers. In this phase, the various types of value and detriment of misplaced and mismanaged information as an asset are brought to light.

C.8 Process Verification

The determination of the existence and effectiveness of the record and maintenance of existing actual security levels and/or diligence defined by the posture review.

Know the controllers and their routines for the controls. Most processes will have a defined set of rules; however, actual operations reflect any efficiency, laziness, or paranoia which may redefine the rules. So it is not just that the process is there but also how it works.

C.9 Configuration Verification

The determination of the existence and effectiveness of proper security mechanisms as defined by the posture review.

Where tests on how interactions occur explain the access point, the operation of that point depends upon the rules established for it and whether those rules are applied. Many regulations require information regarding how something is planned to work, and this is not always evident in the execution of that work.

C.10

Property Validation

The measurement of the breadth and depth in the use of illegal and/or unlicensed intellectual property or applications within the target.

Know the status of property ownership rights.

C.11

Segregation Review

A determination of the levels of personally identifiable information defined by the posture review.

Know which privacy rights apply and to what extent the uncovered personally identifiable information can be classified based on these requirements.

C.12

Exposure Verification

The search for freely available information which describes indirect visibility of targets or assets within the chosen scope channel of the security presence.

The word on the street has value. Uncover information on targets and assets from public sources, including information published by the targets themselves.

C.13

Competitive Intelligence Scouting

The search for freely available information, directly or indirectly, which could harm or adversely affect the target owner through external, competitive means.

There may be more value in the information from processes and targets than in the assets they protect. Uncover information that, by itself or in aggregate, can influence competitive business decisions.

D. Interactive Controls Test Phase

These tests are focused on penetration and disruption. This is often the final phase of a security test, both to ensure that disruptions do not affect the responses of less invasive tests and because the information needed for these tests may not be known until the other phases have been carried out. The final module, D.17 Alert and Log Review, is required to verify that prior test assumptions are true. Security tests that do not include this phase may still need to run an end review from the vector of the targets and assets to clarify disruptions that did not respond during standard echo tests.


D.14

Quarantine Verification

The determination and measurement of the effective use of quarantine for all access to and within the target.

Determine the effectiveness of authentication and subjugation controls in terms of blacklist and whitelist quarantines.

D.15

Privileges Audit

The mapping and measurement of the impact of misuse of credentials and privileges, or of the unauthorized escalation of privilege to a higher privilege level.

Determine the effectiveness of authorization on authentication, indemnification, and subjugation controls in terms of depth and roles.

D.16

Survivability Validation

The determination and measurement of the resistance of the target to excessive or adverse changes.

Determine the effectiveness of continuity and resistance controls through the verification of denial of service and denial of interactivity.

D.17

Alert and Log Review

A review of audit activities performed, with the true depth of those activities as recorded by the target or by a third party.

Know which parts of the audit left a usable and reliable trail.


4.4 Main results achieved

Since true trusted computing does not yet exist, we cannot say how a trusted computer operates. We cannot say what is required to operate as a trusted computer. We cannot say how trust is measured without bias. We can only approach this theoretically. However, theory does not test or measure well except in theory. Yet this is what we intend to standardize.

Security Metrics

The completion of a thorough security audit has the advantage of providing accurate metrics on the state of security. The less thorough the audit, the less accurate the overall metric. Likewise, less skilled auditors and less experienced analysts will adversely affect the quality of the metric. Therefore, a successful security metric requires an audit that tests (measures) from the appropriate vectors while accounting for inaccuracies and misrepresentations in the test data and for the skills and experience of the security professionals performing the audit. Faults in these requirements will result in lower-quality measurements and false security determinations.

This methodology refers to metrics as Risk Assessment Values (RAVs). While not a risk assessment in itself, an audit with this methodology and the RAVs will provide the factual basis for a more accurate and more complete risk assessment.

Applying Risk Assessment Values

This methodology defines and quantifies three areas within the scope which together create the big picture, called Actual Security because of its relevance to the current, real state of security. The big-picture approach is to calculate each of the areas, Operations, Controls, and Limitations, separately as a hash. The three hashes are then combined to form a fourth hash, Actual Security, which provides the big-picture overview and a final metric for comparisons. Since RAVs are a minimalization of the relevant security information, they are infinitely scalable. This allows comparable values between two or more scopes regardless of the target, vector, test type, or index, where the index is the method by which individual targets are counted. This means that, with RAVs, the security of a single target can be realistically compared with that of 10,000 targets.

One important rule in applying these metrics is that Actual Security can only be calculated per scope. A change in channel, vector, or index is a new scope and requires a new calculation of Actual Security. However, multiple scopes can be calculated together to create one Actual Security that represents a fuller vision of operational security.

For example, suppose an audit is made of internet-facing servers both from the internet side and from within the perimeter network in which they reside. That is two vectors. The first vector is indexed by IP address and contains 50 targets. The second vector is indexed by MAC address and contains 100 targets. Once each audit is completed and the metrics are counted for each of the three areas, they can be combined into one calculation of 150 targets and the sums of each area. This gives a final Actual Security metric which is much more complete for that perimeter network than either would be alone.
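The two-vector example above can be sketched as a small aggregation step. This is a minimal sketch under stated assumptions: `ScopeTally` and `combine_scopes` are hypothetical names, and the per-area sums for Operations, Controls, and Limitations are illustrative values, not audit results; only the target counts (50 and 100) come from the text. The sketch covers only the summing of targets and area totals; how those sums are turned into the Actual Security hash is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopeTally:
    """Per-scope counts from one audit (hypothetical record).

    A scope is fixed by its channel, vector, and index. Only vector and
    index are stored here because the example varies only those two.
    """
    vector: str
    index: str
    targets: int
    operations: int
    controls: int
    limitations: int


def combine_scopes(tallies):
    """Sum the target counts and each area's total across scopes.

    The result is the input to a combined Actual Security calculation,
    which this sketch does not implement.
    """
    return {
        "targets": sum(t.targets for t in tallies),
        "operations": sum(t.operations for t in tallies),
        "controls": sum(t.controls for t in tallies),
        "limitations": sum(t.limitations for t in tallies),
    }


# Target counts from the text; area sums are made-up placeholders.
external = ScopeTally("internet", "IP address", targets=50,
                      operations=120, controls=30, limitations=12)
internal = ScopeTally("perimeter network", "MAC address", targets=100,
                      operations=260, controls=75, limitations=20)

combined = combine_scopes([external, internal])
print(combined)
# → {'targets': 150, 'operations': 380, 'controls': 105, 'limitations': 32}
```

Keeping each scope as its own record mirrors the rule that Actual Security is calculated per scope: the scopes are only merged deliberately, after each audit has been counted.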

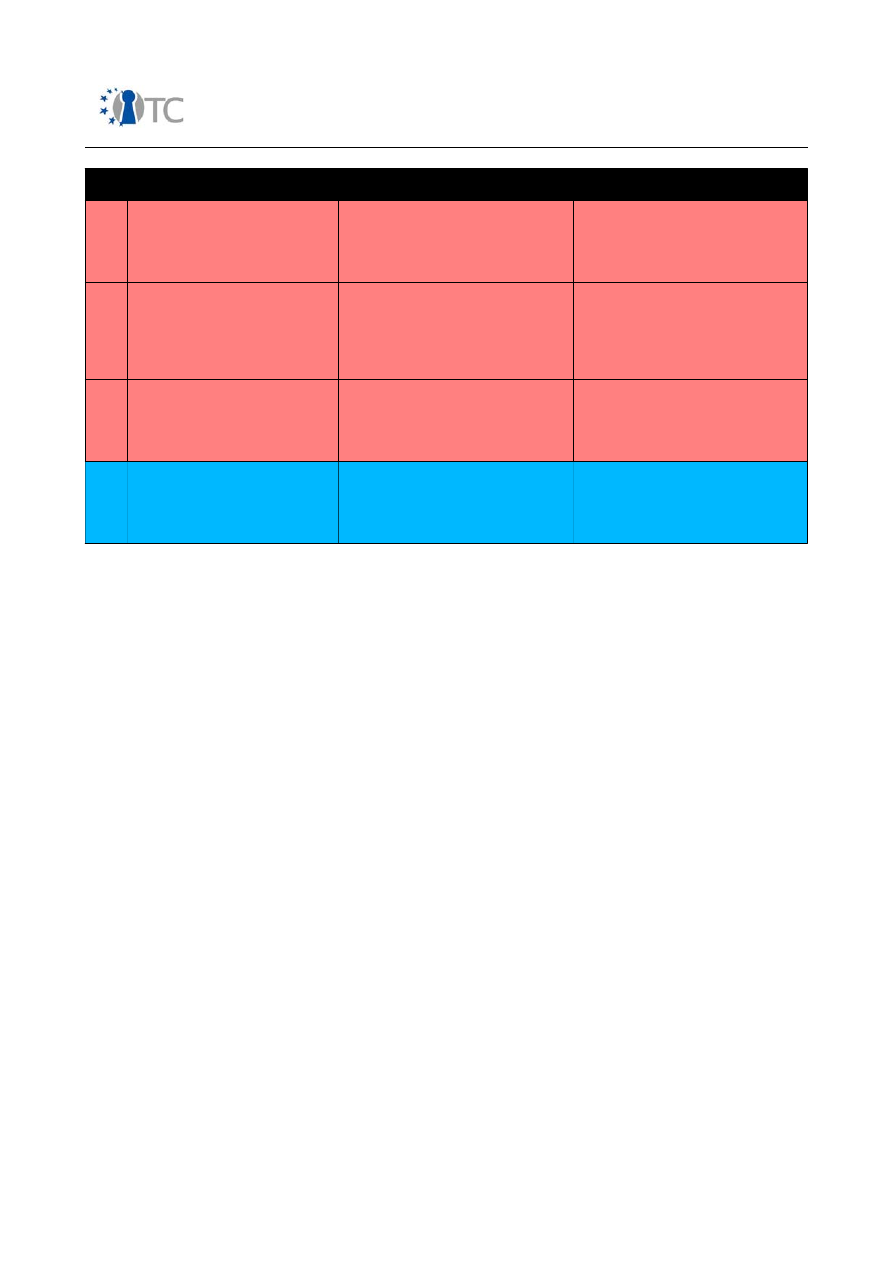

Table 3: Actual Security

| Value Type | Description |
|---|---|
| Operations | The lack of security one must have to be interactive, useful, public, open, or available. For example, limiting how a person buys goods or services from a store over a particular channel, such as one door for going in and out, is a method of security within the store's operations. Operations are defined by visibility, trusts, and accesses. |
| Controls | Impact and loss reduction controls: the assurance that the physical and information assets, as well as the channels themselves, are protected from various types of invalid interactions as defined by the channel. For example, insuring the store against fire is a control that does not prevent the inventory from being damaged or stolen but will pay out equivalent value for the loss. There are 10 controls. The first five are Class A controls, which control interactions. The five Class B controls govern procedures. |
| Limitations | The current state of perceived and known limits for channels, operations, and controls as verified within the audit. For example, an old, rusted, and crumbling lock used to secure the gates of the store at closing time has an imposed security limitation: it provides only a fraction of the protection strength necessary to delay or withstand an attack. Determining through visual verification that it is old and weak is referred to as an identified limitation. Determining that it is old and weak by breaking it with 100 kg of force, when a successful deterrent requires 1000 kg of force, shows a verified limitation. |

Operational Security

Measuring the security of operations (OPSEC) requires measuring visibility, trust, and access from the scope. The number of targets in the scope that can be determined to exist by direct interaction, indirect interaction, or passive emanations is its visibility. As visibility is determined, its value represents the number of targets in the scope. Trust is any unauthenticated interaction with any of the targets. Access is the number of interaction points with each target. The sum of all three is the OPSEC Delta, which is the total number of openings within operations and represents the total decrease in operational security within the target.

Table 4: Calculating OPSEC

| OPSEC Category | Description |
|---|---|
| Visibility | The number of targets in the scope. Count each target by index only once and maintain the index consistently for all targets. It is generally unrealistic to have more visible targets than there are targets in the defined scope; however, it may be possible due to vector bleeds, where a target which is normally not visible from one vector is visible due to a misconfiguration or anomaly. |
| Trust | Count each target in the scope that allows unauthenticated interaction, once per target. |
| Access | This differs from visibility, where one determines the number of existing targets. Here the auditor must count each access per unique interaction point per unique probe. |
| OPSEC Delta | Visibility + Trust + Access. The negative change in OPSEC protection. |
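The counting rules in Table 4 and the OPSEC Delta sum can be expressed as sizes of de-duplicated sets. This is a minimal sketch, assuming a hypothetical `Observation` record per probe result (target index value, interaction point, probe name, and whether credentials were required); the addresses, ports, and probe names are illustrative. Treating an interaction point as a (target, point) pair, and excluding targets seen only passively from Trust and Access, is this sketch's reading of the table rather than a rule stated by the methodology.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Observation:
    """One auditor observation (hypothetical record format).

    target: the target's index value, e.g. an IP or MAC address.
    point:  the interaction point reached, e.g. a port, or None when the
            target was only detected, e.g. through passive emanations.
    probe:  the name of the probe that produced the observation.
    authenticated: True if the interaction required credentials.
    """
    target: str
    point: Optional[str]
    probe: str
    authenticated: bool = True


def opsec_delta(observations: Iterable[Observation]) -> dict:
    obs = list(observations)
    # Visibility: each target counted once by its index value.
    visibility = len({o.target for o in obs})
    # Trust: each target counted once if it accepted any unauthenticated interaction.
    trust = len({o.target for o in obs
                 if o.point is not None and not o.authenticated})
    # Access: each unique interaction point per unique probe.
    access = len({(o.target, o.point, o.probe) for o in obs
                  if o.point is not None})
    return {"visibility": visibility, "trust": trust, "access": access,
            "opsec_delta": visibility + trust + access}


observations = [
    Observation("10.0.0.1", "tcp/22", "probe-1", authenticated=True),
    Observation("10.0.0.1", "tcp/80", "probe-1", authenticated=False),
    Observation("10.0.0.1", "tcp/80", "probe-1", authenticated=False),  # duplicate, counted once
    Observation("10.0.0.1", "tcp/80", "probe-2", authenticated=False),
    Observation("10.0.0.2", None, "passive"),  # seen, but no interaction point
]

result = opsec_delta(observations)
print(result)
# → {'visibility': 2, 'trust': 1, 'access': 3, 'opsec_delta': 6}
```

Using sets makes the "count only once" rules explicit: repeating the same probe against the same point does not inflate Access, and a target exposing several unauthenticated points still adds one to Trust.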

Controls

Controls are the 10 loss protection categories, grouped into two classes, Class A (interactive)