D05.5 Design and Concept of a

Trusted Virtual Datacenter

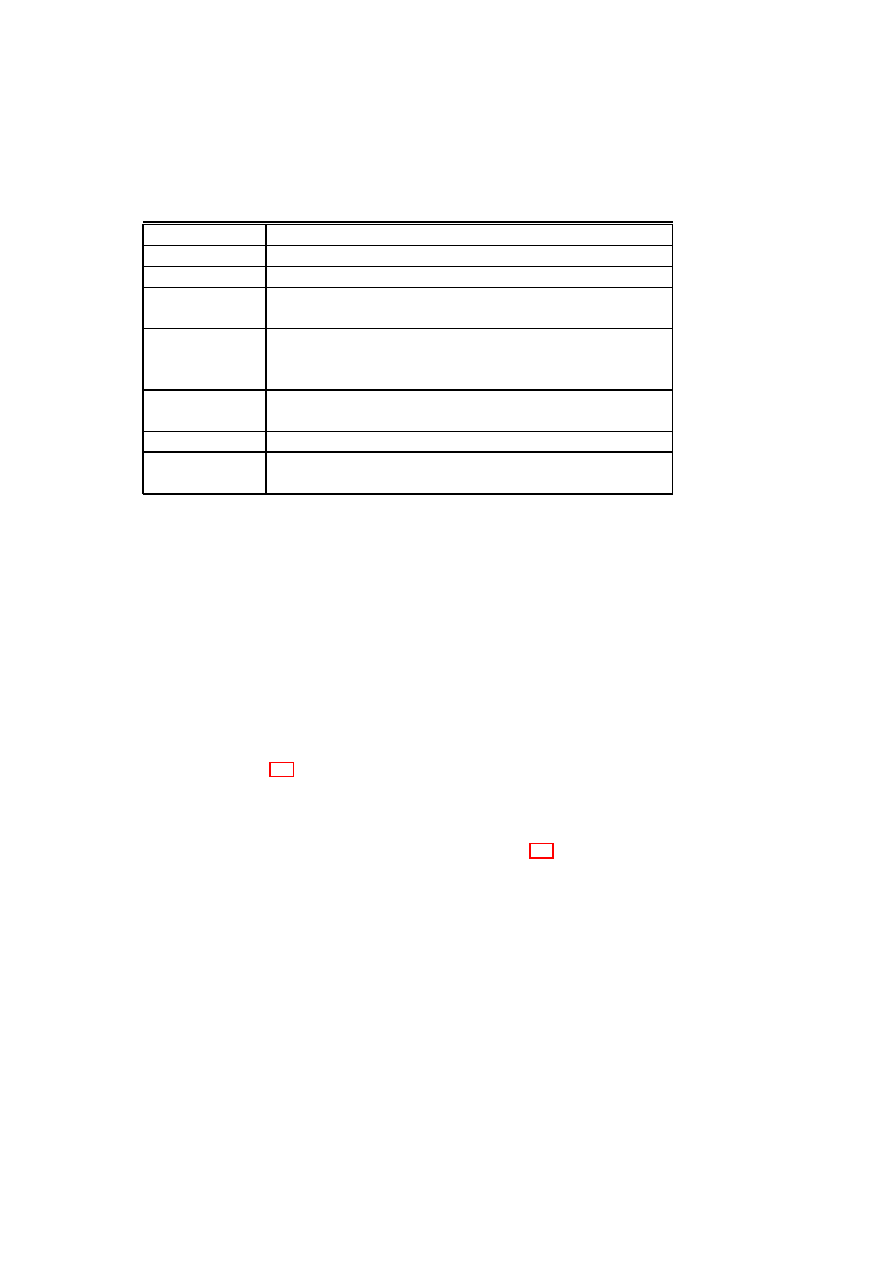

Project number

IST-027635

Project acronym

Open_TC

Project title

Open Trusted Computing

Deliverable type

Report

Deliverable reference number

IST-027635/D05.5/FINAL

Deliverable title

Design and Concept of a Trusted Virtual

Datacenter

WP contributing to the deliverable

WP05

Due date

Oct 2008 - M36

Actual submission date

November 21

st

, 2008

Responsible Organisation

IBM

Authors

Contributors listed alphabetically by

organisation:

CUCL (Theodore Hong, Eric John, Derek

Murray); HP (Serdar Cabuk, David Plaquin);

IBM (Bernhard Jansen, HariGovind V.

Ramasamy, Matthias Schunter); RUB (Yacine

Gasmi, Ahmad-Reza Sadeghi, Patrick Stewin,

Martin Unger); POL (Gianluca Ramunno,

Davide Vernizzi)

Abstract

This report describes the key concepts of the

OpenTC Secure Virtual Datacenter.

Keywords

Virtual Datacenter, Proof-of-Concept

Dissemination level

Public

Revision

FINAL

Instrument

IP

Start date of the

project

1

st

November 2005

Thematic Priority

IST

Duration

42 months

A

BSTRACT

This report describes the key concepts of the OpenTC Secure Virtual Datacenter.

It is structured into two parts.

Part I surveys the key concepts underlying the 2009 proof of concept “Trusted

Virtual Datacenter” that demonstrates how to securely virtualize a datacenter while

providing verifiable security.

Part II describes selected components and future directions in more detail.

A

CKNOWLEDGMENTS

The following people were the main contributors to this report (alphabetically

by organization): Theodore Hong, Eric John, Derek Murray (CUCL); Serdar

Cabuk, David Plaquin (HP); Bernhard Jansen, HariGovind V. Ramasamy, Matthias

Schunter (IBM); Yacine Gasmi (RUB), Ahmad-Reza Sadeghi (RUB), Patrick

Stewin (RUB), Martin Unger (RUB); Gianluca Ramunno (Polito), Davide Vernizzi

(Polito). We would like to thank our reviewer Peter Lipp from IAIK Graz for sub-

stantial feedback.

Furthermore, we would like to thank the other members of the OpenTC project

for helpful discussions and valuable contributions to the research that is docu-

mented in this report.We would like to thank in particular Cataldo Basile from

Politecnico di Torino, Italy, for valuable input on the policy framework.

This work has been partially funded by the European Commission as part of

the OpenTC project [Ope08a] (ref. nr. 027635). It is the work of the authors alone

and may not reflect the opinion of the entire project.

2

4

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

III

Conclusion

86

Bibliography

89

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

Part I

Main Concepts of the OpenTC

Trusted Virtual Datacenter

5

Chapter 1

Introduction and Outline

1.1

Introduction

Hardware virtualization is enjoying a resurgence of interest fueled in part by its

cost-saving potential. By allowing multiple virtual machines to be hosted on a

single physical server, virtualization helps improve server utilization, reduce man-

agement and power costs, and control the problem of server sprawl.

A prominent example in this context is data centers.

The infrastructure

provider, who owns, runs, and manages the data center, can transfer the cost sav-

ings to its customers or outsourcing companies, whose virtual infrastructures are

hosted on the data center’s physical resources. A large number of the companies

that outsource their operations are small and medium businesses or SMBs, which

cannot afford the costs of a dedicated data center in which all the data center’s

resources are used to host a single company’s IT infrastructure. Hence, the IT in-

frastructure belonging to multiple SMBs may be hosted inside the same data center

facility. Today, even in such “shared” data centers, each run on distinct physical

resources and there is no resource sharing among various customers. In this so-

called physical cages model, the customers are physically isolated from each other

in the same data center.

Limited trust in the security of virtual datacenters is one major reason for cus-

tomers not sharing physical resources. Since management is usually performed

manually, administrative errors are commonplace. While this may lead to down-

times in virtual datacenters used by a single customer, it can lead to information

leakages to competitors if the datacenter is shared. Furthermore, multiple organi-

zations will only allow sharing of physical resources if they can trust that security

incidents cannot spread across the isolation boundary separating two customers.

Security Objectives

Our main security objective is to provide isolation among

different domains that is comparable

1

with the isolation obtained by providing one

infrastructure for each customer. In particular, we require a security architecture

1

Note that unlike physical isolation, we do not solve the problem of covert channels.

6

CHAPTER 1. INTRODUCTION AND OUTLINE

7

that protects those system components that provide the required isolation or allow

to verifiably reason about their trustworthiness of and also of any peer endpoint

(local or remote) with a domain, i.e., whether they conforms to the underlying

security policy.

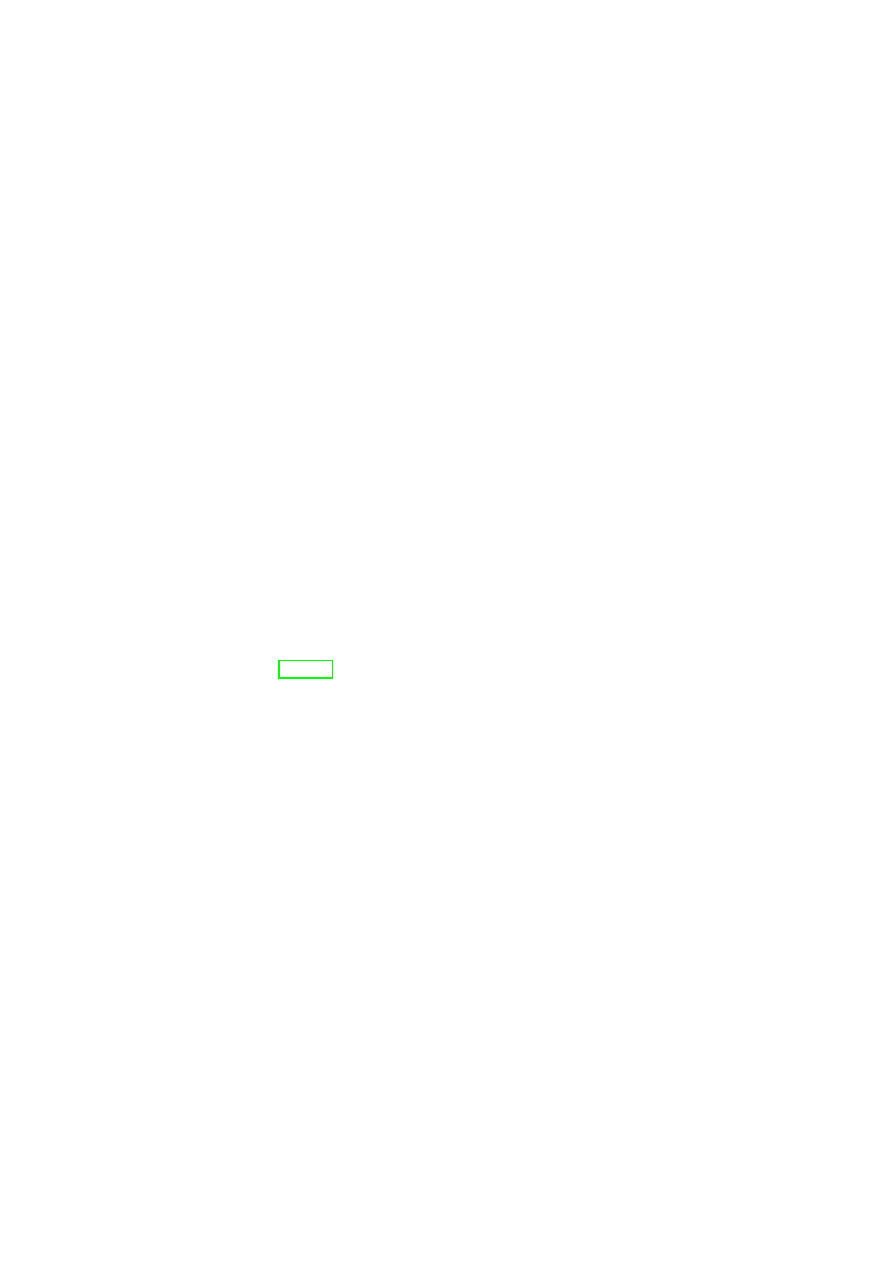

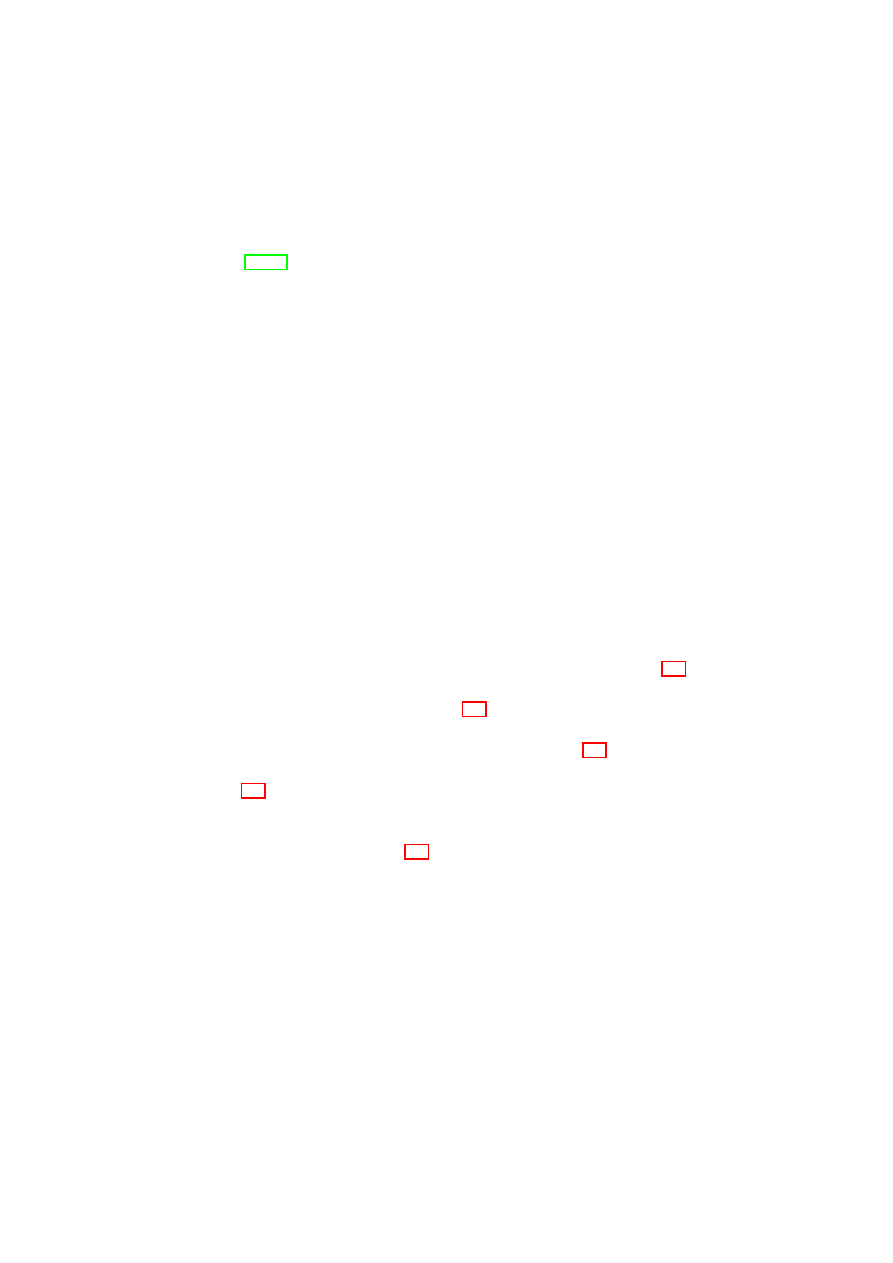

We achieve this by grouping VMs dispersed across multiple physical resources

into a virtual zone in which customer-specified security requirements are automat-

ically enforced. Even if VMs are migrated (say, for load-balancing purposes) the

logical topology reflected by the virtual domain should remain unchanged. We

deploy Trusted Computing (TC) functionality to determine the trustworthiness (as-

sure the integrity) of the policy enforcement components.

Such a model would provide better flexibility, adaptability, cost savings than

today’s physical cages model while still providing the main security guarantees

required for applications such as datacenters.

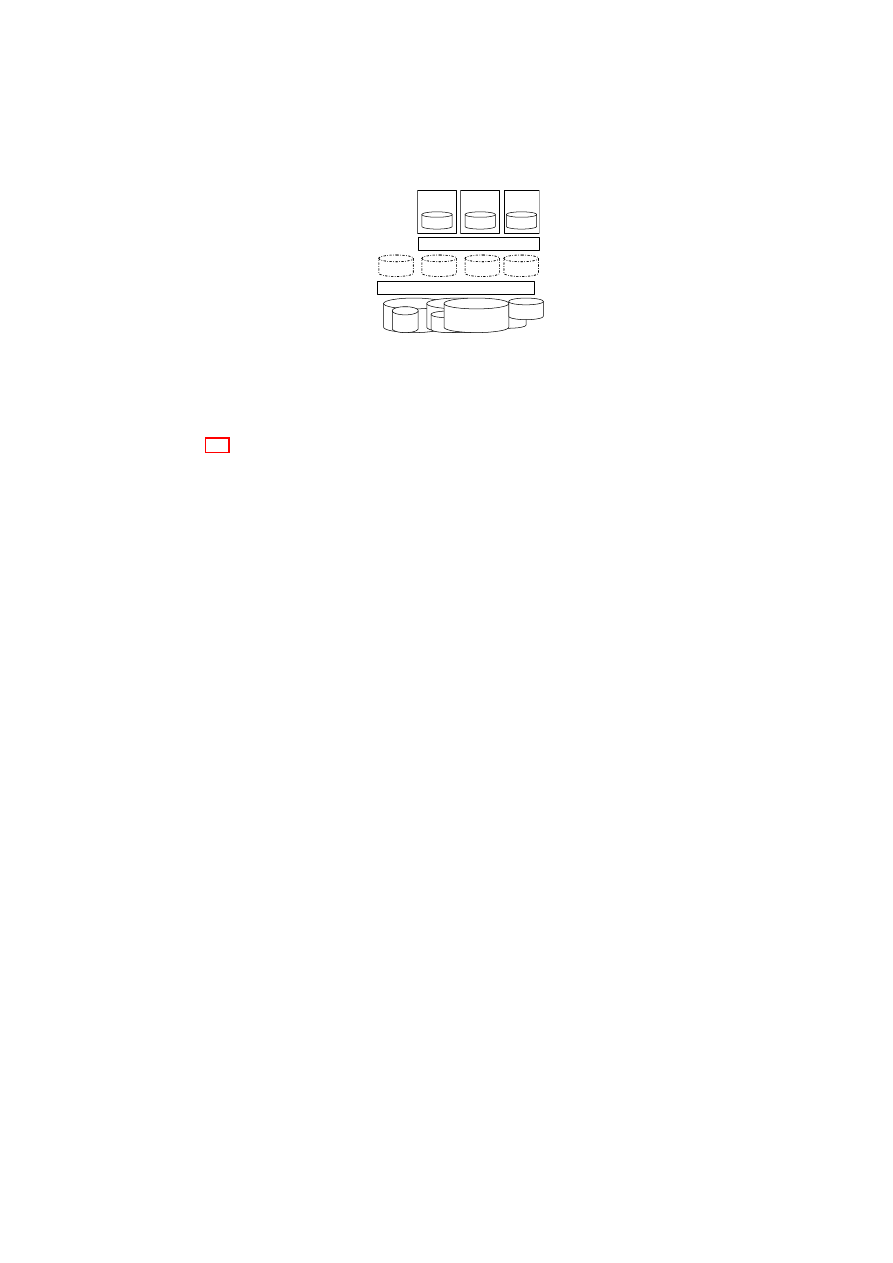

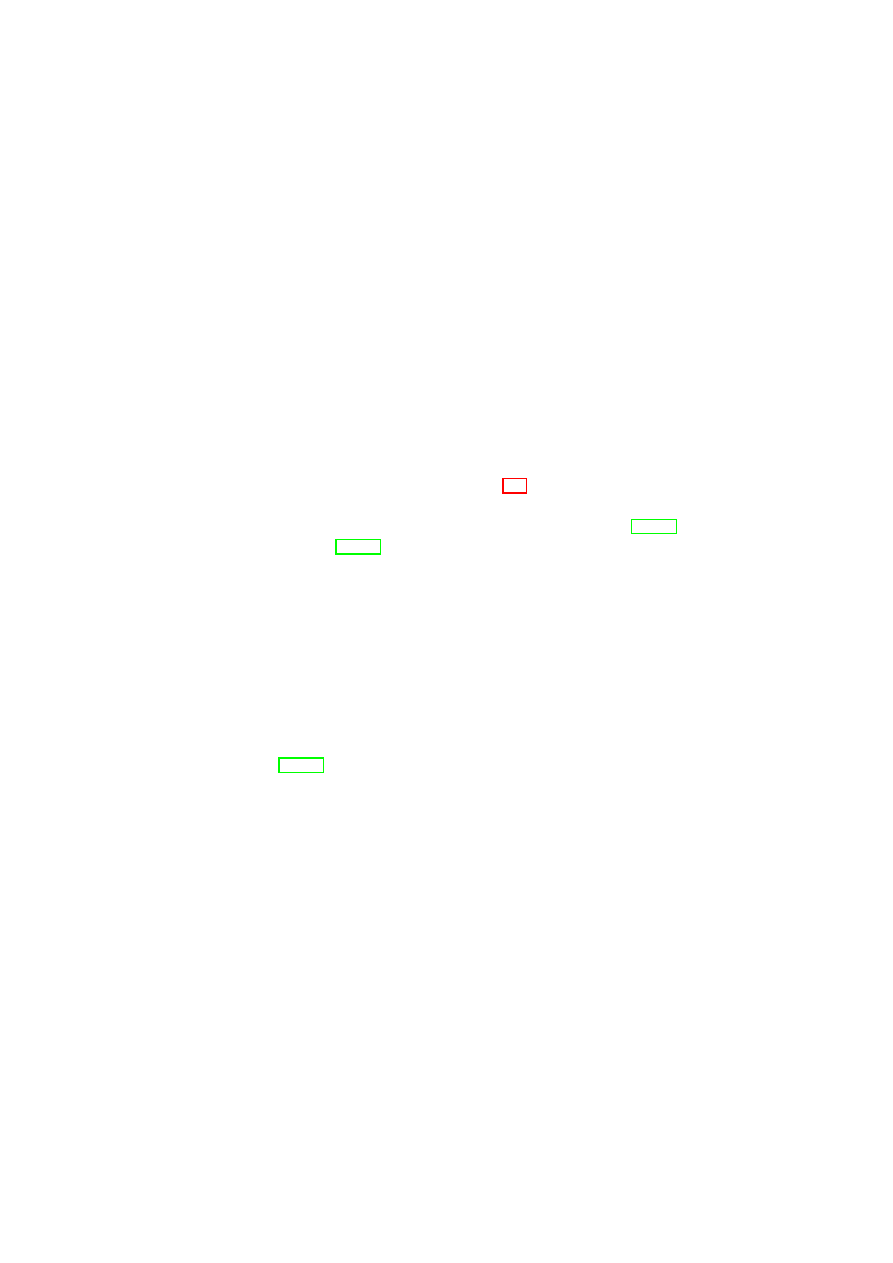

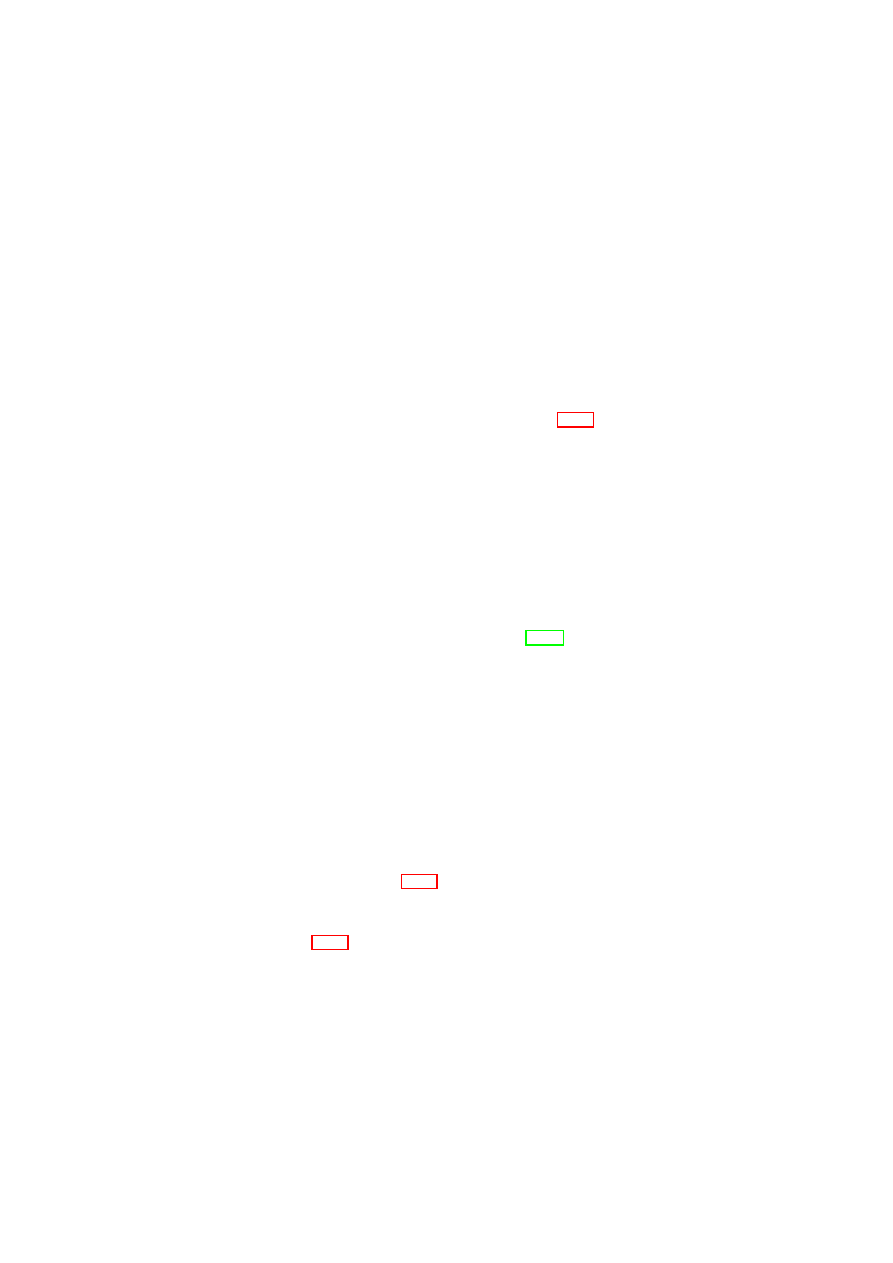

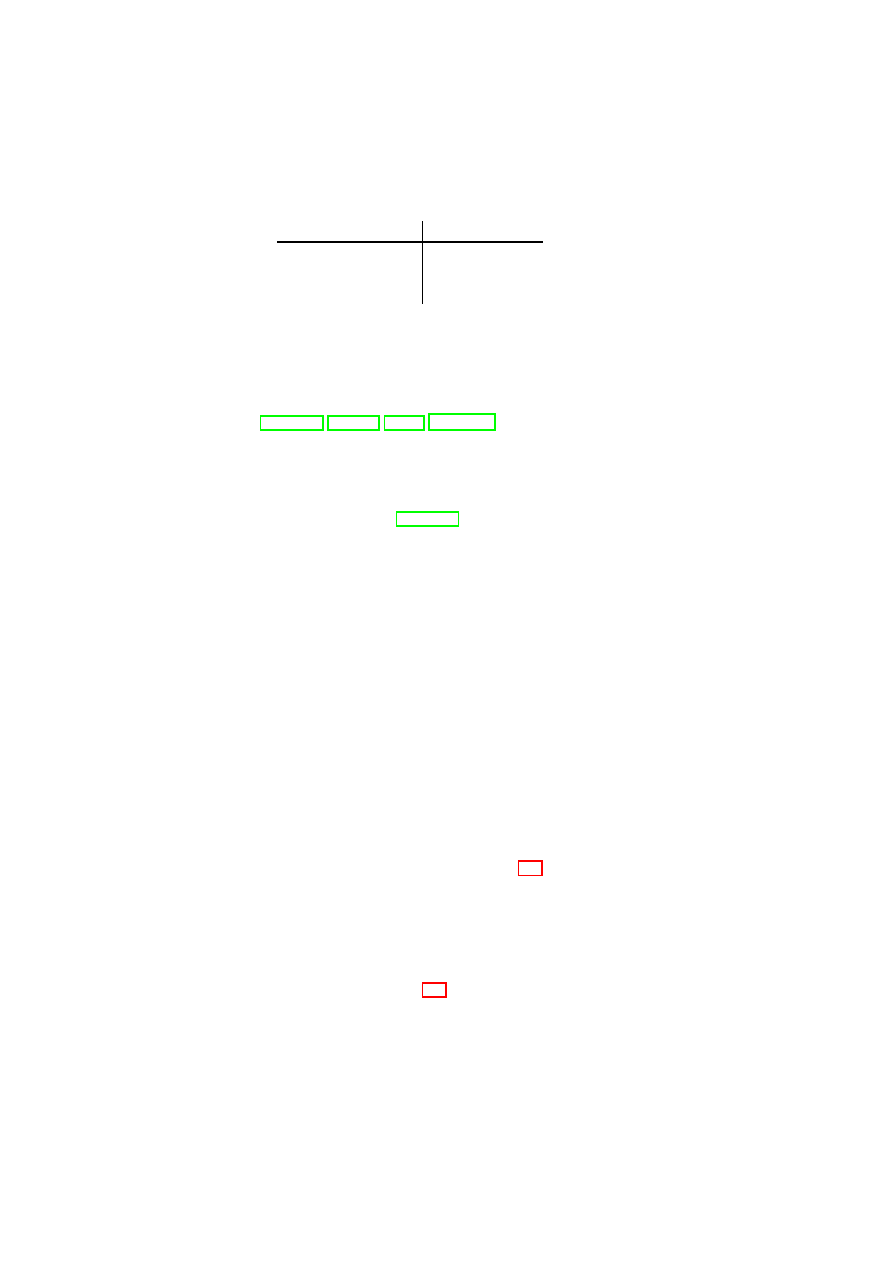

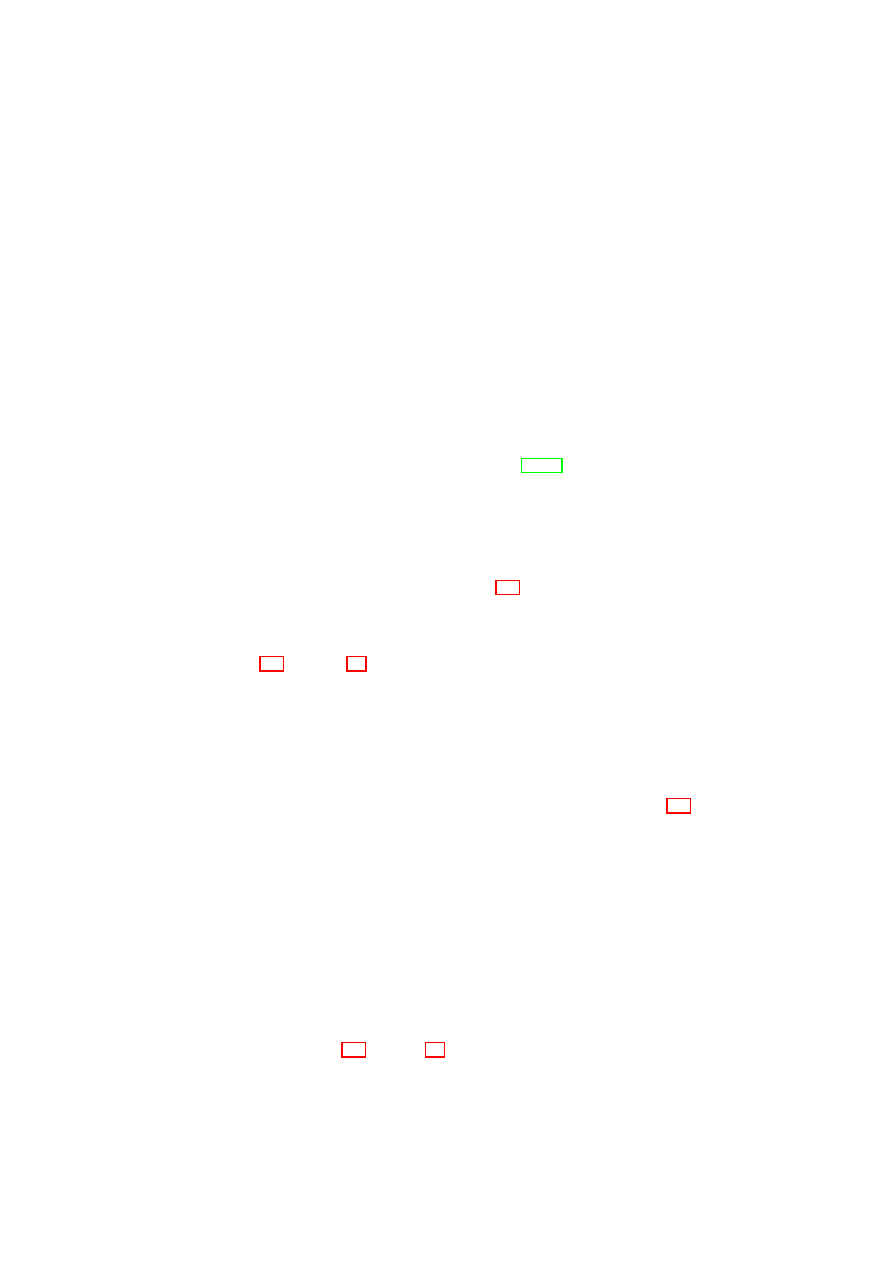

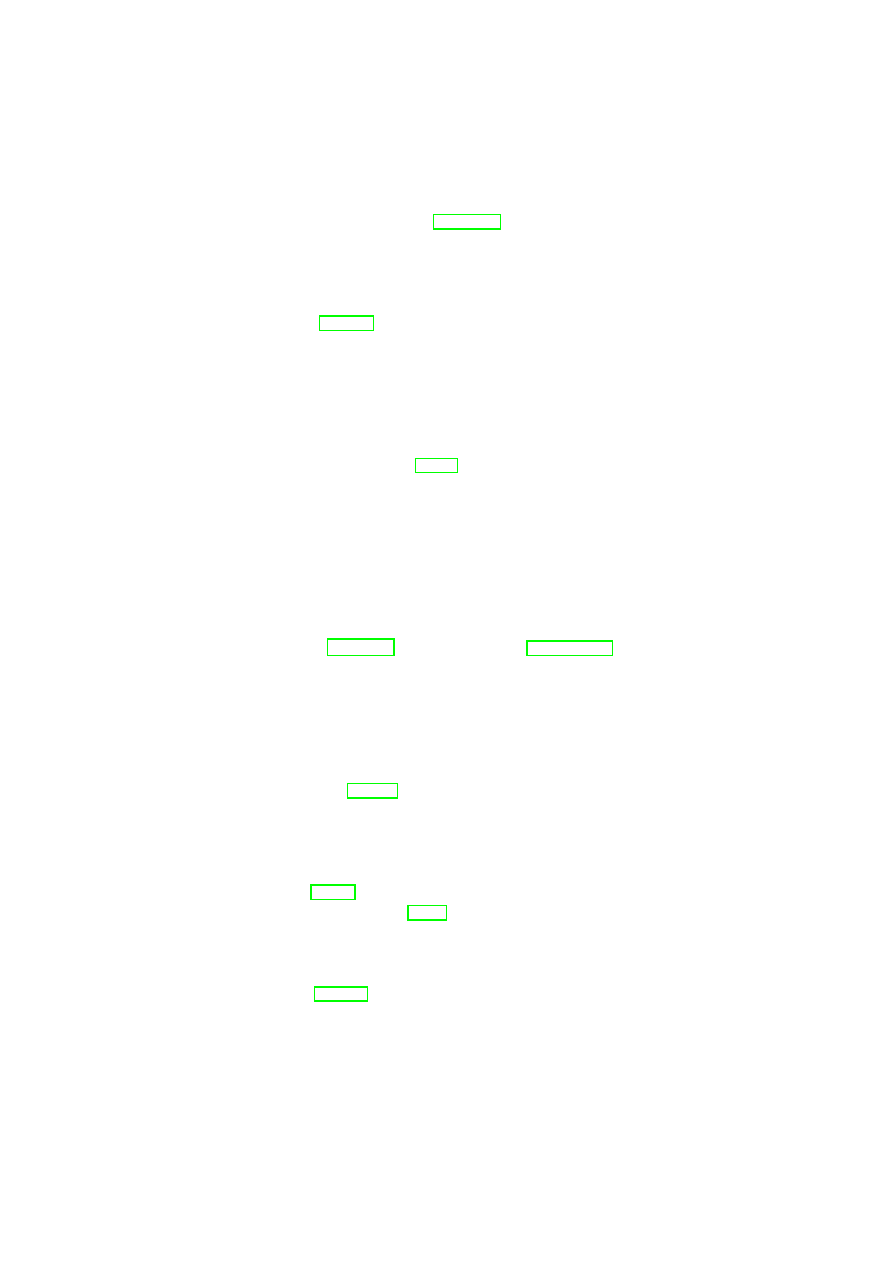

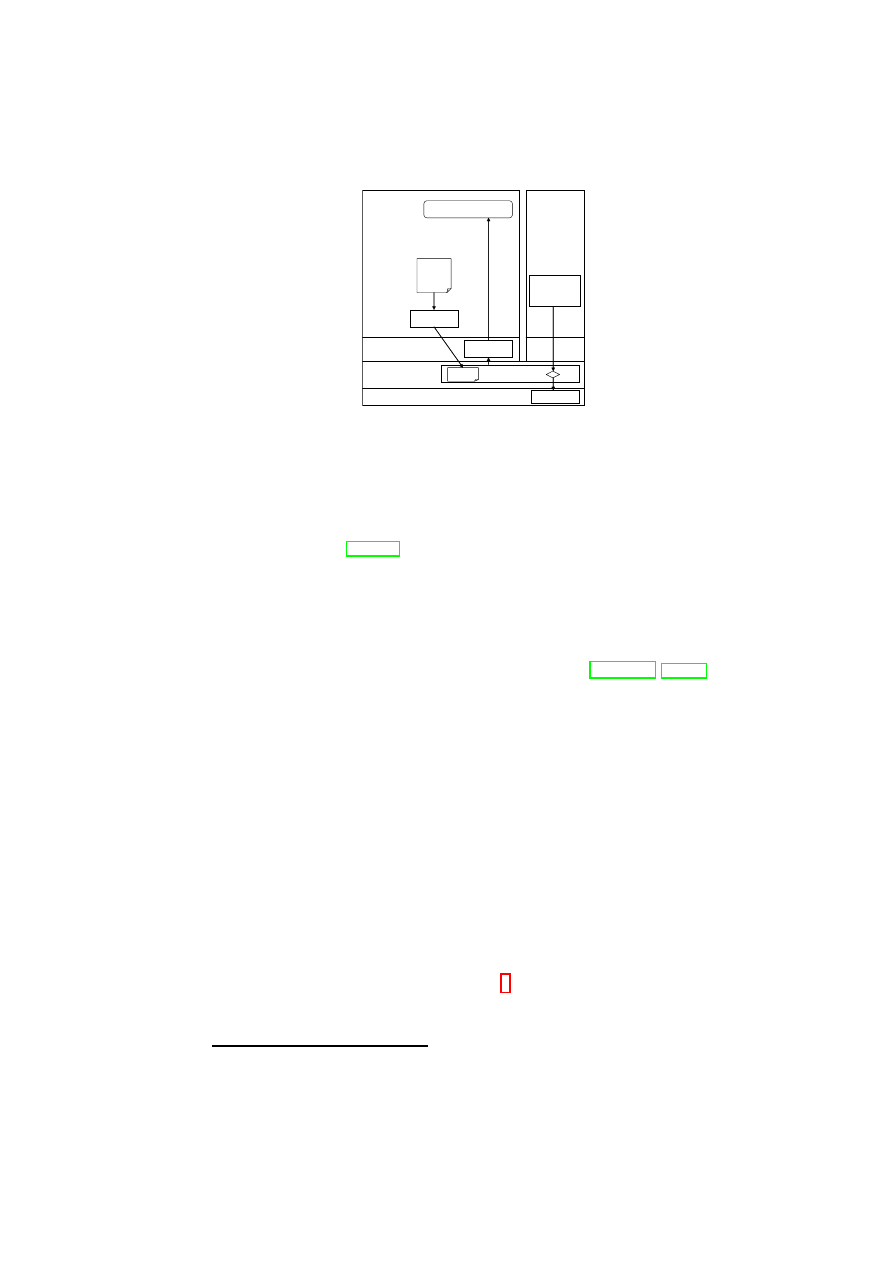

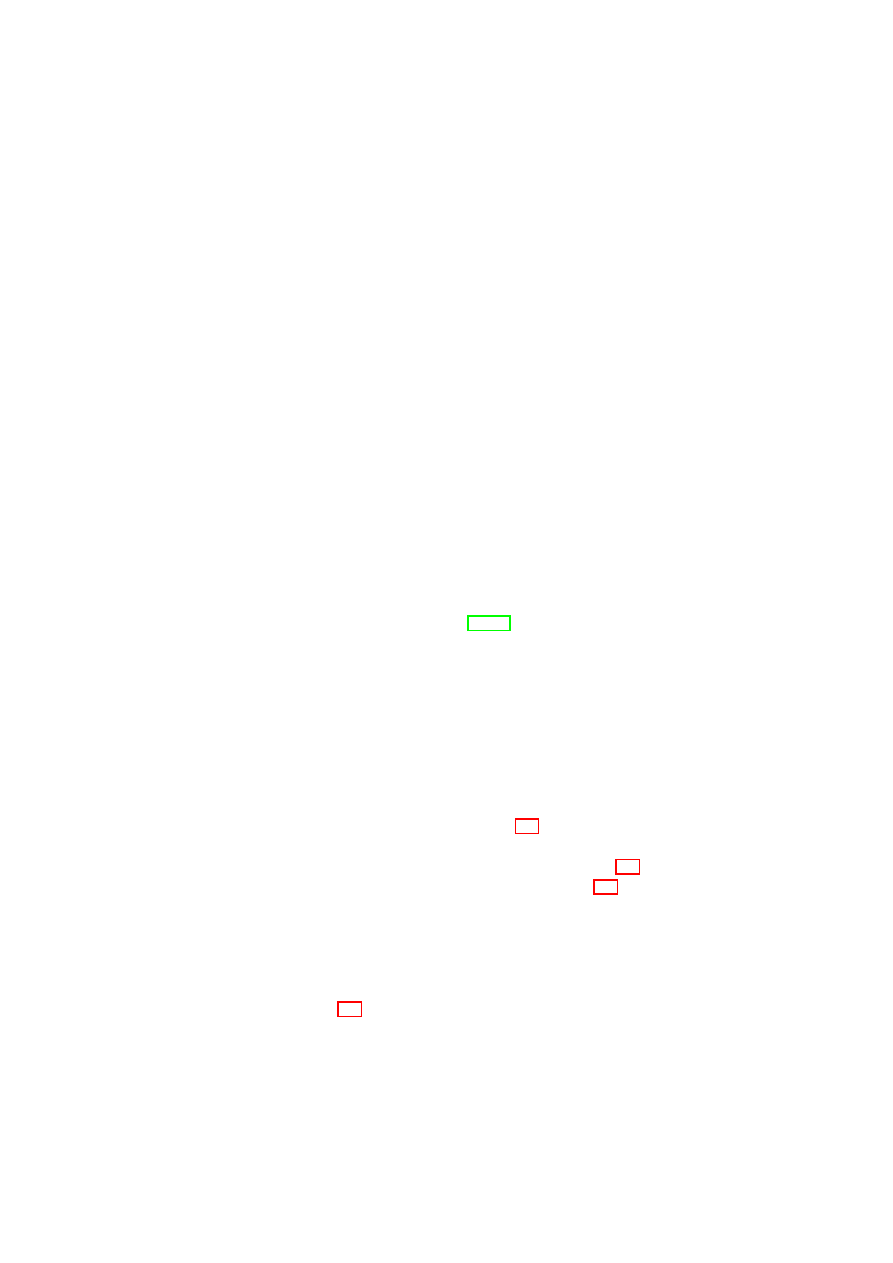

Hardware Platform

Hypervisor

Security Services

VM

A1

VM

A2

VM

A3

VM

A4

TVD1

Master

TVD1

Master

TVD1

Master

Proxy1

…

Proxy2

Hardware Platform

Hypervisor

Security Services

VM

B1

VM

B2

VM

B3

VM

B4

Proxy1

…

Proxy2

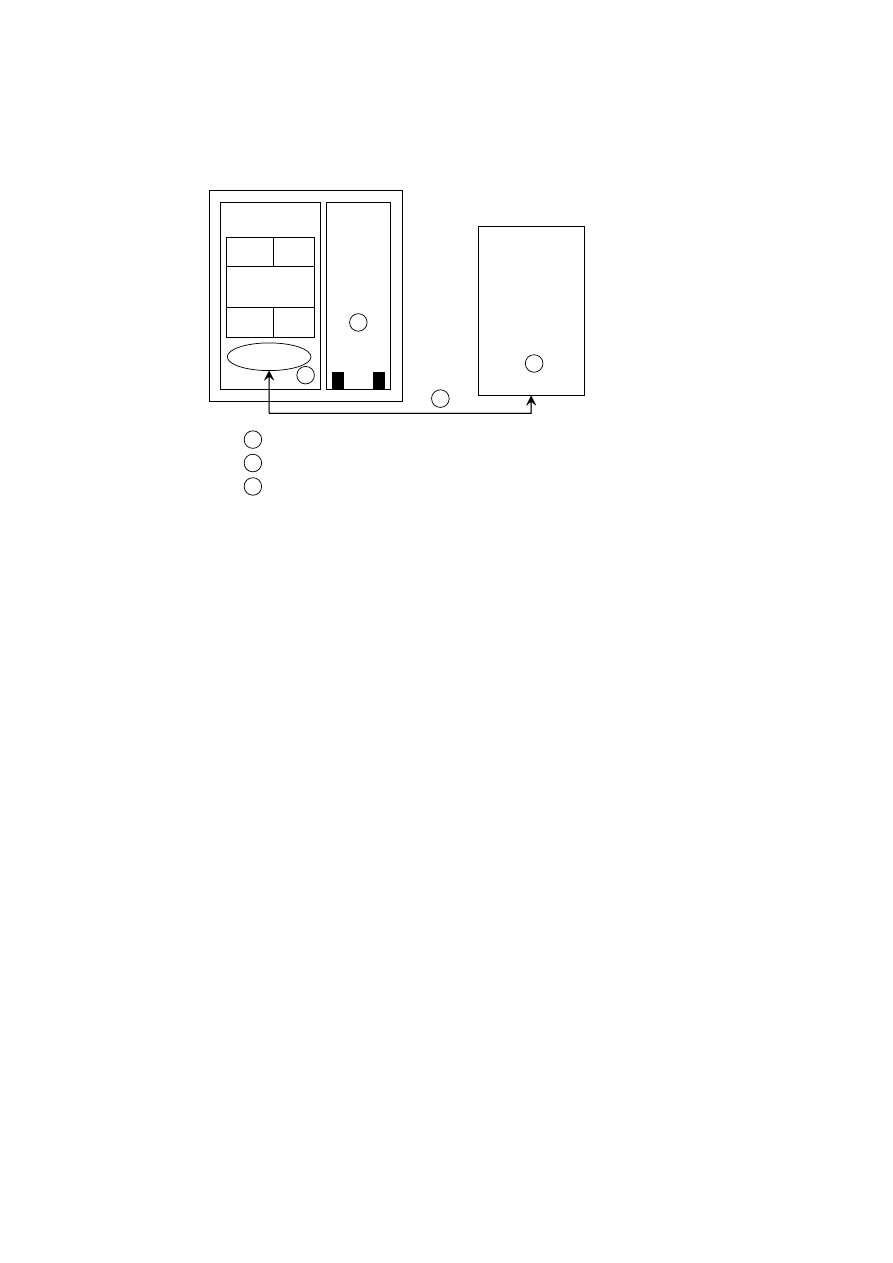

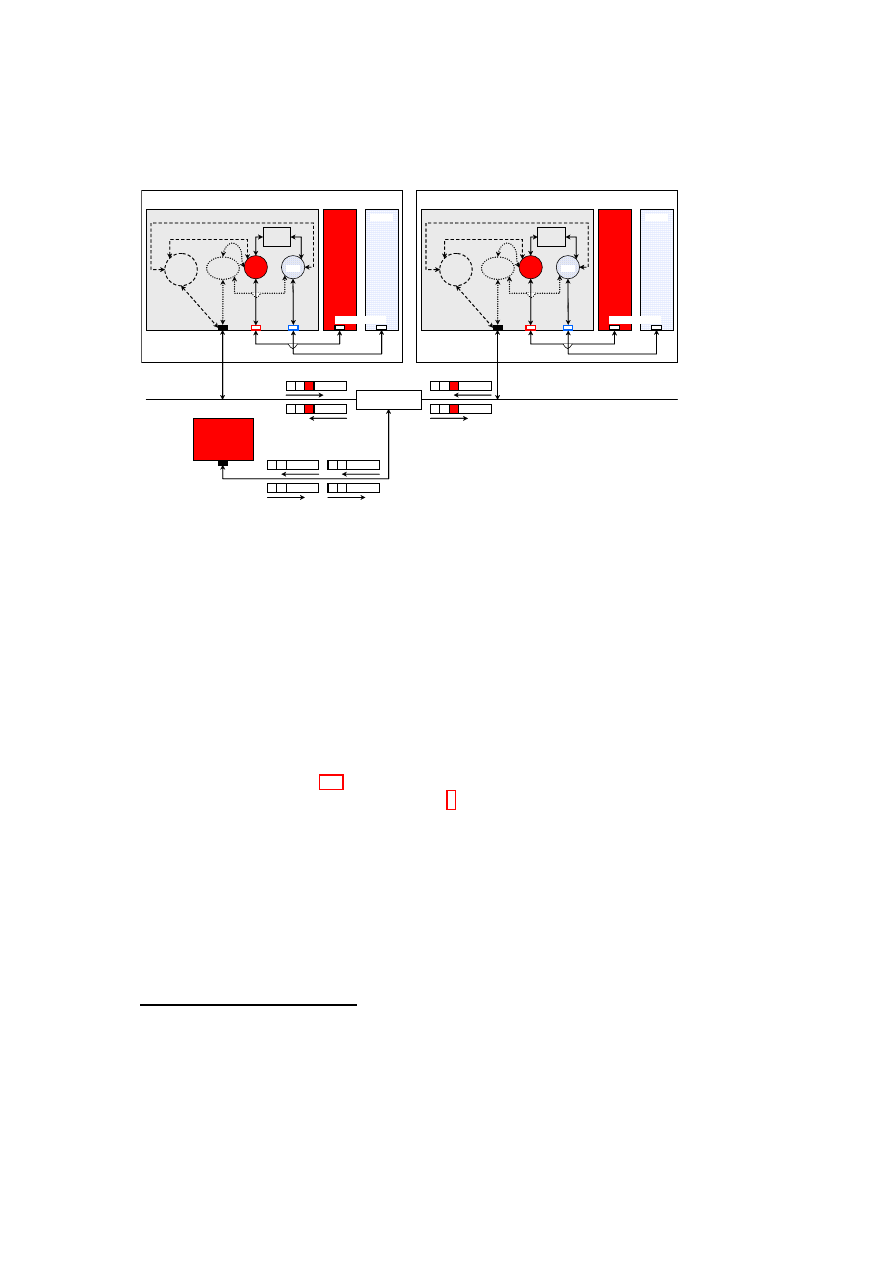

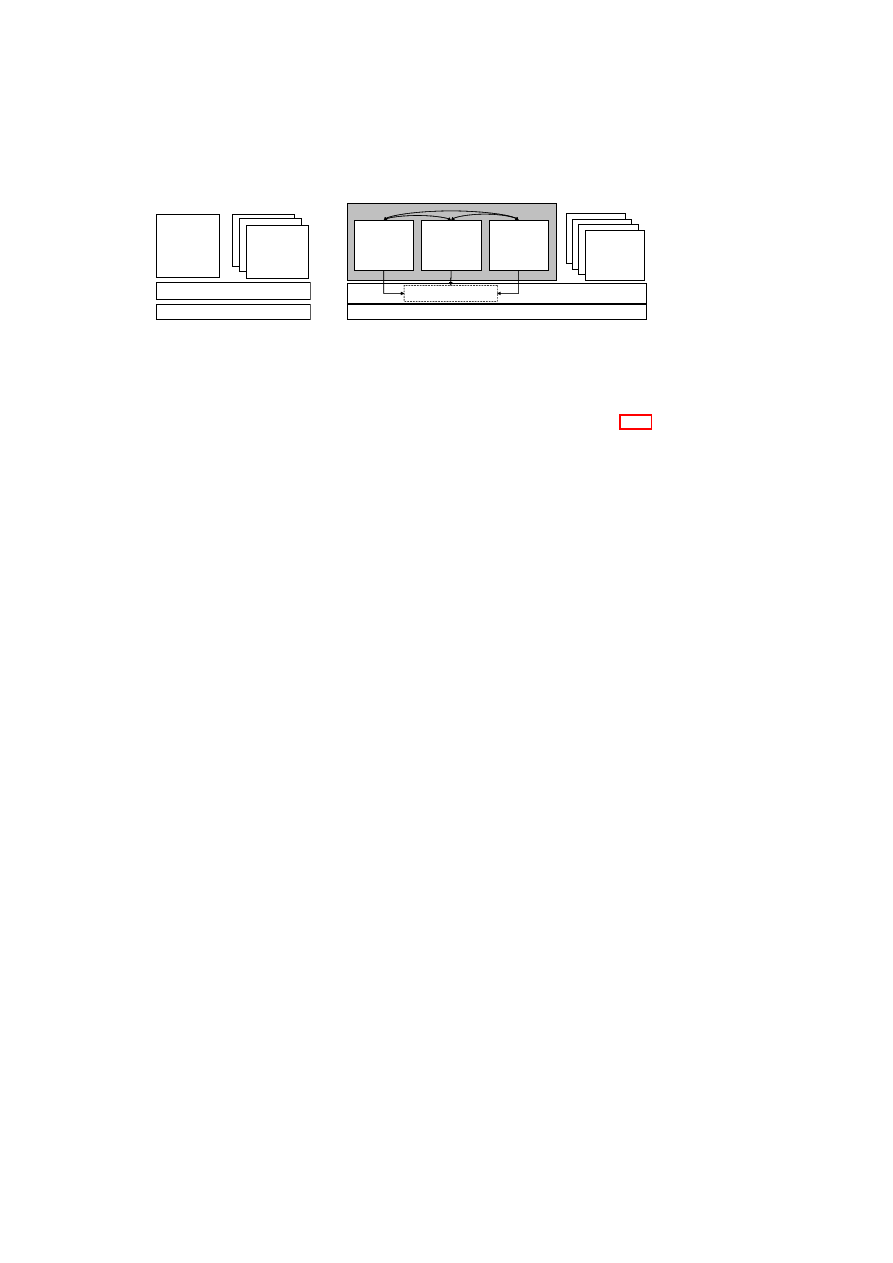

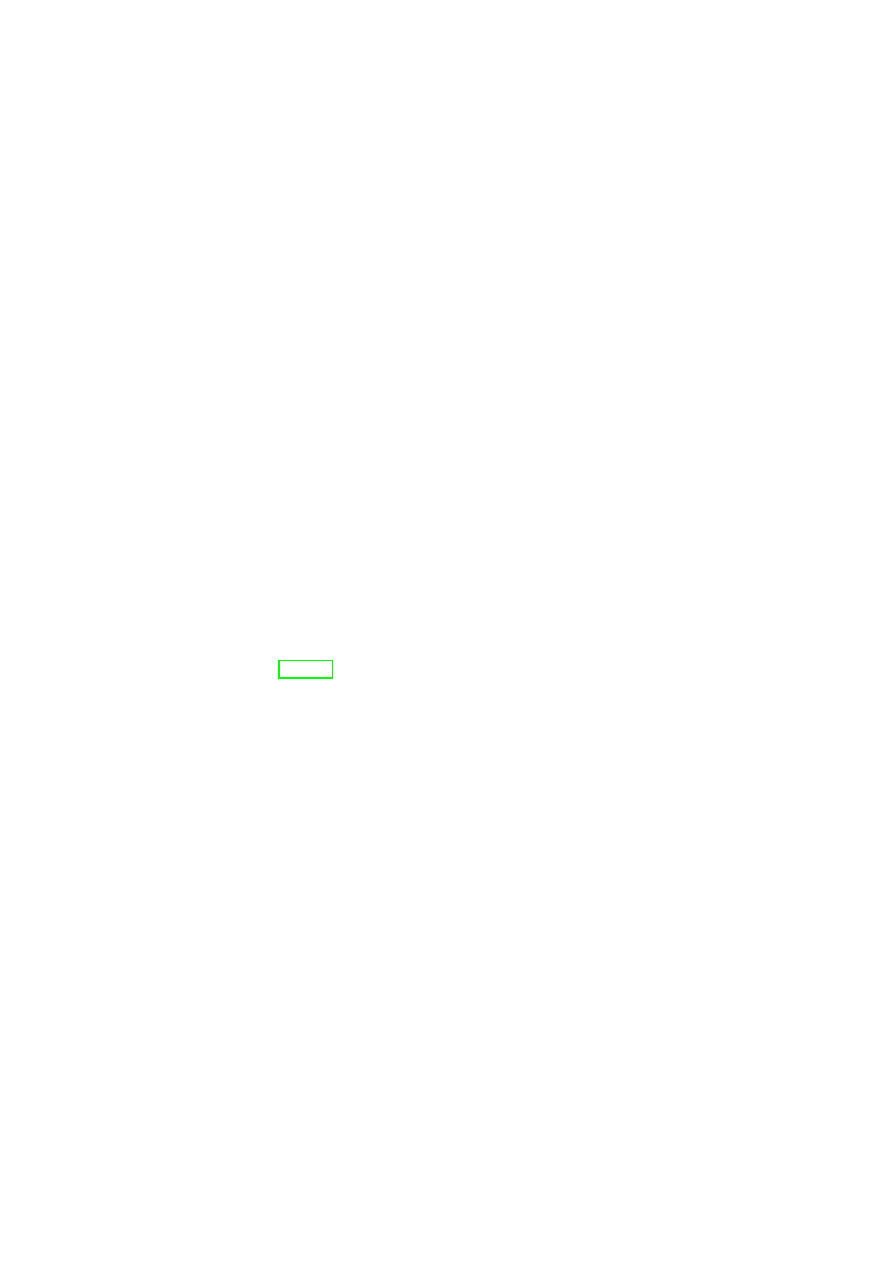

Figure 1.1: TVD Architecture: High-Level Overview.

Contribution

In this deliverable, we provide a blueprint for realizing a logical

cages model, in particular for virtualized data centers, based on a concept called

Trusted Virtual Domains or TVDs [BGJ

+

05]. Based on previous work, we de-

scribe a security management framework that helps to realize the abstraction of

TVDs by guaranteeing reliable isolation and flow control between domain bound-

aries. Our framework employs networking and storage virtualization technolo-

gies as well as Trusted Computing for policy verification. Our main contributions

are (1) combining these technologies to realize TVDs and (2) orchestrating them

through a management framework that automatically enforces isolation among dif-

ferent zones. In particular, our solution aims at automating the verification, instan-

tiation and deployment of the appropriate security mechanisms and virtualization

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

8

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

technologies based on an input security model, which specifies the required level

of isolation and permitted information flows.

1.2

Outline of this Report

This deliverable is structured into three parts.

Part I introduces the objectives and main concepts of a trusted virtual datacen-

ter. This includes the the high-level architecture documented in Chapter 2 and the

policy enforcement, refinement, and management in Chapter 3.

Part II then documents details of two building blocks, namely networking and

dependability. Chapter 4 describes our revised and detailed description of auto-

mated provisioning of virtual networks and their security elements such as fire-

walls and Virtual Private Networks (VPN). Chapter 5 investigates how to increase

the dependability of datacenters in order to allow virtualization of mission-critical

applications. This chapter concludes by proposing a dependability-enhanced archi-

tecture for the Xen hypervisor where different virtual machines monitor each other.

Whenever failures are detected, the machines are then dynamically rejuvenated to

retain service.

Part III concludes this report, contains an appendix with an extensive bibliog-

raphy of related work.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

Chapter 2

Secure Virtualized Datacenters

We now outline the OpenTC 2009 proof of concept prototype and its main archi-

tectural elements.

2.1

Objectives & Proof-of-concept Scenario

2.1.1

Main Objectives

We have built a virtual datacenter that should be comparable with a consolidated

and simplified version of today’s datacenters. Like today, a datacenter will e used

by many customers. As a consequence, customer isolation is of paramount impor-

tance.

The next important observation is that customers are usually transitioned from

their own legacy datacenters into a shared datacenter. As a consequence, each

customer usually has a legacy way of managing its datacenter. This legacy way

should be supported by the new datacenter. Ideally, whatever hard- and software

as well as management infrastructure a customer had should be virtualized and

supported by the new virtual datacenter.

2.1.2

Virtual Datacenter Scenario

The scenario we want to demonstrate has multiple sub-scenarios with increasing

complexity:

“Physical Systems” Management The first scenario is the provisioning of for-

merly physical systems. The scenario we build is a customer who boots a

server and installs this server from the network. For this scenario, virtualiza-

tion should be transparent.

Hosting Media Services The second scenario is to use the datacenter to host me-

dia services. The goal is to allow outsiders to play media iff their platform

has been validated and deemed trustworthy.

9

10

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

Virtual Systems Management The third scenario aims at virtualizing virtual sys-

tems. The key idea is that today, customers will have virtualized systems

such as Xen that need to be embedded and managed as a part of our virtual

datacenter.

We do this by migrating a Xen installation into our datacenter and then com-

puting a view of the datacenter that corresponds to the former Xen installa-

tion.

2.2

High-level Architecture

We now describe the architecture of our demonstrator. This architecture has several

components, namely hosts, networks, storage, virtual machines, and software.

2.2.1

Network Architecture

We use the trusted virtual domain model to isolate different networks. In this model

each domain is isolated from other domains while being only permitted to commu-

nicate via well-defined gateways.

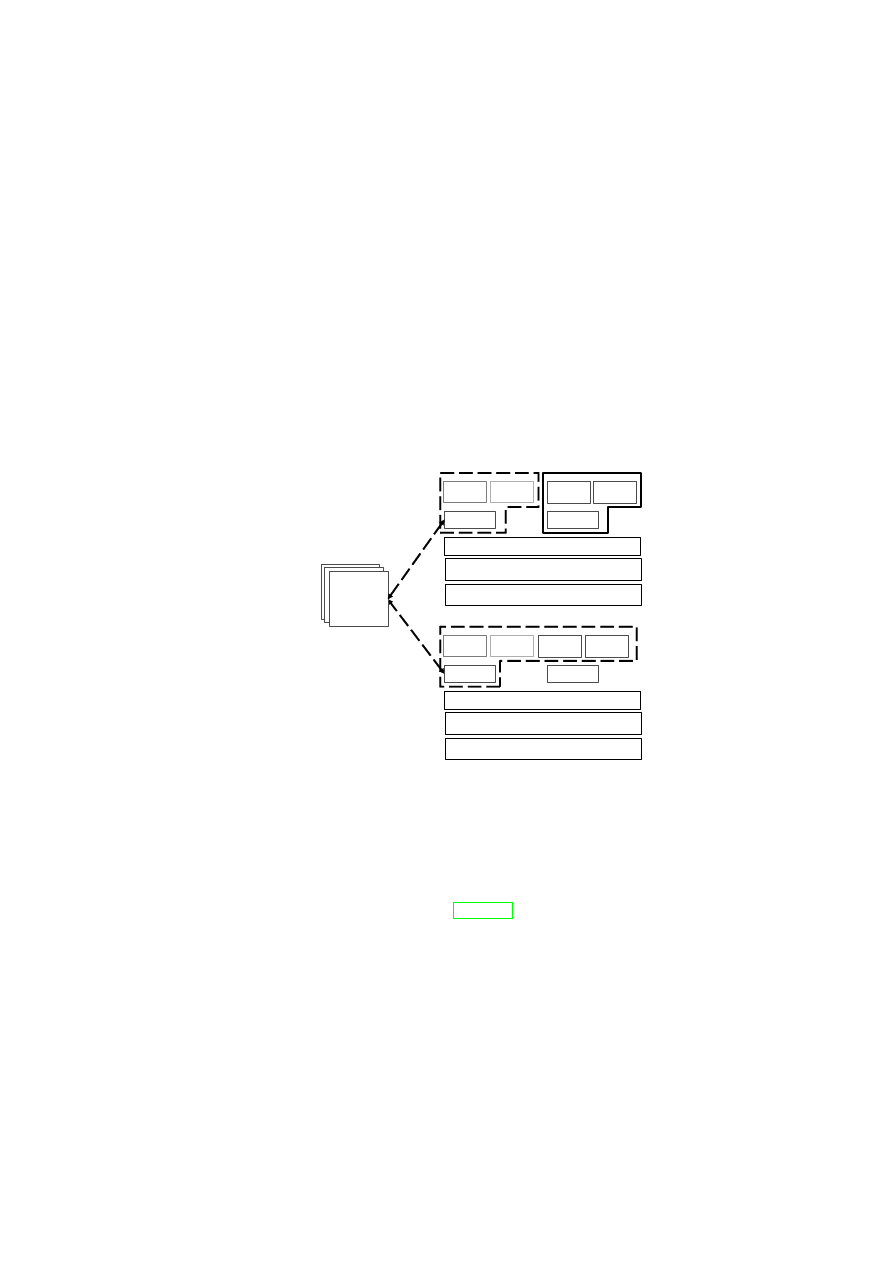

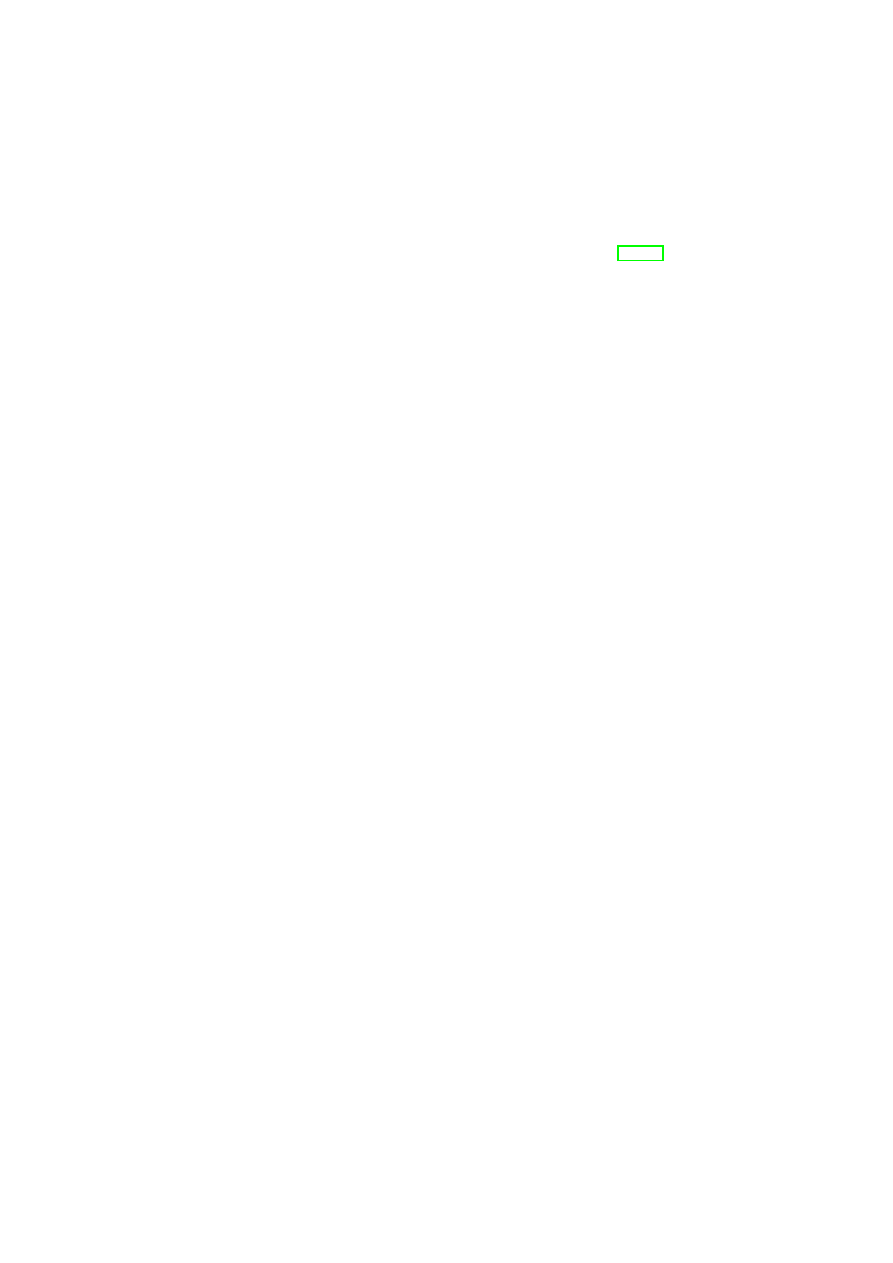

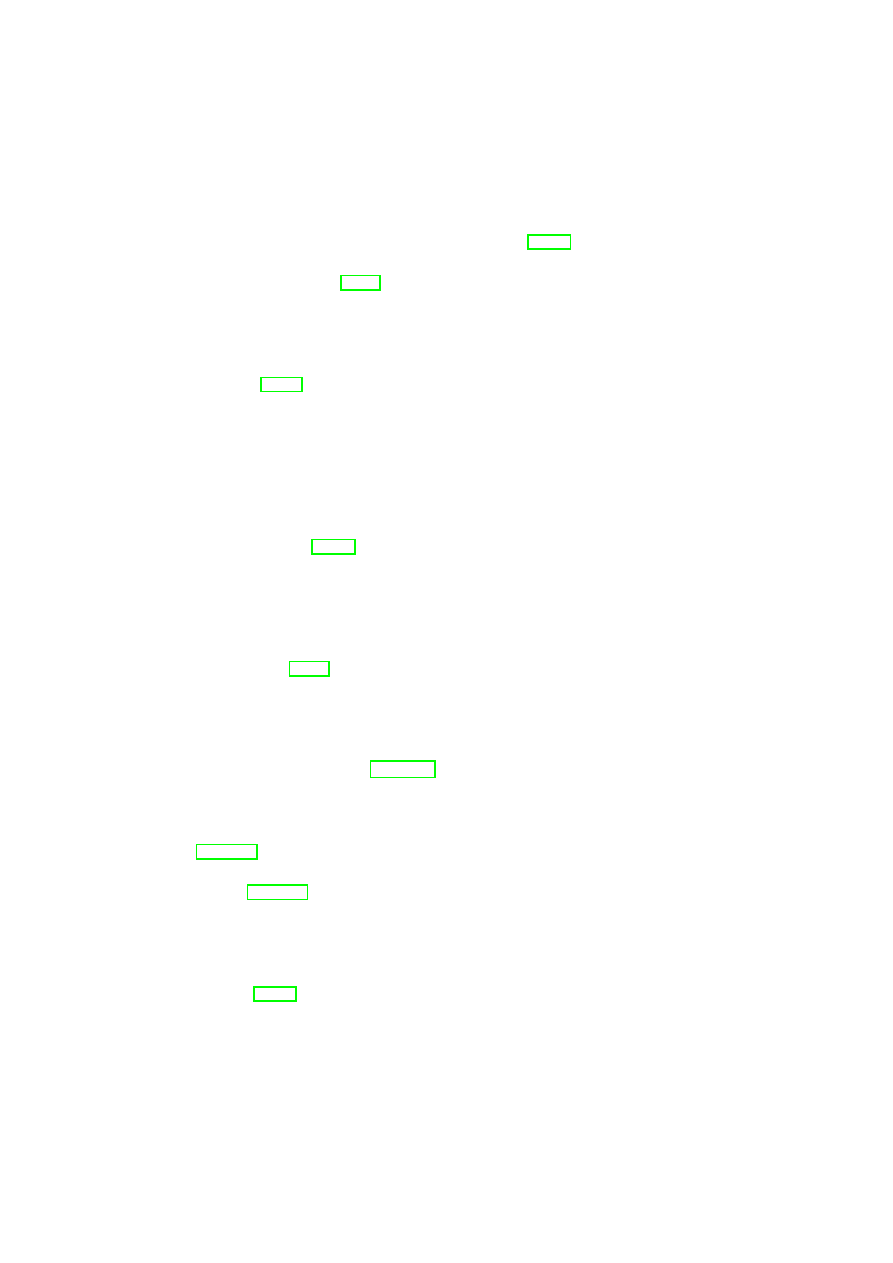

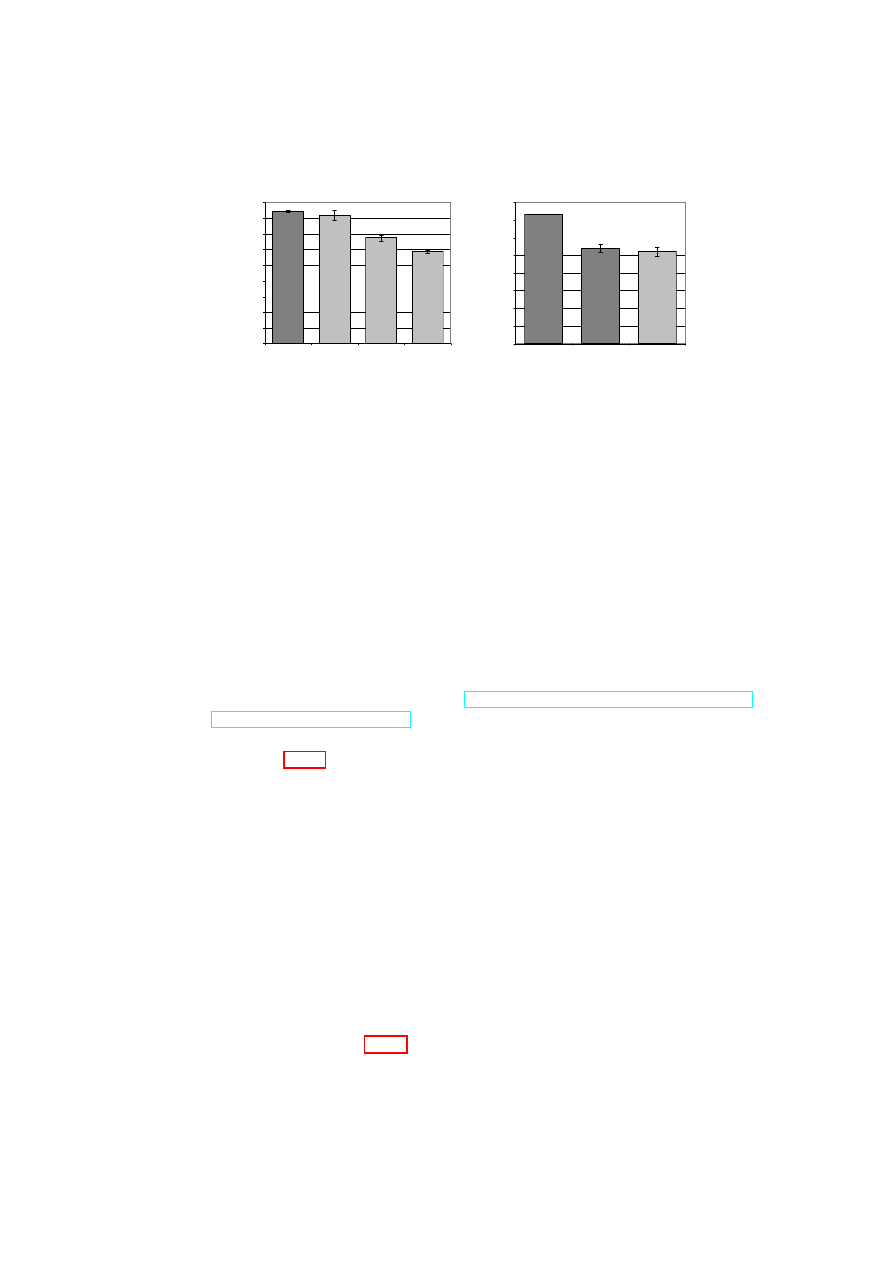

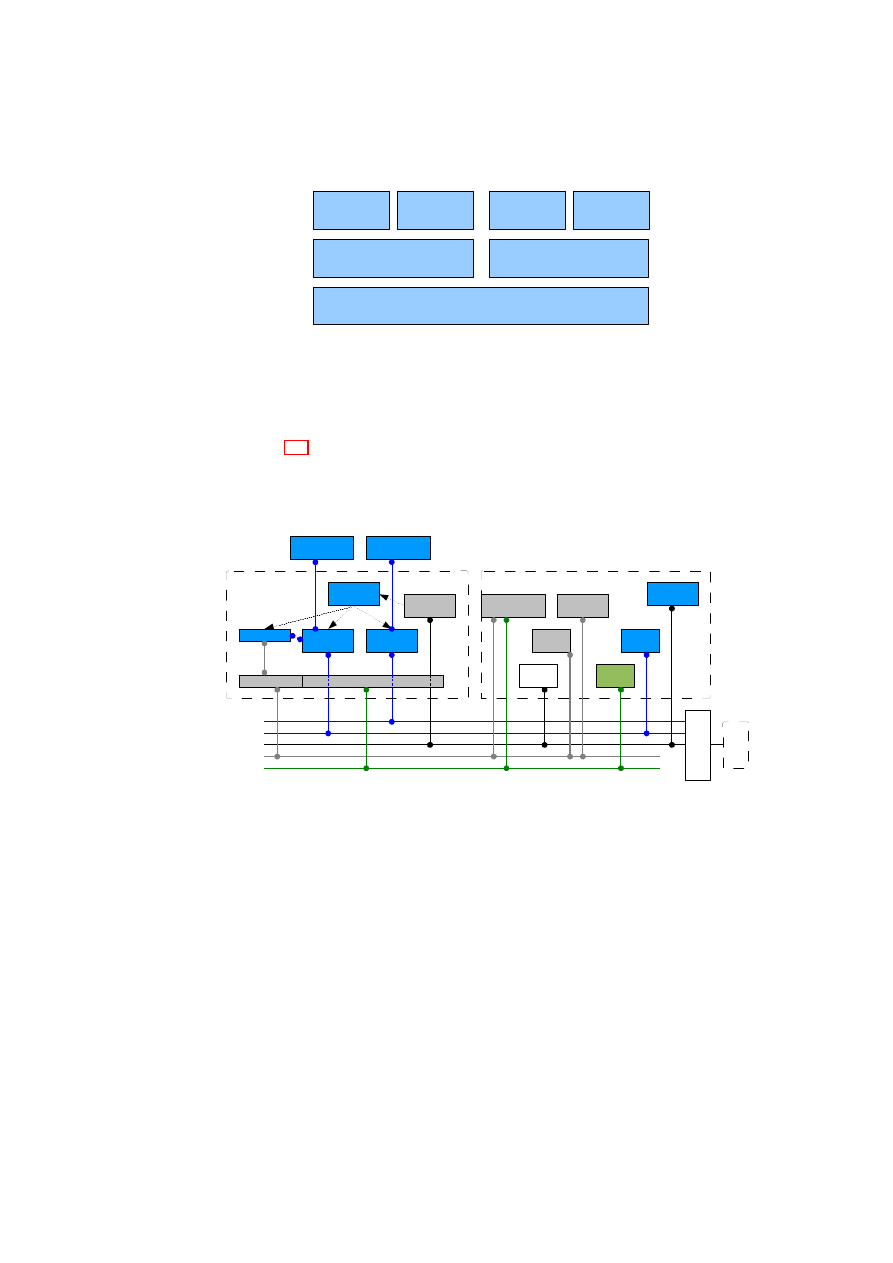

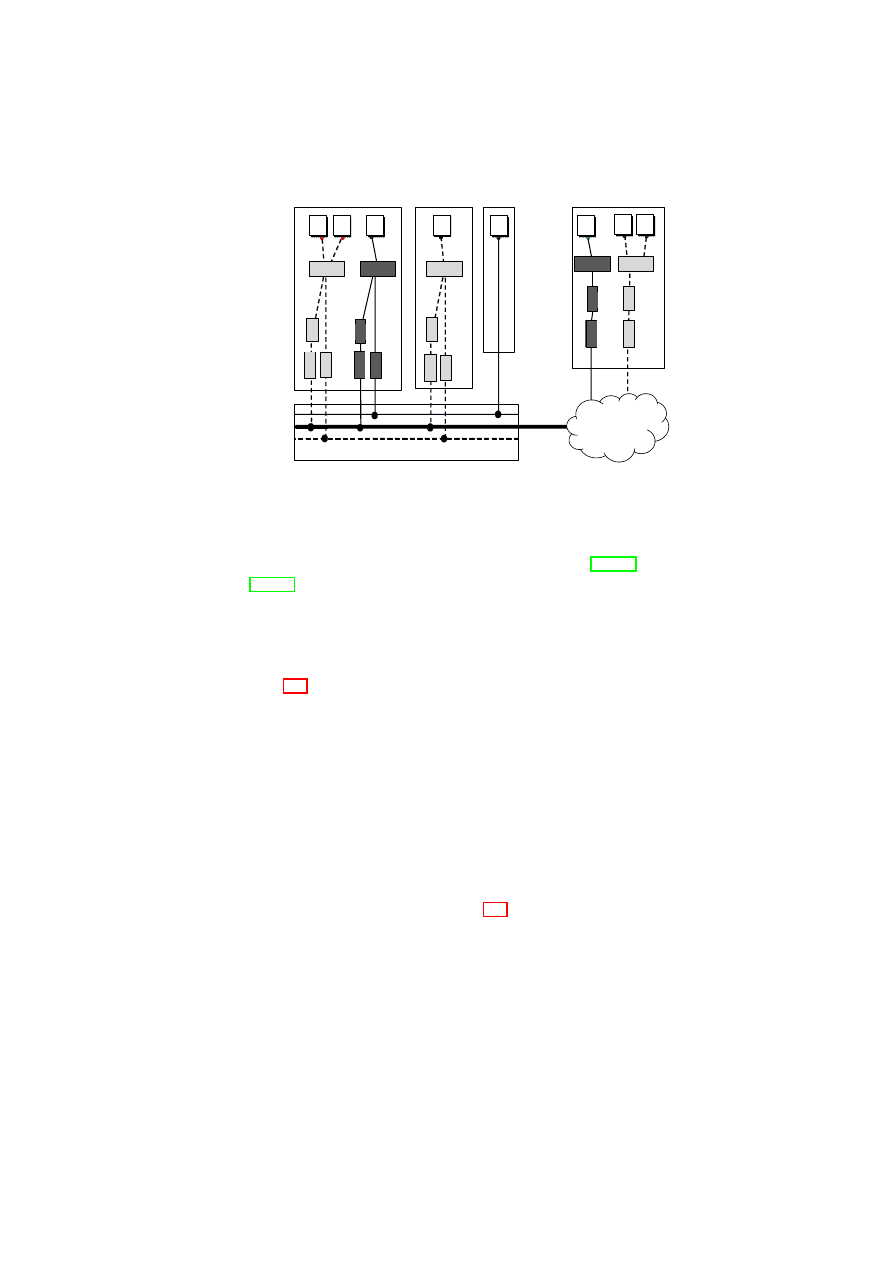

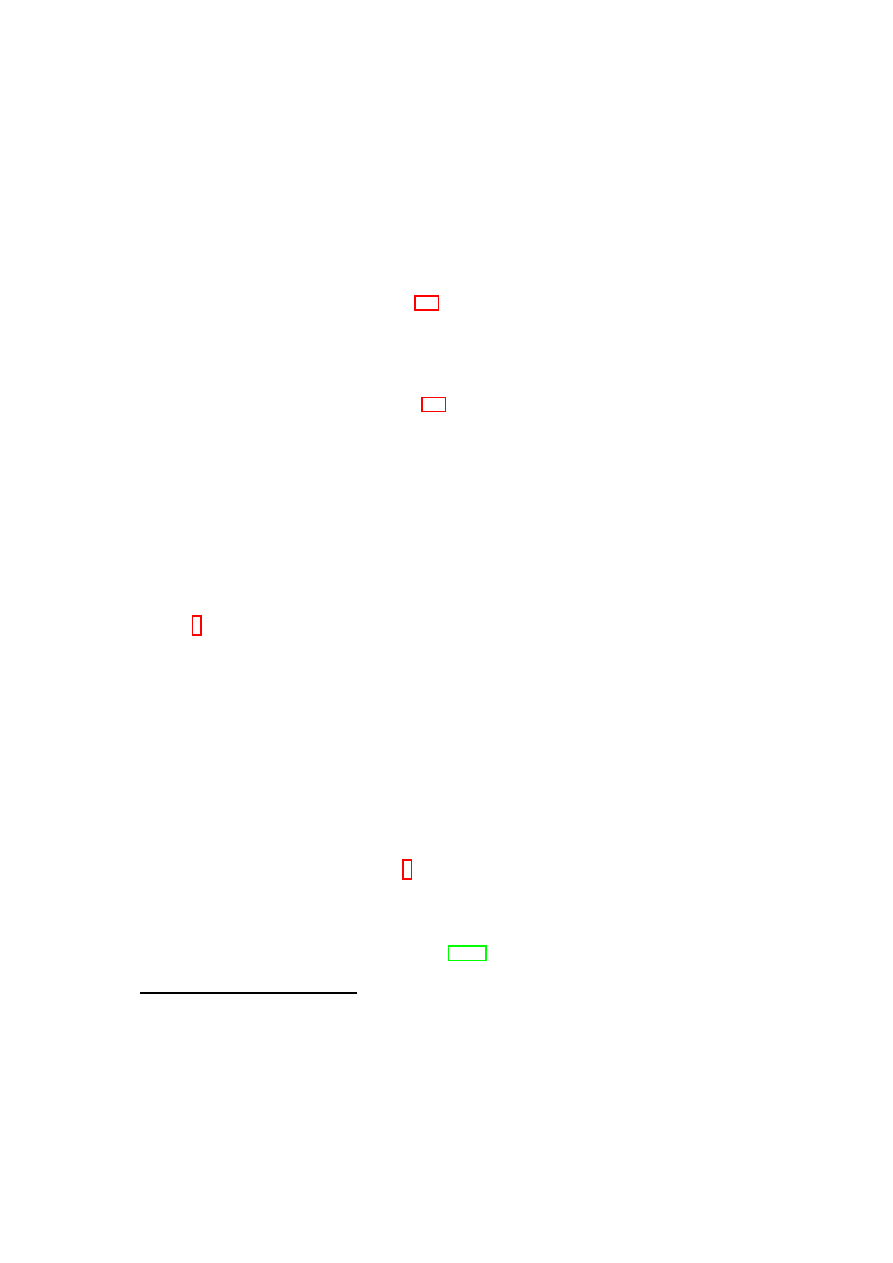

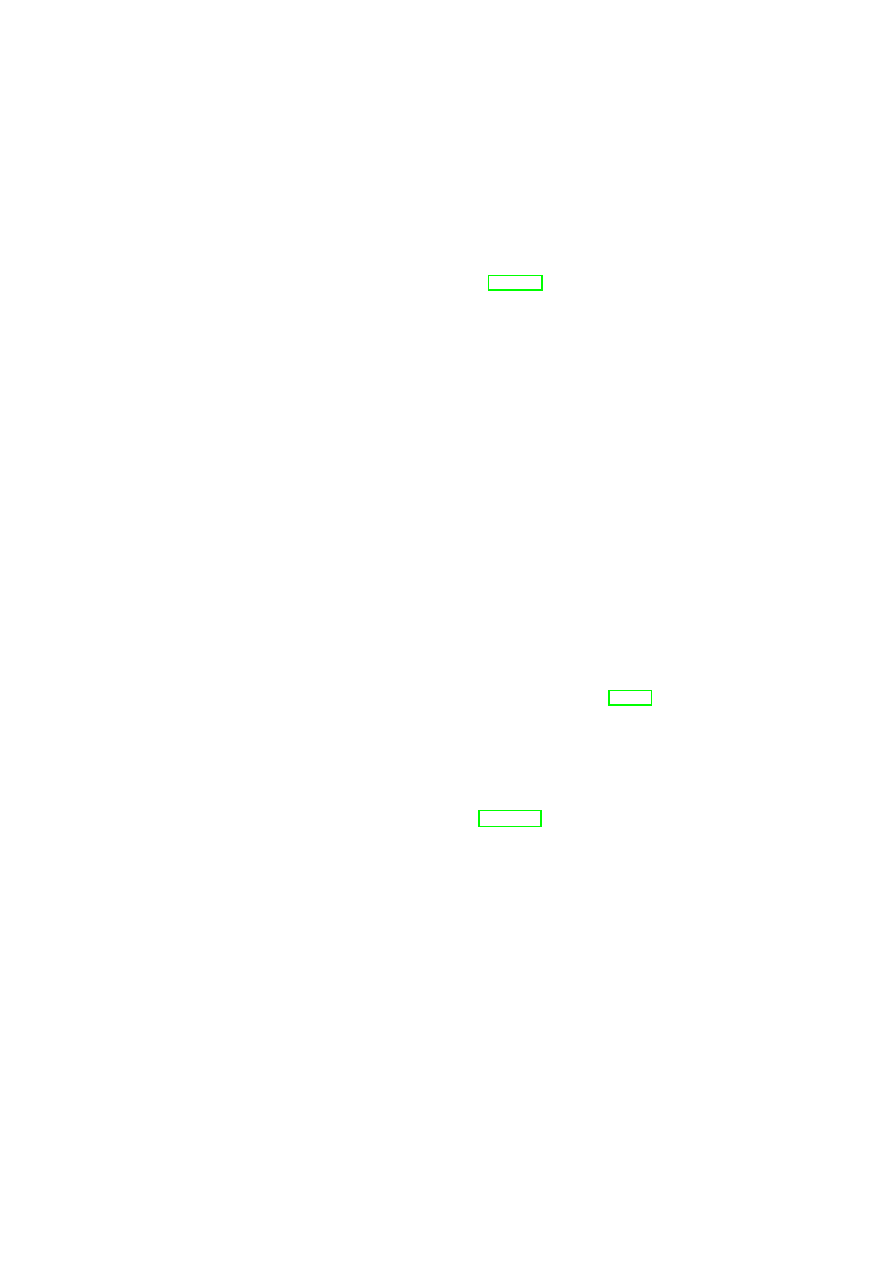

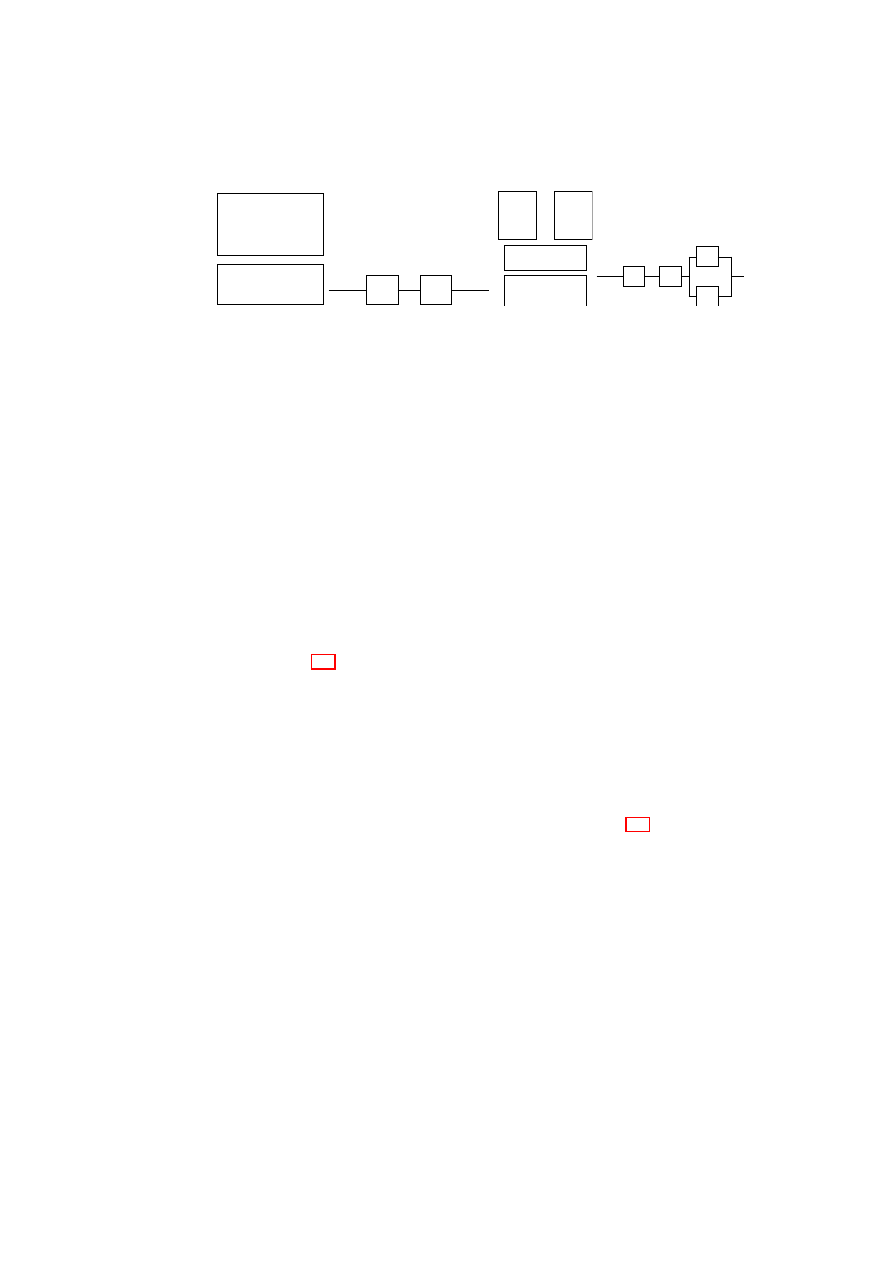

In order to structure the demonstrator, we have defined the following domains

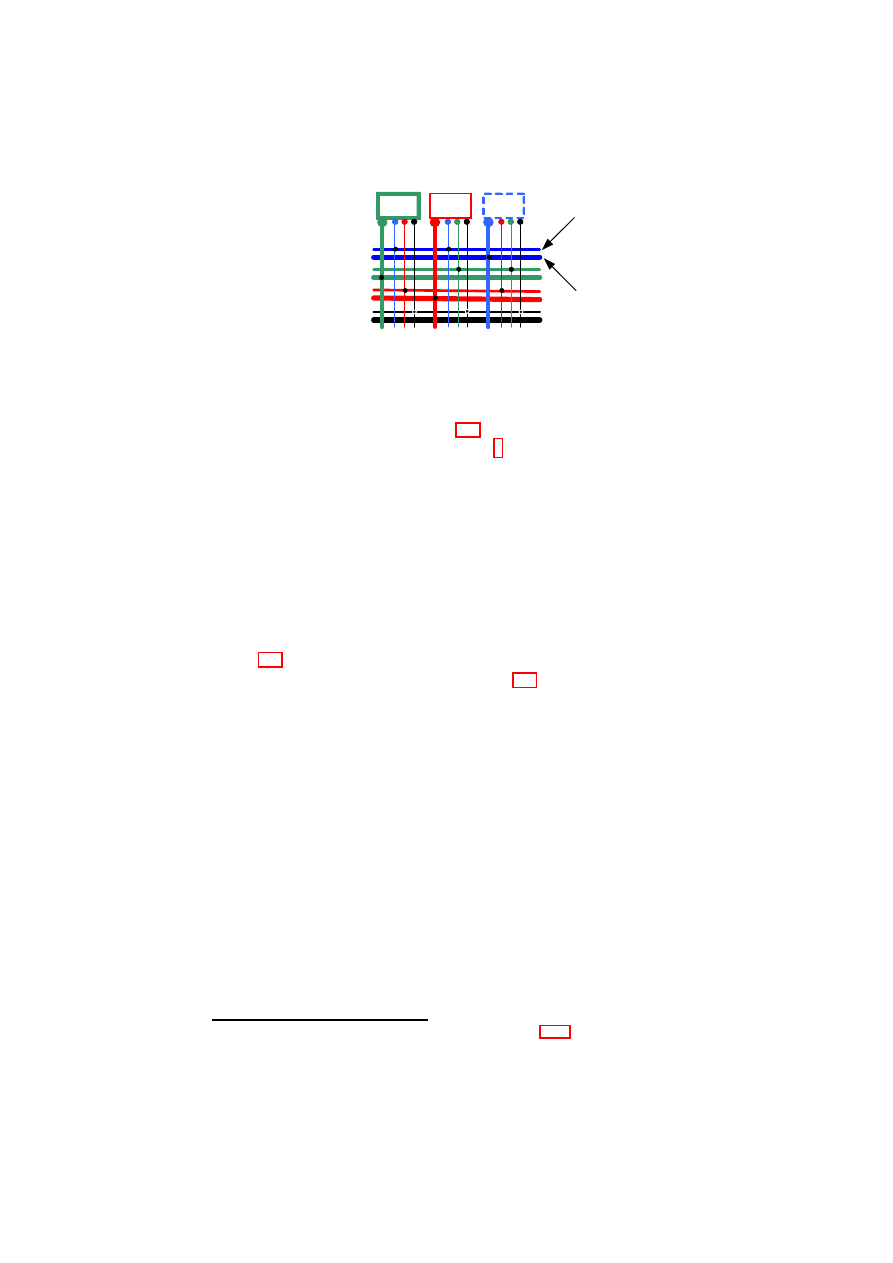

(see Figure 2.1):

Customer application zone This domain constitutes a network of a given cus-

tomer. It transports the application traffic of a given customer. Ordinary

users can access this network.

Customer management zone This domain is the management network of a given

customer. It is used for provisioning of virtual machines, monitoring ma-

chines, and management of application zones of this customer. Administra-

tors of each customer can access the corresponding management zone.

Datacenter management zone This network is used by the datacenter adminis-

trators to manage the overall datacenter. This includes setting up new do-

mains, assigning resources, or removing domains. This domain is also used

for management communication between the different domains.

Demilitarized Zone The DMZ allows customers to connect to the Internet and

allows outsiders to establish connections to a TVD. The former is done by

establishing gateway machines that connect to the internal zone and DMZ.

The latter is achieved by TVD proxy factories connecting to the DMZ.

Storage Area Network The storage area network provides virtual storage to all

physical hosts in a datacenter. The management components can then con-

nect selected storage unites to given virtual machines.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 2. SECURE VIRTUALIZED DATACENTERS

11

Cust. 1-A

Cust. 1-B

Cust. 2-A

Cust. 2-B

Cust 2 Mgmt

Cust 1 Mgmt

Datacenter Mgmt

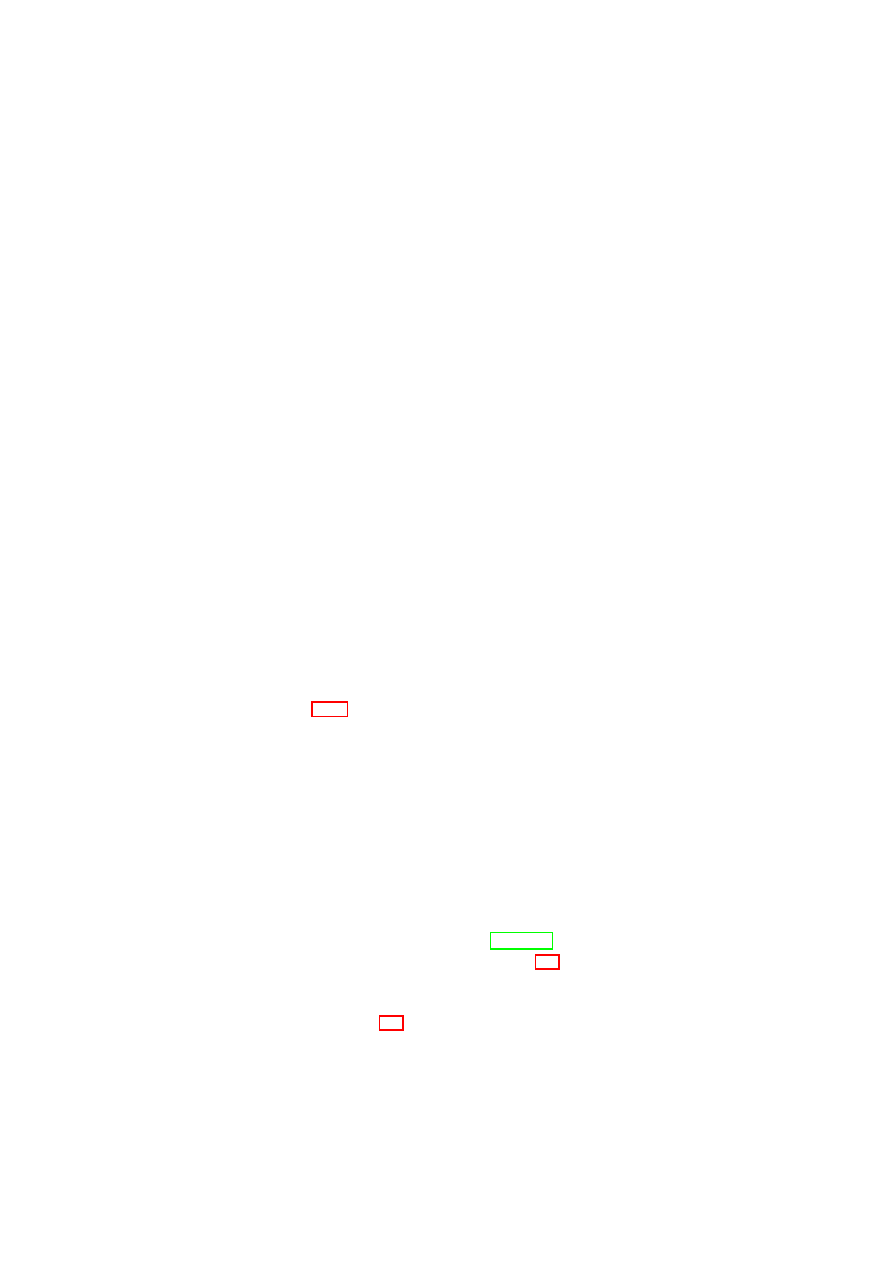

Figure 2.1: Trusted Virtual Domains of a Virtual Datacenter

These network allows clear isolation of different customers while providing a

backbone management network for the TVDC infrastructure to communicate.

Figure 2.2 depicts the details of the networking for a single user-level TVD.

It is important to note that the datacenter hosts are booted from the network us-

ing the Preboot eXecution Environment (PXE). This allows the administrators to

seamlessly add and remove physical hosts.

VDC

SAN

DMZ

TVDusr

TVDmgn

TVDproxy

Factory

TVDproxy

vSwitch

(mgnt.)

vSwitch

(user)

XenAPI

APIfilter

Xen

DC Host Platform

TVD

Master

F

ire

w

a

ll

In

te

rn

e

t

DHCP

Server

PXEboot

Server

DC infrastructure components

DNS

Server

NFS

Server

NFS

Server

TVD mgnt.

Server VM

TVD Guest

VM

VDC mgnt.

Server

Figure 2.2: Networking Details of a Virtual Datacenter

2.2.2

Multi-Tenant Management

An important goal of our prototype is to enable heterogeneous management. This

means that each customer should be able to use arbitrary existing management

tools to manage their domains: While the datacenter operator uses given software

to assign resources quotas to each customer, the respective customer can then use

his existing management tools to use these resources to build virtual machines on

assigned hosts.

We implement this multi-tenant concept by providing filtered instances of the

XenAPI to individual customers while providing the complete XenAPIs only to the

datacenter operator.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

12

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

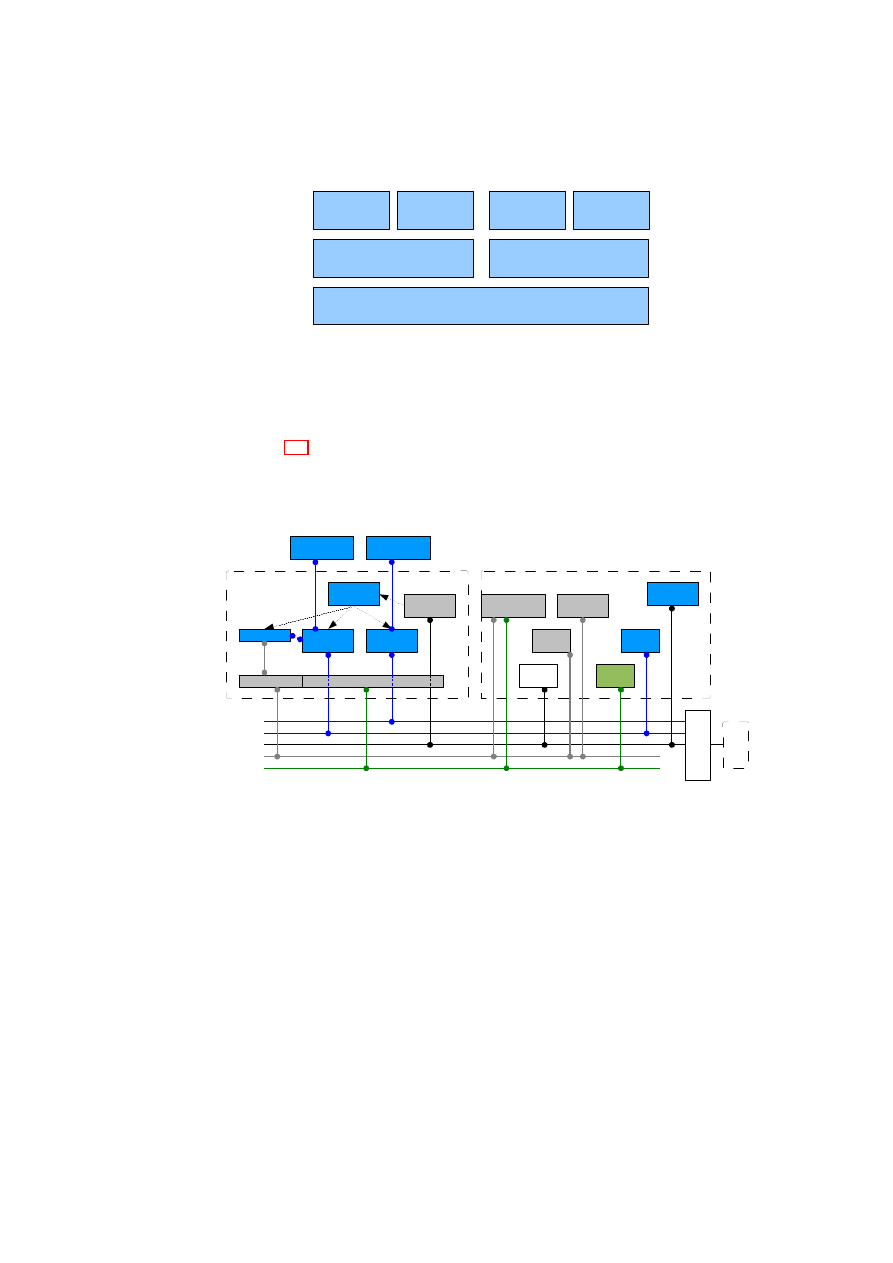

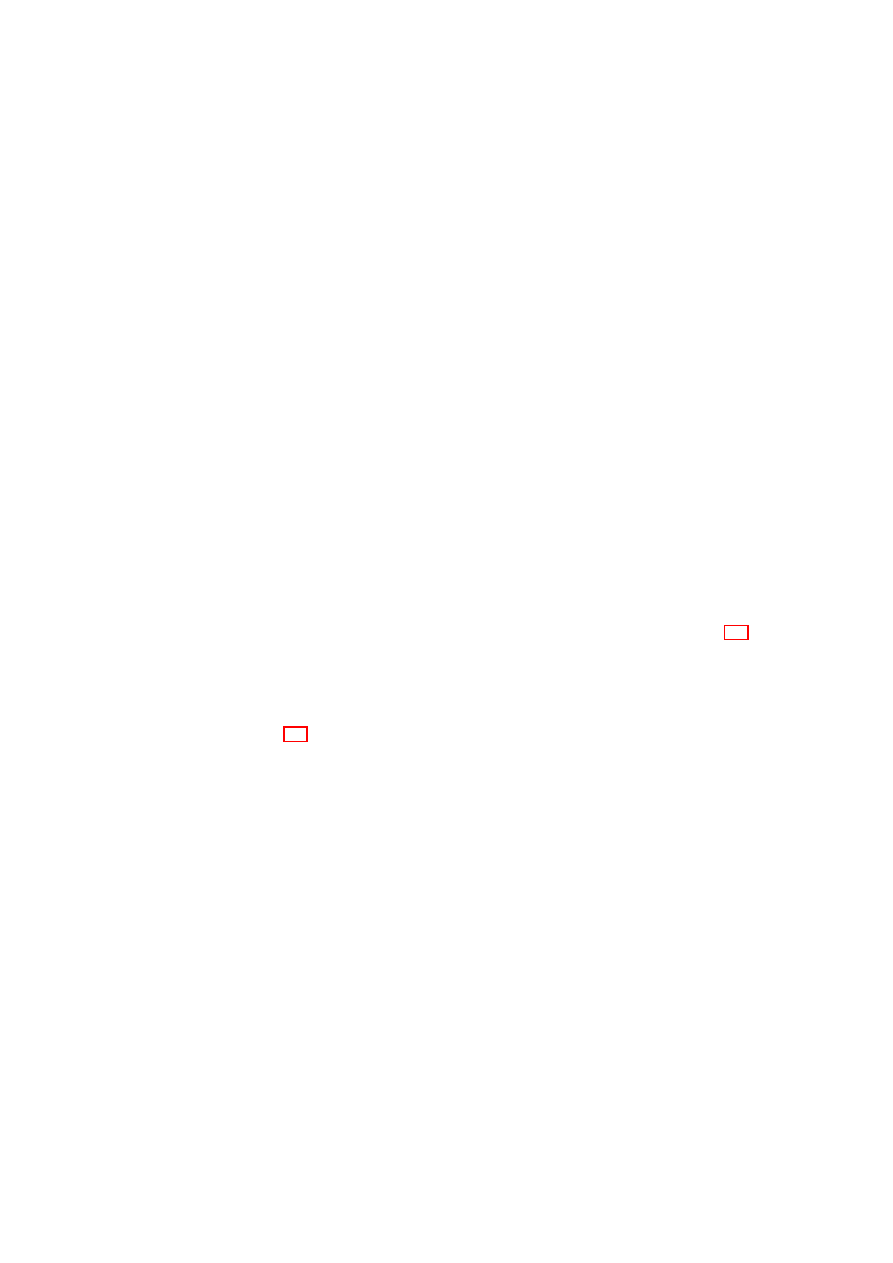

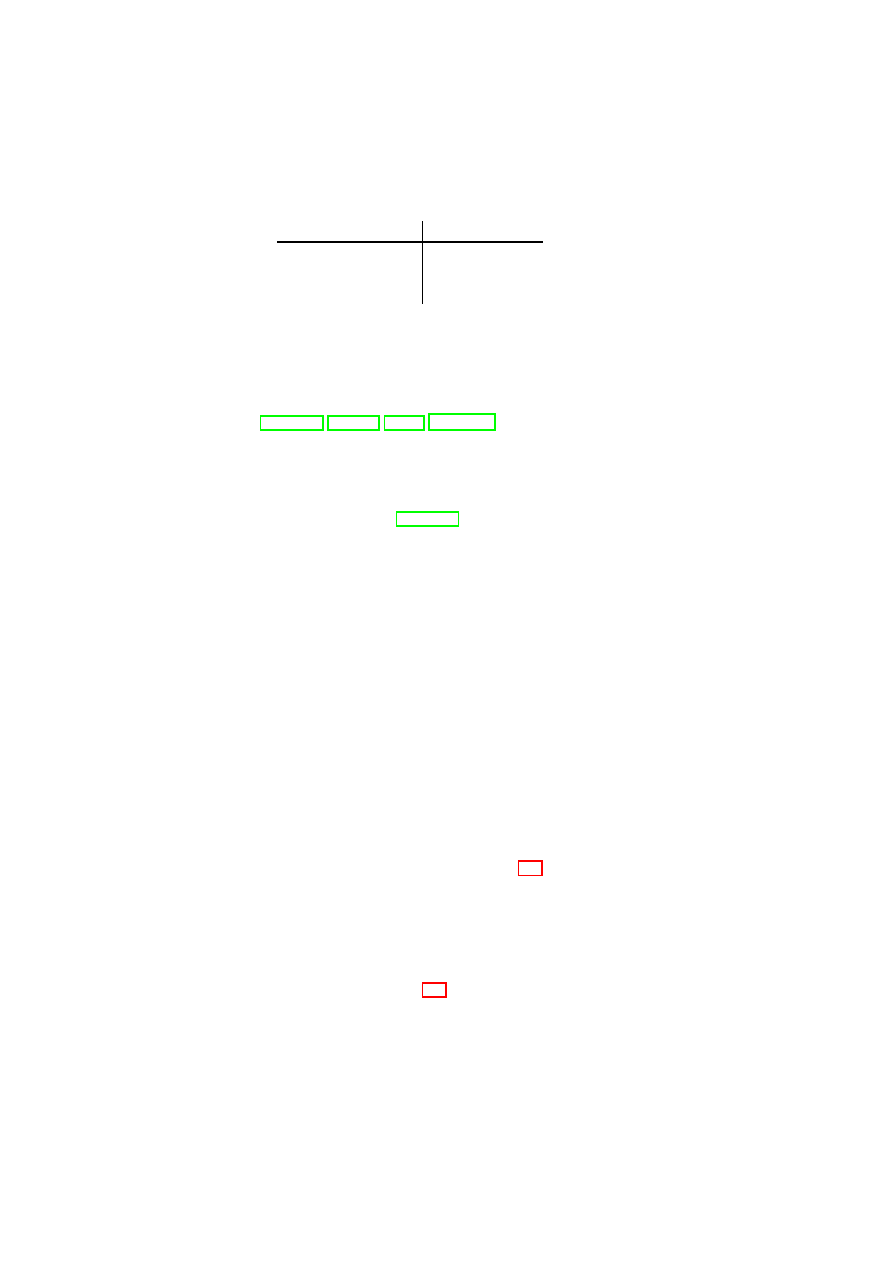

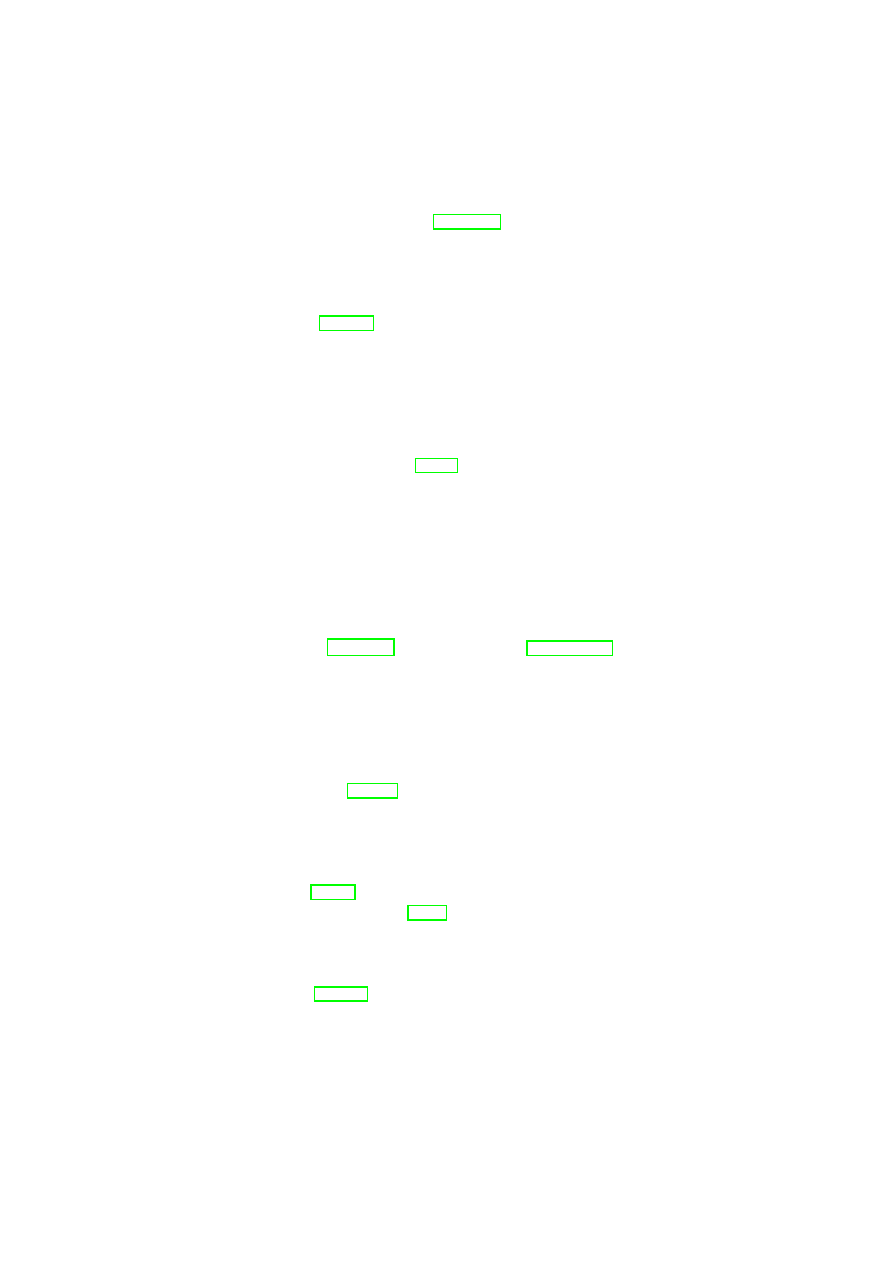

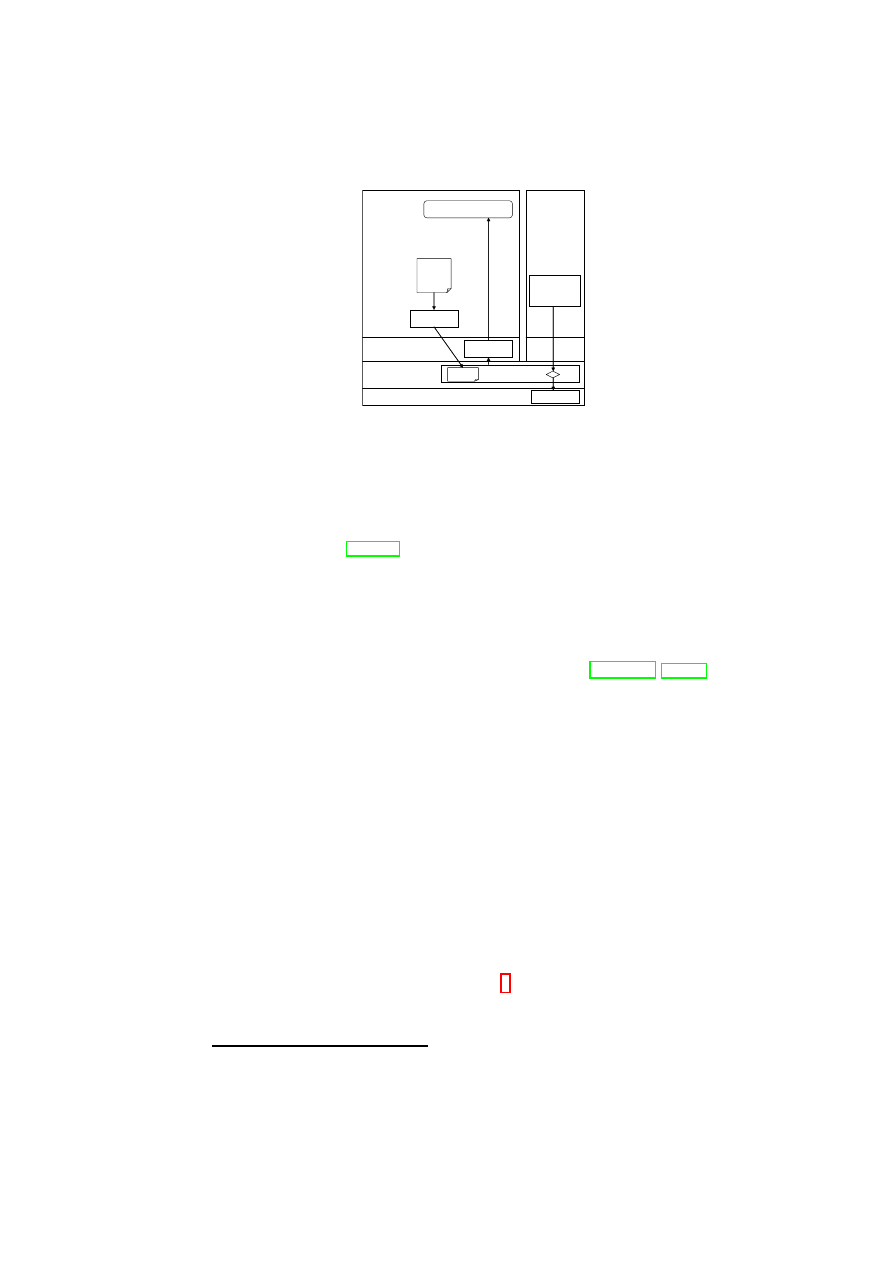

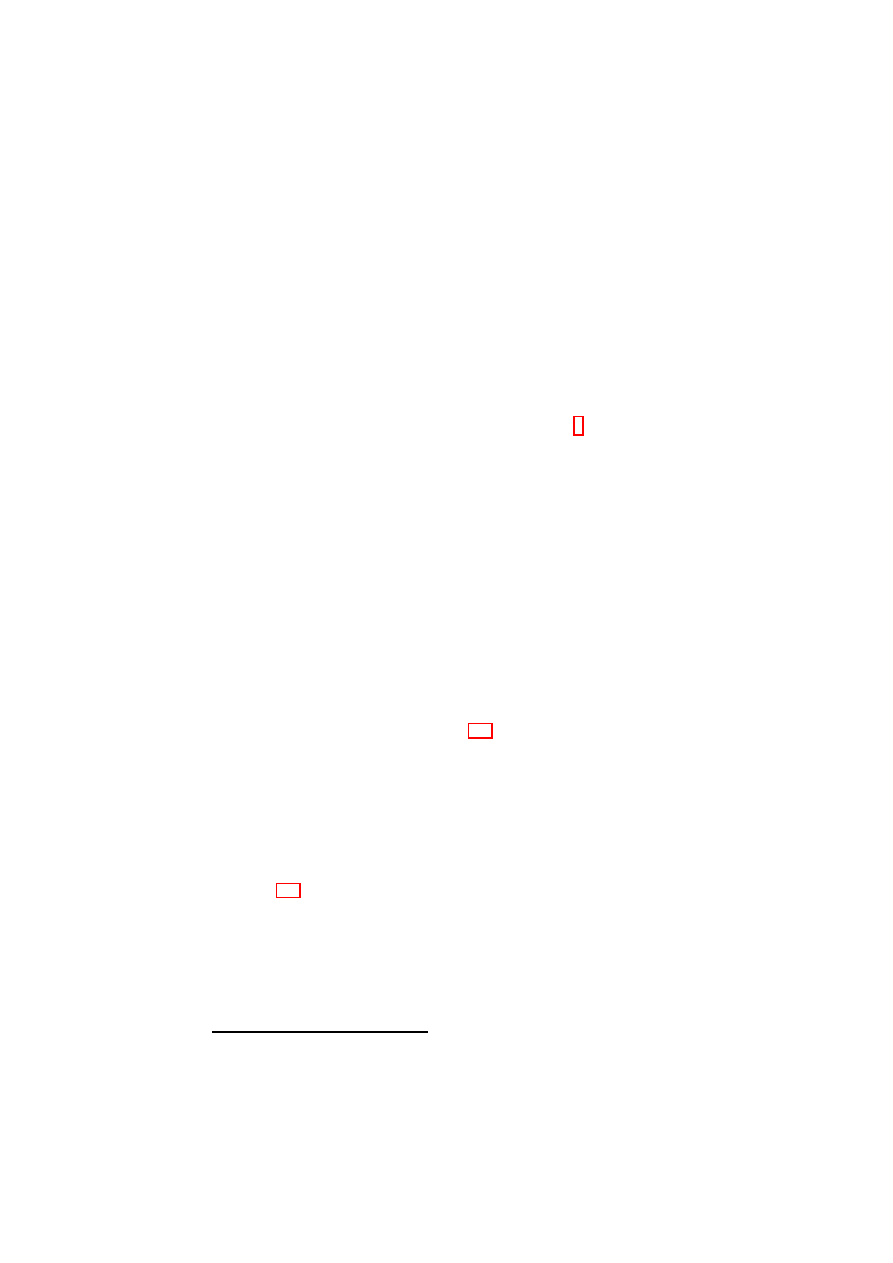

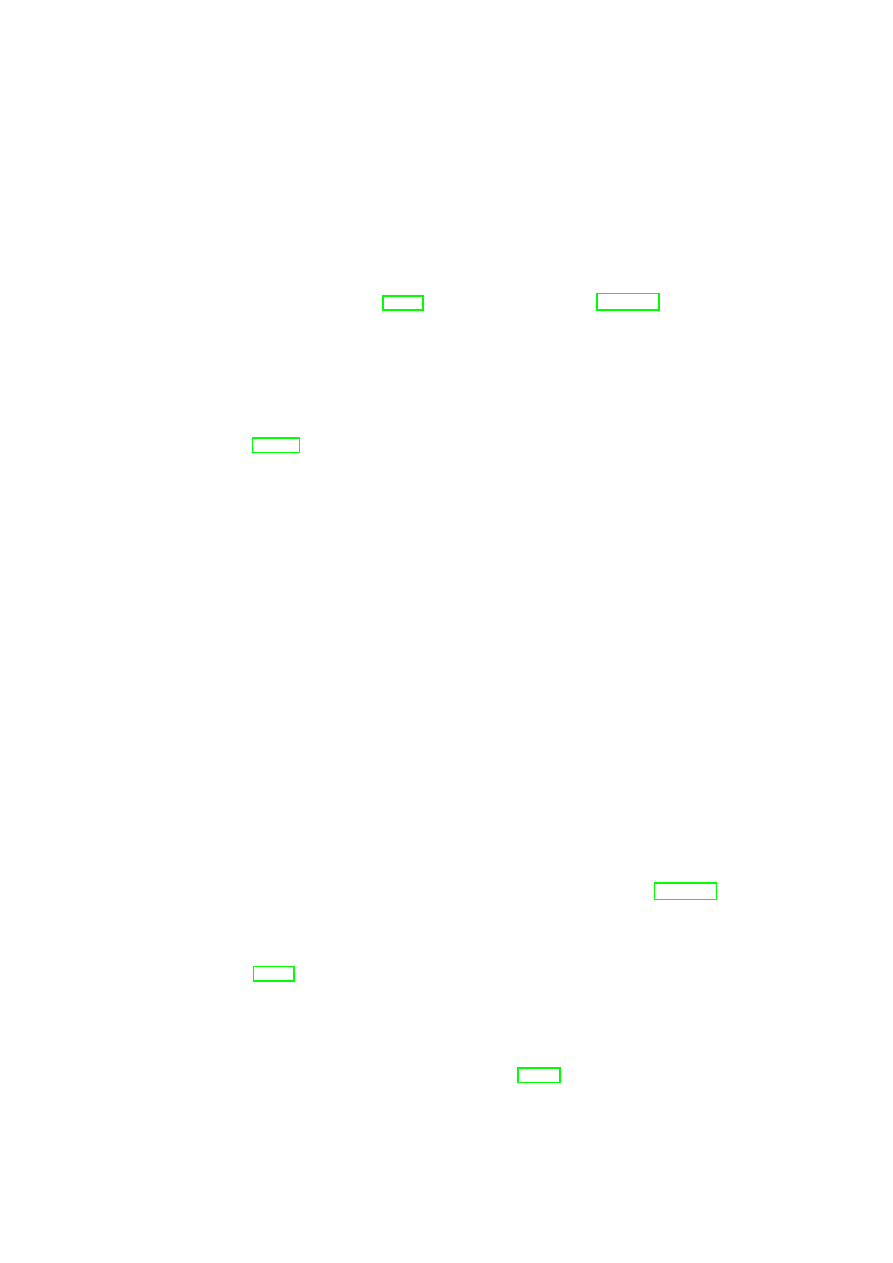

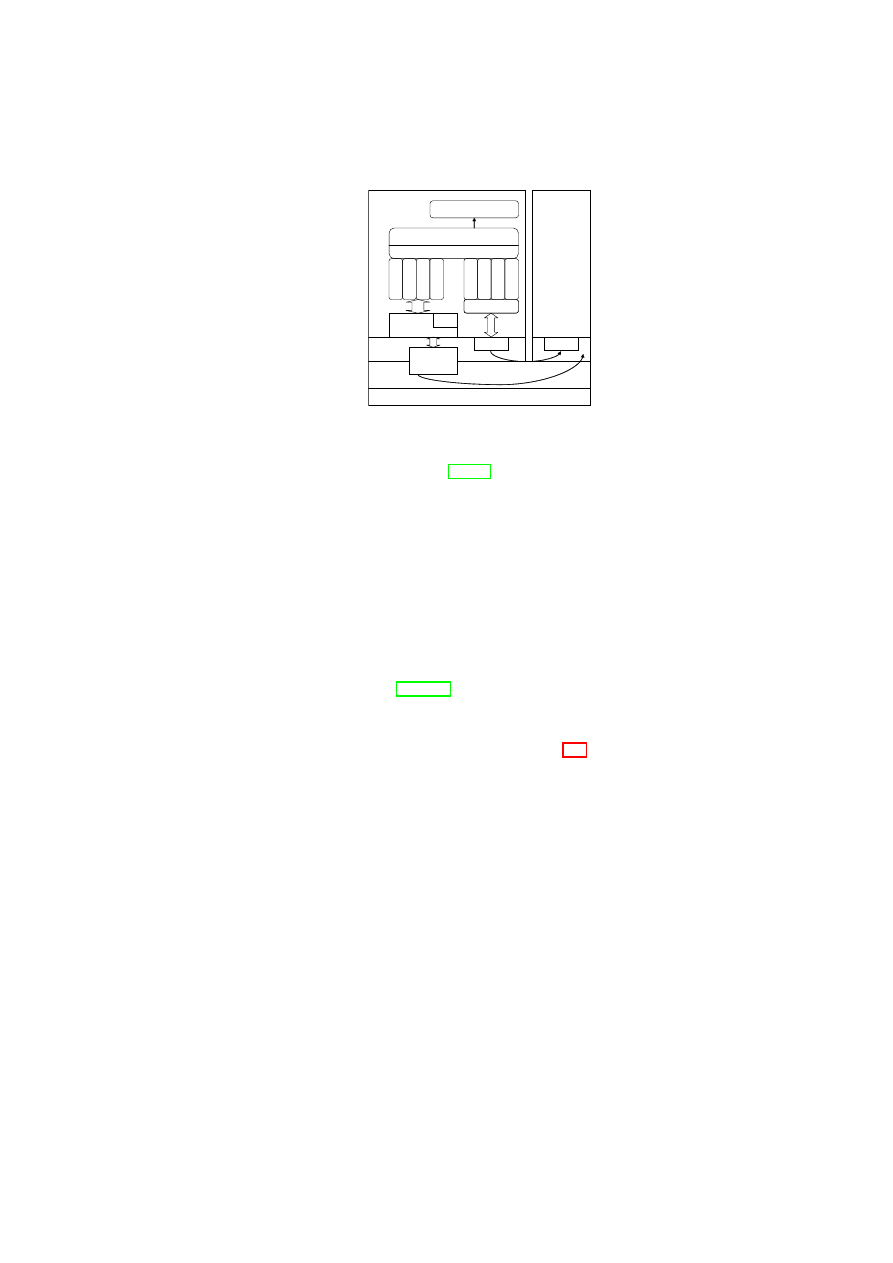

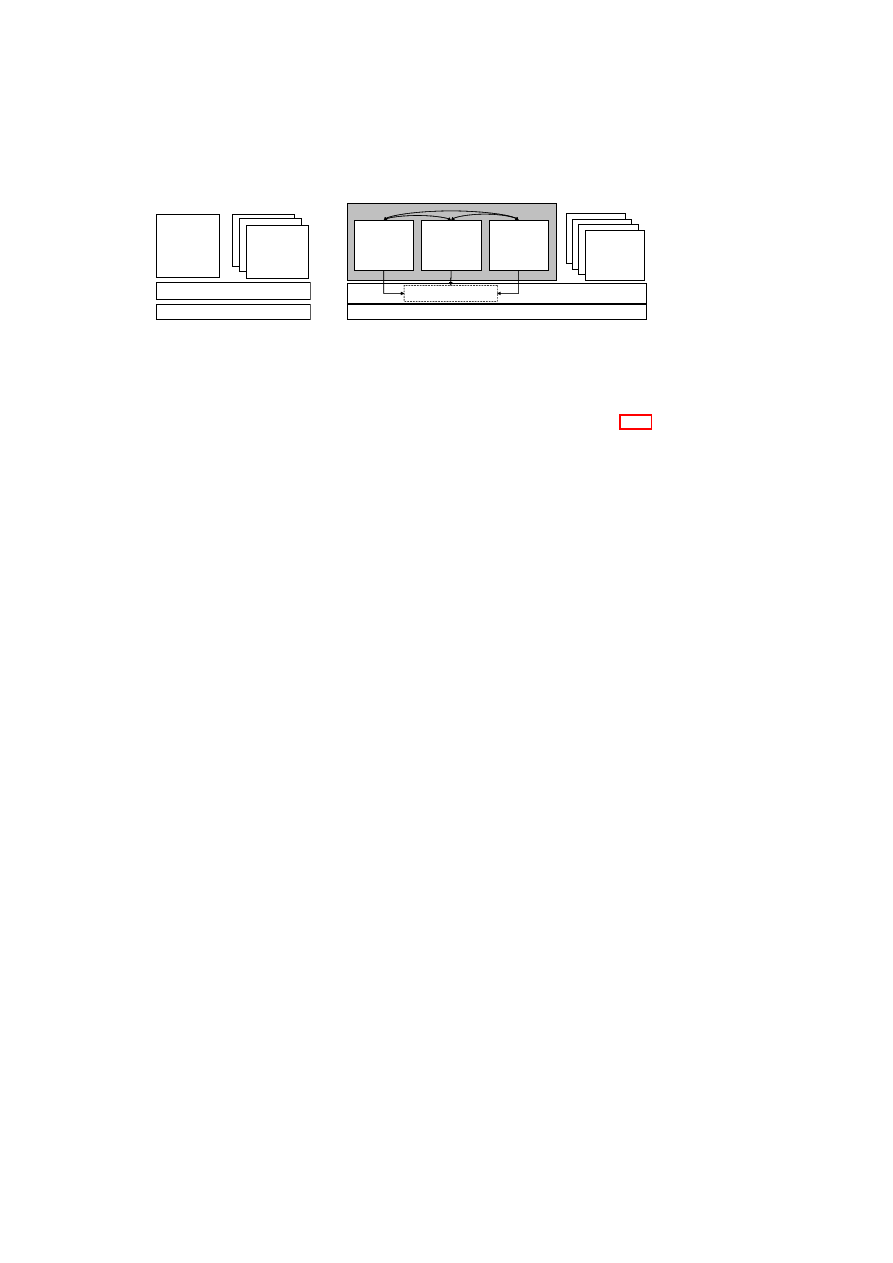

This is depicted in Figure 2.3: For each customer, a XenAPI filter component is

providing the corresponding filtered XenAPI service to the management network

of the given customer. The basic idea is that the filter obtains the list of VMs

that belong to a given customer and strips all other machines from the returned

information. Similarly, other elements (storage/network) that do not belong to this

customer are stripped.

The XenAPI filter then forwards commands to the internal XenAPI provider.

This provider in turn forwards non-security commands to the existing XenAPI pro-

vided by

xend

while forwarding security extensions to the OpenTC compartment

manager.

API-TVDfilter

(limit visibility of resrcs.)

IF on

Mgnt. net

IF on

Mgnt. net

TVD Proxy

(Enforcing TVD policies,

controlling vNet)

TVD Proxy

(Enforcing TVD policies,

controlling vNet)

API-TVDfilter

(limit visibility of resrcs.)

Compartment Manager

(starting/stopping of

SecServ managed VMs)

TVD Proxy

(Enforcing TVD policies,

controlling vNet)

XenAPI (Xend)

API-basefilter

(intercept VM commands)

API-TVDfilter

(limit visibility of resrcs.)

TVD Proxy Factory

(spawning & directory of proxies)

[VM uuids]

VM.start

VM.stop

VM.destroy

IF on

Mgnt. net

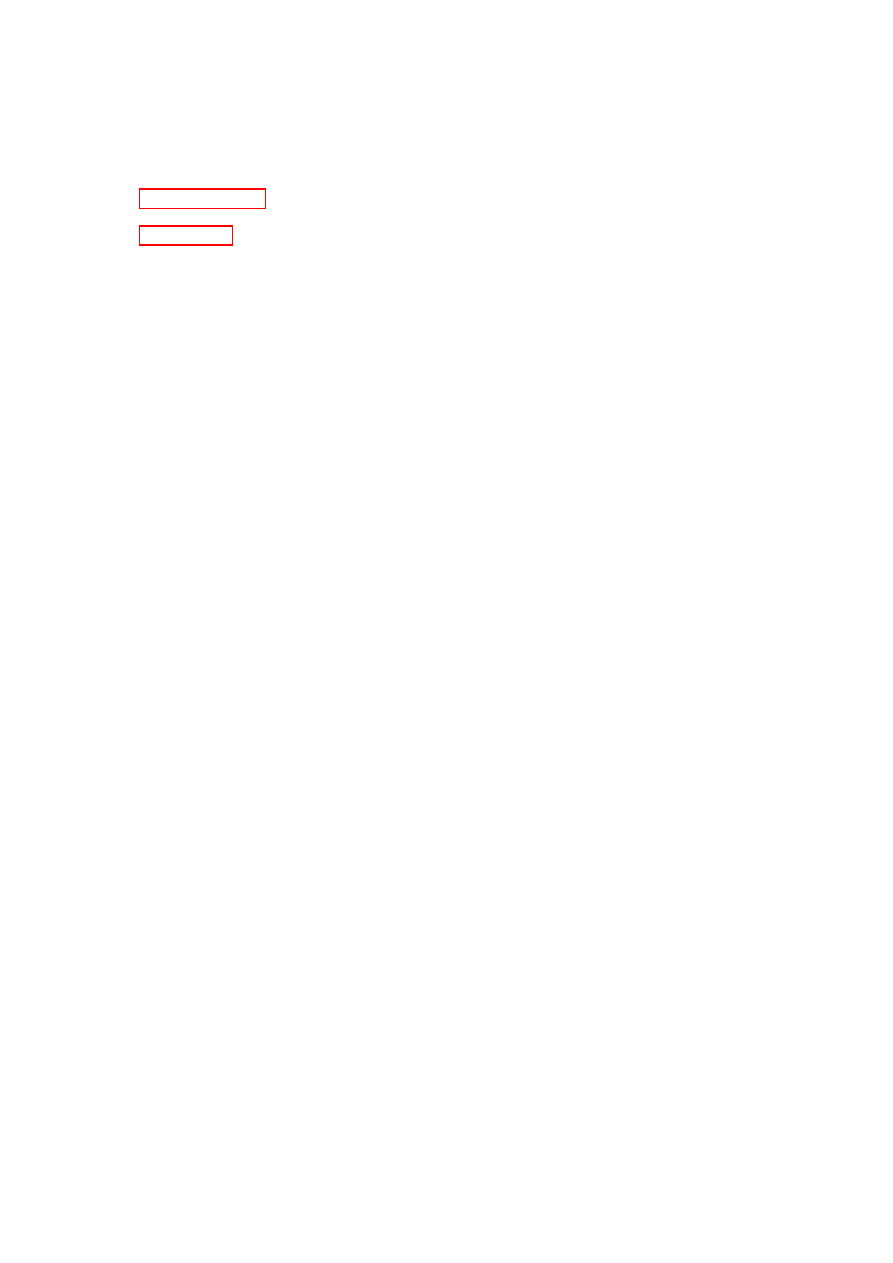

Figure 2.3: Enabling Multi-Tenant by Filtering the XenAPI

Overall this approach allows customers to run any XenAPI-based manage-

ment software. By using the XenAPI-based CIM

1

provider from

http://wiki.

xensource.com/xenwiki/XenCim

, customers can furthermore use any ex-

isting CIM-based management tool to manage their domains.

Similarly, the datacenter operator can directly access the underlying XenAPI

to manage the overall datacenter.

1

This refers to the DMTF System Virtualization, Partitioning, and Clustering Working Group

(SVPC WG). See

www.dmtf.org

.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

Chapter 3

Policy Enforcement for Virtual

Datacenters

Serdar Cabuk, Chris I. Dalton, Dirk Kuhlmann (HP) Konrad Eriksson,

HariGovind V. Ramasamy, Matthias Schunter (IBM), Gianluca Ramunno (POL),

Ahmad-Reza Sadeghi, Christian Stüble(RUB)

In this chapter wee describe a security management framework that helps re-

alize the abstraction of TVDs by guaranteeing reliable isolation and flow control

between domain boundaries.

After introducing the key concepts such as Trusted Virtual Domains, we ex-

plain the security policies that are enforced in a virtual datacenter in Section 3.1.

The key idea is that the datacenter management can declare a inter-TVD policy

that defines how customers are isolated. Each customer (or domain of a customer)

can then define a intra-TVD security policy that defines how its internal security

is handled. Both policies hold for all resources and can subsequently be refined.

In Section 3.2 we then discuss how these policies are enforced. For enforcement

we focus on the two most important resources that are potentially shared. The key

idea is to provide virtual networks and storage that is assigned to a given domain

and where access by other domains is restricted.

3.1

Security Policies for Virtual Data Centers

Data centers provide computing and storage services to multiple customers.

Customers are ideally given dedicated resources such as storage and physical ma-

chines. Resources such as the Internet connection, however, may be shared be-

tween multiple customers. Each customer has a set of security requirements and

may often specify which security mechanisms must be used to satisfy these require-

ments. For example, different customers may specify different anti-virus software

to be used for their VMs and different virus scanning intervals.

To model and implement these heterogeneous requirements, we introduce a

domain-based security model for enforcing unified security policies in virtualized

13

14

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

data centers. We focus on isolation policies that mimic physical separation of data

center customers. Our goal is to logically separate customer networks, storage,

VMs, users, and other virtual devices. The core idea is to use this isolation as a

foundation for guaranteeing desired security properties within each virtual domain

while managing shared services under mutually agreed policies.

3.1.1

High-level Policy Model

Our security model is based on TVDs [BGJ

+

05], which isolate their resources from

resources of other TVDs. In the following, we distinguish between the following

resource types:

Virtual Processing Elements: Virtual Processing Elements (VPE) are active ele-

ments (subjects), such as physical or virtual machines, that can be member

of one or more TVDs.

Virtual Infrastructure Elements: Virtual Infrastructure Elements (VIE) are pas-

sive elements (objects), such as physical or virtual disks, that can be member

of one or more TVDs.

Note that computing platforms that host VMs but are not directly member of a TVD

are not considered as VPEs. Moreover, the security model includes two high-level

policies defining the security objectives that must be provided by the underlying

infrastructure:

Inter-TVD Policy: By default, each TVD is isolated from the “rest of the world”.

The high-level information-exchange policy defines whether and how infor-

mation can be exchanged with other TVDs. If no information exchange with

other TVDs is permitted, no resources can be shared unless the data-center

operator can guarantee that these resources guarantee isolation. If flow is in

principle allowed, sub-policies further qualify the exact flow control policy

for the individual resources.

Intra-TVD Policy: Domain policies allow domain owners (e.g., customers) to

define the security objectives within their own TVDs. Examples of such

policies include how the internal communication is to be protected and un-

der what conditions resources (e.g., storage, machines) can join a particular

TVD.

3.1.2

Security Objectives and Policy Enforcement Points

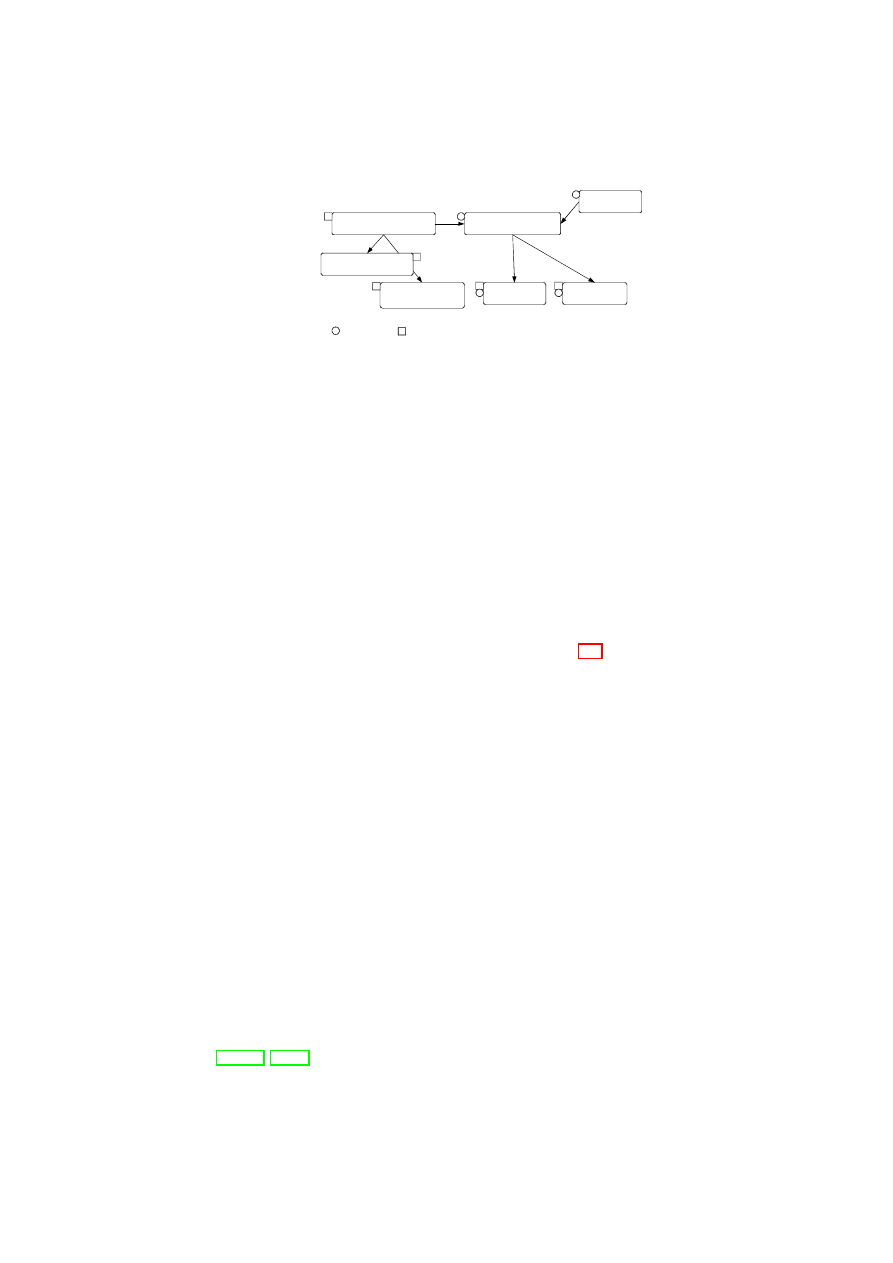

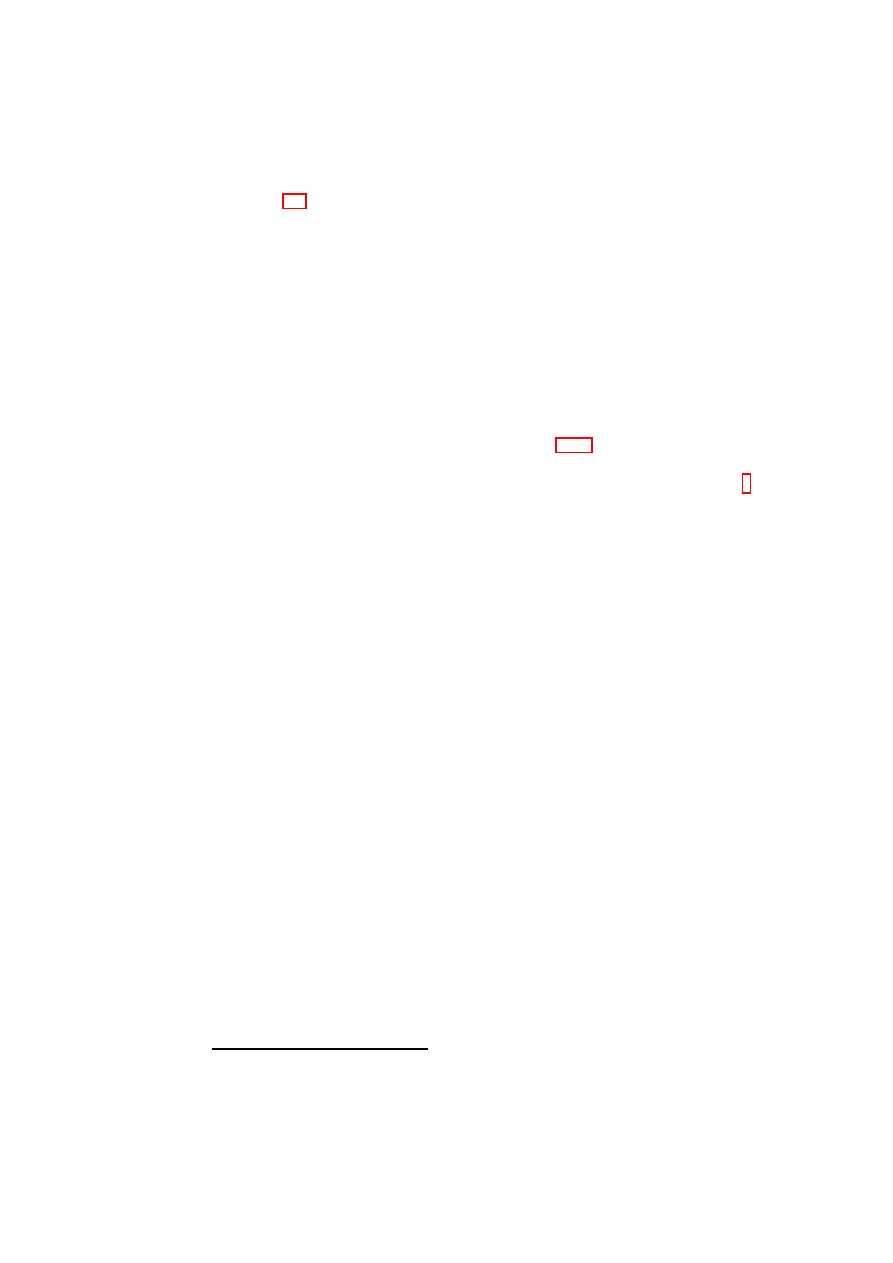

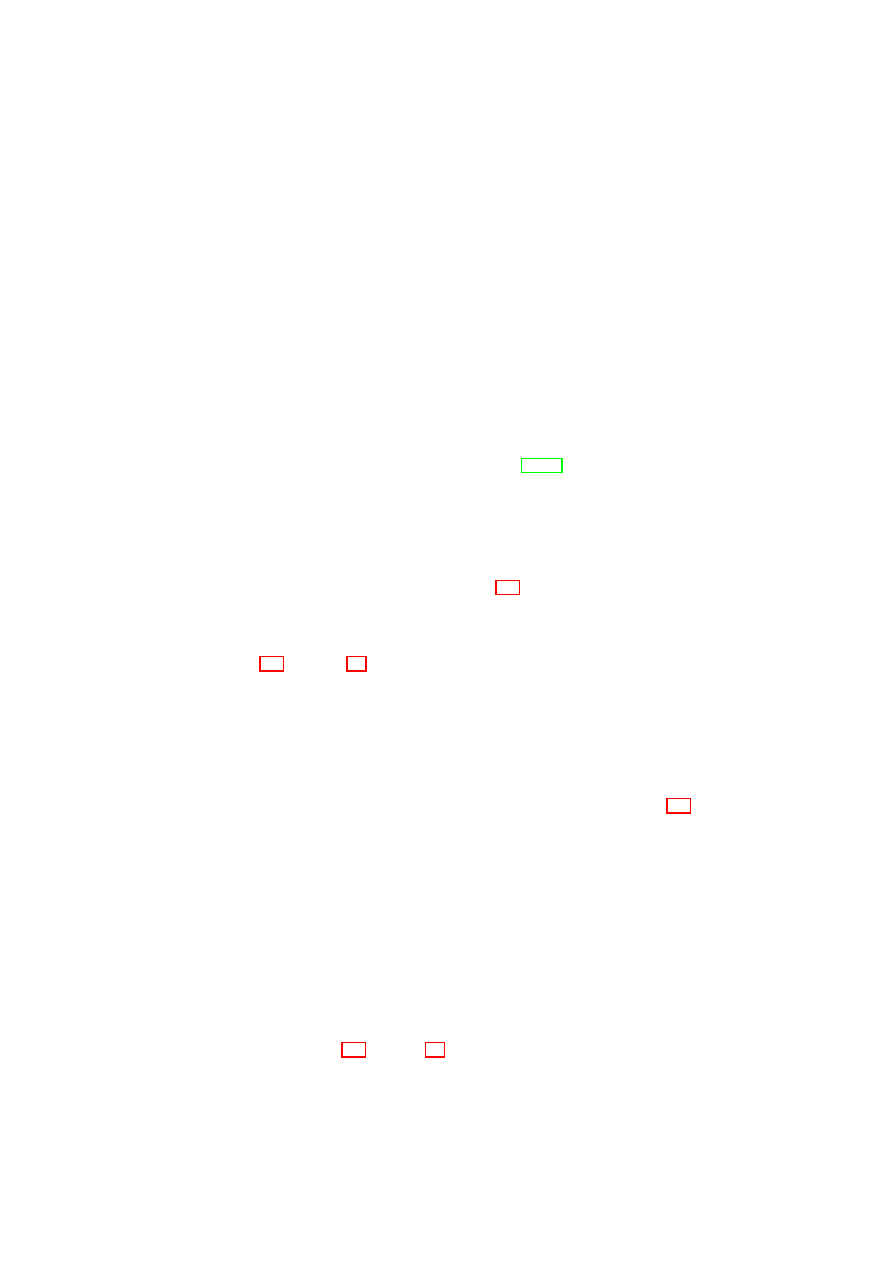

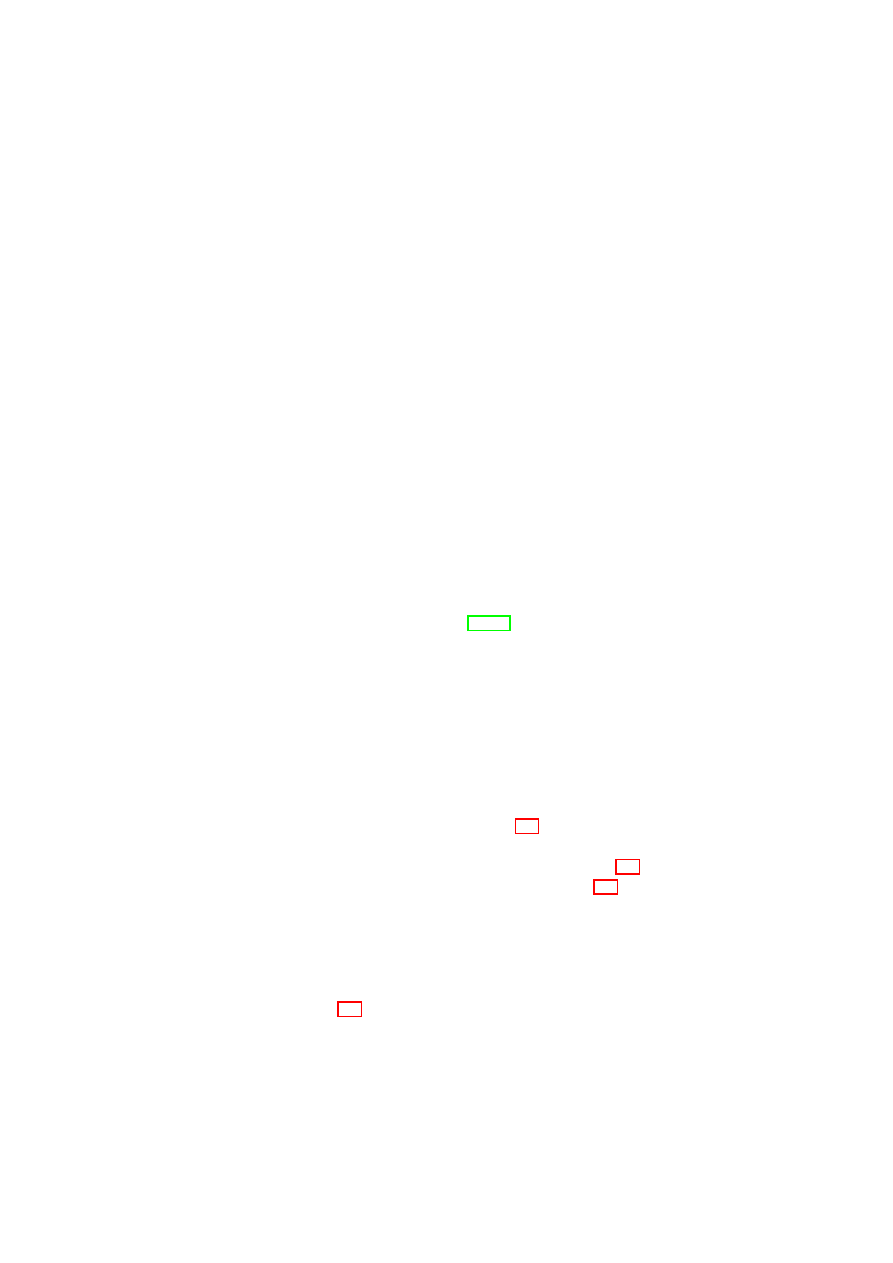

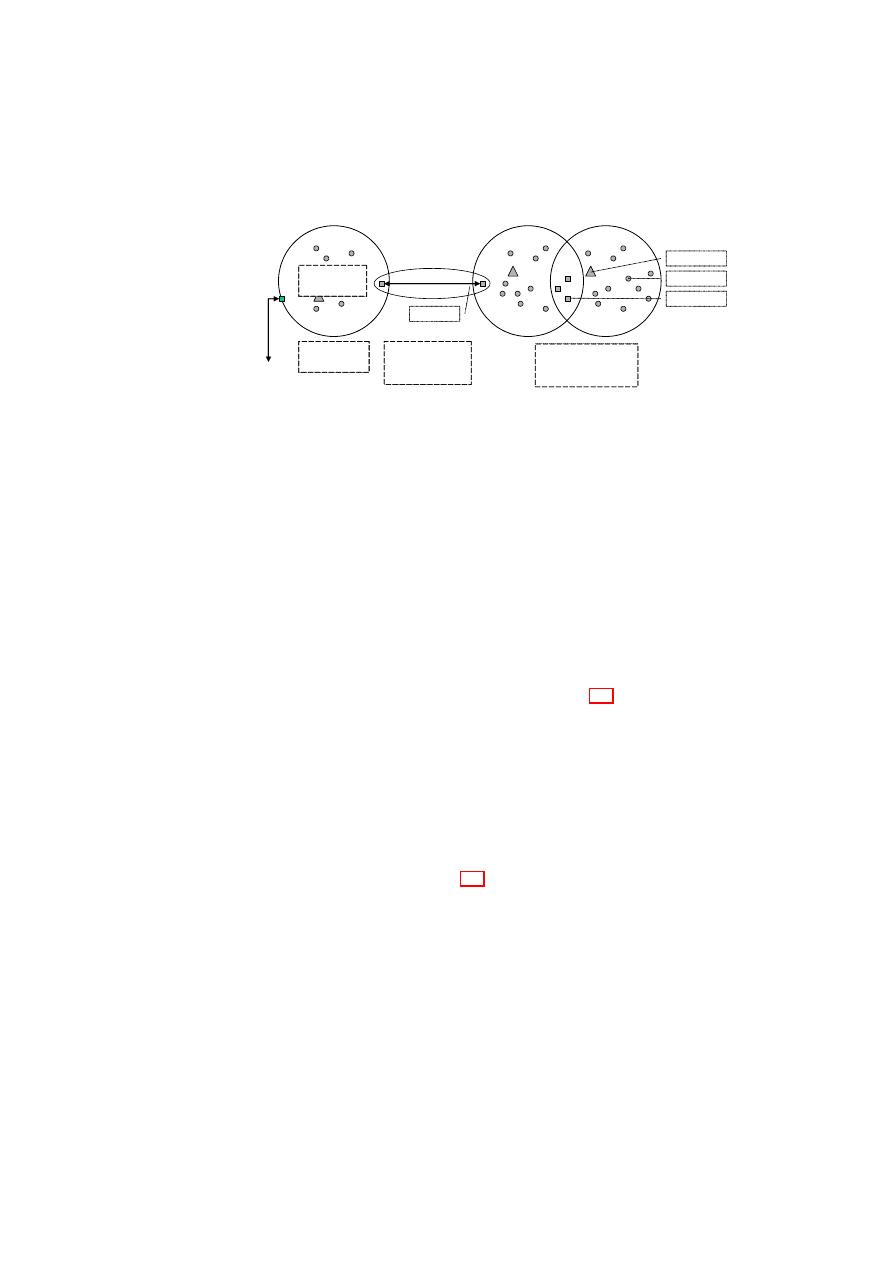

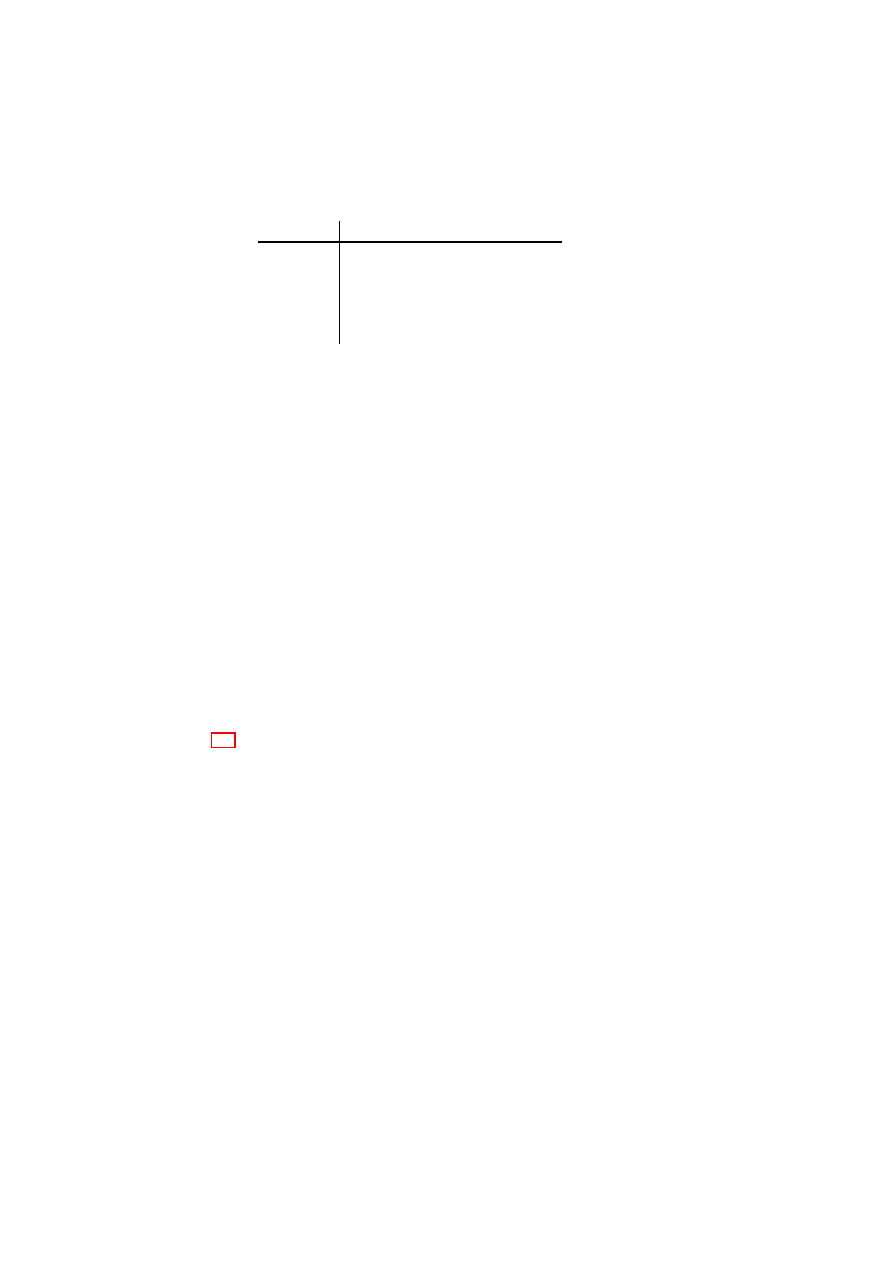

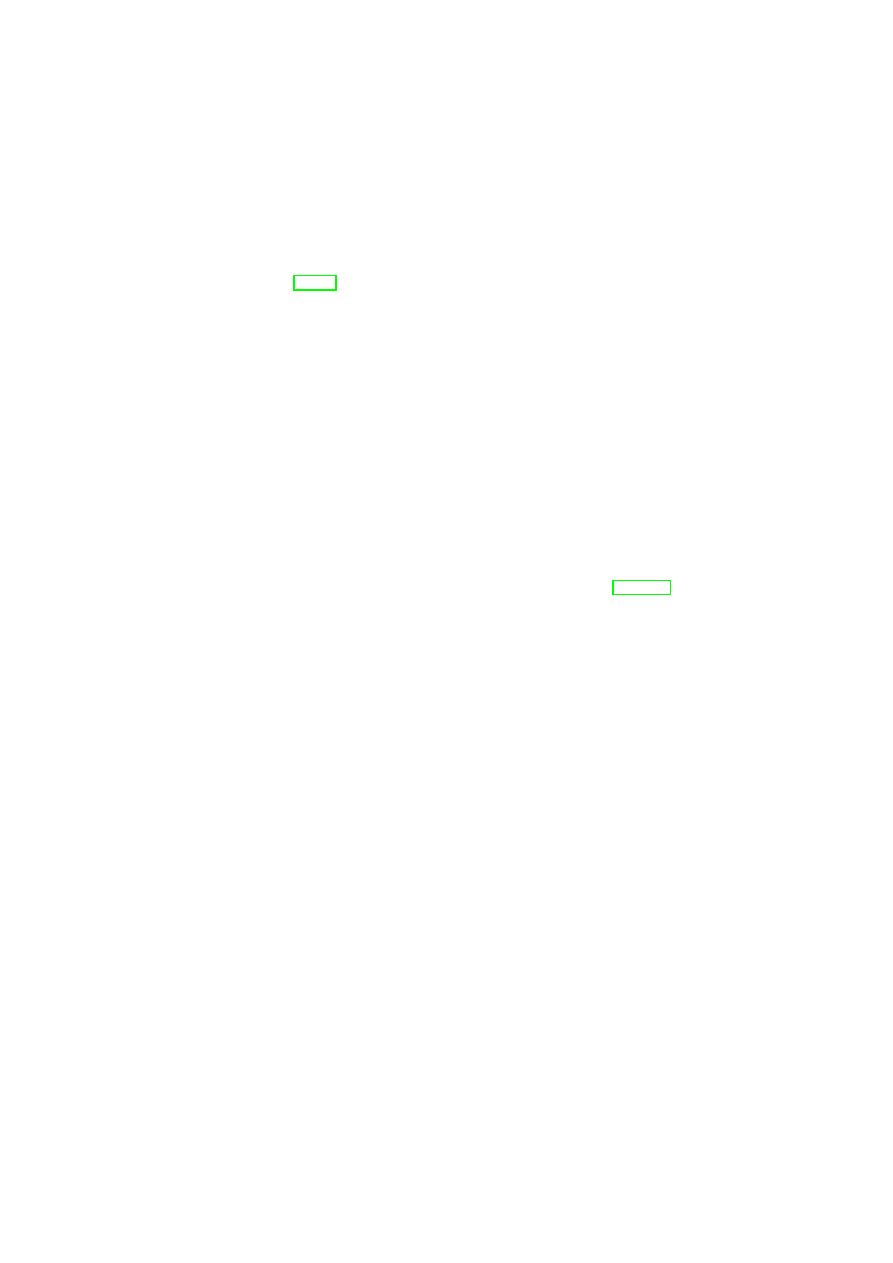

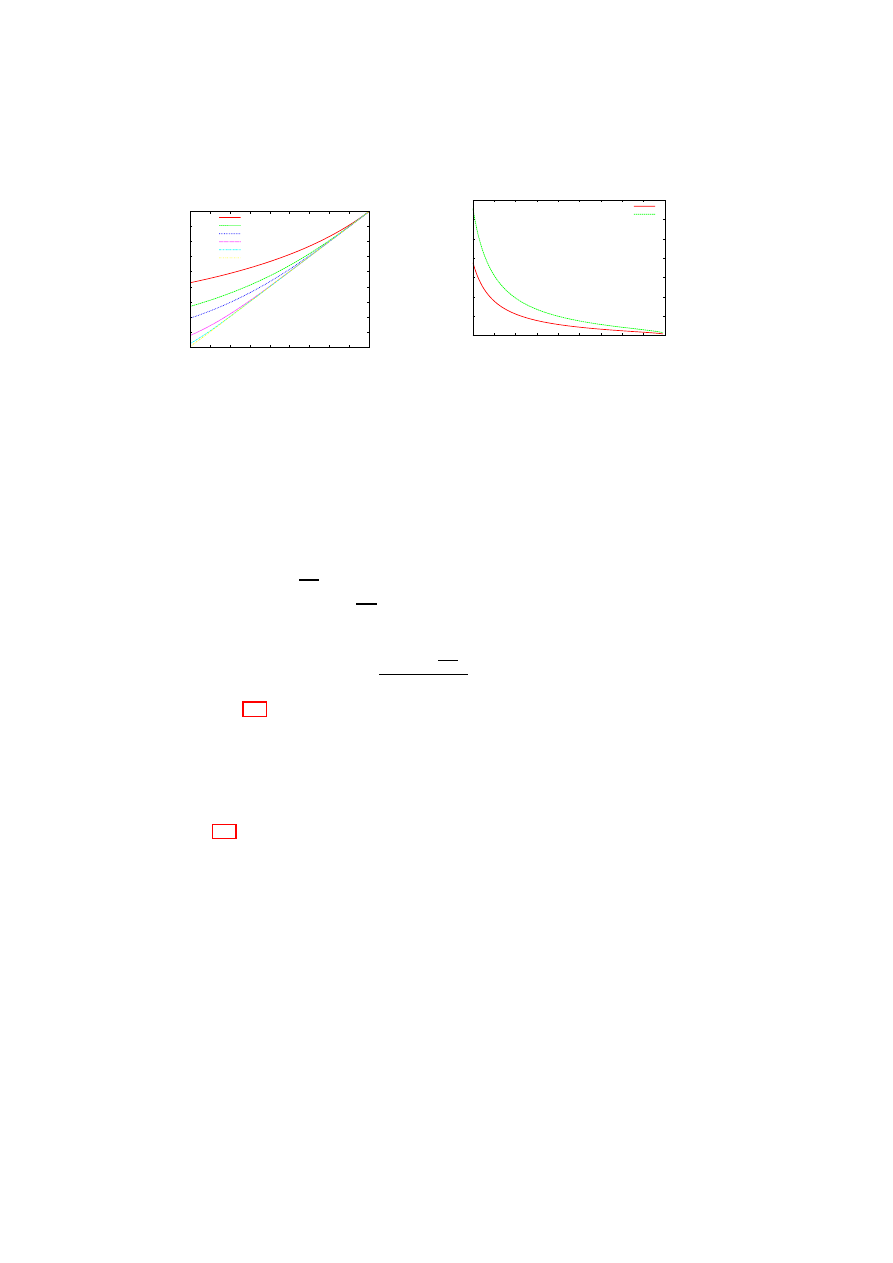

Policies are enforced across different resources in the TVD infrastructure (see Fig-

ure 3.1). The basis of all policies is isolation at the boundary of each TVD.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

15

TVD A

TVD B

Internet

Membership

Constraints

Flow

enforcement

by two gateways

Isolation

enforcement

TVD C

Flow

enforcement

by shared machines

TVD AB

Gateway

Server

Clients

Gateways

Machine Types:

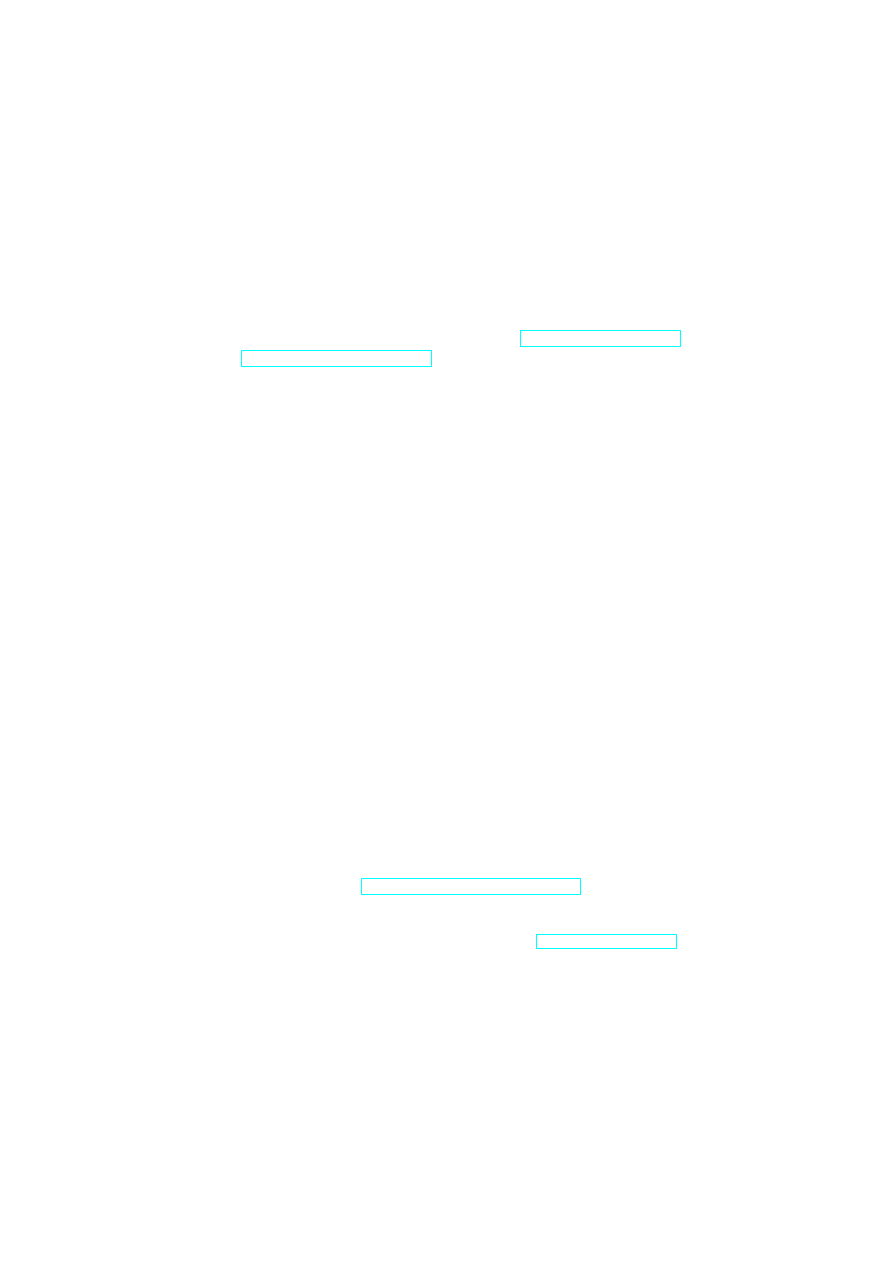

Figure 3.1: Types of TVD Policy Enforcement.

By default, each resource is associated with a single domain. This achieves a

basic level of isolation. If an information flow between TVDs is allowed, resources

can also be member of different TVDs. For example, a TVD can allow certain types

of resources on certain hosts to provide services also to other domains. Each TVD

defines rules regarding in-bound and out-bound information flow for restricting

communication with the outside world. The underlying policy-enforcement infras-

tructure then has to ensure that only resources trusted by all TVDs are shared.

Architecturally, there are two ways of enforcing such rules, depending on the

trust between the TVDs. The first method involves two shared resources connected

by an intermediate domain. In this method, each TVD enforces its side of the flow

control by means of its own shared resource. An example of this type of connection

is the one that exists between TVD A and TVD B in Figure 3.1. This method is used

when the trust level between TVD A and TVD B is low, and the two cannot agree

on a shared resource that is mutually trusted. The shared resource in TVD A will

enforce TVD A’s policies regarding in-bound traffic from TVD B, even if the shared

resource in TVD B does not enforce TVD B’s policies regarding out-bound traffic.

The shared resources can be thought of being a part of a “neutral” TVD (TVD AB)

with its own set of membership requirements.

The second method that requires

shared trust is to establish one or more shared resources that are accessed from

both TVDs while allowing controlled information flow. This mechanism is used

between TVD B and TVD C in Figure 3.1.

Security within a domain is finally obtained by defining and enforcing member-

ship requirements that resources have to satisfy prior to being admitted to the TVD

and for retaining the membership. This may also include special requirements for

different machine types: Because, for example, shared resources play a key role in

restricting information flow between TVDs, the software on those machines may

be subject to additional integrity verification as compared to the software on regular

VPEs.

Flow control policies define the allowed traffic flow between two domains and

how the domains should be protected. Membership policies define domain-internal

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

16

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

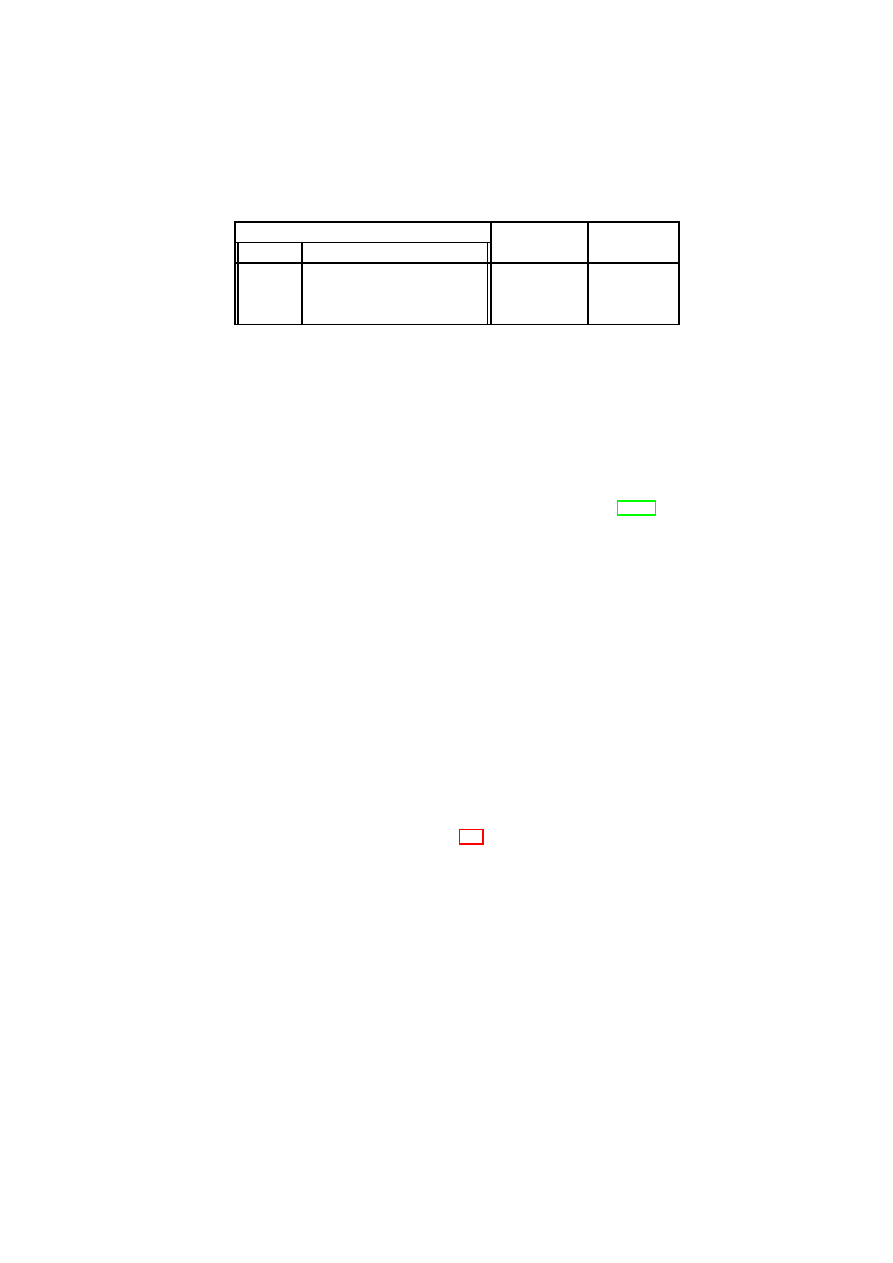

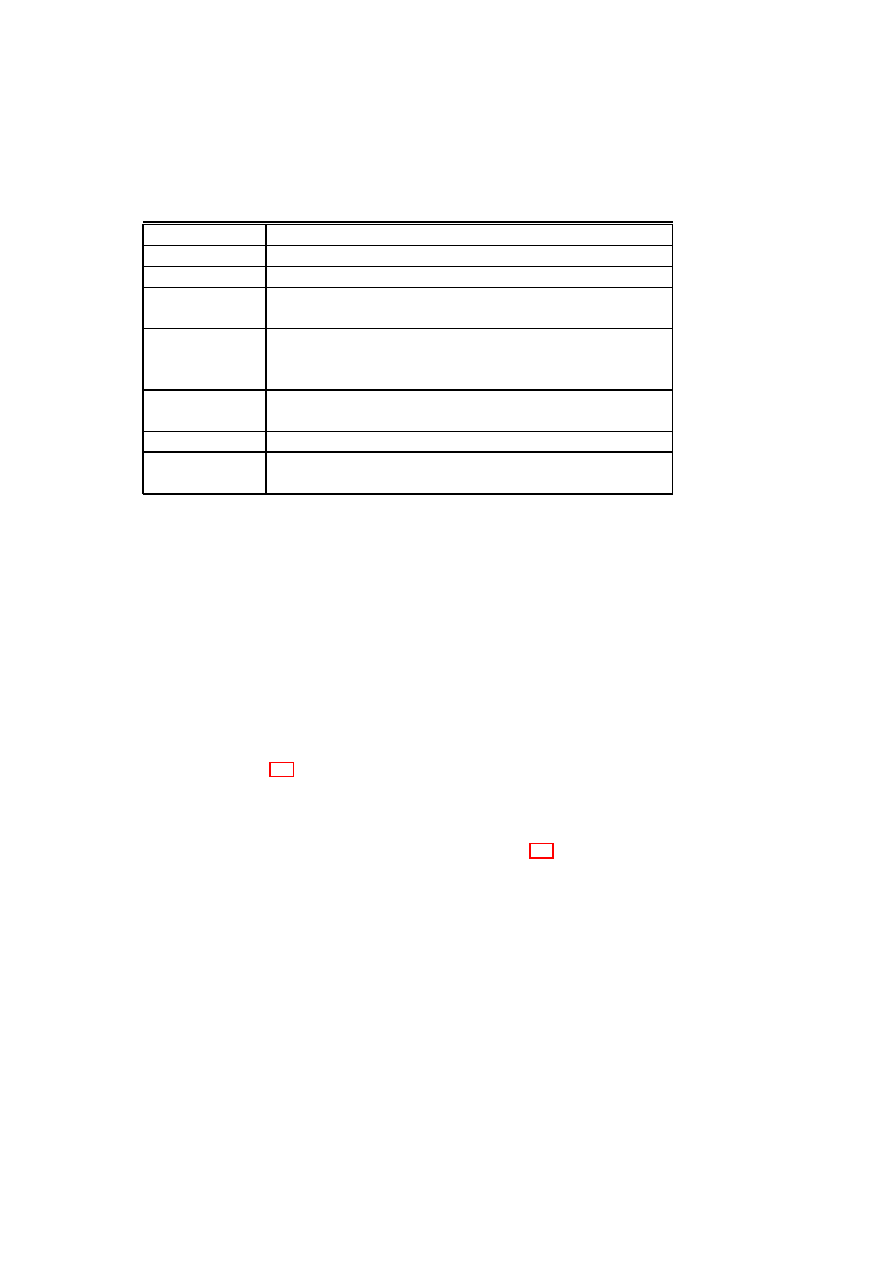

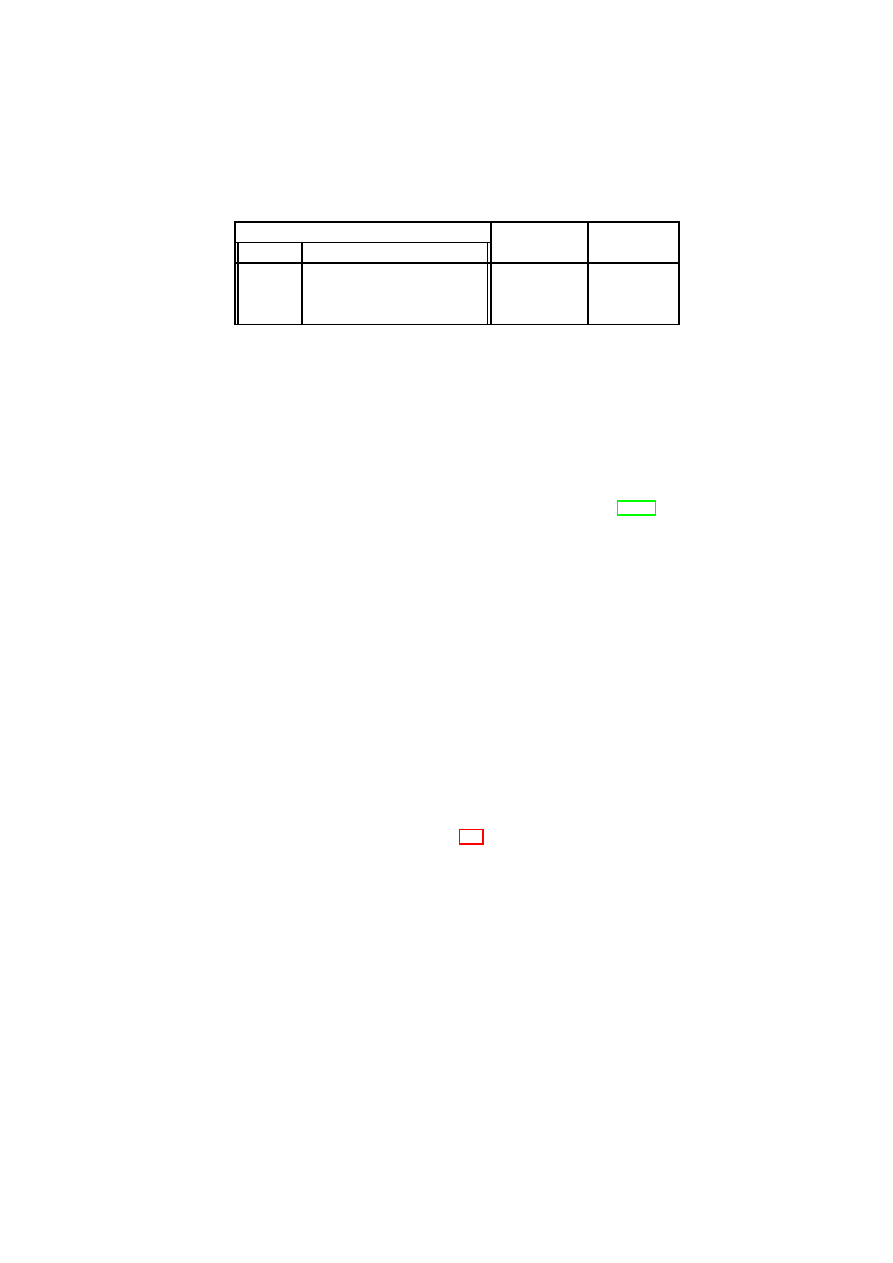

Table 3.1: High-level Directed Flow Control Matrix for Internet

D

I

, DMZ

D

D

,

and Intranet

D

i

(

1

allows information flow, whereas

0

denies it).

From / to

D

I

D

D

D

i

D

I

1

1

0

D

D

0

1

1

D

i

0

1

1

security objectives as well as the prerequisites for becoming a member of a domain.

Isolation and Permitted Flows in Data Centers

Proper customer separation in a virtualized environment requires the logical sep-

aration of all resources that belong to one TVD from those of another TVD. As

mentioned above, this can either be achieved by assigning each resource only to a

single domain or by enforcing flow control to limit the information flow through

shared resources that belong to multiple TVDs.

By default, a VPE and its local memory belong to a single TVD. If VPEs are

assigned to two or more TVDs they act as a gateway allowing controlled infor-

mation flow between TVDs. This includes their system image and swap-files. To

consistently enforce flow control between domains, we need to control the data

flow in shared VPEs, storage, and other devices.

Allowed information flows can be represented by a simple flow control matrix

as depicted in Table 3.1. Note that this matrix is directional, i.e., it might allow

flows in one direction but not in the opposite direction. If flow policies between

two TVDs are asymmetric, only shared resources that can enforce these policies

are permitted. Device-specific policies (network, storage) can then refine these

basic rules. If an information flow is not permitted, then also shared resources are

not permitted between these TVDs.

Membership Requirements

Membership requirements define under what conditions resources may join a do-

main. From a high-level policy perspective, several criteria can be applied to decide

whether an entity is allowed to join a domain, for example:

•

Certificates: An authority defined by the TVD policy can certify a resource

to be member of a TVD.

•

Attestation Credentials: A resource may prove its right to join a TVD us-

ing integrity credentials, e.g., by means of Trusted Computing functionality,

such as remote attestation.

•

White-listing: Only entities that are listed on a white-list can join.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

17

In general, a resource may need to show proper credentials to prove that it ful-

fills certain properties [SS05] before allowing the resource to join the TVD. The

validations of these properties are usually done on a per-type basis. This means

that, e.g., the requirements for a shared resource are usually stronger than the re-

quirements for a TVD-internal resource.

One way of formalizing the network membership requirements is to define a

function

M

:

T

→

2

P

, where

(

P,

≤

)

is a lattice of security properties. A machine

m

with a set

p

m

of security properties may be permitted to join the TVD

t

if and

only if

∀

p

∈

M

(

t

) :

∃

p

′

∈

p

m

such that

p

′

≥

p

. In other words,

m

is permitted to

join

t

if and only if there is at least one property of

m

that satisfies each security

requirement of

t

.

3.1.3

Example Policy Refinements for Protected Resources

Policies alone are not sufficient to enforce customer separation. Ultimately, one

needs to transform these policies into data-center configurations and security mech-

anisms specific to each resource (e.g., VLAN configuration). To do so, we intro-

duce a policy management scheme that accepts high-level domain policies and

transforms them into resource-specific low-level policies and configurations.

Refinement Model

The high-level policy defines the basic flow control, protection, and admission

requirements. We aim at enforcing these high-level objectives throughout all re-

sources in the data center.

In the high-level model, flow control is specified by a simple matrix that de-

fines whether flows are permitted. This however is not sufficiently fine-grained for

specific resources. TVDs, for example, want to restrict their flow across bound-

aries by means of firewall rules. As a consequence, we need to introduce a notion

of policy refinement [Wes01], because as translation moves towards lower levels of

abstraction, it will require additional information (e.g., physical arrangement of the

data center, “subjective” trust information) to be correctly and coherently executed.

Our notion of policy refinement mandates the enforcement of “no flow” objec-

tives while allowing each resource to refine what it means so that flows are permit-

ted and how exactly unauthorized flows shall be prevented. Similarly, we do not

allow resources to deviate from the confidentiality/integrity objectives; however,

certain resources can be declared trusted so that they may enforce these objectives

without additional security mechanisms such as encryption or authentication.

Similarly, the fact that admission is restricted is then refined by specific admis-

sion control policies that are enforced by the underlying infrastructure.

Note that conflict detection and resolution [Wes01, LS99] can later be used to

extend this simple notion of refinement. However, we currently stay on the safe

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

18

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

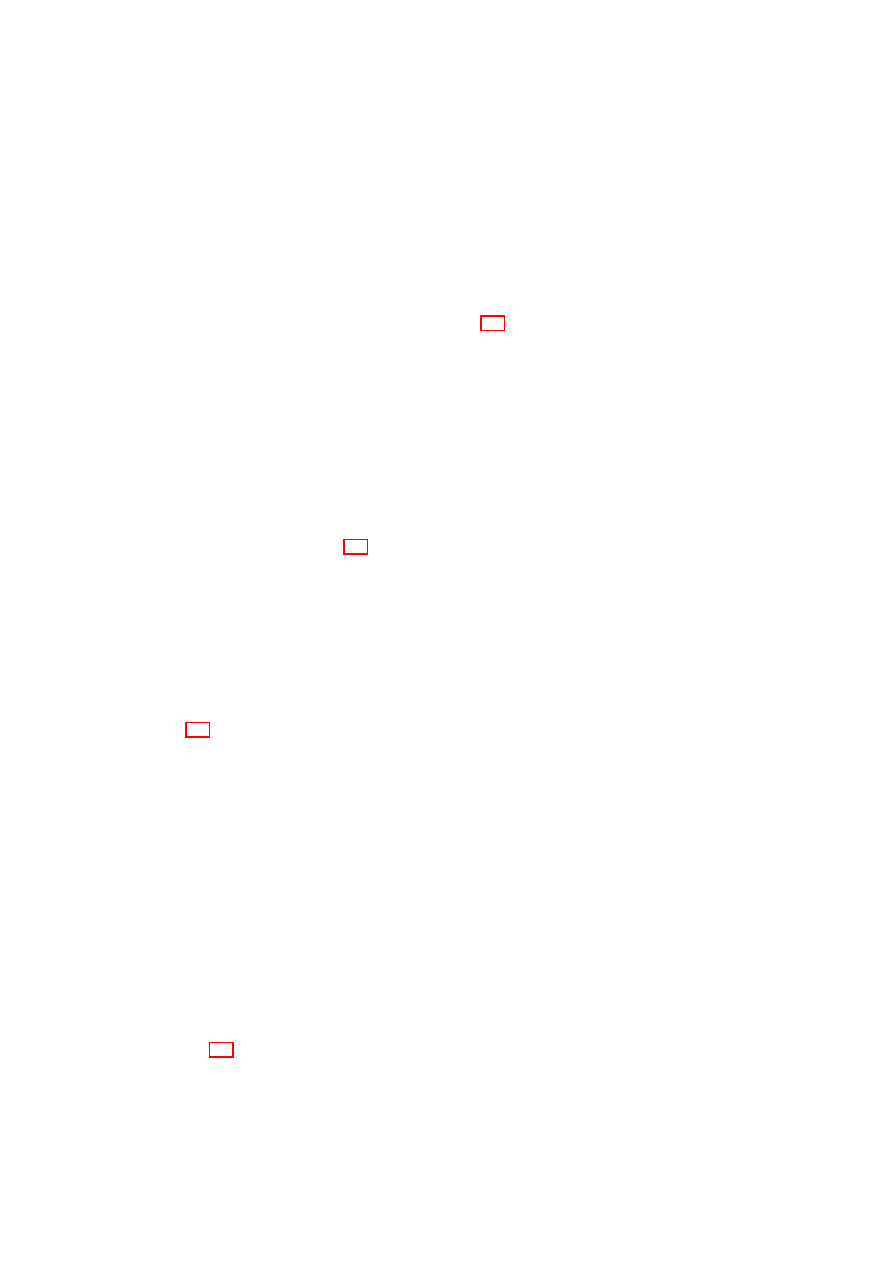

Table 3.2: Example Network Flow Control Policy Matrix for Three TVDs.

Enforced by/flow to

D

I

D

D

D

i

D

I

1

P

ID

0

D

D

0

1

P

Di

D

i

0

P

Di

1

side: connections are only possible if both TVDs allow them. Similarly, if one

domain requires confidentiality, information flows are only allowed to TVDs that

also require confidentiality. Other schemes for more elaborate flow control have

been proposed in [EASH05, CBL07, N. 01, FWH

+

01].

Network Security Policies

We now survey the policy model of [CDRS07] and show how it related to the

corresponding high-level policy. Similar to our high-level policies, there are two

types of policies governing security in the network. The first limits flow between

networks, whereas the second defines membership requirements to each network.

Network Security Policies across TVDs

A policy covers isolation and flow con-

trol between TVDs as well as integrity and confidentiality against outsiders. These

basic security requirements are then mapped to appropriate policies for each re-

source. For example, from a networking perspective, isolation refers to the re-

quirement that, unless the inter-TVD policies explicitly allow such an information

flow, a dishonest VPE in one TVD cannot (1) send messages to a dishonest VPE in

another TVD (information flow), (2) read messages sent on another TVD (confi-

dentiality), (3) alter messages transmitted on another TVD (data integrity), and (4)

become a member of another TVD network (access control).

TVDs often constitute independent organizational units that may not trust each

other. If this is the case, a communication using another TVD can be established

(see the communication between TVD A and B in Figure 3.1).

The advantage of such a decentralized enforcement approach is that each TVD

is shielded from security failures in other TVDs. For networks, the main inter-

TVD security objectives are information flow control among the TVDs as well as

integrity and confidentiality protection of the channel.

An information flow control matrix is a simple way of formalizing these

system-wide flow control objectives. Table 3.2 shows a sample matrix for the three

example TVDs introduced earlier. Each matrix element represents a policy spec-

ifying both permitted in-bound and out-bound flows between a pair of TVDs, as

enforced by one of the TVDs. The

1

elements along the matrix diagonal convey

the fact that there is free information flow within each TVD. The

0

elements in the

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

19

matrix are used to specify that there should be no direct information flow between

two TVDs, e.g., between the Internet

D

I

and the intranet

D

i

. Care must be taken

to ensure that the pairwise TVD policies specified in the information flow control

matrix do not accidentally contradict each other or allow undesired indirect flow.

In our practical realization these network flow policies are implemented by firewall

rules.

Intra-TVD Network Security Policy

Within a TVD, all VPEs can freely com-

municate with each other while observing TVD-specific integrity and confidential-

ity requirements. For this purpose, the underlying infrastructure may ensure that

intra-TVD communication only takes place over an authenticated and encrypted

channel (e.g., IPSec), or alternatively, a trusted network

1

.

Towards Storage Security Policies

Virtual disks attached to VPEs must retain the advantages offered by storage vir-

tualization while at the same time enforcing TVD security policies. Advantages

of storage virtualization include improved storage utilization, simplified storage

administration, and the flexibility to accommodate heterogeneous physical storage

devices.

Different groups of virtual disks may exist for different purposes. For example,

the disk images that can be mounted for web-server VMs would be different from

those for database-server VMs. A virtual disk may be attached to multiple VMs

Inter-TVD Storage Security

A virtual disk has a single label corresponding to

the TVD it belongs to. Whenever a virtual machine operates on virtual storage, the

global flow matrix described in Section 3.1 needs to be satisfied. This is guaranteed

by the following policy refinement rules that define the allowed interactions:

1. A machine in domain

TVD

A

can write to a disk of domain

TVD

B

iff flow

from domain

TVD

A

to domain

TVD

B

is permitted.

2. A machine in domain

TVD

A

can read from a disk of domain

TVD

B

iff flow

from domain

TVD

B

to domain

TVD

A

is permitted.

Table 3.3 shows the resulting disk flow control policy. Note that as flow within

a domain is always allowed, this implies that disks of the same domain as the

machine may always be mounted read/write.

By default, we consider the content of a disk to be confidential. That is, if

a given domain does not declare a given storage medium as trusted, we deploy

whole-disk encryption using a key that is maintained by the TVD. Another aspect

reflected in the disk policies is the fact that we have a notion of blank disks. Once

1

A network is called trusted with respect to a TVD security objective if it is trusted to enforce

the given objective transparently. For example, a server-internal Ethernet can often be assumed to

provide confidentiality without any need for encryption.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

20

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

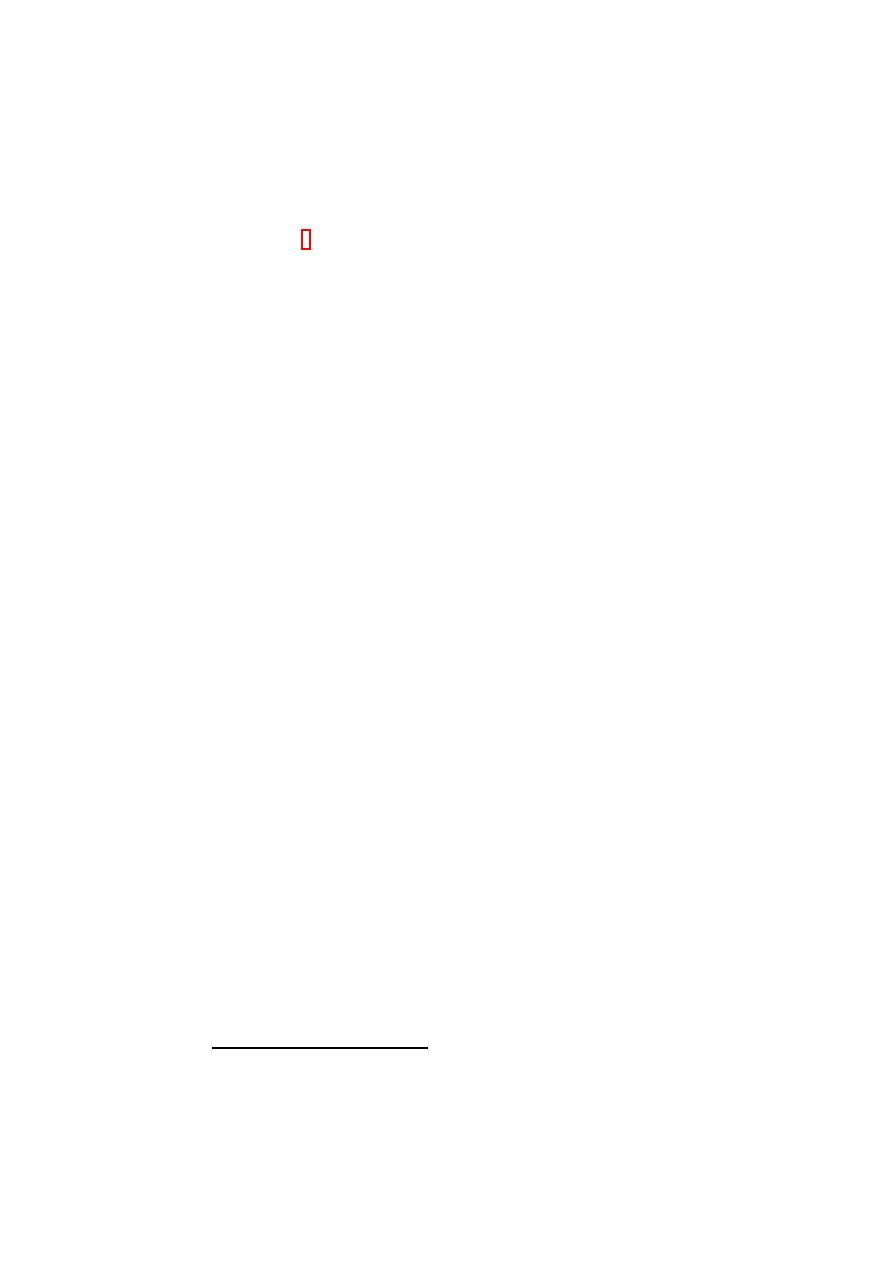

Table 3.3: Example of a Refined Disk Policy Matrix for Three TVDs.

Disk/VPE

D

I

D

D

D

i

D

I

r/w

w

0

D

D

r

r/w

r/w

D

i

0

r/w

r/w

Blank

r/

r/

r/

w

→

D

I

w

→

D

D

w

→

D

i

they are written by another domain, they change color, and are then associated with

this other domain.

Intra-TVD Storage Security

For protecting the data in a particular TVD, virtual

storage may in addition specify whether the disk is encrypted, which conditions on

the system must be satisfied before a disk may be re-mounted by a VPE that has

previously unmounted the disk, and whether shared mounting by multiple systems

is allowed. To be compatible with a high-level policy, a disk either has to be trusted

or else confidentiality requires encryption while integrity requires hash-trees pro-

tecting the disk. Similarly, membership restrictions require bookkeeping of disks

and management of access of VPEs to disks.

3.2

Unified Policy Enforcement for Virtual Data Centers

In this section, we introduce a TVD-based policy enforcement framework that

orchestrates the deployment and enforcement of the type of policies we presented

in Section 3.1 across the data center. Existing storage and network virtualization

technologies as well as existing Trusted Computing components (in software and

hardware) are the building blocks of our solution. Our framework (1) combines

these technologies to realize TVDs and (2) orchestrates them using the TVD in-

frastructure, which provisions the appropriate security mechanisms.

3.2.1

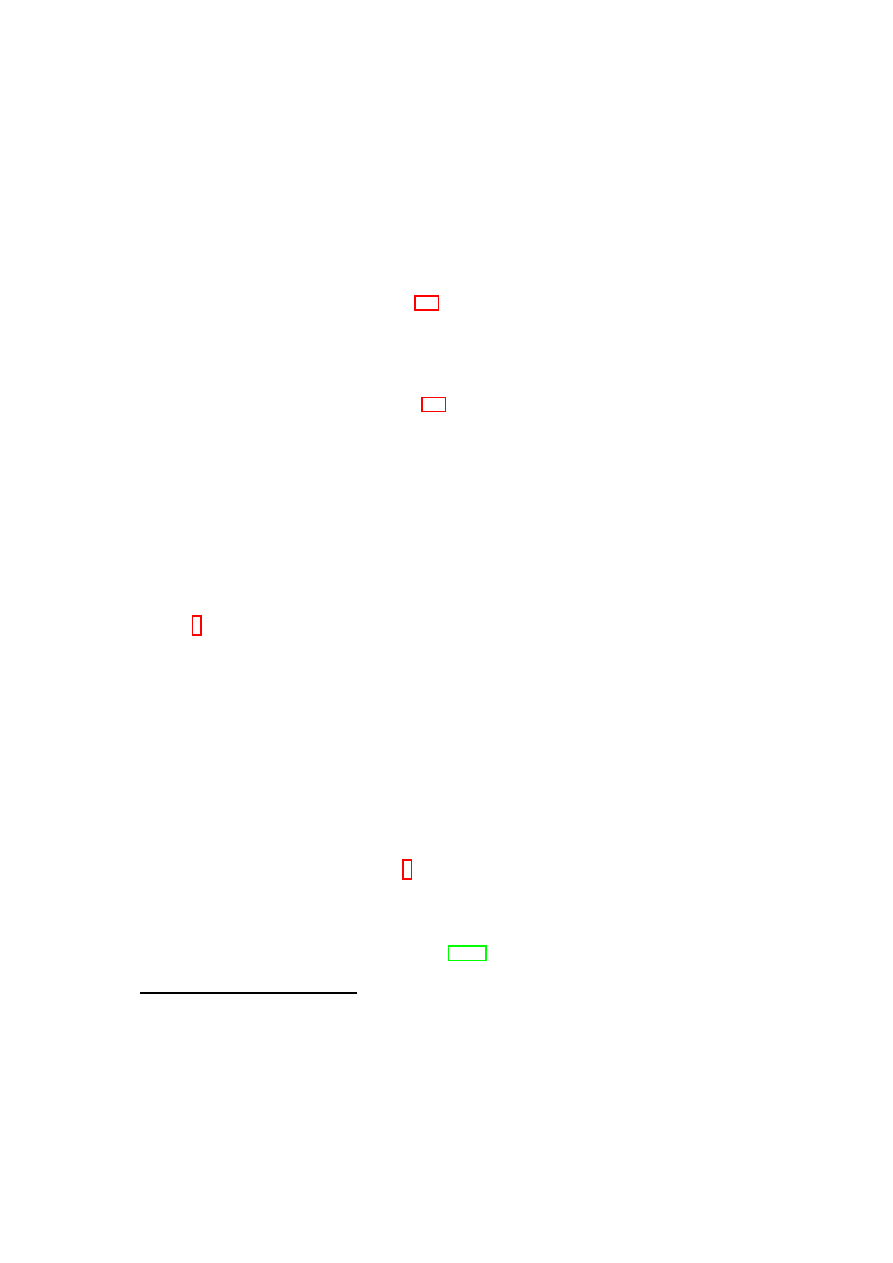

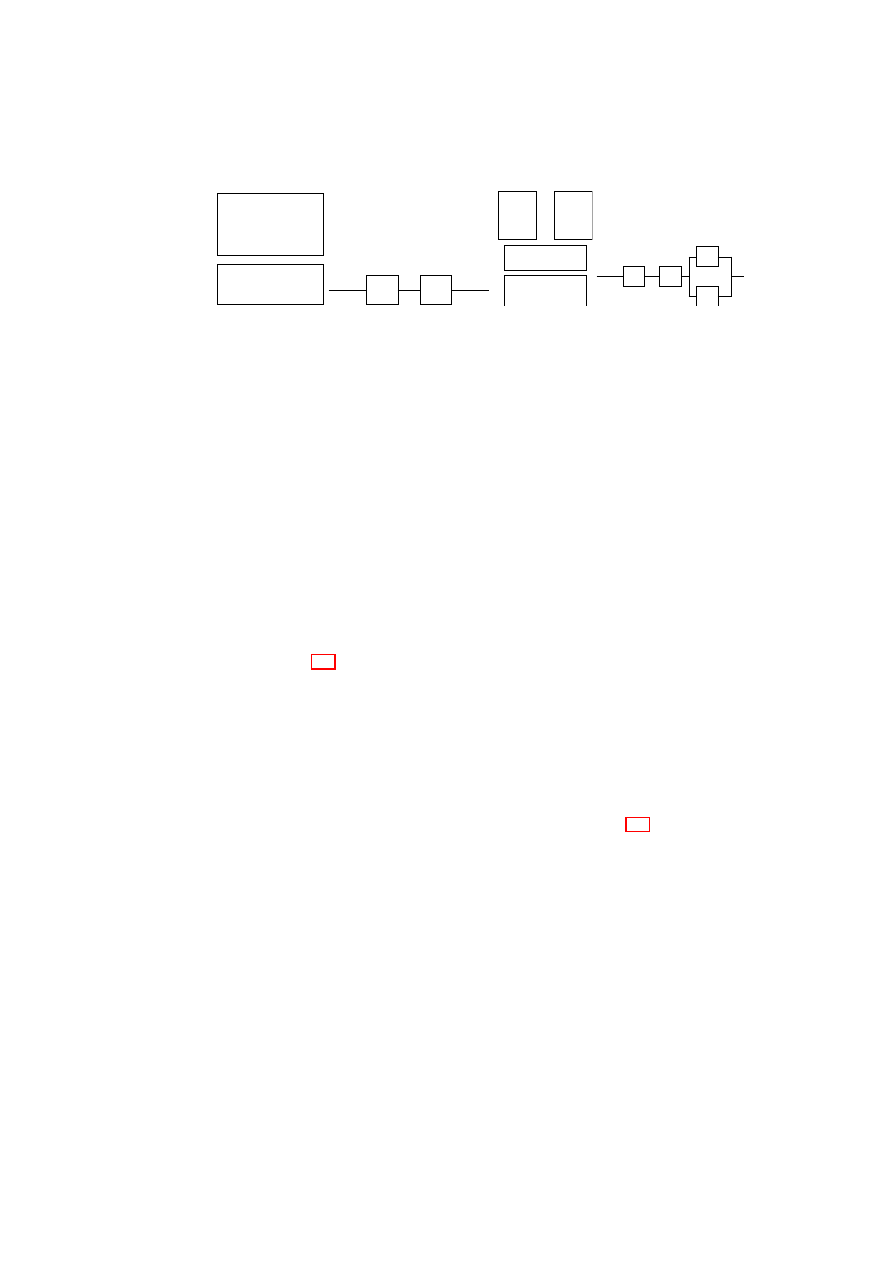

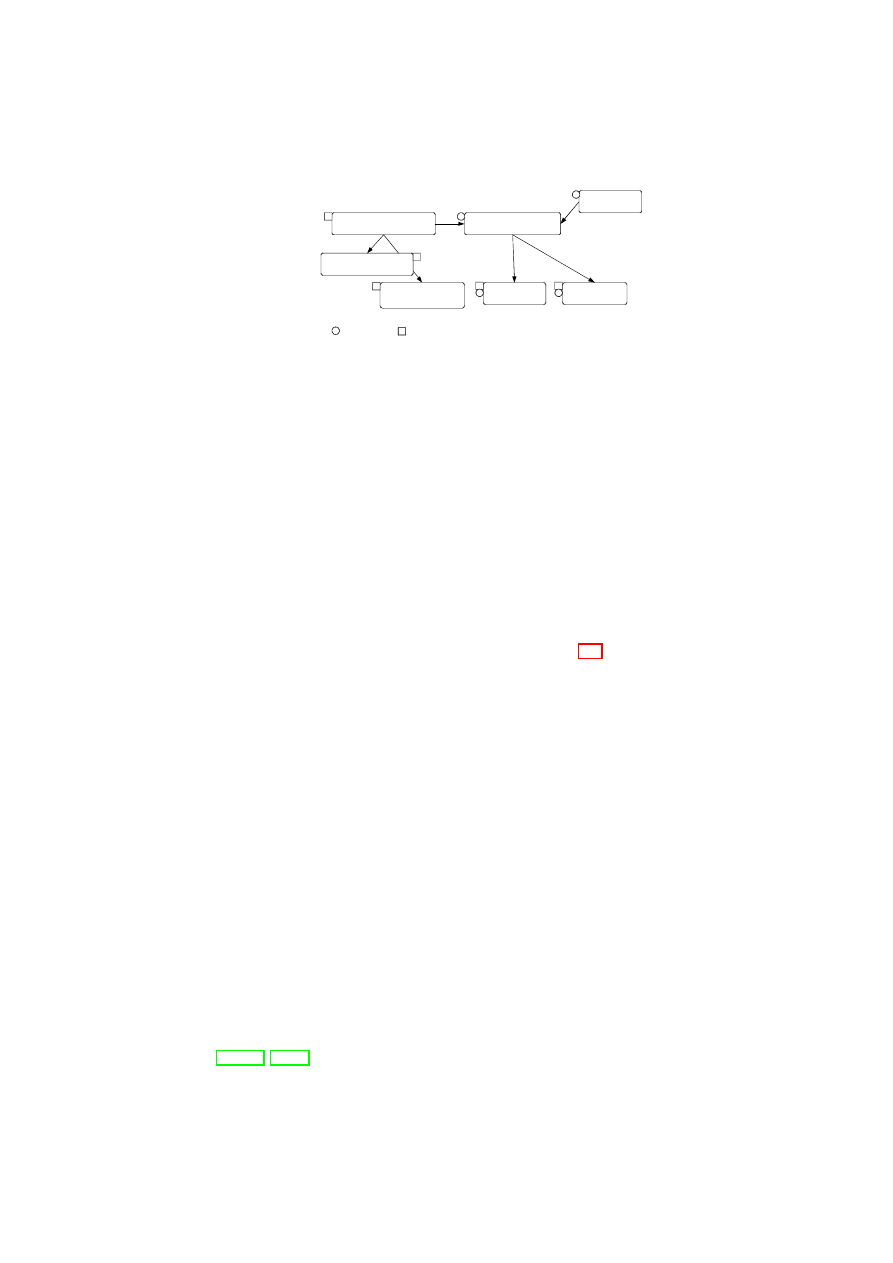

TVD Infrastructure

The TVD infrastructure consists of a management layer and an enforcement

layer. The TVD management layer includes TVD masters, proxies, and factories,

whereas the TVD enforcement layer consists of various security services. Each

TVD is identified by a unique TVD master that orchestrates TVD deployment and

configuration. The TVD master can be implemented as a centralized entity or have

a distributed fault-tolerant implementation. The TVD master contains a repository

of high-level TVD policies and credentials (e.g., VPN keys). The master also ex-

poses a TVD management API through which the TVD owner can specify those

policies and credentials. In the deployment phase, the TVD master first verifies the

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

21

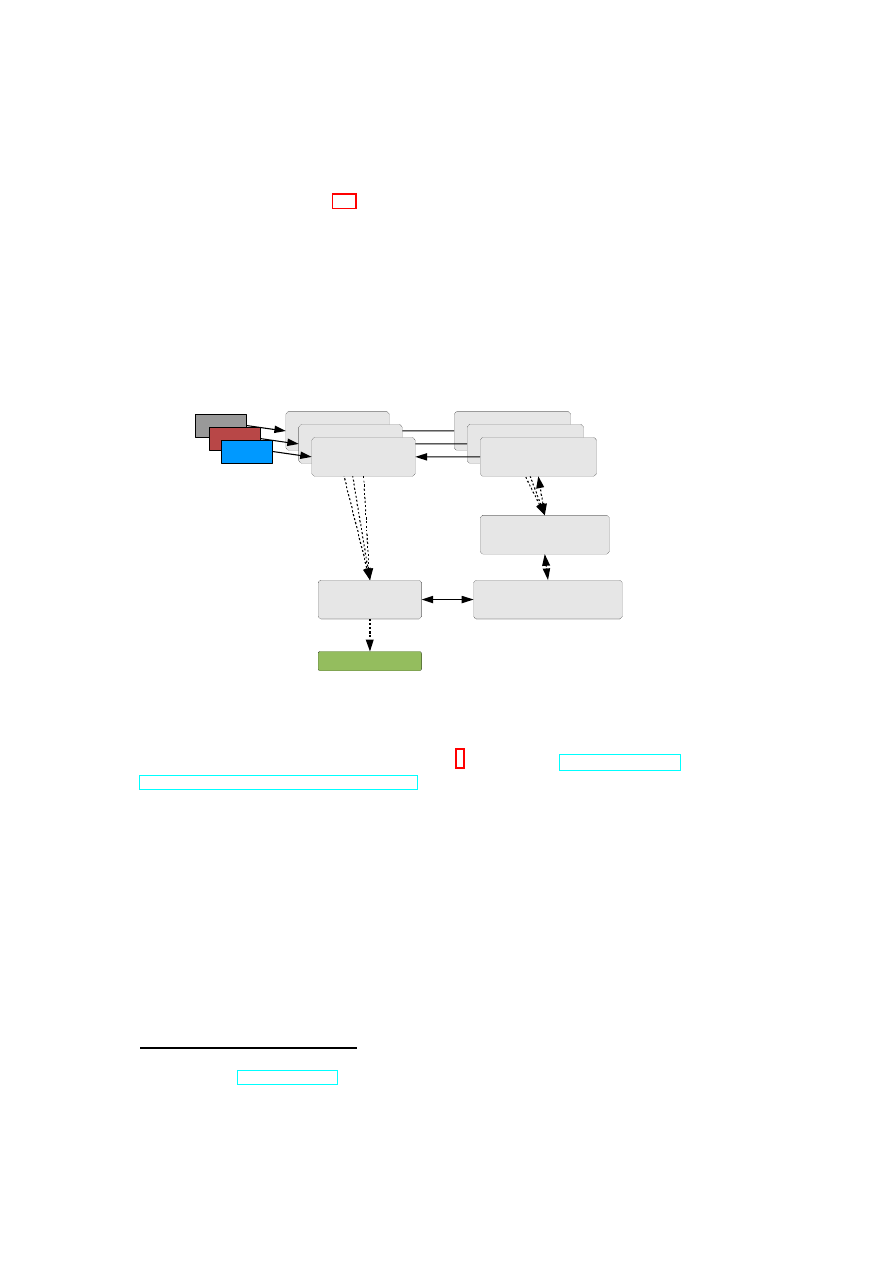

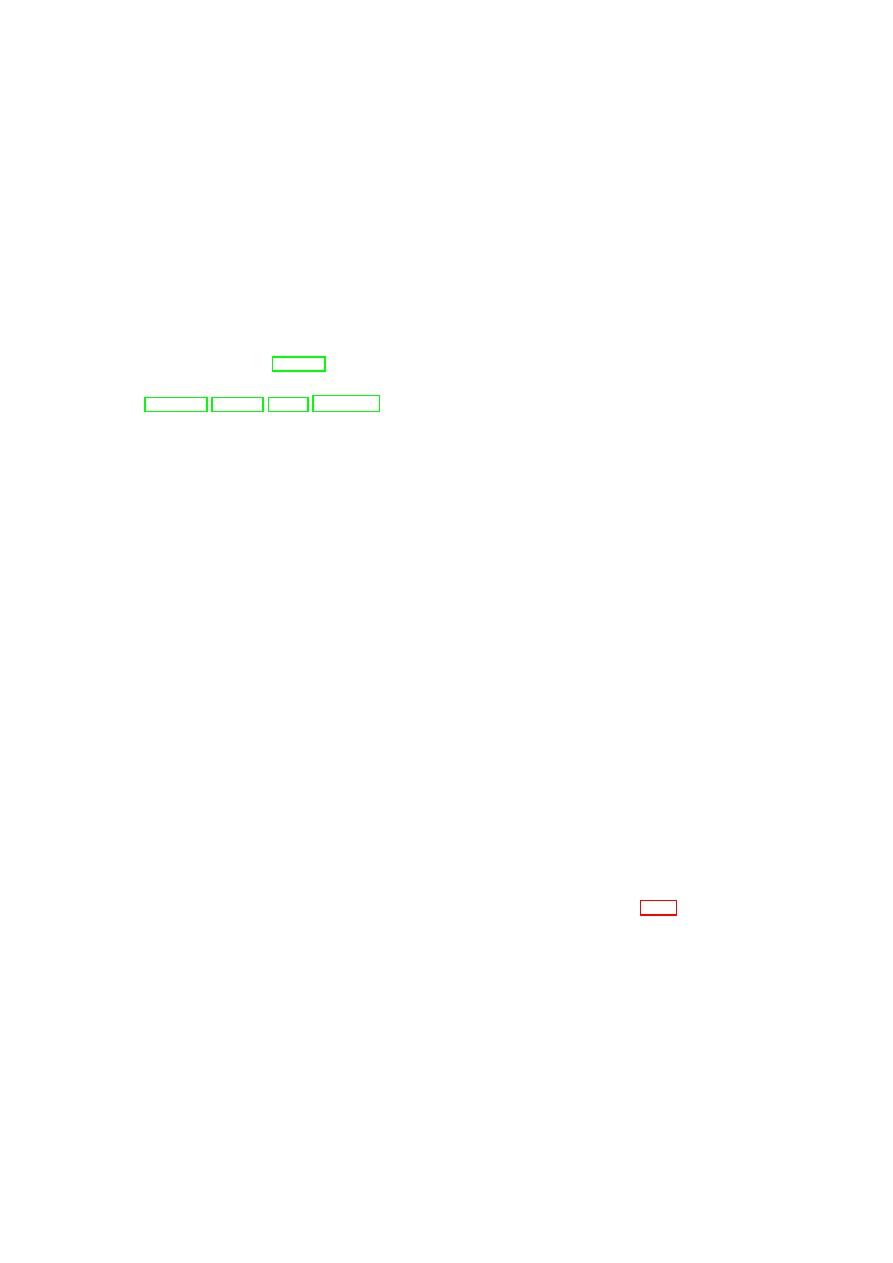

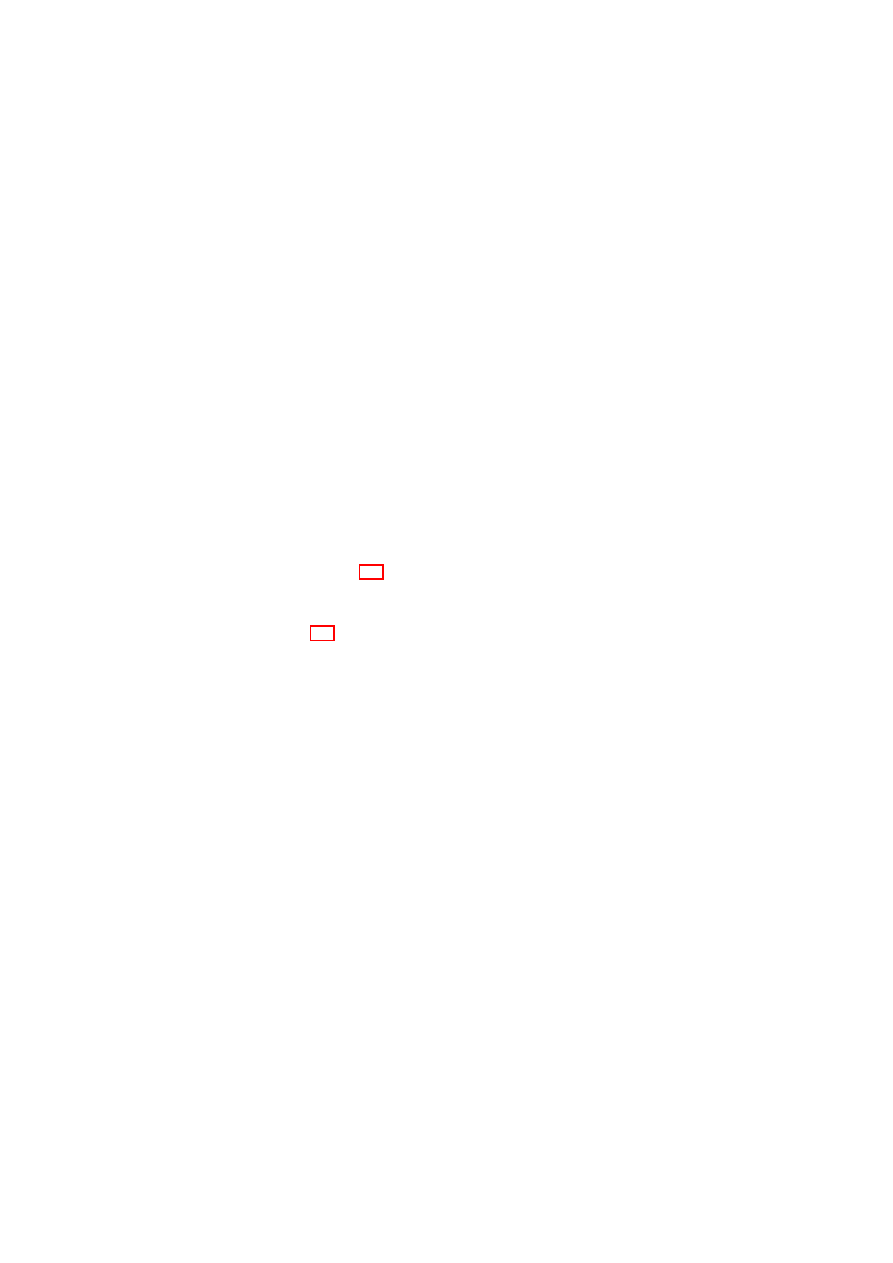

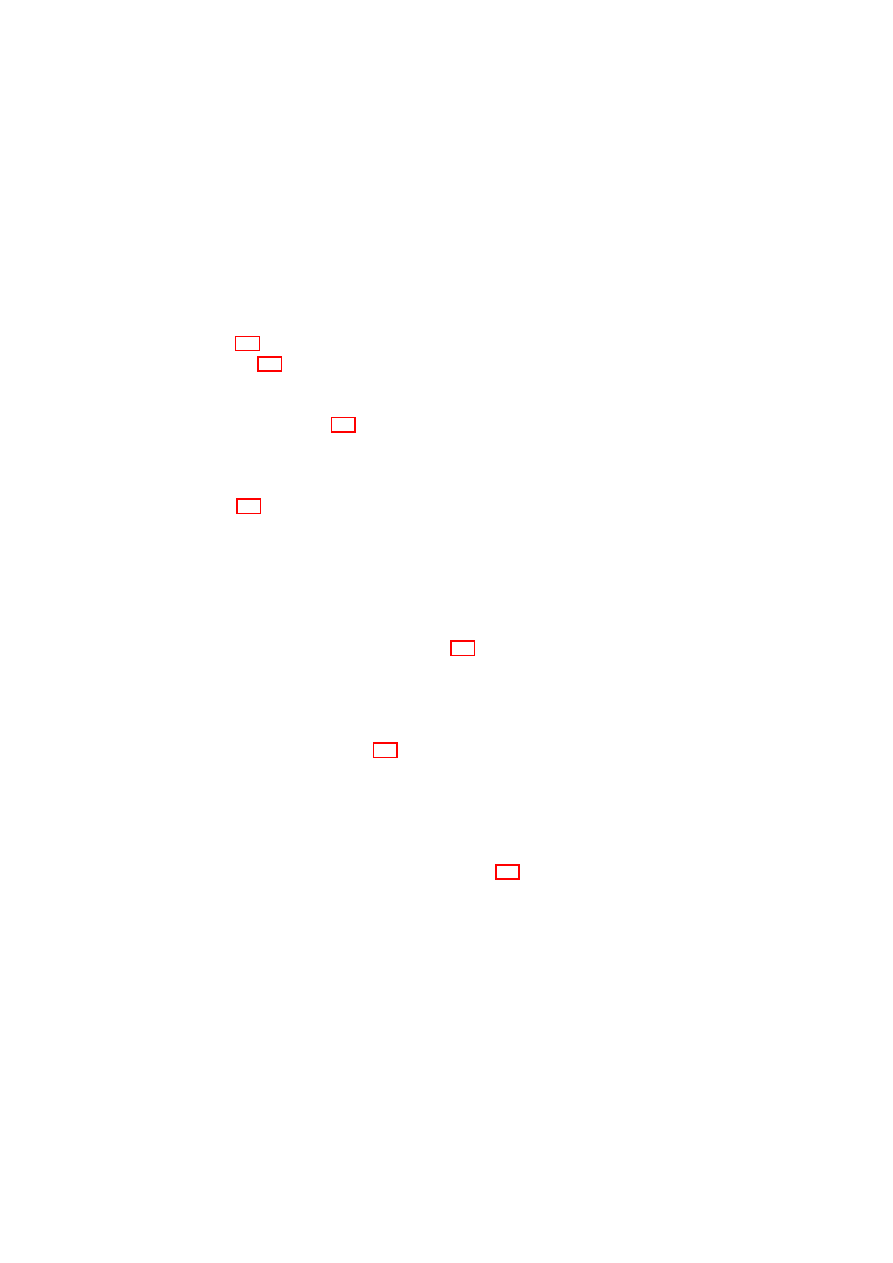

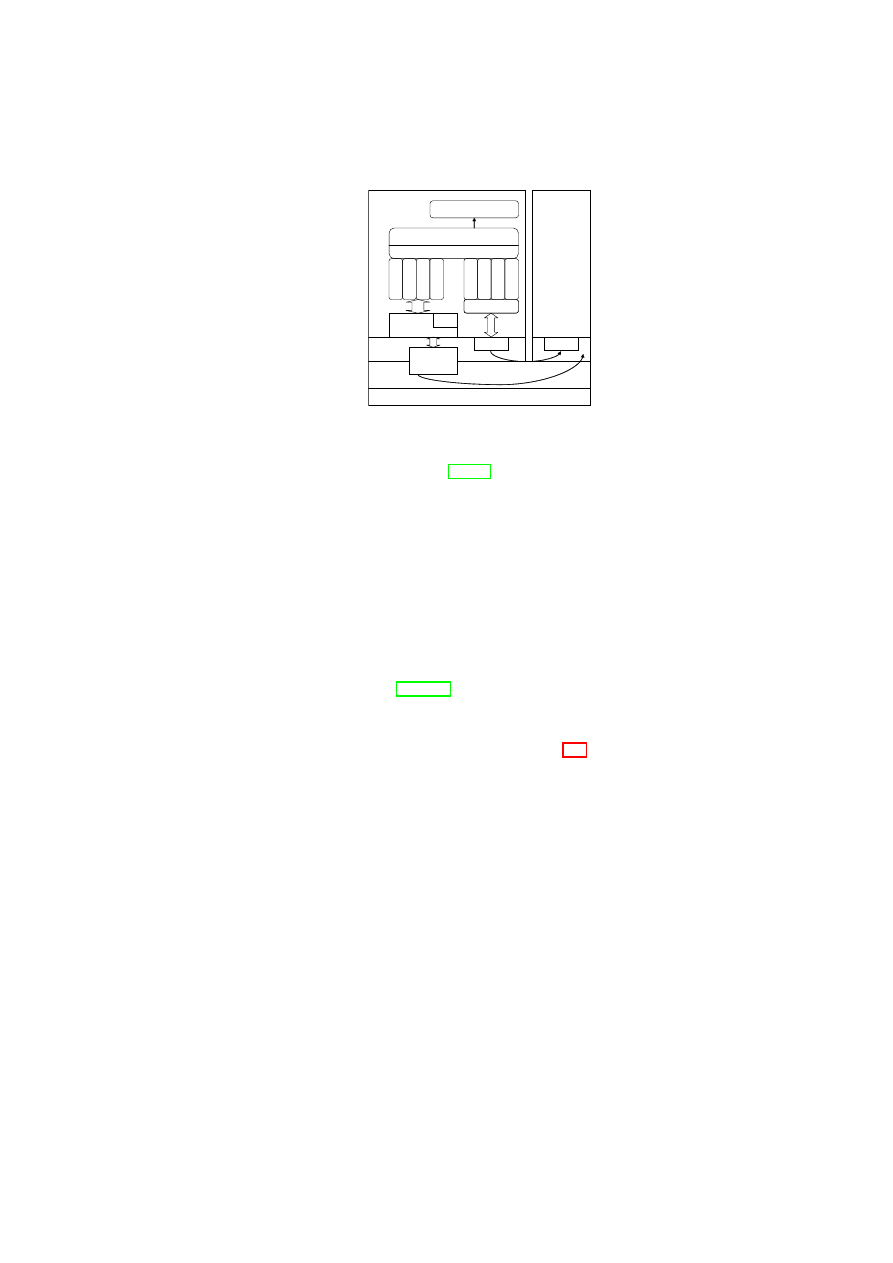

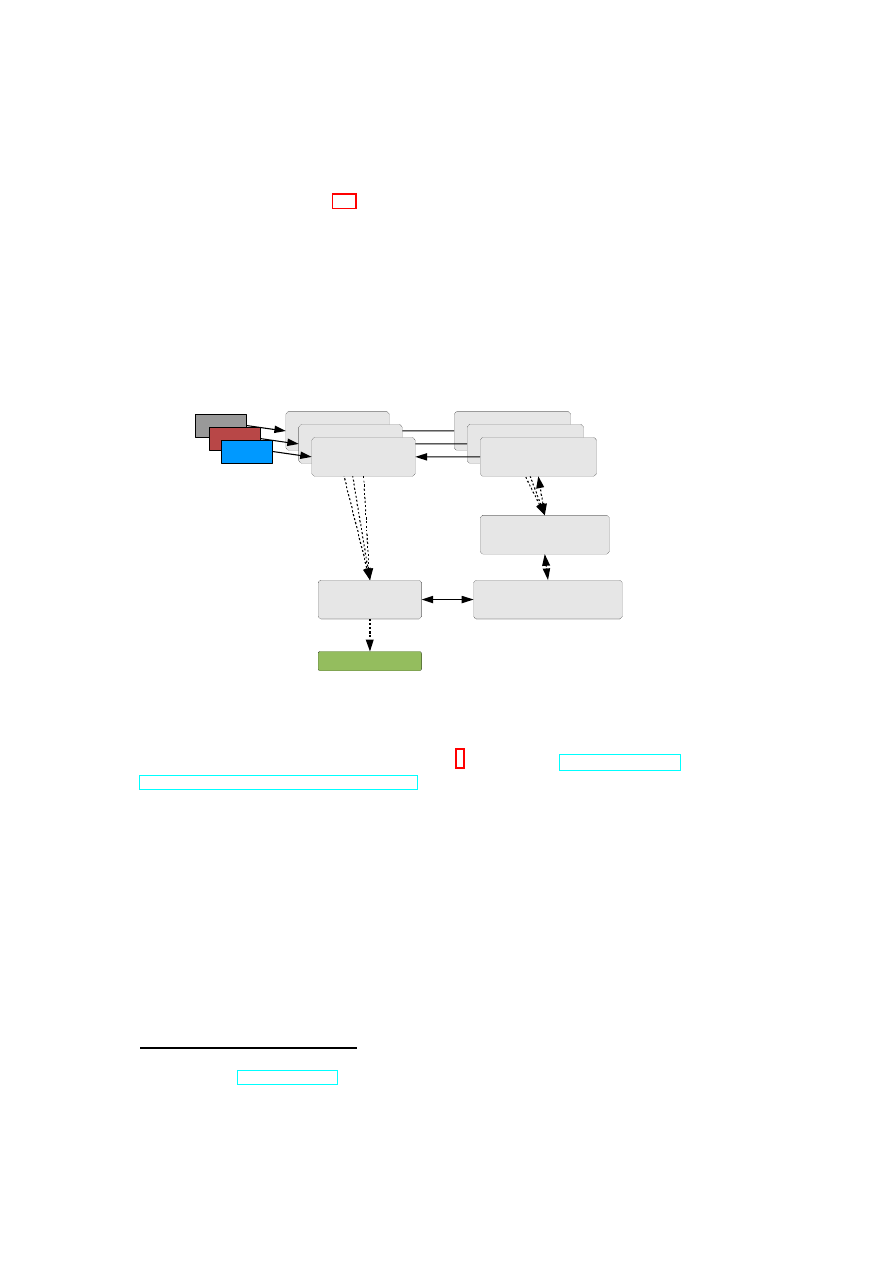

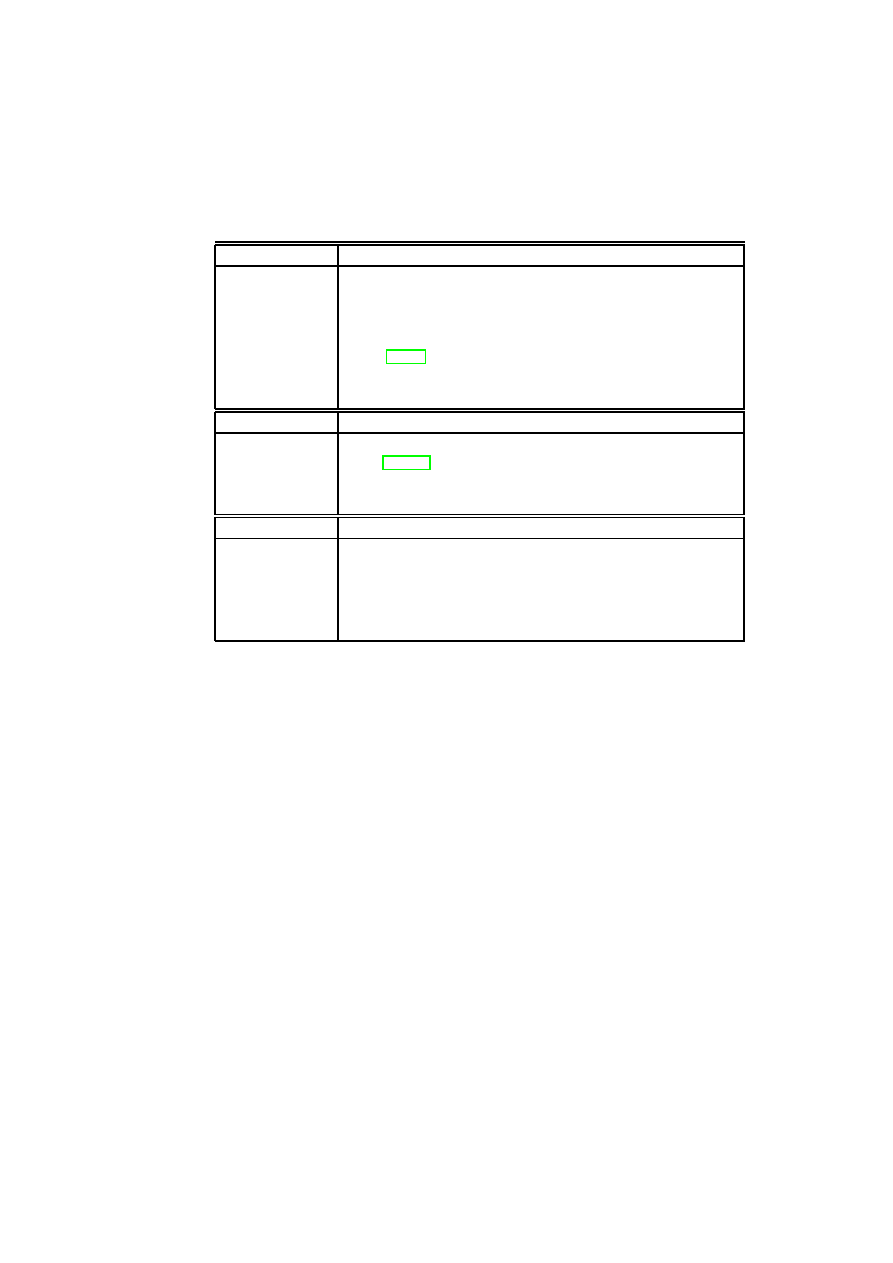

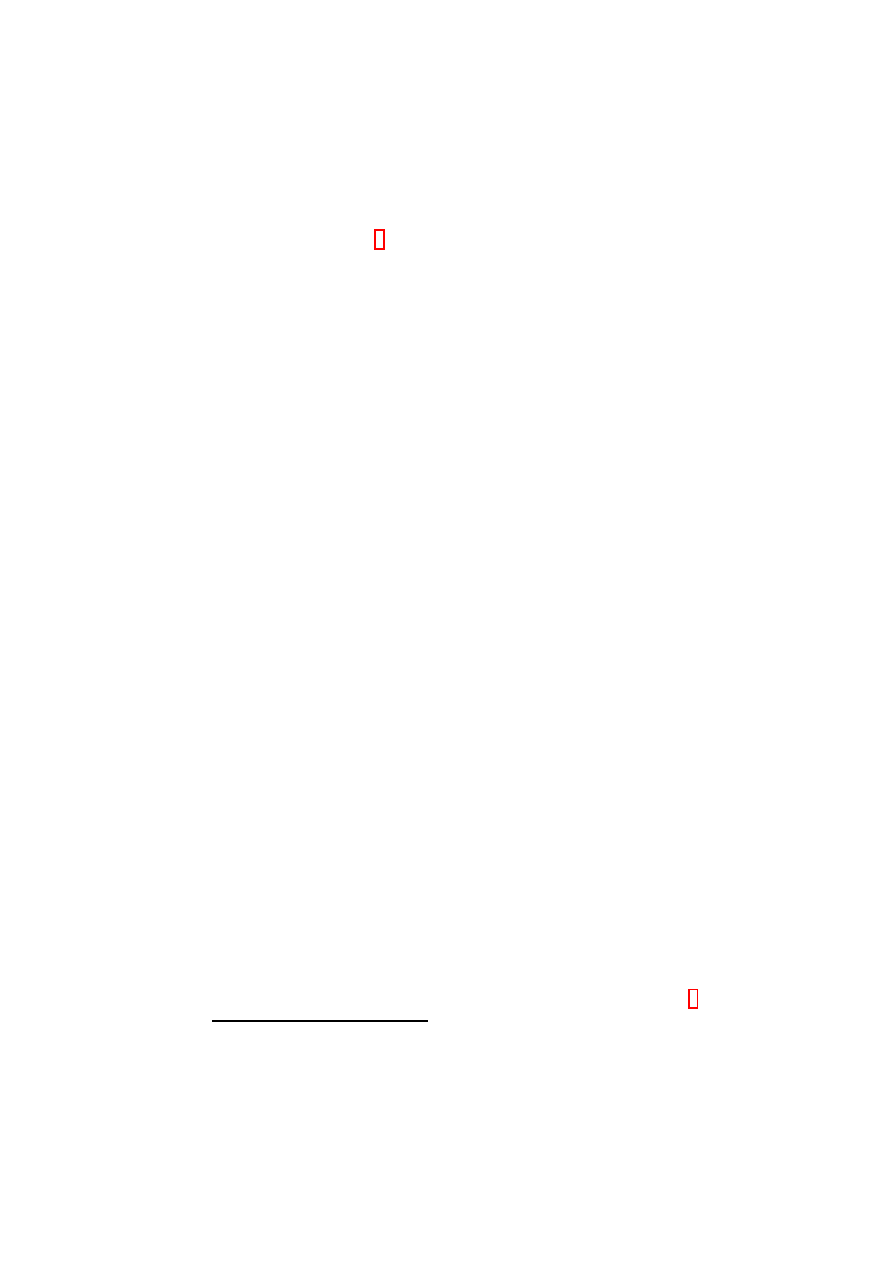

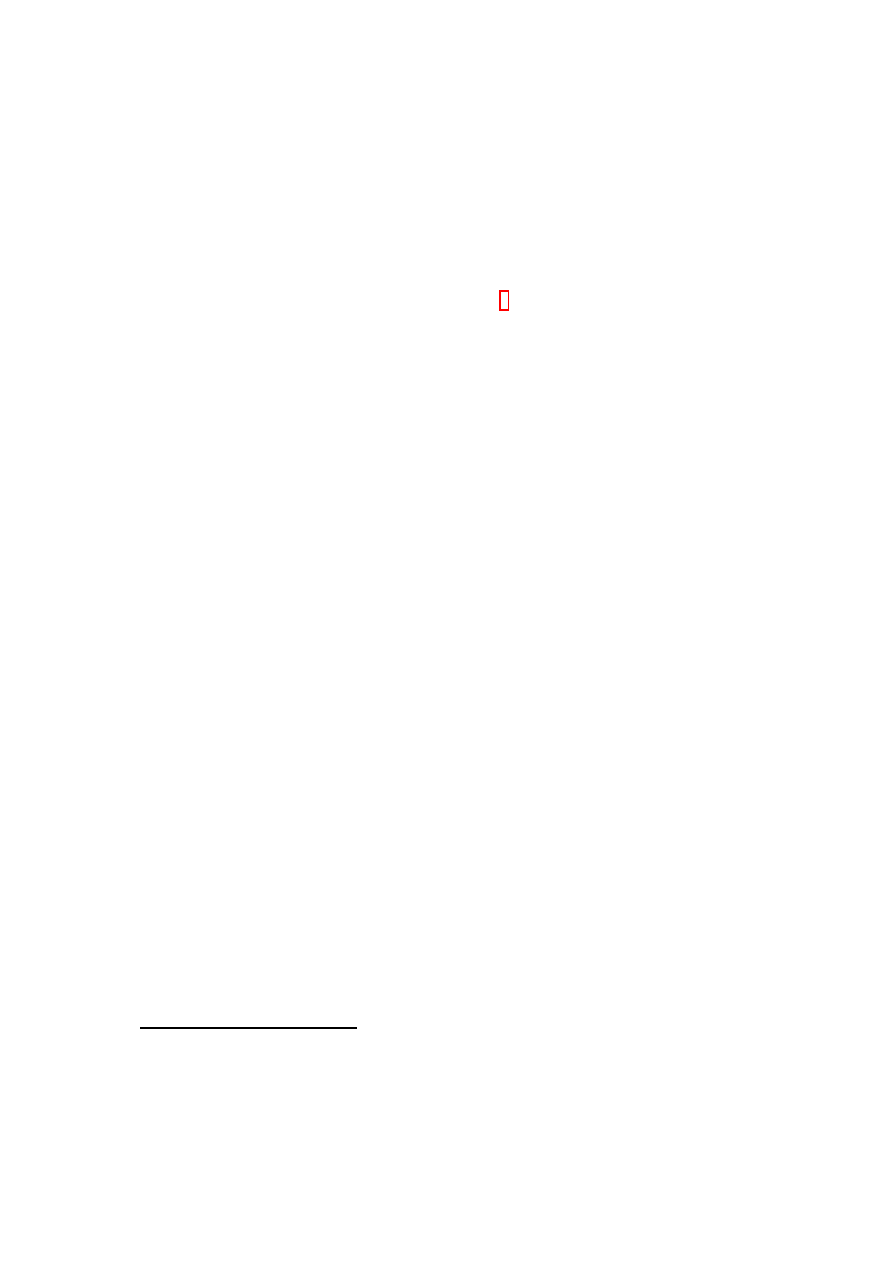

Compartment Manager

TVD Proxy

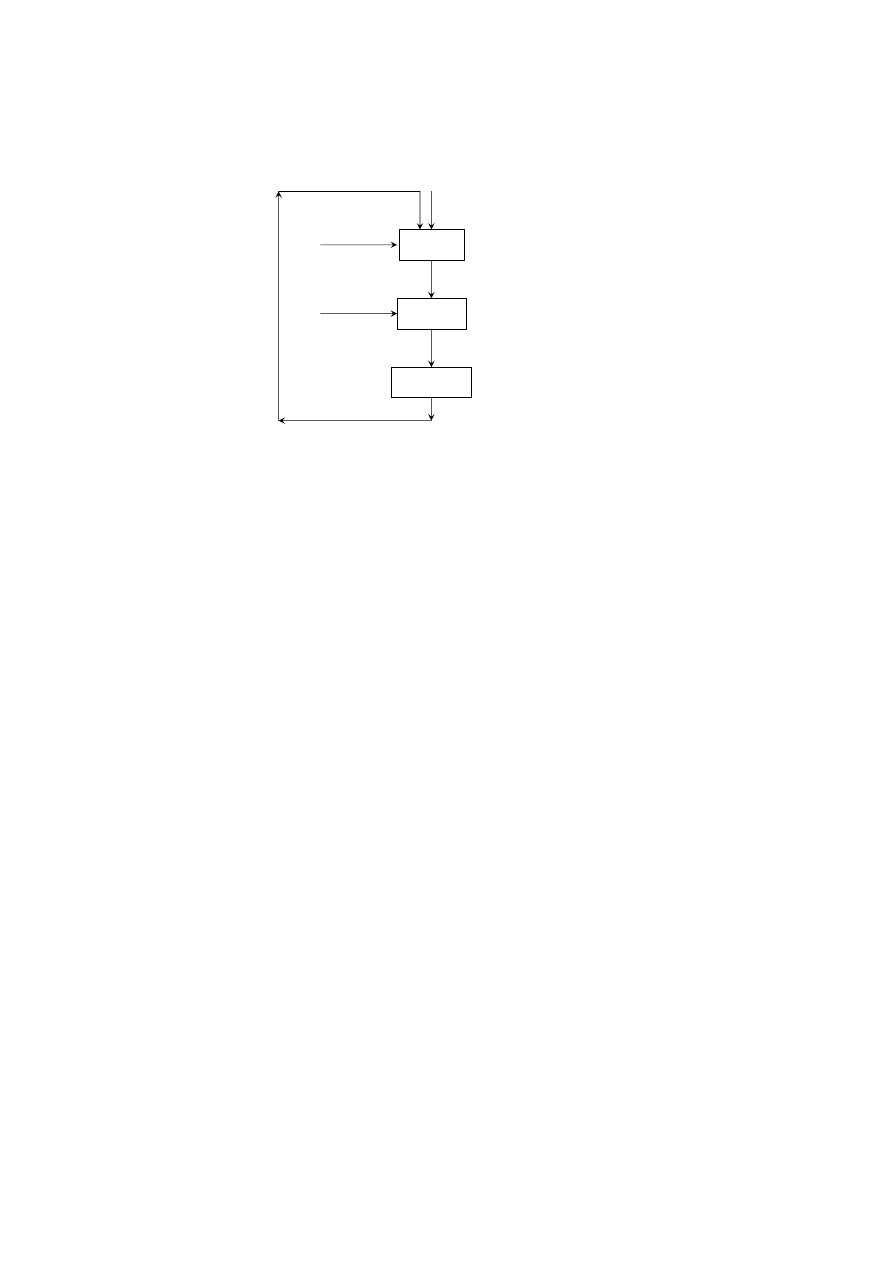

TVD Master

Network Manager

(sub-proxy)

Storage Manager

(sub-proxy)

Virtual Device Manager

Integrity Manager

TVD component

Security service

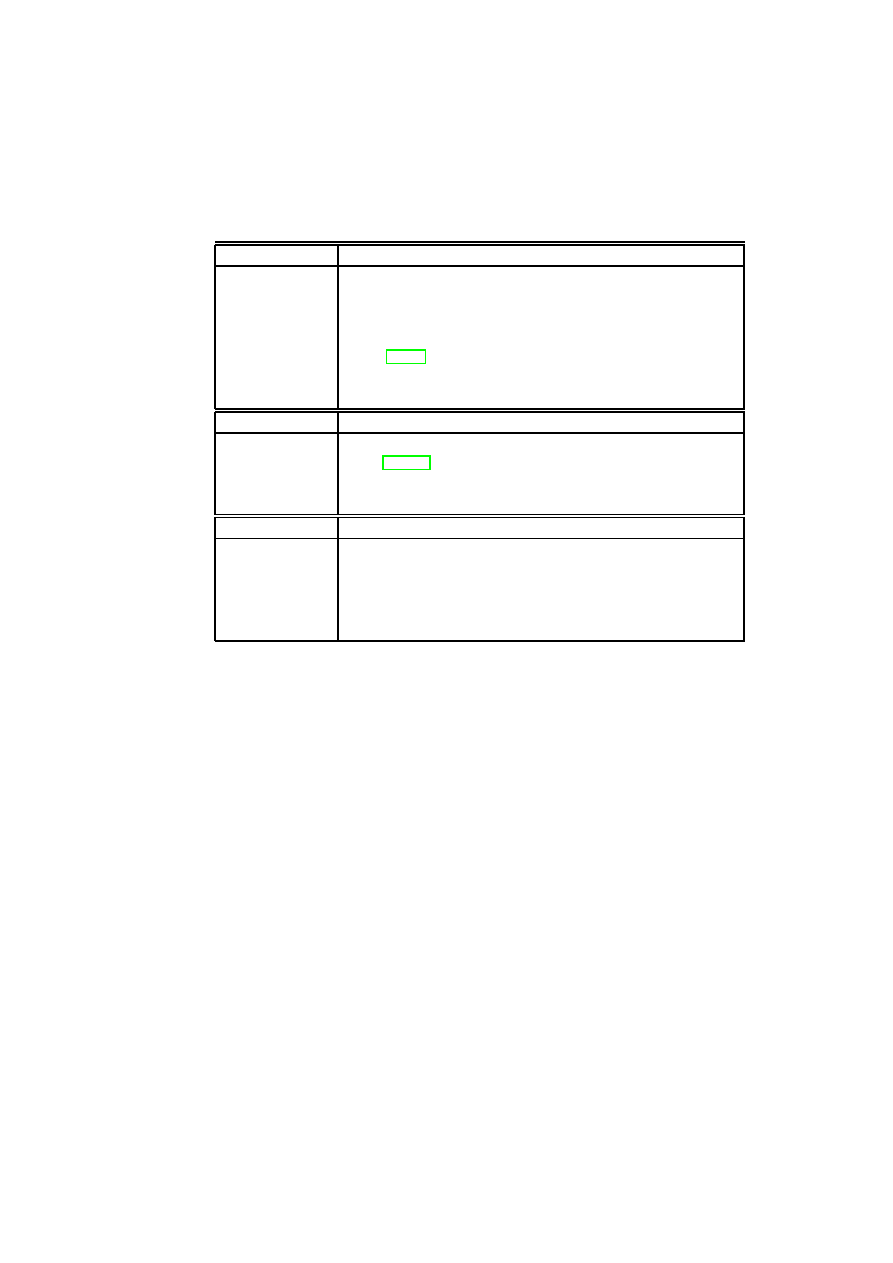

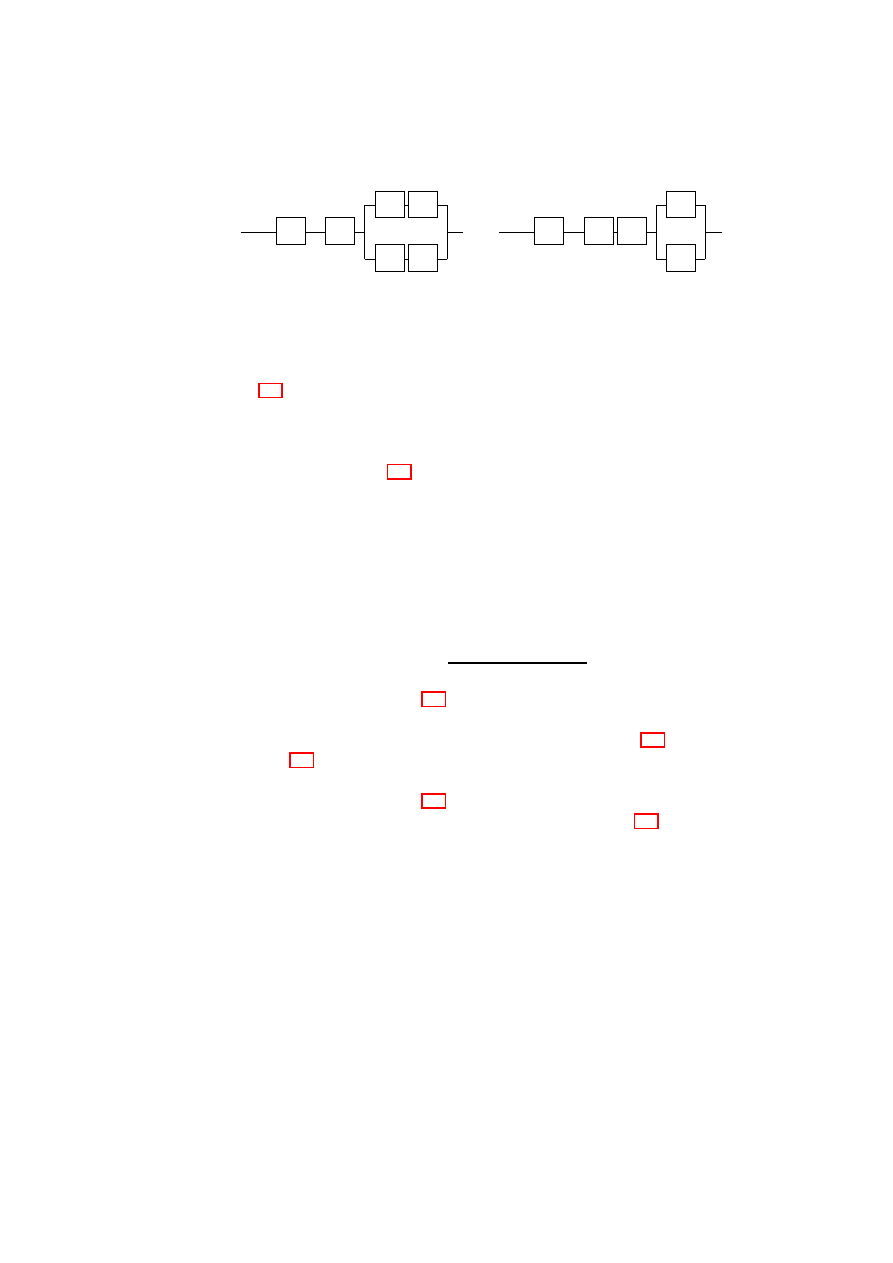

Figure 3.2: TVD Components and Security Services.

suitability and capability of the physical host (which we refer to as pre-admission

control). It then uses a generic TVD factory service to spawn a TVD proxy, which

acts as the local delegate of the TVD master dedicated to that particular host. The

TVD proxy is responsible for (1) translation of high-level TVD policies into low-

level platform-specific configurations, (2) configuration of the host and security

services with respect to the translated policies, and (3) interaction with the security

services in TVD admission and flow control.

Security services implement the security enforcement layer of our TVD in-

frastructure. They run in a trusted execution environment on each physical host

(e.g., Domain-0 in Xen) and (1) manage the security configuration of the hyper-

visor, (2) provide secure virtualization of resources (e.g., virtual devices) to the

VMs, and (3) provide support to TVD proxies in enforcing flow and access con-

trol policies within and across TVD boundaries. Figure 3.2 shows a high-level list

of security services and their interaction with the TVD components. Most impor-

tantly, the compartment manager service manages the life-cycle of VMs in both

para-virtualized and fully virtualized modes. This service works in collaboration

with the TVD proxy to admit VMs into TVDs. The integrity manager service

implements Trusted Computing extensions and assists the TVD proxy in host pre-

admission and VM admission control. The virtual network manager and virtual

storage manager services are invoked by the TVD proxy. They implement re-

source virtualization technologies and enforce parts of the high-level TVD policies

that are relevant to their operation. Lastly, the virtual device manager service han-

dles the secure resource allocation and setup of virtual devices assigned to each

VM.

Our TVD infrastructure is geared towards automated deployment and enforce-

ment of security policies specified by the TVD master. Automated refinement and

translation of high-level policies into low-level configurations are of particular in-

terest. For example, for information flow between two hosts in a trusted data-center

environment, other mechanisms need to be in place than for a flow between two

hosts at opposite ends of an untrusted WAN link. In the latter case, the hosts should

be configured to allow communication between them only through a VPN tunnel.

Another important consideration is policy conflict detection and resolution

[Wes01, LS99]. In fact, conflicting high-level policies (e.g., a connection being

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

22

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

allowed in the inter-TVD policy but disallowed in the intra-TVD policy) can po-

tentially result in an incorrect configuration of the underlying infrastructure. We

cannot solely rely on the TVD owner to specify conflict-free policies. It is im-

portant to detect policy conflicts and provide feedback to the owner in case one is

detected. In the present prototype, policy refinement is performed manually. The

result is a set of configuration files that we use for configuring the security services

at the policy enforcement layer (e.g., the virtual networking infrastructure). In fu-

ture work, we will investigate the automation of this step using, for example, the

IETF policy model [RYG00] and various graph-based mechanisms from the litera-

ture. We will also investigate different techniques for resolving conflicting policies

[EASH05, CBL07, N. 01, FWH

+

01].

3.2.2

Virtual Networking Infrastructure

Virtual networking (VNET) technologies enable the seamless interconnection of

VMs that reside on different physical hosts as if they were running on the same

machine. In our TVD framework, we employ multiple technologies, including

virtual switches, Ethernet encapsulation, VLAN tagging, and VPNs, to virtualize

the underlying network and securely group VMs that belong to the same TVD. A

single private virtual network is dedicated to each TVD, and network separation

is ensured by connecting the VMs at the Ethernet level. Logically speaking, we

provide a separate “virtual infrastructure” for each TVD in which we control and

limit the sharing of network resources (such as routers, switches) between TVDs.

This also provides the TVD owner with the freedom to deploy a wide range of

networking solutions on top of the TVD network infrastructure. Network address

allocations, transport protocols, and other services are then fully customizable by

the TVD owner and work transparently as if the VMs were in an isolated physical

network. To maintain secrecy and confidentiality of network data (where neces-

sary), network communication is established over encrypted VPN tunnels. This

enables the transparent use of untrusted networks between physical hosts that con-

tain VMs within the same TVD to provide a seamless view of the TVD network.

In this section, we introduce the technologies we use to implement a security-

enhanced VNET infrastructure for TVD owners. The concept of virtual switching

is central to our architecture, which is then protected by existing VPN technologies

that provide data confidentiality and integrity where needed. The VNET infras-

tructure acts as the local enforcer of VNET policies. As described in Section 3.1.3,

these policies are based on the high-level TVD policies and translated into net-

work configurations by the TVD proxy. The proxy then deploys the whole VNET

infrastructure with respect to the translated configuration.

Virtual Switching

The virtual switch (vSwitch) is the central component of the virtual networking

infrastructure and operates similarly to a physical switch. It is responsible for

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

23

VM

VM

Eth

erIP

Host C

Host D

Host A

VPN

WAN

vSwitch

VM

VM

VM

VM

VM

VM VM

VM

vSwitch

vSwitch

VM

VM

Tag

ge

r

vSwitch

Eth

erIP

VLAN Switch

Host B

VM

VM

Tag

ge

r

vSwitch

VPN

VPN

Tag

ge

r

Eth

erIP

Eth

erIP

VPN

Eth

erIP

VPN

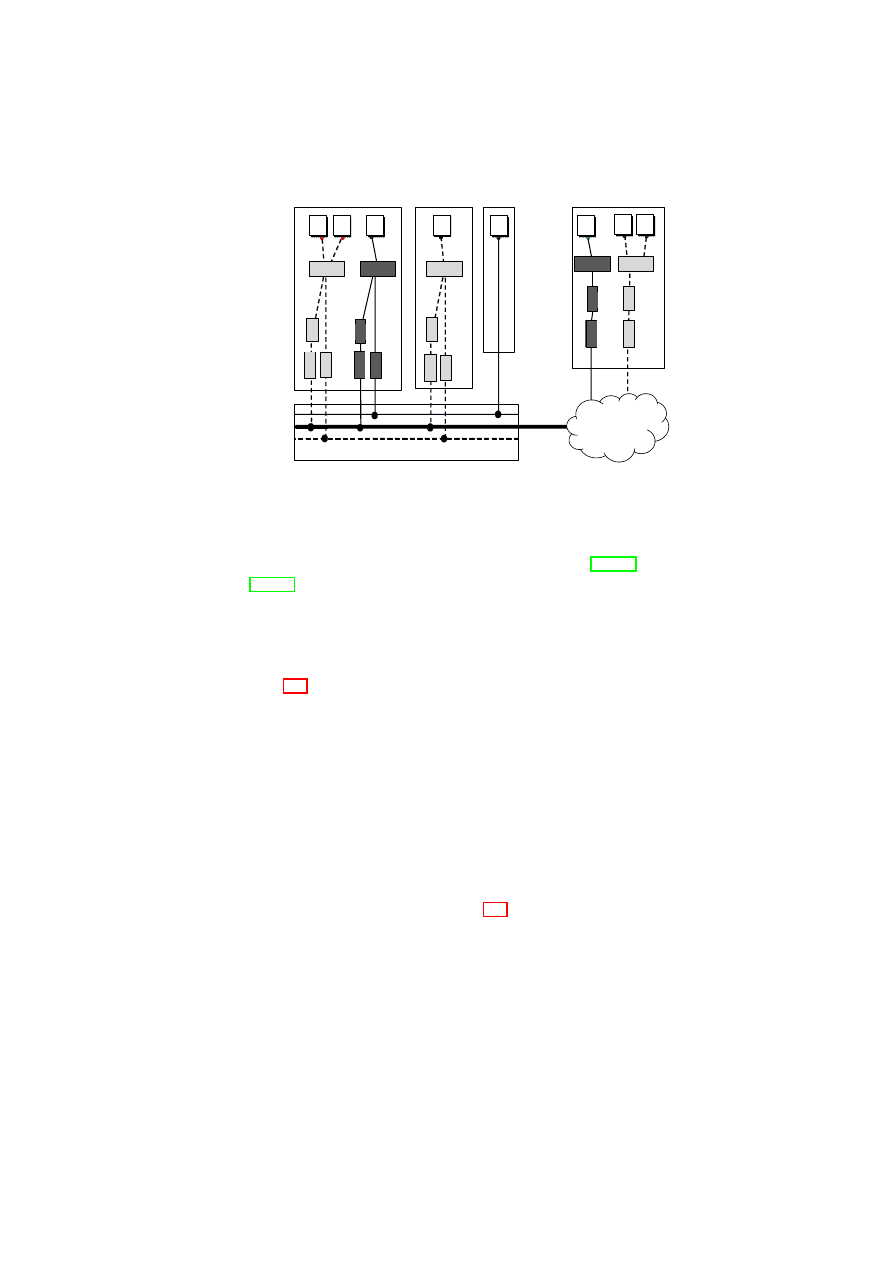

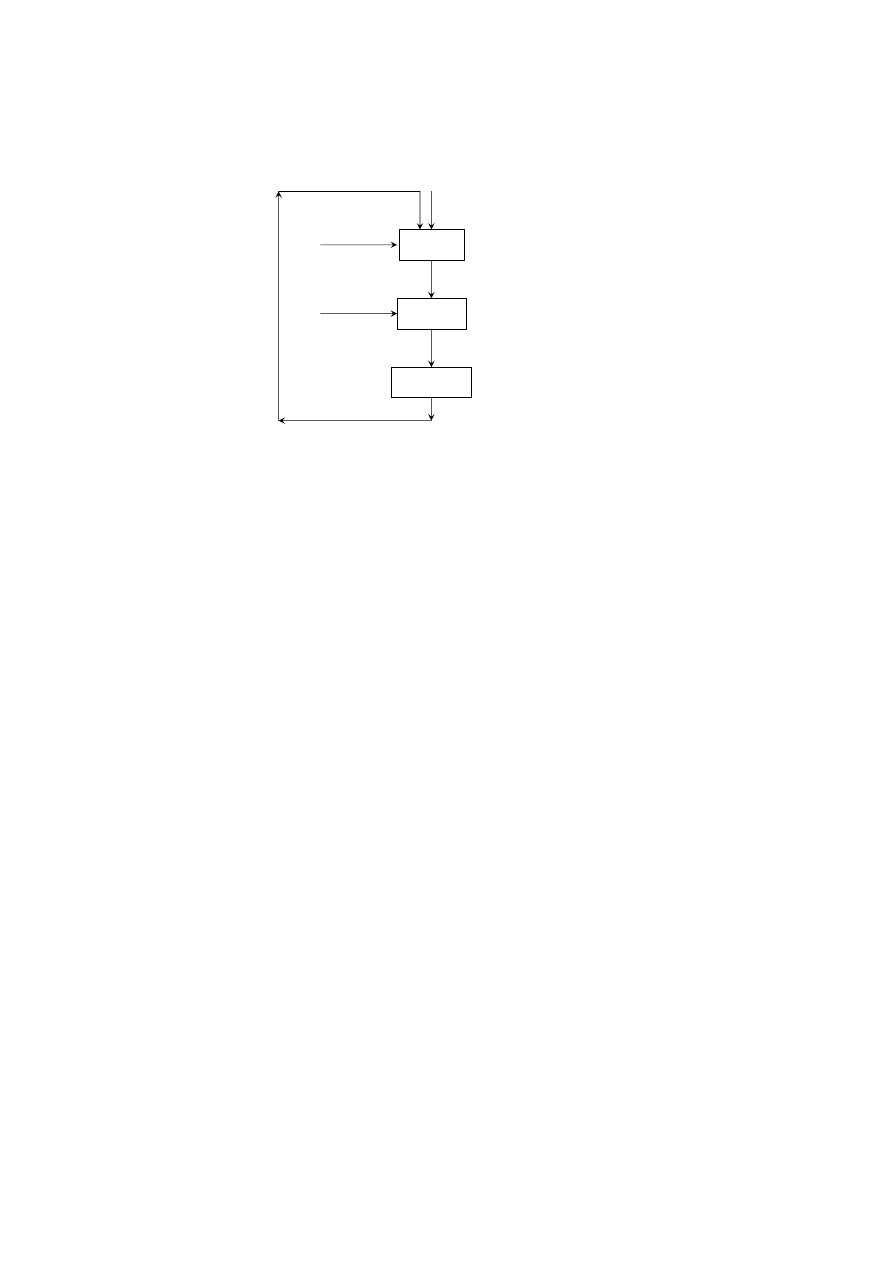

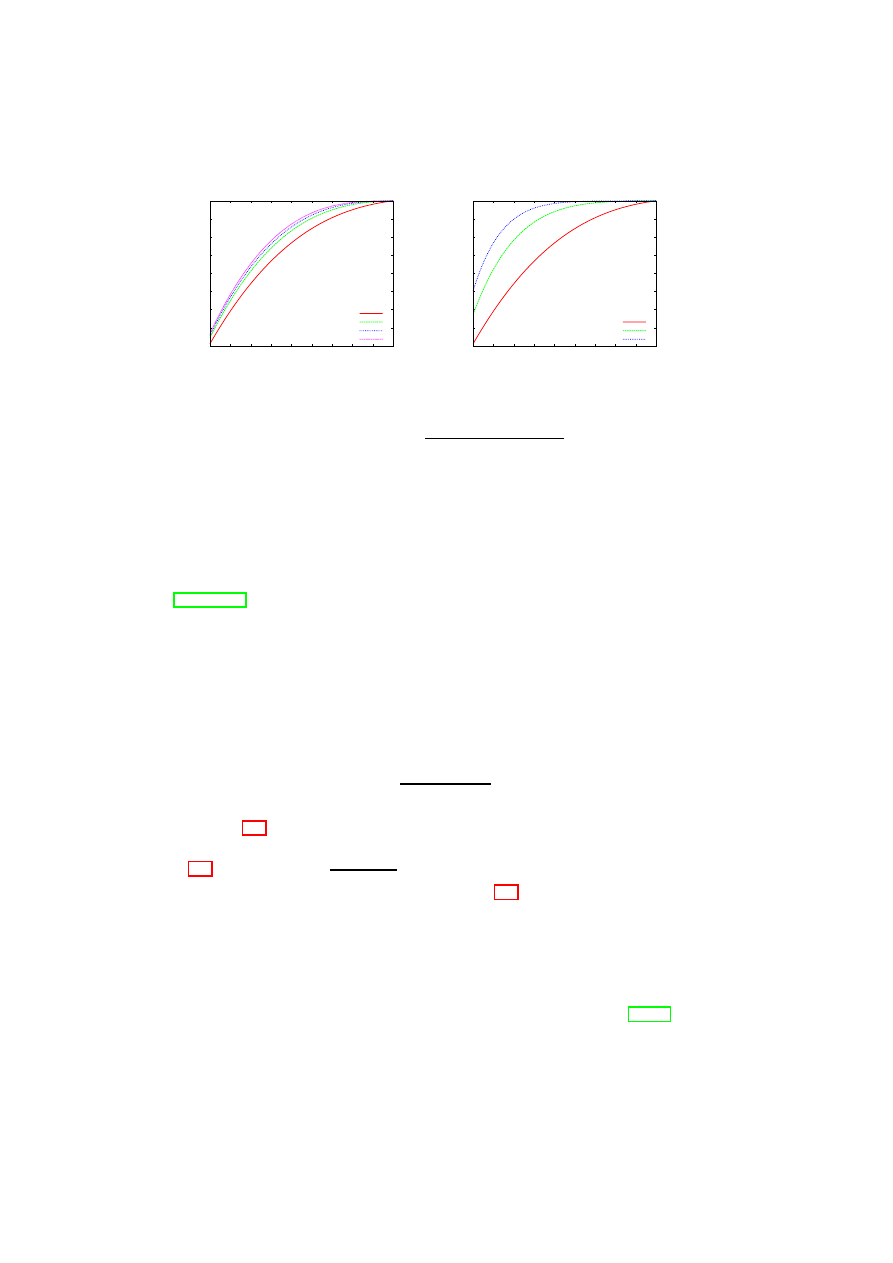

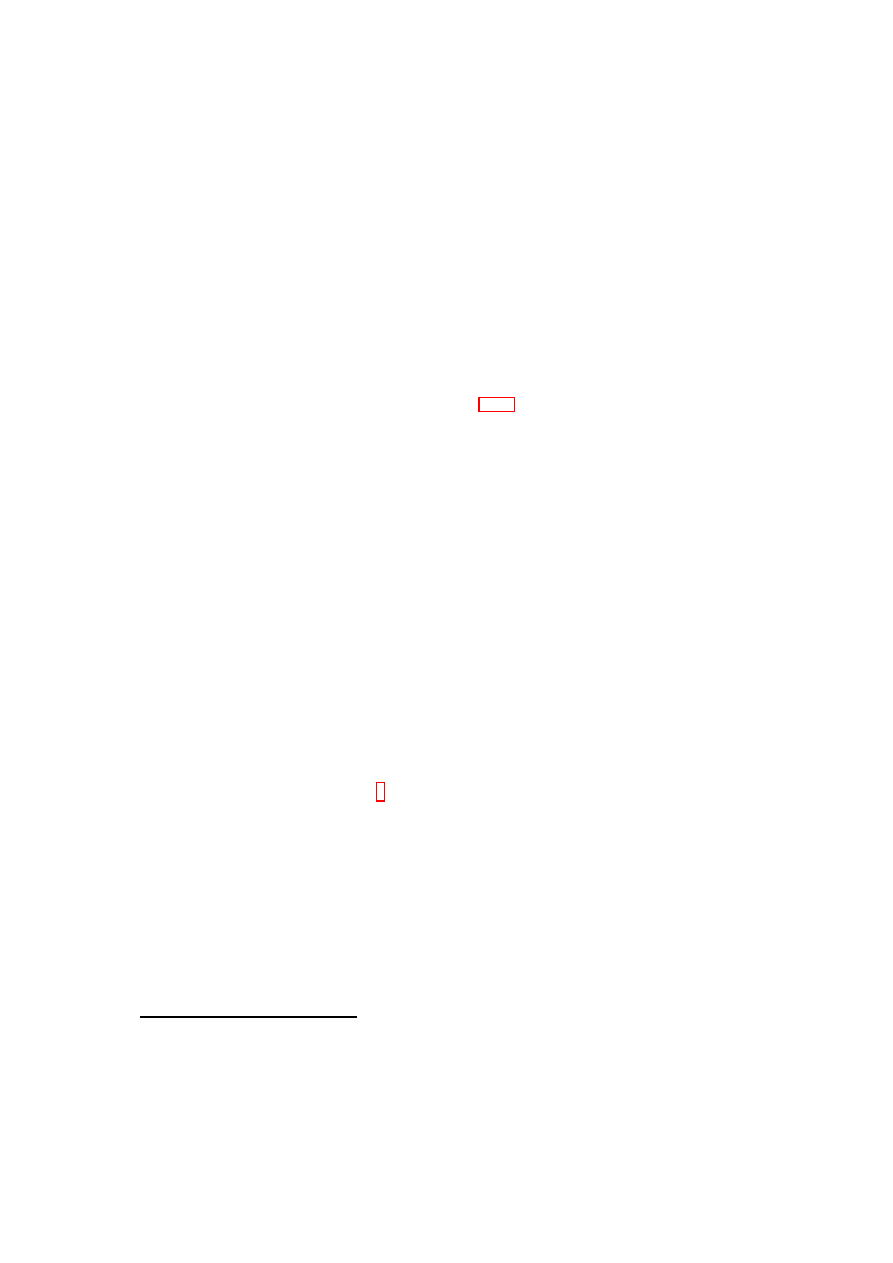

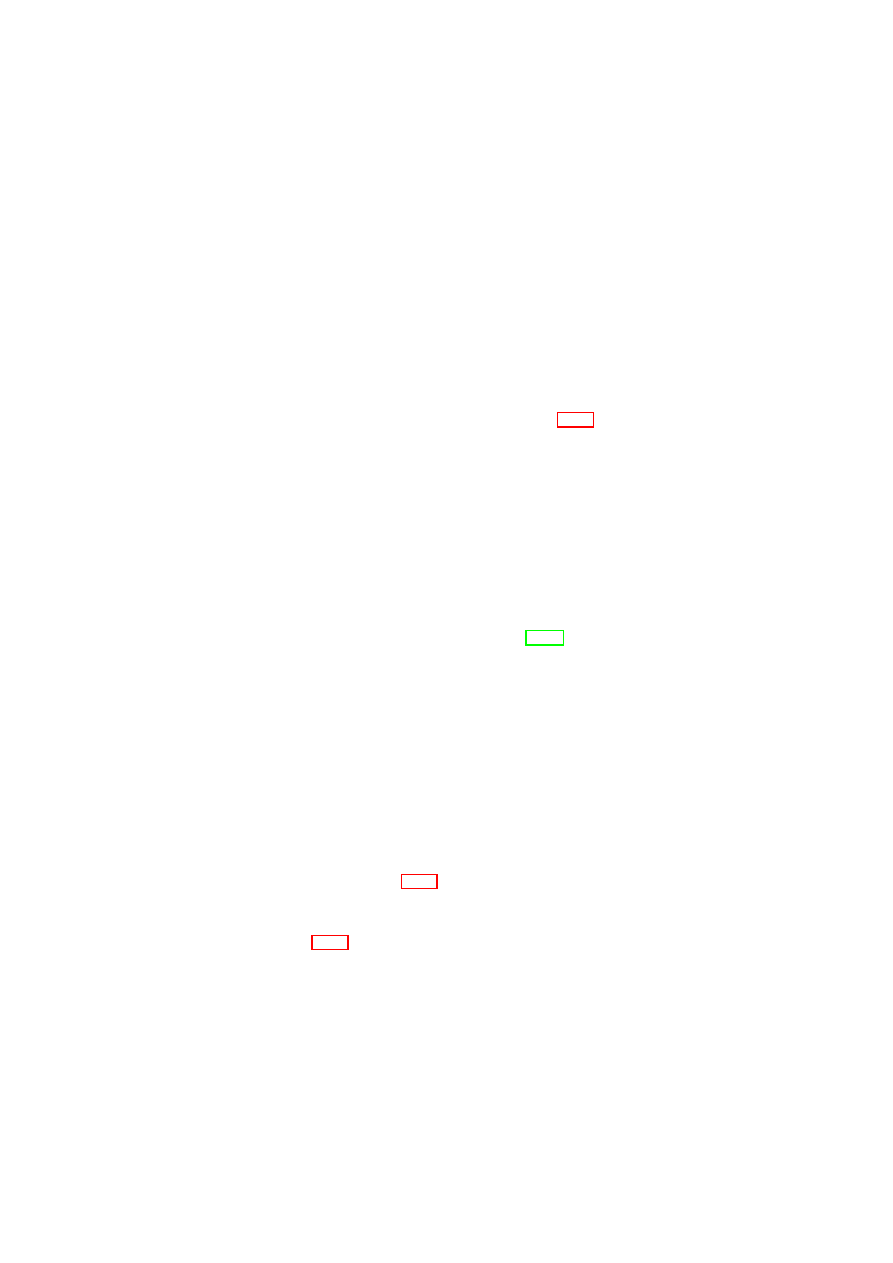

Figure 3.3: General vSwitch Architecture.

network virtualization and isolation, and enables a virtual network to span multi-

ple physical hosts. To do so, the vSwitch uses EtherIP [IET02] and VLAN tag-

ging [IEE98] to insert VLAN membership information into every network packet.

The vSwitch also implements the necessary address-mapping techniques to direct

packets only to those machines that host member VMs. Virtual switches provide

the primitives for implementing higher-level security policies for networking and

are configured by the higher-level TVD management layer.

Figure 3.3 illustrates an example architecture in which physical machines host

multiple VMs with different TVD memberships (the light and dark shades indicate

different TVDs). Hosts A, B, and D are virtualized machines, whereas Host C is

non-virtualized. Furthermore, Hosts A, B, and C reside on the same LAN, and thus

can communicate directly using the trusted physical infrastructure without further

protection (e.g., traffic encryption). For example, the light VMs hosted on Hosts

A and B are inter-connected using the local VLAN-enabled physical switch. In

this case, the physical switch separates the TVD traffic from other traffic passing

through the switch using VLAN tags. Similarly, the dark VMs hosted on Host

A and the non-virtualized Host C are seamlessly inter-connected using the local

switch. In contrast, connections that require IP connectivity are routed over the

WAN link. The WAN cloud in Figure 3.3 represents the physical network in-

frastructure able to deal with TVD-enabled virtual networks; it can include LANs

with devices capable of VLAN tagging and gateways to connect the LANs to each

other over (possibly insecure) WAN links. For connections that traverse untrusted

medium, we employ EtherIP encapsulation to denote TVD membership and addi-

tional security measures (such as encryption) to ensure compliance with the confi-

dentiality and integrity requirements.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

24

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

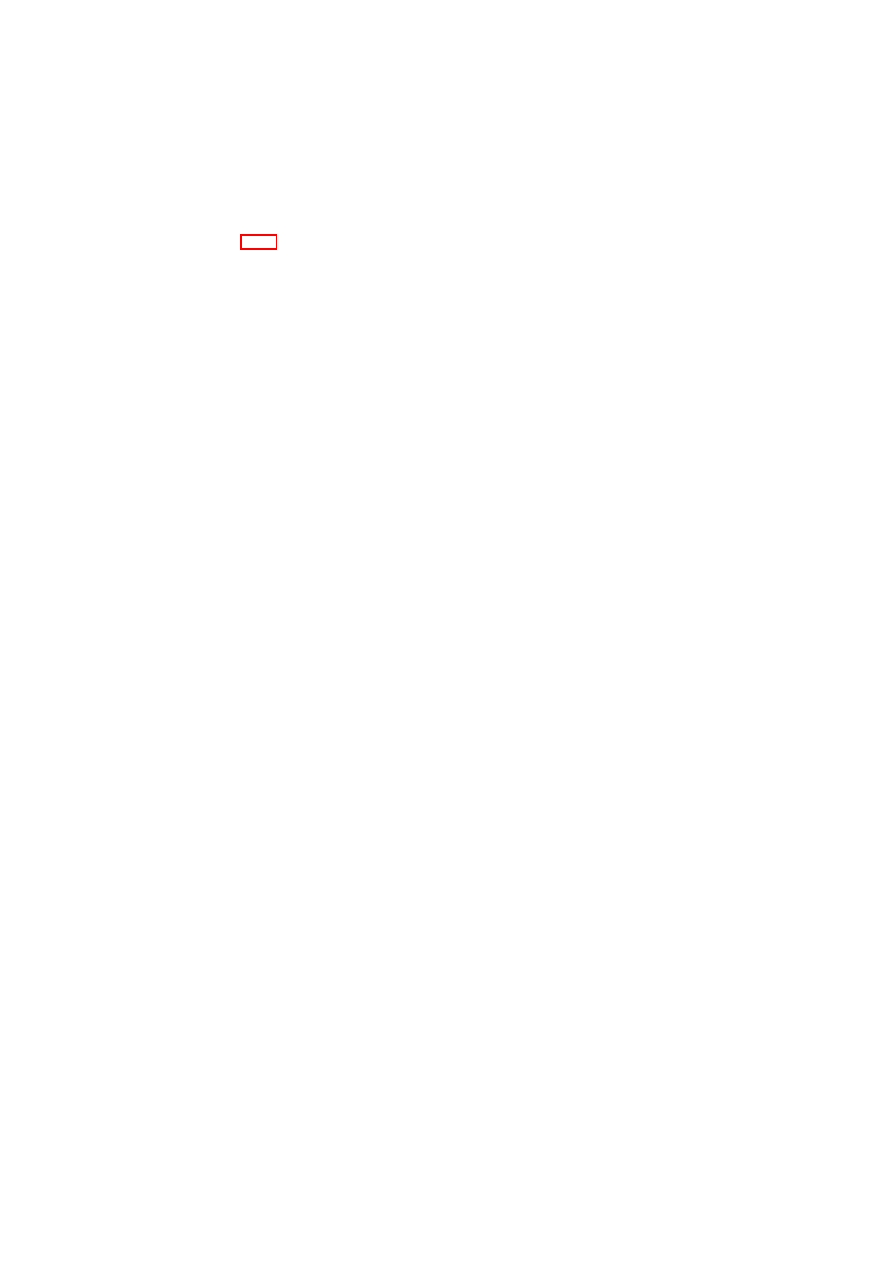

Consolidation

Access Control & Encryption

TVD A

TVD B

TVD C

Heterogeneous

Physical Storage

Per-TVD

Raw Storage

Per-TVD

Virtual Storage

TVD A

TVD B

TVD C

Metadata

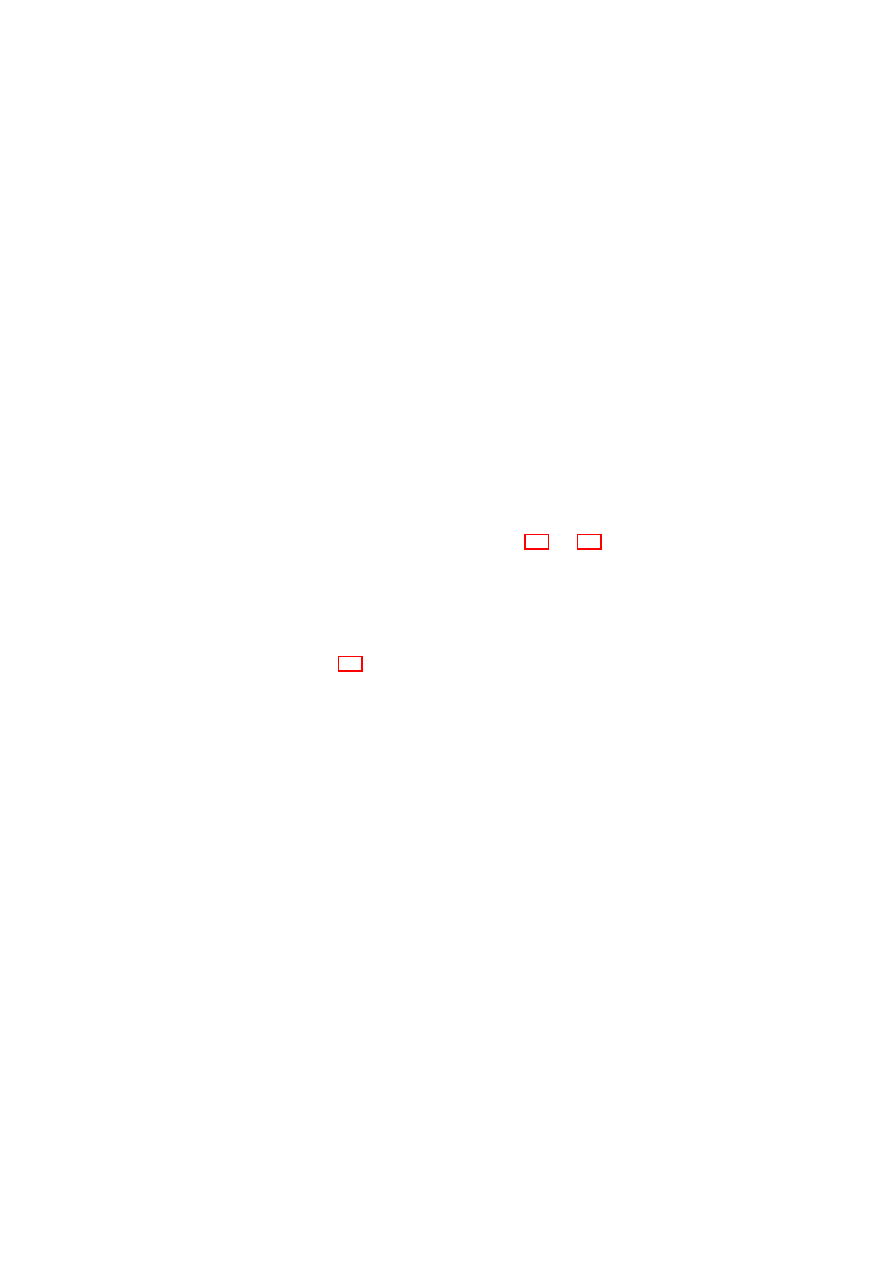

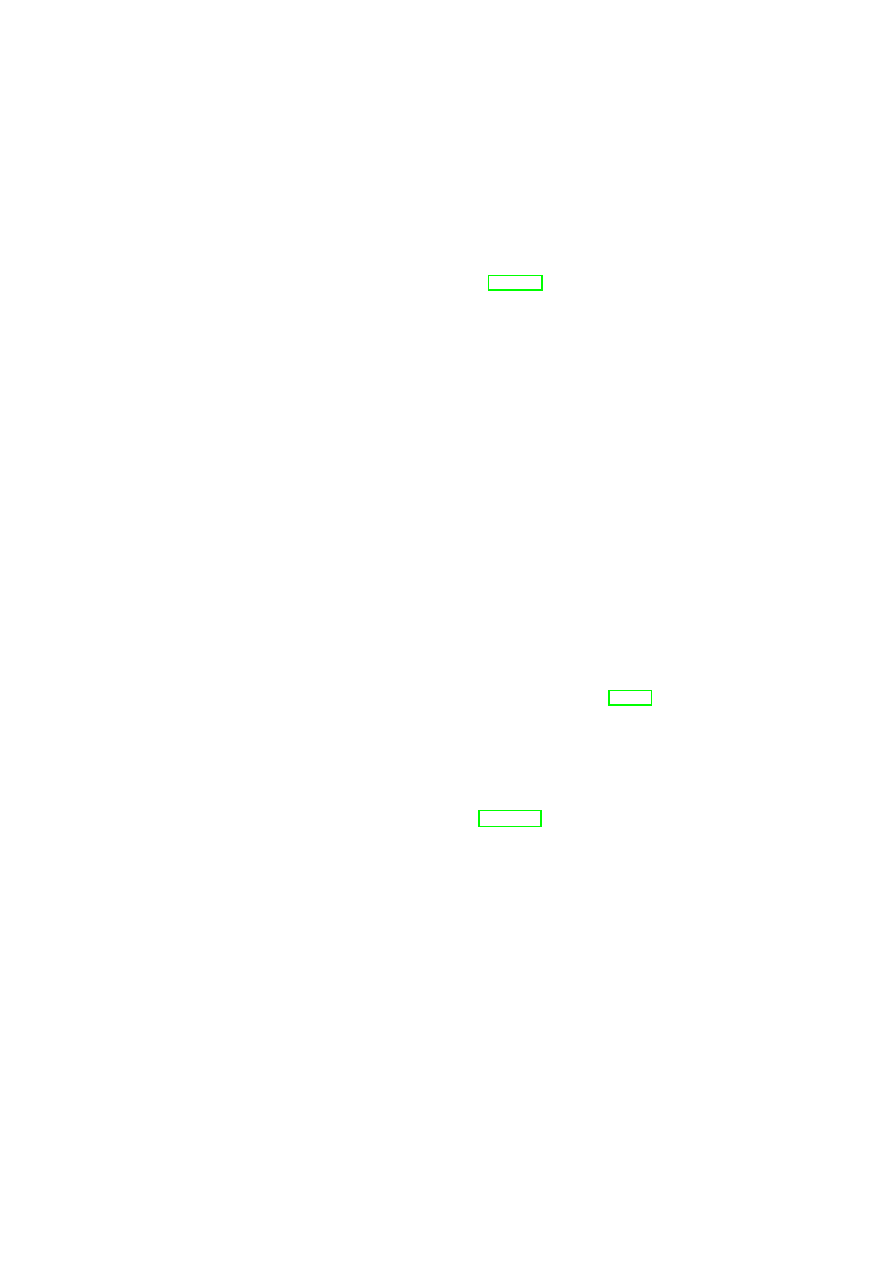

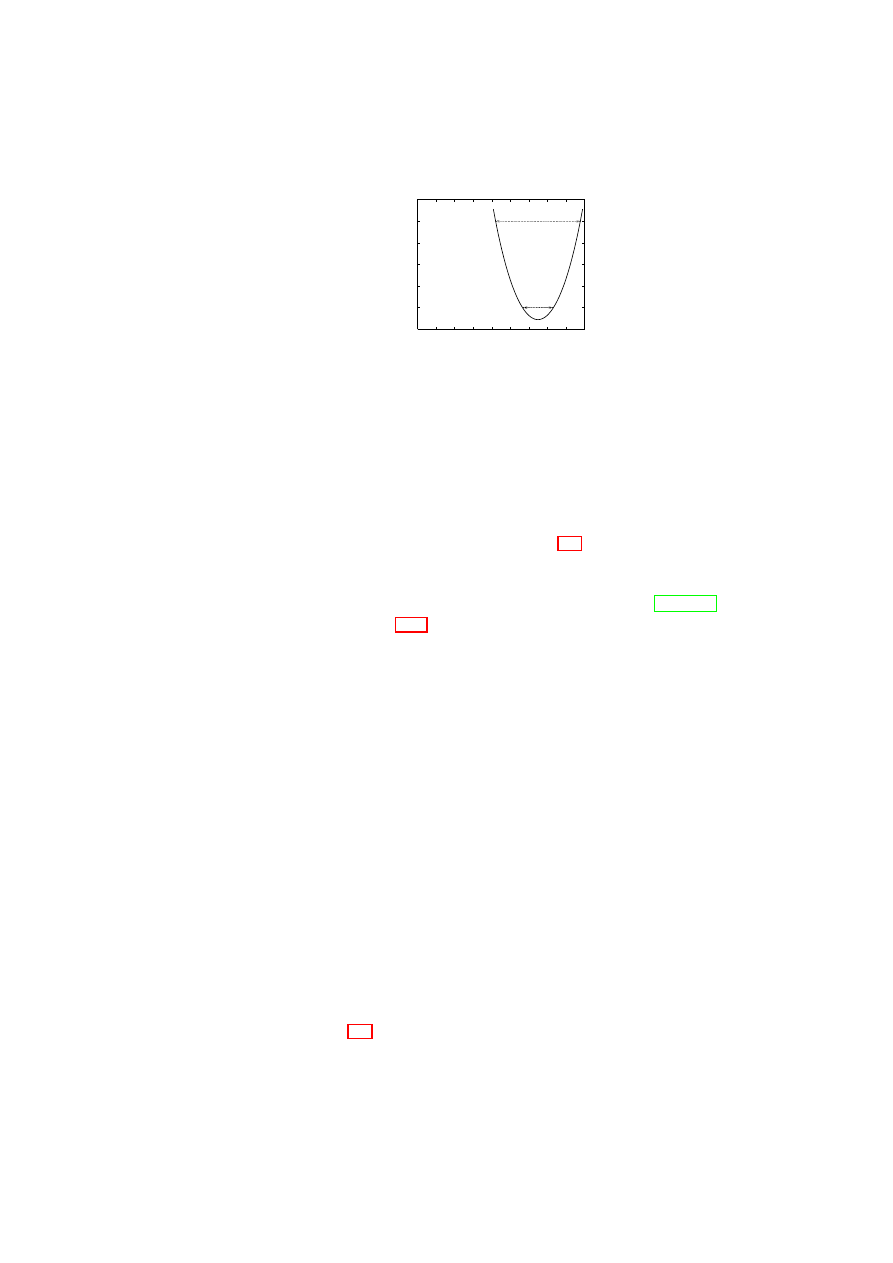

Figure 3.4: Security Enforcement for Virtualized Storage.

Virtual Private Networking

In Figure 3.3, VMs hosted on Host D are connected to the other machines over a

WAN link. A practical setting in which such a connection might exist would be

an outsourced remote resource connected to the local data center through the In-

ternet. As an example, lightly shaded VMs on Host D connect to the lone VM on

Host B over this untrusted link. In this setting, we use a combination of EtherIP

encapsulation and VPN technology to ensure the confidentiality and integrity of

the communication. To do so, we use point-to-point VPN tunnels with OpenVPN

that are configured via the TVD proxy from the TVD policies. This enables re-

configuration of the topology and the involved VPNs within a TVD from a single

administration point, the TVD Master.

TVD policies distributed from the TVD master to the TVD proxy also include

the secret key for the VPN along with other VPN-specific settings. On a physical

host, the VPN’s endpoint is represented as a local virtual network interface (vif)

that is plugged into the appropriate vSwitch controlled by the TVD proxy. The

vSwitch then decides whether to tunnel the communication between VMs, and if

so, uses the VPN module to establish the tunnel and access the VPN secret for

traffic encryption and decryption.

3.2.3

Virtual Storage Infrastructure

We focus on a simplified security management of virtualized storage. Broadly

speaking, storage virtualization abstracts away the physical storage resource(s). It

is desirable to allow a storage resource to be shared by multiple host computers,

and also to provide a single storage device abstraction to a computer irrespective

of the underlying physical storage, which may be a single hard disk, a set of hard

disks, a Storage Area Network (SAN), etc. To satisfy both requirements, stor-

age virtualization is typically done at two levels. The first level of virtualization

involves aggregating all the (potentially heterogeneous) physical storage devices

into one or more virtual storage pools. The aggregation allows more centralized

and convenient data management. The second level of virtualization concerns the

unified granularity (i.e., blocks or files) at which data in each pool is presented to

the higher-level entities (operating systems, applications, or VMs).

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

25

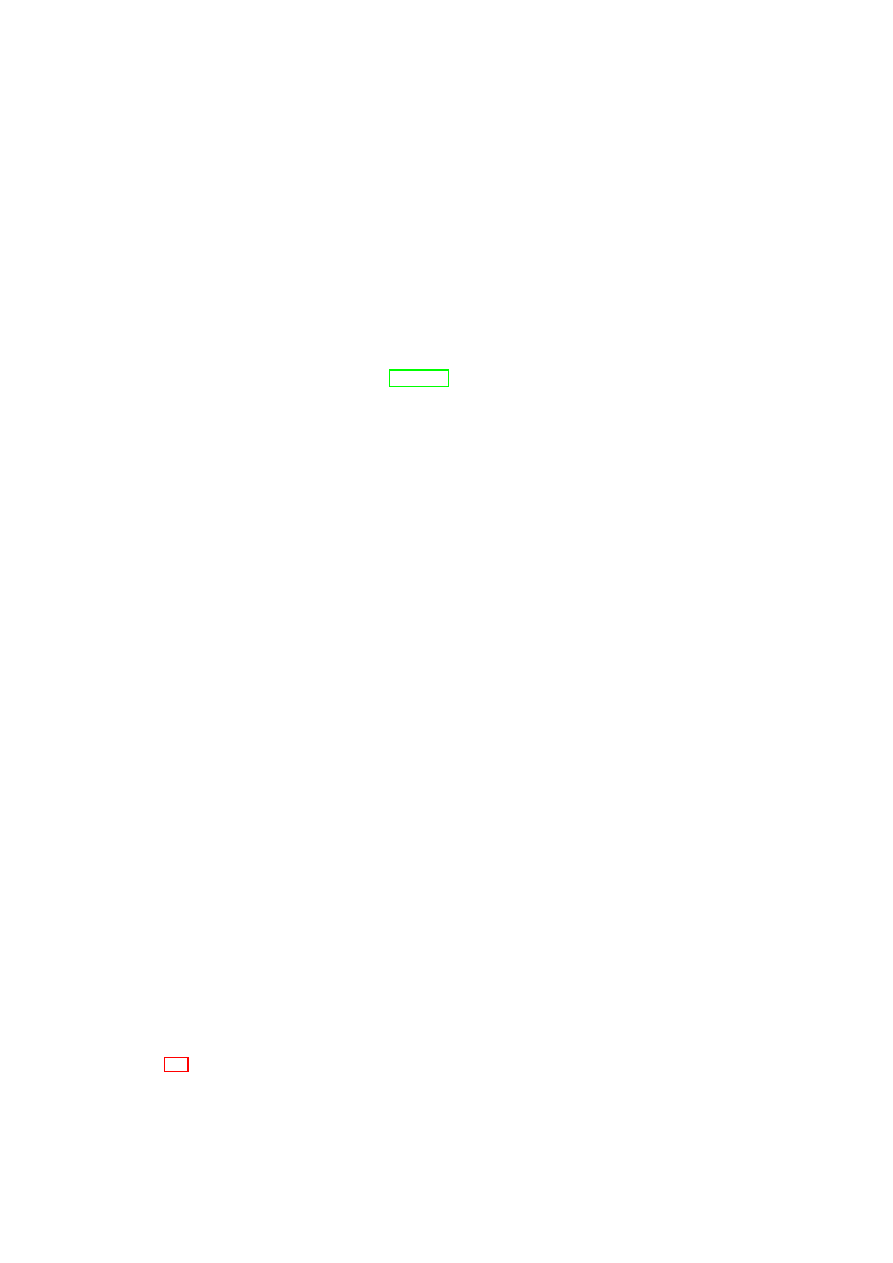

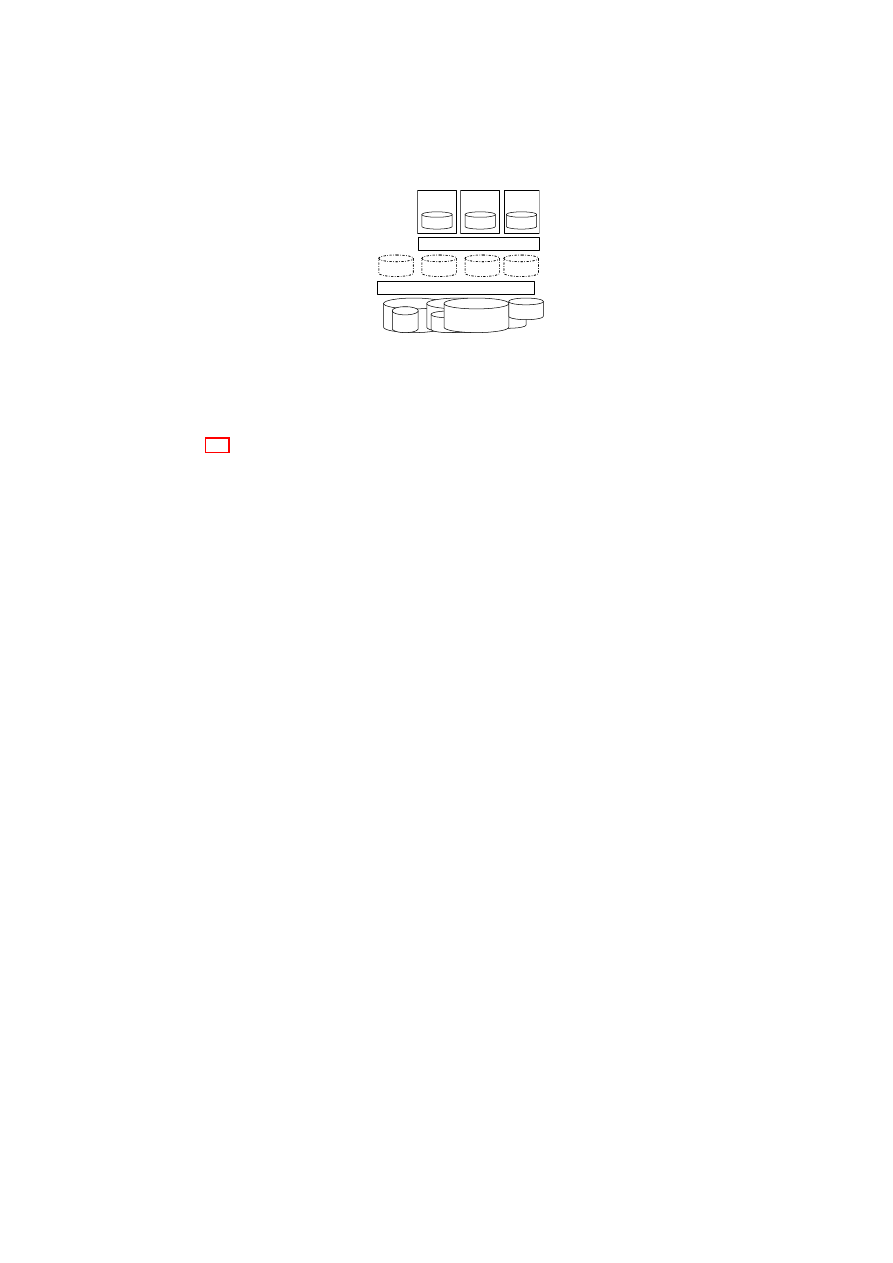

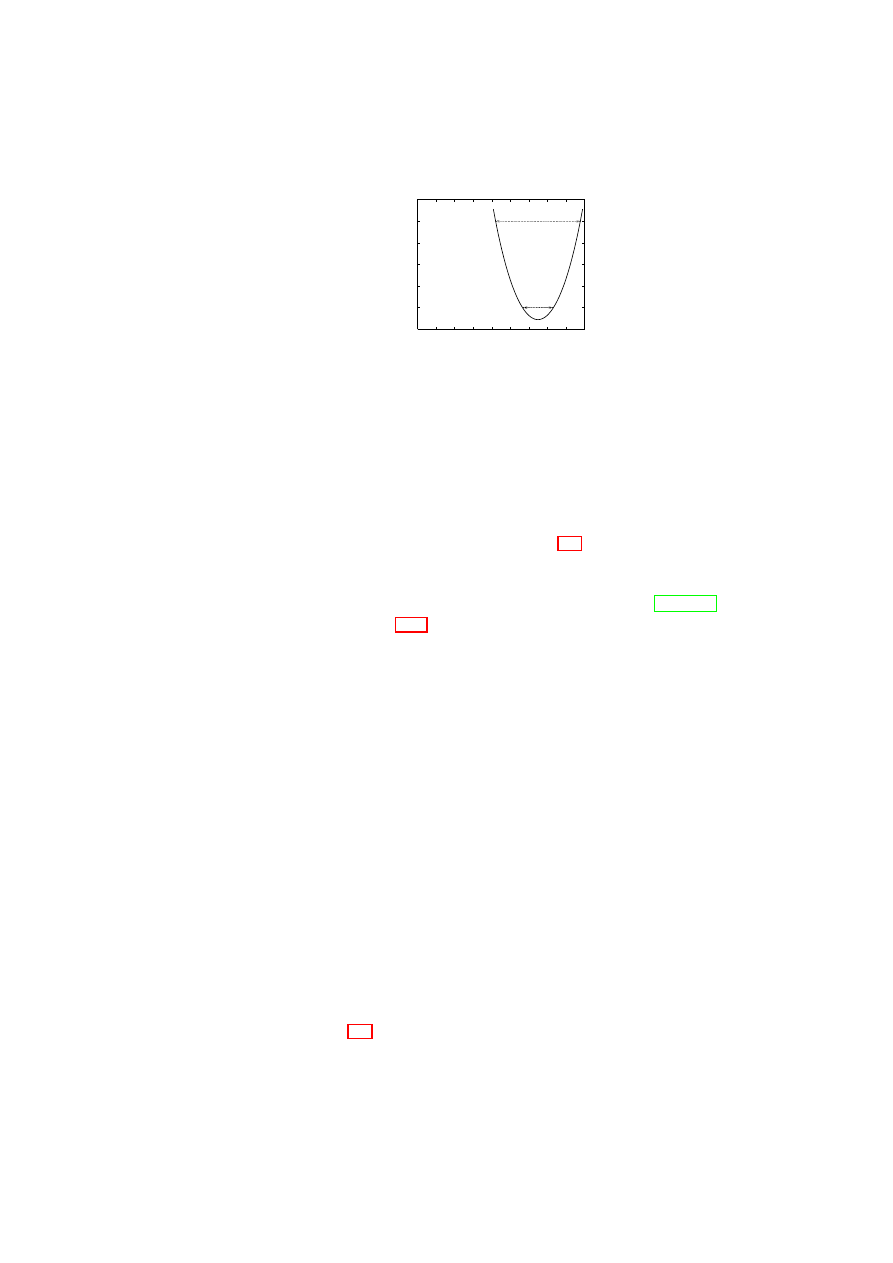

Figure 3.4 shows our storage security enforcement architecture, in which exist-

ing heterogeneous physical storage devices are consolidated into a joint pool. This

virtual storage pool is then subdivided into raw storage for each TVD. Each raw

storage volume has an owner TVD (indicated by the labels TVD A, TVD B, and

TVD C at the per-TVD raw storage layer in the figure). In addition, when a volume

may have to be shared among multiple TVDS, there is also a set of member TVDs

associated with it. The access control and encryption layer helps enforce the owner

TVD’s storage-sharing policies, e.g., enforcing read, write, create, and update ac-

cess permissions for the member TVDs. This layer is a logical layer that in reality

consists of the virtual storage managers (part of the security services) located on

each physical platform containing the owner TVD’s VPEs. The owner TVD’s vir-

tual storage manager on each physical platform is responsible for enforcing the

owner TVD’s storage security policies (see Section 3.1.3) on these volumes. If a

certain intra-TVD security policy requires confidentiality and does not declare the

medium as trusted, the disk is encrypted using a key belonging to the owner TVD.

2

If conditions for (re-)mounting a disk have been defined, the disk is also encrypted

and the key is sealed against the TCB while including these conditions into the un-

sealing instructions. The policy and meta-data are held on a separate raw volume

that is only accessible by the data-center infrastructure.

An administrator of a domain may request that a disk be mounted to a partic-

ular VM in a particular mode (read/write). In Xen, the disk is usually mounted in

the management machine Domain-0 as a back-end device and then accessed by a

guest domain via a front-end device. The virtual storage manager on the platform

validates the mount request against the policies of both the TVD the VM is part

of and the owner TVD for the disk. Once mounted, appropriate read-write per-

missions are granted based on the flow control policy for the two TVDs, e.g., read

access is granted only if the policies specified in the disk policy matrix allow the

VM’s TVD such an access to the disk belonging to the owner TVD.

3.2.4

TVD Admission Control

When a VM is about to join a TVD, different properties will be verified by the

local TVD proxy to ensure that policies of all the TVDs that the VM is currently

a member of as well as of the TVD that it wants to join are not violated. If the

verification is successful, then the VM will be connected to that TVD. The TVD

admission control protocol is the procedure by which the VM gets connected to the

TVD. In the case of a VM joining multiple TVDs, the admission control protocol

is executed for each of those TVDs. We now describe the steps of the protocol.

We assume that the computing platform that executes the VM provides mech-

anisms that allow remote parties to convince themselves about its trustworthiness.

Example mechanisms include trusted (authenticated) boot and the remote attesta-

tion protocol based on TPM technology.

2

For efficiency reasons, we currently do not provide integrity protection.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

26

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

TVD Proxy Initialization Phase:

To allow a VM to join a TVD, the platform

hosting the VM needs access to the TVD policy, and upon successful admission,

to TVD secrets, such as the VPN key. For this purpose, TVD proxy services are

started on the platform for each TVD whose VPEs may be hosted . The TVD proxy

can be started at boot time of the underlying hypervisor, by a system service, or by

the VM itself, as long as the TVD proxy is strongly isolated from the VM.

Pre-Admission Phase:

When a VPE belonging to the TVD is going to be hosted

on the platform for the first time, the TVD master has to establish a trust relation

with the platform running the VM, specifically with the TVD proxy. We call this

step the pre-admission phase, and it involves the establishment of a trusted channel

between the TVD master and the TVD proxy. The trusted channel allows the TVD

master to verify the integrity of the TVD proxy and the underlying platform. After

the trusted channel is established and the correct configuration of proxy verified,

the TVD master can send the TVD policies and credentials (such as VPN key) to

the TVD proxy.

Admission Control Phase:

The Compartment Manager (part of the platform se-

curity services shown in Figure 3.2) is responsible for starting new VMs. The

Compartment Manager loads the VM configuration and enforces the security di-

rectives with the help of the Integrity Manager (also part of the platform security

services shown in Figure 3.2). The security directives may include gathering the

VM state information, such as the VM configuration, kernel, and disk(s) that are

going to be attached to the VM.

If the VM configuration states that the VM should join one or more TVDs,

then the Compartment Manager interacts with the corresponding TVD proxy and

invokes TPM functions to attest the state of the VM. The TVD proxy verifies cer-

tain properties before allowing the VM to join the TVD. More concretely, the TVD

proxy has to ensure that

•

the VM fulfills the integrity requirements of the TVD;

•

the information flow policies of all TVDs the VM will be a member of will

not be violated;

•

the VM enforces specific information flow rules between TVDs if such rules

are required by the TVD policy, and that

•

the underlying platform (e.g., the hypervisor and attached devices) fulfills

the security requirements of the TVD.

Platform verification involves matching the security requirements with the plat-

form’s capabilities and mechanisms instantiated on top of these capabilities. For

example, suppose that data confidentiality is a TVD requirement. Then, if hard

disks or network connections are not trusted, additional mechanisms, such as block

encryption or VPN (respectively), need to be instantiated to satisfy the requirement.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 3. POLICY ENFORCEMENT FOR VIRTUAL DATACENTERS

27

TVD Join Phase:

If the VM and the provided infrastructure fulfill all TVD re-

quirements, a new network stack is created and configured as described in Sec-

tion 3.2.2. Once the Compartment Manager has started the VM, it sends an attach

request to the corresponding TVD vSwitch. Once the VM is connected to the

vSwitch, it is a member of the TVD.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

Part II

Building Blocks and Future

Directions

28

Chapter 4

Automated Provisioning of

Secure Virtual Networks

S. Cabuk, C. Dalton (HPL), H. Ramasamy, M. Schunter (IBM)

4.1

Introduction to Secure Virtual Networking

Virtualization allows the abstraction of the real hardware configuration of a com-

puter system. A particular challenge of virtualization is isolation between poten-

tially distrusting virtual machines and their resources. The machine virtualization

alone provides reasonable isolation of computing resources such as memory and

CPU between guest domains. However, the network remains to be a shared re-

source as all traffic from guests eventually pass through a shared network resource

(e.g., a physical switch) and end up on the shared physical medium. Today’s VMM

virtual networking implementations provide simple mechanisms to bridge all VM

traffic through the actual physical network card of the physical machine. This level

of isolation can be sufficient for individual and small enterprise purposes. How-

ever, a large-scale infrastructure (e.g., a virtualized data center) that hosts services

belonging to multiple customers require further guarantees on customer separation,

e.g., to avoid accidental or malicious data leakage.

Outline

Our focus is on security-enhanced network virtualization, which (1) al-

lows groups of related VMs running on separate physical machines to be connected

together as though they were on their own separate network fabric, and (2) enforces

cross-group security requirements such as isolation, authentication, confidentiality,

integrity, and information flow control. The goal is to group related VMs (e.g.,

VMs belonging to the same customer in a data center) distributed across several

physical machines into virtual enclave networks, so that each group of VMs has

the same protection as if the VMs were hosted on a separate physical LAN. Our

solution for achieving this goal also takes advantage (whenever possible) of the

29

30

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

fact that some VMs in a group may be co-hosted on the same hardware; it is not

necessary to involve the physical network during information flow between two

such VMs.

The concept of Trusted Virtual Domains or TVDs was put forth by Bussani

et al. [BGJ

+

05] to provide quantifiable security and operational management for

business and IT services, and to simplify overall containment and trust manage-

ment in large distributed systems. Informally speaking, TVDs can be thought of

as security-enhanced variants of virtualized network zones, in which specified se-

curity policies can be automatically enforced. We describe the first practical real-

ization of TVDs using a secure network virtualization framework that guarantees

reliable isolation and flow control requirements between TVD boundaries. The re-

quirements are specified by TVD policies, which are enforced dynamically despite

changing TVD membership, policies, and properties of the member VMs. The

framework is based on existing and well-established network virtualization tech-

nologies such as Ethernet encapsulation, VLAN tagging, virtual private networks

(VPNs), and network access control (NAC) for configuration validation.

Our main contributions are (1) combining standard network virtualization tech-

nologies to realize TVDs, and (2) orchestrating them through a management frame-

work that is oriented towards automation. In particular, our solution aims at auto-

matically instantiating and deploying the appropriate security mechanisms and net-

work virtualization technologies based on an input security model, which specifies

the required level of isolation and permitted network flows.

The remainder of the chapter is organized as follows. In Section 4.2, we pro-

vide an overview of our security objectives and describe the high-level framework

to achieve the objectives. We introduce the networking components required for

our framework in Section 4.3 and describe how they can be orchestrated to enforce

TVD policies. In Section 4.4, we present the TVD security model and the compo-

nents constituting the TVD infrastructure. In Section 4.5, we cover the dynamic

aspects of TVD deployment including TVD establishment, population, and admis-

sion control. In Section 4.6, we describe a Xen-based prototype implementation

of our secure virtual networking framework and report our performance results in

Section 4.7. Finally, we conclude and discuss future extensions in Section 4.8.

4.2

Design Overview

At a high level, our secure network virtualization framework consists of a network-

ing infrastructure and a TVD infrastructure. The networking infrastructure enables

the mapping from physical to logical network topologies that we use to separate

customer networks. The TVD infrastructure configures the network devices and

instantiates the appropriate set of mechanisms to realize the TVD security objec-

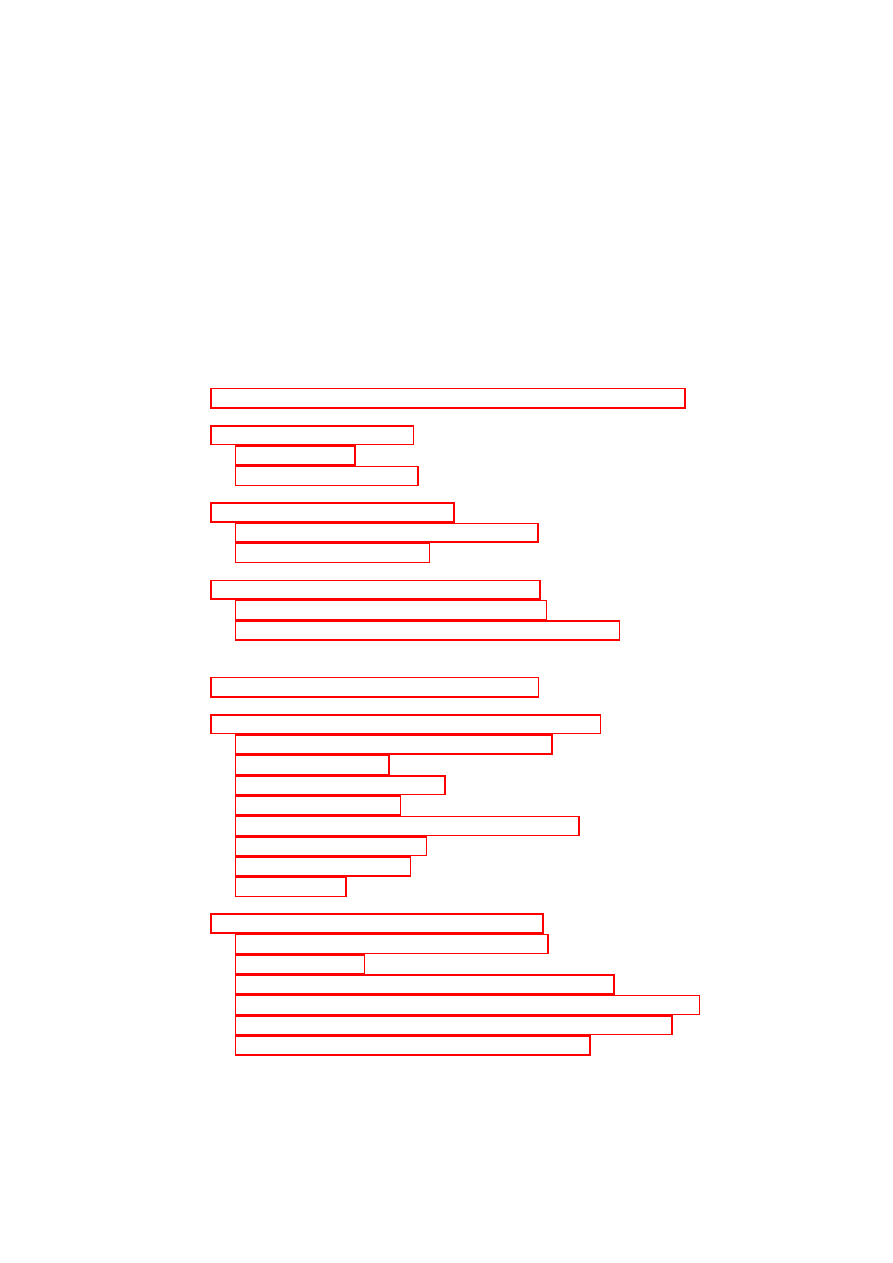

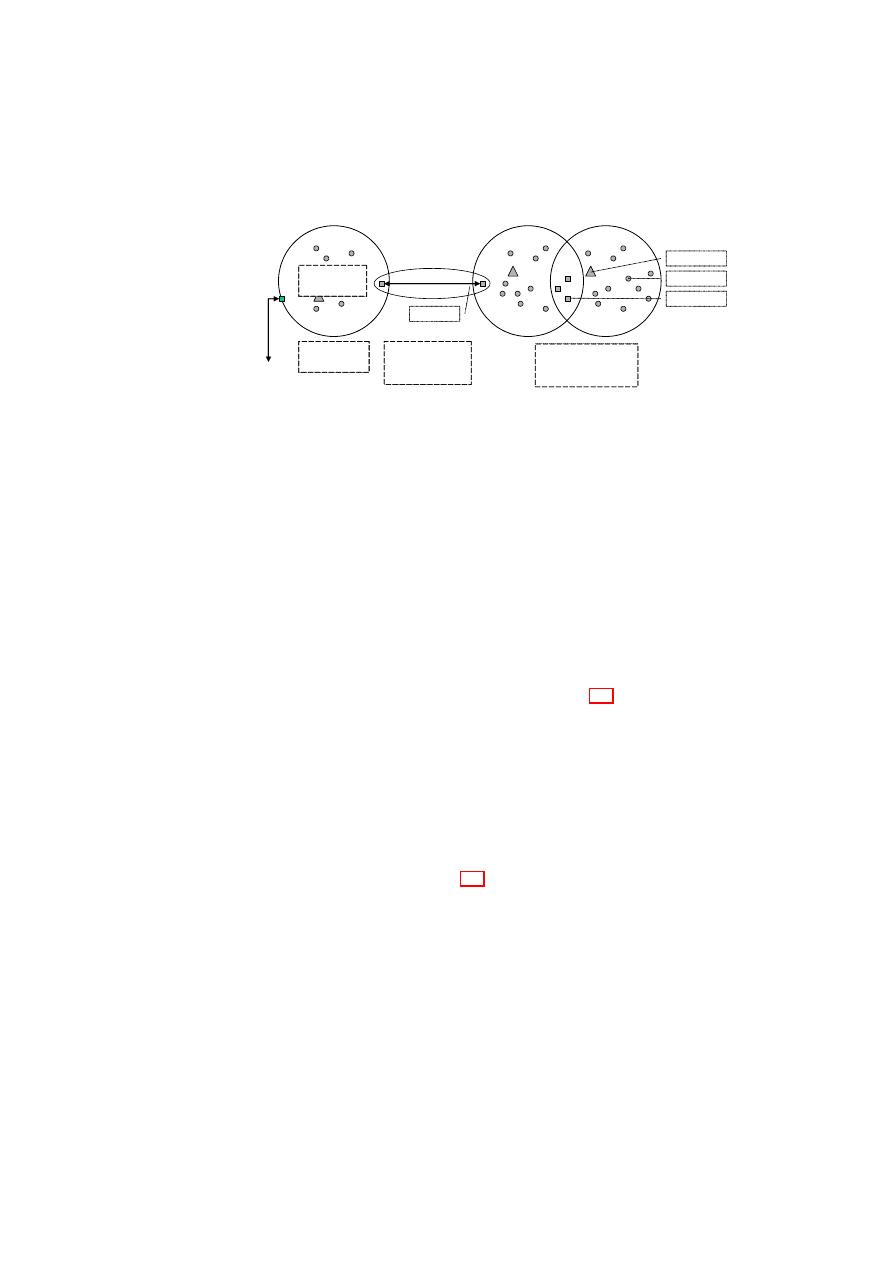

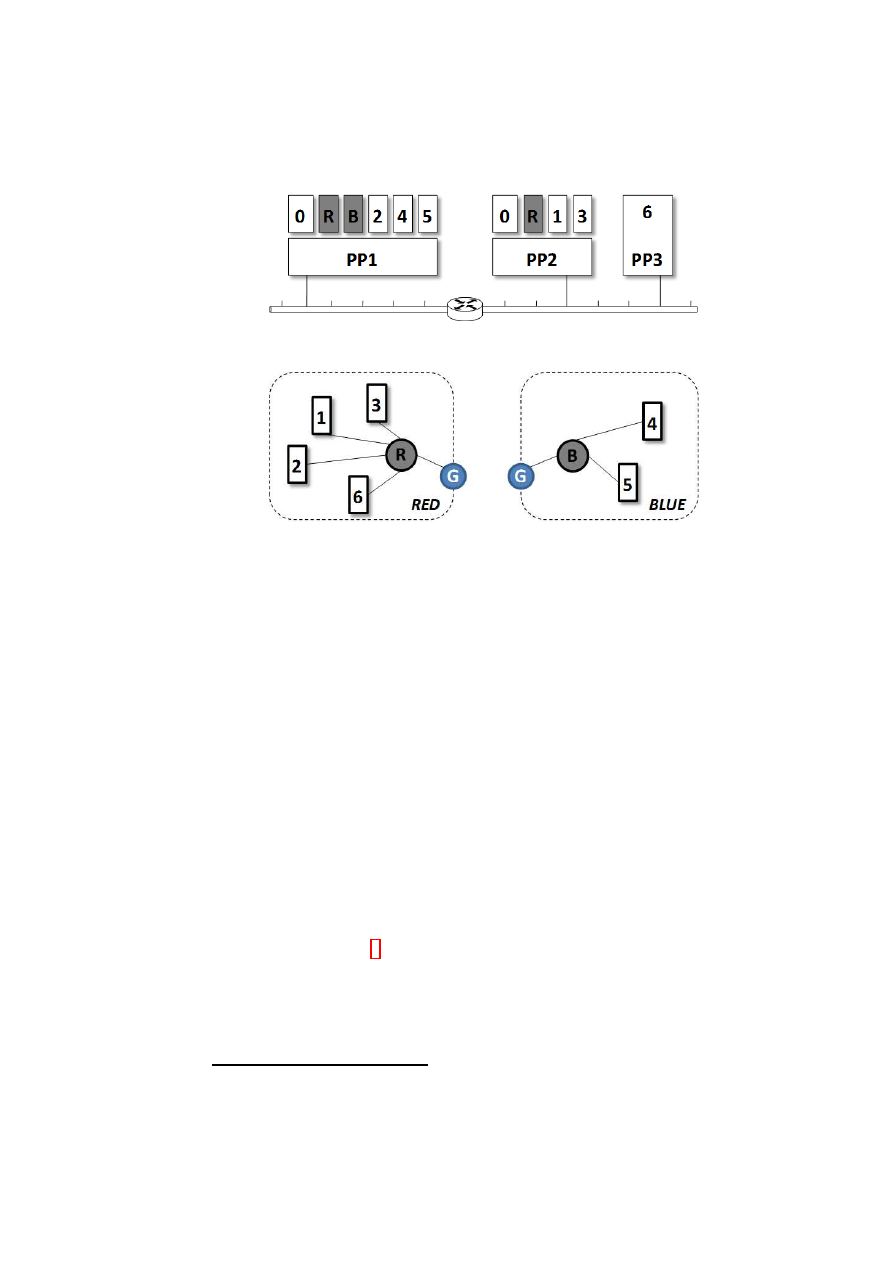

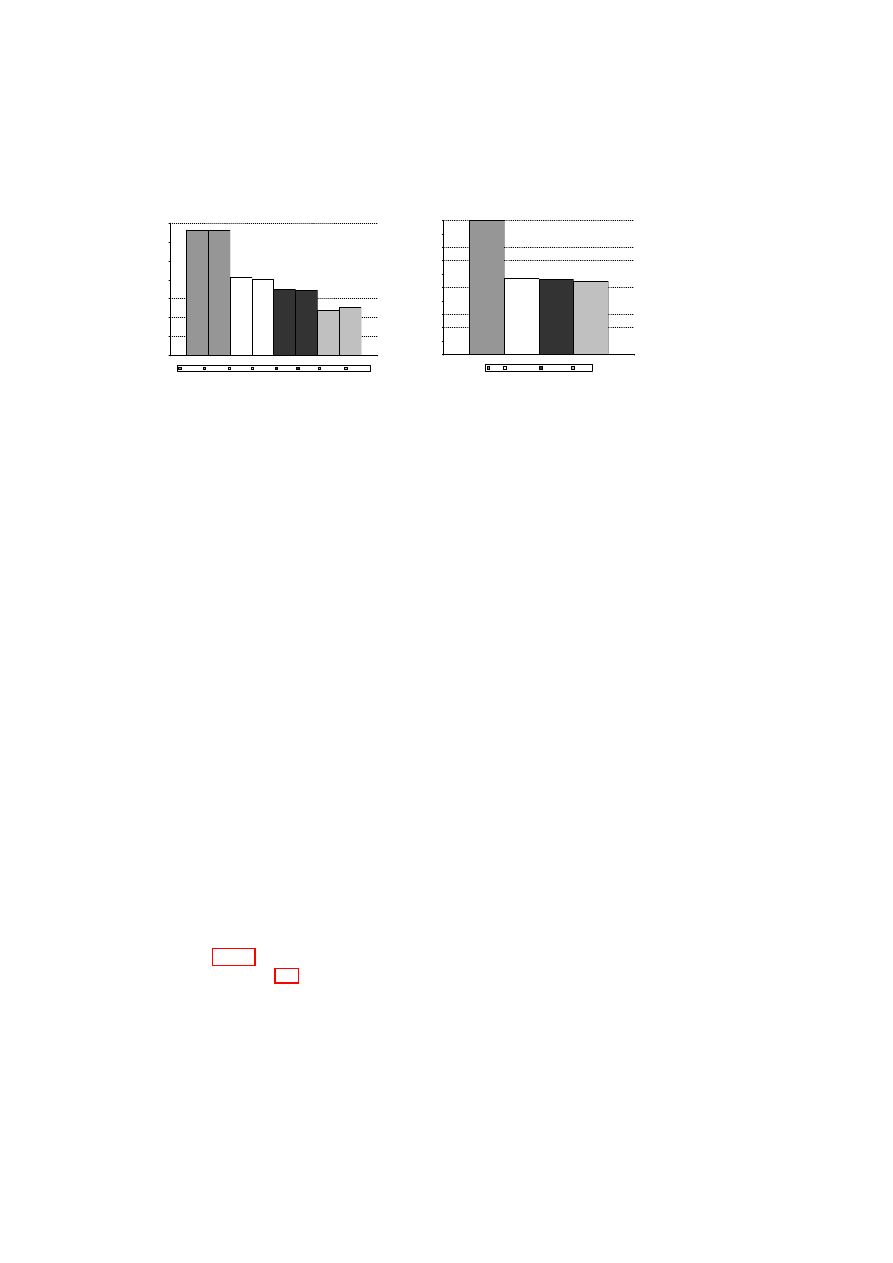

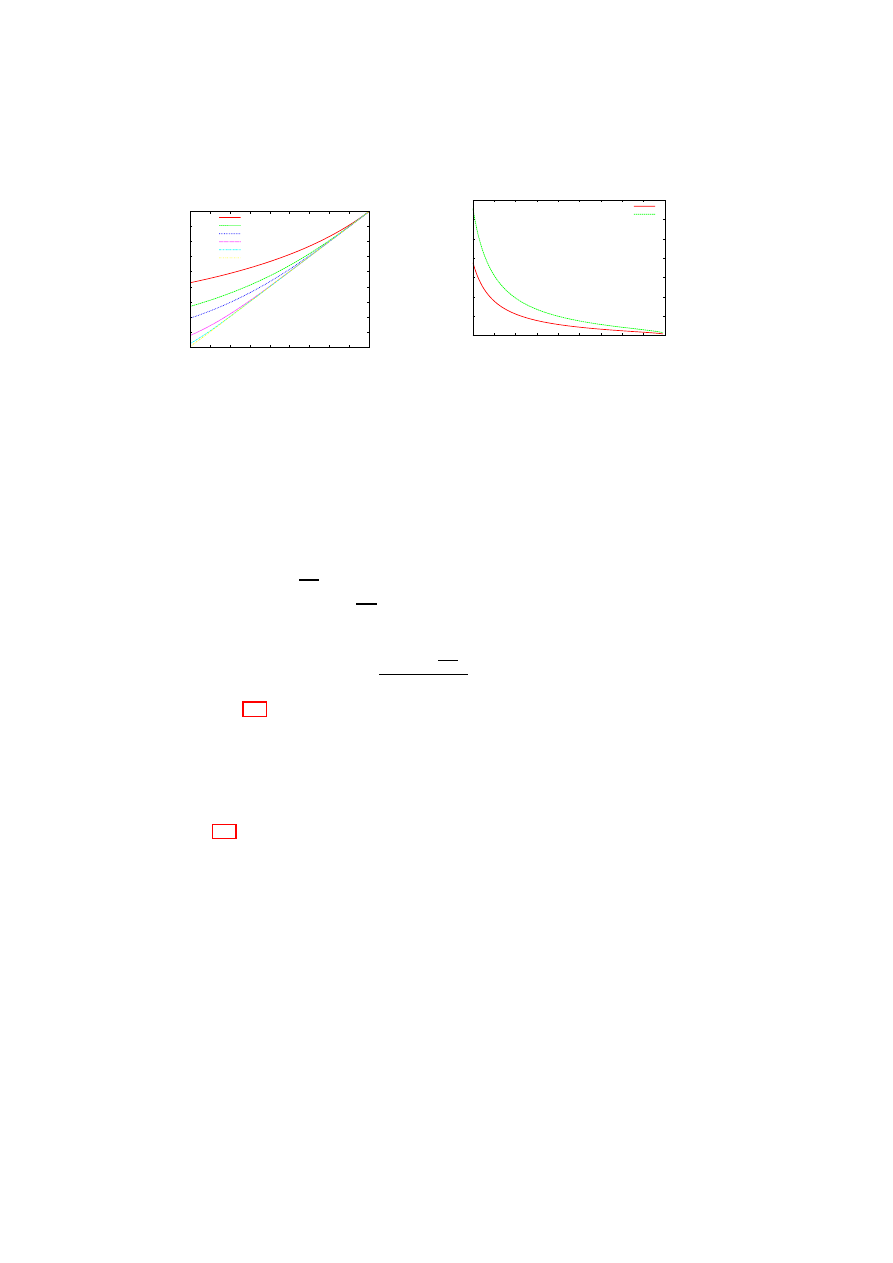

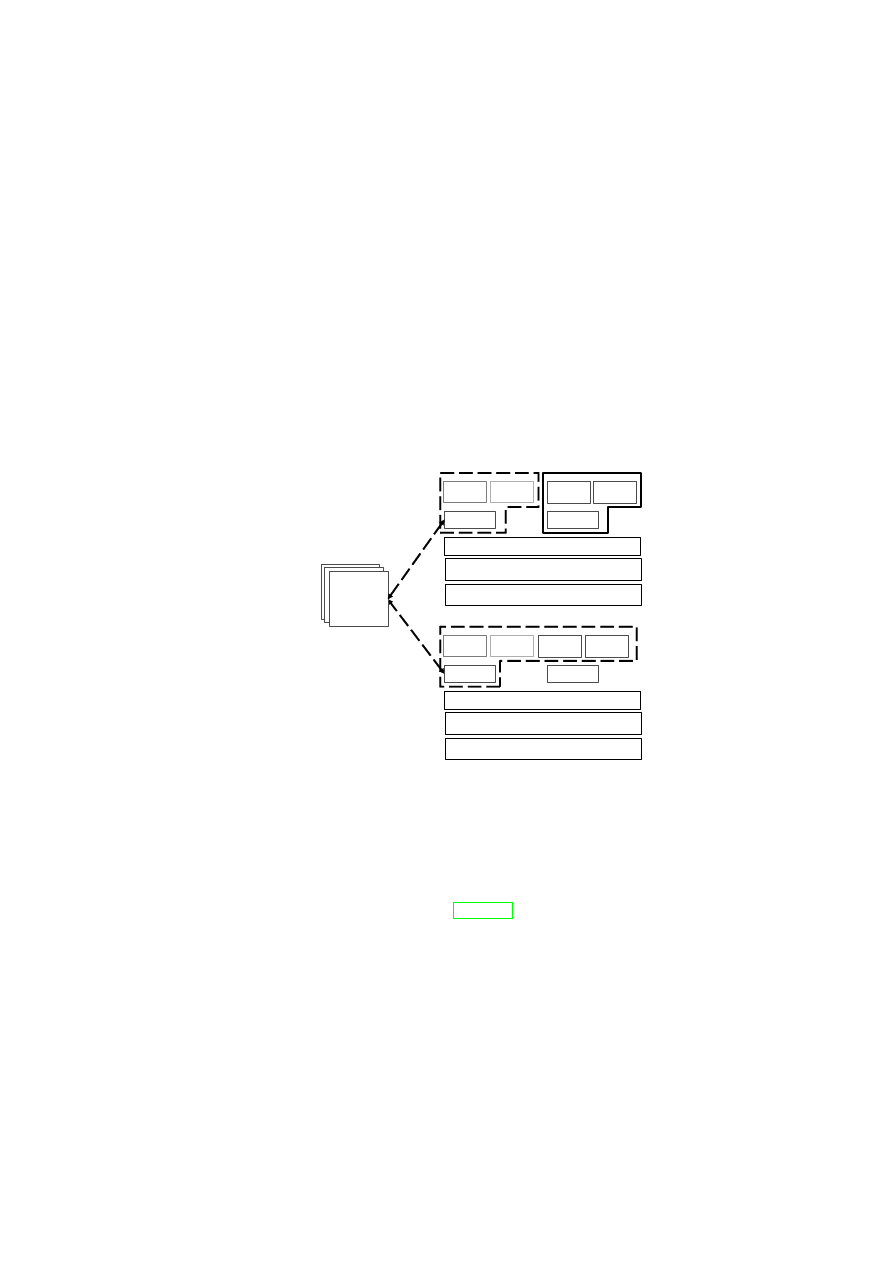

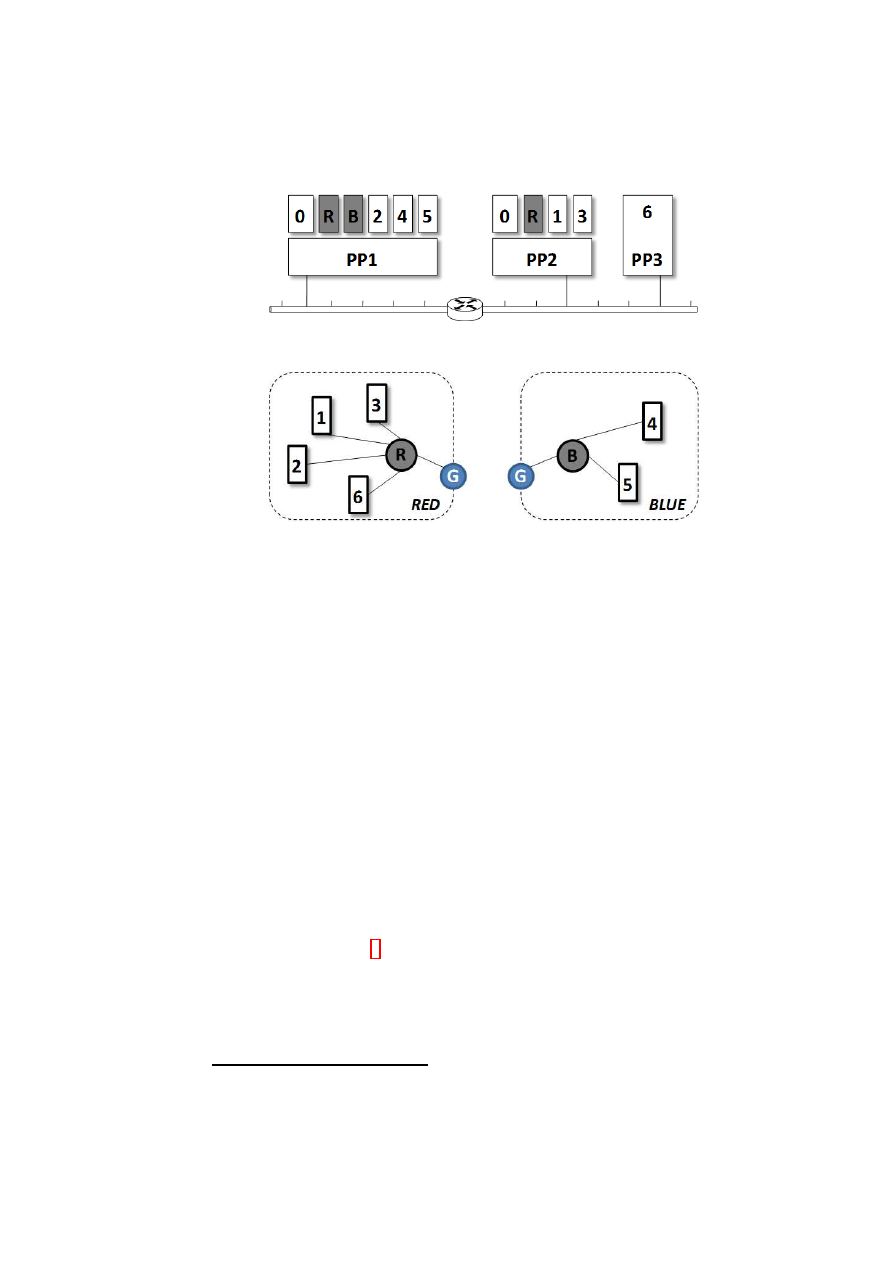

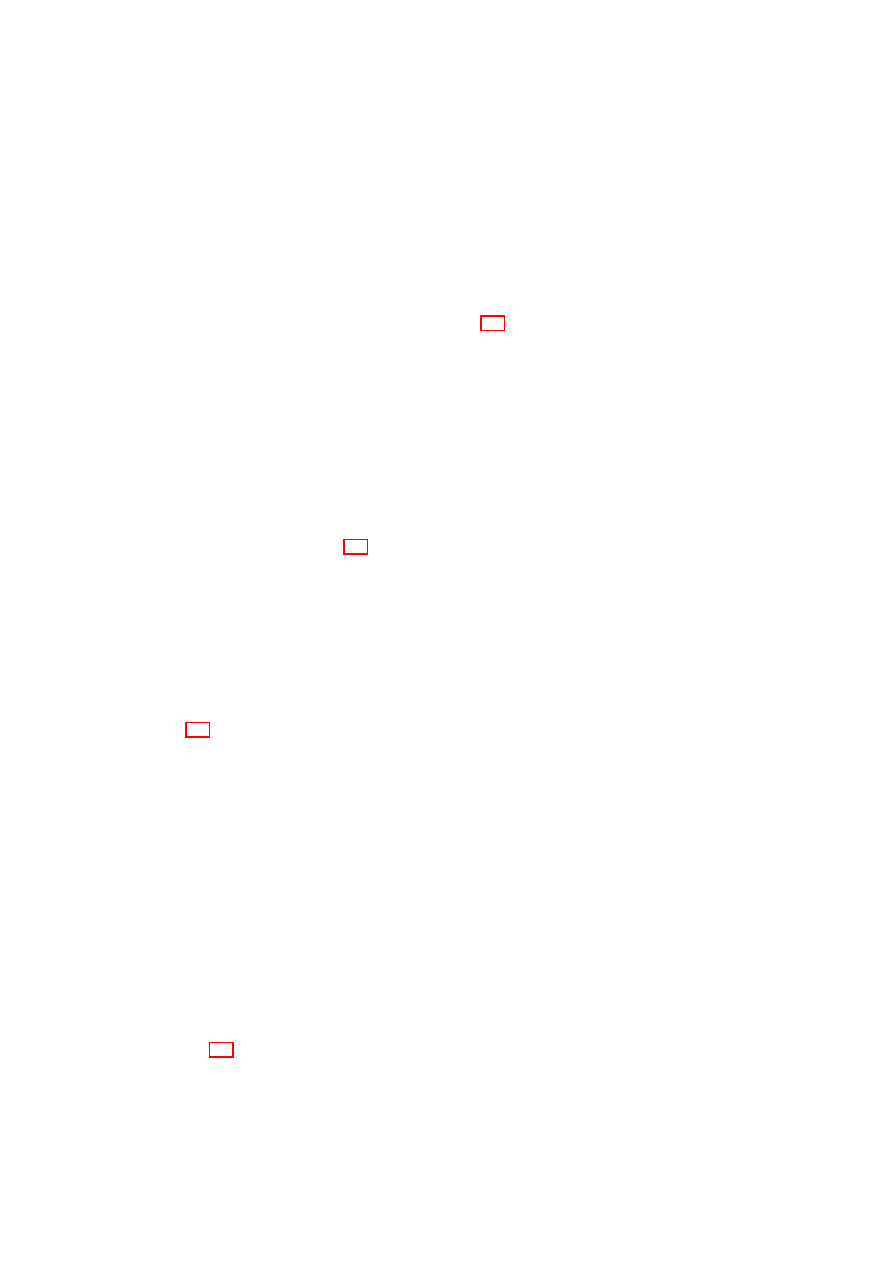

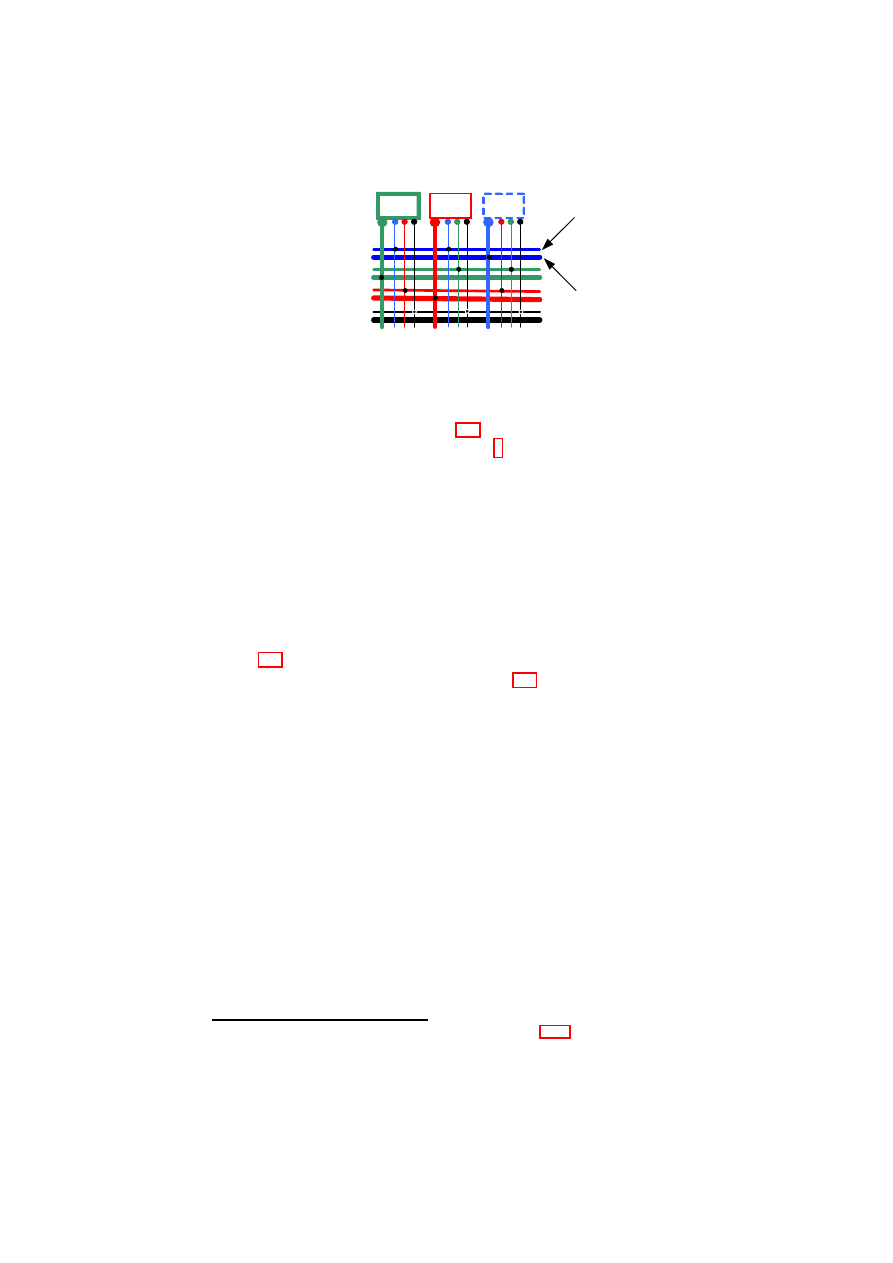

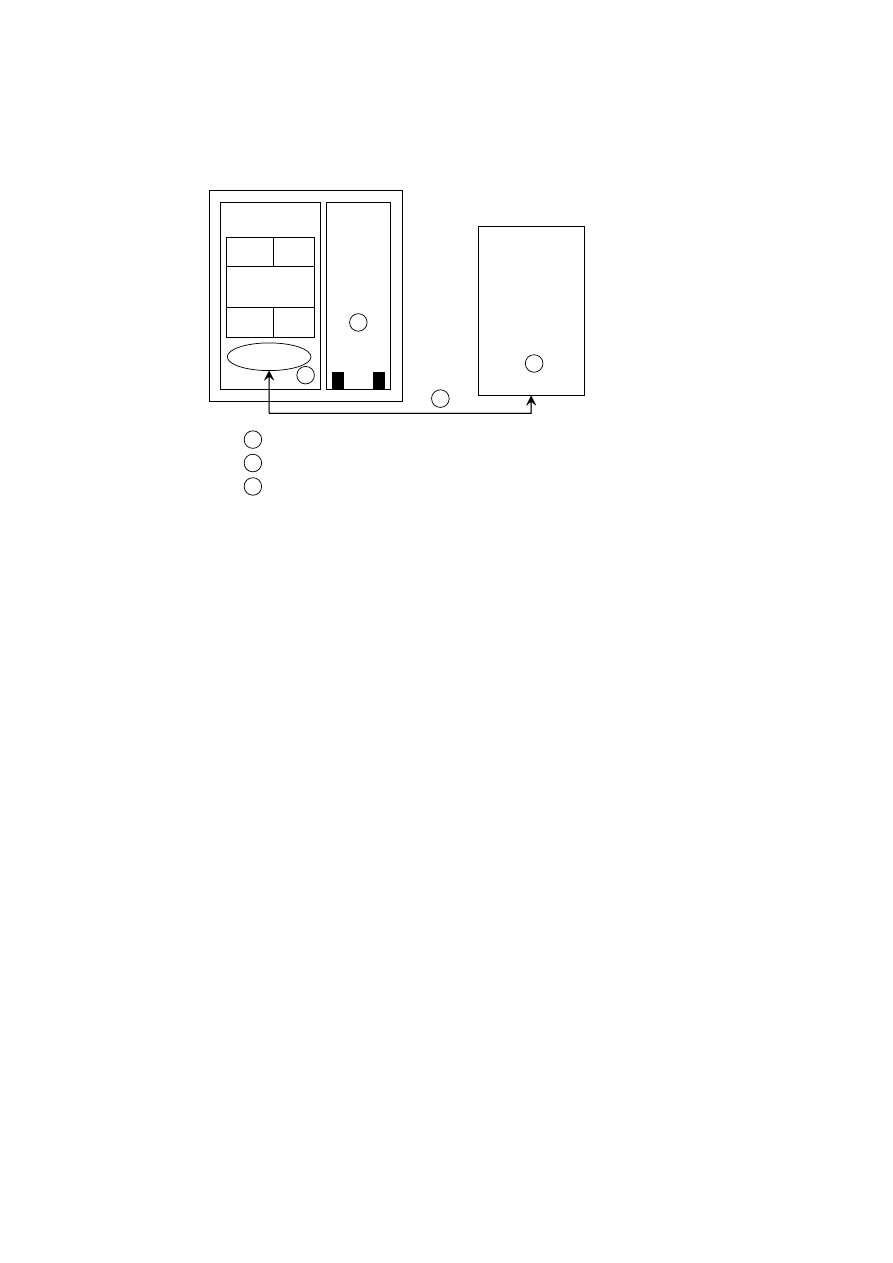

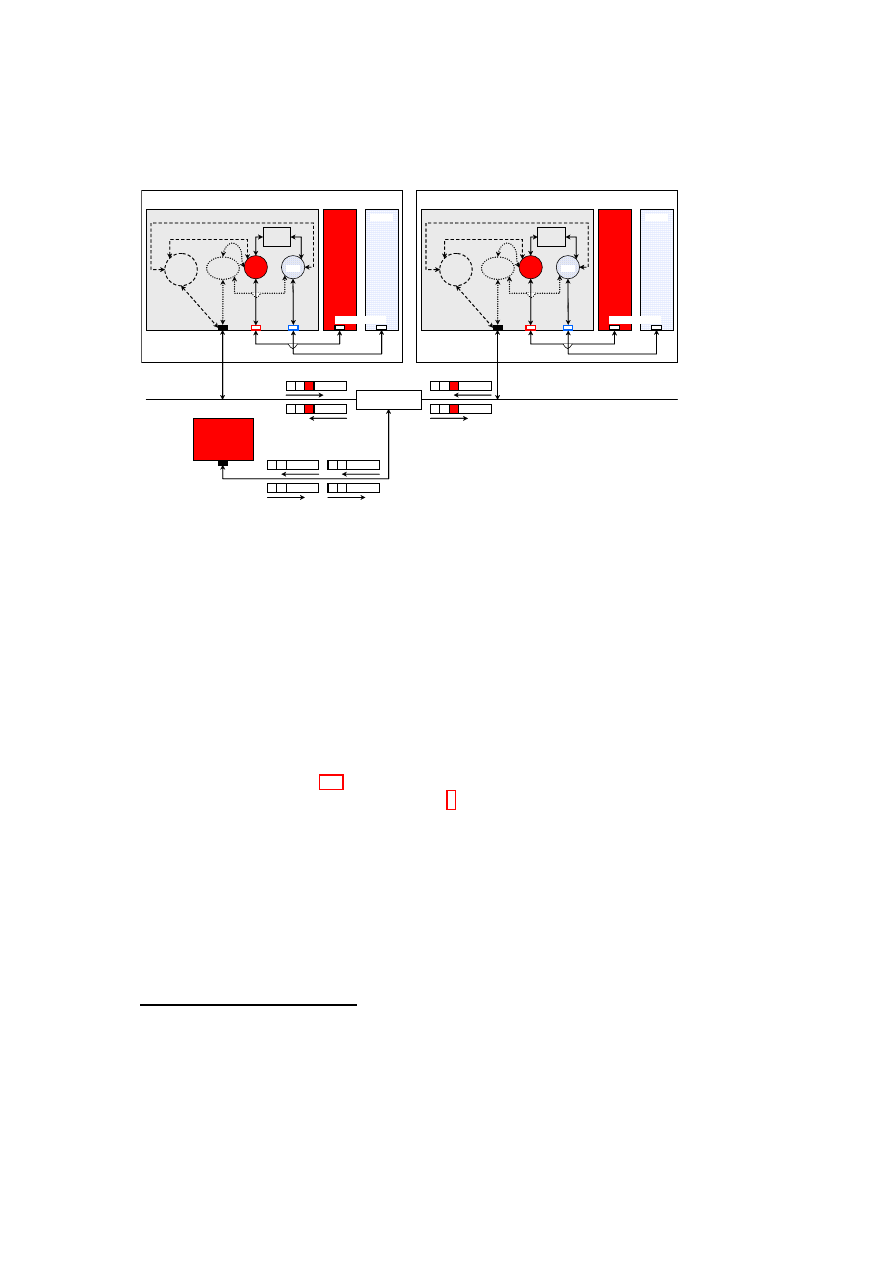

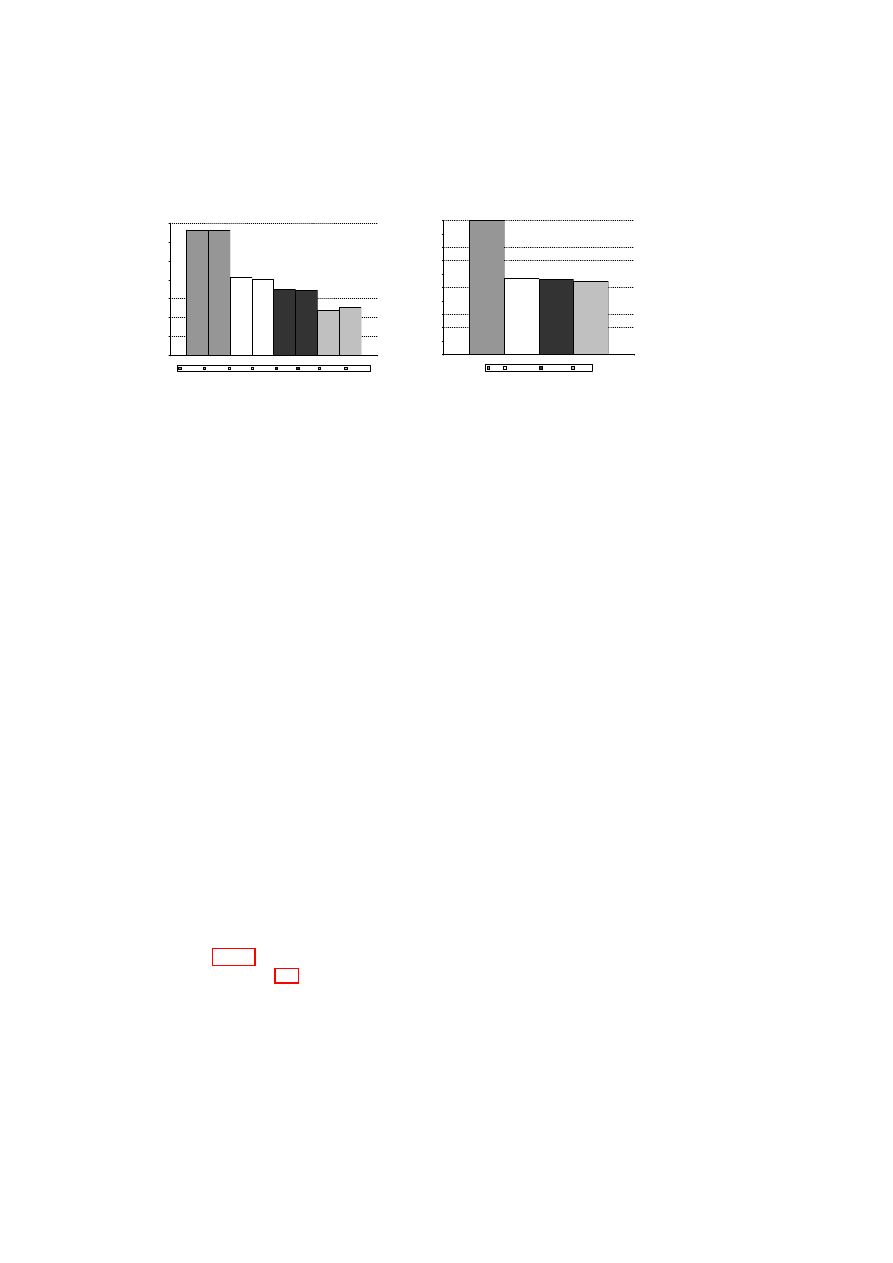

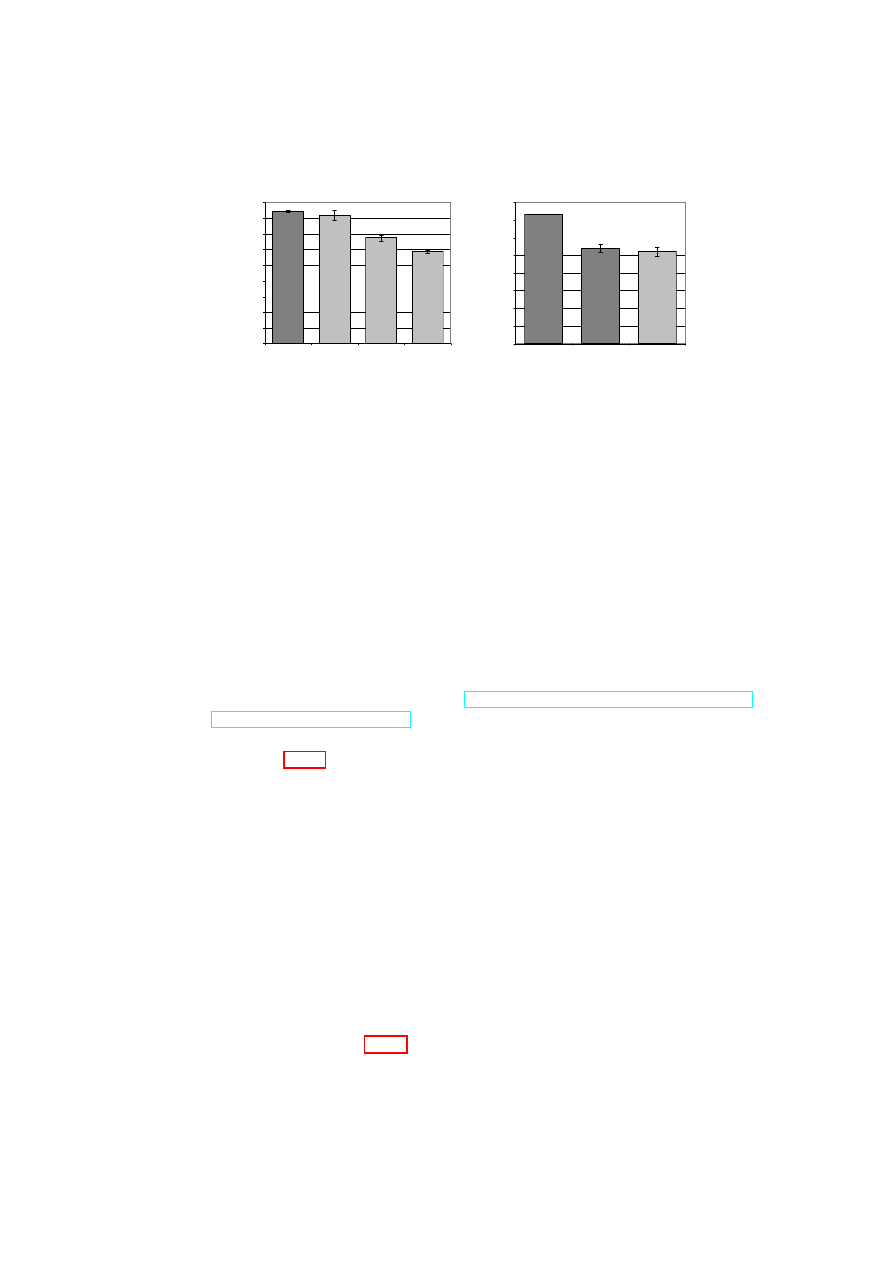

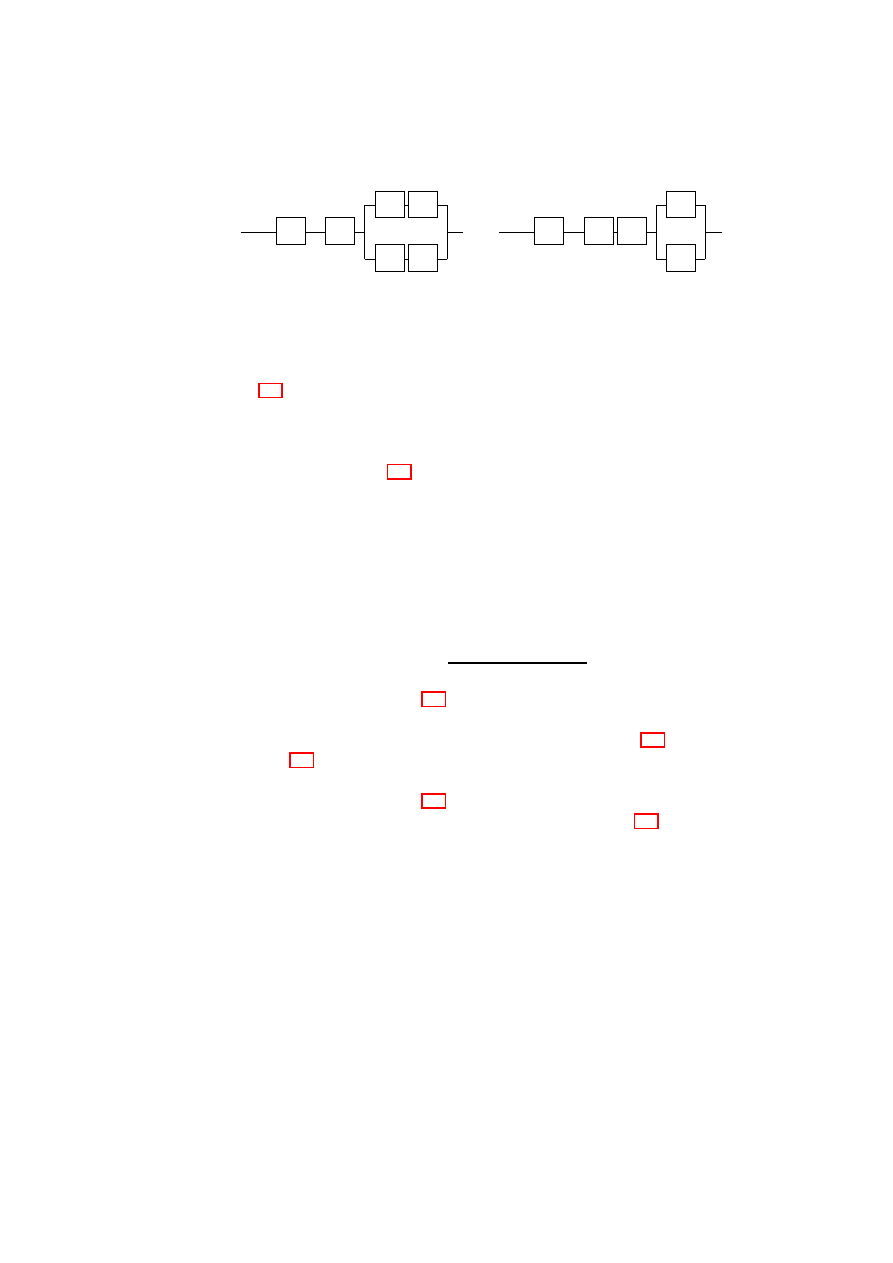

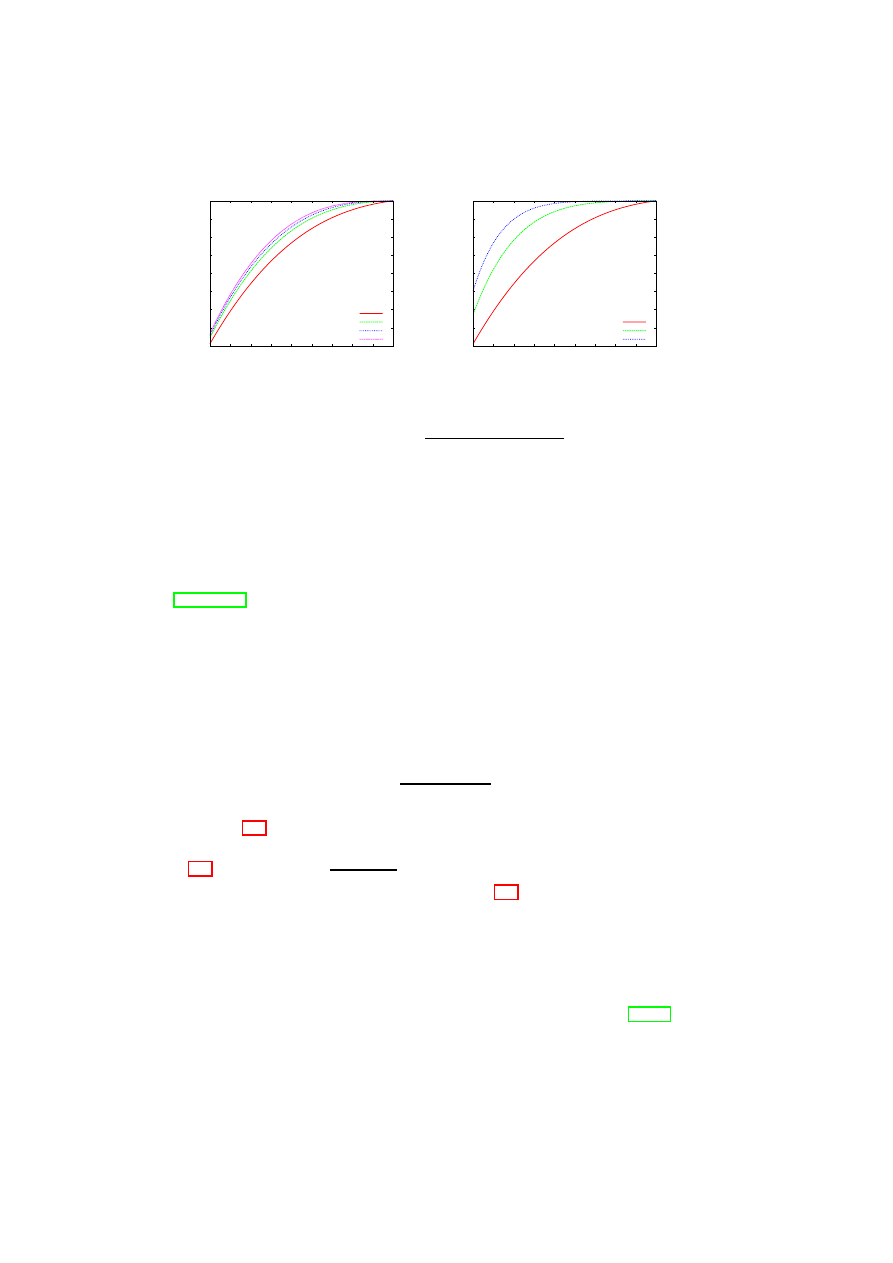

tives. Figure 4.1 illustrates an example mapping. Figure 4.1(a) shows the actual

layout of the physical infrastructure that includes virtualized and non-virtualized

platforms and networking devices. Figure 4.1(b) shows the logical layout as seen

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 4. AUTOMATED PROVISIONING OF SECURE VIRTUAL NETWORKS

31

(a) Physical view.

(b) Logical view.

Figure 4.1: An example TVD-based virtual network mapping.

R

and

B

provide

switching for red and blue TVDs, respectively.

G

is a virtual gateway that controls

inter-TVD communication (not shown in (a) for clarity).

by each TVD owner (i.e., red and blue). In this section, we provide an overview

of the mechanisms that enable this mapping while enforcing TVD policies within

and across domains.

4.2.1

High-level Objectives

We are specifically interested in virtualized data centers that host multiple cus-

tomers with potentially conflicting interests. In this setting, the TVD-based net-

working framework has the following functional and security objectives:

Virtual Networking The framework should support arbitrary logical network

topologies that involve virtualized and non-virtualized platforms alike.

Customer Separation The framework should provide sufficient isolation guaran-

tees to customers, i.e., information leakage should be minimized between

customer domains

1

.

Unified Policy Enforcement The framework should enforce domain policies

within and across domains in a seamless manner. The policies define in-

tegrity, confidentiality, and isolation requirements for each domain, and in-

formation flow control requirements between any two domains.

1

In this chapter, we do not address indirect communication channels (e.g., covert channels).

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

32

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

Autonomous Management The framework should deploy and maintain customer

TVDs in an automated manner, and provide customers the tools to manage

their TVDs with no direct or side effect on other customer TVDs.

4.2.2

Overview of Networking Infrastructure

Virtual network extensions enable groups of related VMs running on separate phys-

ical machines to be connected together as though they were on their own separate

network fabric. This allows the creation of arbitrary virtual LAN (VLAN) topolo-

gies independent of the particular underlying physical network topology. It also

provides a level of isolation between VLAN segments.

A virtual network is comprised of virtual and physical network entities. The

central VLAN component is a virtual switch. There is a single virtual switch per

VLAN segment. A VM appears on a particular VLAN segment if one of its virtual

network interface cards (Venice) is “plugged” into one of the switch ports on the

virtual switch forming that segment. The virtual switch behaves like a normal

physical switch and coordinates traffic to and from member VMs. Figure 4.1(b)

shows two virtual switches

R

and

B

that coordinate the traffic for red and blue

segments. In the foregoing arrangement, each virtual switch resides in a small VM

of its own as shown in Figure 4.1(a). Additional VPN and NAC modules can be

employed to strengthen security properties on untrusted communication lines and

to authenticate VMs prior to VLAN admission, respectively.

4.2.3

Overview of TVD Infrastructure

A TVD is represented by a set of distributed virtual processing elements (VPE)

(e.g., virtual machines and virtual switches) and a communication medium in-

terconnecting the VPEs. The TVD provides a policy and containment boundary

around those VPEs. At a high level, the TVD policy has three aspects: (1) mem-

bership requirements for the TVD, (2) interaction among VPEs of that TVD, and

(3) interaction of the TVD with other TVDs and the outside world. VPEs within

each TVD can usually communicate freely and securely with each other. At the

same time, they are sufficiently isolated from outside VPEs, including those be-

longing to other TVDs. From a networking perspective, isolation loosely refers to

the requirement that a dishonest VPE in one TVD cannot send messages to a dis-

honest VPE in another TVD, unless the inter-TVD policies explicitly allow such

an information flow.

Each TVD has an associated infrastructure whose purpose is to provide a uni-

fied level of security to member VPEs, while restricting the interaction with VPEs

outside the TVD to pre-specified, well-defined means only. Unified security within

a domain is obtained by defining and enforcing membership and communication

requirements that the VPEs and networks have to satisfy before being admitted to

the TVD and for retaining the membership. Unified security across domains is

obtained by defining and enforcing flow requirements that the VPEs have to sat-

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 4. AUTOMATED PROVISIONING OF SECURE VIRTUAL NETWORKS

33

isfy to be able to interact with VPEs in other TVDs. To do so, each TVD defines

rules regarding in-bound and out-bound network traffic. Their purpose is to restrict

communication with the outside world.

The central element in the TVD infrastructure is the TVD master which keeps

track of high-level TVD policies and secrets. TVD masters maintain control over

member VPEs using TVD proxies. There is a single TVD master per TVD and a

single TVD proxy per physical platform. A VPE appears on a particular TVD if

it complies with the membership requirements enforced by the TVD proxy con-

trolling that domain on that physical platform. VPEs communicate freely within

a TVD. Communication across TVDs is controlled by entities configured by TVD

proxies.

4.2.4

Overview of TVD-based Virtual Networking

Virtual networking and TVD infrastructures play complementary roles in meet-

ing the objectives listed earlier. In essence, the VNET infrastructure provides the

tools and mechanisms to enable secure virtual networking in a data center. The

TVD infrastructure defines unified domain policies, and automatically configures

and manages these mechanisms inline with the policy. It also provides customers

the necessary tools to manage their TVDs. Any action by the customer is then

seamlessly reflected on the underlying networking infrastructure. This two-layer

architecture has many advantages:

1. The policy (TVD) and enforcement (VNET) layers are clearly separated

from each other, which yields a modular framework. As a result, other tech-

nologies (e.g., VNET) can be easily deployed in place of the current offering.

2. The policy layer is not restricted to network-type policies. Thus, same poli-

cies can be used to configure and manage, for example, mechanisms to en-

force storage isolation.

3. The policy layer provides a high-level abstraction to customers so that they

can manage their TVDs without worrying about the specifics of the underly-

ing enforcement mechanisms.

4. Automated deployment, configuration, and management of customer TVDs

are desirable capabilities in a virtualized data center that can effectively re-

duce operational costs and leverage virtualization to the fullest extent.

4.3

Networking Infrastructure

In this section, we present the network virtualization layer that enables the creation

of arbitrary virtual topologies on top of the underlying physical network. This

layer also provides the basic mechanisms to help enforce TVD admission and flow

policies at a higher level.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

34

OpenTC D05.5 – Design and Concept of a Trusted Virtual Datacenter

4.3.1

Aims and Security Requirements

The main aim of our network virtualization extensions is to allow groups of related

VMs running on separate physical machines to be connected together as though

they were on their own separate network fabric. In particular, we would like to cre-

ate arbitrary virtual network topologies independently of the underlying physical

network topology. For example, a group of related VMs may have to be connected

directly together on the same VLAN segment even though, in reality, they may be

at opposite ends of a WAN link separated by many physical LAN segments. Fur-

ther, multiple segmented virtual networks may have to be established on a single

physical network segment to achieve improved security properties such as network

isolation. Our network virtualization extensions are required to inter-operate with

existing non-virtualized entities (e.g., standard client machines on the Internet) and

allow our virtual networks to connect to real networks.

Virtual networking extensions must also adhere to security requirements such

as isolation and confidentiality. In particular, our extensions must isolate the vir-

tual resources used by different VLAN segments as well as the traffic generated

by each. Network connections can be established between VMs that belong to

the same VLAN segment and VMs that belong to different VLAN segments (i.e.,

TVDs). These connections must be secured to meet the security requirements

whenever they are established over untrusted physical infrastructures. Addition-

ally, all entities (virtual or physical) that seek membership to a network segment

must be properly authenticated prior to being admitted to the network.

4.3.2

Network Virtualization

One option for virtual networking is to virtualize at the IP level. However, to

avoid problems regarding the support for non-IP protocols and IP support services

(such as ARP) that sit directly on top of the Ethernet protocol, we have chosen to

virtualize at the Ethernet level.

Our network virtualization extensions allow multiple VMs belonging to dif-

ferent VLAN segments to be securely hosted on one or more physical machine.

The framework provides isolation among various segments using a combination of

VLANs and virtual private networks (VPNs). Each VLAN segment is an Ethernet

broadcast domain and there is one internal VLAN for each security domain (i.e.,

TVD)

2

. An external VLAN may be used for communication with other TVDs and

TVD-external entities. In the absence of a trusted physical network, each VLAN

segment can employ an optional VPN layer to provide authentication, integrity,

and confidentiality.

The virtual networking framework is comprised of a mixture of physical and

virtual networking components. Physical components include physical hosts and

standard physical networking devices such as VLAN-enabled switches and routers.

Virtual entities include VMs, vNICs, virtual switches, virtual routers, and virtual

2

In this chapter, we use both VLAN segment and TVD to denote a security domain.

OpenTC Document D05.5/V01 – Final R7343/2008/11/11/OpenTC Public (PU)

CHAPTER 4. AUTOMATED PROVISIONING OF SECURE VIRTUAL NETWORKS

35

gateways. Each VM has a number of vNICs where each can be associated with

at most one VLAN segment. Each VLAN segment is represented by a virtual

switch or a vSwitch. A VM appears on a particular VLAN if one of its vNICs

is “plugged” into one of the switch ports on the vSwitch forming that segment.

The vSwitch behaves like a normal physical switch. Ethernet broadcast traffic

generated by a VM connected to the vSwitch is passed to all VMs connected to

that vSwitch. Like a real switch, the vSwitch also builds up a forwarding table

based on observed traffic so that non-broadcast Ethernet traffic can be delivered in

a point-to-point fashion to improve bandwidth efficiency.

The vSwitch is designed to operate in a distributed fashion. The VMM on each

physical machine hosting a VM connected to a particular VLAN segment hosts part

of the vSwitch forming that VLAN segment. A component of the VMM captures

the Ethernet frames coming out of a VM’s vNIC. This component is configured

to know which vSwitch the VM is supposed to be connected to. We describe the