D05.6 Final Report of OpenTC Workpackage 5

(M37) November 2008

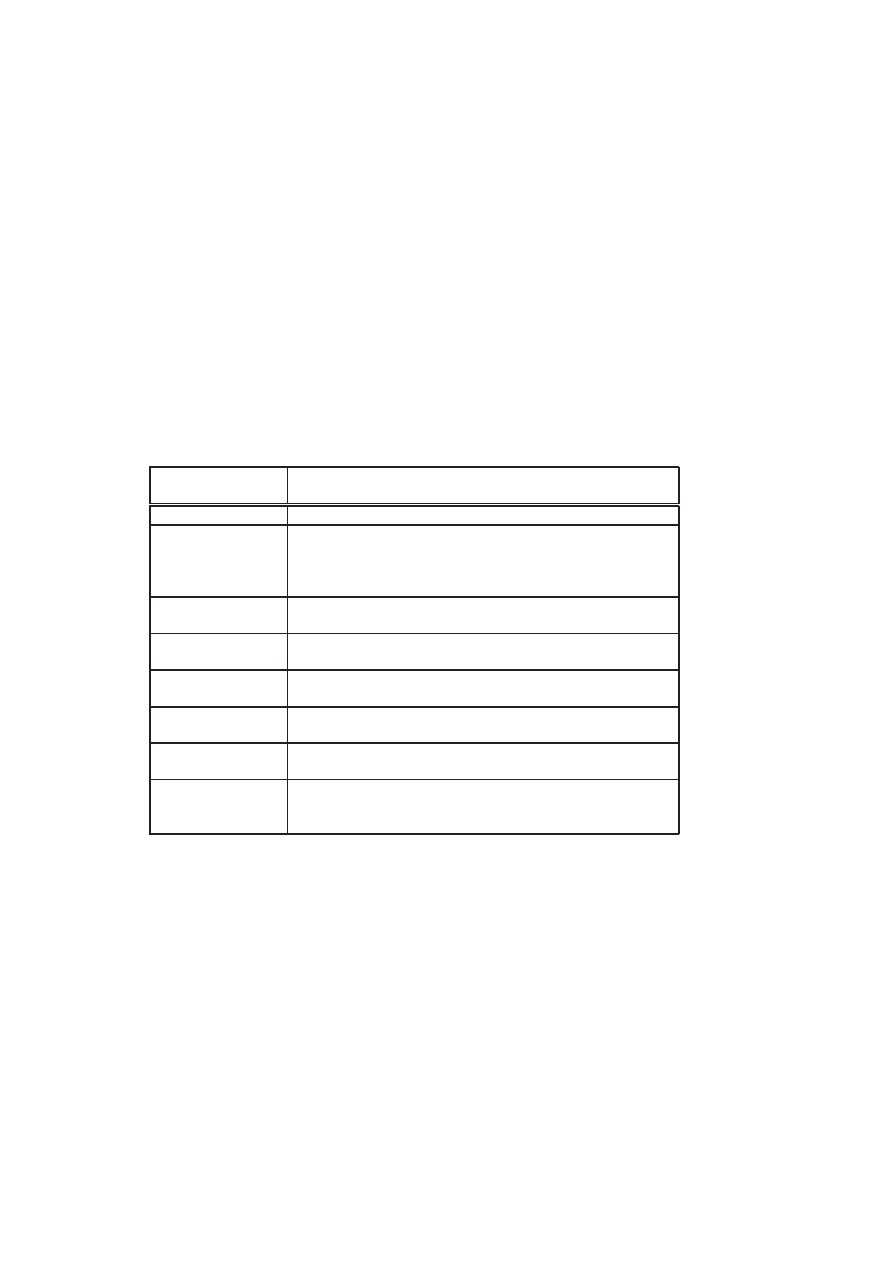

Project number

IST-027635

Project acronym

Open_TC

Project title

Open Trusted Computing

Deliverable Type

Report

Reference number

IST-027635/D05.6/V01 Final

Title

D05.6 Final Report of OpenTC Workpackage

5

WPs contributing

WP05

Due date

November 2008 (M37)

Actual submission date

January 15th, 2009

Responsible Organisation

IBM (Matthias Schunter)

Authors

CUCL, HP, IBM, IAIK, ITAS, RUB, Polito

Abstract

This report summarizes and consolidates the

main results from OpenTC Workpackage 05

“SecurityManagement and Infrastructure”.

Keywords

OpenTC, Virtualization, Trusted Computing,

Security Services, Security Management,

Virtual Systems

Dissemination level

Public

Revision

V01 Final

Instrument

IP

Start date of the

project

1

st

November 2005

Thematic Priority

IST

Duration

42 months

A

BSTRACT

This report summarizes and consolidates the main results from OpenTC Workpackage

05 “Security Management and Infrastructure”. The goal of Workpackage 5 has been to

develop mechanisms for managing security of virtual systems while leveraging trusted

computing technologies for verifiability and protection.

Part I of the deliverable introduces our goals and surveys the approach we have

taken from a high-level perspective. Part II then describes key building blocks in detail.

Part III describes the WP5 concepts in the demonstrator and concludes the deliverable

by evaluating our prototype and documenting our future outlook.

C

ONTRIBUTORS AND

A

CKNOWLEDGEMENTS

The following people were the main contributors to this report (alphabetically by or-

ganisation): Theodore Hong, Eric John, Derek Murray (CUCL); Serdar Cabuk, Chris

Dalton, Dirk Kuhlmann, David Plaquin (HP); Konrad Eriksson, Bernhard Jansen,

HariGovind V. Ramasamy, Matthias Schunter, Axel Tanner, Andreas Wespi, Diego

Zamboni (IBM); Peter Lipp, Martin Pirker (IAIK); Arnd Weber (ITAS); Frederik

Armknecht, Yacine Gasmi, Ahmad-Reza Sadeghi, Patrick Stewin, Martin Unger

(RUB); Gianluca Ramunno, Davide Vernizzi (Polito).

We would like to thank our reviewer Peter Lipp from IAIK Graz. Furthermore, we

would like to thank the other members of the OpenTC project for helpful discussions

and valuable contributions to the research that is documented in this report.

2

If you need further information, please visit our website

www.opentc.net

or contact the

coordinator:

Technikon Forschungs-und Planungsgesellschaft mbH

Burgplatz 3a, 9500 Villach, AUSTRIA

Tel.+43 4242 23355 -0

Fax. +43 4242 23355 -77

Email

coordination@opentc.net

The information in this document is provided “as is”, and no guarantee

or warranty is given that the information is fit for any particular purpose.

The user thereof uses the information at its sole risk and liability.

Final Report of OpenTC Workpackage 5

OpenTC Workpackage 5

1

OpenTC Deliverable D05.6

V01 – Final Revision. 7628 (OpenTC Public (PU))

2009/01/15

2

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

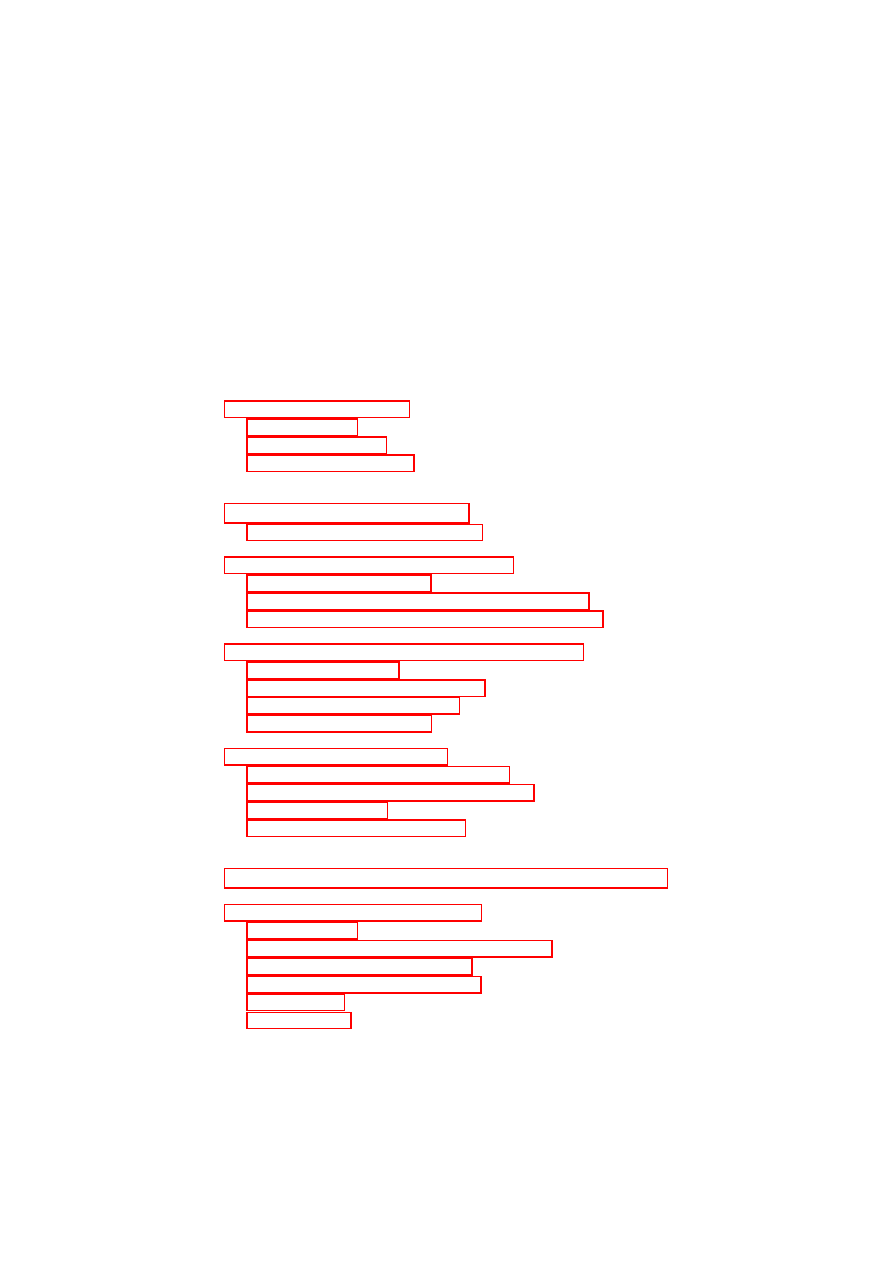

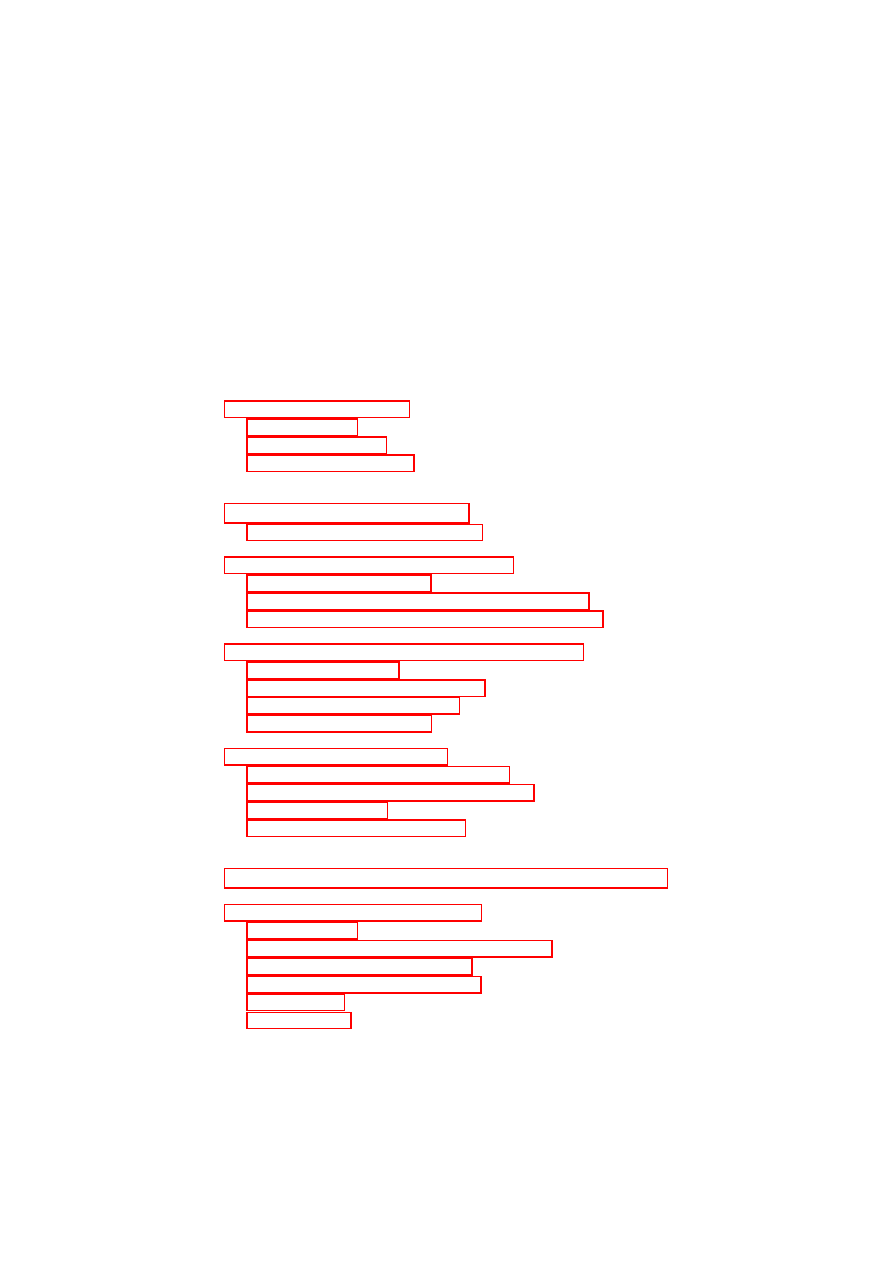

Contents

1

Introduction and Outline

7

1.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7

1.2

Our Contribution . . . . . . . . . . . . . . . . . . . . . . . . . . . .

8

1.3

Outline of this Report . . . . . . . . . . . . . . . . . . . . . . . . . .

8

I

Overview and Related Work

11

1.4

Dependability of Virtual Systems . . . . . . . . . . . . . . . . . . . .

12

2

Security Policies for Virtual Data Centers

15

2.1

High-level Policy Model . . . . . . . . . . . . . . . . . . . . . . . .

15

2.2

Security Objectives and Policy Enforcement Points . . . . . . . . . .

16

2.3

Example Policy Refinements for Protected Resources . . . . . . . . .

18

3

Unified Policy Enforcement for Virtual Data Centers

22

3.1

TVD Infrastructure . . . . . . . . . . . . . . . . . . . . . . . . . . .

22

3.2

Virtual Networking Infrastructure

. . . . . . . . . . . . . . . . . . .

23

3.3

Virtual Storage Infrastructure . . . . . . . . . . . . . . . . . . . . . .

25

3.4

TVD Admission Control . . . . . . . . . . . . . . . . . . . . . . . .

26

4

Background and Related Work

29

4.1

Overview of Trusted Virtual Domains . . . . . . . . . . . . . . . . .

29

4.2

Trusted Computing – The TCG Approach . . . . . . . . . . . . . . .

30

4.3

Trusted Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . .

31

4.4

Secure Network Virtualization . . . . . . . . . . . . . . . . . . . . .

31

II

Building Blocks of the OpenTC Security Architecture

33

5

Policy Enforcement and Compliance

34

5.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

5.2

Formal Integrity Model for Virtual Machines

. . . . . . . . . . . . .

35

5.3

The PEV Integrity Architecture . . . . . . . . . . . . . . . . . . . . .

38

5.4

Realization using Xen and Linux . . . . . . . . . . . . . . . . . . . .

41

5.5

Use Cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

43

5.6

Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

49

3

4

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

6

Hierarchical Integrity Management

51

6.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

51

6.2

Design Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . .

52

6.3

Basic Integrity Management . . . . . . . . . . . . . . . . . . . . . .

54

6.4

Hierarchical Integrity Management . . . . . . . . . . . . . . . . . . .

58

6.5

Policy Verification for Security Services . . . . . . . . . . . . . . . .

63

6.6

Implementation in Xen . . . . . . . . . . . . . . . . . . . . . . . . .

64

6.7

Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

68

7

Secure Virtualized Networking

69

7.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

69

7.2

Design Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . .

70

7.3

Networking Infrastructure

. . . . . . . . . . . . . . . . . . . . . . .

74

7.4

TVD Infrastructure . . . . . . . . . . . . . . . . . . . . . . . . . . .

81

7.5

Auto-deployment of Trusted Virtual Domains . . . . . . . . . . . . .

87

7.6

Implementation in Xen . . . . . . . . . . . . . . . . . . . . . . . . .

92

7.7

Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

95

8

Public Key Infrastructure

96

8.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

96

8.2

Basic Trusted Computing PKI . . . . . . . . . . . . . . . . . . . . .

97

8.3

Trusted Platform Agent . . . . . . . . . . . . . . . . . . . . . . . . .

100

8.4

XKMS mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

102

8.5

Open Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

110

9

Trusted Channels using OpenSSL

112

9.1

Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

112

9.2

Requirement Analysis . . . . . . . . . . . . . . . . . . . . . . . . . .

114

9.3

Basic Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . .

115

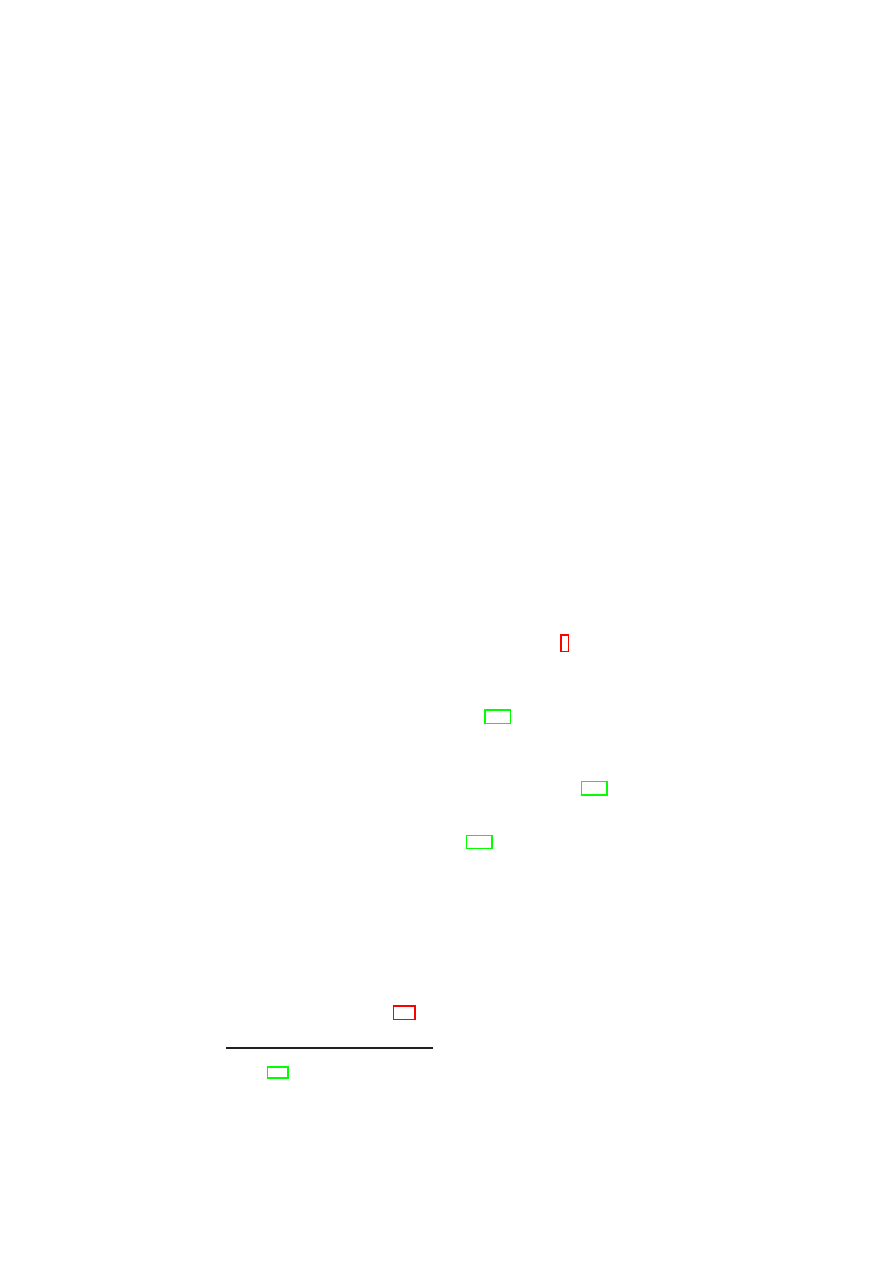

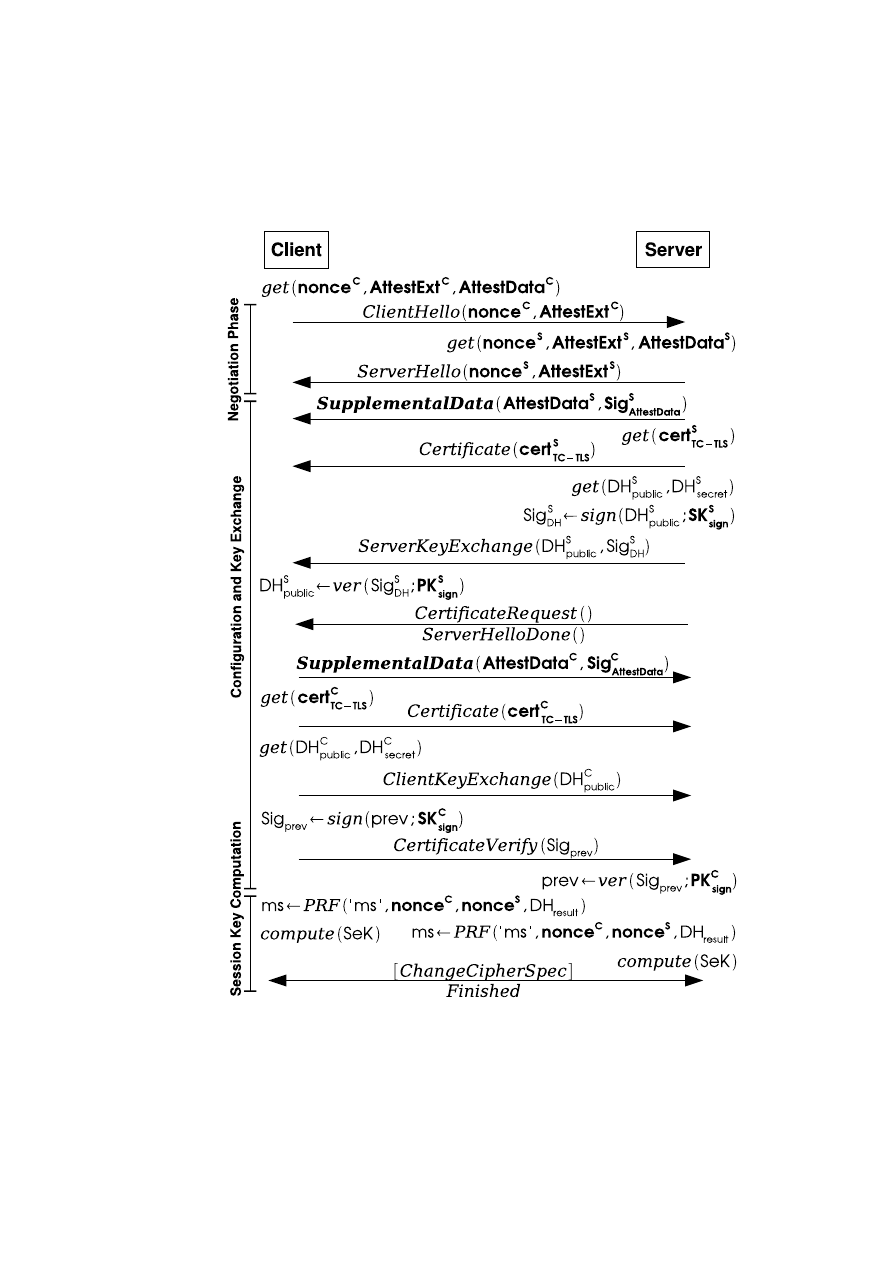

9.4

Adapted TLS Handshake . . . . . . . . . . . . . . . . . . . . . . . .

116

9.5

Detailed Description of Attestation Data Structures . . . . . . . . . .

119

9.6

Generic System Architecture . . . . . . . . . . . . . . . . . . . . . .

122

9.7

A Trusted Channel Implementation with OpenSSL . . . . . . . . . .

124

9.8

Security Considerations . . . . . . . . . . . . . . . . . . . . . . . . .

127

9.9

Functional Considerations . . . . . . . . . . . . . . . . . . . . . . .

128

9.10 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

129

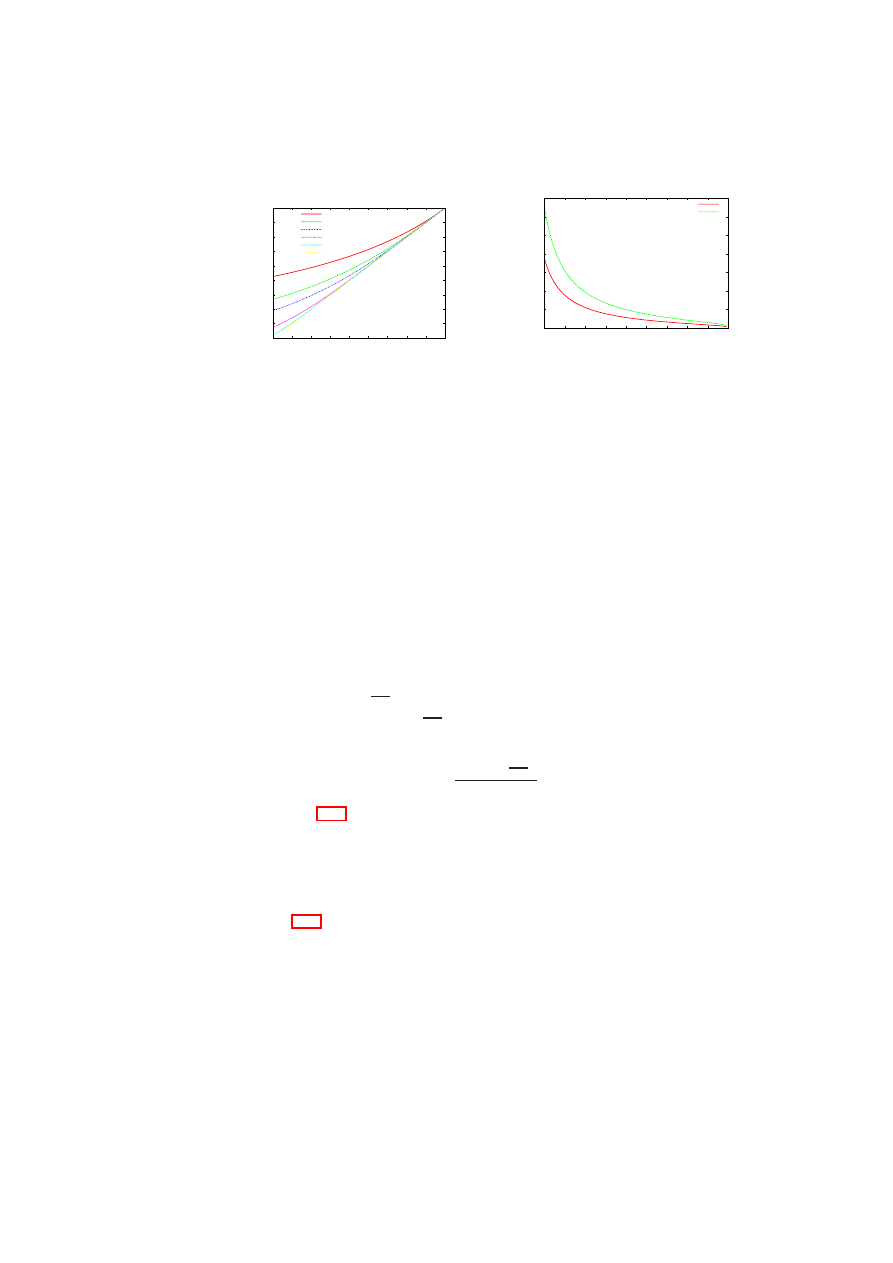

10 Towards Dependability and Attack Resistance

130

10.1 Introduction to Dependable Virtualization . . . . . . . . . . . . . . .

130

10.2 Using Virtualization for Dependability and Security . . . . . . . . . .

131

10.3 Xen-based Implementation of Intrusion Detection and Protection . . .

134

10.4 Quantifying the Impact of Virtualization on Node Reliability . . . . .

143

10.5 An Architecture for a More Reliable Xen VMM . . . . . . . . . . . .

147

III

Evaluation and Outlook

151

11 Security Management Demonstrator

152

11.1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

152

11.2 Security Services . . . . . . . . . . . . . . . . . . . . . . . . . . . .

153

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CONTENTS

5

11.3 TVD Master, Proxies and Proxy Factories . . . . . . . . . . . . . . .

155

11.4 Secure Virtual Network subsystem . . . . . . . . . . . . . . . . . . .

155

11.5 Trusted Channel Proxies . . . . . . . . . . . . . . . . . . . . . . . .

156

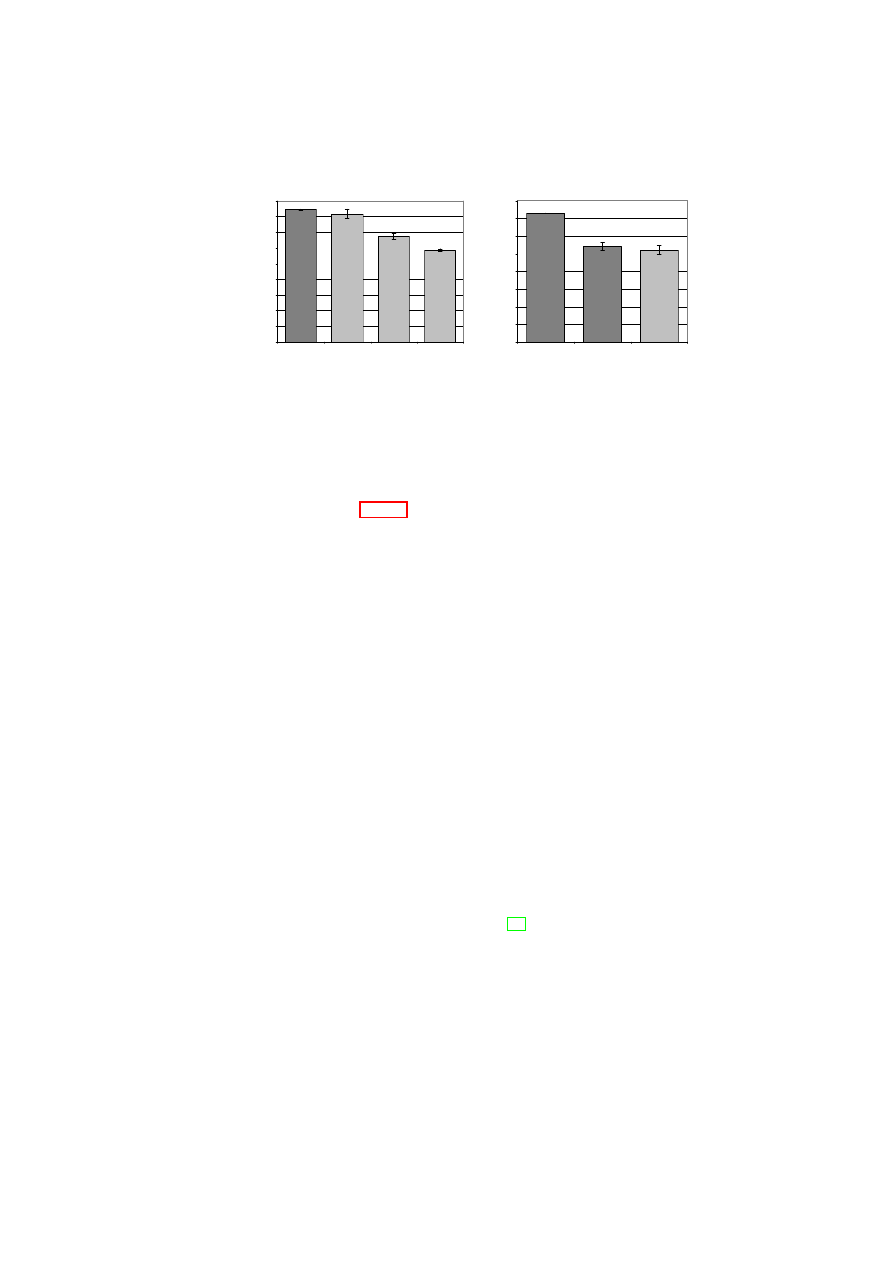

12 Evaluation of the Prototype

157

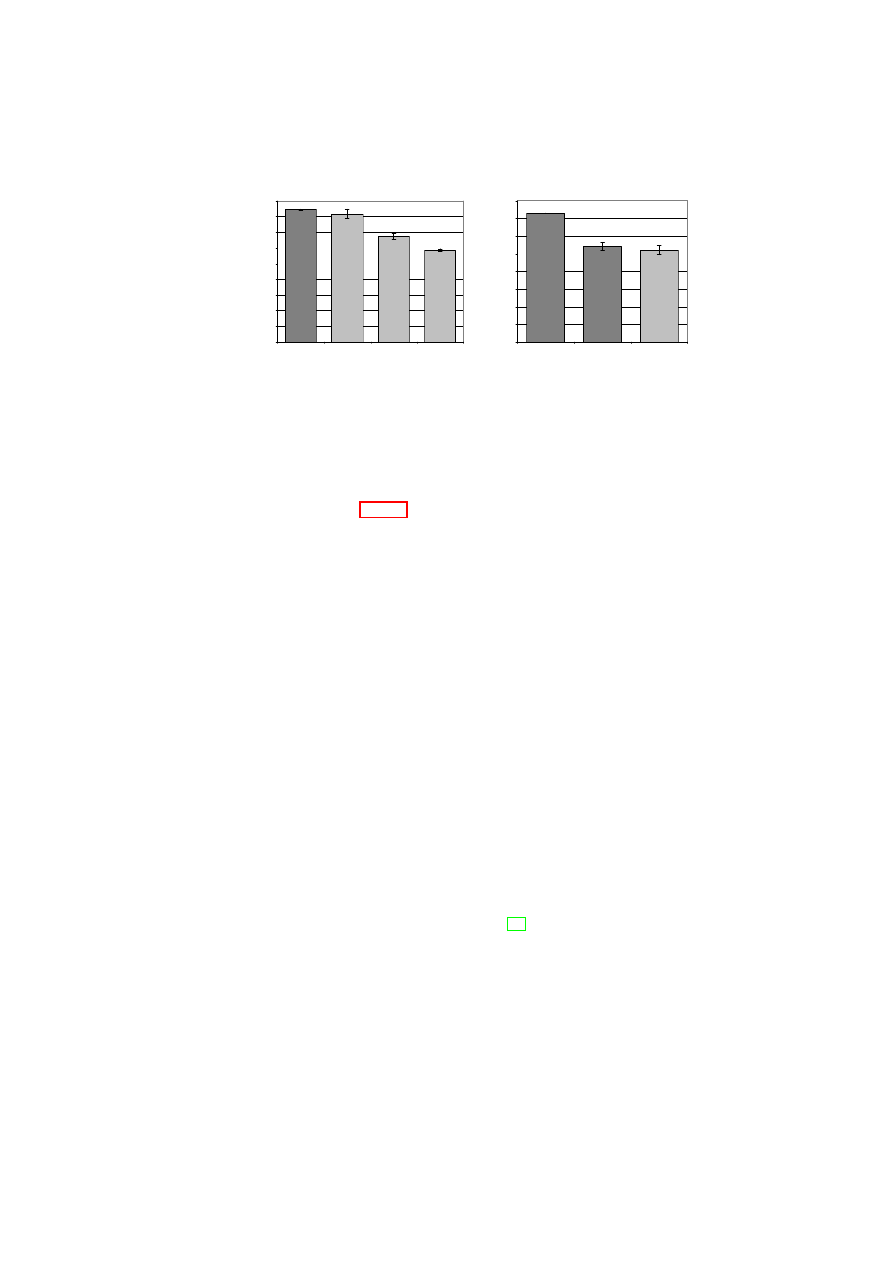

12.1 Performance Evaluation . . . . . . . . . . . . . . . . . . . . . . . . .

157

12.2 Limitations of our Prototype . . . . . . . . . . . . . . . . . . . . . .

159

13 Conclusion and Outlook

161

13.1 Lessons Learned

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

161

13.2 The Future of Secure Virtualization and Trusted Computing . . . . . .

162

13.3 Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

164

Bibliography

165

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

6

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

Chapter 1

Introduction and Outline

1.1

Introduction

Hardware virtualization is enjoying a resurgence of interest fueled in part by its cost-

saving potential. By allowing multiple virtual machines to be hosted on a single phys-

ical server, virtualization helps improve server utilization, reduce management and

power costs, and control the problem of server sprawl.

A prominent example in this context is data centers. The infrastructure provider,

who owns, runs, and manages the data center, can transfer the cost savings to its cus-

tomers or outsourcing companies, whose virtual infrastructures are hosted on the data

center’s physical resources. A large number of the companies that outsource their op-

erations are small and medium businesses or SMBs, which cannot afford the costs of a

dedicated data center in which all the data center’s resources are used to host a single

company’s IT infrastructure. Hence, the IT infrastructure belonging to multiple SMBs

may be hosted inside the same data center facility. Today, even in such “shared” data

centers, each run on distinct physical resources and there is no resource sharing among

various customers. In this so-called physical cages model, the customers are physically

isolated from each other in the same data center.

Limited trust in the security of virtual datacenters is one major reason for customers

not sharing physical resources. Since management is usually performed manually, ad-

ministrative errors are commonplace. While this may lead to down times in virtual

datacenters used by a single customer, it can lead to information leakages to competi-

tors if the datacenter is shared. Furthermore, multiple organizations will only allow

sharing of physical resources if they can trust that security incidents cannot spread

across the isolation boundary separating two customers.

Security Objectives

Our main security objective is to provide isolation among dif-

ferent domains that is comparable

1

with the isolation obtained by providing one infras-

tructure for each customer. In particular, we require a security architecture that protects

those system components that provide the required isolation or allow to verifiably rea-

son about their trustworthiness of and also of any peer endpoint (local or remote) with

a domain, i.e., whether they conforms to the underlying security policy.

We achieve this by grouping VMs dispersed across multiple physical resources

into a virtual zone in which customer-specified security requirements are automatically

1

Note that unlike physical isolation, we do not solve the problem of covert channels.

7

8

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

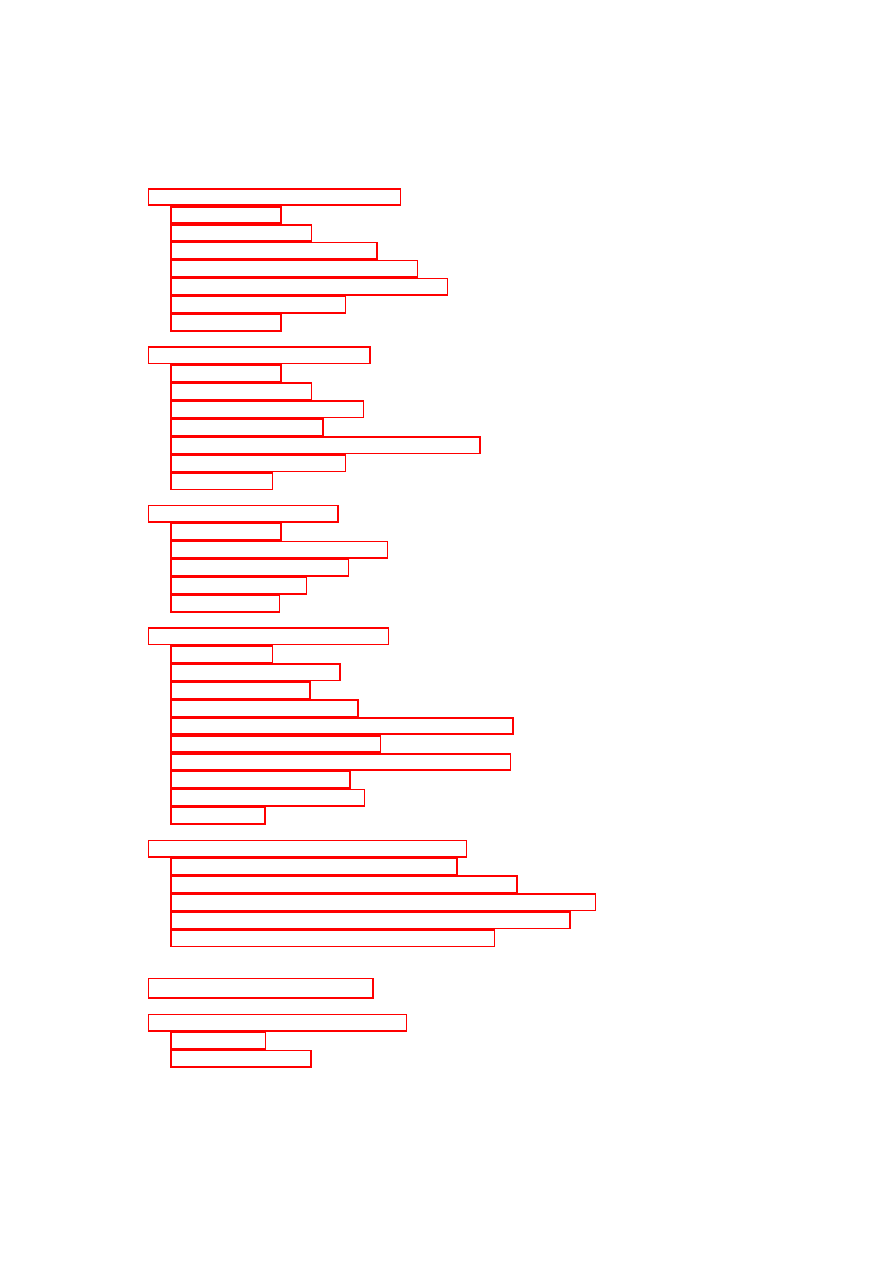

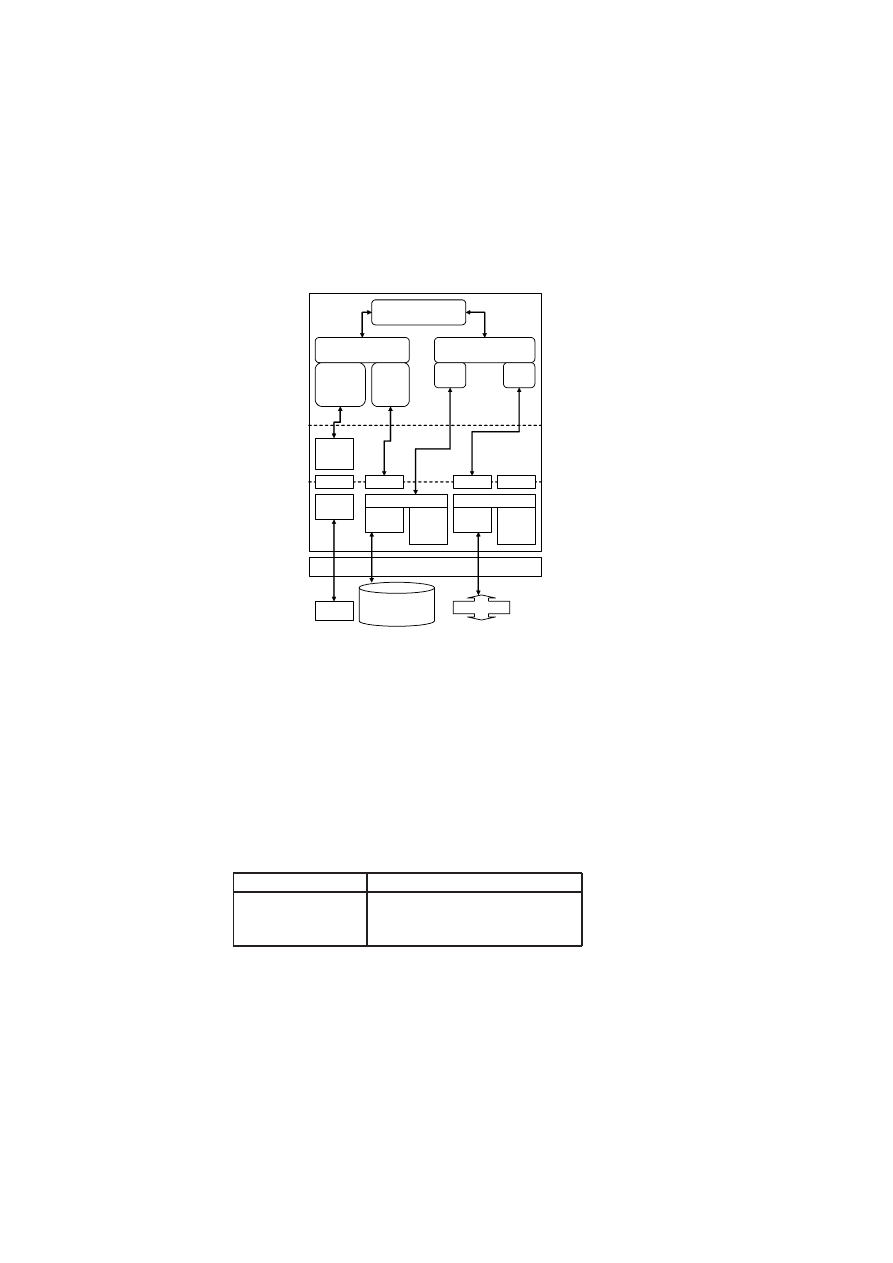

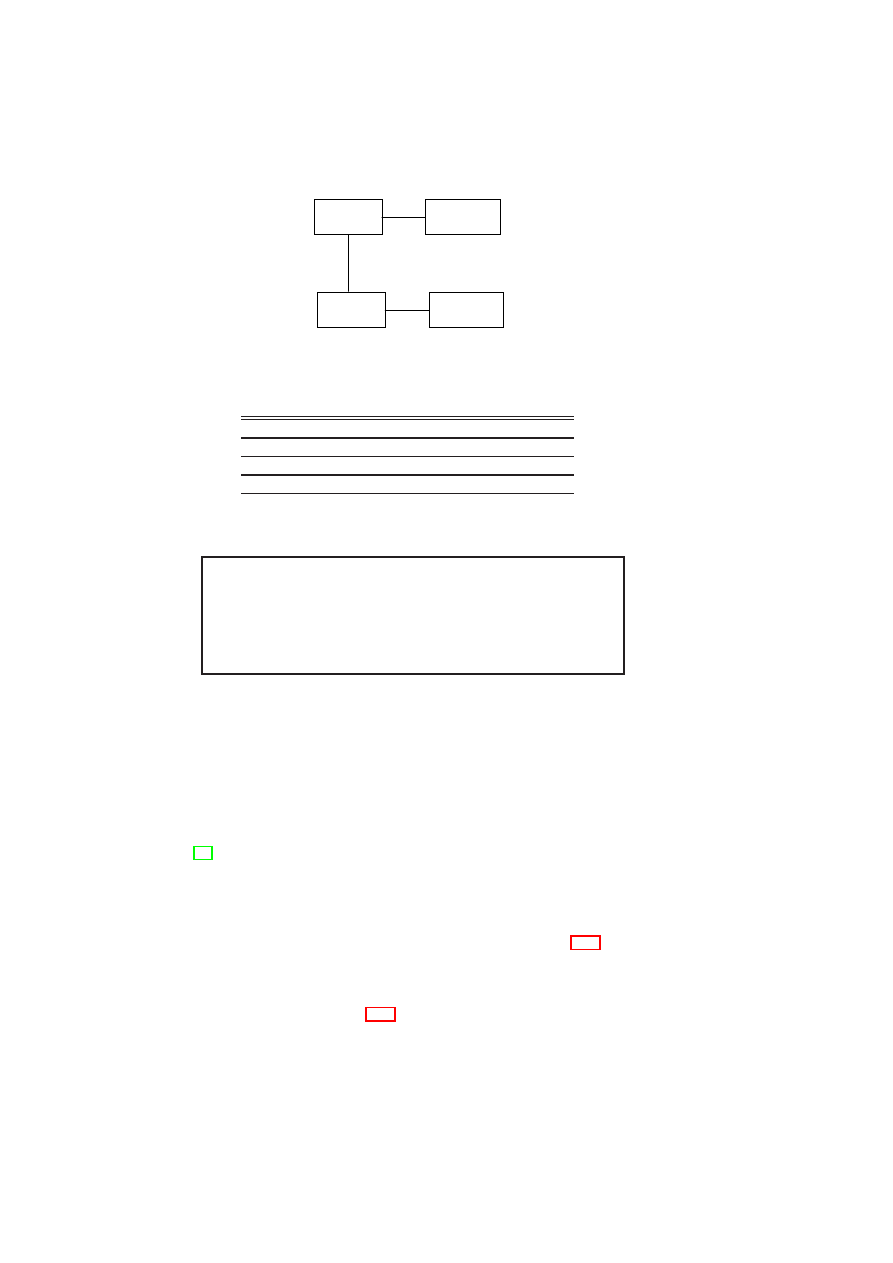

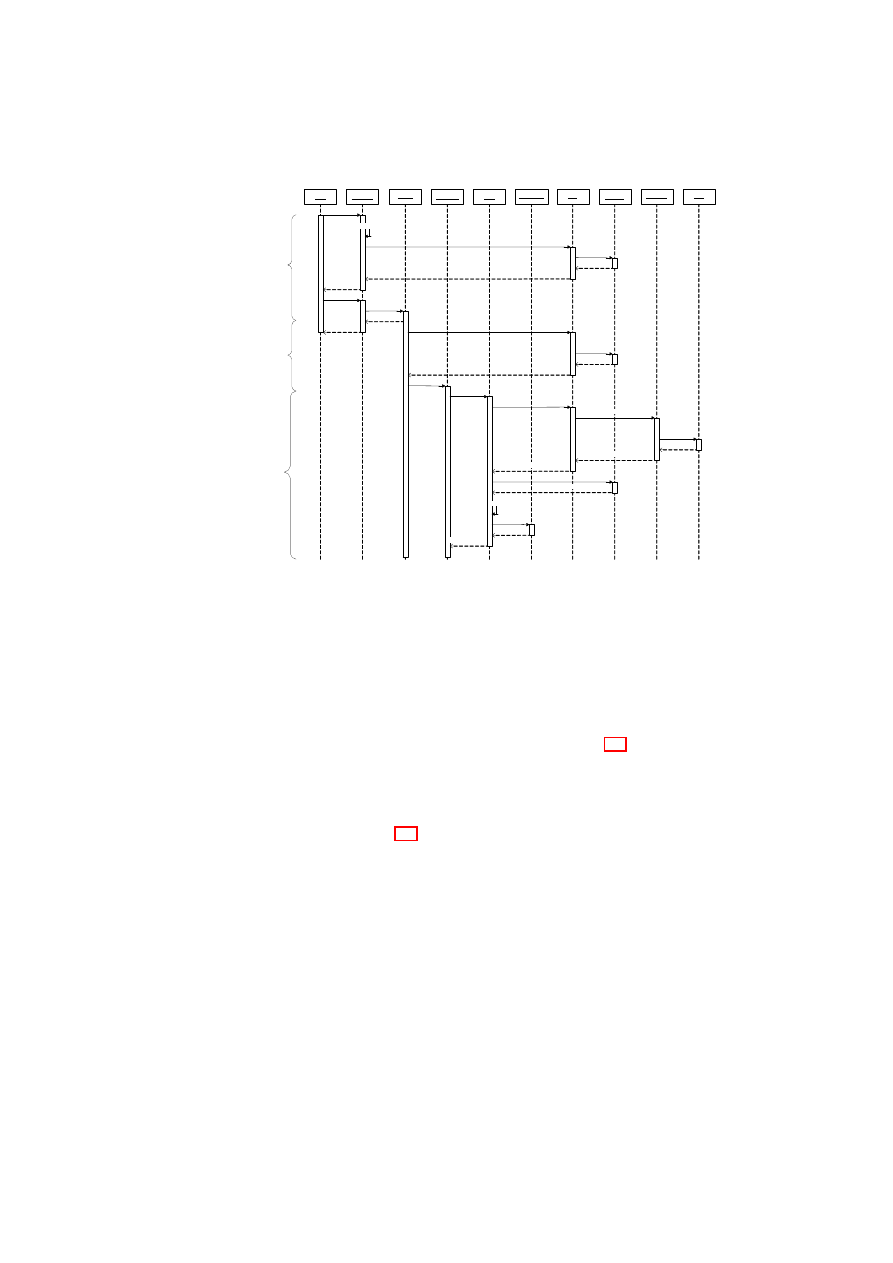

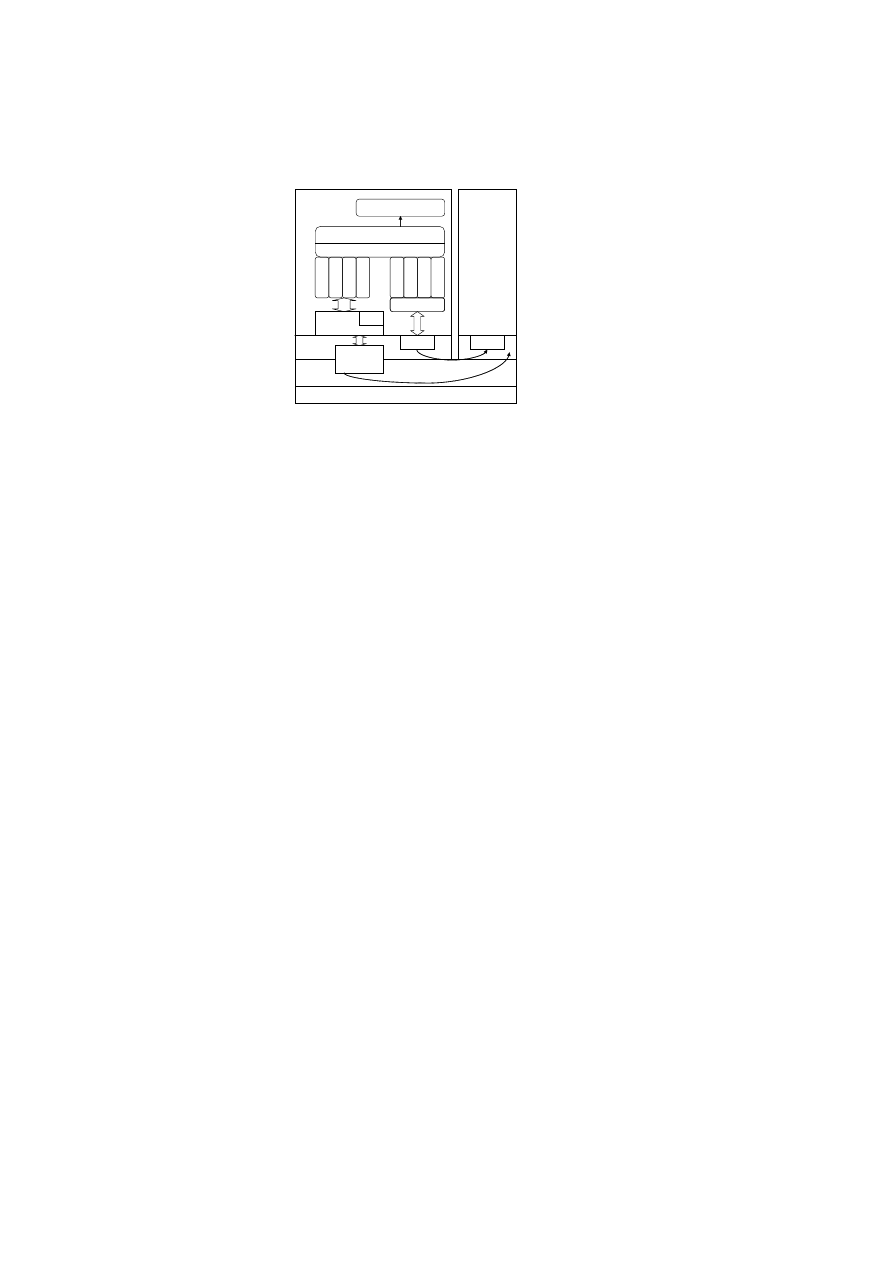

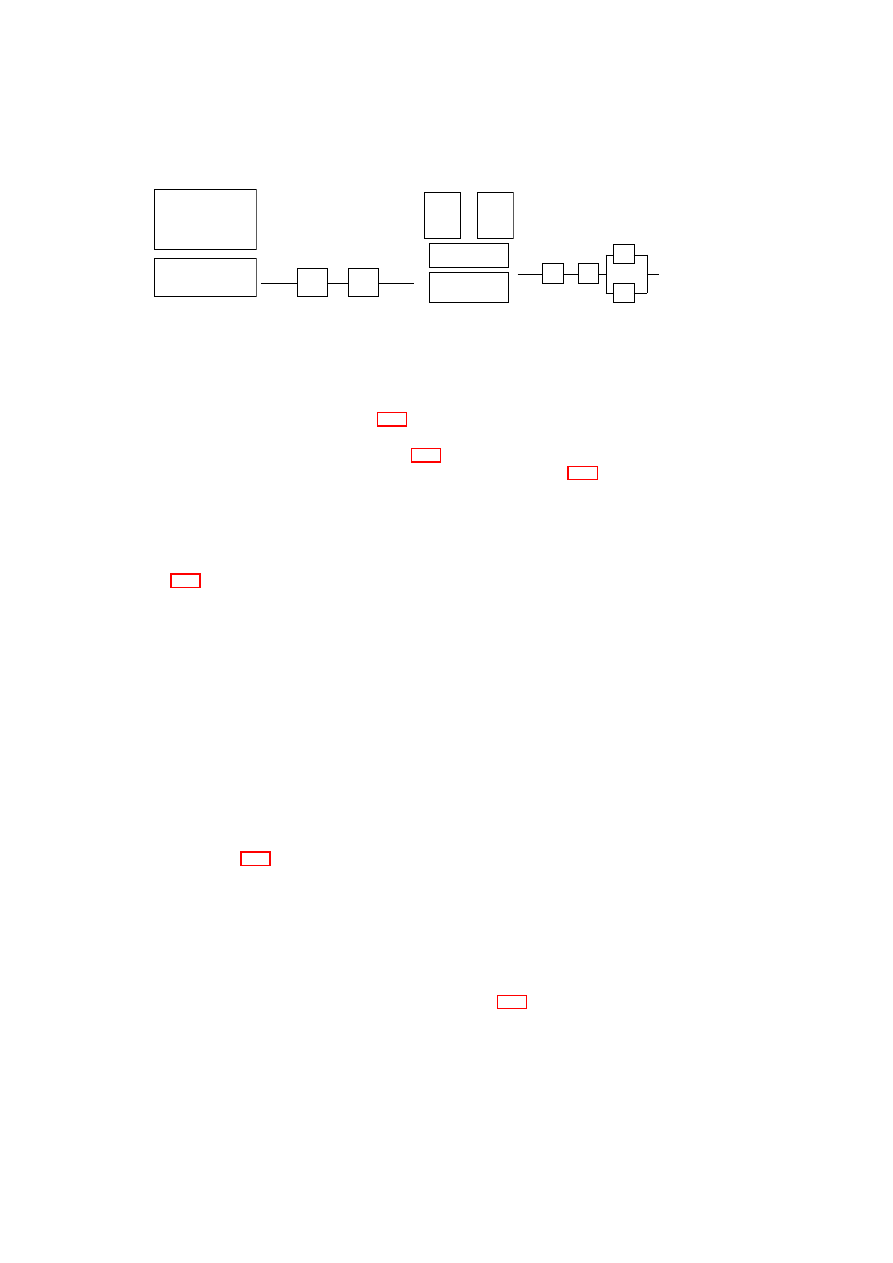

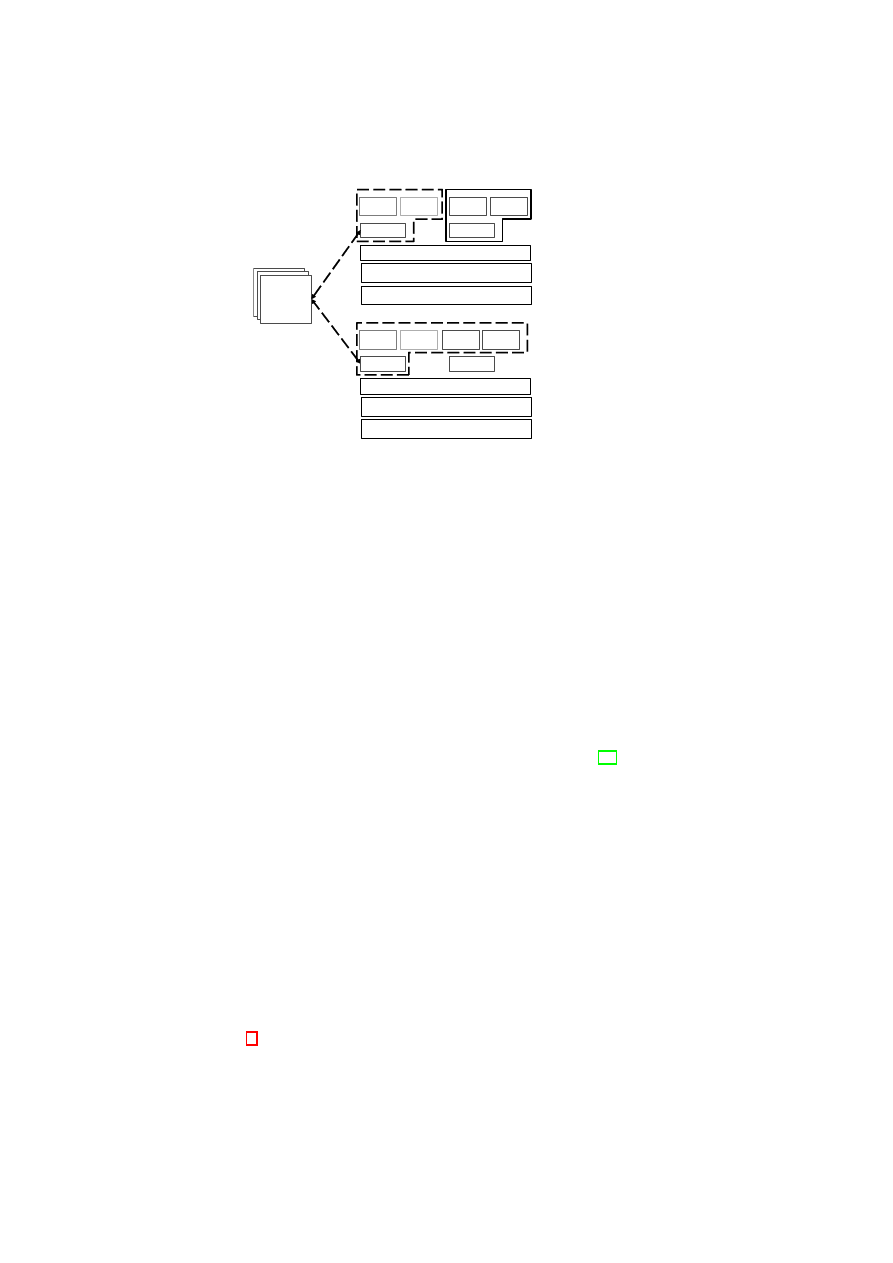

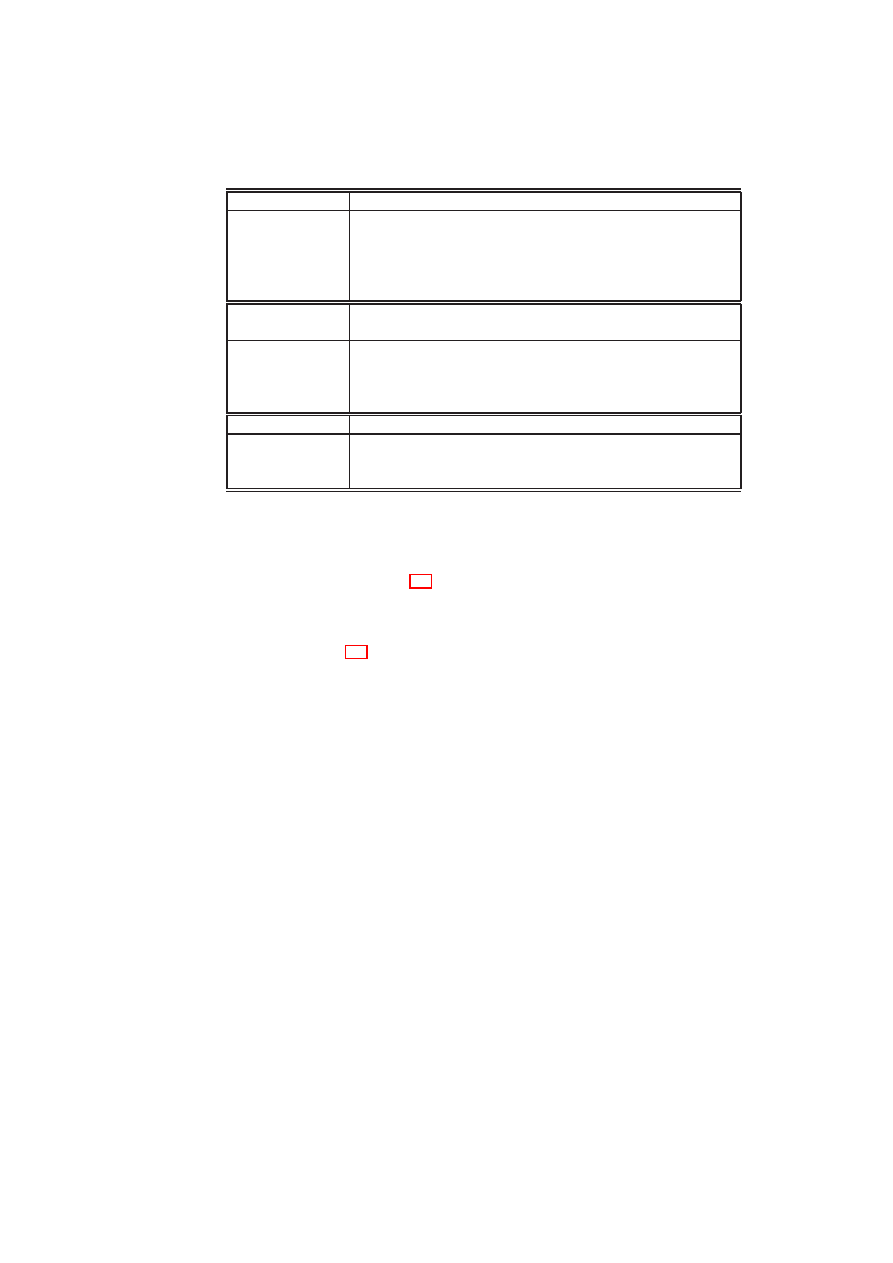

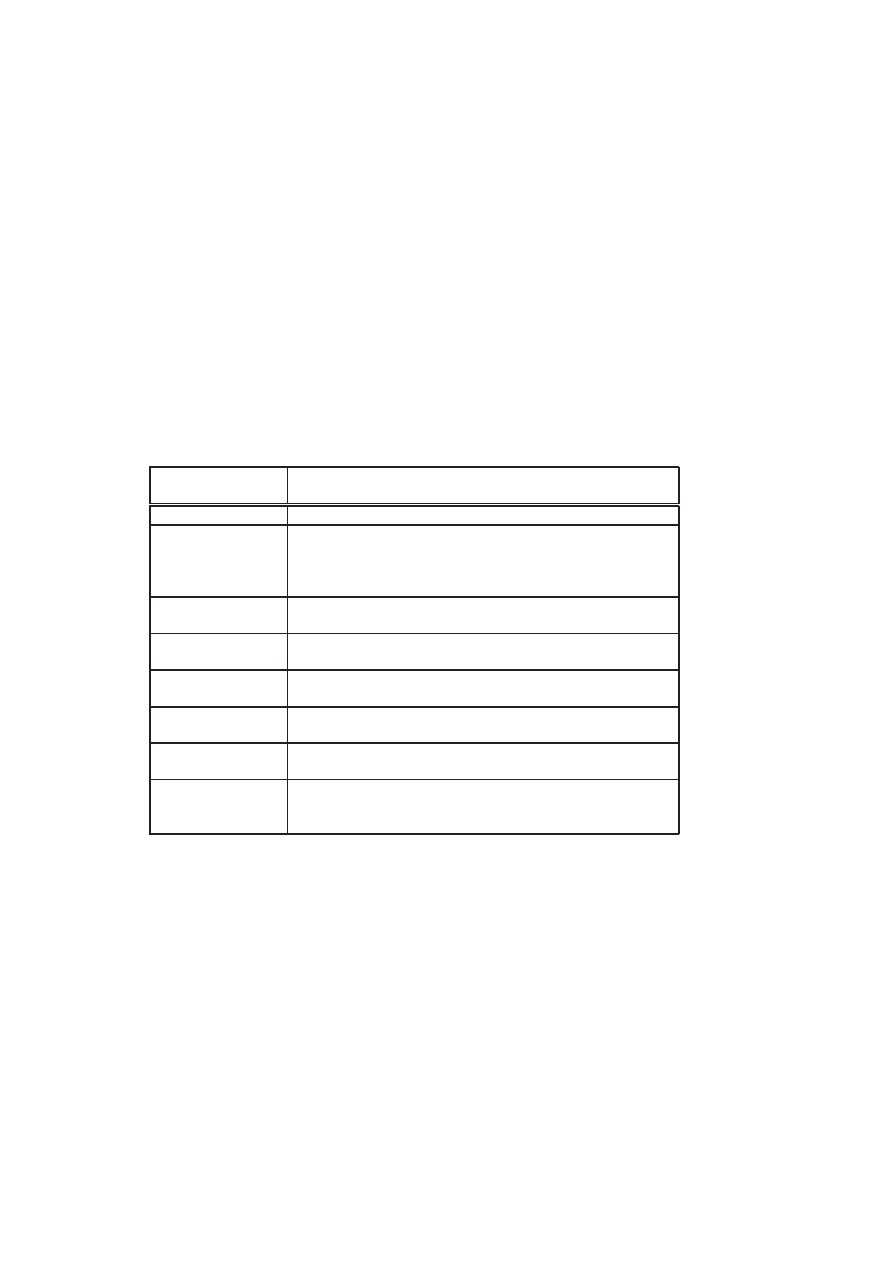

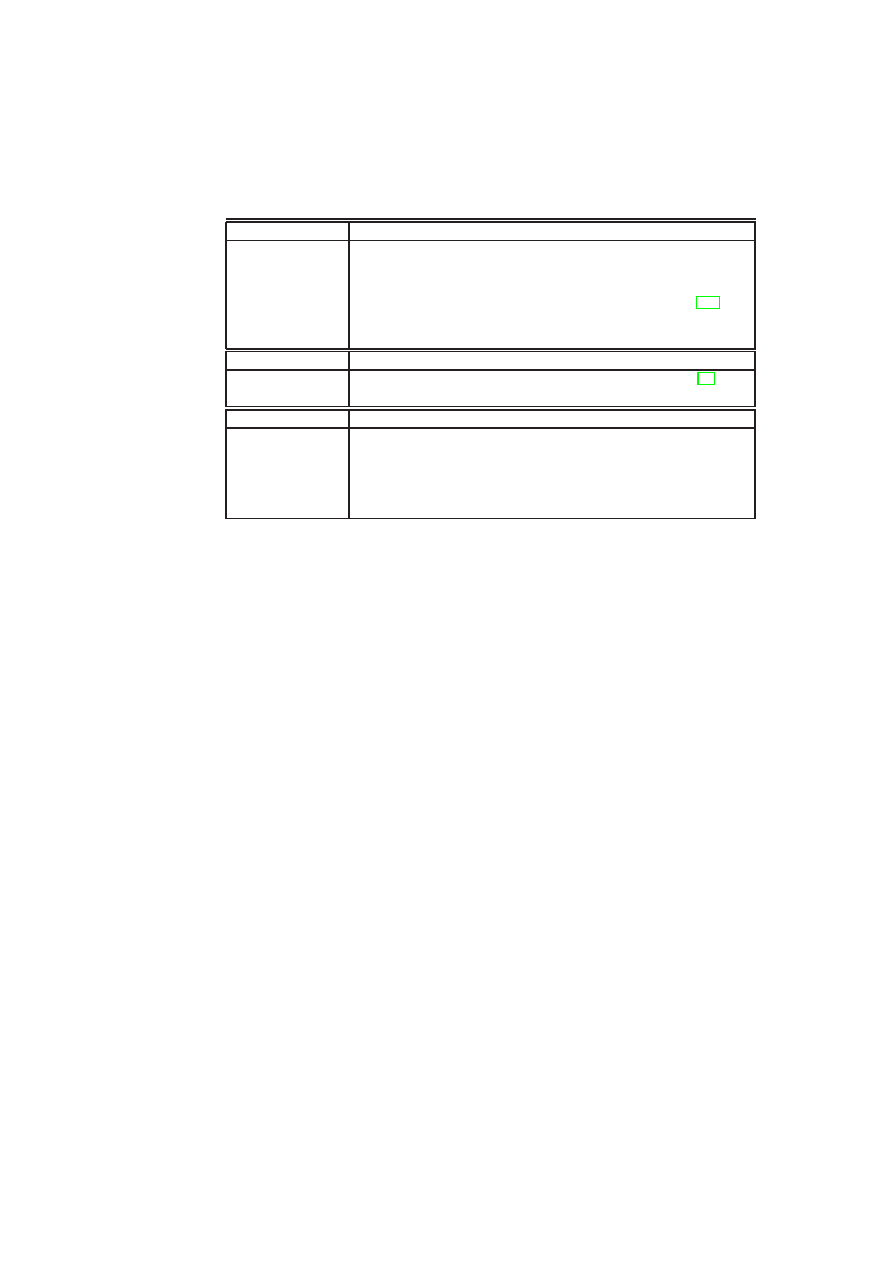

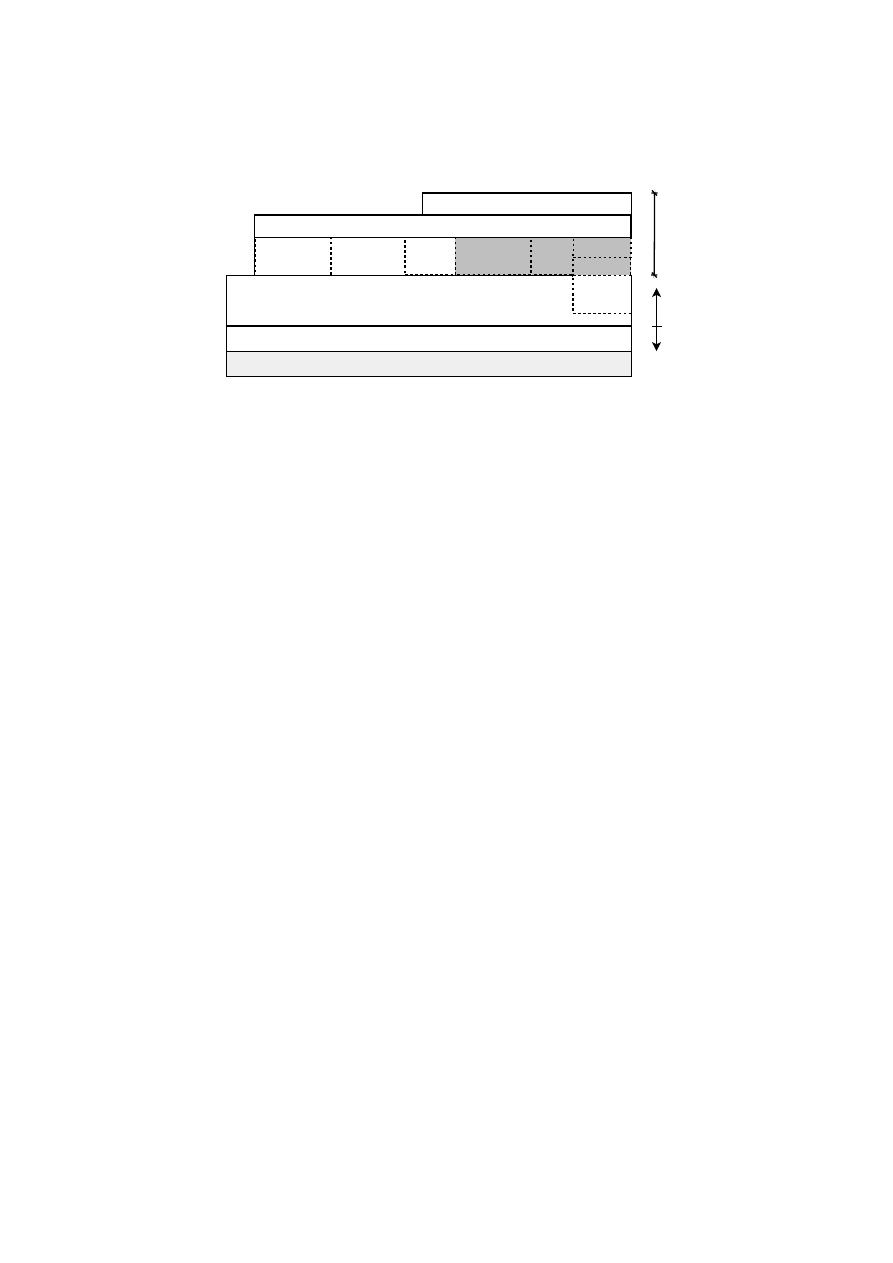

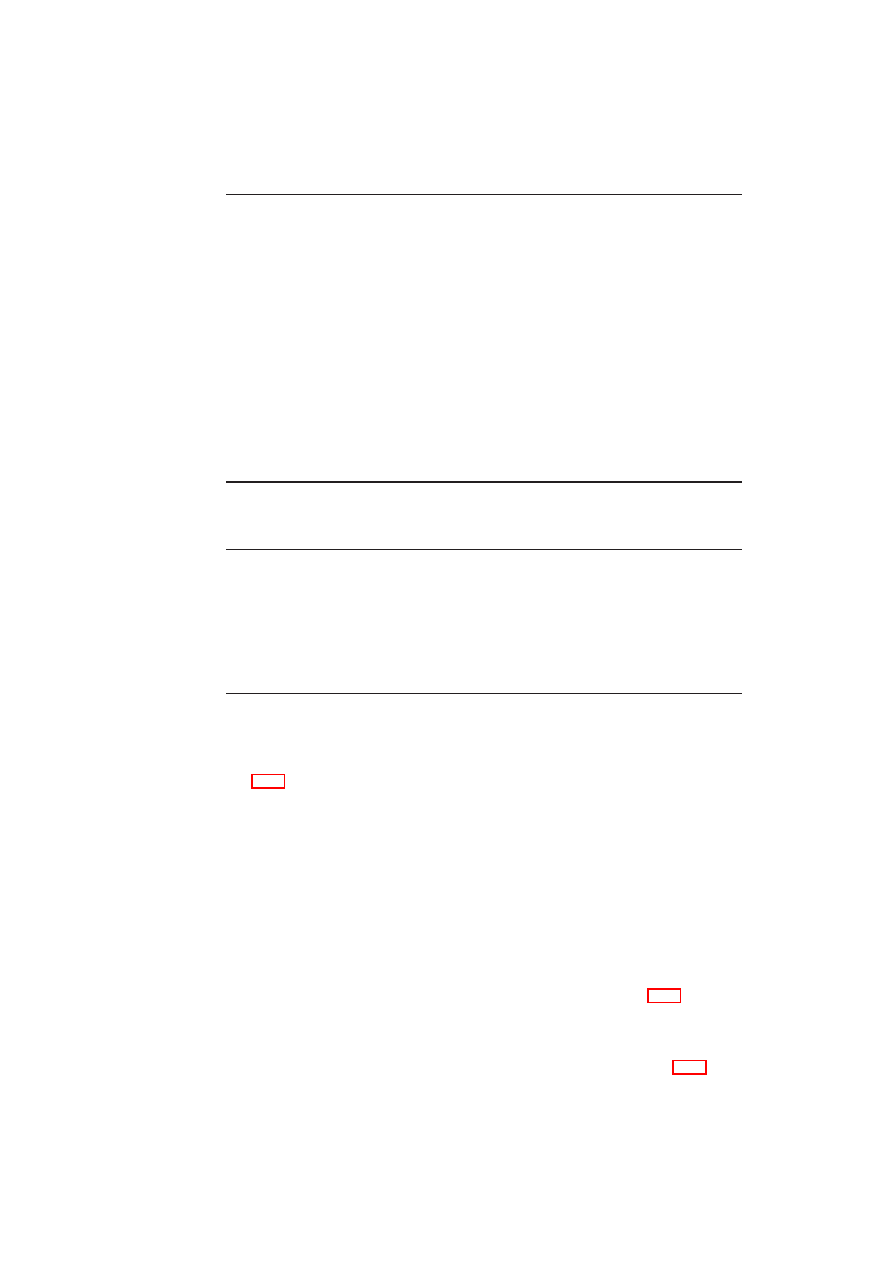

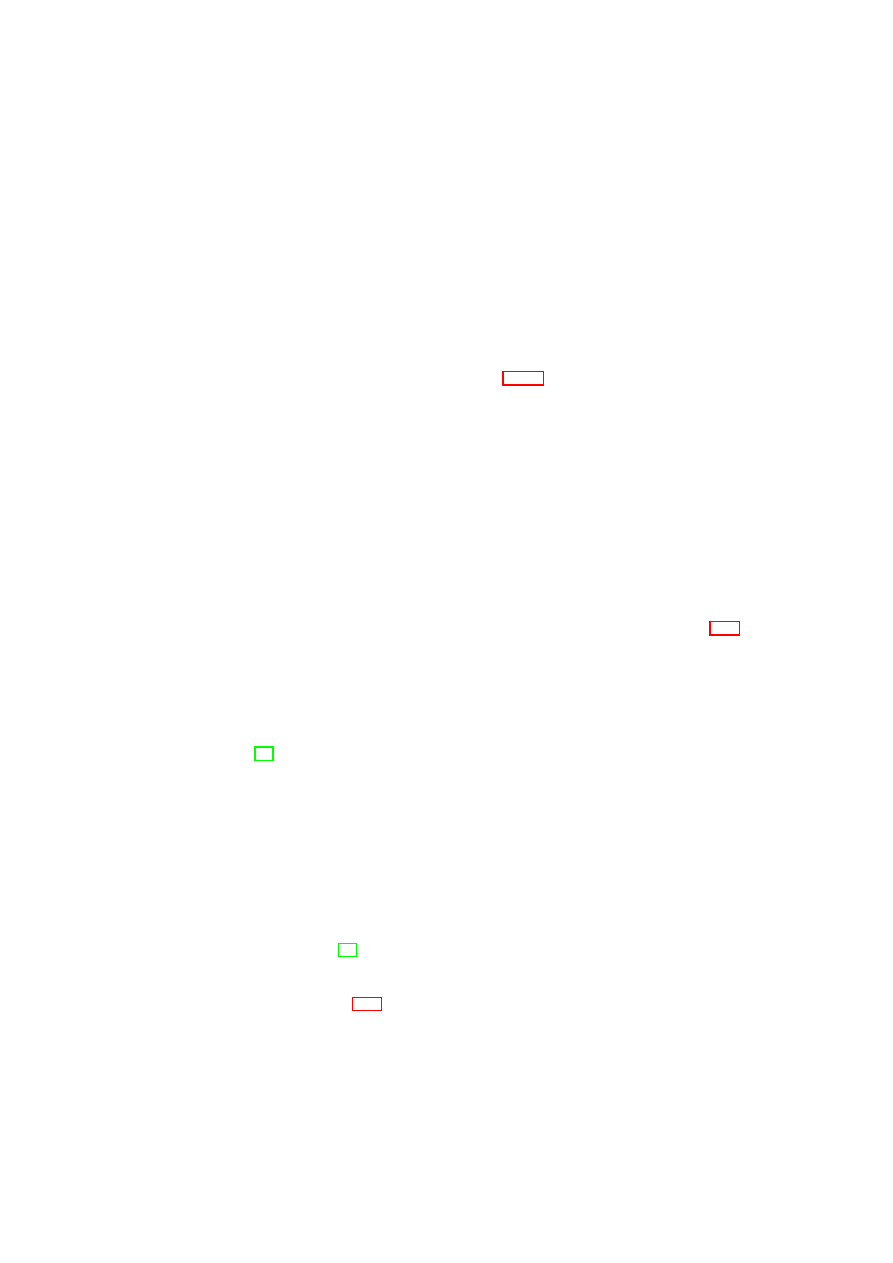

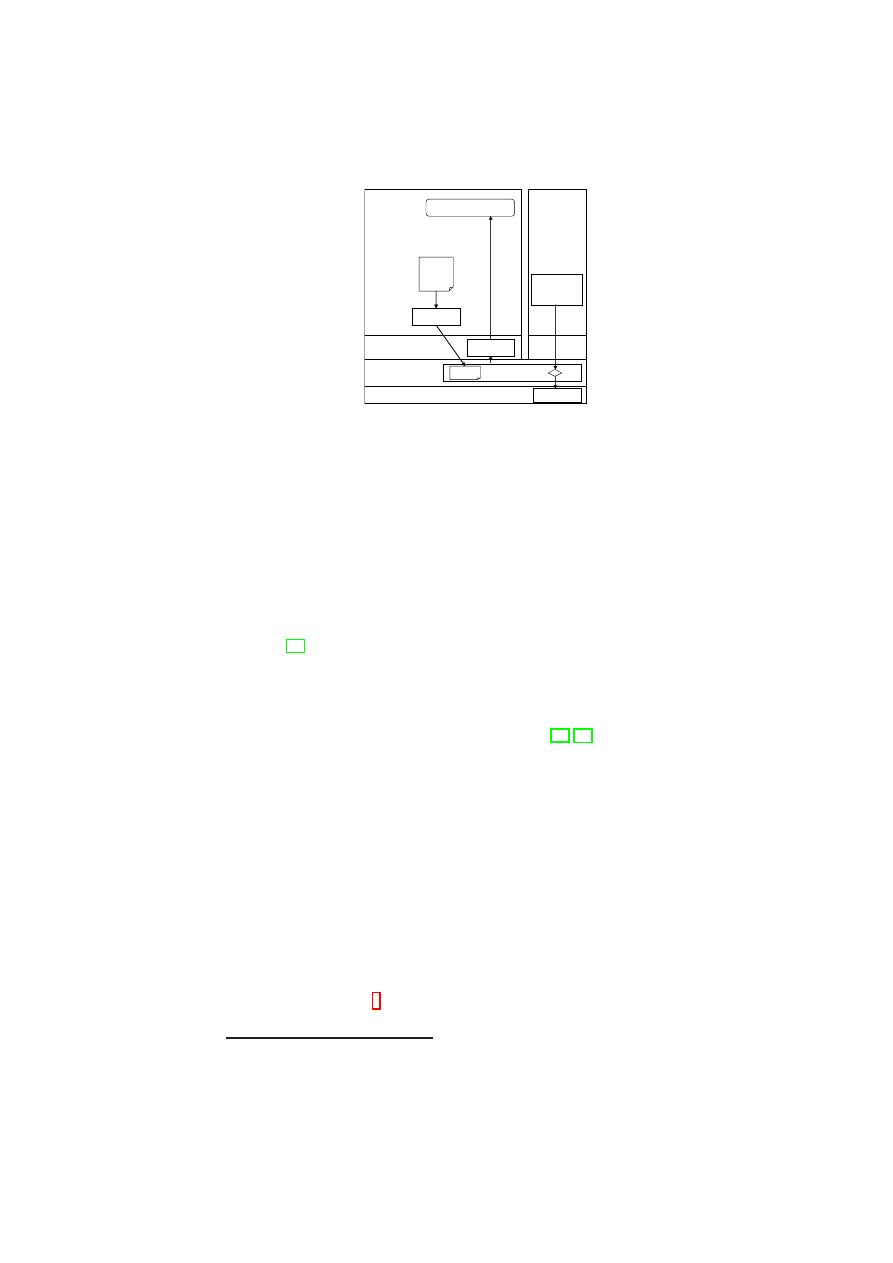

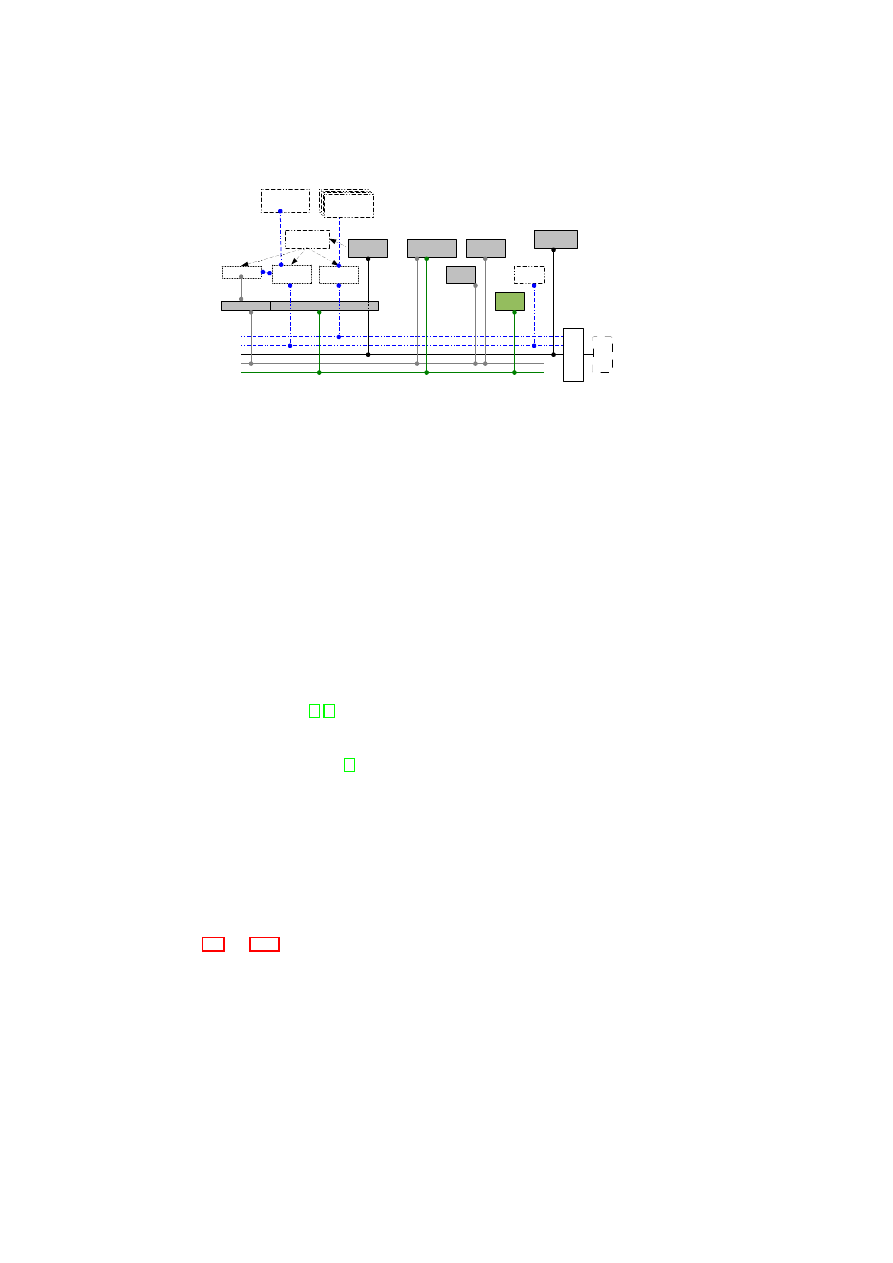

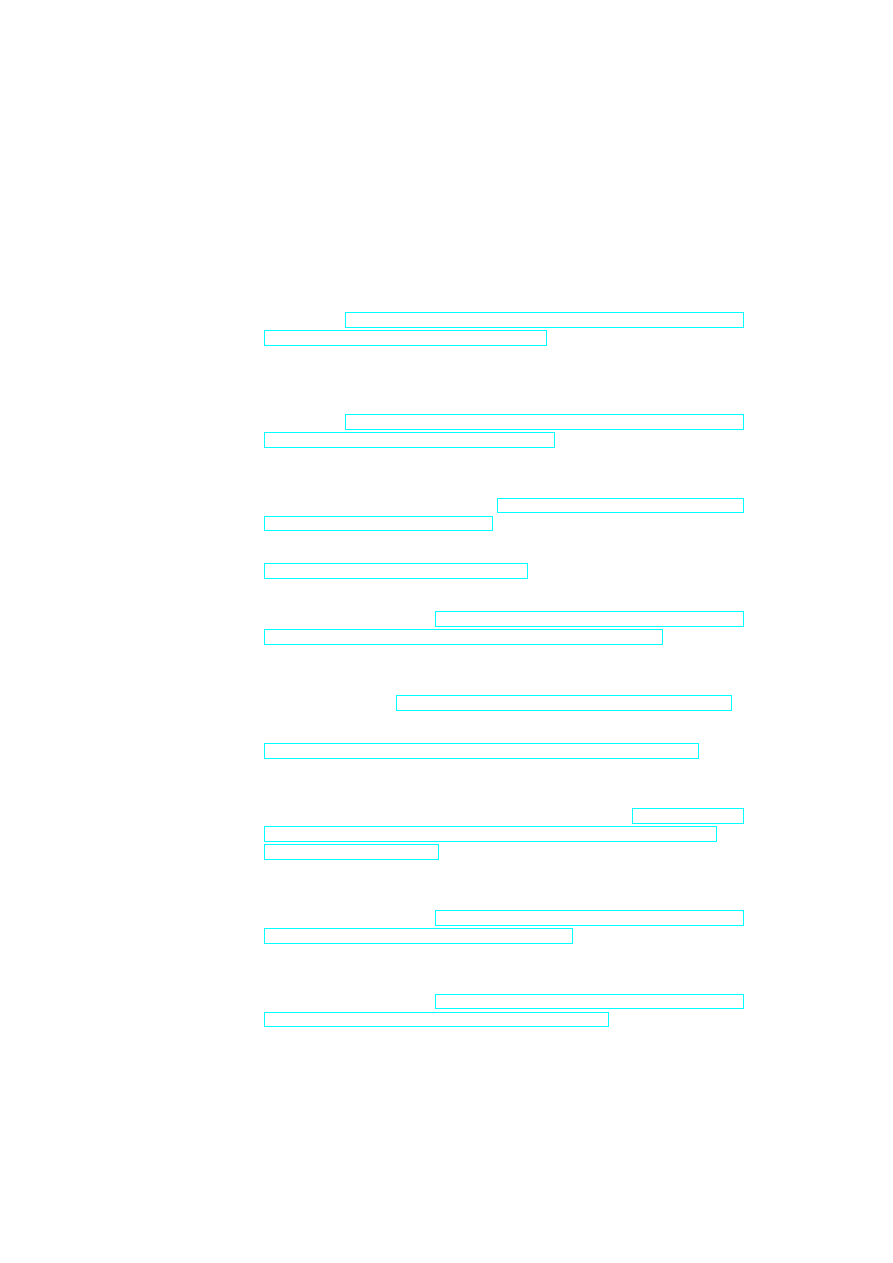

Hardware Platform

Hypervisor

Security Services

VM

A1

VM

A2

VM

A3

VM

A4

TVD1

Master

TVD1

Master

TVD1

Master

Proxy1

…

Proxy2

Hardware Platform

Hypervisor

Security Services

VM

B1

VM

B2

VM

B3

VM

B4

Proxy1

…

Proxy2

H

o

s

t

B

H

o

s

t

A

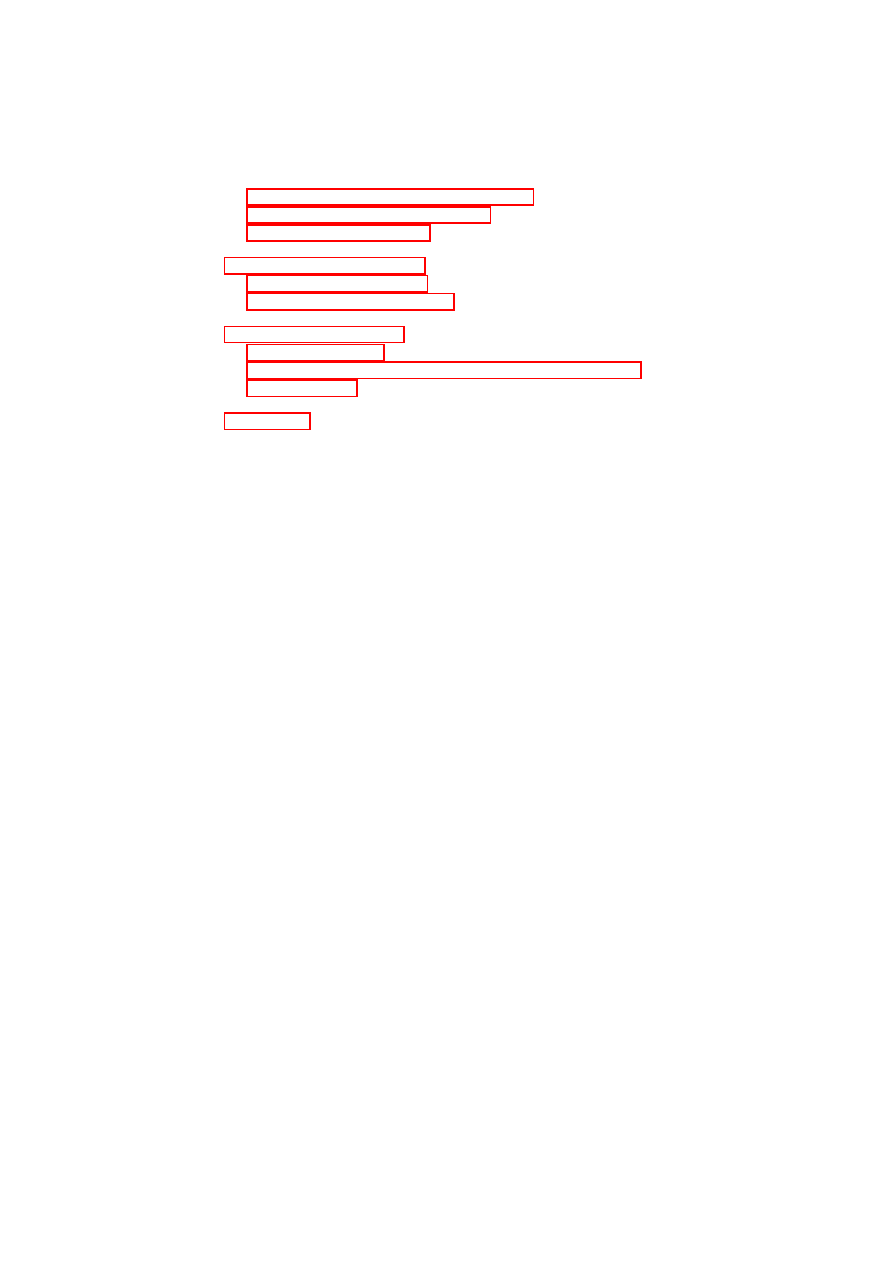

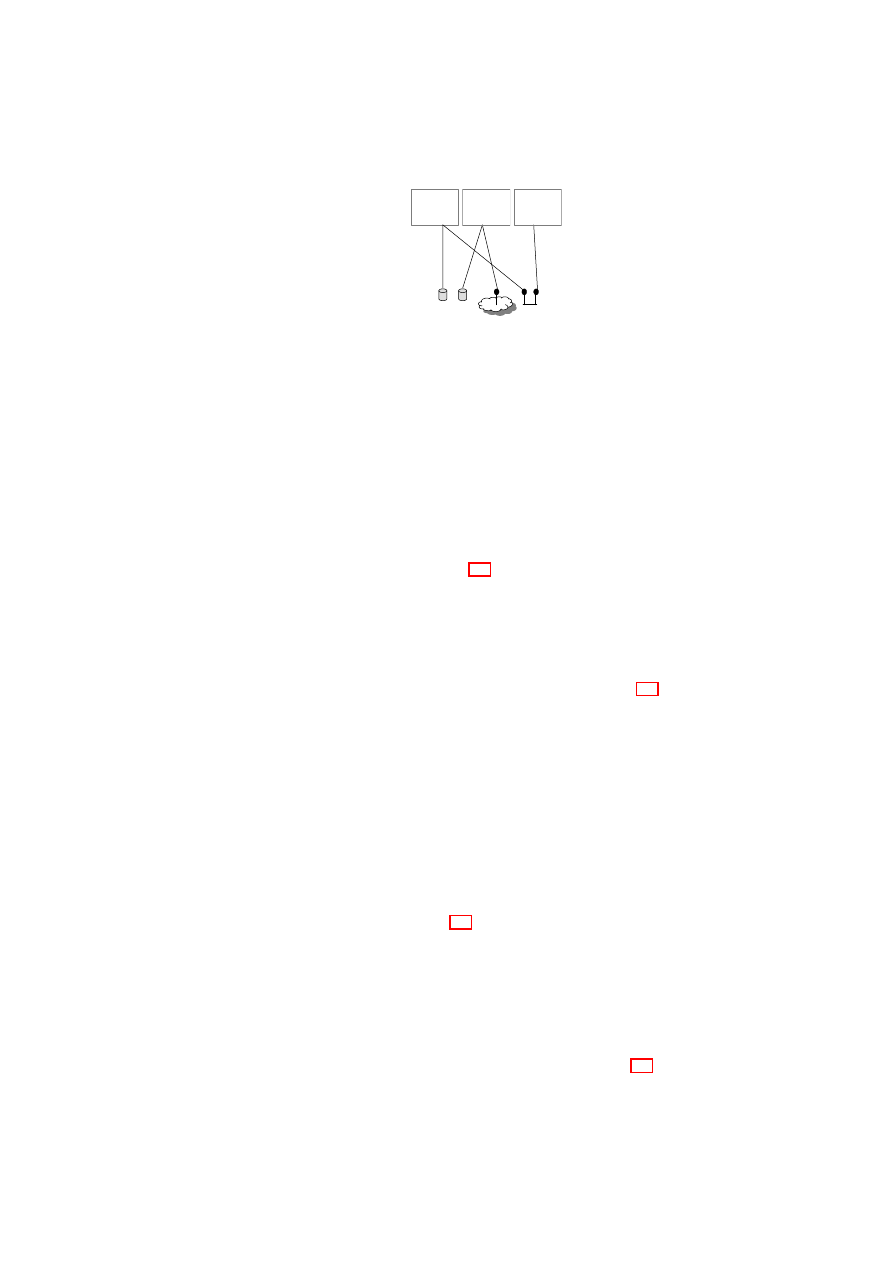

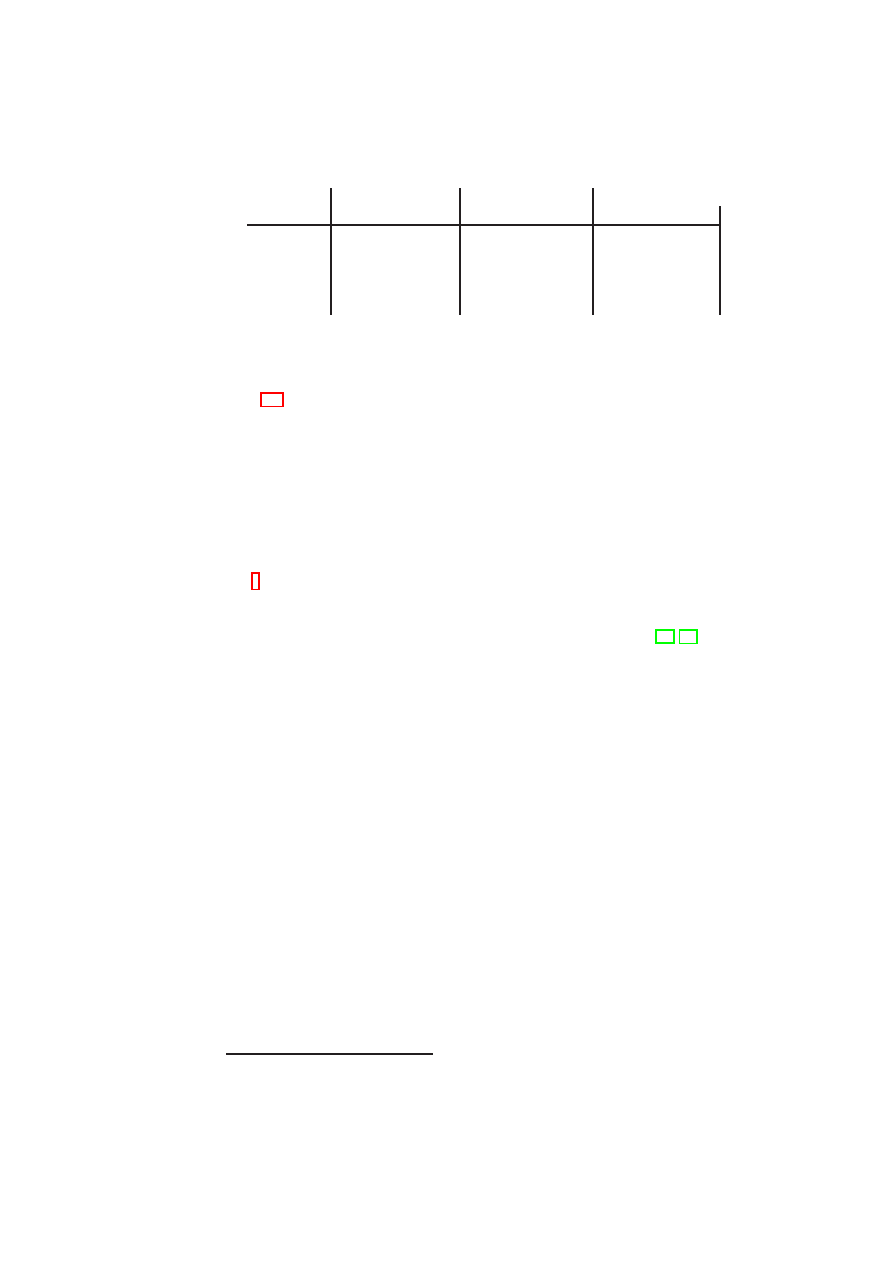

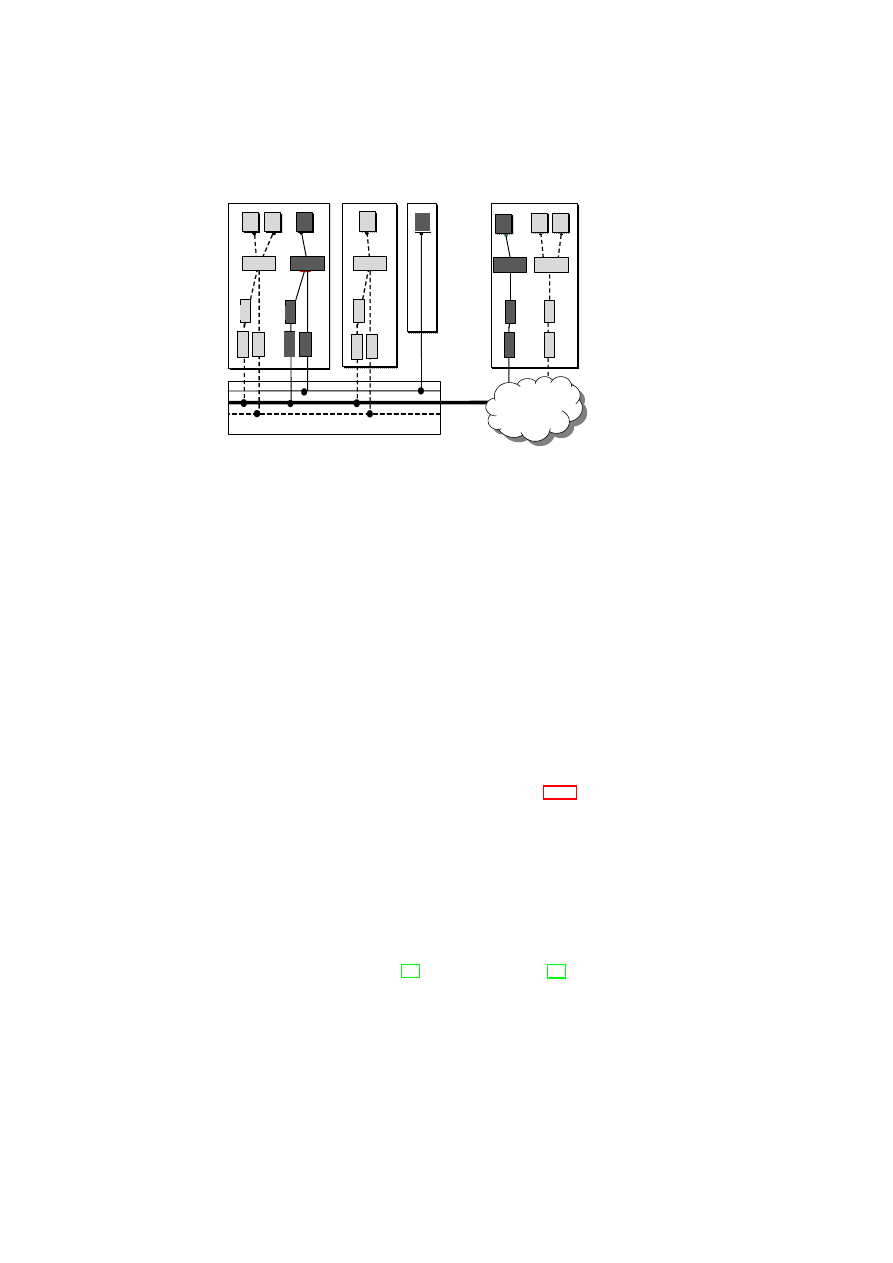

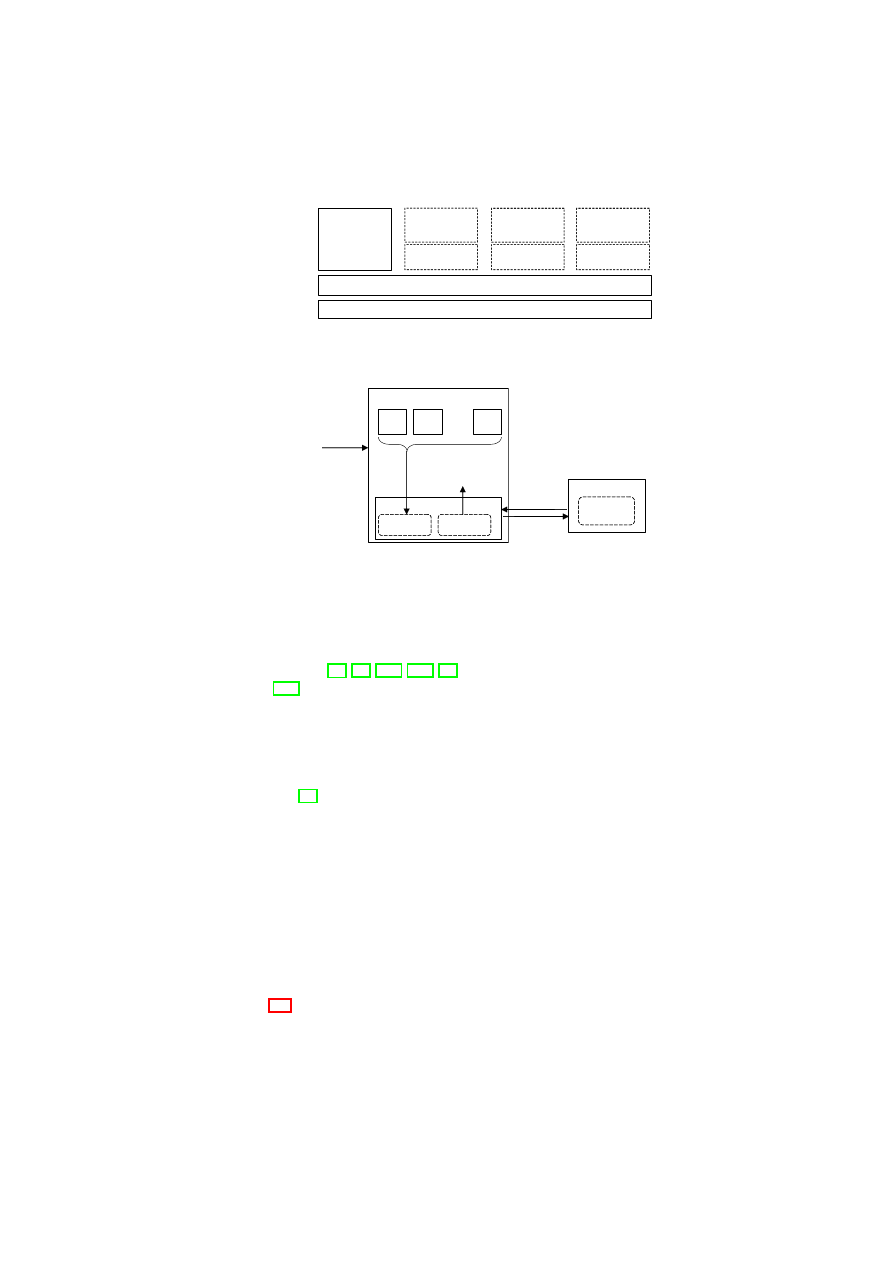

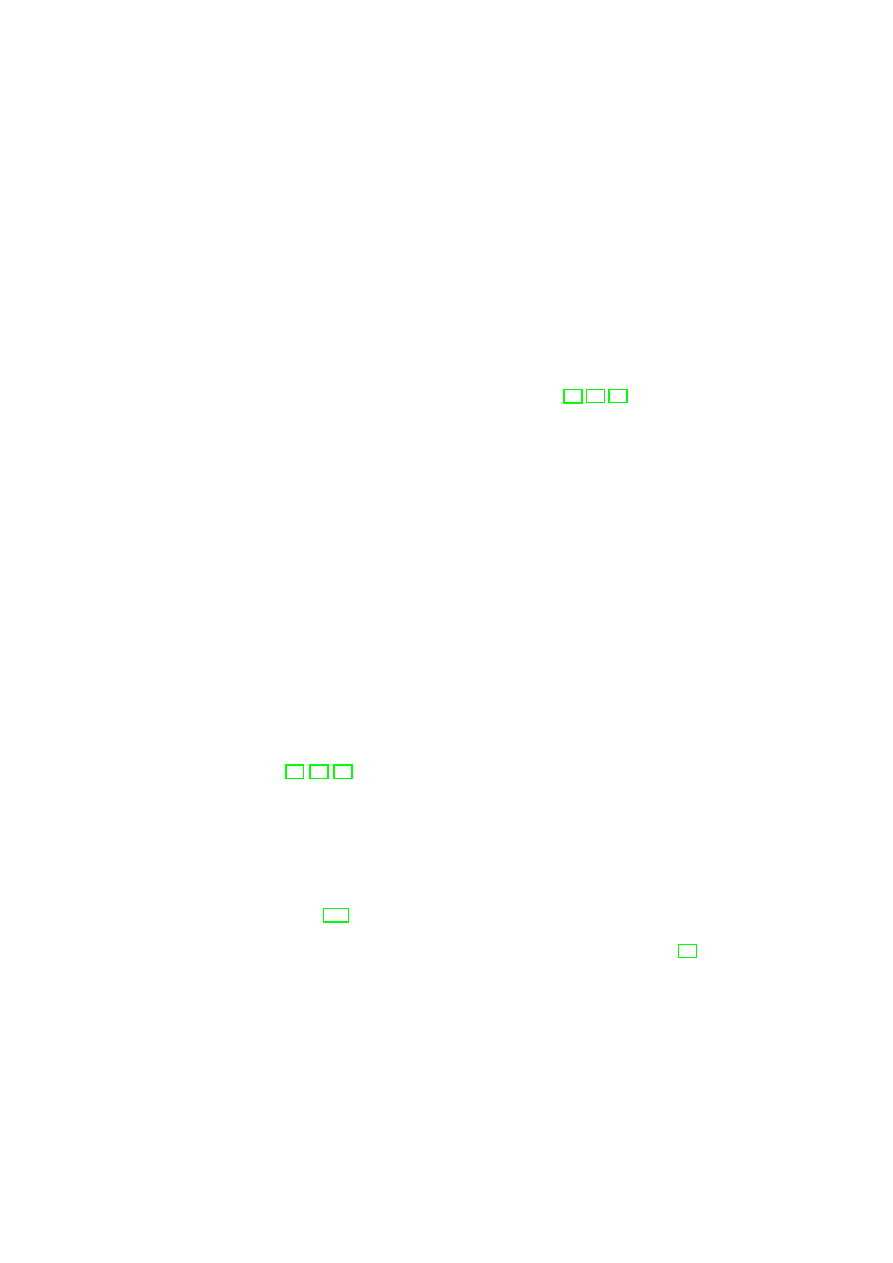

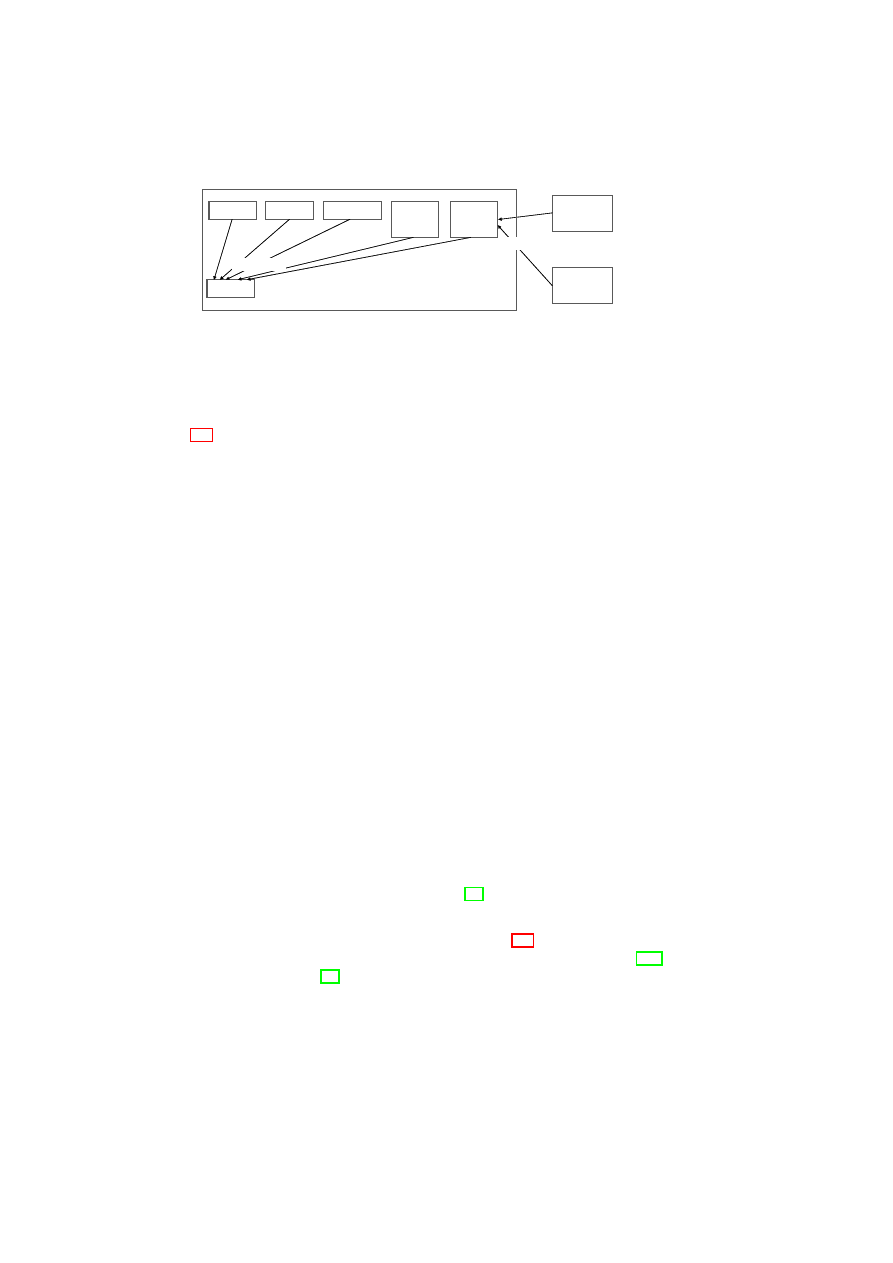

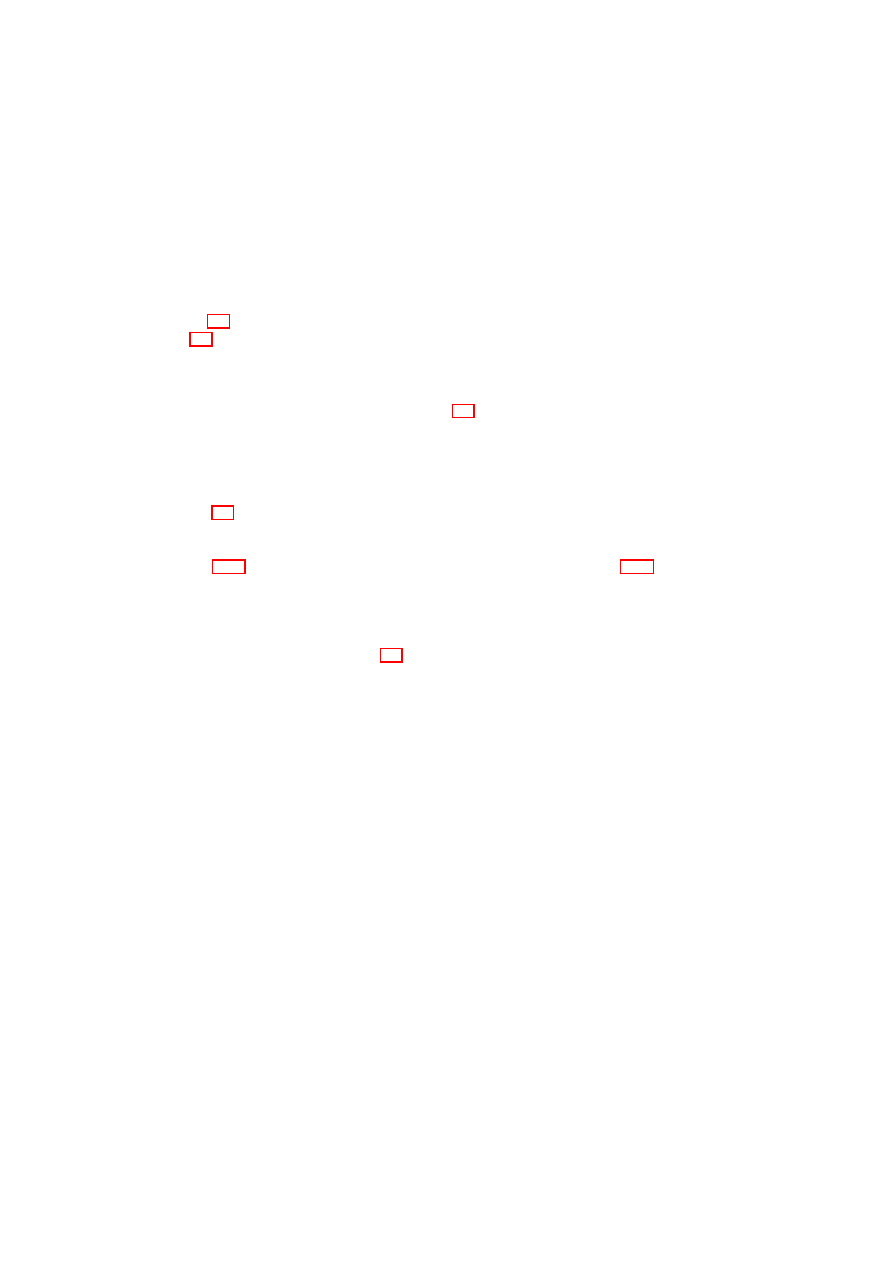

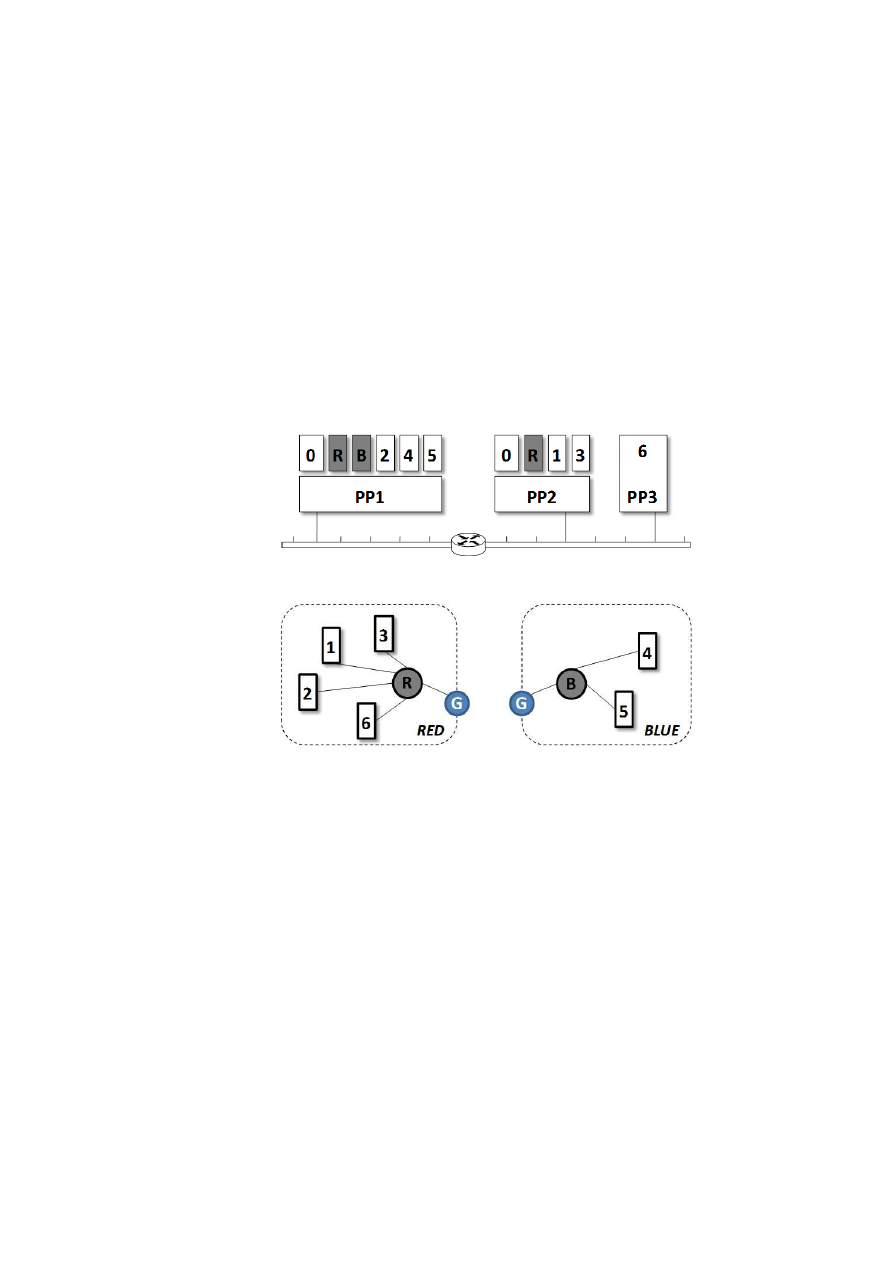

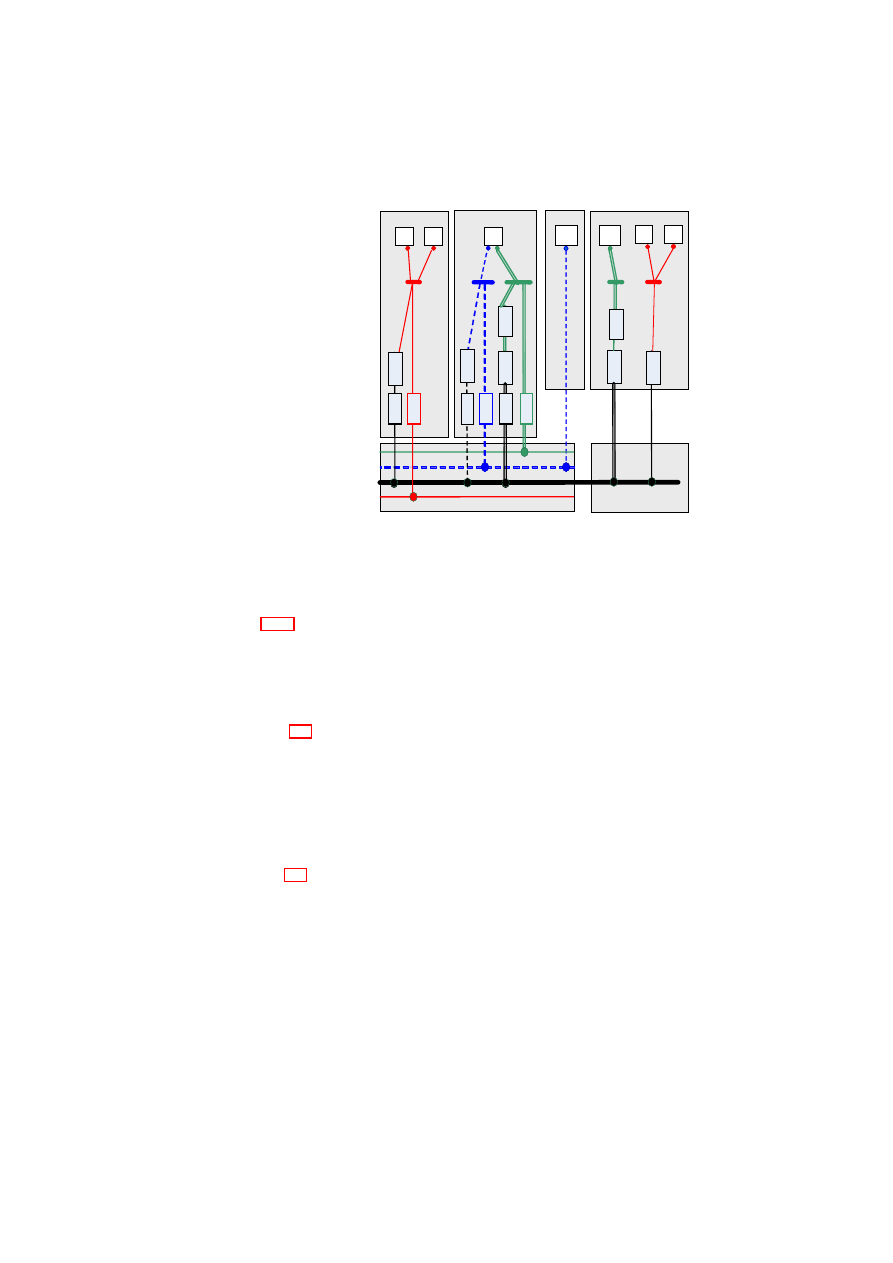

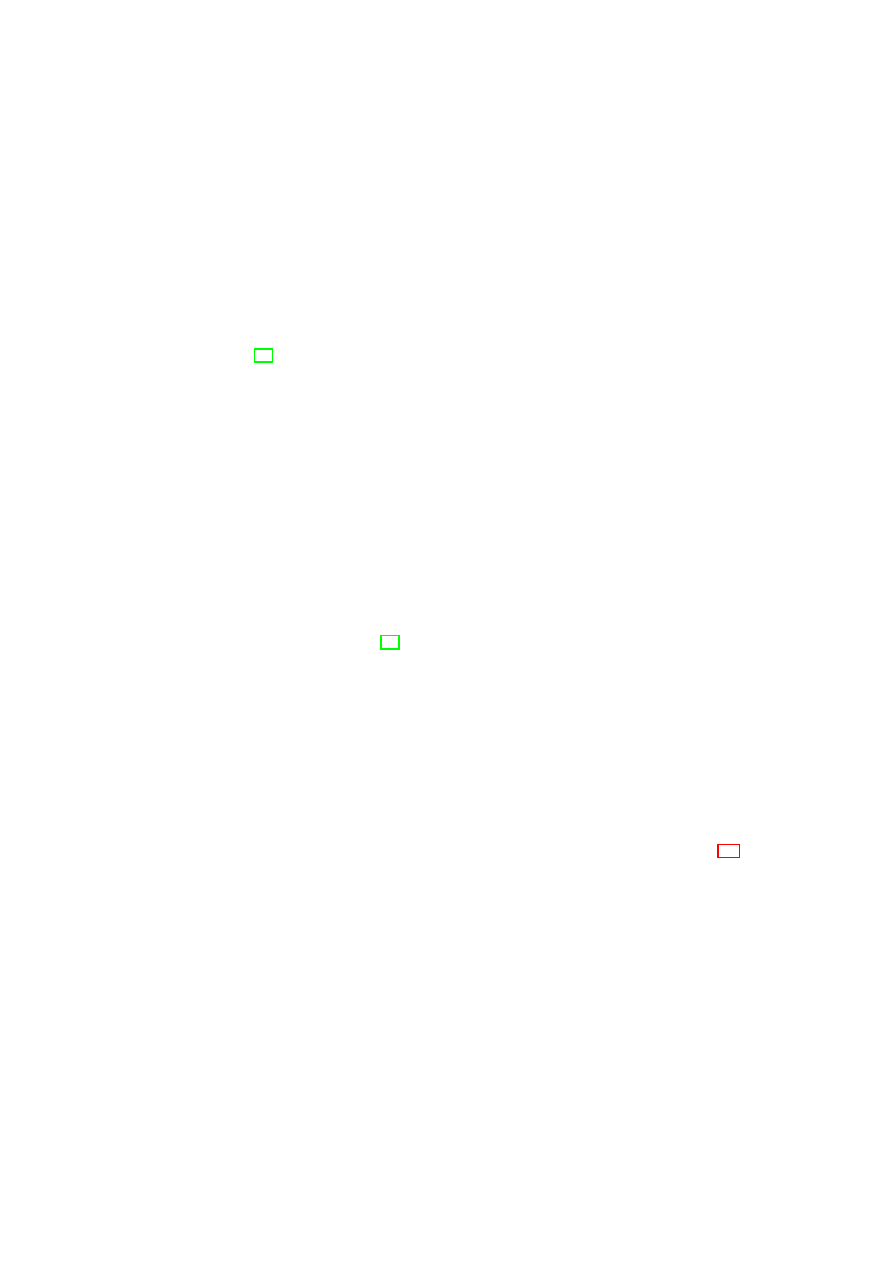

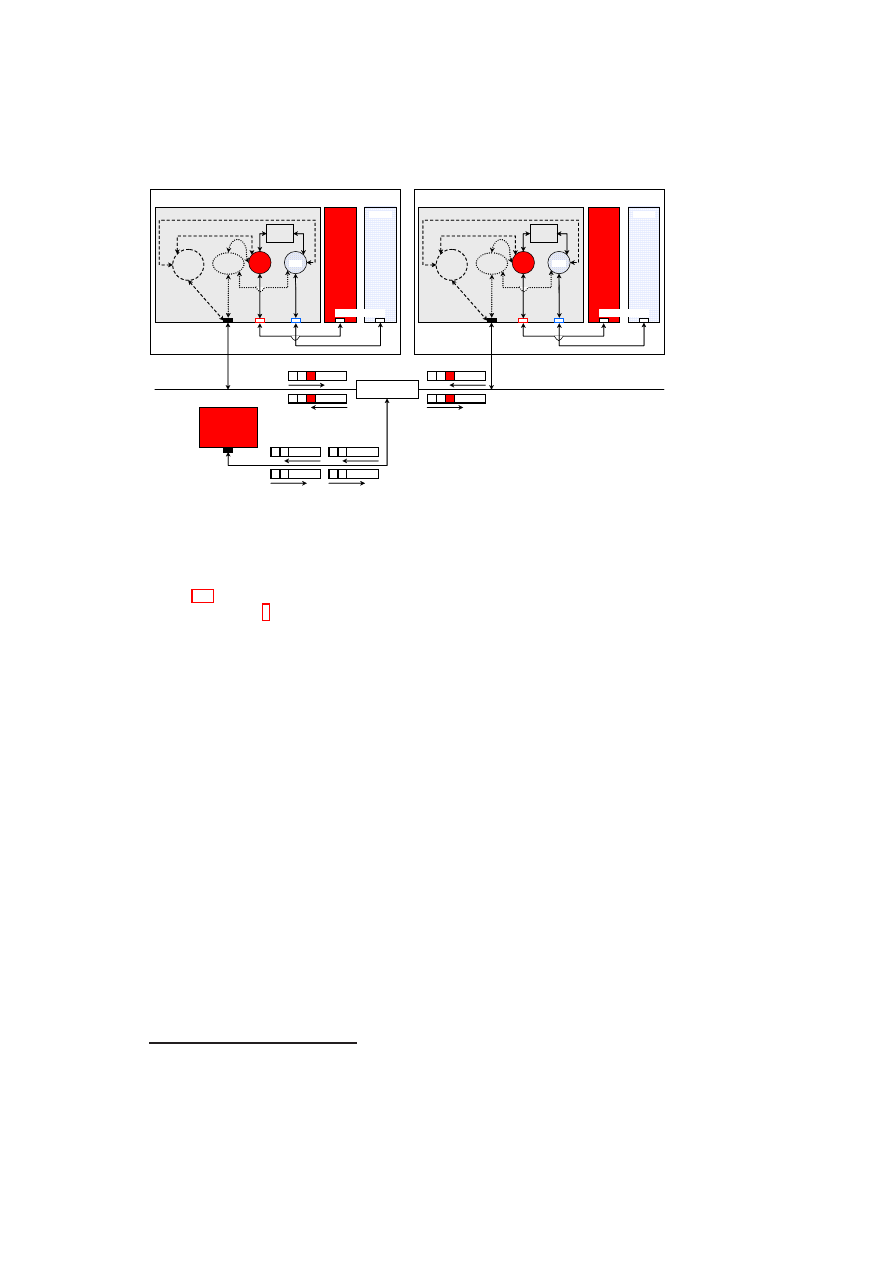

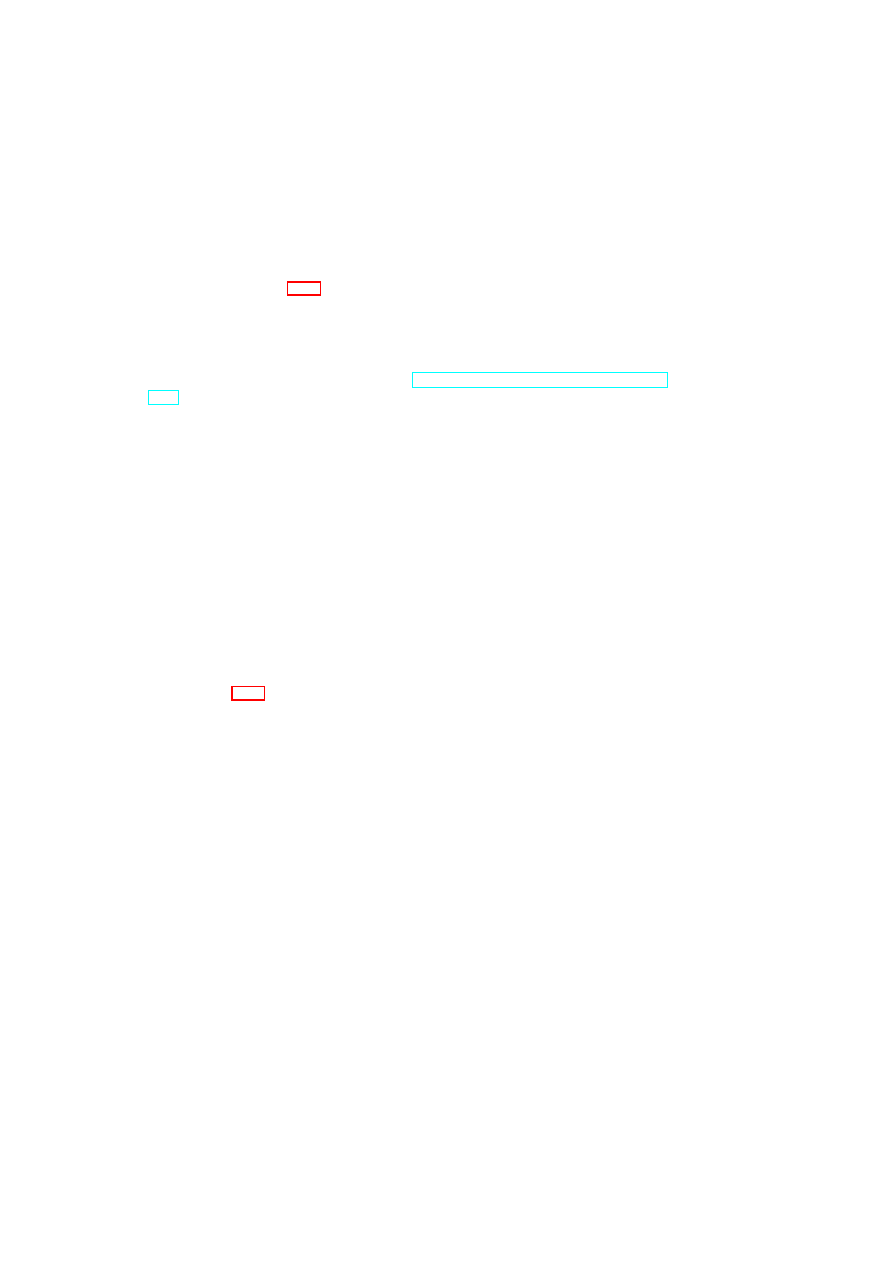

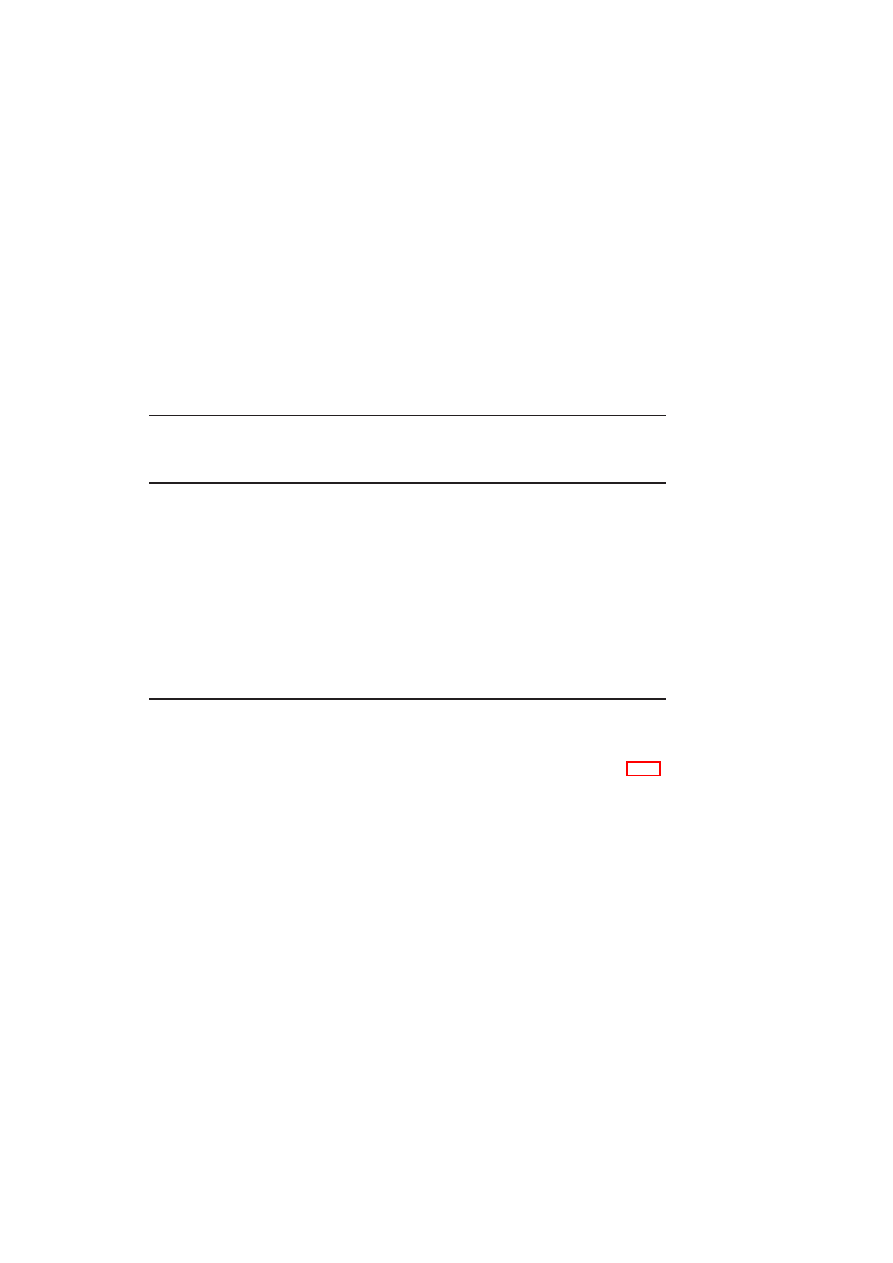

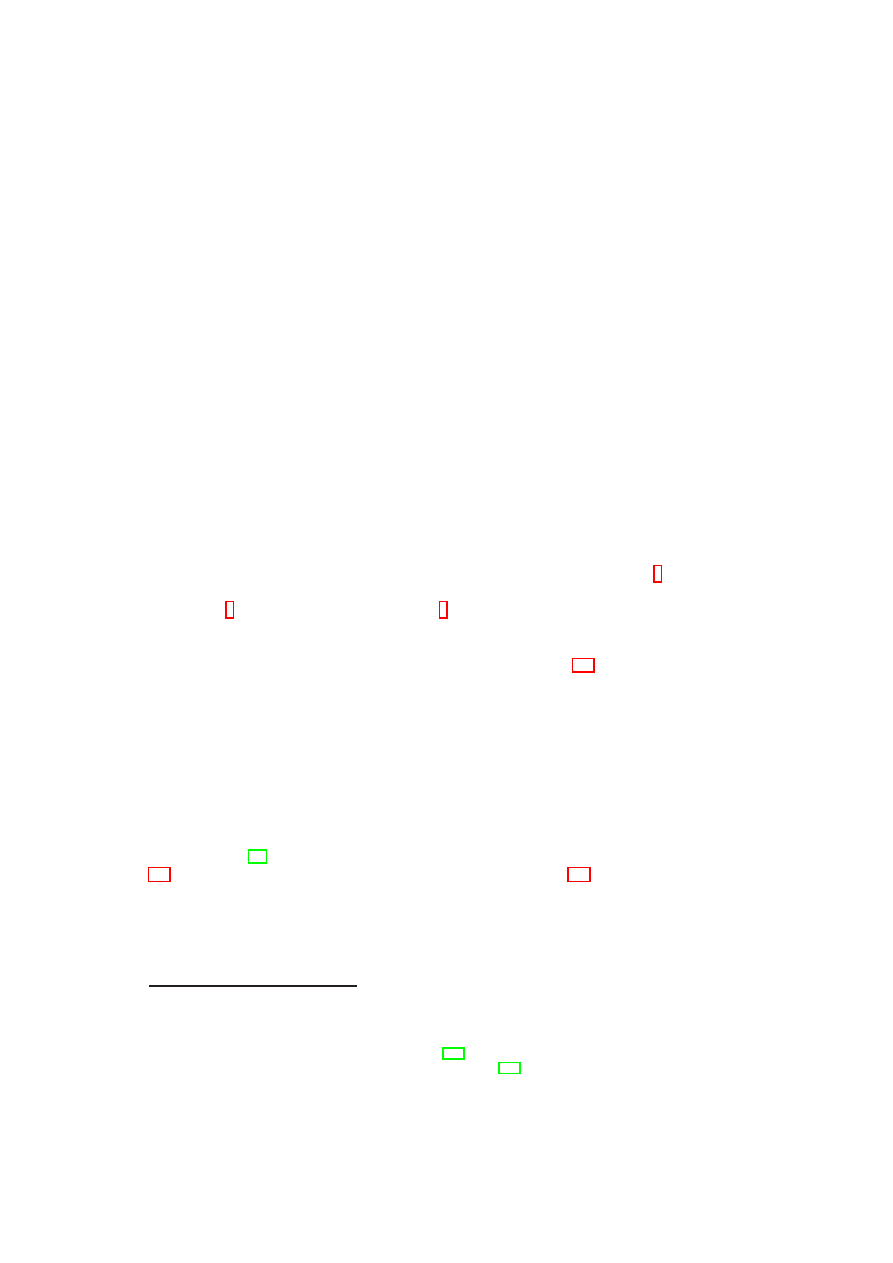

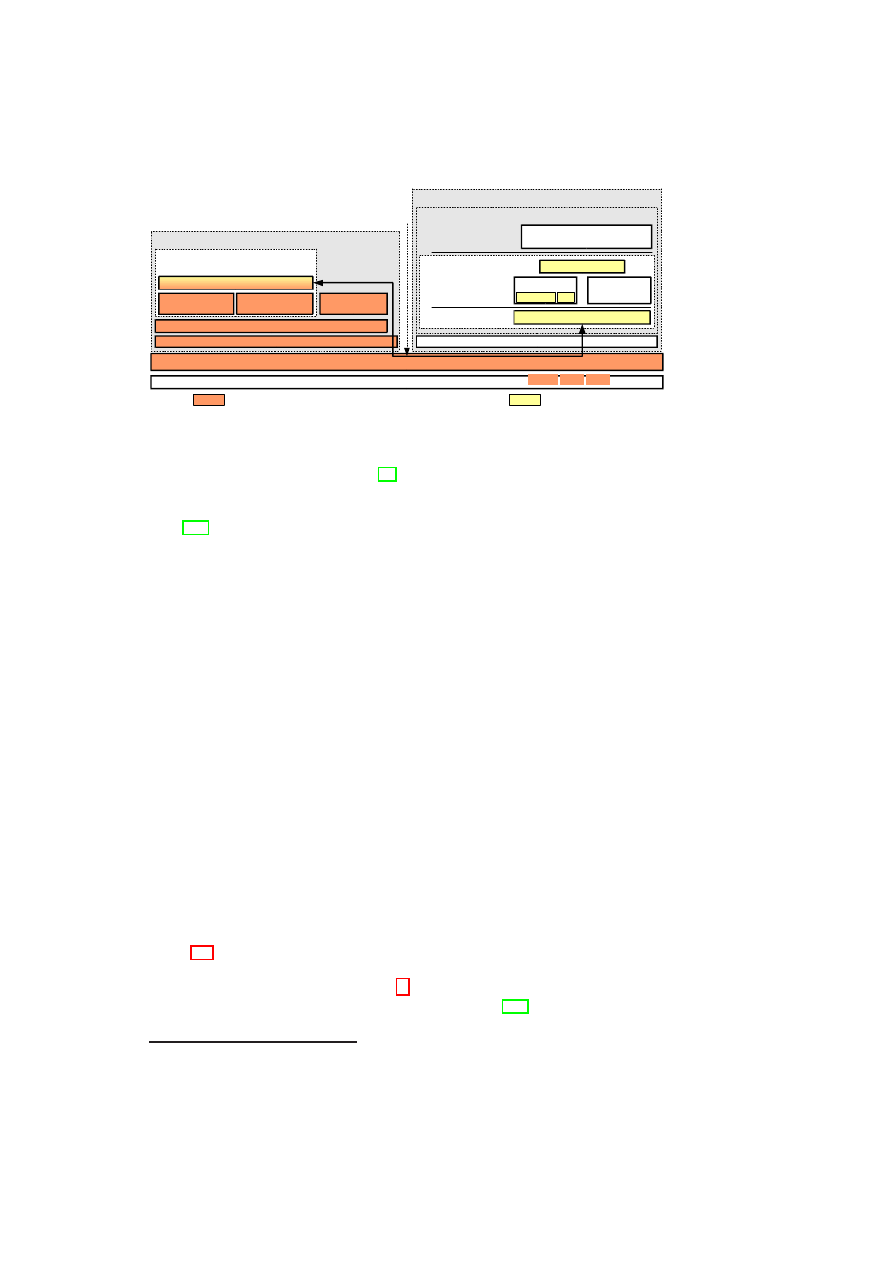

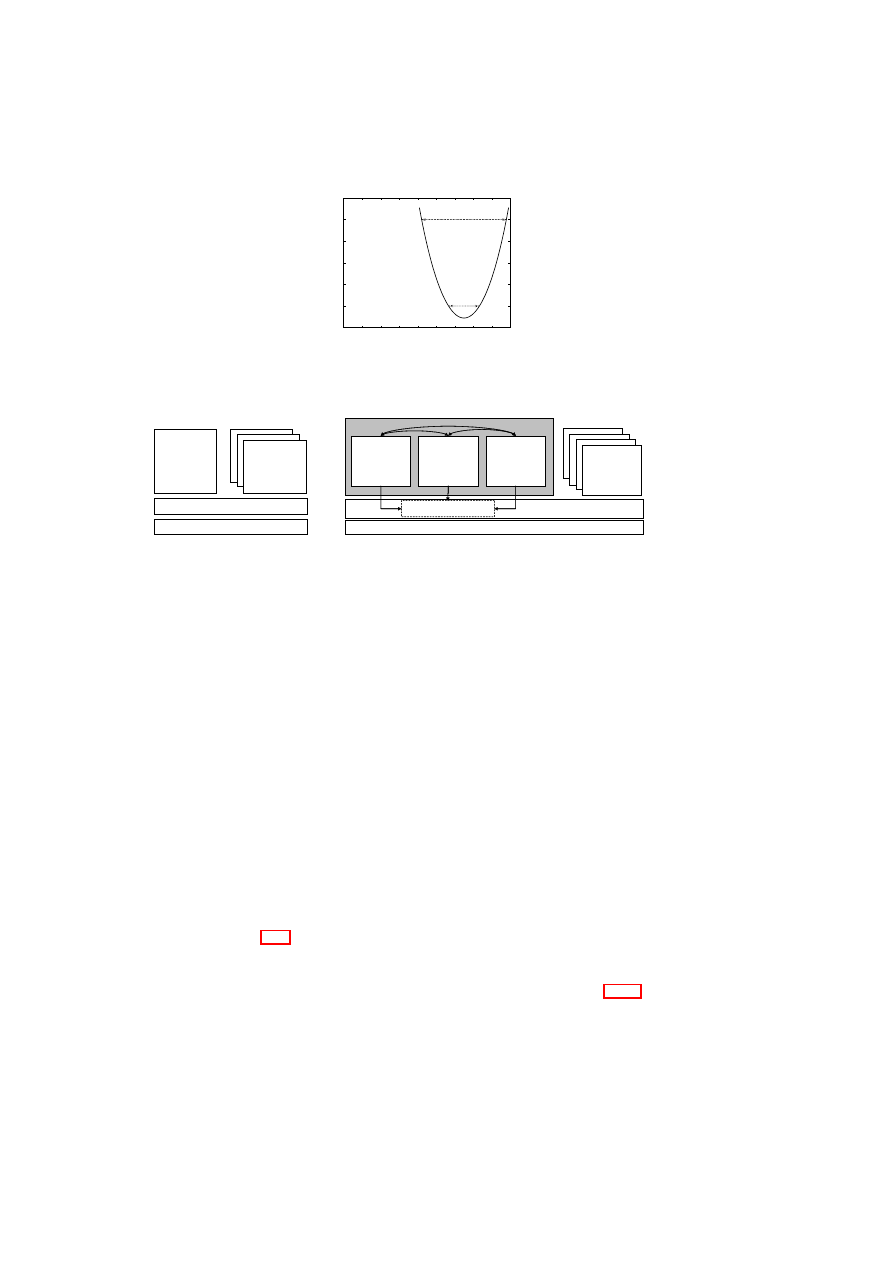

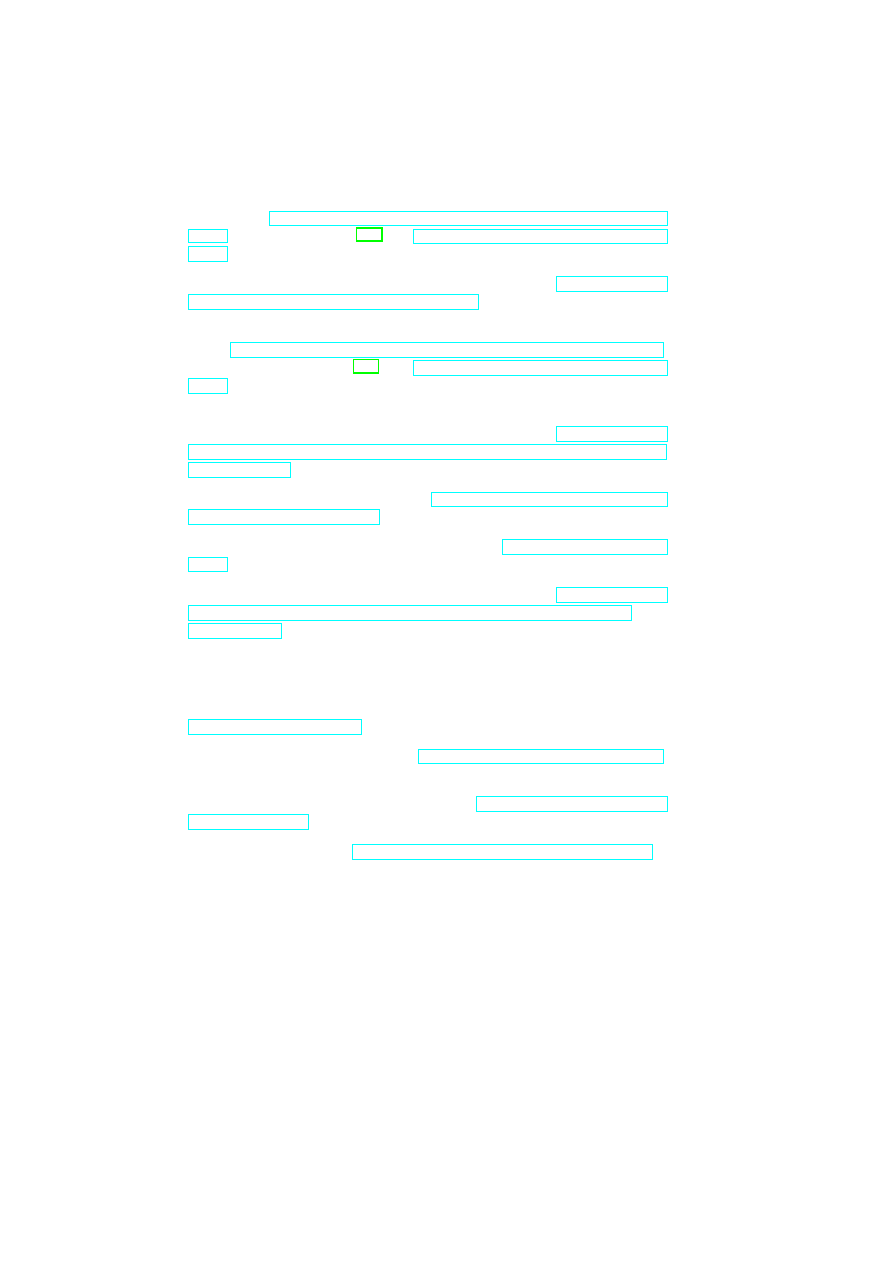

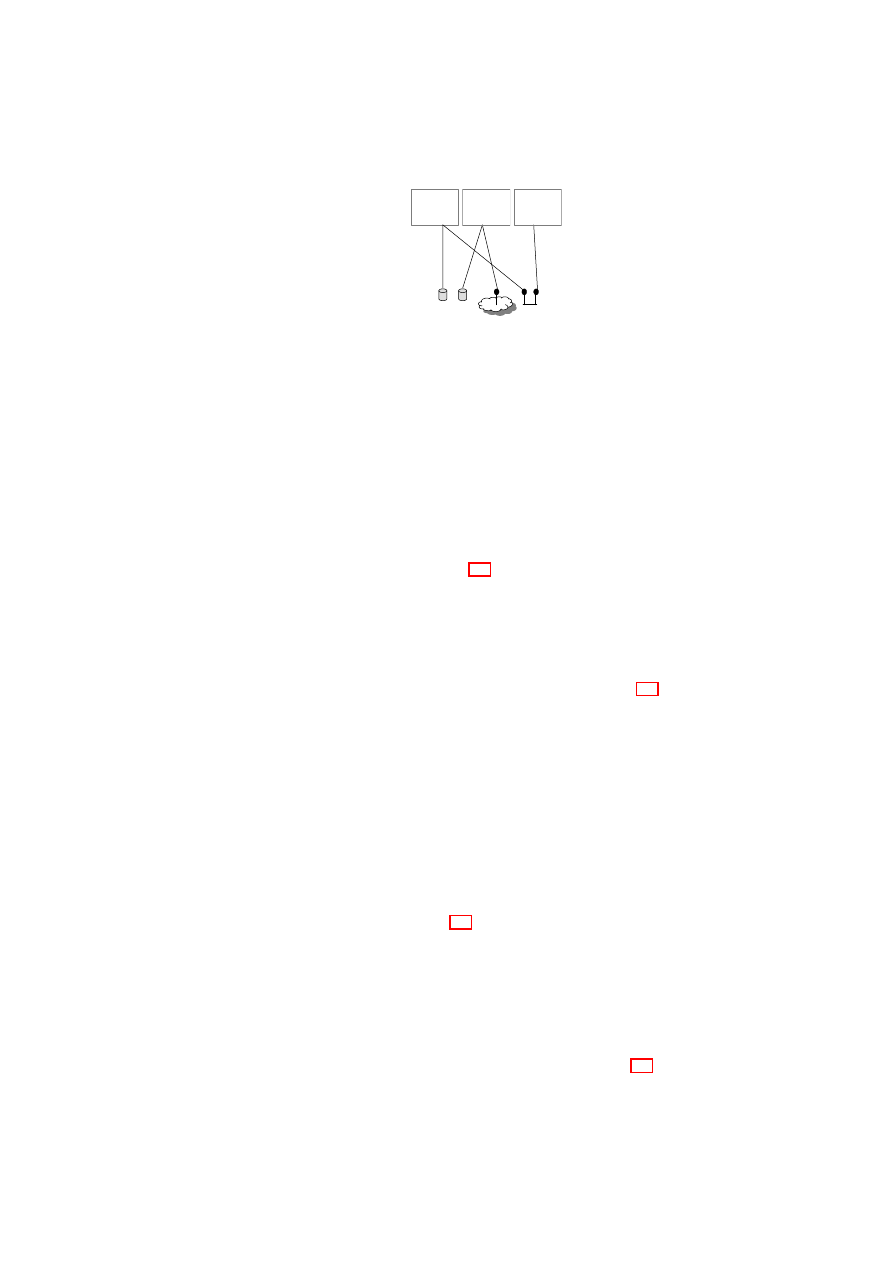

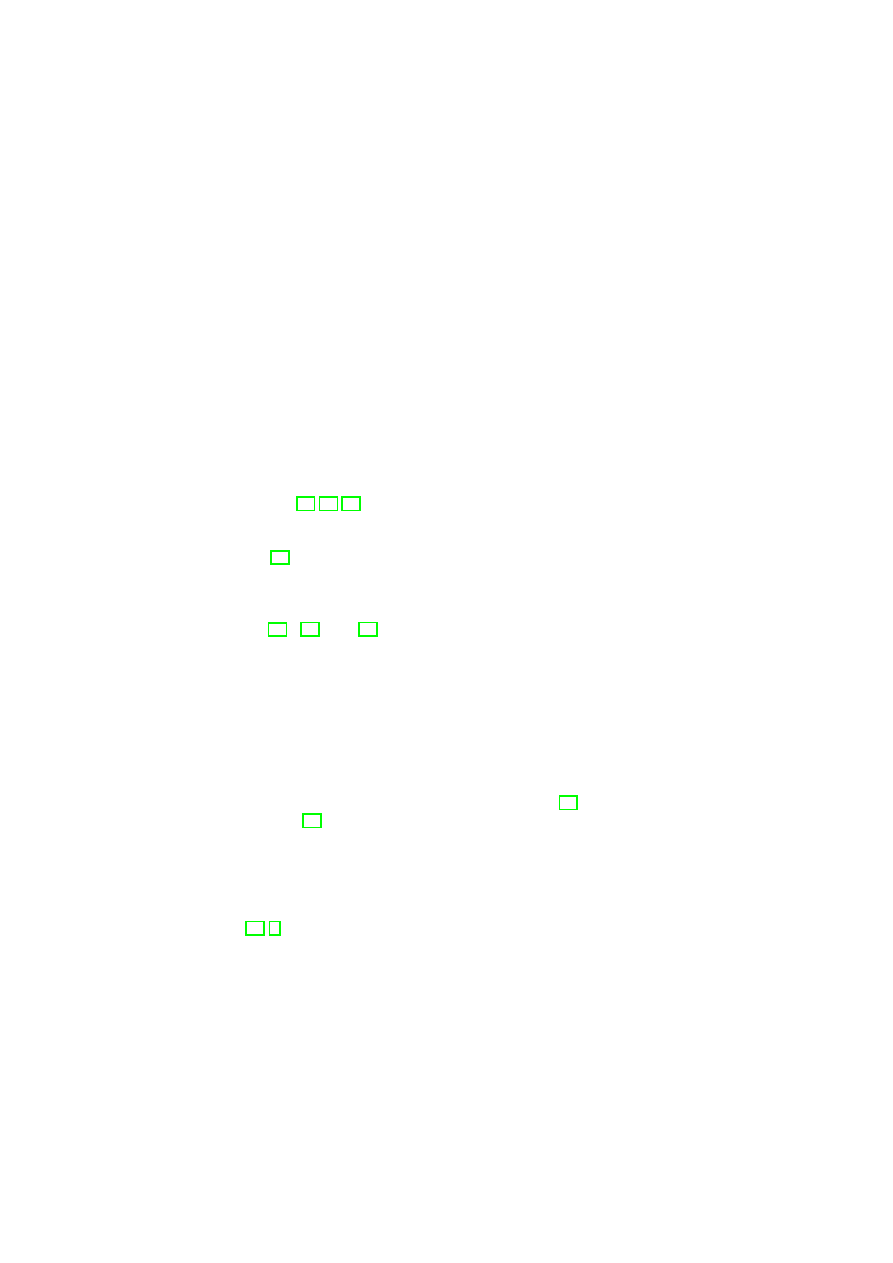

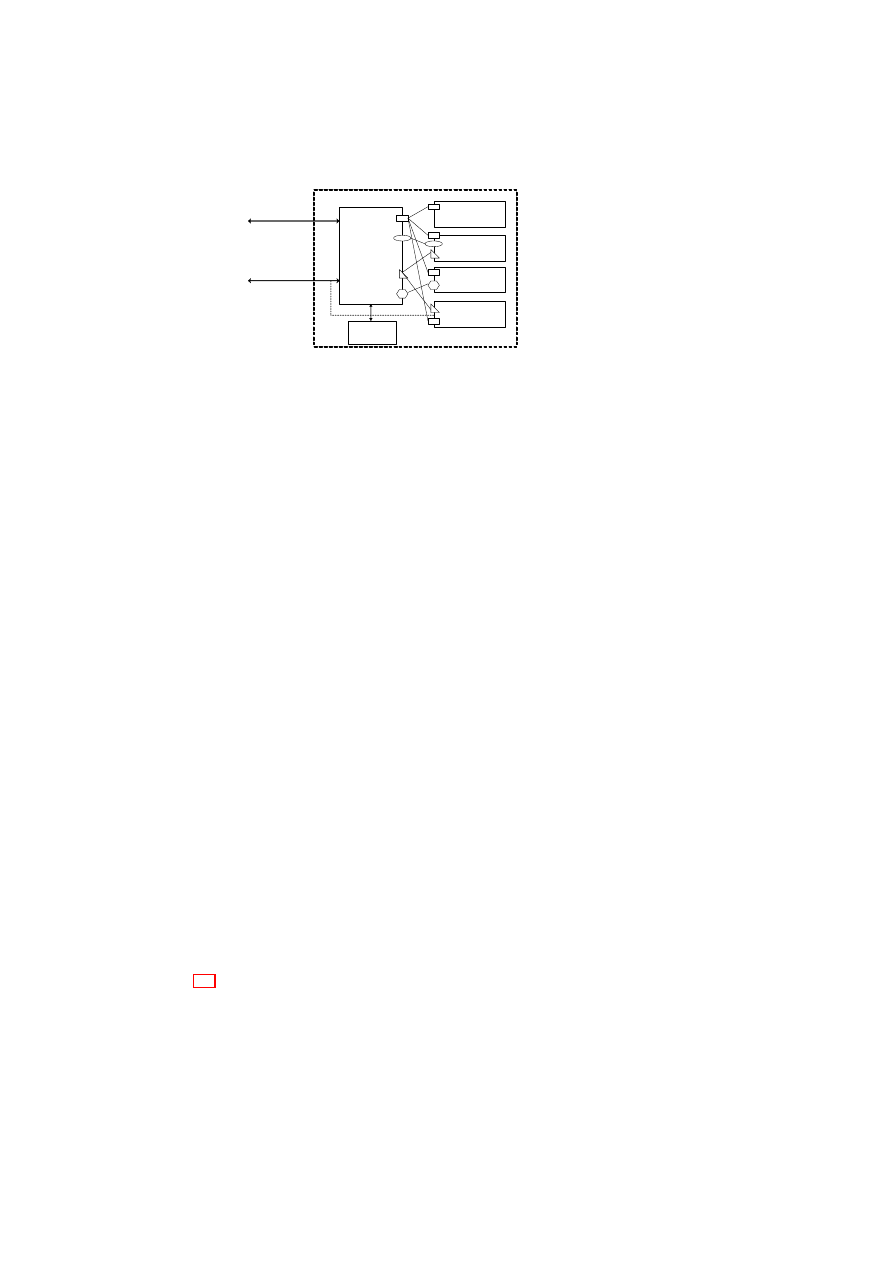

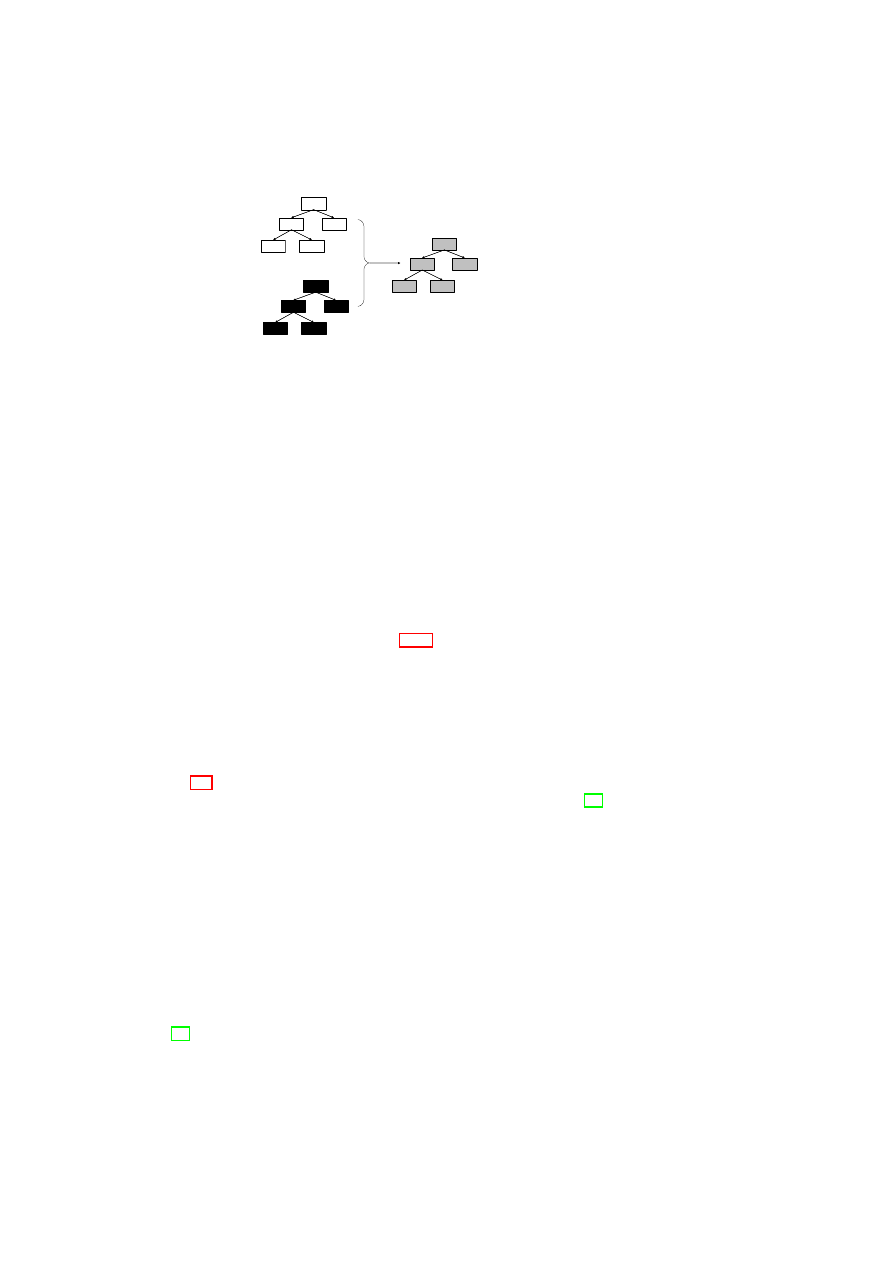

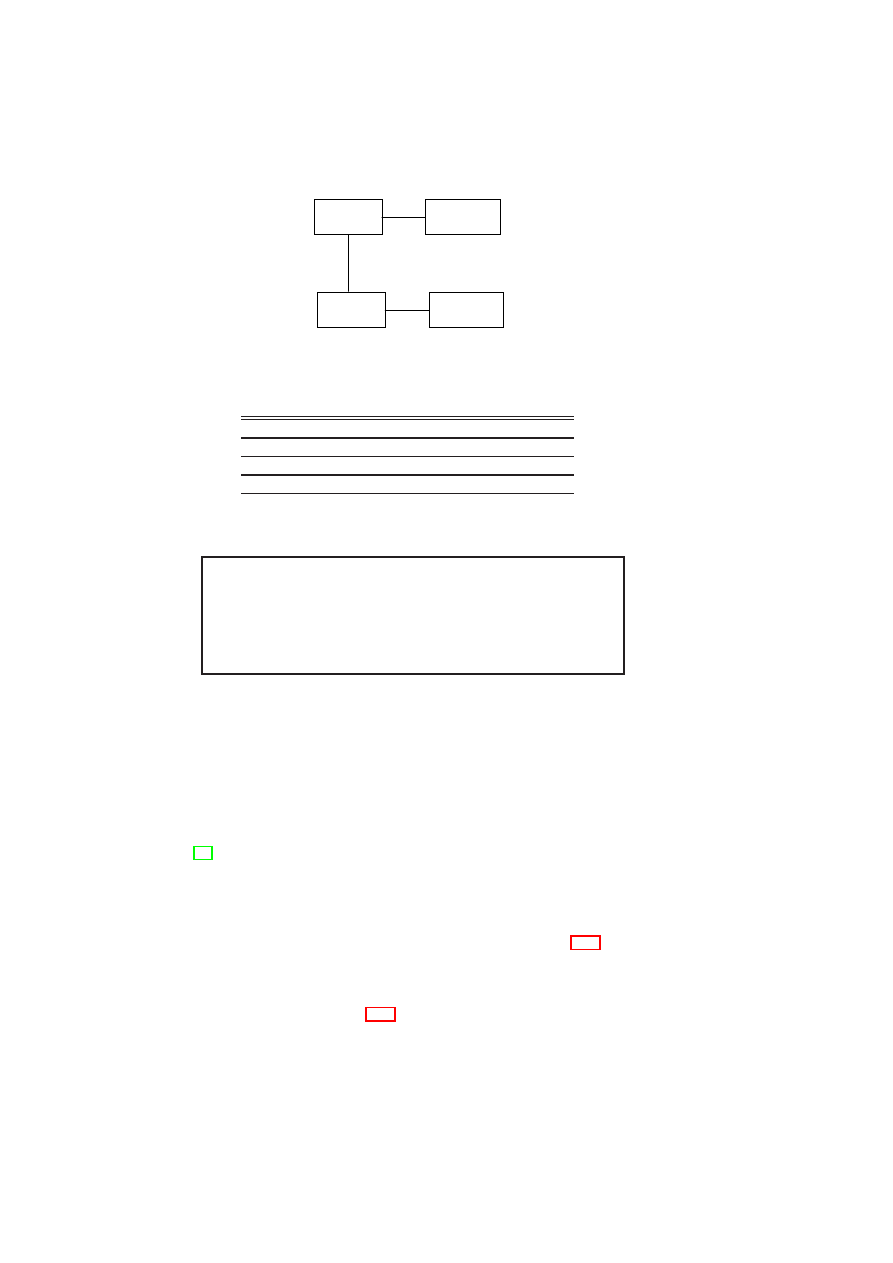

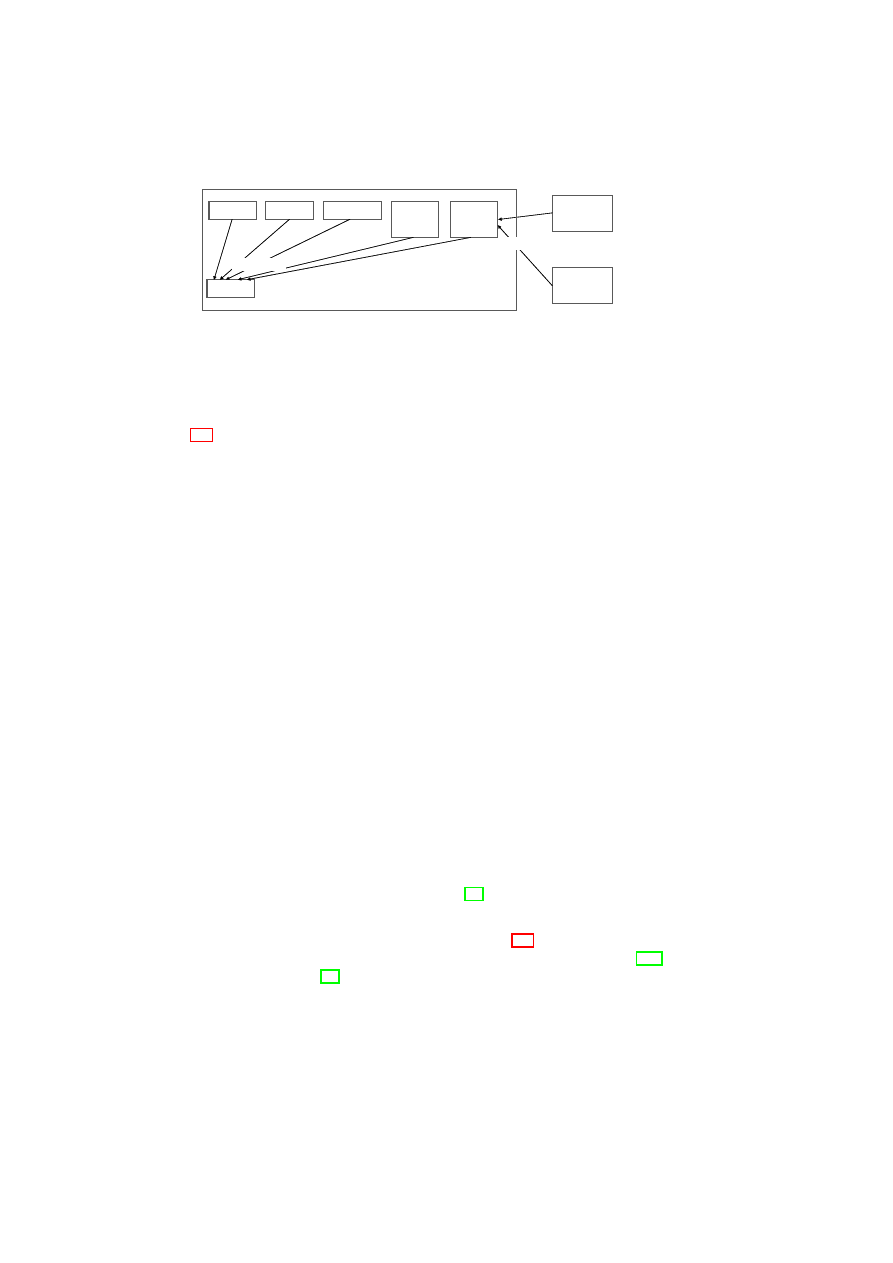

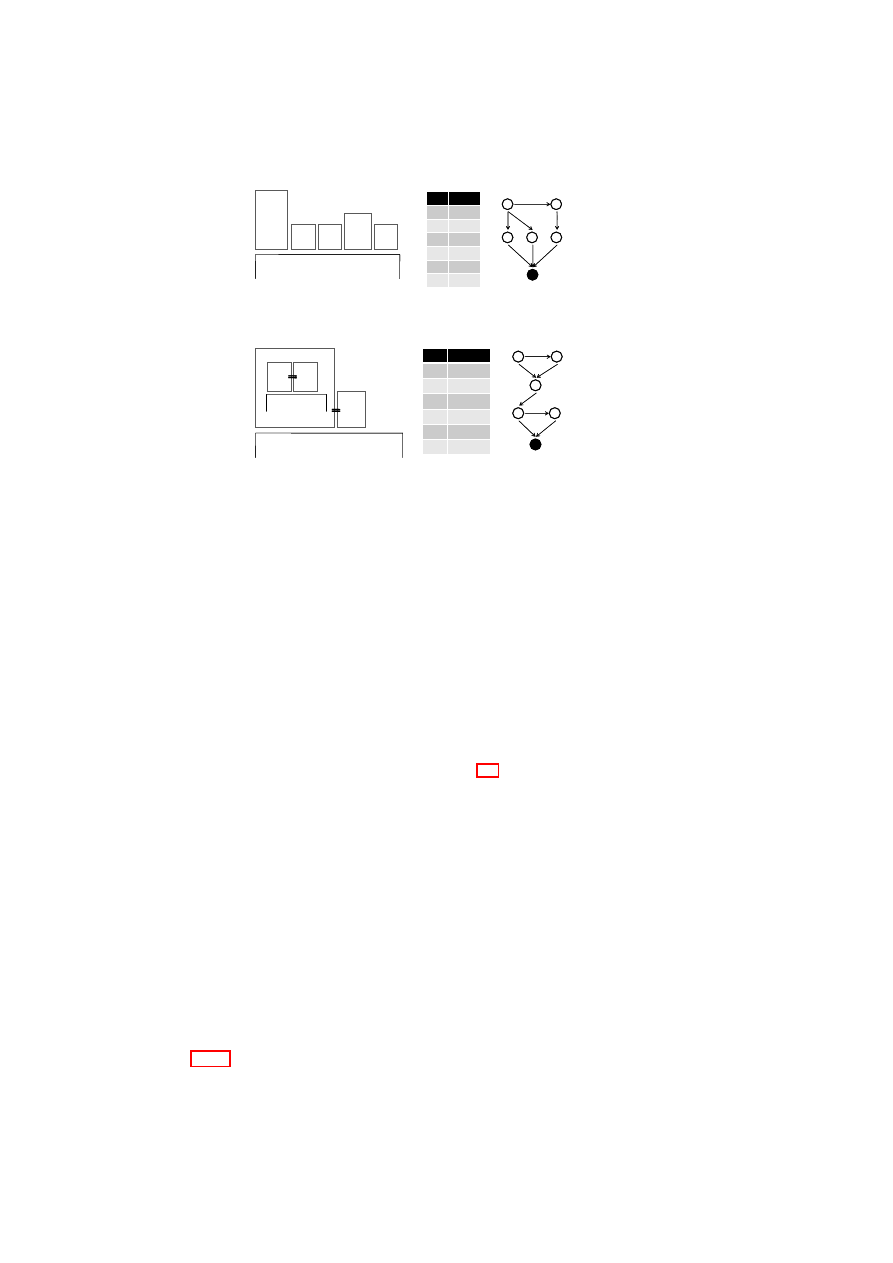

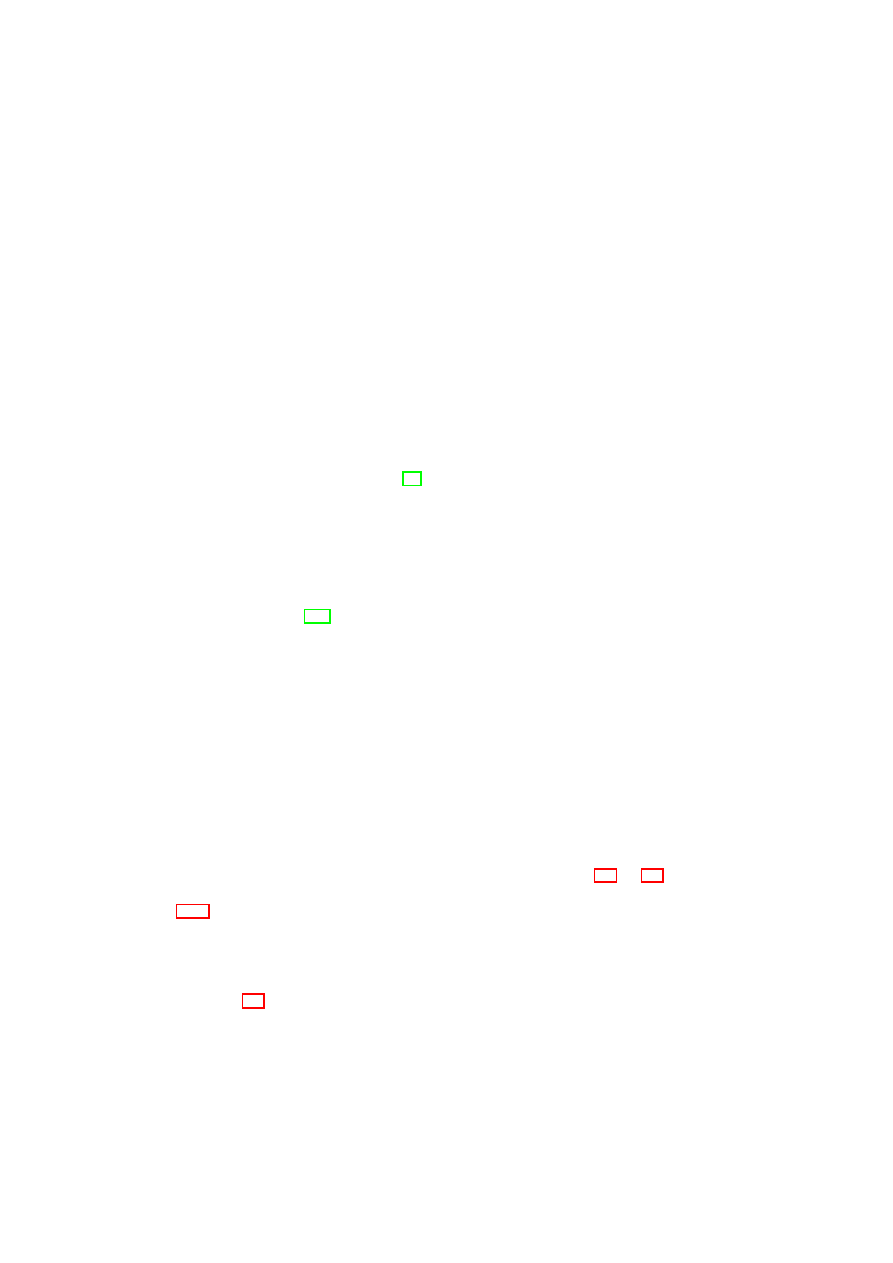

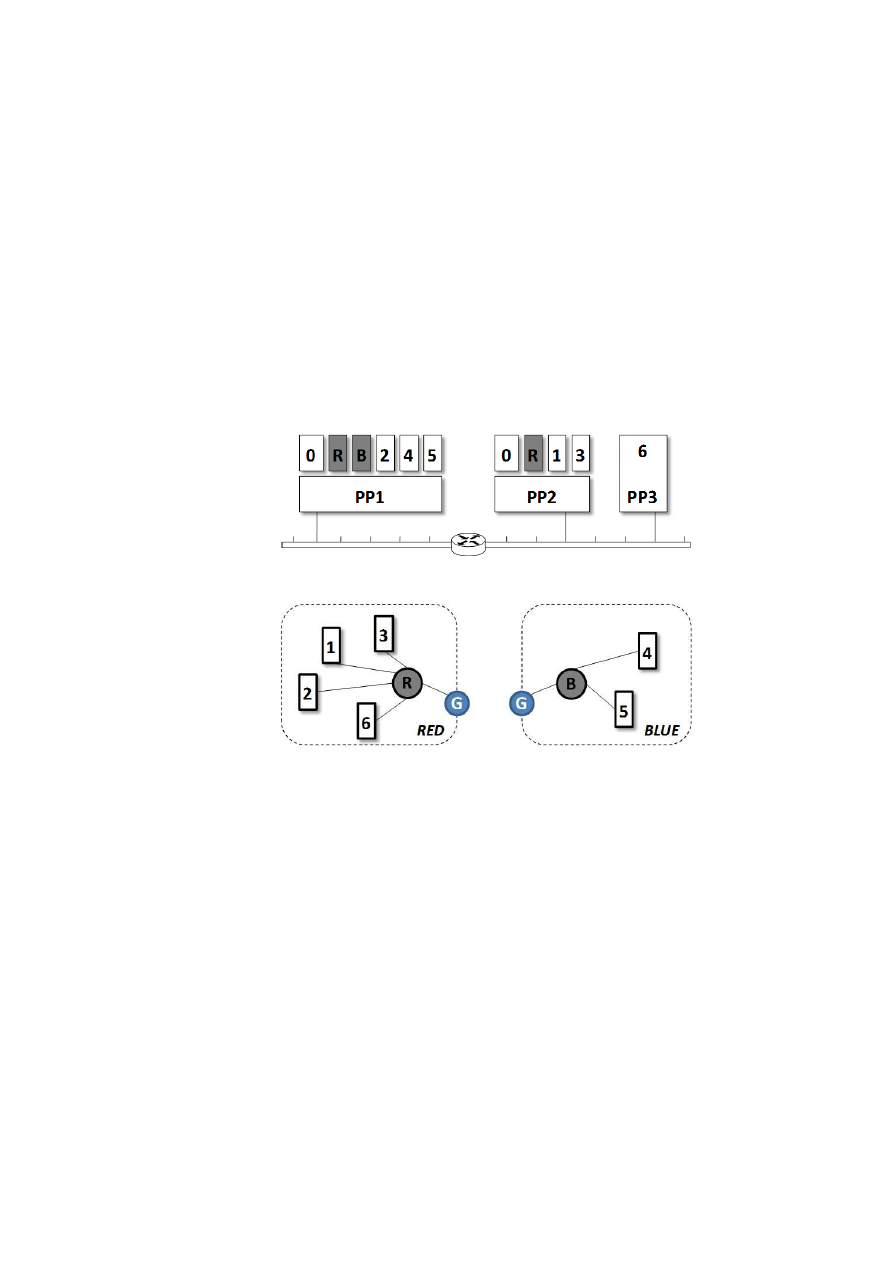

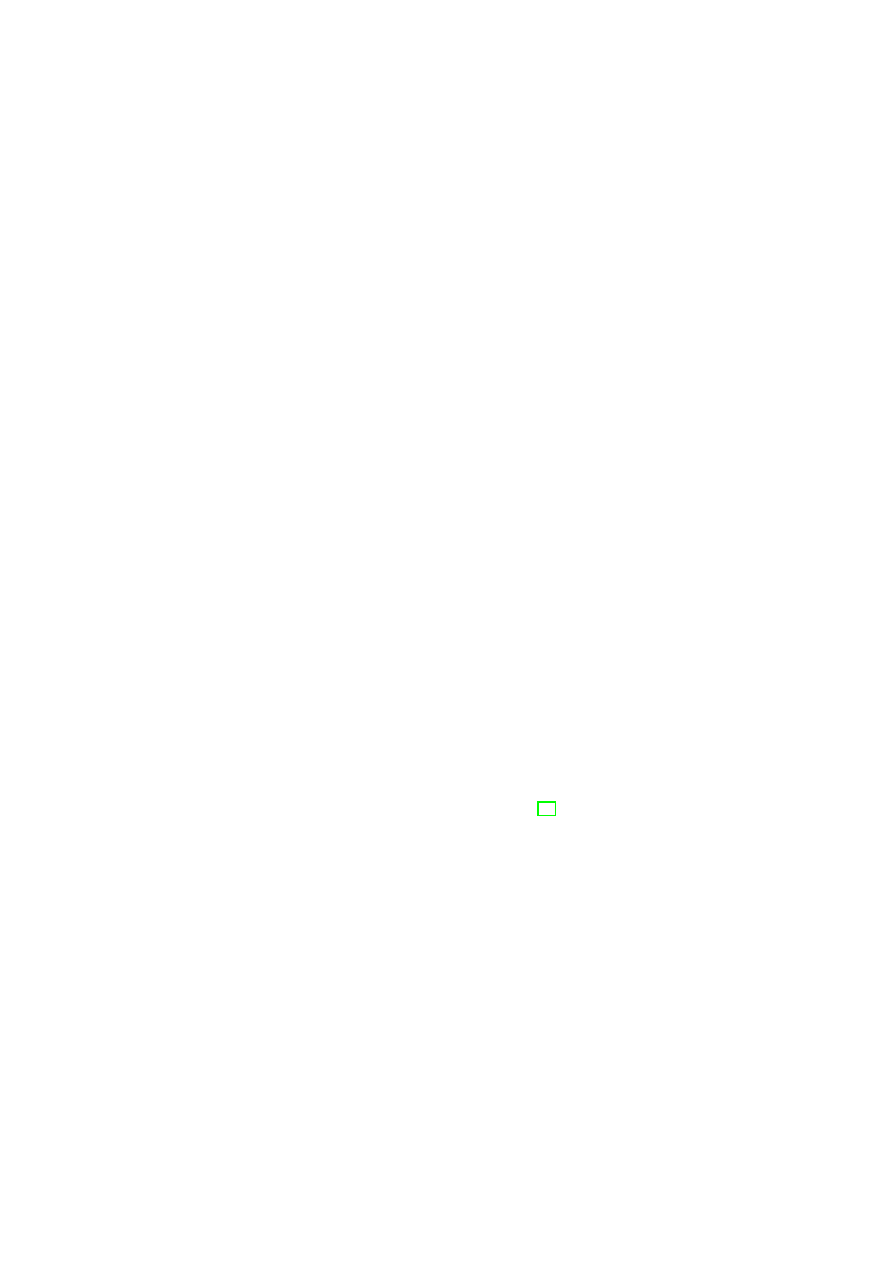

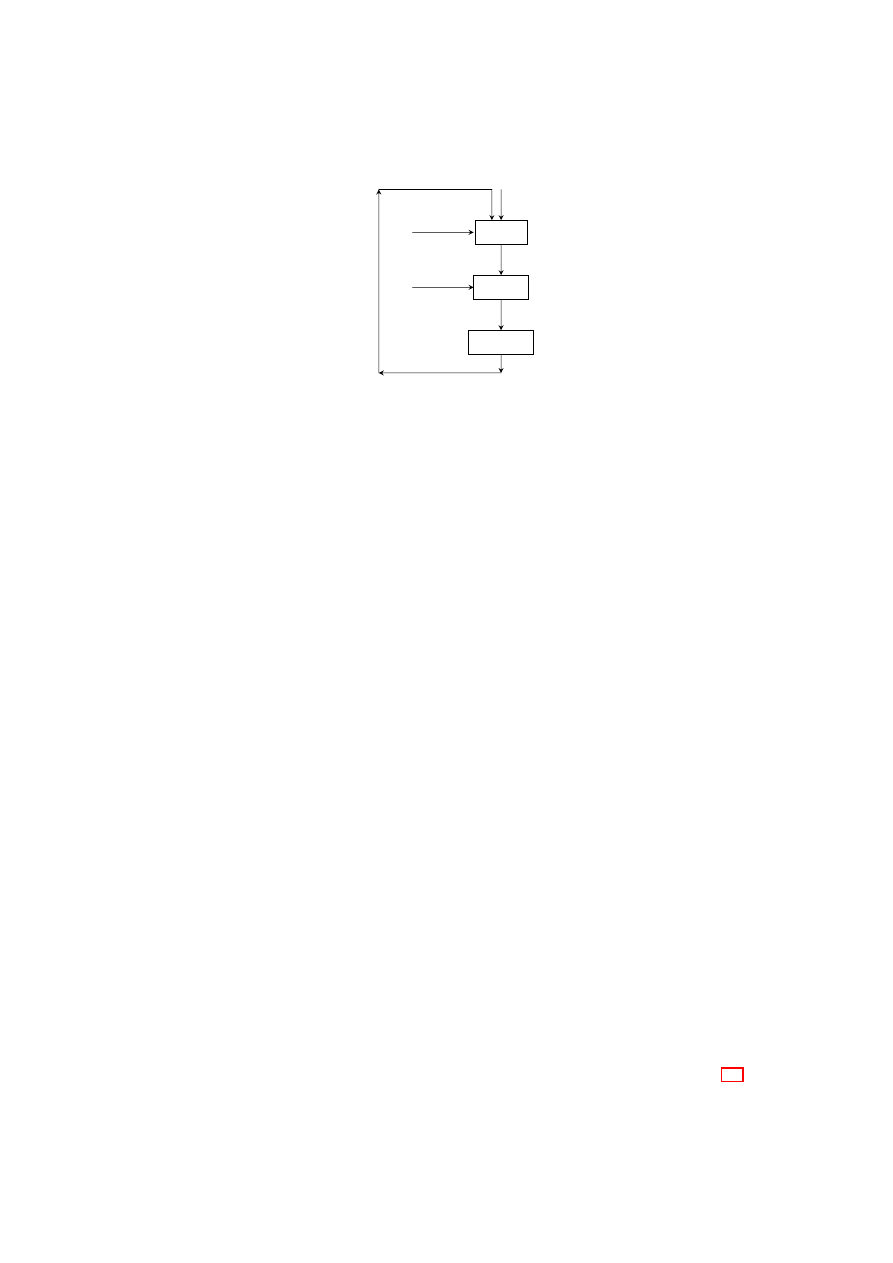

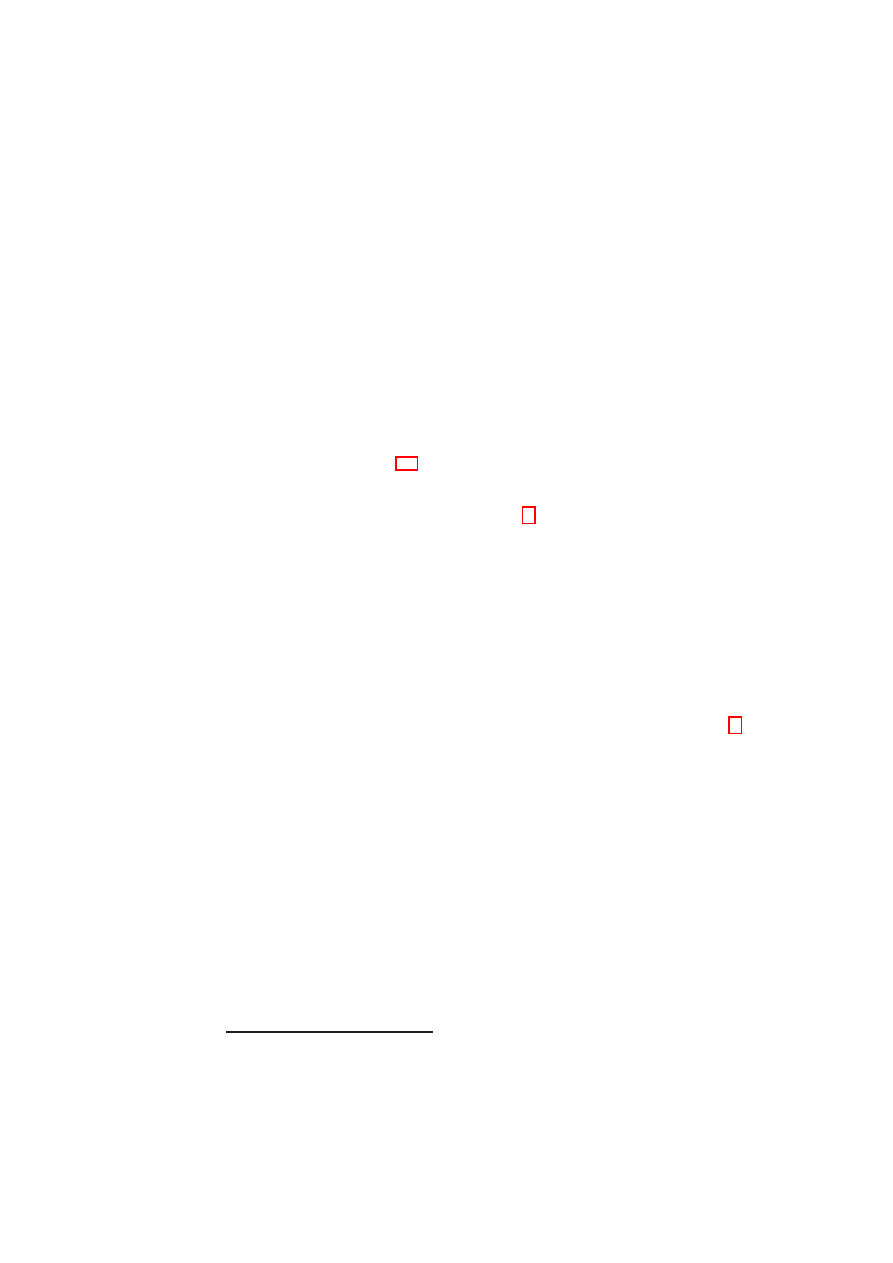

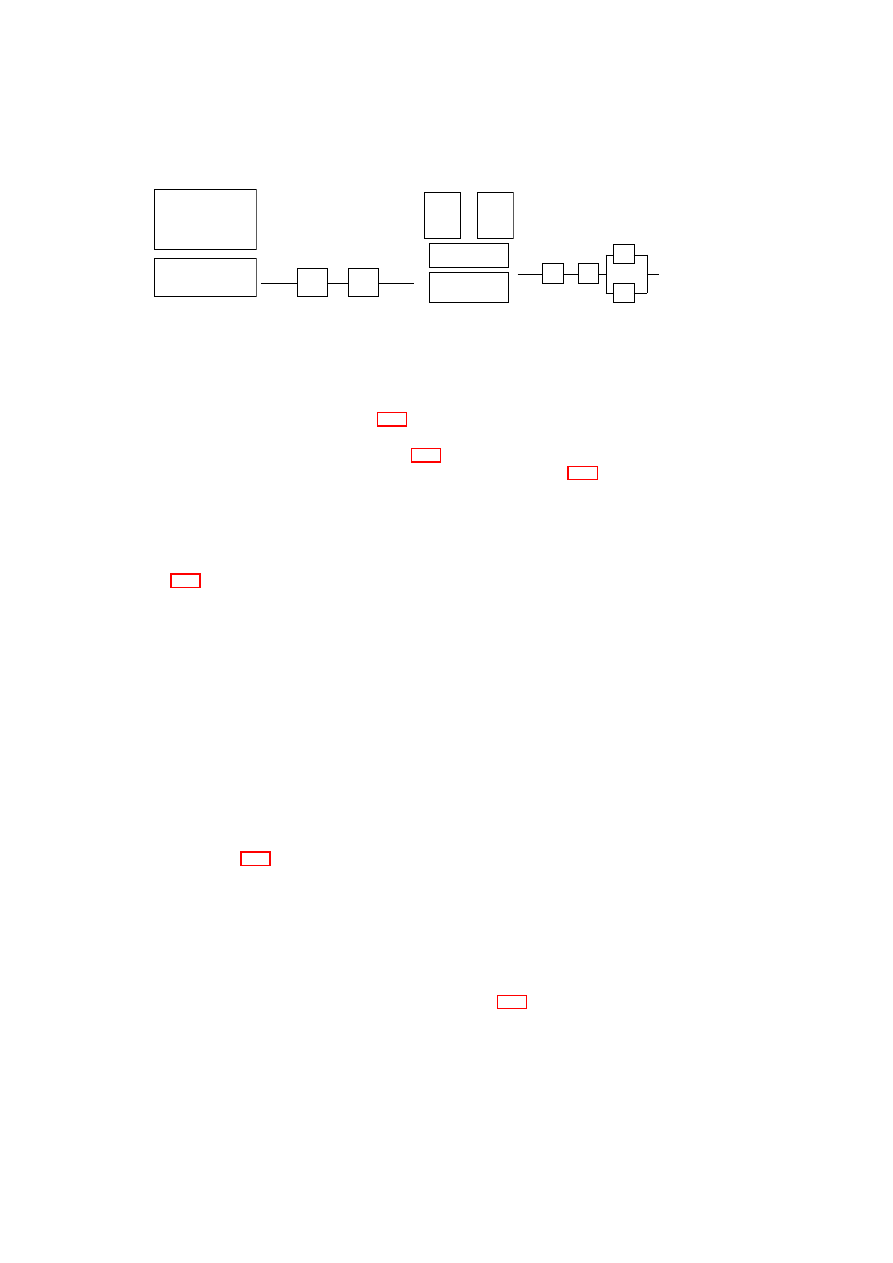

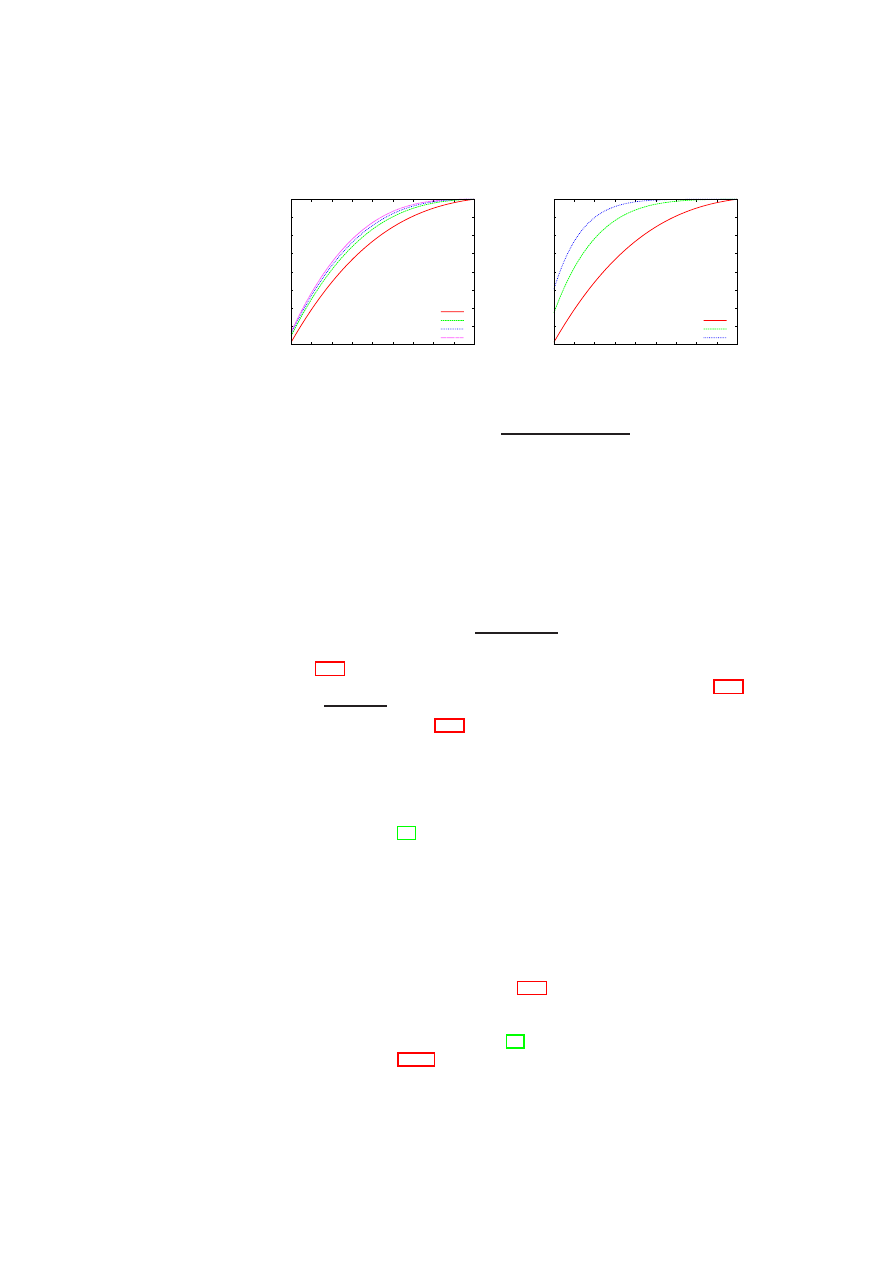

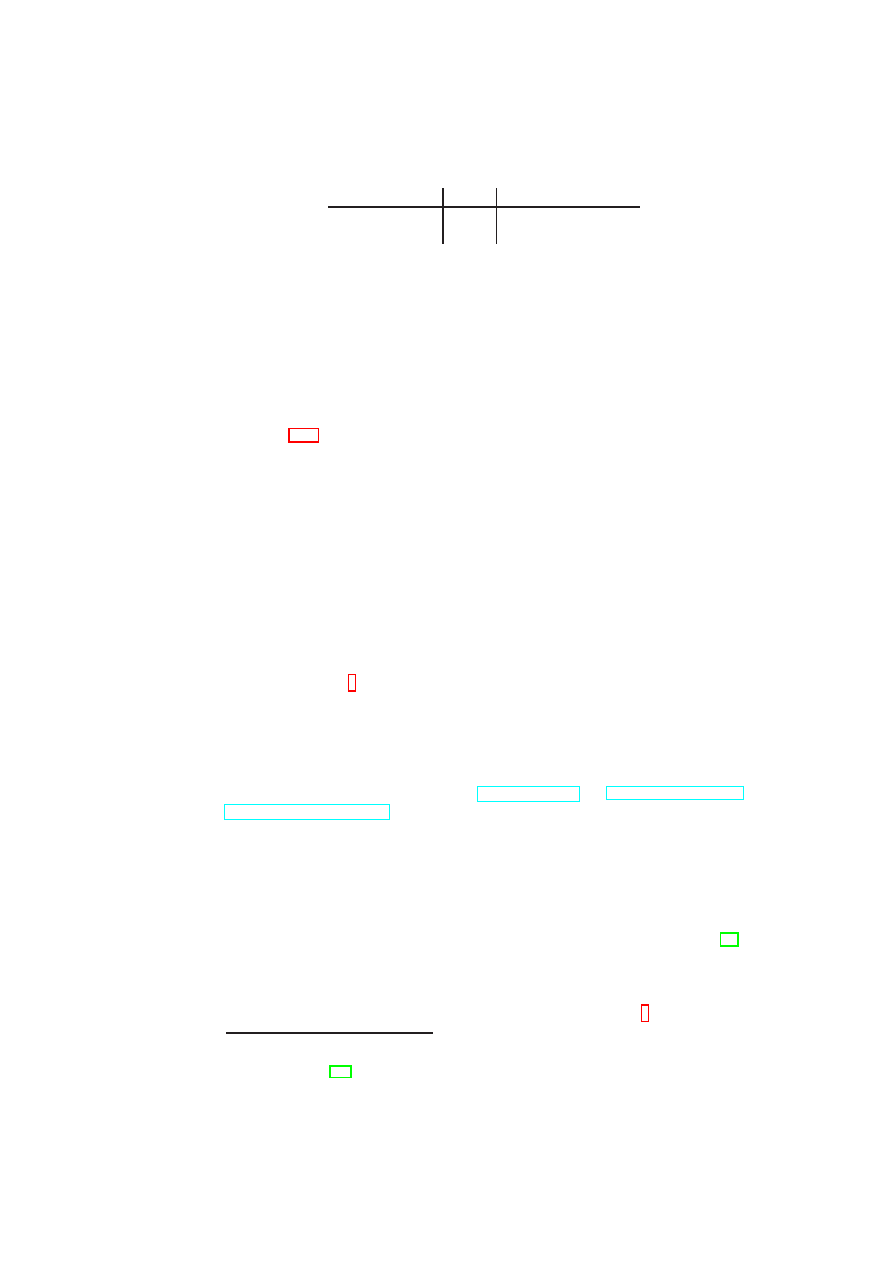

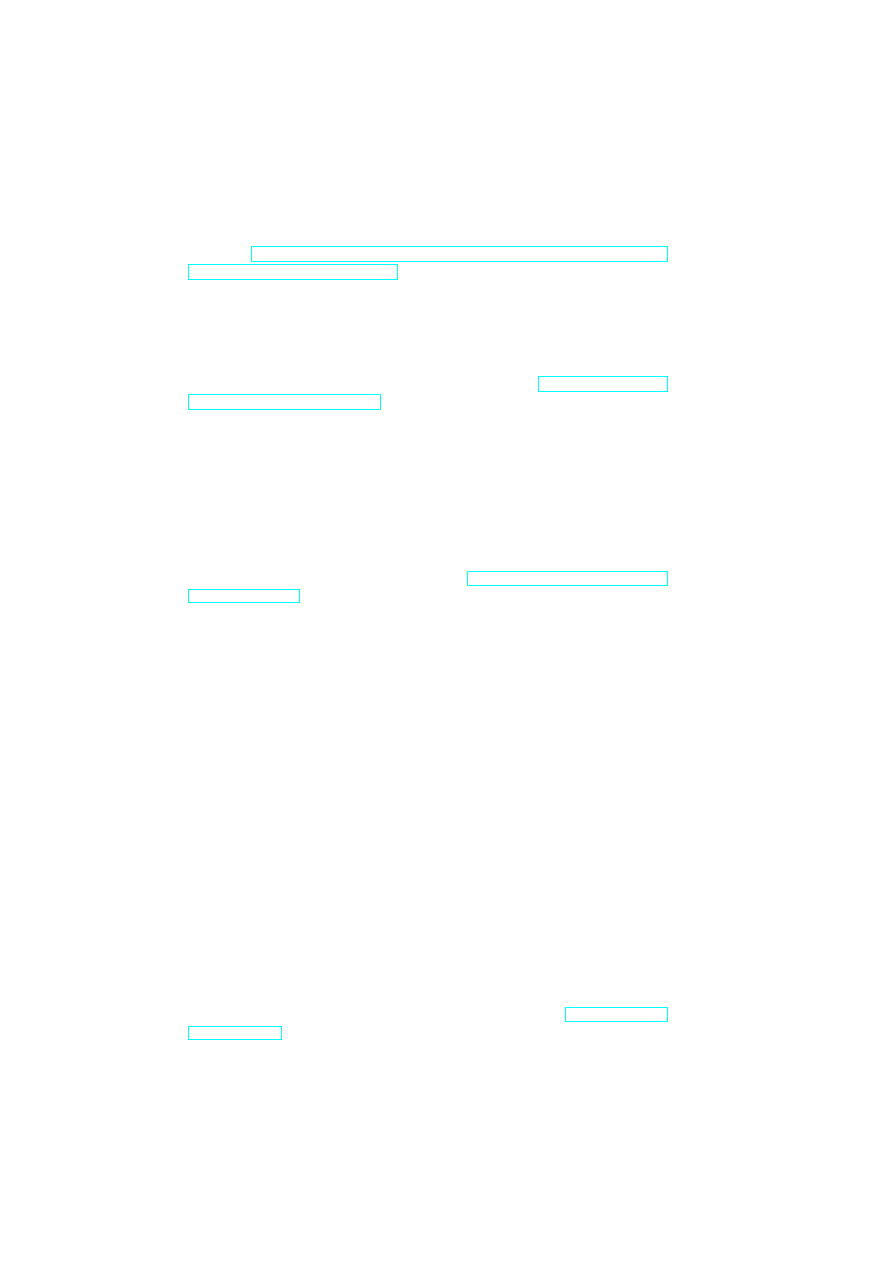

Figure 1.1: TVD Architecture: High-Level Overview.

enforced. Even if VMs are migrated (say, for load-balancing purposes) the logical

topology reflected by the virtual domain should remain unchanged. We deploy Trusted

Computing (TC) functionalities to determine the trustworthiness (assure the integrity)

of the policy enforcement components.

Such a model would provide better flexibility, adaptability, cost savings than to-

day’s physical cages model while still providing the main security guarantees required

for applications such as datacenters.

1.2

Our Contribution

In this deliverable, we provide a blueprint for realizing integrity and isolation in virtual

systems. We do this by supporting a logical cages model, in particular for virtualized

data centers, based on a concept called Trusted Virtual Domains or TVDs [16]. Based

on previous work, we describe a security management framework that helps to realize

the abstraction of TVDs by guaranteeing reliable isolation and flow control between

domain boundaries. Our framework employs networking and storage virtualization

technologies as well as Trusted Computing for policy verification. Our main contribu-

tions are (1) combining these technologies to realize TVDs and (2) orchestrating them

through a management framework that automatically enforces isolation among differ-

ent zones. In particular, our solution aims at automating the verification, instantiation

and deployment of the appropriate security mechanisms and virtualization technolo-

gies based on an input security model, which specifies the required level of isolation

and permitted information flows.

1.3

Outline of this Report

This report is structured in three parts. Part I surveys our work and summarizes related

work in Chapter 4. Part II describes selected building blocks of our security architecture

in detail. Part III describes WP5 components of our prototype and finally reflects on

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 1. INTRODUCTION AND OUTLINE

9

our lessons learned and documents our outlook onto the future of trusted computing

and virtualization.

The first technical component in Part II is the integrity and assurance management

of the OpenTC Security Services. This has two aspects: In Section 5 we describe how

integrity statements about virtual machines can be made and how data can be bound

to the integrity of a machine. We also describe how to protect the privacy of users

using our system. In Section 6 we extend these results to cover hierarchical integrity

management, i.e., the integrity protection of packages of multiple virtual machines and

the related components.

The second component is our network security architecture described in Chapter 7.

It implements two key ideas. The first idea is to provide secure virtual networks (so-

called trusted virtual domains) that transparently connect VMs on multiple hosts. The

second idea is to automatically provision the required protection mechanisms such that

the networks guarantee a given set of user requirements.

The third component described in Chapter 8 is the public key infrastructure (PKI)

that manages the keys used four security mechanisms. It also includes trusted comput-

ing extensions to existing PKI standards.

The fourth component are trusted channels in Chapter 9 that enable to establish a

secure channel while verifying the integrity of the peer. This allows users to not only

guarantee the integrity of a given machine but also to securely connect to the machine

that has been validated.

The fifth and final concept is our approach to attack and failure resilience as doc-

ument in Chapter 10. This concept comprises three main ideas. The first is to use

introspection to detect viruses and other failures inside a running VM. The second is

to implement redundancy for VMs and key hypervisor components such as network or

disk drivers. The final idea combining them is to monitor VMs and rejuvenate VMs

that are failed or are at risk of failing. Overall, this allows a substantial increase in the

resiliency of the services running on this platform.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

10

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

Part I

Overview and Related Work

11

12

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

1.4

Dependability of Virtual Systems

We now provide a sampling of related work in the area of using VMs for improving de-

pendability. We also compare our X-Spy intrusion detection framework with previous

hypervisor-based intrusion detection systems. Many of these works, including ours,

implicitly trust the virtualization layer to function properly, to isolate the VMs from

each other, and to control the privileged access of certain VMs to other VMs. Such a

trust can be justified by the observation that a typical hypervisor consists of some tens

of thousands lines-of-code (LOC), whereas a typical operating system today is on the

order of millions LOC [40]. This allows a much higher assurance for the code of a

hypervisor.

Bressoud and Schneider [15] implemented a primary-backup replication protocol

tolerant to benign faults at the VMM level. The protocol resolves non-determinism

by logging the results of all non-deterministic actions taken by the primary and then

applying the same results at the backups to maintain state consistency.

Double-Take [124] uses hardware-based real-time synchronous replication to repli-

cate application data from multiple VMs to a single physical machine so that the appli-

cation can automatically fail over to a spare machine by importing the replicated data in

case of an outage. As the replication is done at the file system level below the VM, the

technique is guest-OS-agnostic. Such a design could provide the basis for a business

model in which multiple client companies outsource their disaster recovery capability

to a disaster recovery hot-site that houses multiple physical backup machines, one for

each client.

Douceur and Howell [29] describe how VMMs can be used to ensure that VMs

satisfy determinism and thereby enable state machine replication at the VM level rather

than the application level. Specifically, they describe how a VM’s virtual disk and clock

can be made deterministic with respect to the VM’s execution. The design relieves the

application programmer of the burden of structuring the application as a deterministic

state machine. Their work is similar to Bressoud and Schneider’s approach [15] of

using a VMM to resolve non-determinism. However, the difference lies in the fact

that whereas Bressoud and Schneider’s approach resolves non-determinism using the

results of the primary machine’s computation, Douceur and Howell’s design resolves

non-determinism a priori by constraining the behavior of the computation.

Dunlap et al. describe ReVirt [31] for VM logging and replay. ReVirt encapsulates

the OS as a VM, logs non-deterministic events that affect the VM’s execution, and

uses the logged data to replay the VM’s execution later. Such a capability is useful

to recreate the effects of non-deterministic attacks, as they show later in [59]. Their

replay technique is to start from a checkpoint state and then roll forward using the log

to reach the desired state.

Joshi et al. [59] combine VM introspection with VM replay to analyze whether a

vulnerability was activated in a VM before a patch was applied. The analysis is based

on vulnerability-specific predicates provided by the patch writer. After the patch has

been applied, the same predicates can be used during the VM’s normal execution to

detect and respond to attacks.

Backtracker [64] can be used to identify which application running inside a VM was

exploited on a given host. Backtracker consists of an online component that records OS

objects (such as processes and files) and events (such as read, write, and fork), and an

offline component that generates graphs depicting the possible chain of events between

the point at which the exploit occurred and the point at which the exploit was detected.

An extension of Backtracker [66] has been used to track attacks from a single host

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

13

at which an infection has been detected to the originator of the attack and to other hosts

that were compromised from that host. The extension is based on identifying causal

relationships, and has also been used for correlating alerts from multiple intrusion de-

tection systems.

King et al. [65] describe the concept of time-traveling virtual machines (TTVMs),

in which VM replay is used for low-overhead reverse debugging of operating systems

and for providing debugging operations such as reverse break point, reverse watch

point, and reverse single step. Combining efficient checkpointing techniques with Re-

Virt, TTVMs can be used by programmers to go to a particular point in the execution

history of a given run of the OS. To recreate all relevant state for that point, TTVMs

log all sources of non-determinism.

Garfinkel and Rosenblum [40] introduced the idea of hypervisor-based intrusion

detection, and pointed out the advantages of this approach and its applicability not

only for detection, but also for protection. Their Livewire system uses a modified

VMware workstation as hypervisor and implements various polling-based and event-

driven sensors. Compared with Livewire, our X-Spy system employs more extensive

detection techniques (e.g., by checking not only processes, but also kernel modules

and file systems) and protection techniques (such as pre-checking and white-listing of

binaries, and kernel sealing) with an explicit focus on rootkit detection. In addition,

X-Spy enables easy forensic analysis.

Zhang et al. [132] and Petroni et al. [85] use a secure coprocessor as the basis for

checking the integrity of the OS kernel running on the main processor. However, as the

coprocessor can only read the memory of the machine monitored, only polling-based

intrusion detection is possible. In contrast, X-Spy can perform both polling-based

and event-driven intrusion detection. Specifically, it can intercept and deny certain

requested actions (such as suspicious system calls), and therefore has the capability to

not only detect but also protect.

Laureano et al. [71] employ behavior-based detection of anomalous system call se-

quences after a learning phase in which “normal” system calls are identified. Processes

with anomalous system call sequences are labeled suspicious. For these processes, cer-

tain dangerous system calls will in turn be blocked. The authors describe a prototype

based on a type-II hypervisor, namely, User-Mode Linux (UML) [28].

The ISIS system of Litty [75] is also based on UML. ISIS runs as a process in the

host operating system and detects intrusions in the guest operating system by using

the

ptrace

system call for instrumenting the guest UML kernel. Unlike X-Spy, ISIS

focuses mostly on intrusion detection and not protection.

Jiang et al. [57] describe the VMwatcher system, in which host-based anti-malware

software is used to monitor a VM from within a different VM. X-Spy and VMwatcher

are similar in that both use the hypervisor as a bridge for cross-VM inspection, and both

tackle the semantic gap problem. While their work focuses on bridging the semantic

gap on a multitude of platforms (hypervisors and operating systems), our work focuses

on employing more extensive detection mechanisms (such as checking not only pro-

cesses, but also kernel modules, network connections, and file systems) on a single

hypervisor. In contrast to X-Spy, VMwatcher does not include event-driven detection

methods or protection techniques.

The Strider GhostBuster system by Beck et al. [12] is similar to X-Spy in that both

use a differential view of system resources. Strider GhostBuster compares high-level

information (such as information obtained by an OS command) with low-level infor-

mation (e.g., kernel information) to detect malicious software trying to hide system

resources from the user and administrator. However, such a comparison has limited

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

14

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

effectiveness as detection takes place in the same (potentially compromised) OS envi-

ronment. Beck et al. also compare the file system view obtained from a potentially

compromised OS with the view obtained from an OS booted from a clean media. The

disadvantage of such an approach is that it requires multiple reboots and is limited to

checking only persistent data (such as file system) and not run-time data.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

Chapter 2

Security Policies for Virtual

Data Centers

Data centers provide computing and storage services to multiple customers. Customers

are ideally given dedicated resources such as storage and physical machines. In the

physical cages approach, only few resources such as the Internet connection may be

shared between multiple customers. For cost efficiency, our logical cages approach

promotes securely extending sharing to other resources such as storage and networks.

This is enabled by preventing unauthorized information exchange via shared resources.

To model and implement the logical caging approach, we introduce a domain-based

security model for enforcing unified security policies in virtualized data centers. We

focus on isolation policies that mimic physical separation of data center customers. Our

goal is to logically separate networks, storage, VMs, users, and other virtual devices of

one customer from another customer. For our purposes, we define domain isolation as

the ability to enforce security policies within a domain independently of other domains

that may co-exist on the same infrastructure and interact with that domain. The core

idea is to use this isolation property as a foundation for guaranteeing desired security

properties within each virtual domain while managing shared services under mutually

agreed policies.

We now explain the policies that describe this controlled information exchange in a

virtualized data center. In Section 3 we describe the individual components that enable

us to enforce these policies.

2.1

High-level Policy Model

The security model includes two high-level policies defining the security objectives

that must be provided by the underlying infrastructure:

Inter-TVD Policy: By default, each TVD is isolated from the outside world. The

high-level information-exchange policy defines whether and how information

can be exchanged with other TVDs. If no information flow with other TVDs is

permitted, no resources can be shared unless the data center operator can guar-

antee that the isolation is preserved. If information flow to/from other TVDs is

allowed, sub-policies further qualify the exact information flow policy for the

individual resources.

15

16

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

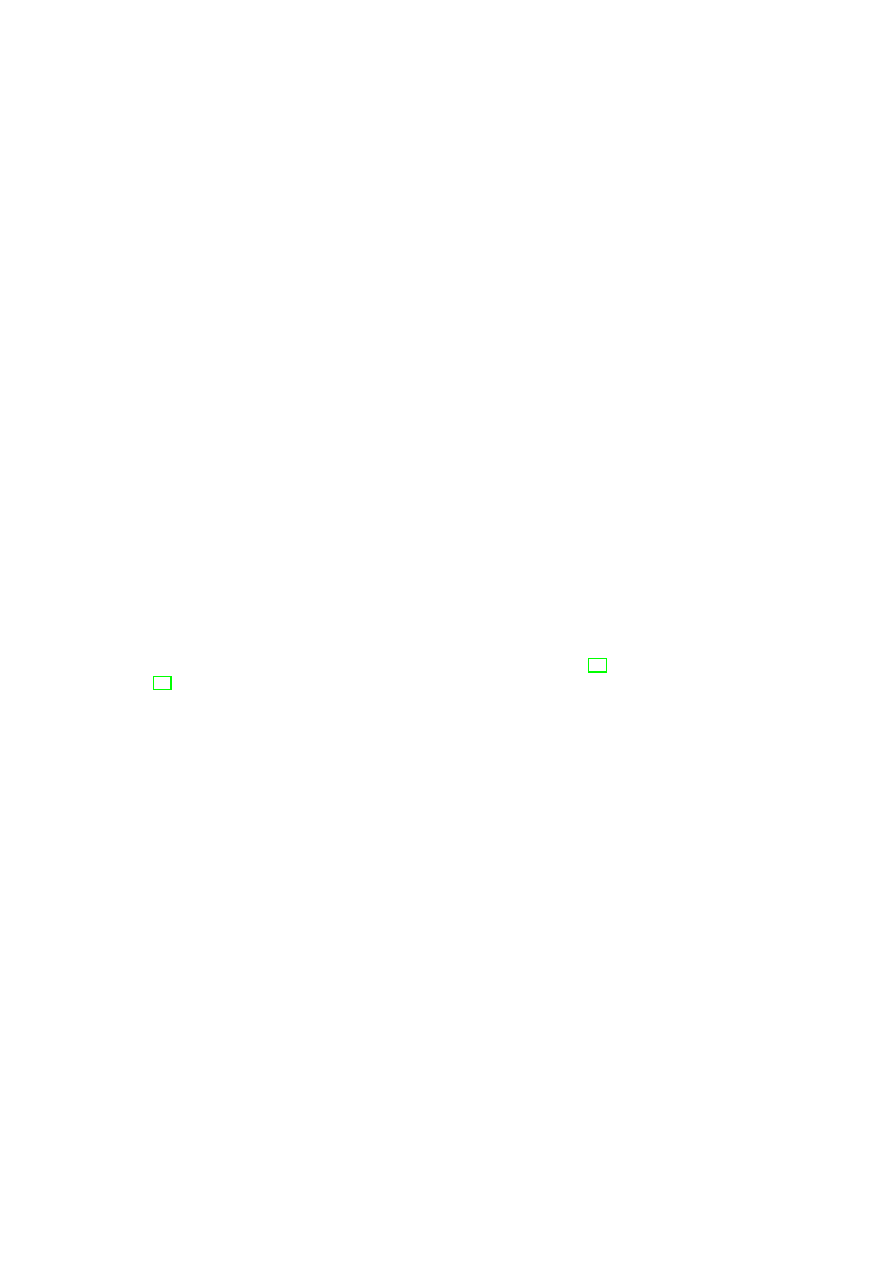

VPE

1

(Role

1)

Roles of Single-Domain

Machines (subjects):

Multi-Domain Resources

(objects)

Operations[parameter]

(mode):

VPE

2

(Role

2)

VPE

3

(Role

3)

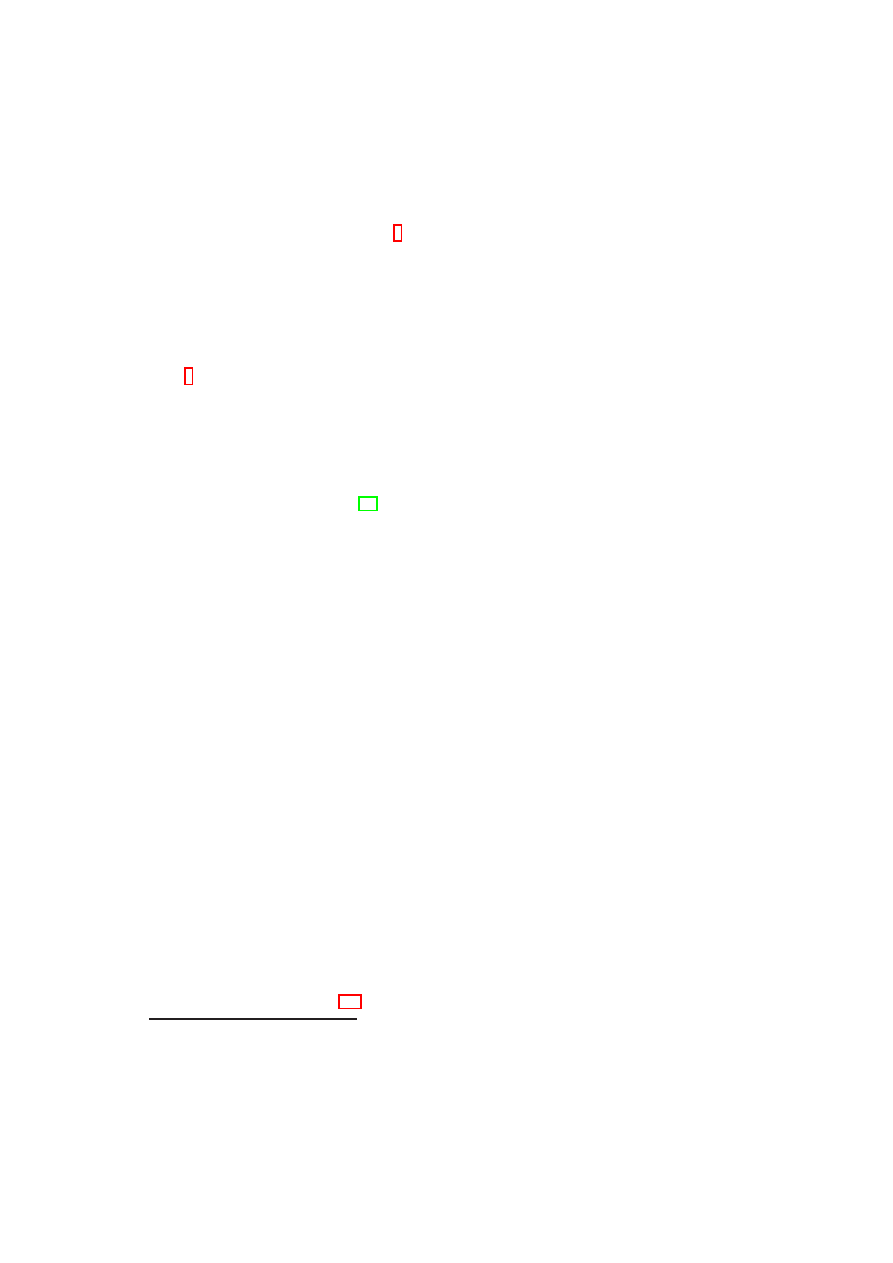

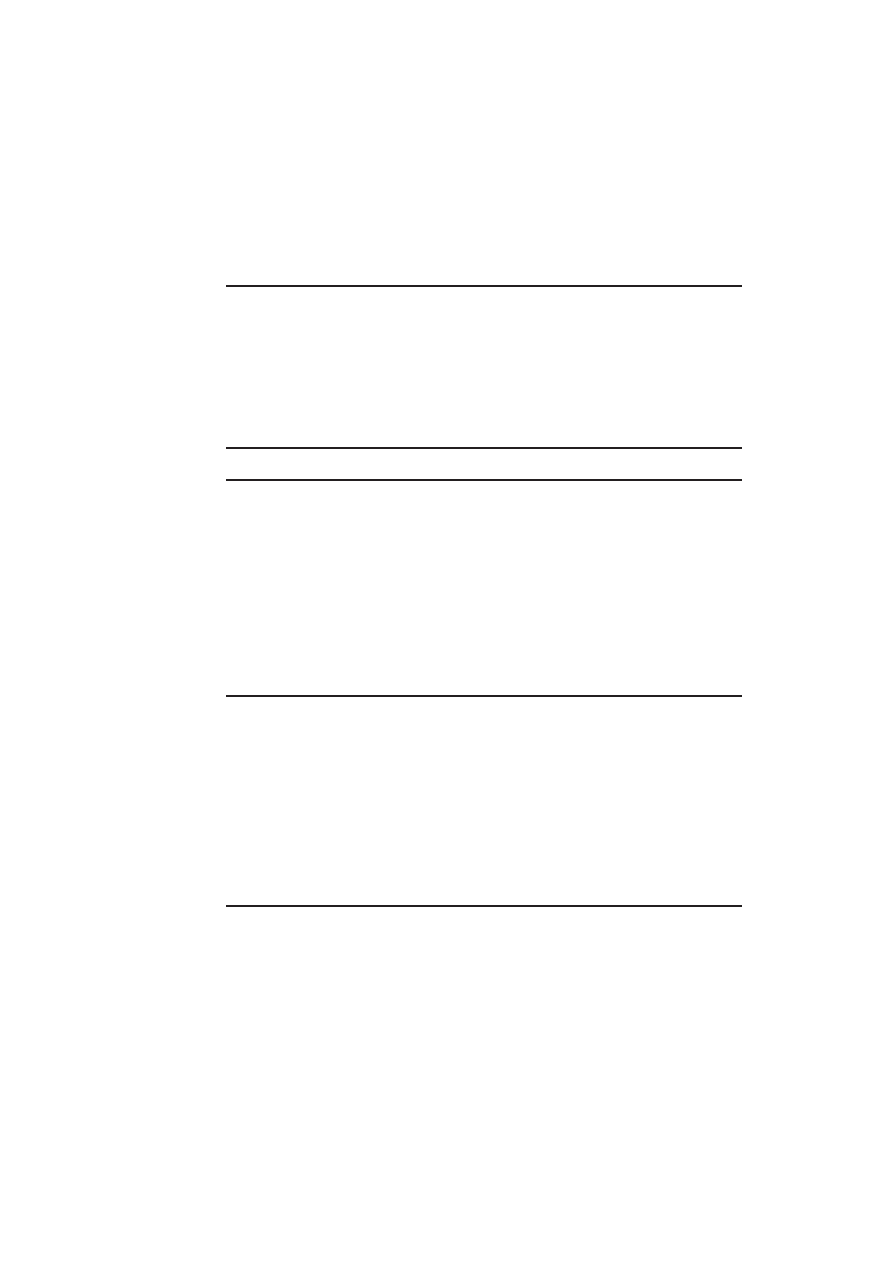

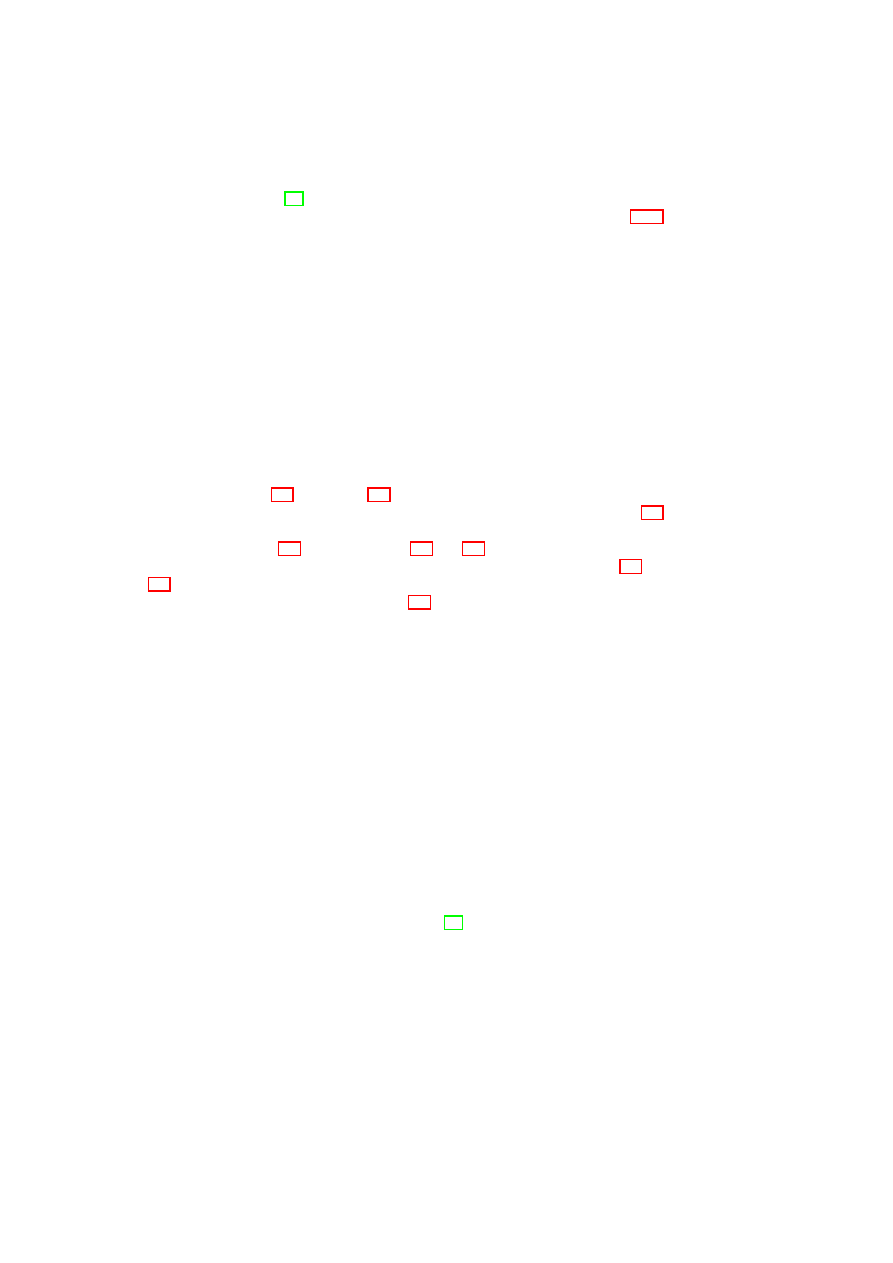

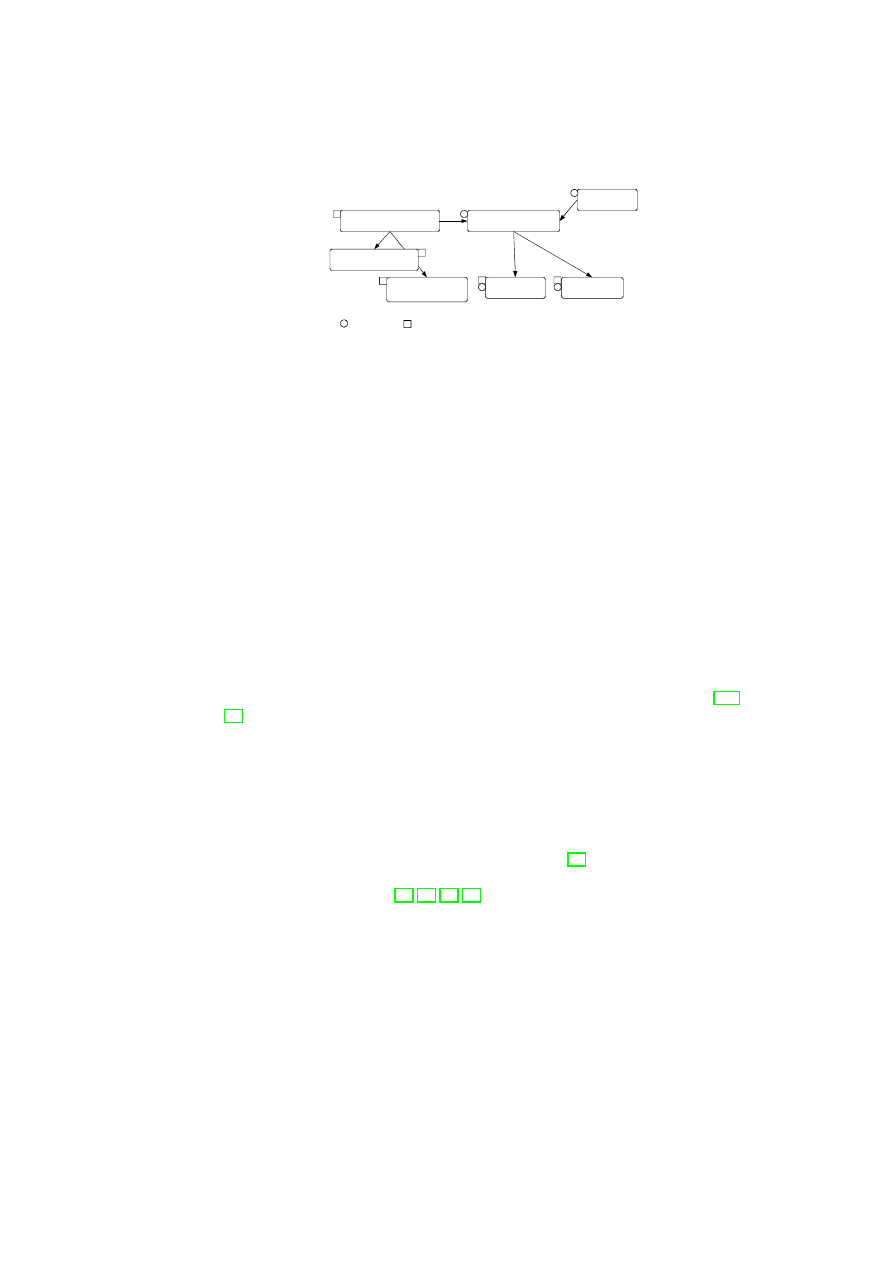

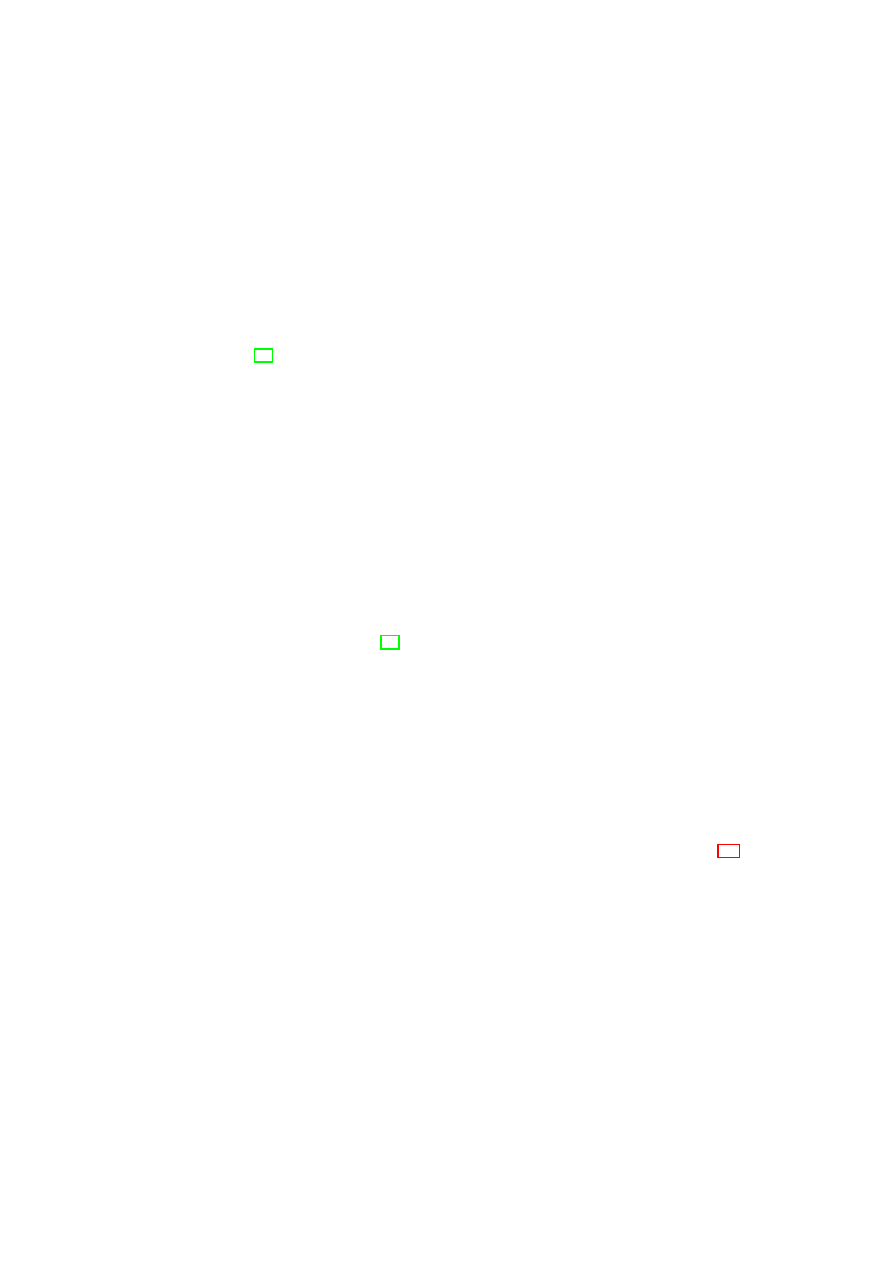

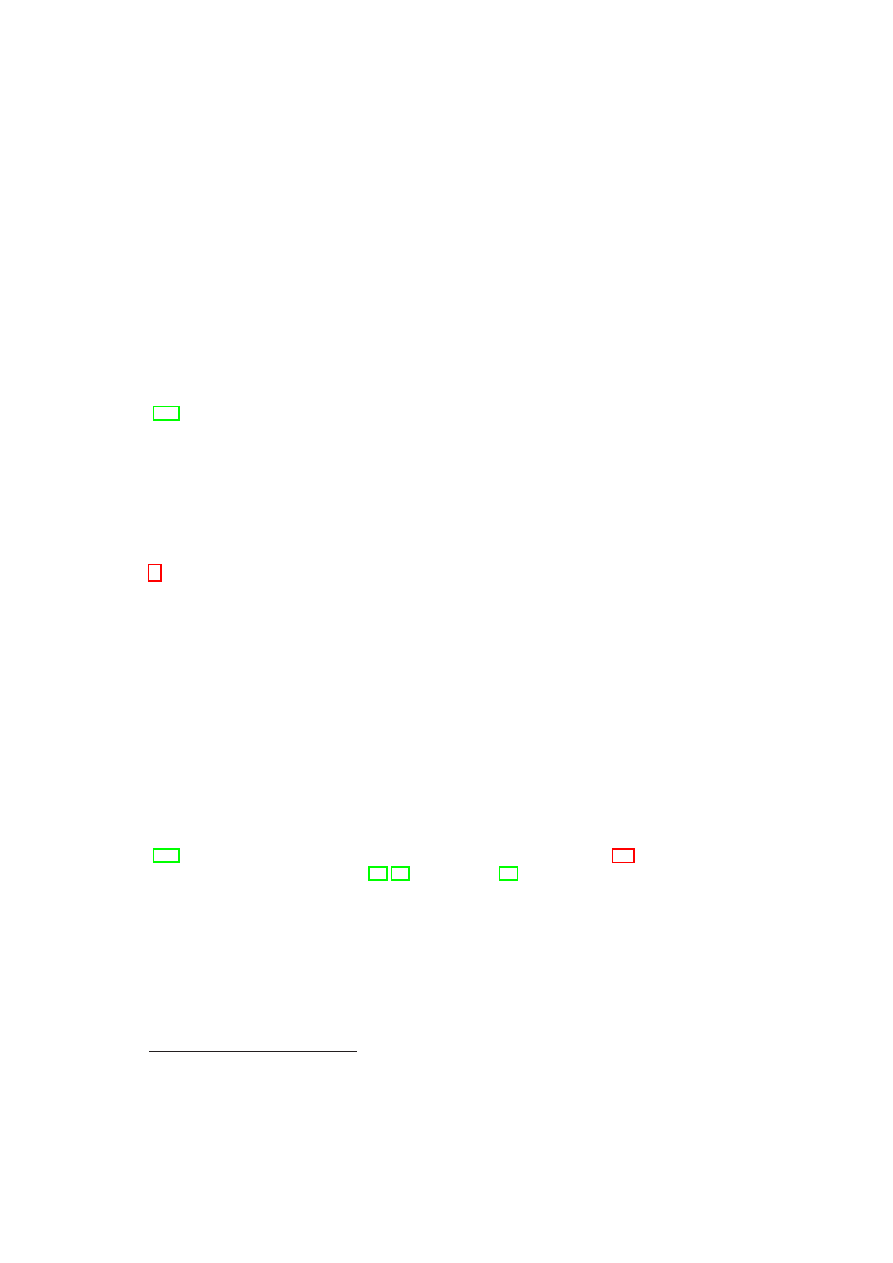

Figure 2.1: Policy Model: Single-TVD Machines operate on Shared Resources

Intra-TVD Policy: Domain policies allow TVD owners (e.g., customers) to define the

security objectives within their own TVDs. Examples of such policies include

how the internal communication is to be protected and under what conditions

resources (e.g., storage, machines) can join a particular TVD.

We further define more fine-grained policies by the use of roles that can be assigned

to any member VM, say to a member machine. This allows us to define and enforce

role-based policies within and across TVDs. For example, machines can now assume

internal or gateway roles with corresponding permissions; while a workstation may not

be allowed to connect to non-TVD networks, machines with the “firewall” role can be

allowed to connect to selected other networks. Figure 2.1 depicts three VMs in a single

TVD. Each VM is given different levels of access to resources with respect to their role

for that TVD.

2.2

Security Objectives and Policy Enforcement Points

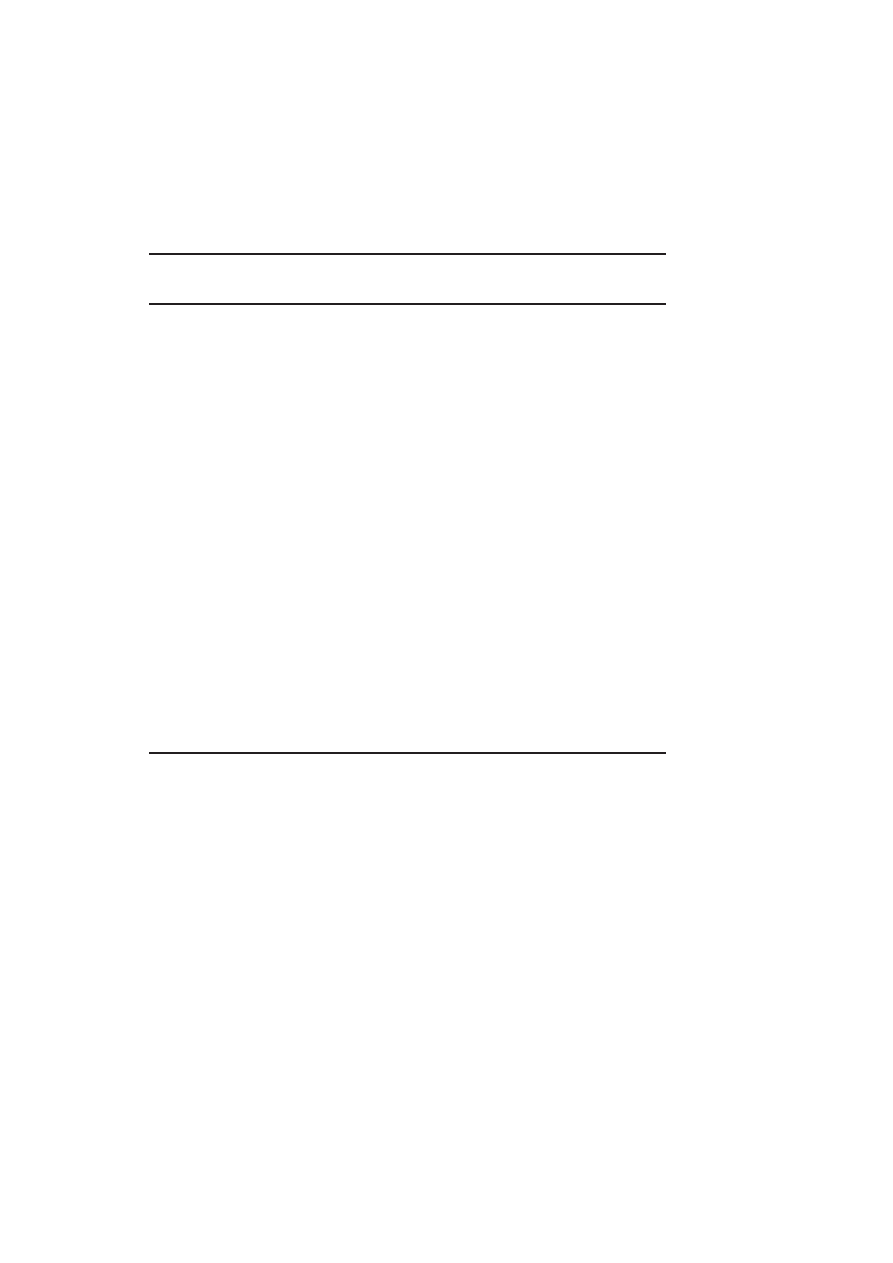

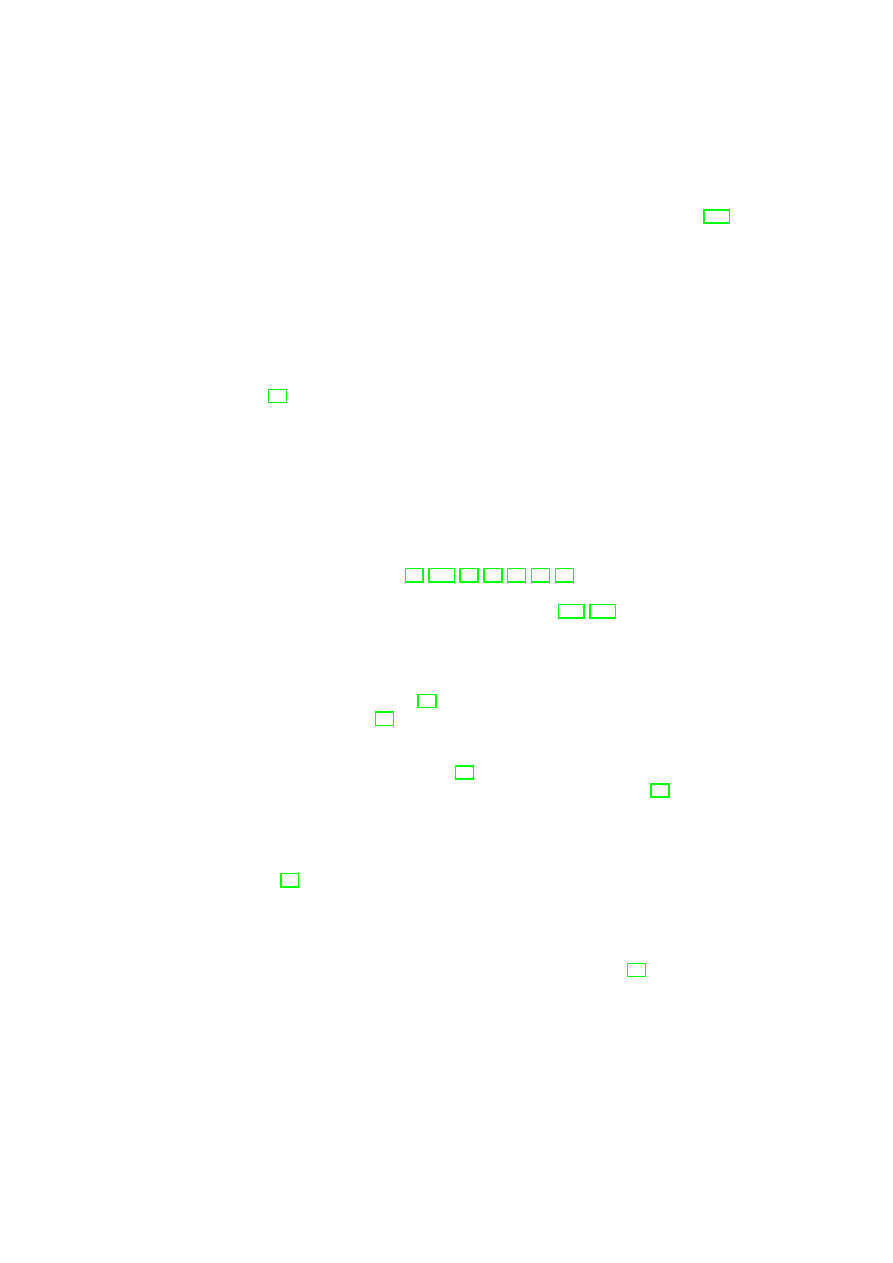

Policies are enforced for all shared resources in the TVD infrastructure (see Figure 2.2).

The basis of all policies is isolation at the boundary of each TVD. By default, each

resource is associated with a single domain. This achieves a basic level of isolation. If

information flow between TVDs is allowed, resources can also be member of different

TVDs. For example, a TVD can allow certain types of resources on certain hosts to

provide services also to other domains. Each TVD defines rules regarding in-bound

and out-bound information flow for restricting communication with the outside world.

The underlying policy-enforcement infrastructure then has to ensure that only resources

trusted by all TVDs are shared.

Architecturally, there are two ways of enforcing such rules, depending on the trust

between the TVDs. The first method involves two shared resources connected by an

intermediate domain. In this method, each TVD enforces its side of the flow control

by means of its own shared resource. An example of this type of connection is the one

that exists between TVD A and TVD B in Figure 2.2. This method is used when the

trust level between TVD A and TVD B is low, and the two cannot agree on a shared

resource that is mutually trusted. The shared resource in TVD A will enforce TVD A’s

policies regarding in-bound traffic from TVD B, even if the shared resource in TVD B

does not enforce TVD B’s policies regarding out-bound traffic. The shared resources

can be thought of being a part of a “neutral” TVD (TVD AB) with its own set of mem-

bership requirements. The second method that requires shared trust is to establish one

or more shared resources that are accessed from both TVDs while allowing controlled

information flow. This mechanism is used between TVD B and TVD C in Figure 2.2.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 2. SECURITY POLICIES FOR VIRTUAL DATA CENTERS

17

TVD A

TVD B

Internet

Membership

Constraints

Flow Enforcement

by Single-trust

Shared Resources

Isolation

Enforcement

TVD C

Flow Enforcement

by Mutually Trusted

Shared Resources

TVD AB

Shared Resource

Internal

Trusted/Shared

Resource Types:

Machine in Role

Figure 2.2: Usage Control for Shared Resources: Machines use resources belonging to

TVDs.

From / to

D

I

D

D

D

i

D

I

1

1

0

D

D

0

1

1

D

i

0

1

1

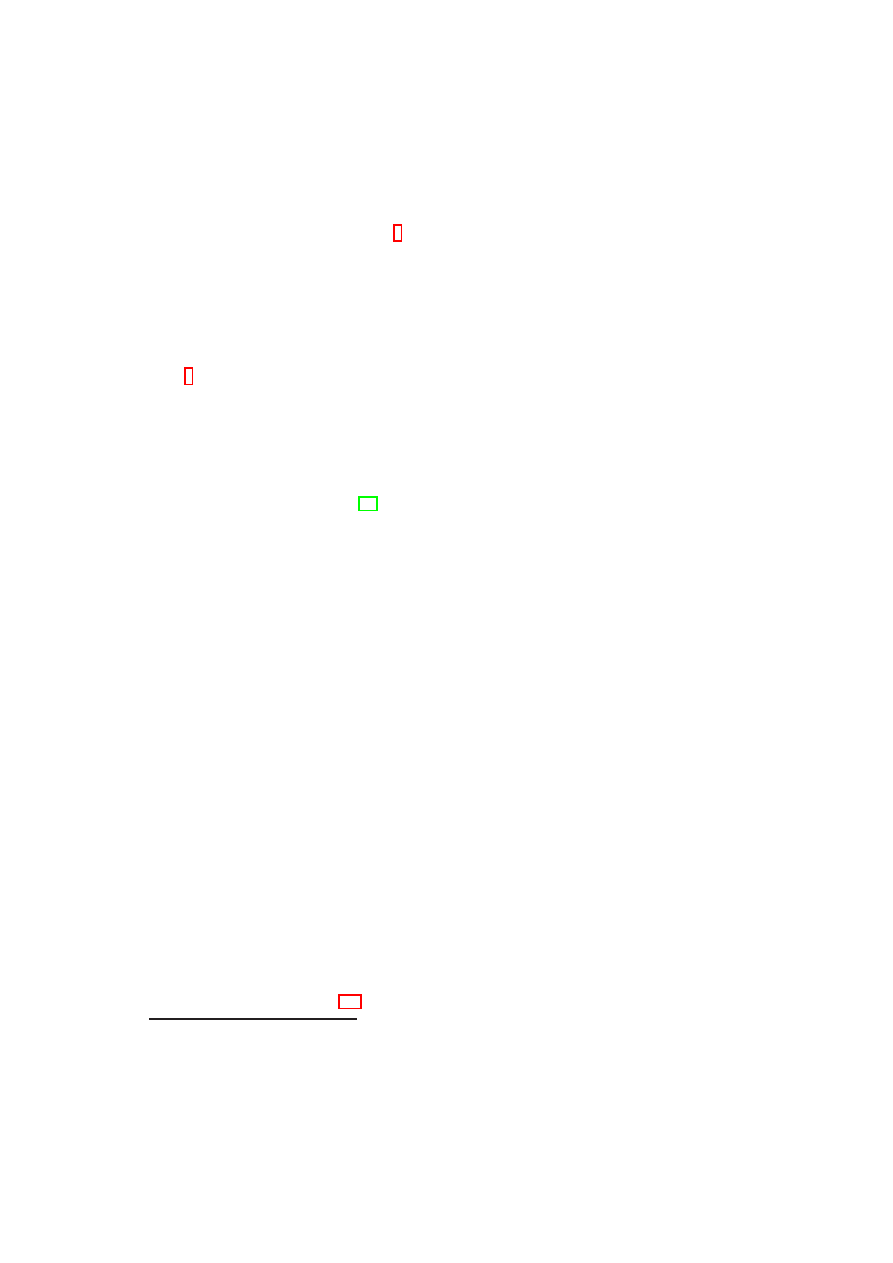

Table 2.1: High-level Directed Flow Control Matrix for Internet

D

I

, DMZ

D

D

, and

Intranet

D

i

.

Security within a virtual domain is finally obtained by defining and enforcing mem-

bership requirements that resources have to satisfy prior to being admitted to the TVD

and for retaining the membership. This may also include special requirements for

different machine types: Because, for example, shared resources play a key role in

restricting information flow between TVDs, the software on those machines may be

subject to additional integrity verification as compared to the software on regular VMs.

2.2.1

Permitted Flows in Data Centers

At a high level flow control policies define the allowed traffic flow between two do-

mains and how the domains should be protected. Allowed information flows can be

represented by a simple flow control matrix as depicted in Table 2.1, where

1

allows in-

formation flow and

0

denies it. This example implements a basic enterprise policy that

regulates incoming flow from untrusted outside entities (

D

I

) through a semi-trusted

intermediary domain (

D

D

), and disallows any outgoing flow. Note that this matrix is

directional, i.e., it might allow flows in one direction but not in the opposite direction.

If flow policies between two TVDs are asymmetric, only shared resources that can

enforce these policies are permitted.

Device-specific policies (network, storage) can then refine these basic rules. If an

information flow is not permitted, then also shared resources are not permitted between

these TVDs.

2.2.2

Membership Requirements

Membership requirements define under what conditions resources may join a domain.

From a high-level policy perspective, several criteria can be applied to decide whether

an entity is allowed to join a domain, for example:

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

18

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

•

Certificates: An authority defined by the TVD policy can certify a resource to

be member of a TVD. A common example is that an enterprise issues machine

certificates to allow its machines to join the corporate network.

•

Integrity Proofs: A resource may prove its right to join a TVD using integrity

proofs. It may, e.g., prove that the integrity of the base operating system is intact

and that all required patches have been applied [103].

•

User-identity: Only machines operated by a certain user can join. This can be

validated by user-name/password or by a cryptographic token.

In general, a resource may need to show proper credentials to prove that it fulfills

certain properties before allowing the resource to join the TVD [96]. More formally,

a machine

m

is permitted to join a TVD

t

if and only if there is at least one property

of

m

that satisfies each security requirement of

t

. The validations of these properties

are usually done on a per-type and role basis. For example, requirements for a shared

resource are usually stronger than the requirements for a TVD-internal resource.

2.3

Example Policy Refinements for Protected Re-

sources

Policies alone are not sufficient to enforce customer separation in a virtualized data

center. Ultimately, one needs to transform these policies into data center configurations

and security mechanisms specific to each resource (e.g., VLAN configuration). To do

so, we introduce a policy management scheme that accepts high-level domain policies

and transforms them into resource-specific low-level policies and configurations. In

Section 11 we demonstrate a prototype based on this architecture that enforces high-

level TVD policies by lower-level network and infrastructure configurations, which is

then deployed onto each physical platform to assist customer separation.

2.3.1

Refinement Model

The high-level policy defines the basic flow control, protection, and admission require-

ments. We aim at enforcing these high-level objectives throughout all resources in the

data center.

In the high-level model, flow control across customer domains is specified by a

simple matrix such as the one in Figure 2.1 that defines whether flows are permit-

ted. This however is not sufficiently fine-grained for specific resources. TVDs, for

example, want to restrict their flow across boundaries by means of firewall rules. As

a consequence, we need to introduce a notion of policy refinement [127], because as

translation moves towards lower levels of abstraction, it will require additional infor-

mation (e.g., physical arrangement of the data center, “subjective” trust information) to

be correctly and coherently executed.

Our notion of policy refinement mandates the enforcement of “no flow” objec-

tives while allowing each resource to refine what it means so that flows are permitted

and how exactly unauthorized flows shall be prevented. Similarly, we do not allow

resources to deviate from the confidentiality/integrity objectives; however, certain re-

sources can be declared trusted so that they may enforce these objectives without addi-

tional security mechanisms such as encryption or authentication.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 2. SECURITY POLICIES FOR VIRTUAL DATA CENTERS

19

Flow to

→

D

I

D

D

D

i

Enforced by

↓

gate

internal

gate

internal

gate

internal

D

I

1

1

P

ID

0

0

0

D

D

0

0

1

1

P

Di

0

D

i

0

0

P

Di

0

1

1

Table 2.2: Example Network Flow Control Policy Matrix for Three TVDs.

Similarly, the fact that admission is restricted is then refined by specific admission

control policies that are enforced by the underlying infrastructure.

Note that conflict detection and resolution [127, 76] can later be used to extend this

simple notion of refinement. However, we currently stay on the safe side: Connections

are only possible if both TVDs allow them. Similarly, if one domain requires confi-

dentiality, information flows are only allowed to TVDs that also require confidentiality.

Other schemes for more elaborate flow control have been proposed in [33, 17, 32, 38].

2.3.2

Network Security Policies

We now survey the policy model of [18] and show how it related to the corresponding

high-level policy. Similar to our high-level policies, there are two types of policies

governing security in the network. The first limits flow between networks, whereas the

second defines membership requirements to each network.

Network Security Policies across TVDs

A policy covers isolation and flow control

between TVDs as well as integrity and confidentiality against outsiders. These basic

security requirements are then mapped to appropriate policies for each resource. For

example, from a networking perspective, isolation refers to the requirement that, unless

the inter-TVD policies explicitly allow such an information flow, a dishonest VM in one

TVD cannot (1) send messages to a dishonest VM in another TVD (information flow),

(2) read messages sent on another TVD (confidentiality), (3) alter messages transmitted

on another TVD (data integrity), and (4) become a member of another TVD network

(access control).

TVDs often constitute independent organizational units that may not trust each

other. If this is the case, a communication using another TVD can be established (see

the communication between TVD A and B in Figure 2.2). The advantage of such a

decentralized enforcement approach is that each TVD is shielded from security failures

in other TVDs, thus contribute to domain isolation. For networks, the main inter-

TVD security objectives are controlled information sharing among the TVDs as well

as integrity and confidentiality protection of the channel.

While the high-level model specifies whether information exchange is allowed be-

tween domains or not, we now refine this policy as follows:

•

We refine the active elements (subjects) of given domains by introducing roles

that machines can play. This allows us to set different permissions to boundary

machines as compared to internal machines.

•

In case information flow is permitted in principle, we refine the network secu-

rity policies by introducing flow control rules that can further restrict the actual

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

20

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

information exchange. A network policy may disallow flow even though it has

been allowed from a high-level policy perspective.

An information flow control matrix is a simple way of formalizing these network con-

nectivity objectives. Table 2.2 shows a sample matrix for the three example TVDs

introduced earlier. Each matrix element represents a policy specifying permitted con-

nections between a pair of TVDs, as enforced by one of the TVDs. The depicted

policies

P

x

that limit information exchange will be implemented by firewall rules that

are used to program the boundary firewalls. The

1

values along the matrix diagonal

convey the fact that there is free information exchange within each TVD. The

0

val-

ues in the matrix are used to specify that there should be no direct information flow

between two TVDs, e.g., between the Internet

D

I

and the intranet

D

i

. Care must be

taken to ensure that the pairwise TVD policies specified in the information flow control

matrix do not accidentally contradict each other or allow undesired indirect flow.

Intra-TVD Network Security Policy

Within a TVD, all VMs can freely commu-

nicate with each other while observing TVD-specific integrity and confidentiality re-

quirements. For this purpose, the underlying infrastructure may ensure that intra-TVD

communication only takes place over an authenticated and encrypted channel (e.g.,

IPSec), or alternatively, a trusted network

1

.

2.3.3

Towards Storage Security Policies

Virtual disks attached to VMs must retain the advantages offered by storage virtualiza-

tion while at the same time enforcing TVD security policies. Advantages of storage

virtualization include improved storage utilization, simplified storage administration,

and the flexibility to accommodate heterogeneous physical storage devices. Similar to

network, we now show a refinement of the high-level TVD policies into access control

policies for VMs in certain roles to disks belonging to a domain.

Inter-TVD Storage Security

A virtual disk has a single label corresponding to the

TVD it belongs to. Whenever a virtual machine operates on virtual storage, the global

flow matrix described in Section 2 needs to be satisfied. For flexibility, each TVD can

define a set of storage policies that govern usage and security of its storage. A single

policy is then assigned to and enforced for each storage volume.

As the starting point of our storage policy refinement, we define a maximum per-

mission policy as follows:

1. Any machine in domain

TVD

A

playing any role can write to a disk of domain

TVD

B

iff flow from domain

TVD

A

to domain

TVD

B

is permitted.

2. Any machine in domain

TVD

A

playing any role can read from a disk of domain

TVD

B

iff flow from domain

TVD

B

to domain

TVD

A

is permitted.

3. Any single machine in any domain can read/write mount a blank disk. After data

is written, the disk changes ownership and is now assigned to the domain of the

machine who has written data.

1

A network is called trusted with respect to a TVD security objective if it is trusted to enforce the given

objective transparently. For example, a server-internal Ethernet can often be assumed to provide confiden-

tiality without any need for encryption.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 2. SECURITY POLICIES FOR VIRTUAL DATA CENTERS

21

Flow to

→

D

I

D

D

D

i

Disk

↓

gate

internal

gate

internal

gate

internal

D

I

r/w

r/w

w

0

0

0

D

D

r

0

r/w

r/w

r/w

0

D

i

0

0

r/w

0

r/w

r/w

Blank

r/

0

r/

0

r/

0

w

→

D

I

0

w

→

D

D

0

w

→

D

i

0

Table 2.3: Example of a Refined Disk Policy Matrix for Three TVDs.

Table 2.3 shows the resulting maximum disk access control policy. Actual policies are

then valid with respect to a maximum-permission policy for a domain if they permit

a subset of its permissions. Note that as flow within a domain is always allowed,

this implies that disks of the same domain as the machine may always be mounted

read/write.

Intra-TVD Storage Security

By default, we consider the content of a disk to be

confidential while the storage medium (possibly remote) is deemed to be untrusted. As

a consequence, if a given domain does not declare a given storage medium as trusted,

we deploy whole-disk encryption using a key that is maintained by the TVD infrastruc-

ture.

2

Another aspect reflected in the disk policies is the fact that we have a notion of

blank disks. Once they are written by another domain, they change color, and are then

associated with this other domain while being encrypted under the corresponding key.

In the future, it would be desirable to have integrity-protected storage [24, 89] where

the TVD can validate that its content have not been changed by untrusted entities.

For protecting the data in a particular TVD, virtual storage may in addition specify

which conditions on the system must be satisfied before a disk may be re-mounted by a

VM that has previously unmounted the disk, and whether shared mounting by multiple

systems is allowed. Note that these membership restrictions require bookkeeping of

disks and management of access of VMs to disks.

2

Note that the VM only sees unencrypted storage, i.e., the TVD infrastructure automatically loops in

encryption.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

Chapter 3

Unified Policy Enforcement for

Virtual Data Centers

In this section, we introduce a TVD-based policy enforcement framework that or-

chestrates the deployment and enforcement of the type of policies we presented in

Section 2 across the data center. Existing storage and network virtualization technolo-

gies as well as existing Trusted Computing components (in software and hardware) are

the building blocks of our solution. Our framework (1) combines these technologies to

realize TVDs and (2) orchestrates them using the TVD infrastructure, which provisions

the appropriate security mechanisms.

3.1

TVD Infrastructure

The TVD infrastructure consists of a management layer and an enforcement layer.

The TVD management layer includes TVD masters, proxies, and factories, whereas

the TVD enforcement layer consists of various security services. Each TVD is iden-

tified by a unique TVD Master that orchestrates TVD deployment and configuration.

The TVD Master can be implemented as a centralized entity (as in our prototype de-

scribed in Section 11) or have a distributed fault-tolerant implementation. The TVD

Master contains a repository of high-level TVD policies and credentials (e.g., VPN

keys). The Master also exposes a TVD management API through which the TVD

owner can specify those policies and credentials. In the deployment phase, the TVD

Master first verifies the suitability and capability of the physical host (which we refer to

as pre-admission control). It then uses a generic TVD Factory service to spawn a TVD

Proxy, which acts as the local delegate of the TVD Master dedicated to that particular

host. The TVD Proxy is responsible for (1) translation of high-level TVD policies into

low-level platform-specific configurations, (2) configuration of the host and security

services with respect to the translated policies, and (3) interaction with the security

services in TVD admission and flow control.

Security services implement the security enforcement layer of our TVD infrastruc-

ture. They run in a trusted execution environment on each physical host (e.g., Domain-0

in Xen) and (1) manage the security configuration of the hypervisor, (2) provide secure

virtualization of resources (e.g., virtual devices) to the VMs, and (3) provide support

to TVD proxies in enforcing flow and access control policies within and across TVD

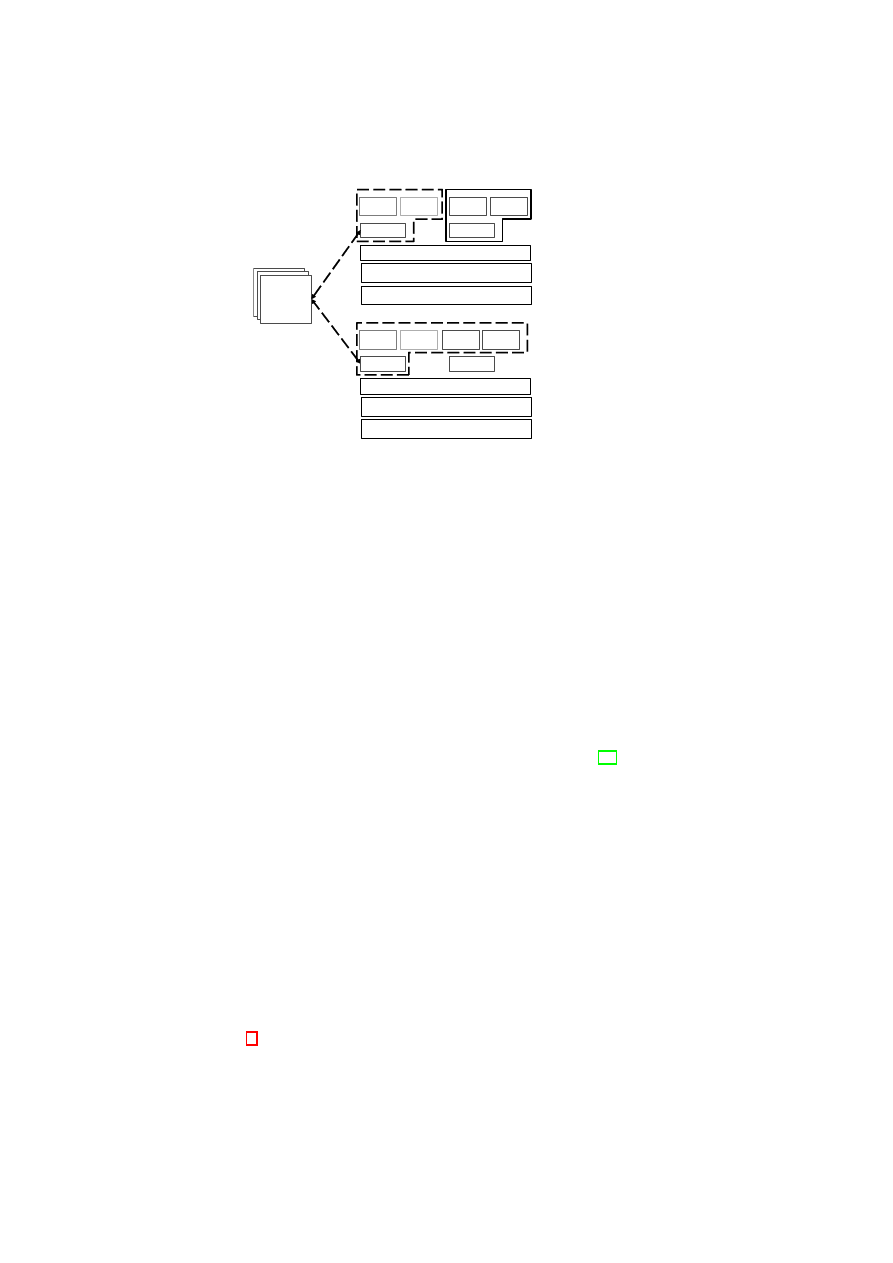

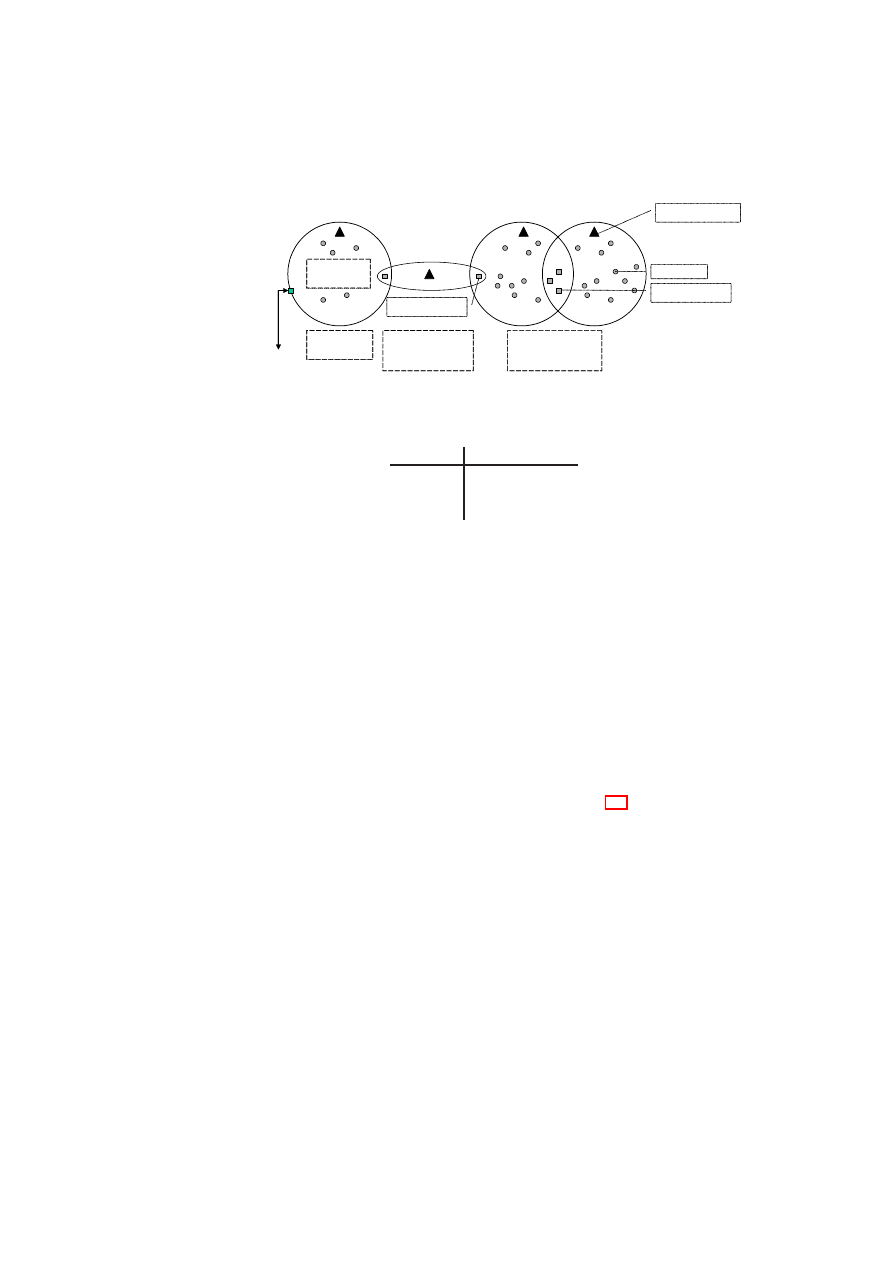

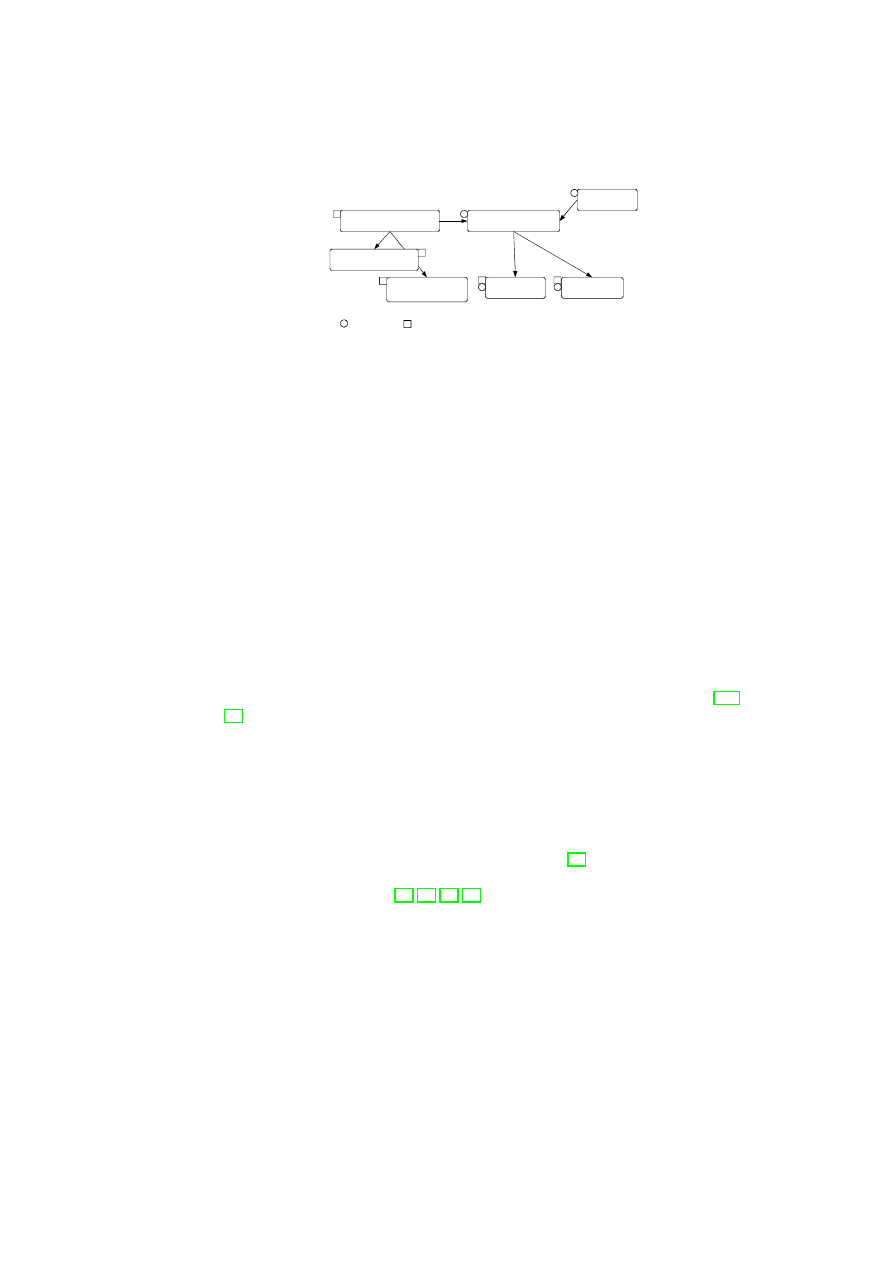

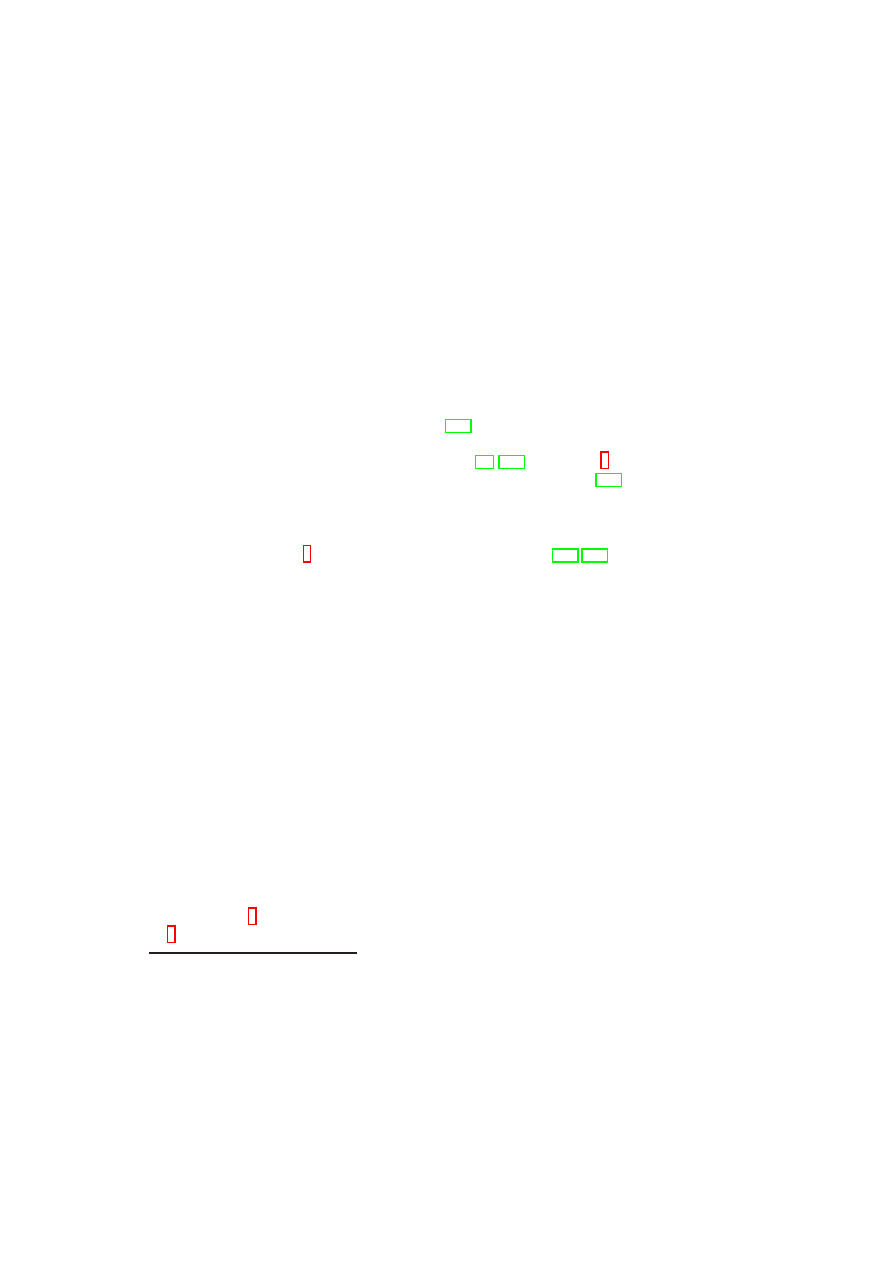

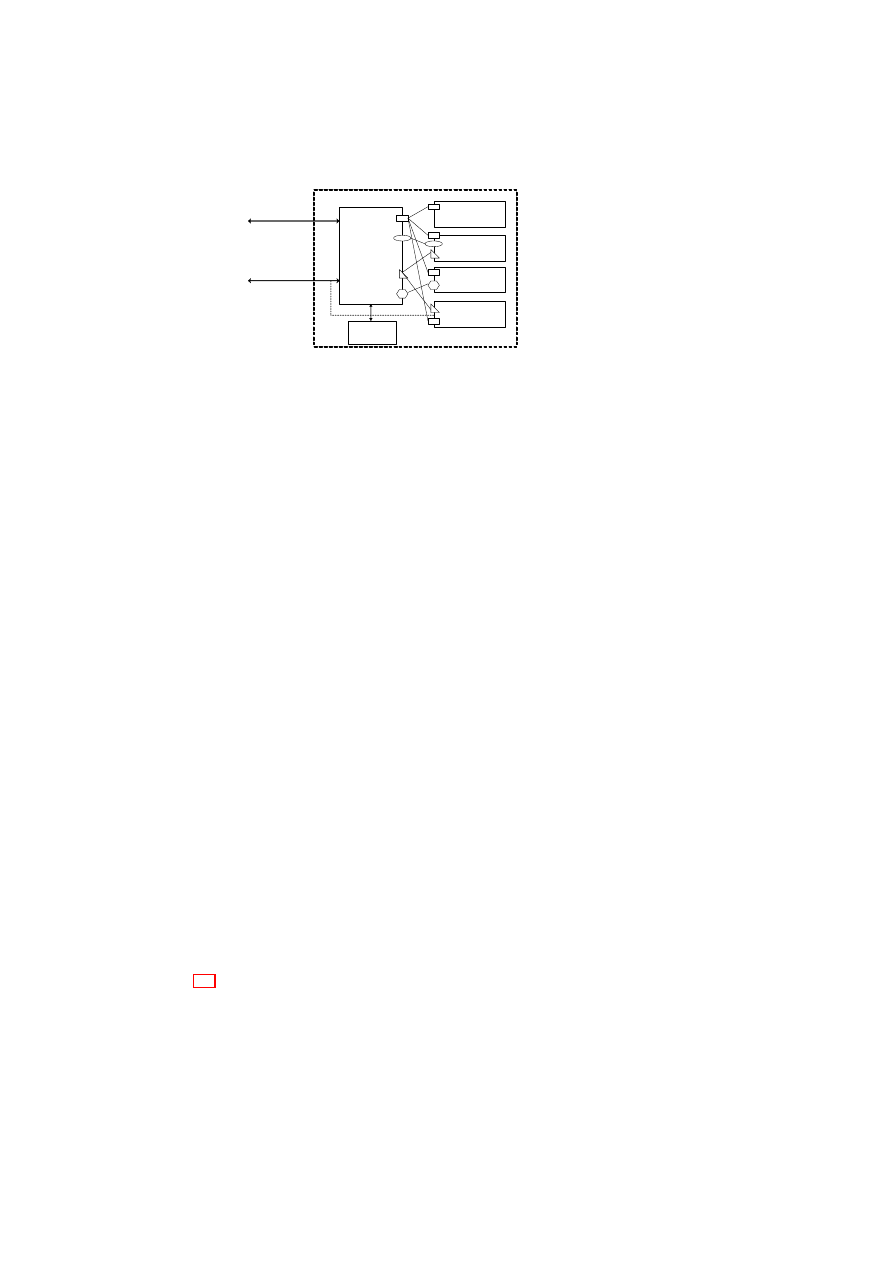

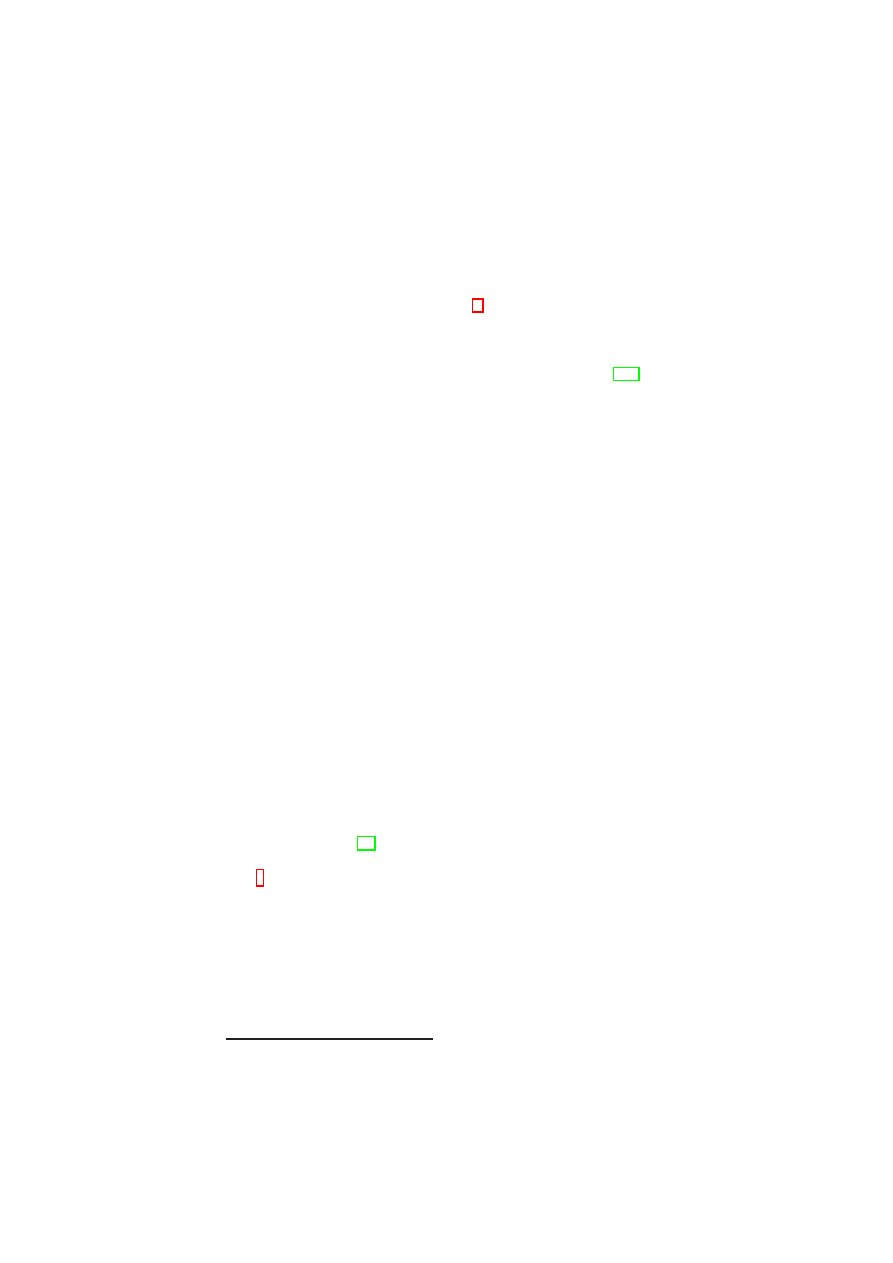

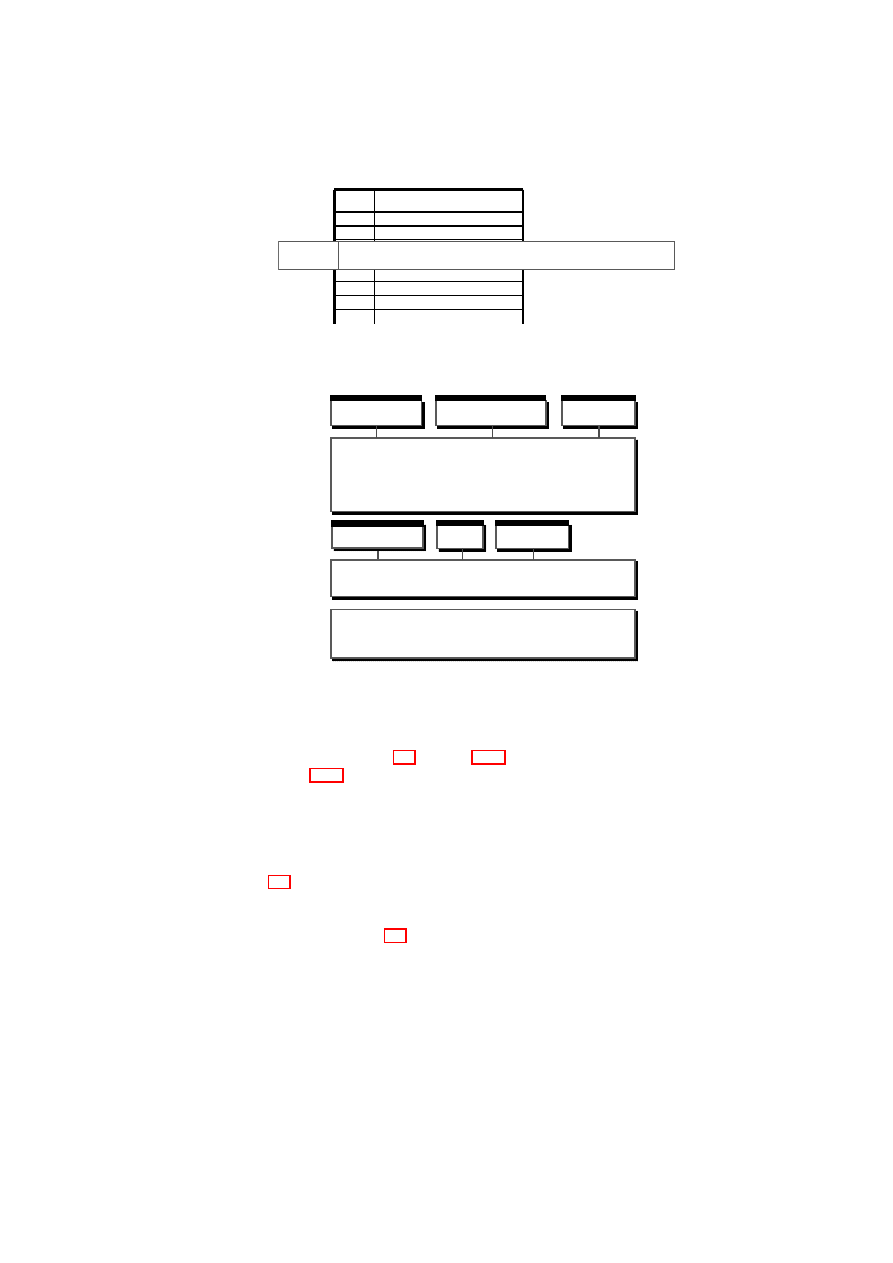

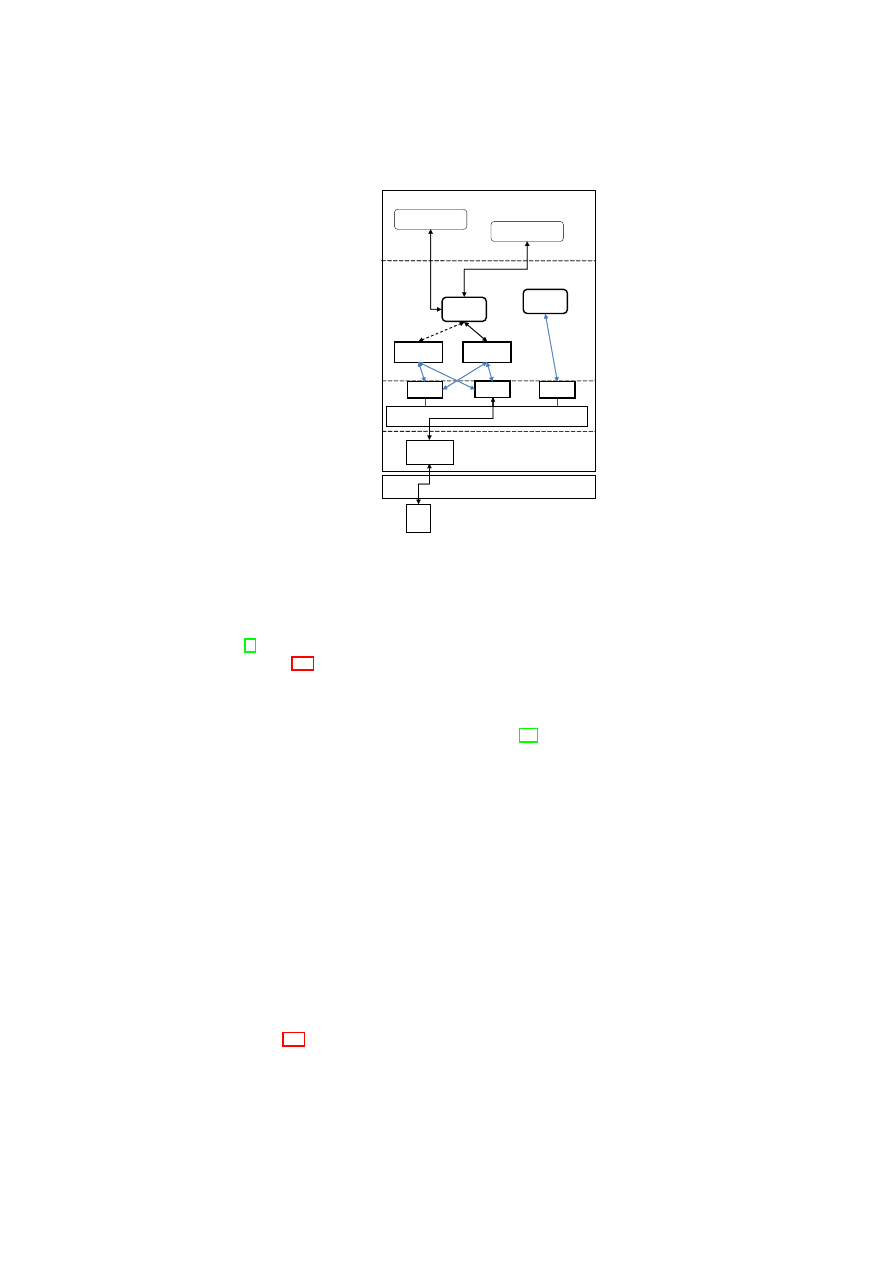

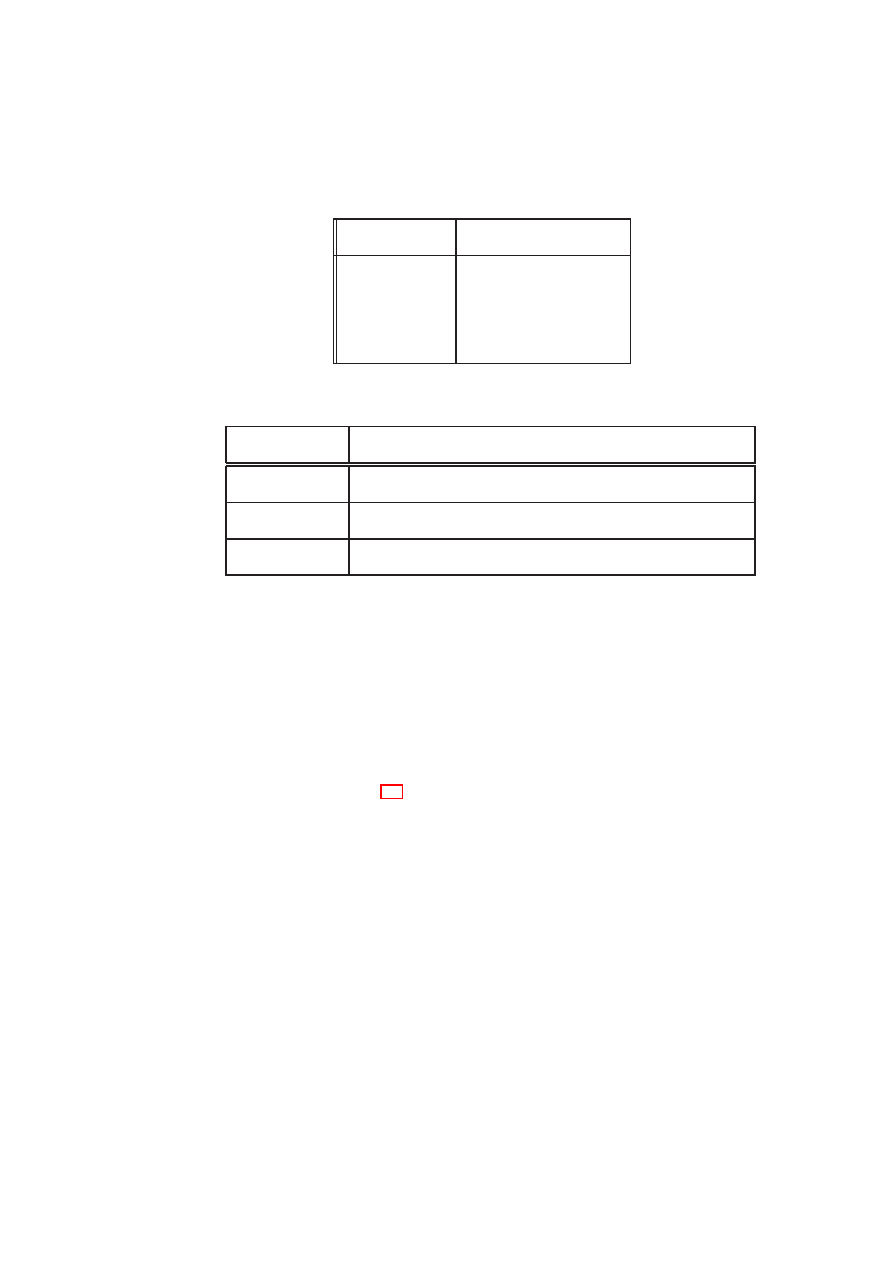

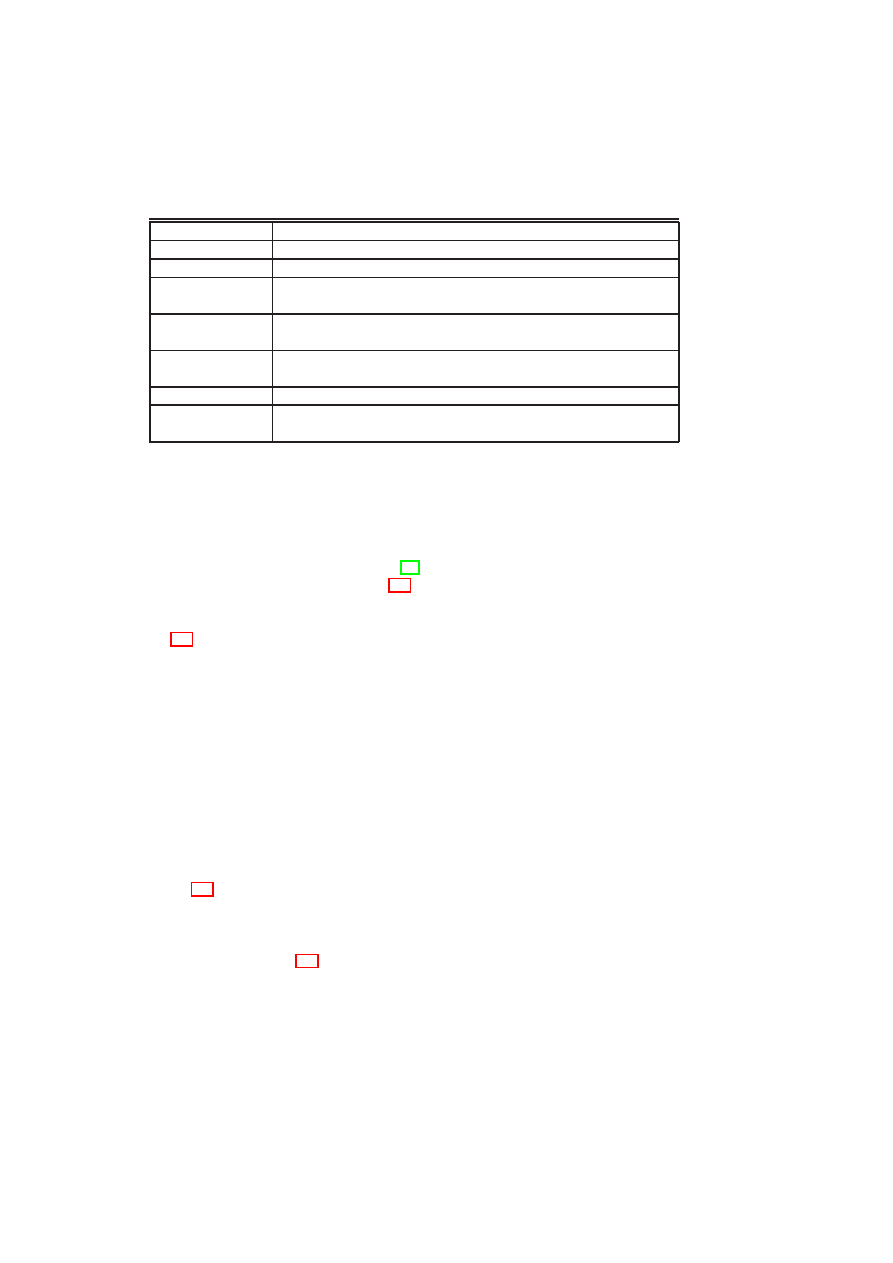

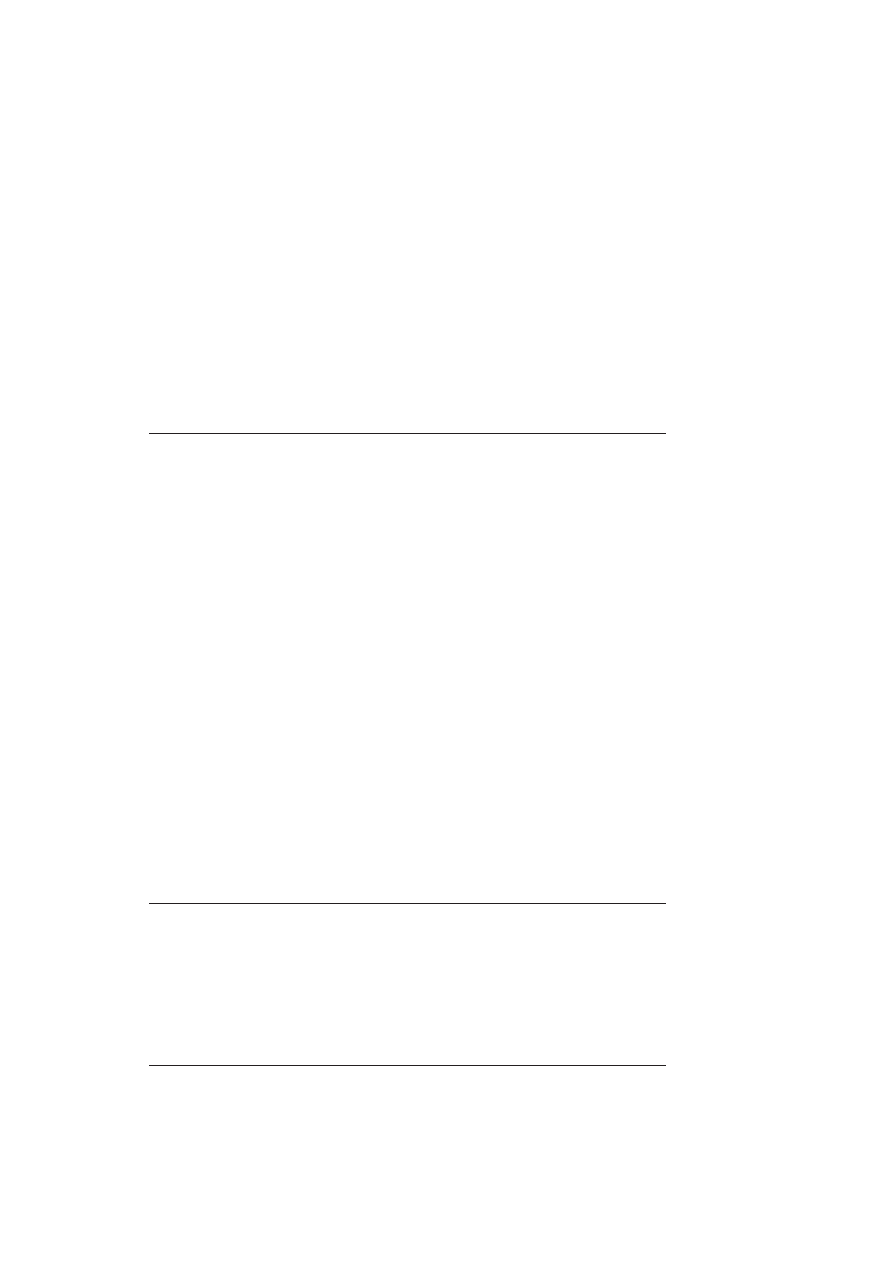

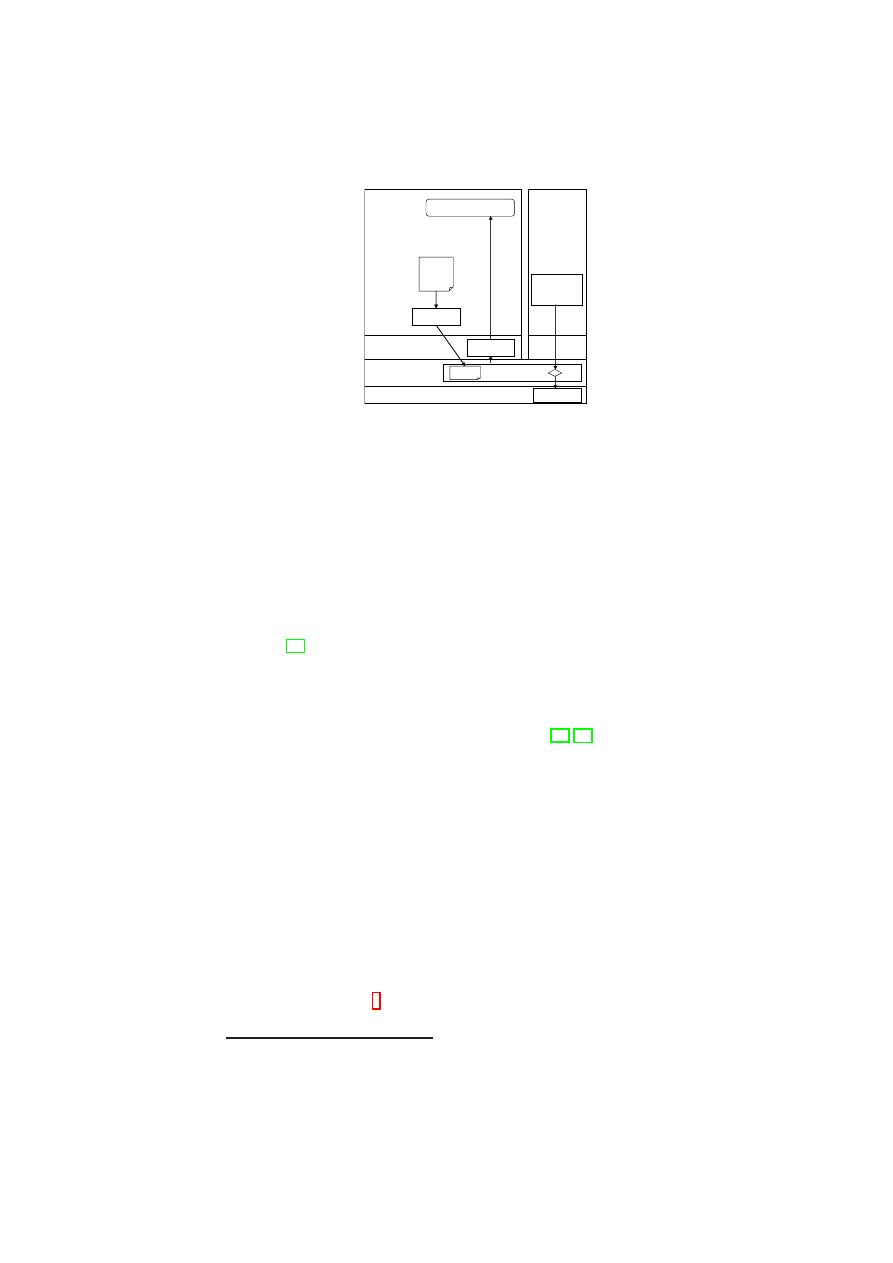

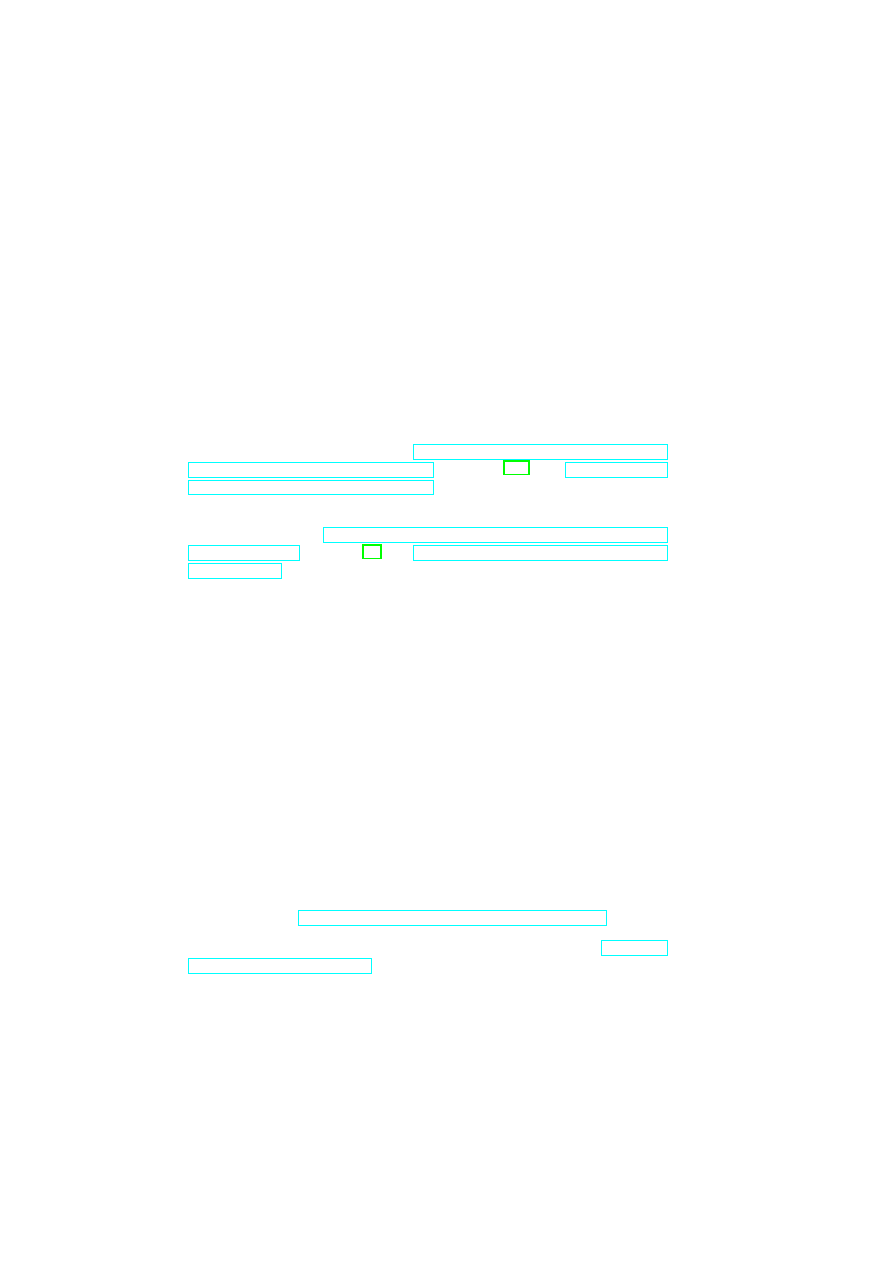

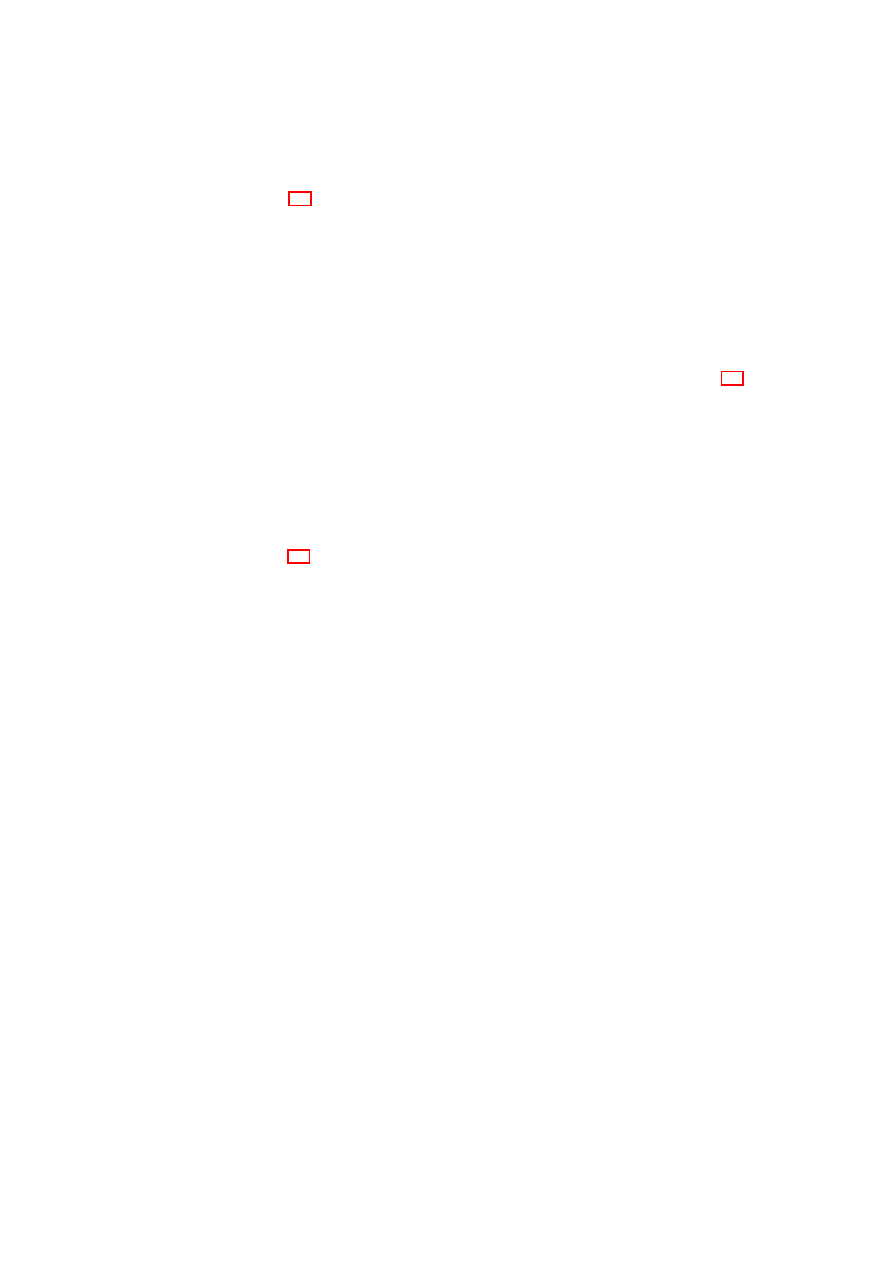

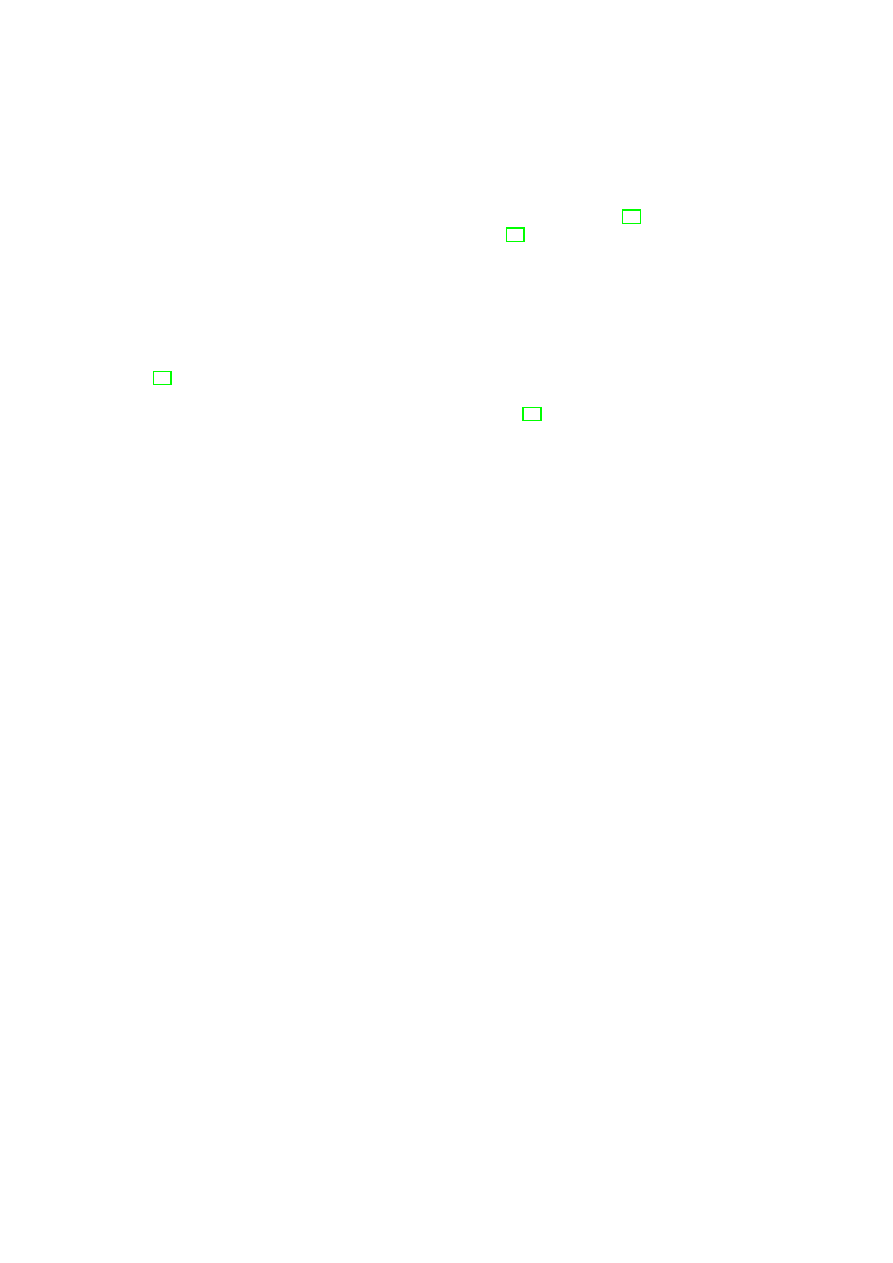

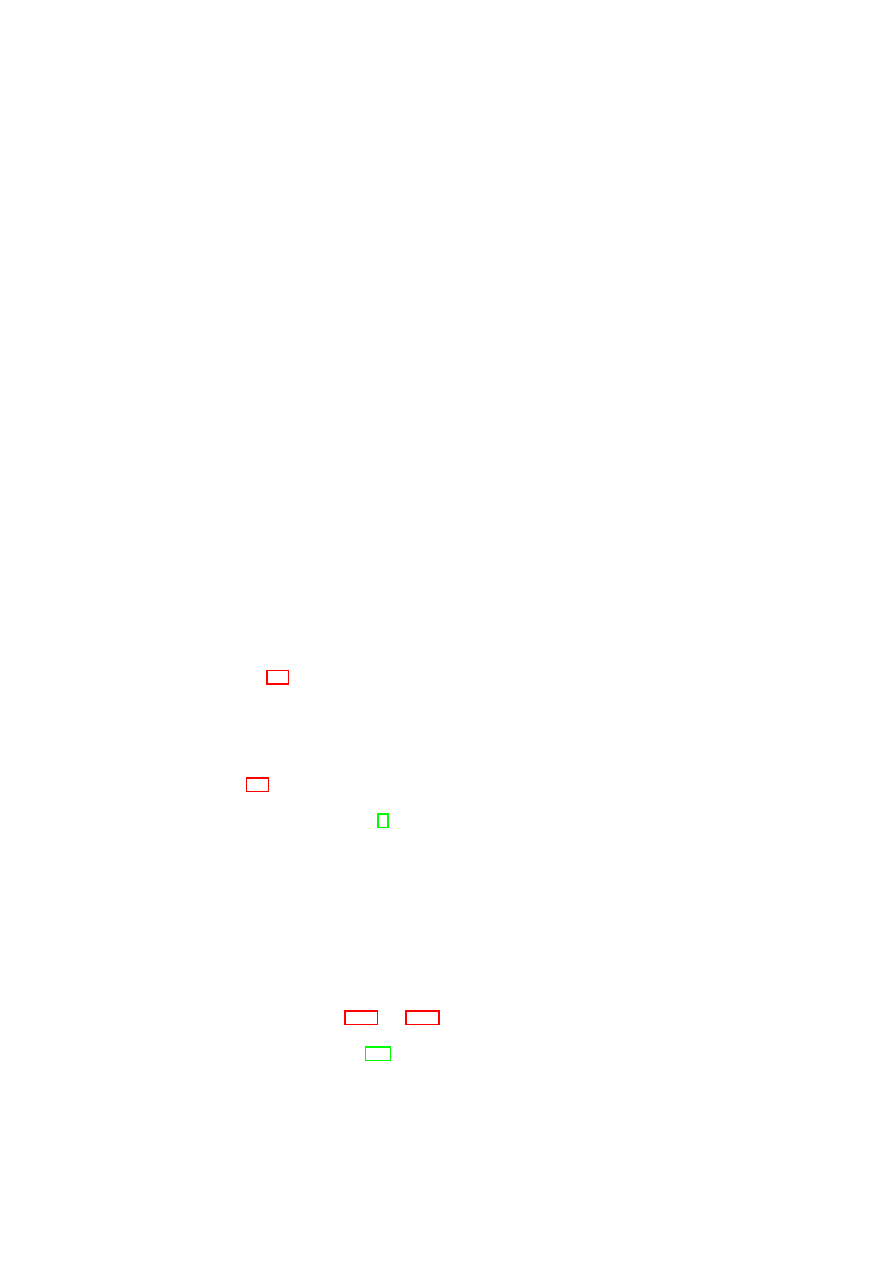

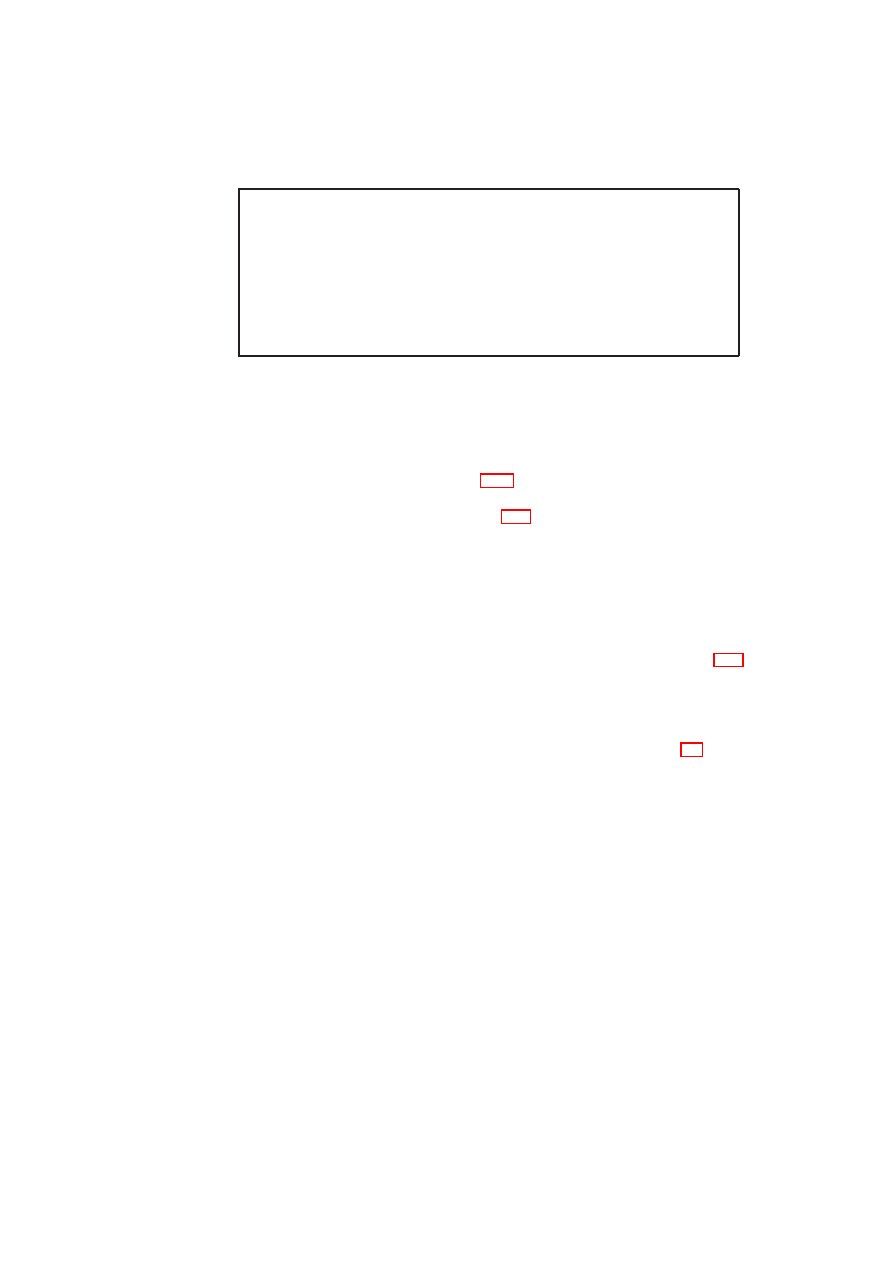

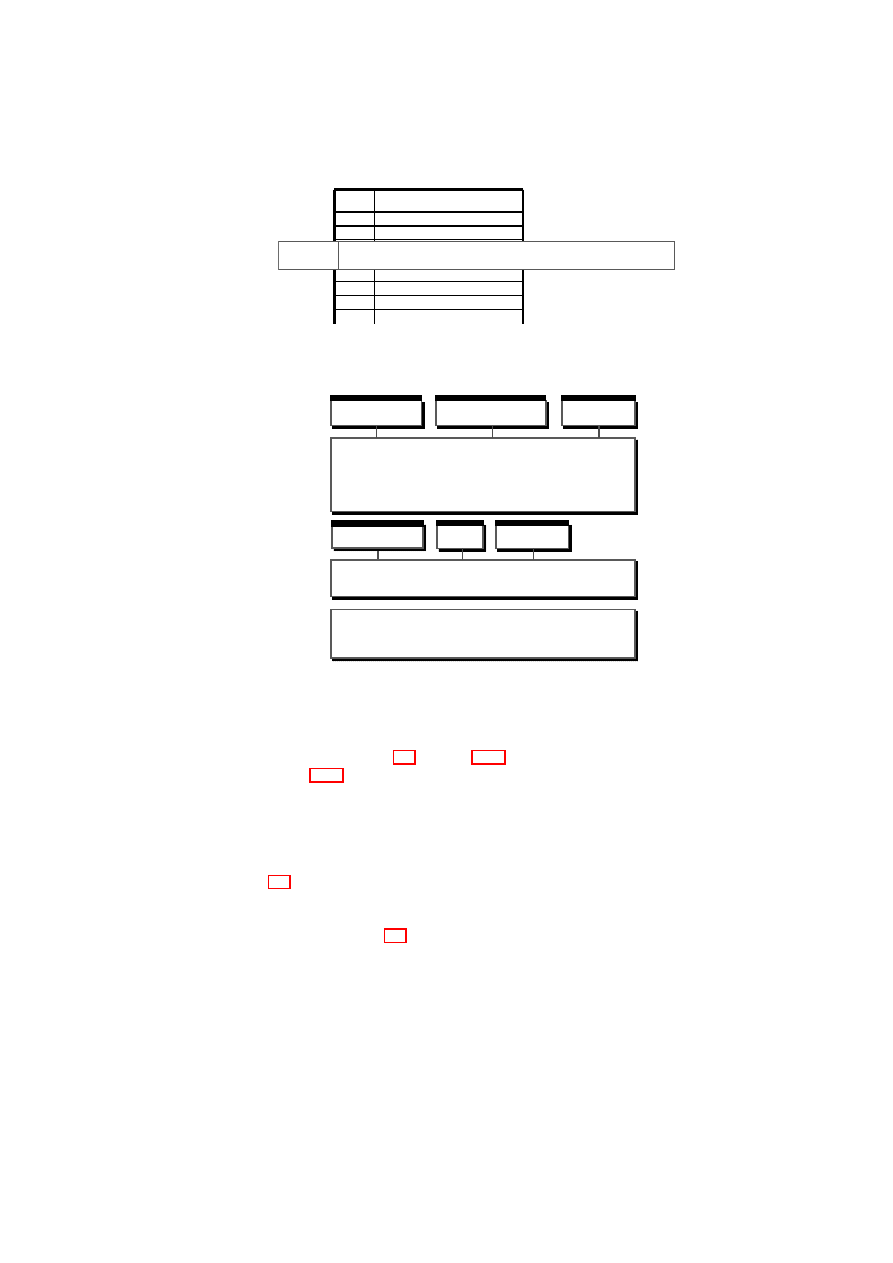

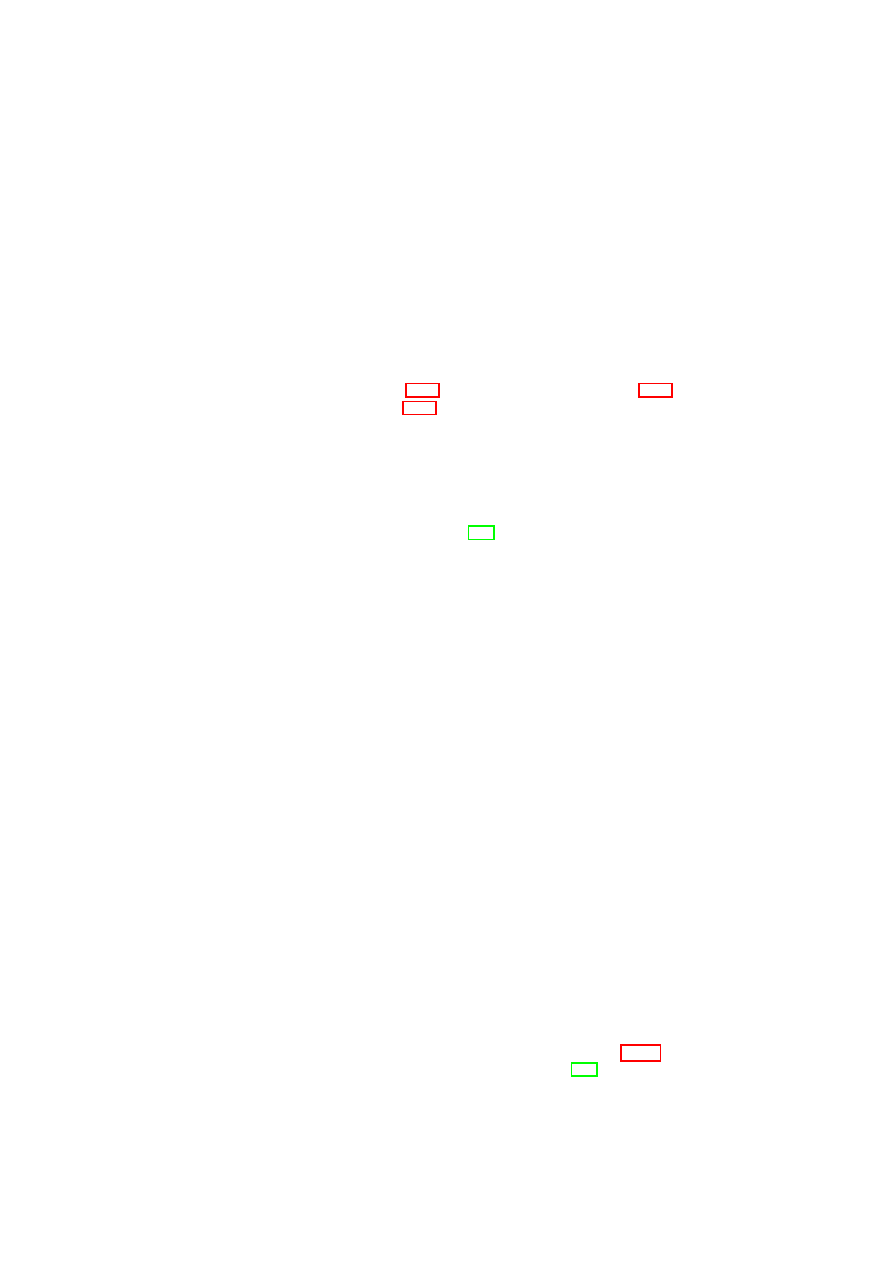

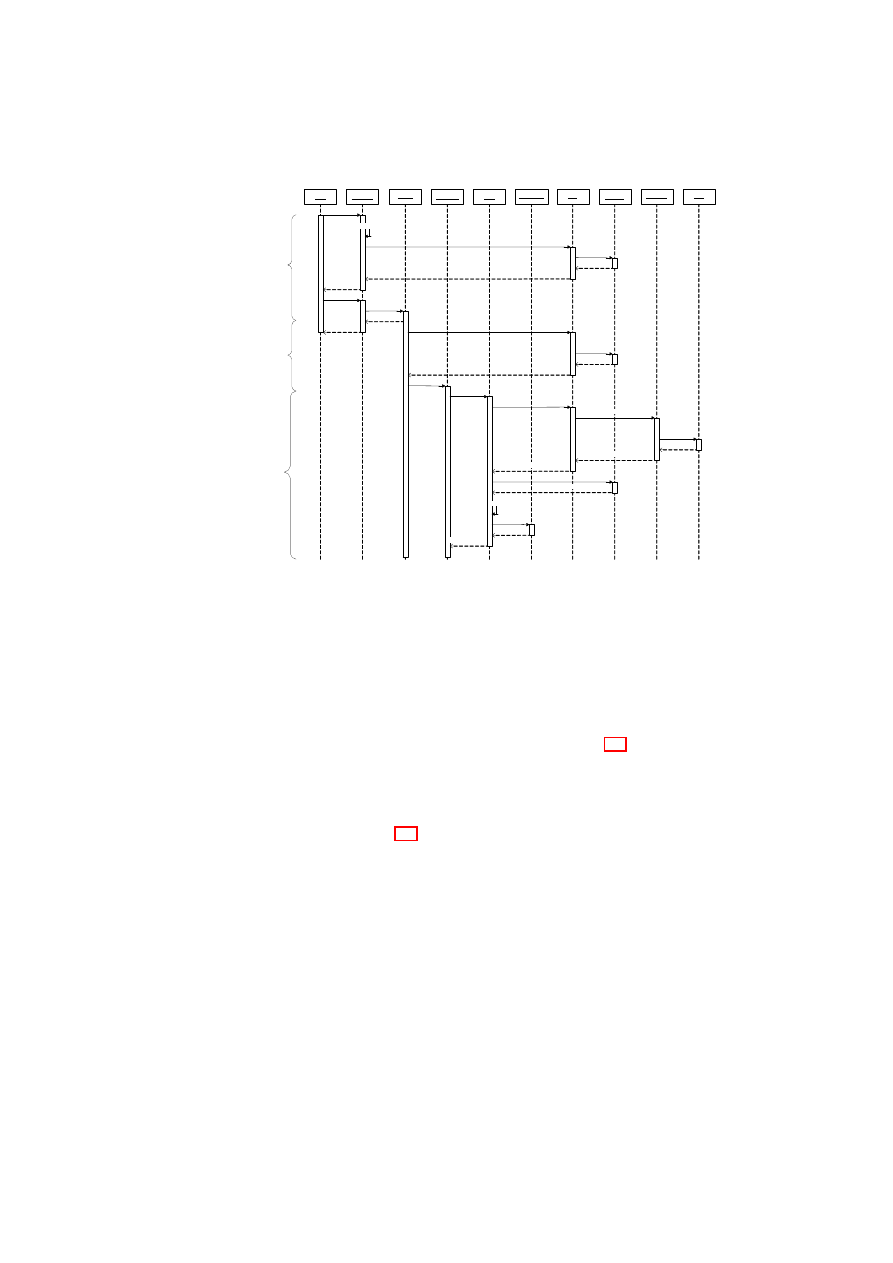

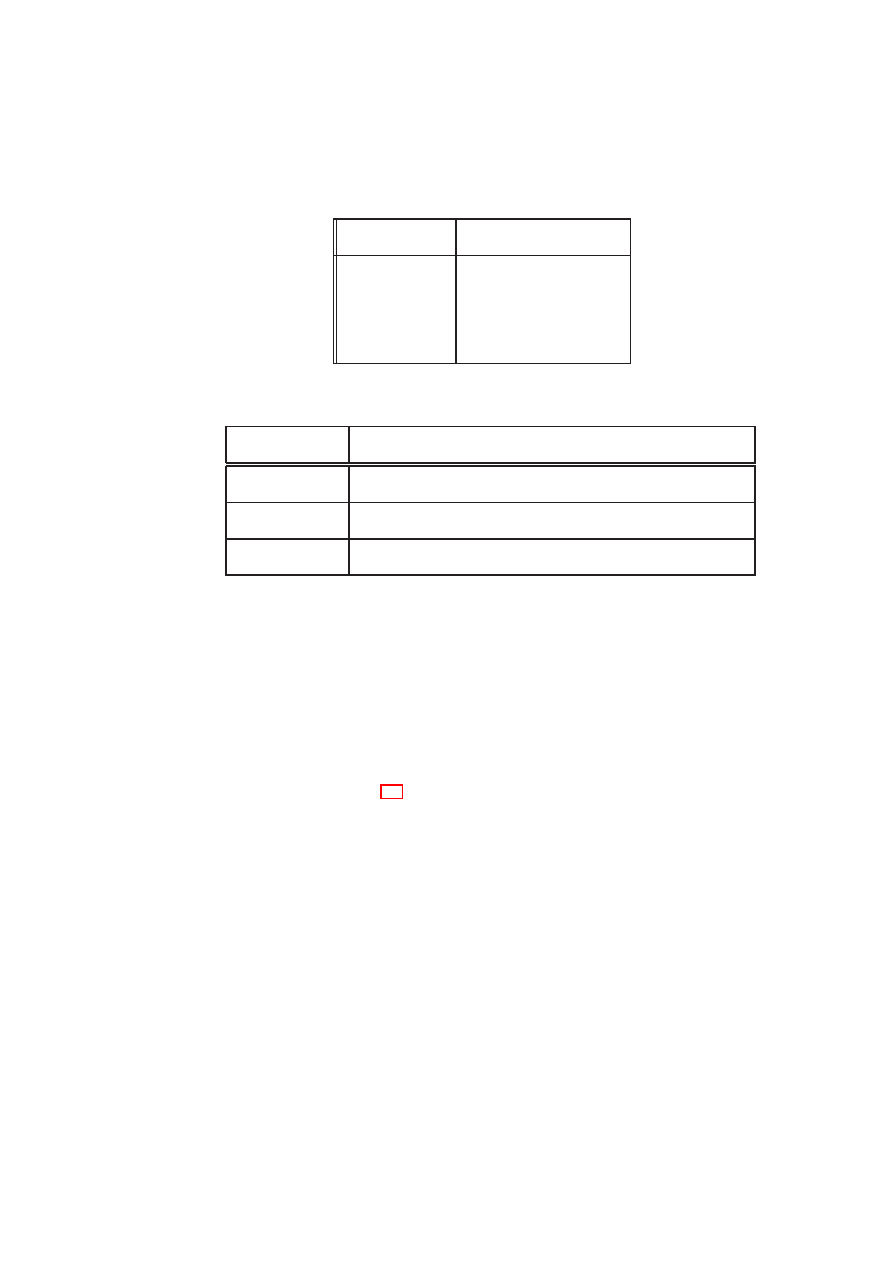

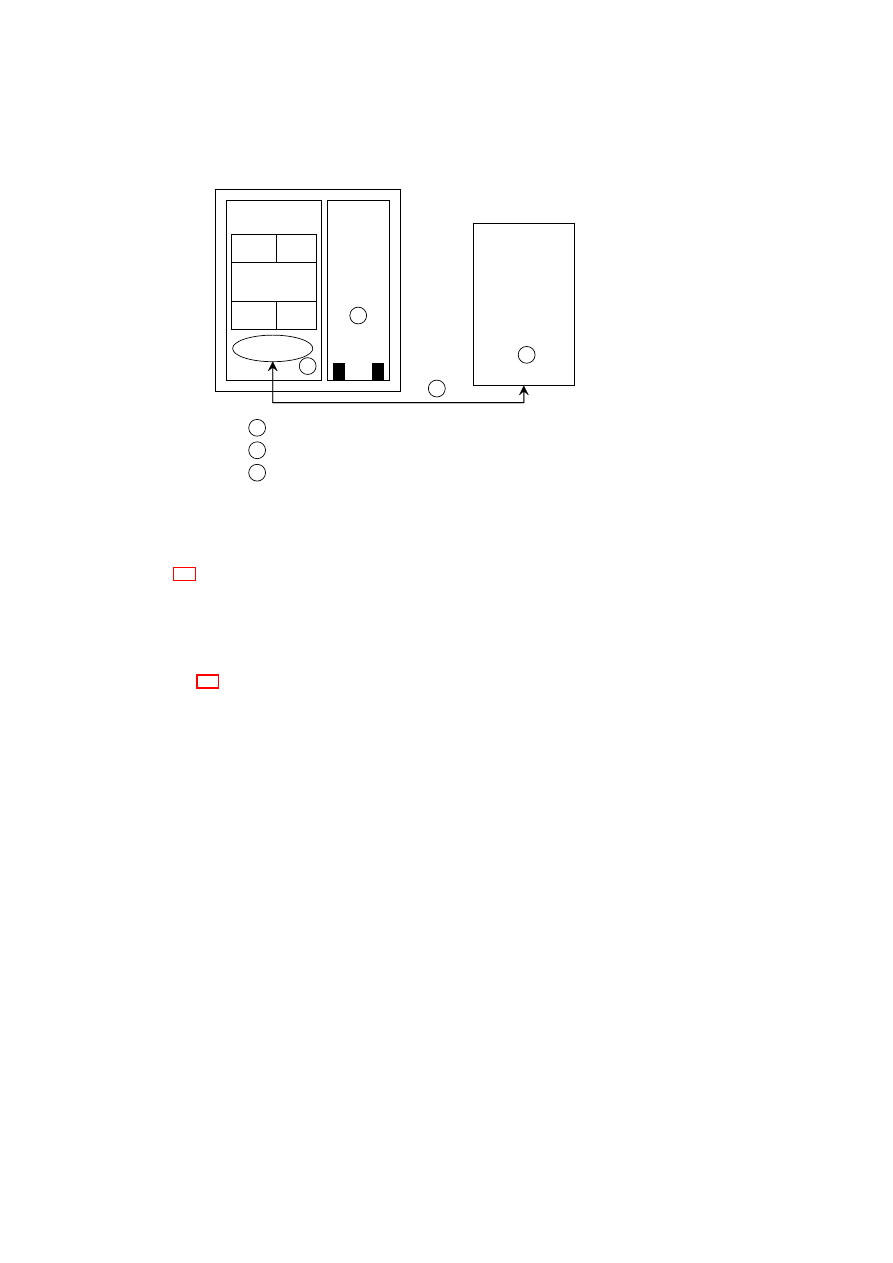

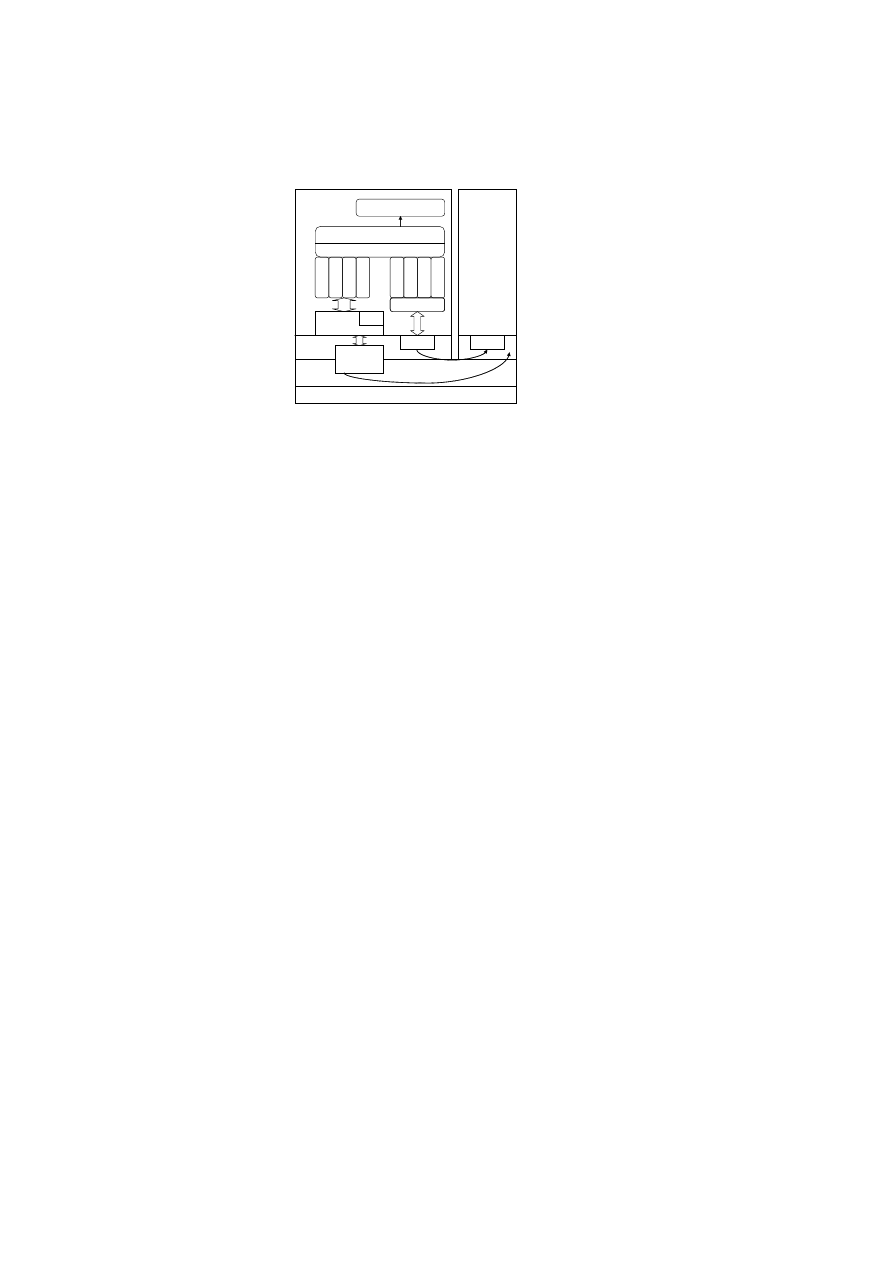

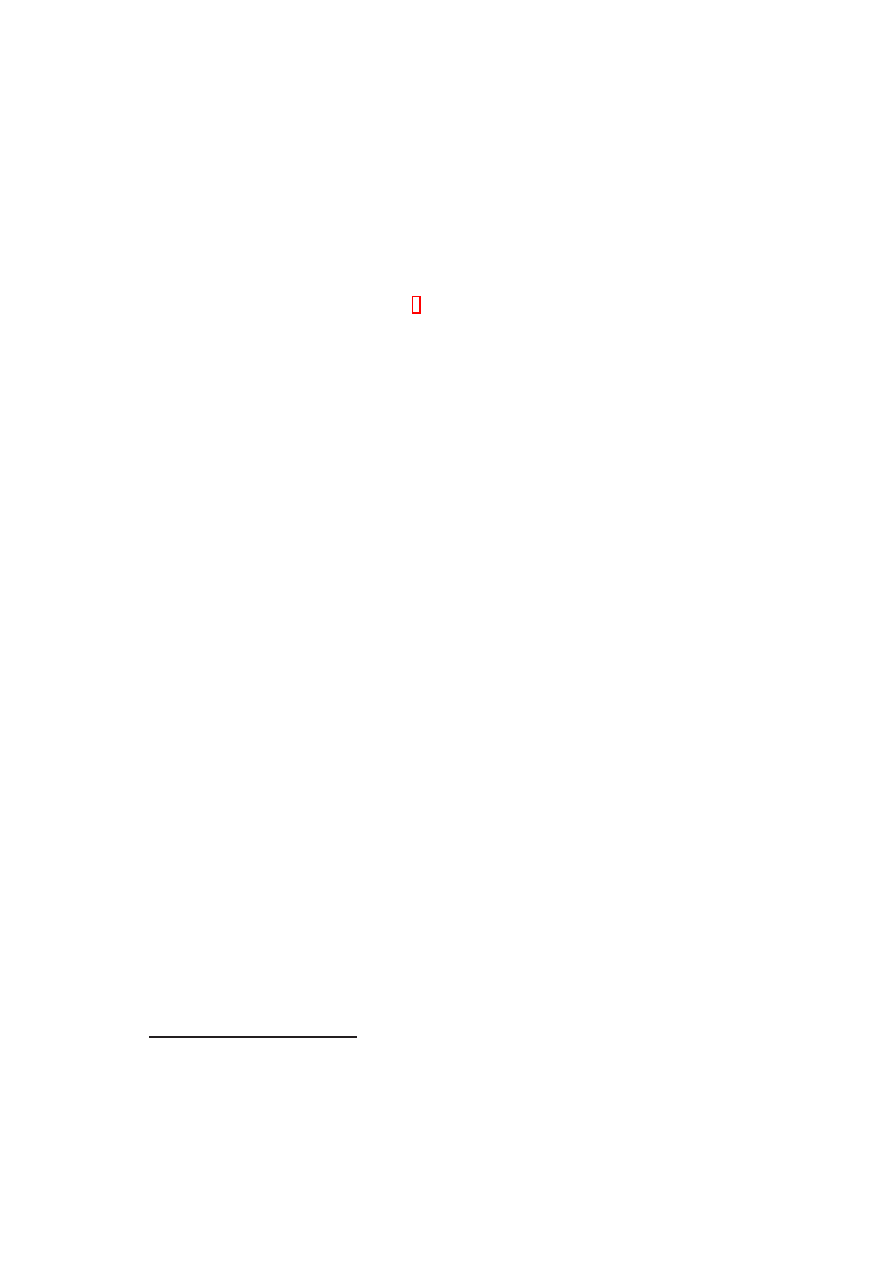

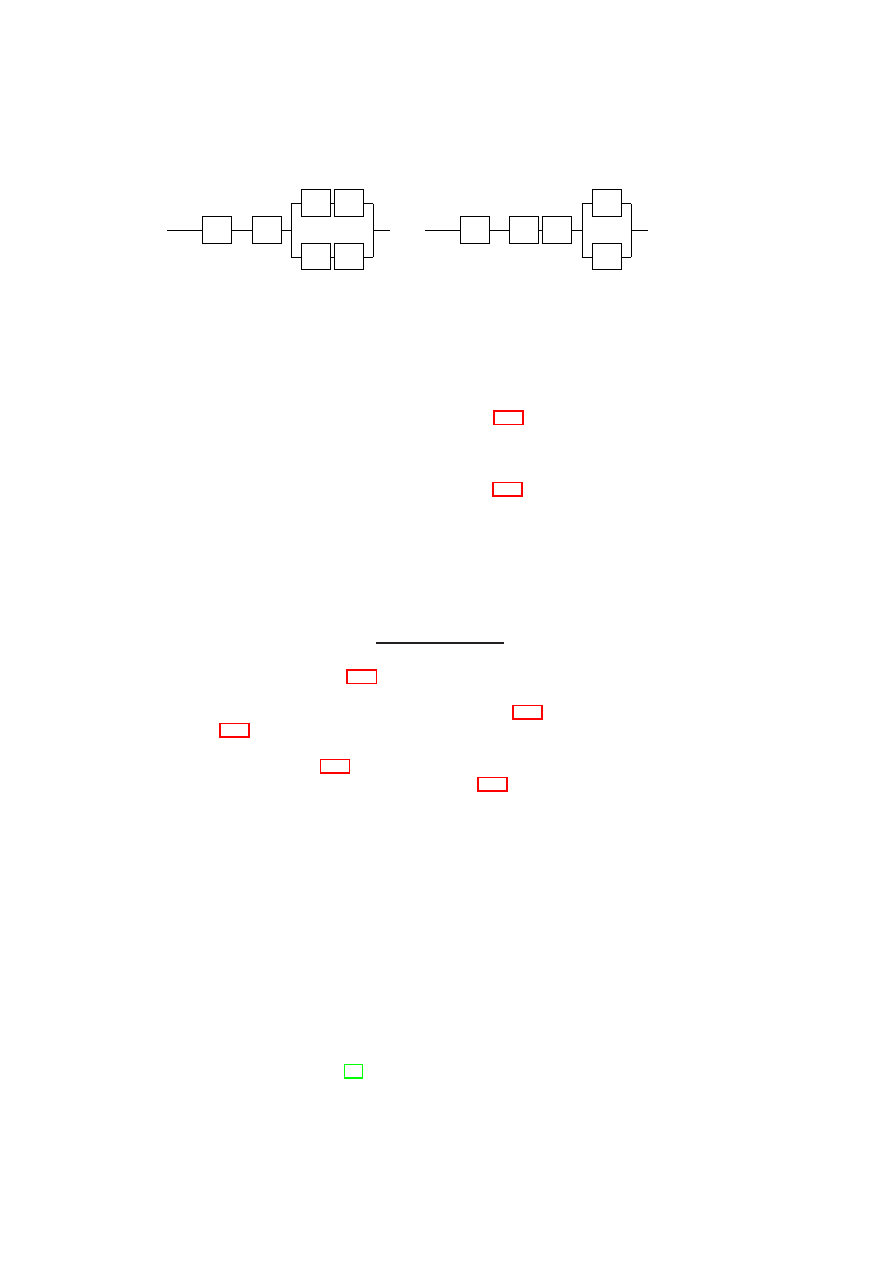

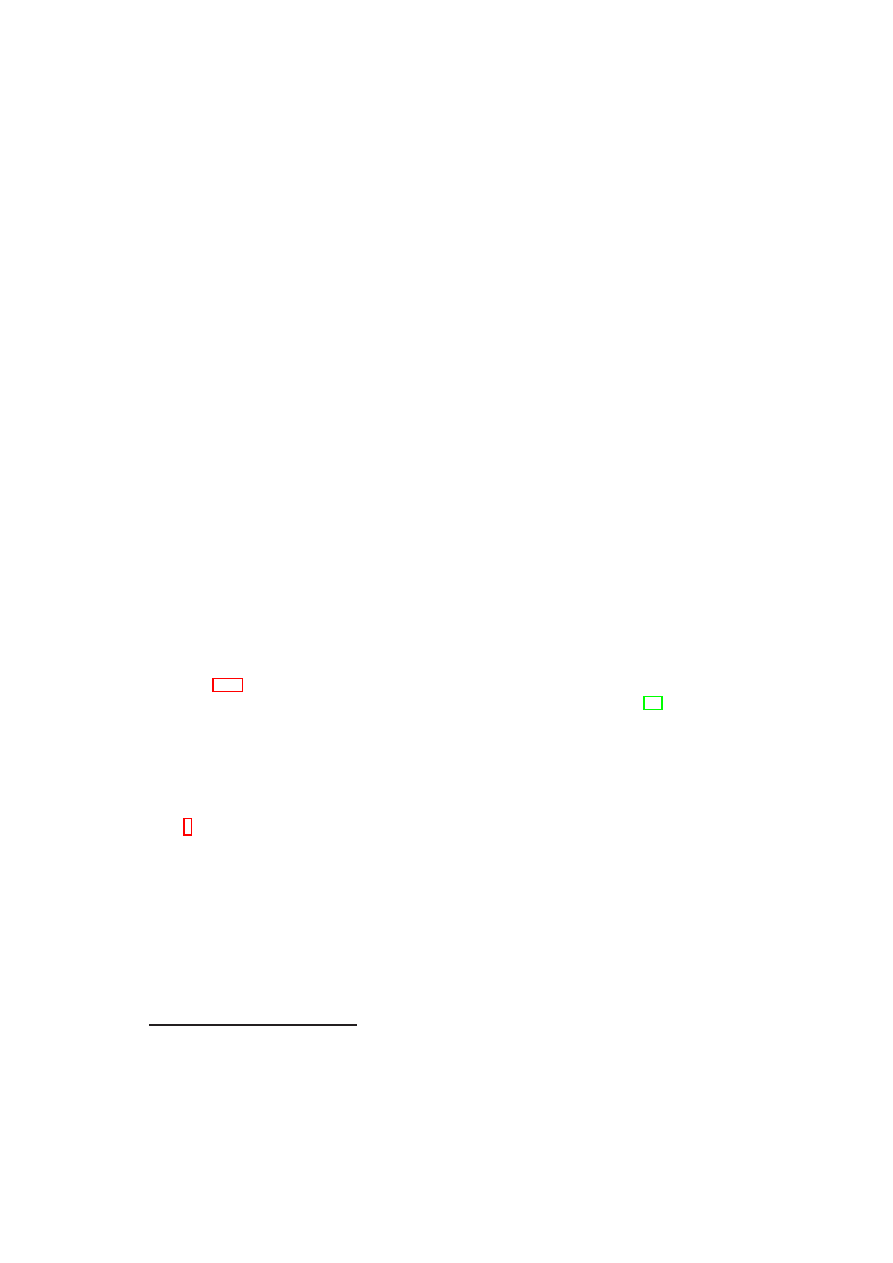

boundaries. Figure 3.1 shows a high-level list of security services and their interac-

22

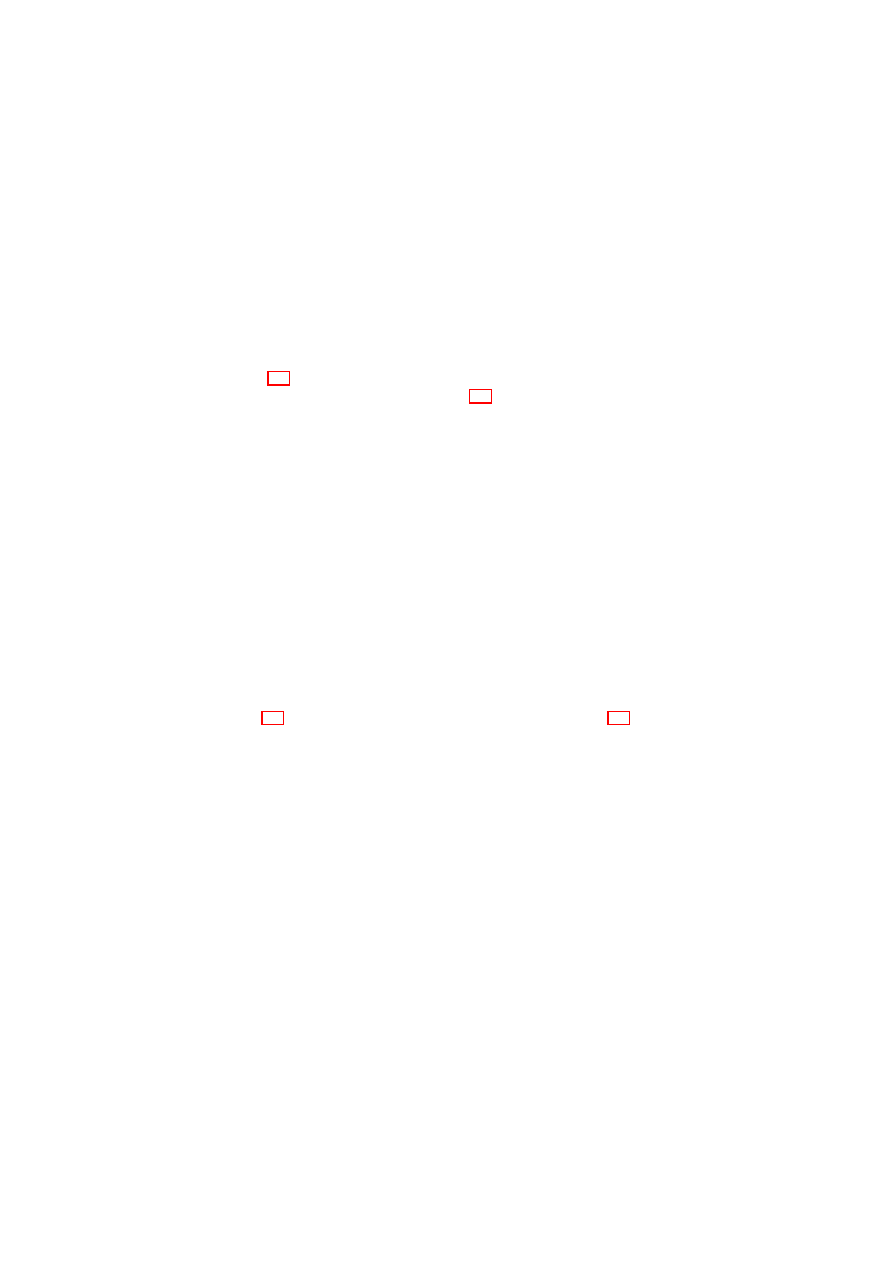

CHAPTER 3. UNIFIED POLICY ENFORCEMENT FOR VIRTUAL DATA CENTERS23

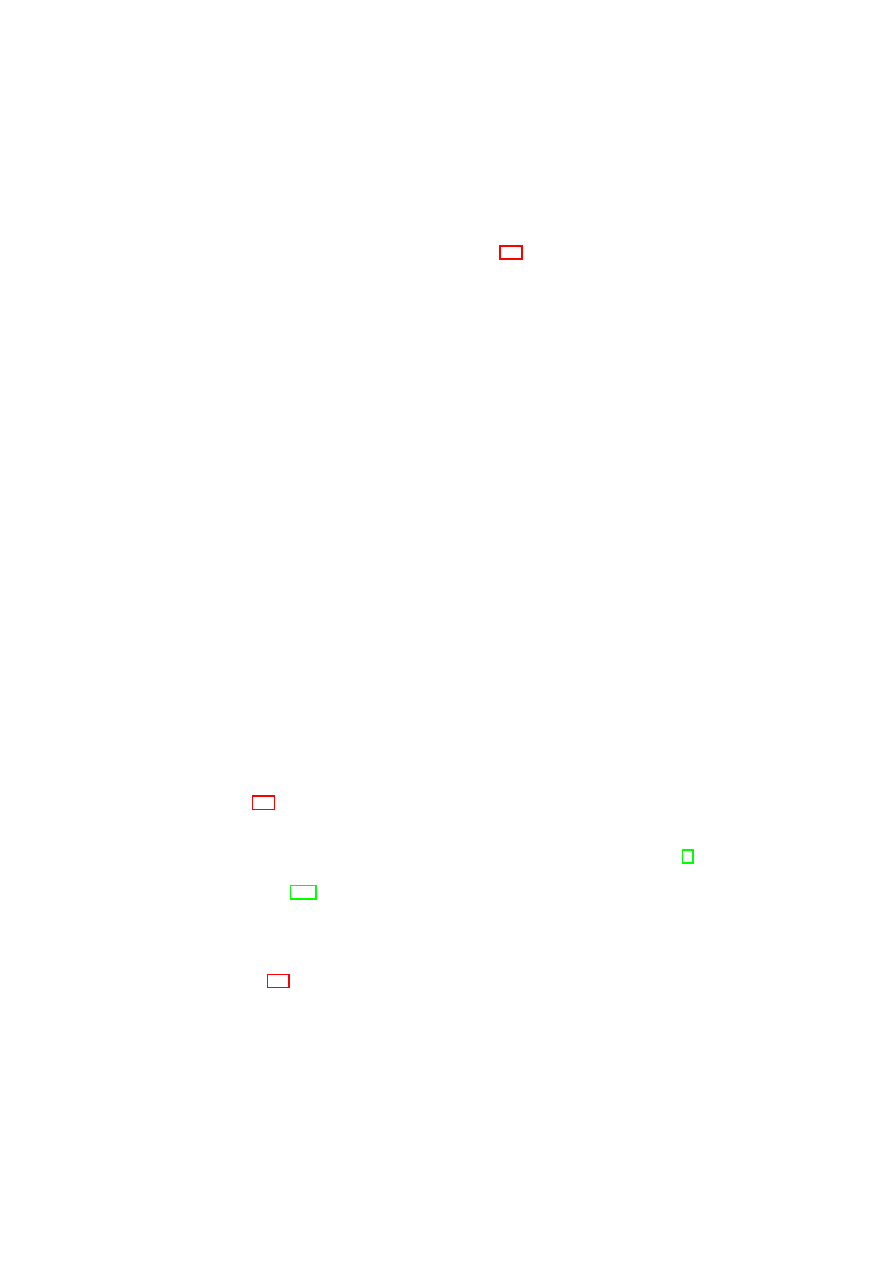

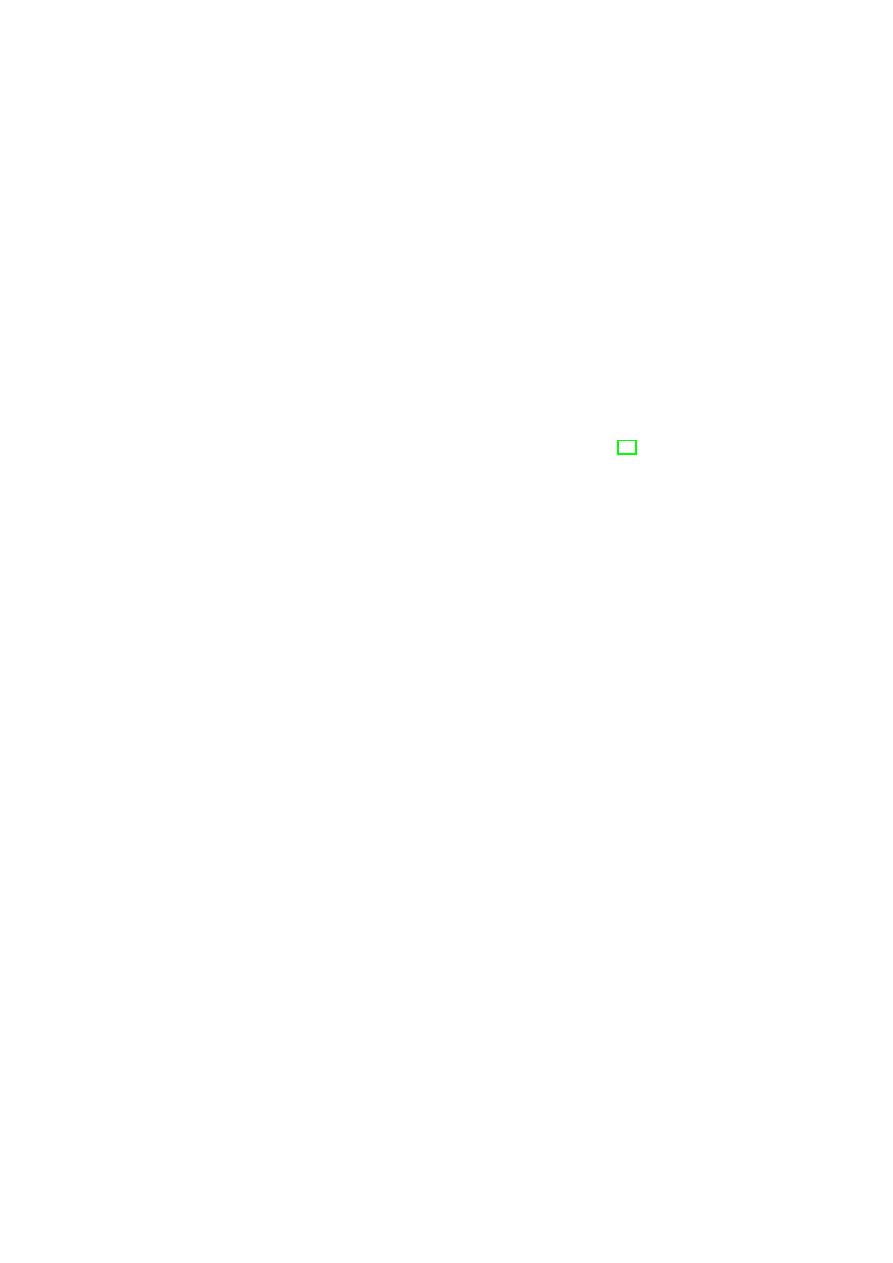

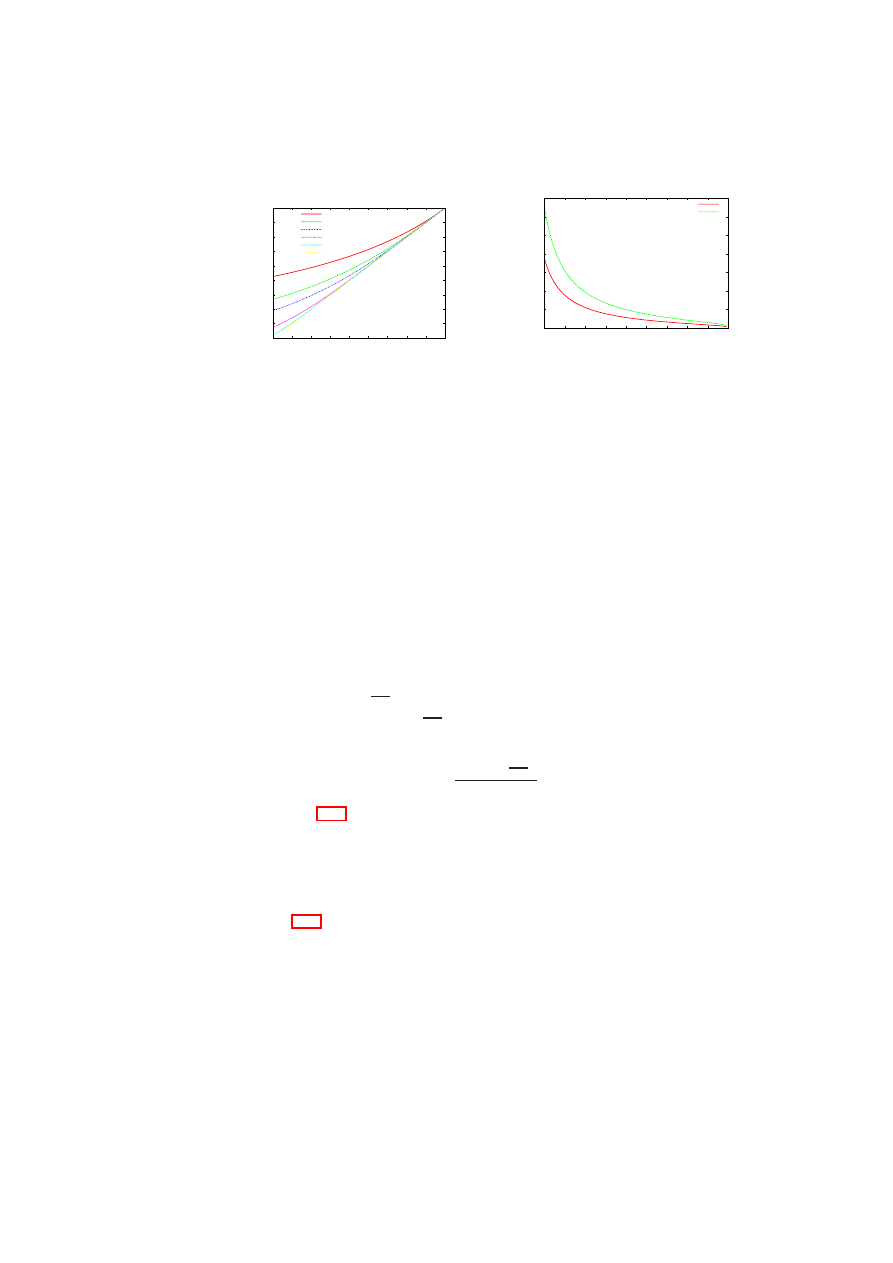

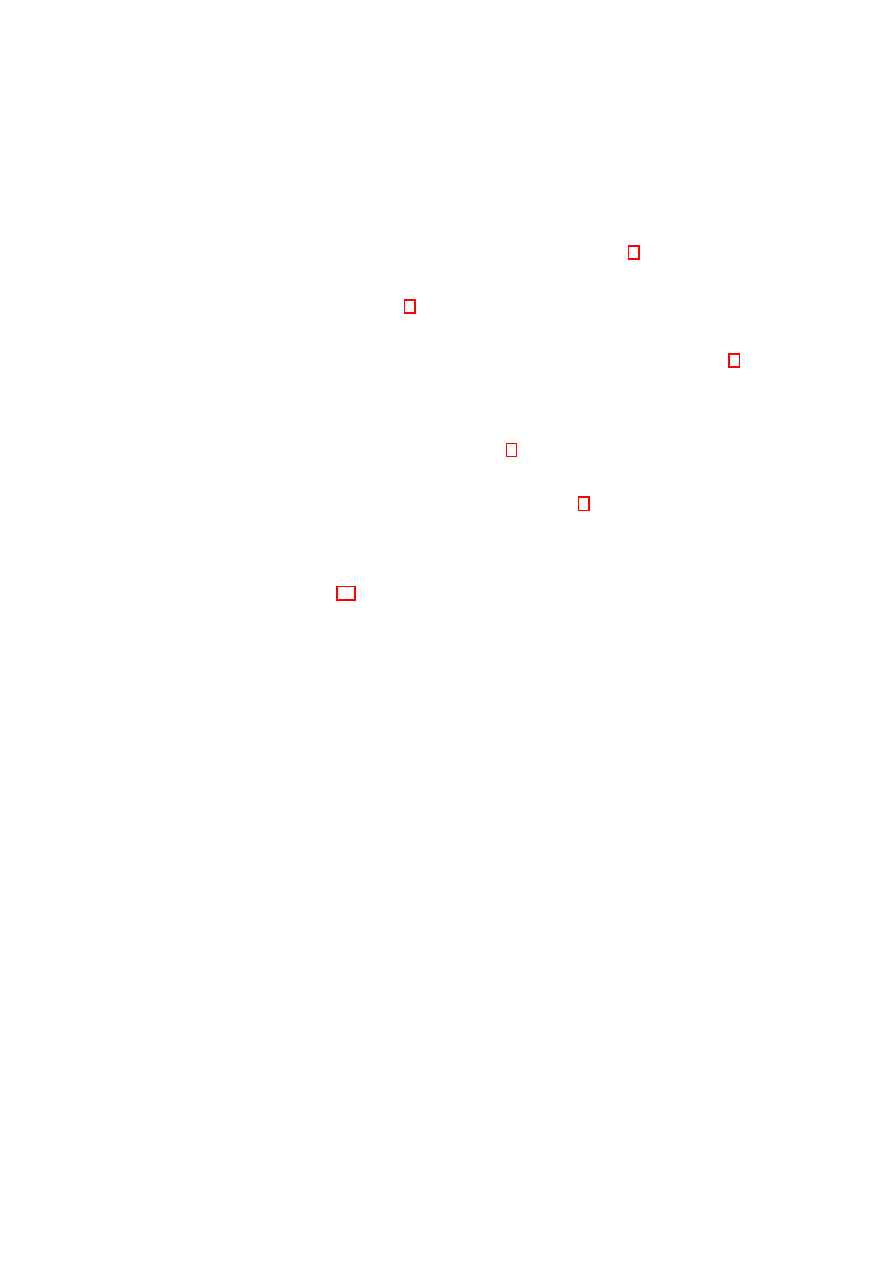

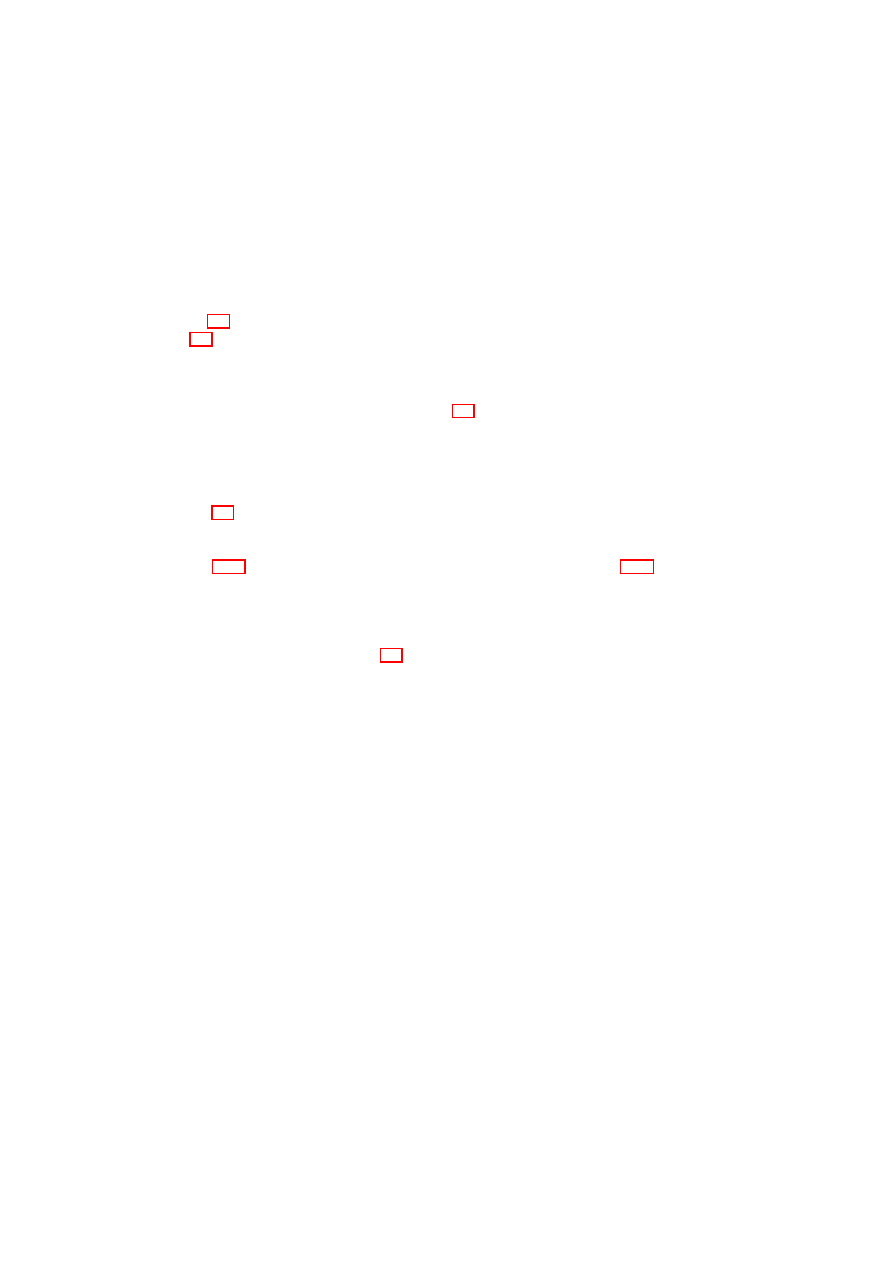

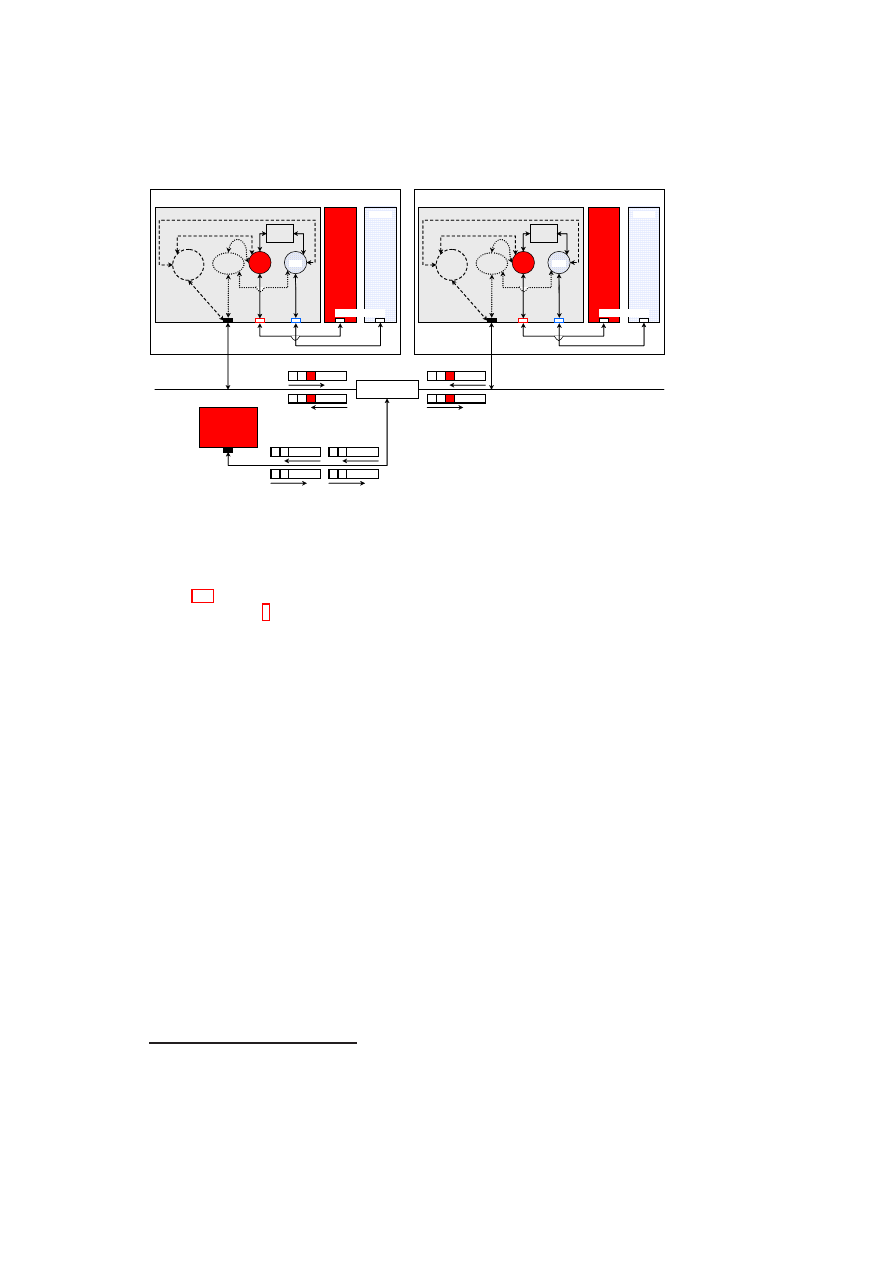

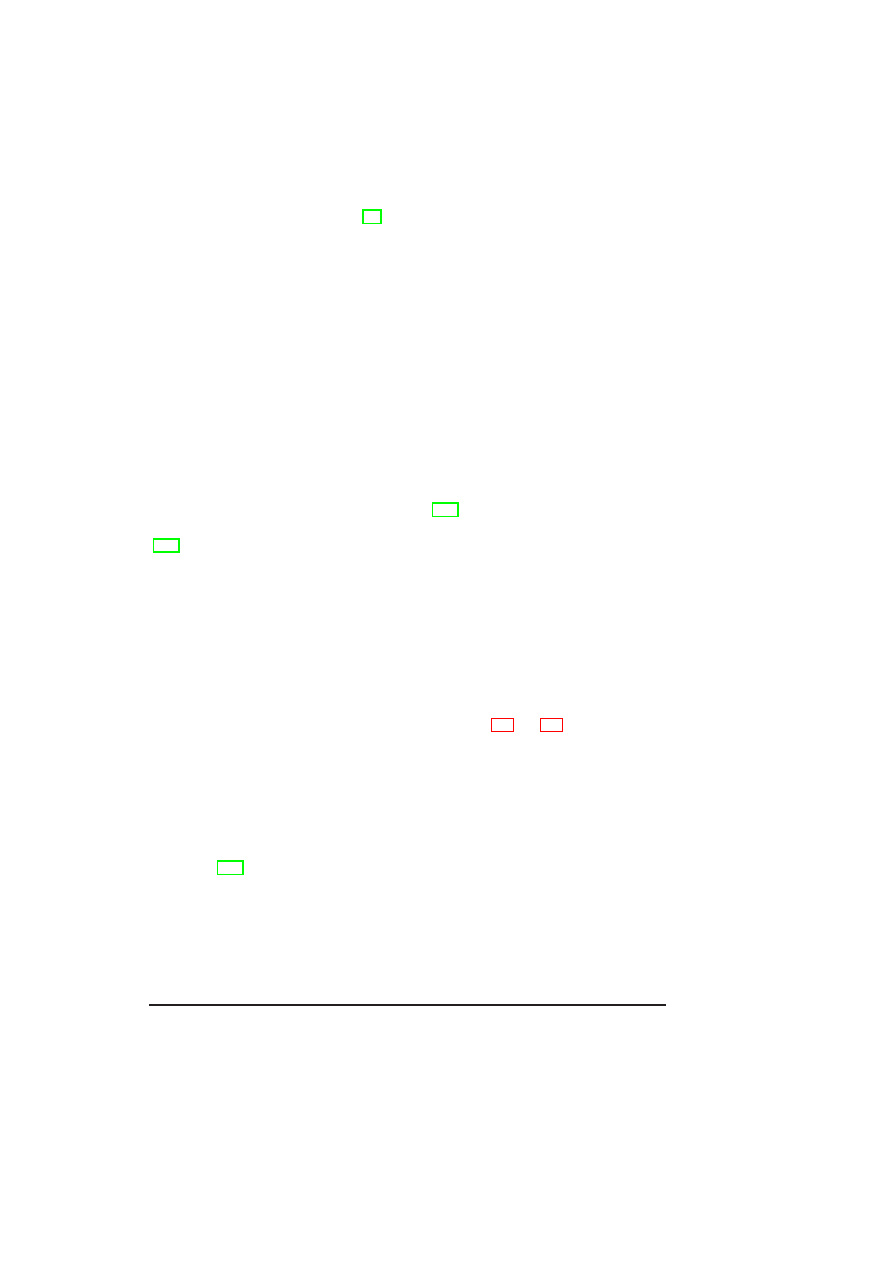

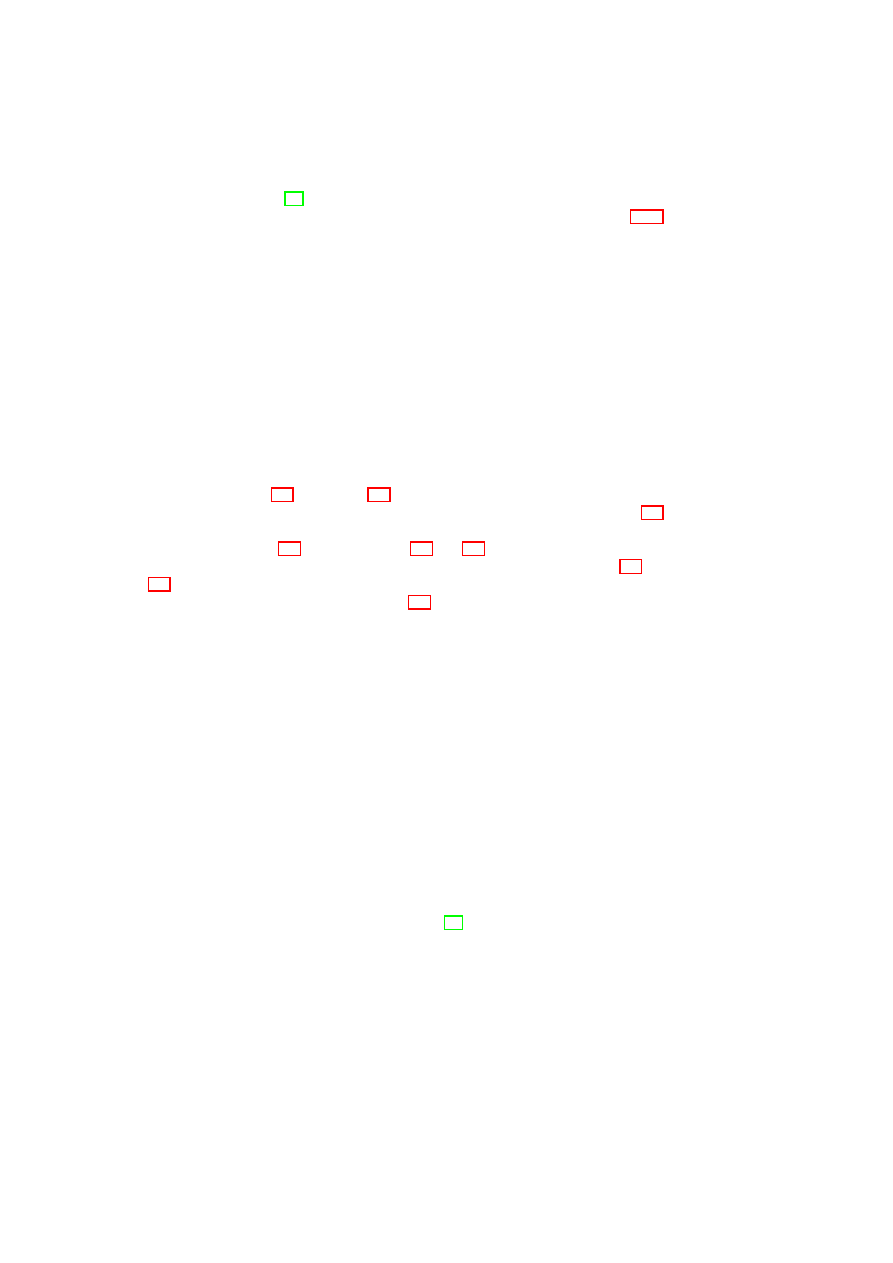

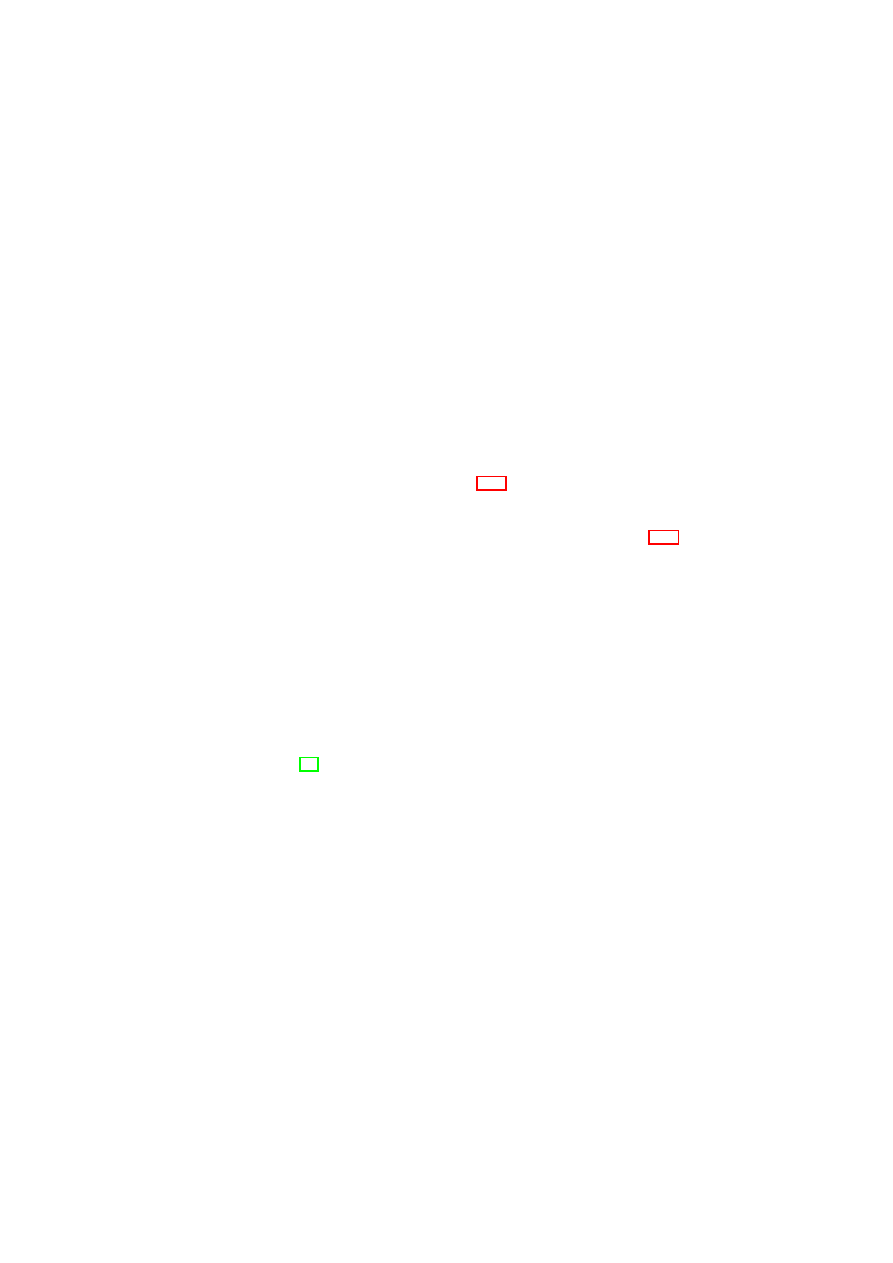

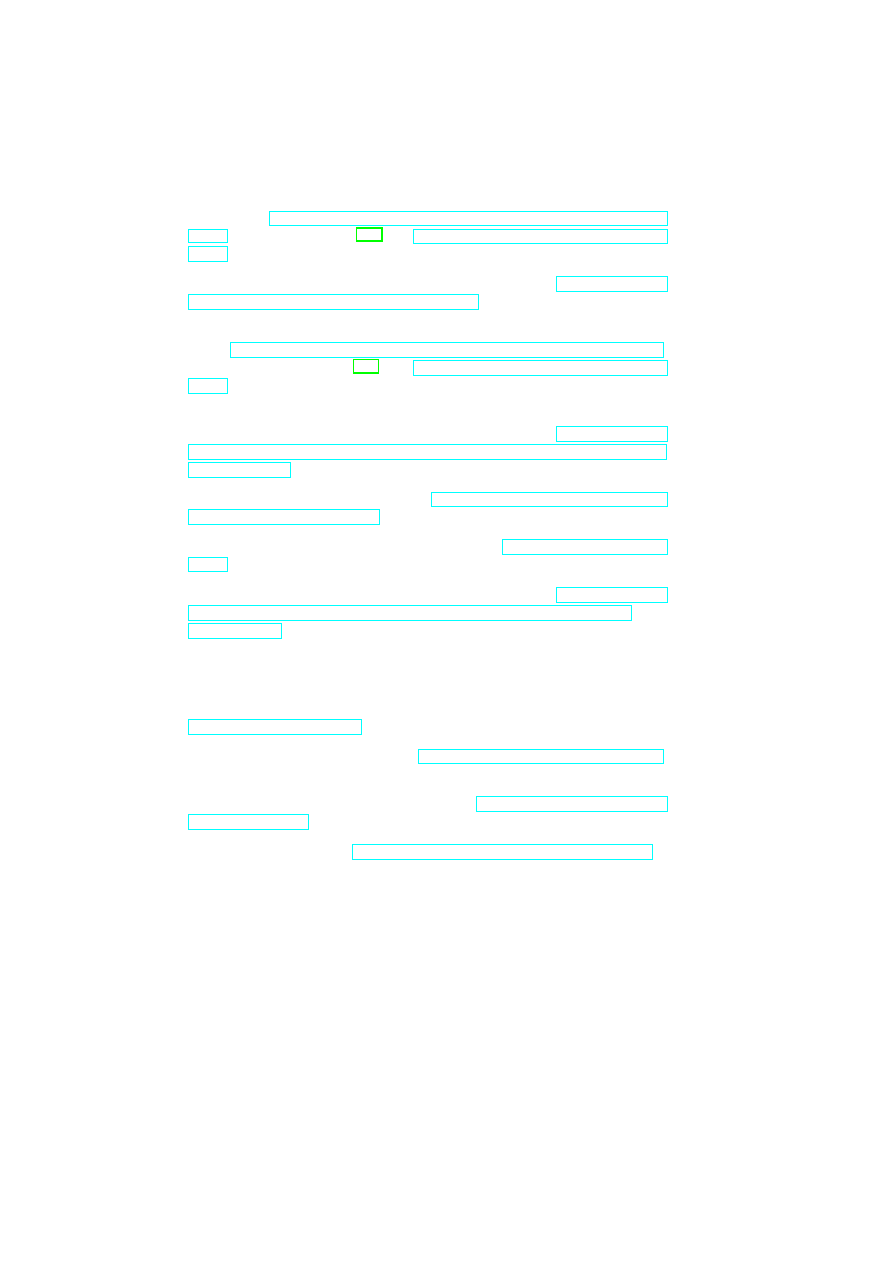

Compartment Manager

TVD Proxy

TVD Master

Network Manager

(sub-proxy)

Storage Manager

(sub-proxy)

Virtual Device Manager

Integrity Manager

TVD Component

Security Service

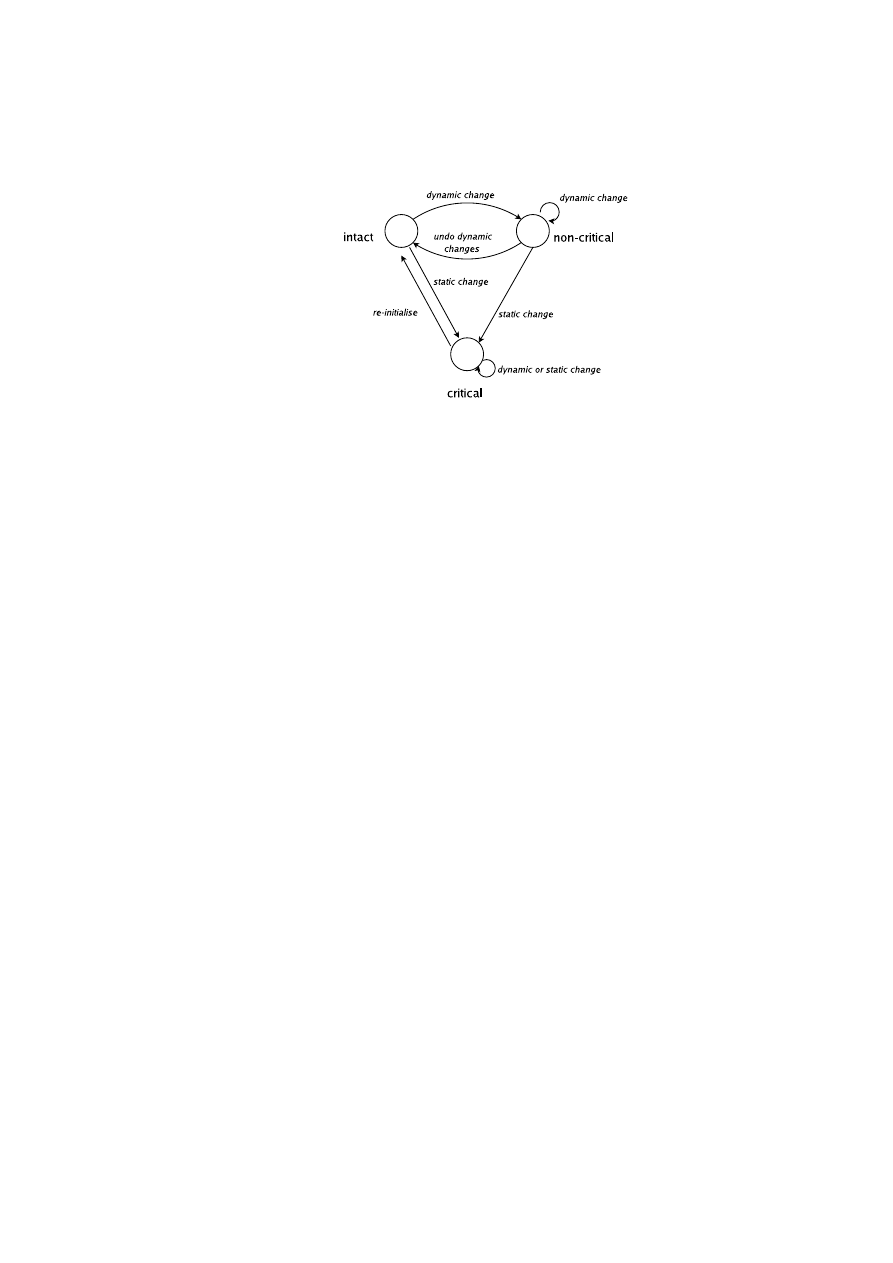

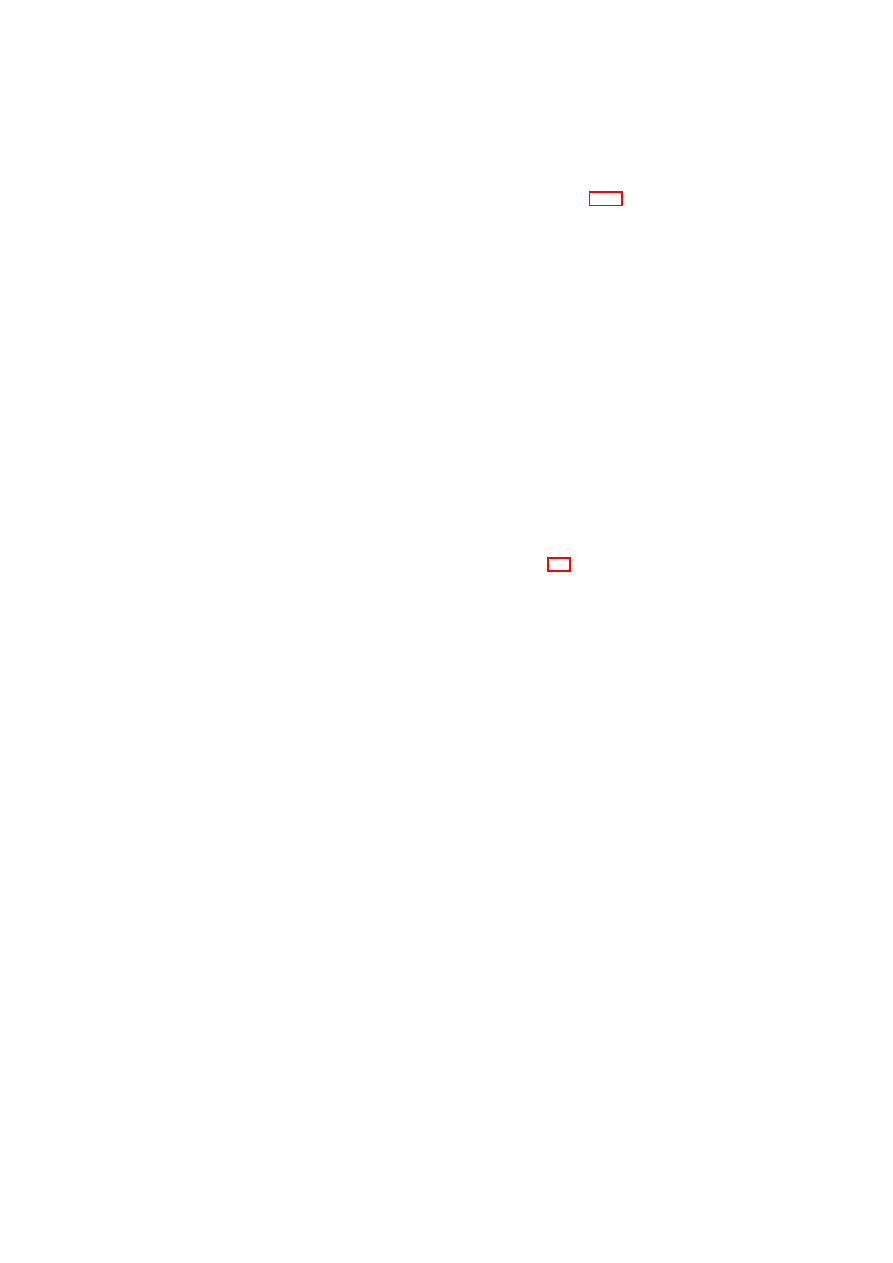

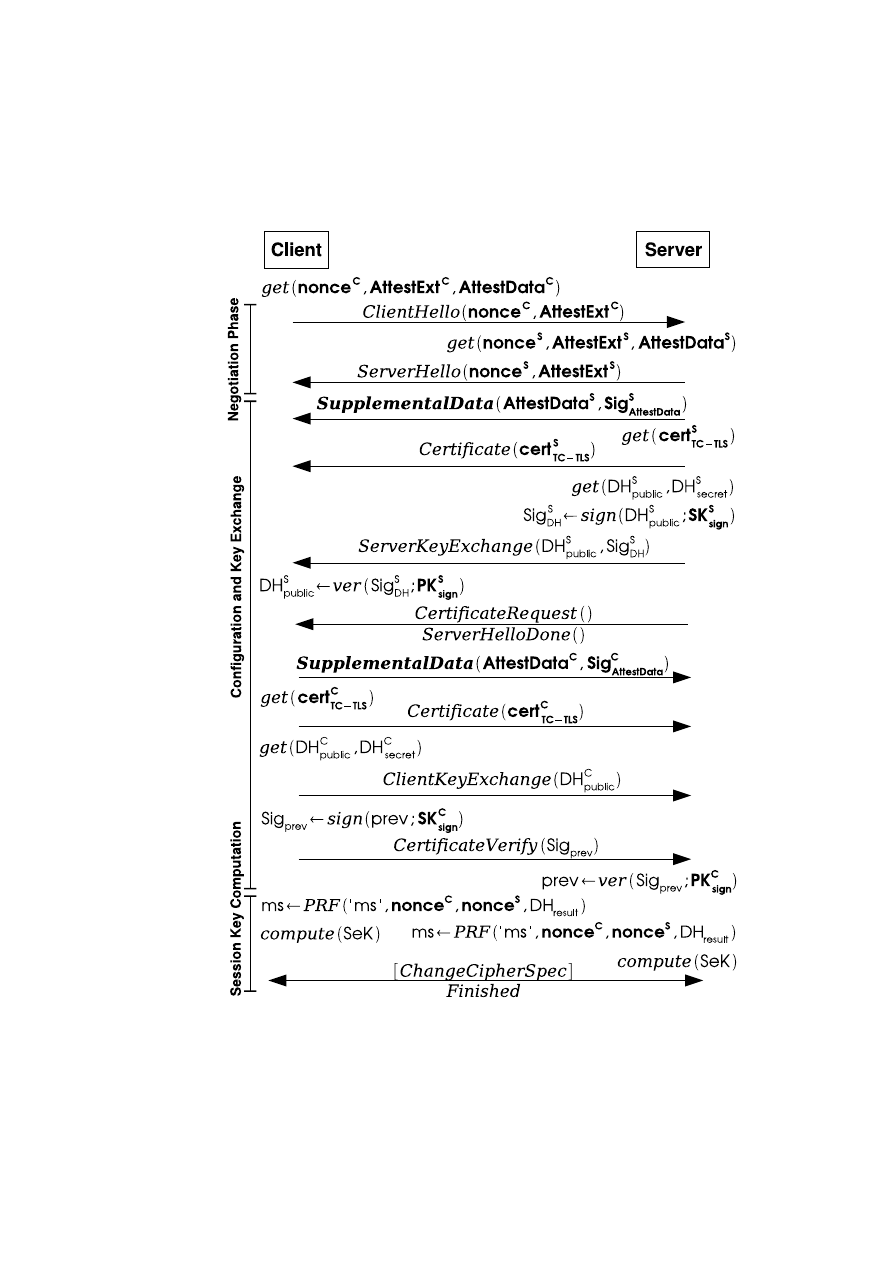

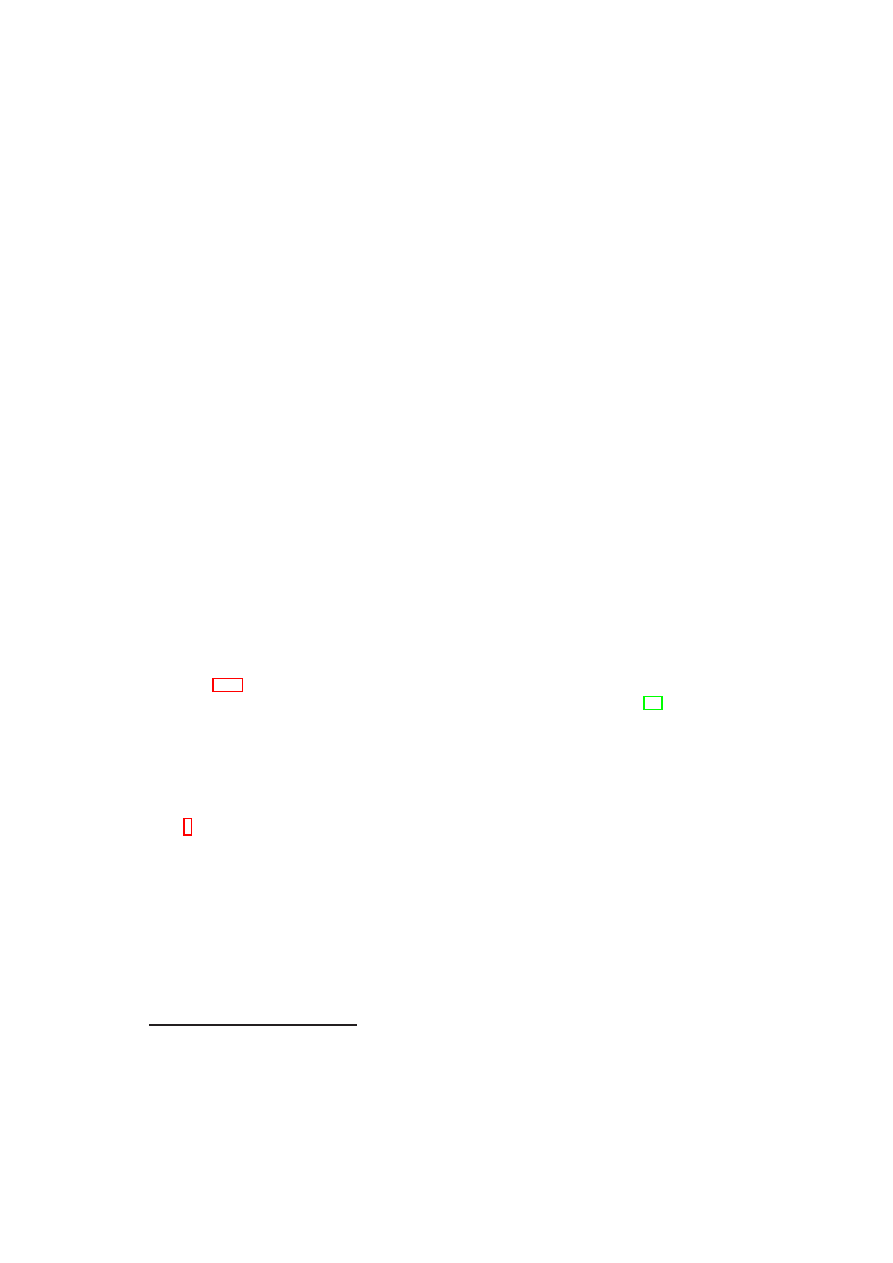

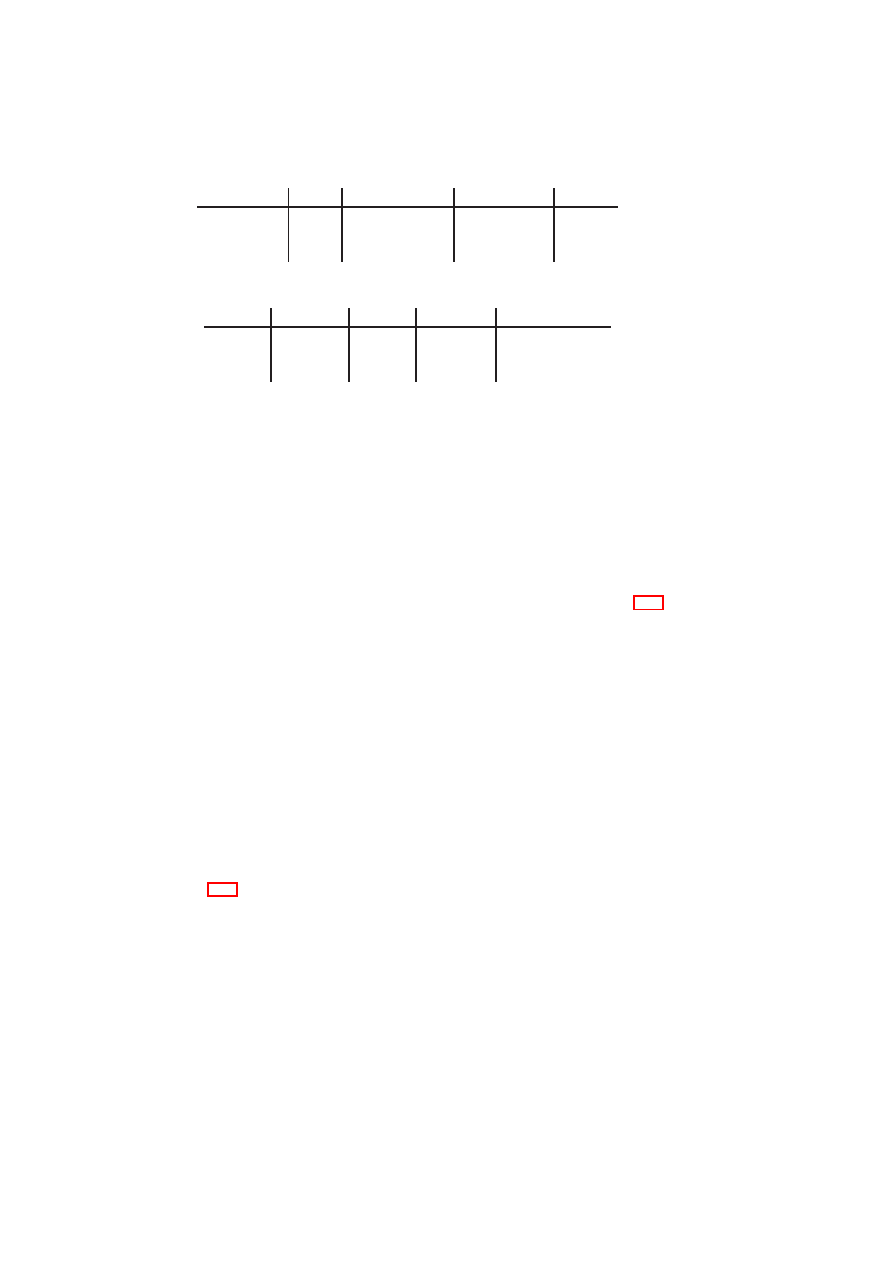

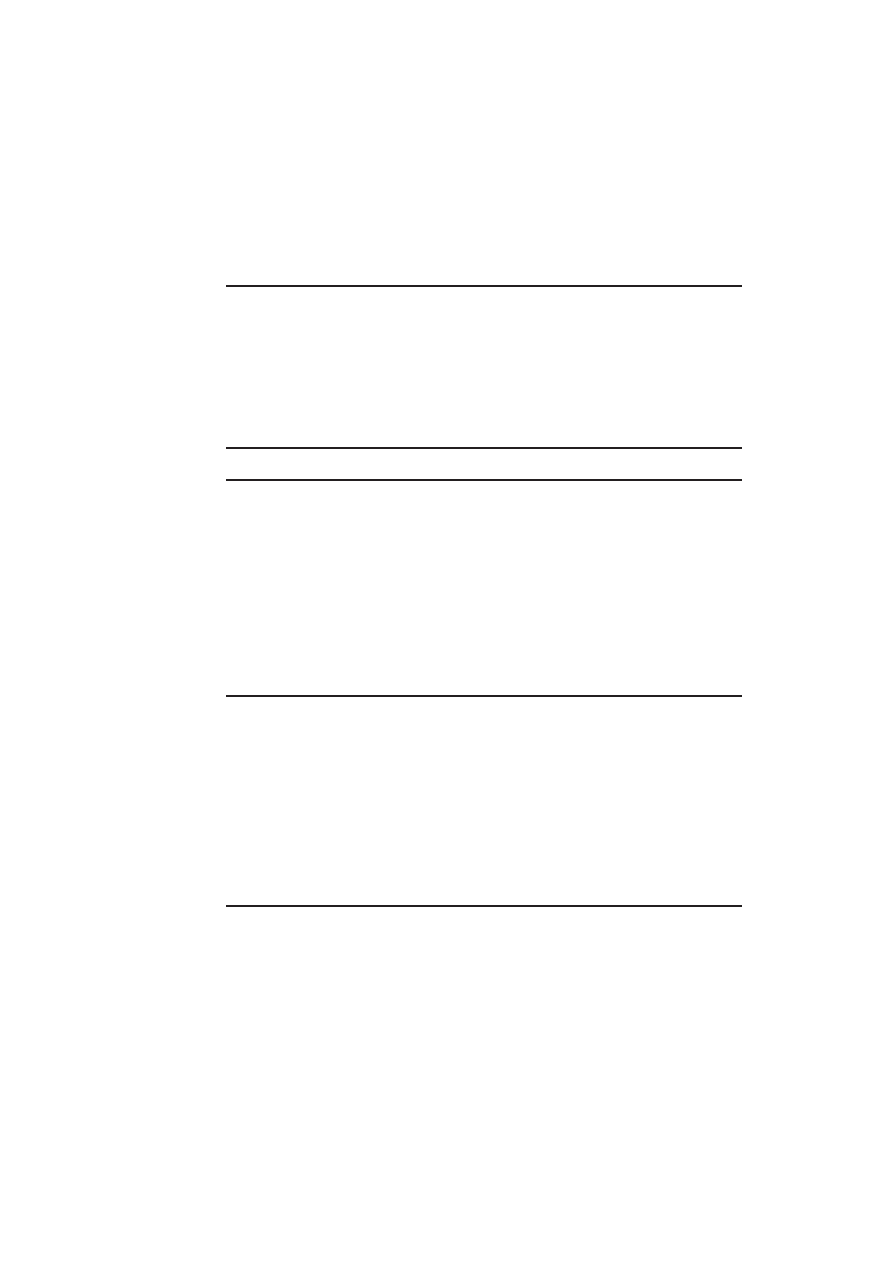

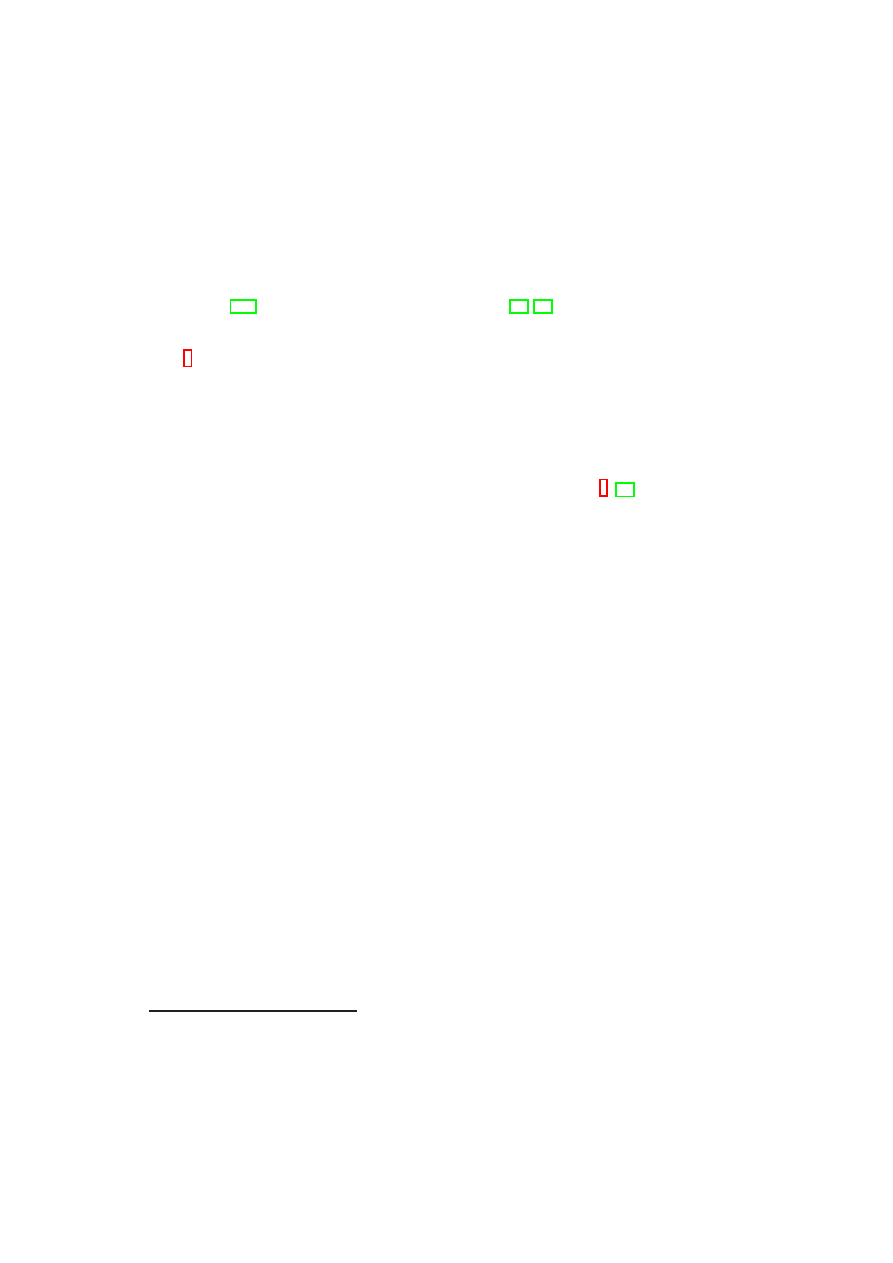

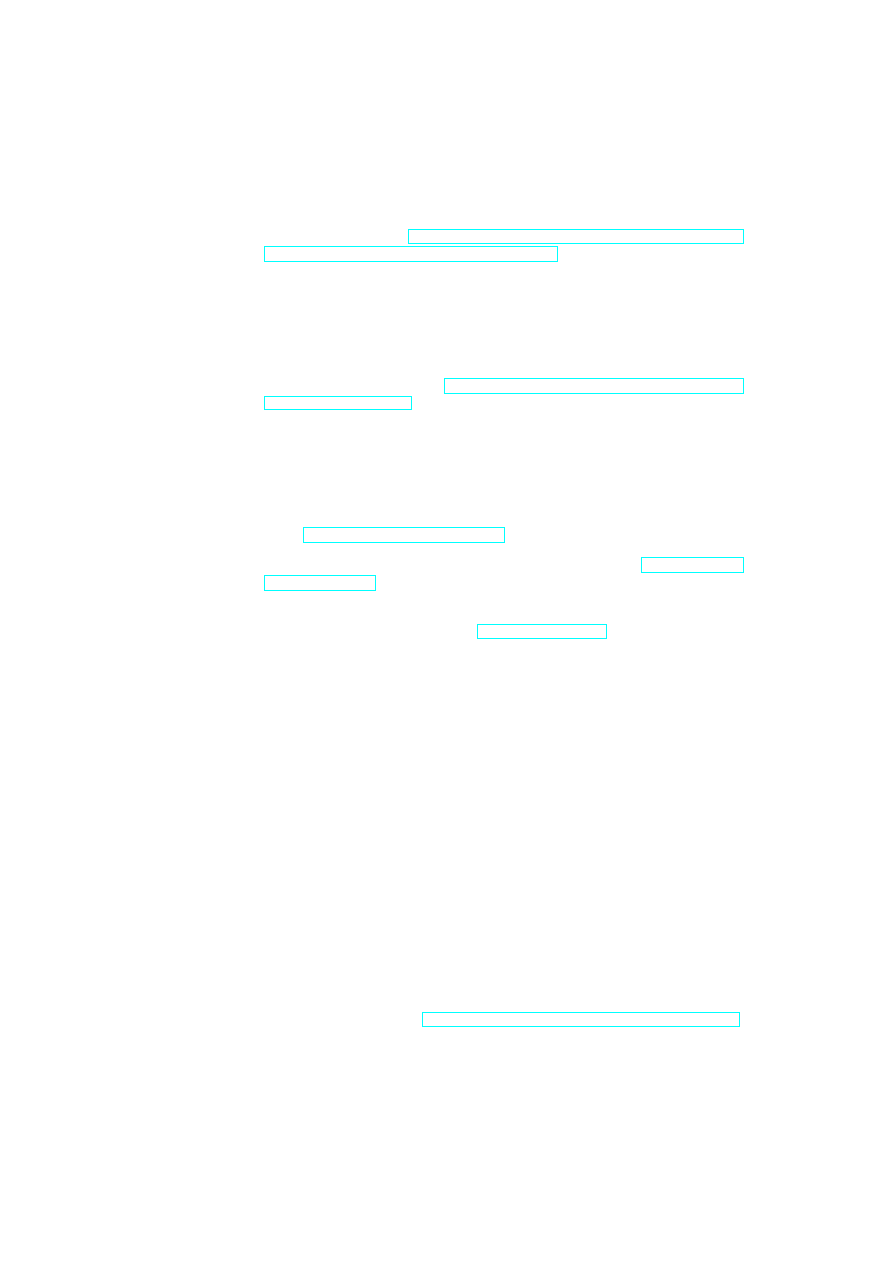

Figure 3.1: TVD Components and Security Services.

tion with the TVD components. Most importantly, the compartment manager service

manages the life-cycle of VMs in both para-virtualized and fully virtualized modes.

This service works in collaboration with the TVD Proxy to admit VMs into TVDs.

The integrity manager service implements Trusted Computing extensions and assists

the TVD Proxy in host pre-admission and VM admission control. The virtual network

manager and virtual storage manager services are invoked by the TVD Proxy. They

implement resource virtualization technologies and enforce parts of the high-level TVD

policies that are relevant to their operation. Lastly, the virtual device manager service

handles the secure resource allocation and setup of virtual devices assigned to each

VM.

Our TVD infrastructure is geared towards automated deployment and enforcement

of security policies specified by the TVD Master. Automated refinement and transla-

tion of high-level policies into low-level configurations are of particular interest. For

example, for information flow between two hosts in a trusted data center environment,

other mechanisms need to be in place than for a flow between two hosts at opposite

ends of an untrusted WAN link. In the latter case, the hosts should be configured to

allow communication between them only through a VPN tunnel.

Another important consideration is policy conflict detection and resolution [127,

76]. In fact, conflicting high-level policies (e.g., a connection being allowed in the

inter-TVD policy but disallowed in the intra-TVD policy) can potentially result in an

incorrect configuration of the underlying infrastructure. We cannot solely rely on the

TVD owner to specify conflict-free policies. It is important to detect policy conflicts

and provide feedback to the owner in case one is detected. In the present prototype,

policy refinement is performed manually. The result is a set of configuration files that

we use for configuring the security services at the policy enforcement layer (e.g., the

virtual networking infrastructure). In future work, we will investigate the automation

of this step using, for example, the IETF policy model [91] and various graph-based

mechanisms from the literature. We will also investigate different techniques for re-

solving conflicting policies [33, 17, 32, 38].

3.2

Virtual Networking Infrastructure

Virtual networking (VNET) technologies enable the seamless interconnection of VMs

that reside on different physical hosts as if they were running on the same machine.

In our TVD framework, we employ multiple technologies, including virtual switches,

Ethernet encapsulation, VLAN tagging, and VPNs, to virtualize the underlying net-

work and securely group VMs that belong to the same TVD. A single private virtual

network is dedicated to each TVD, and network separation is ensured by connecting

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

24

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

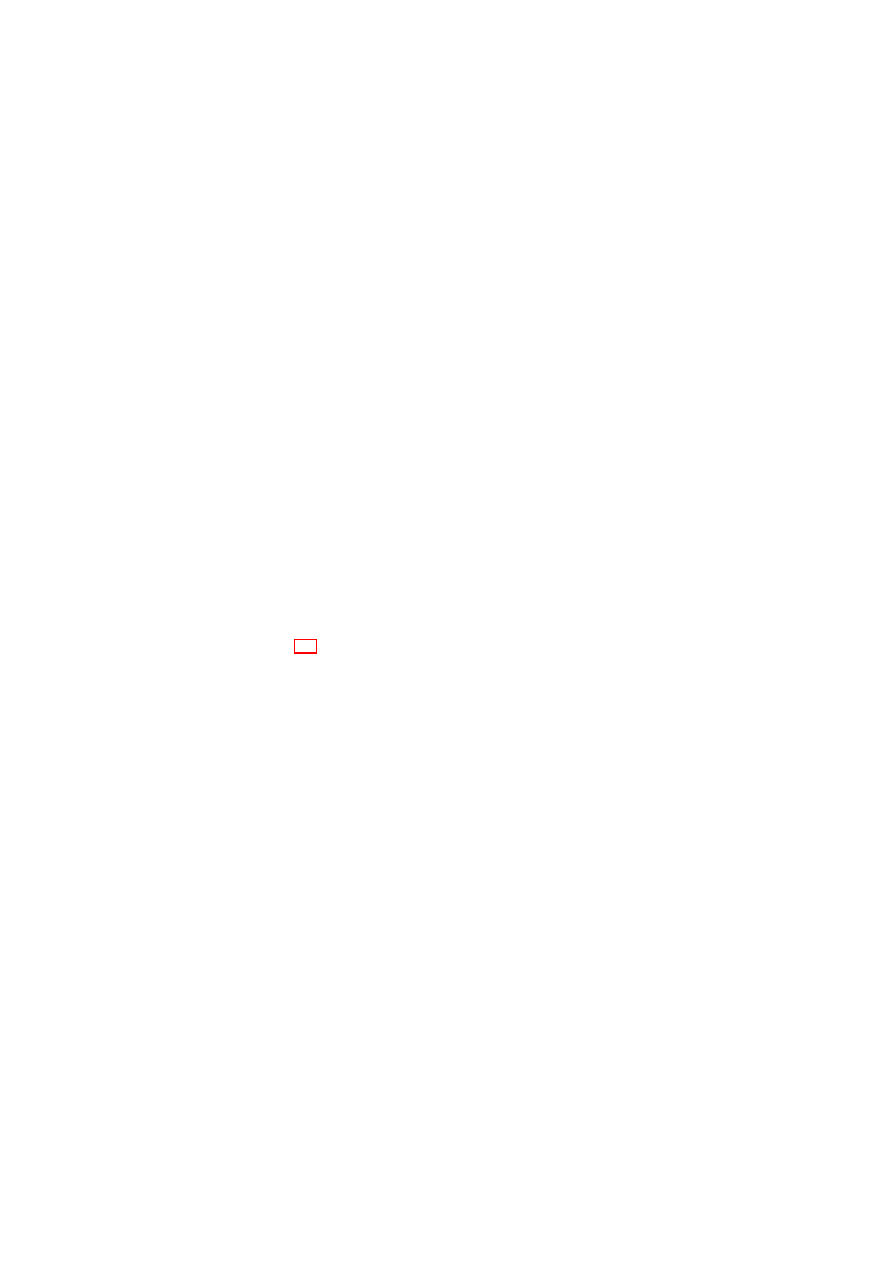

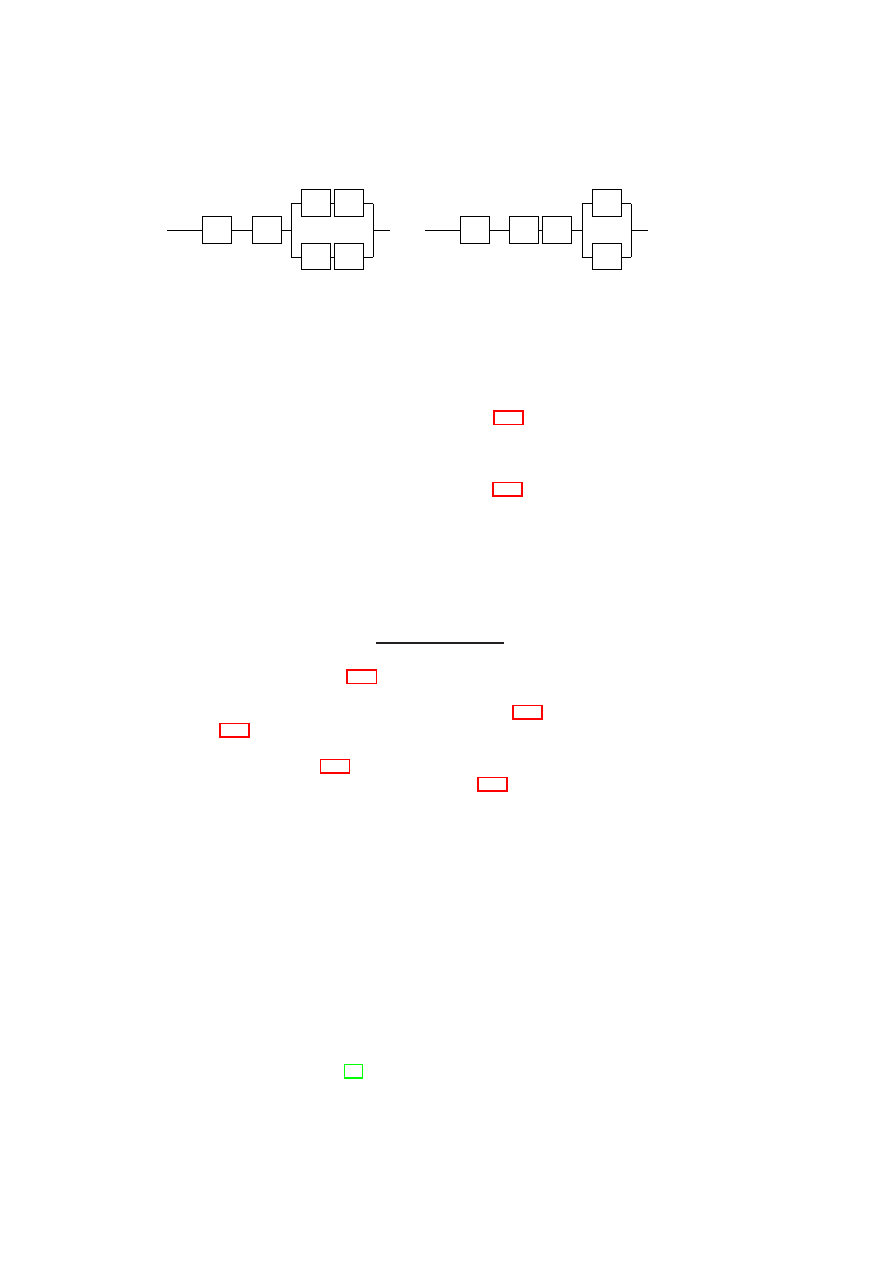

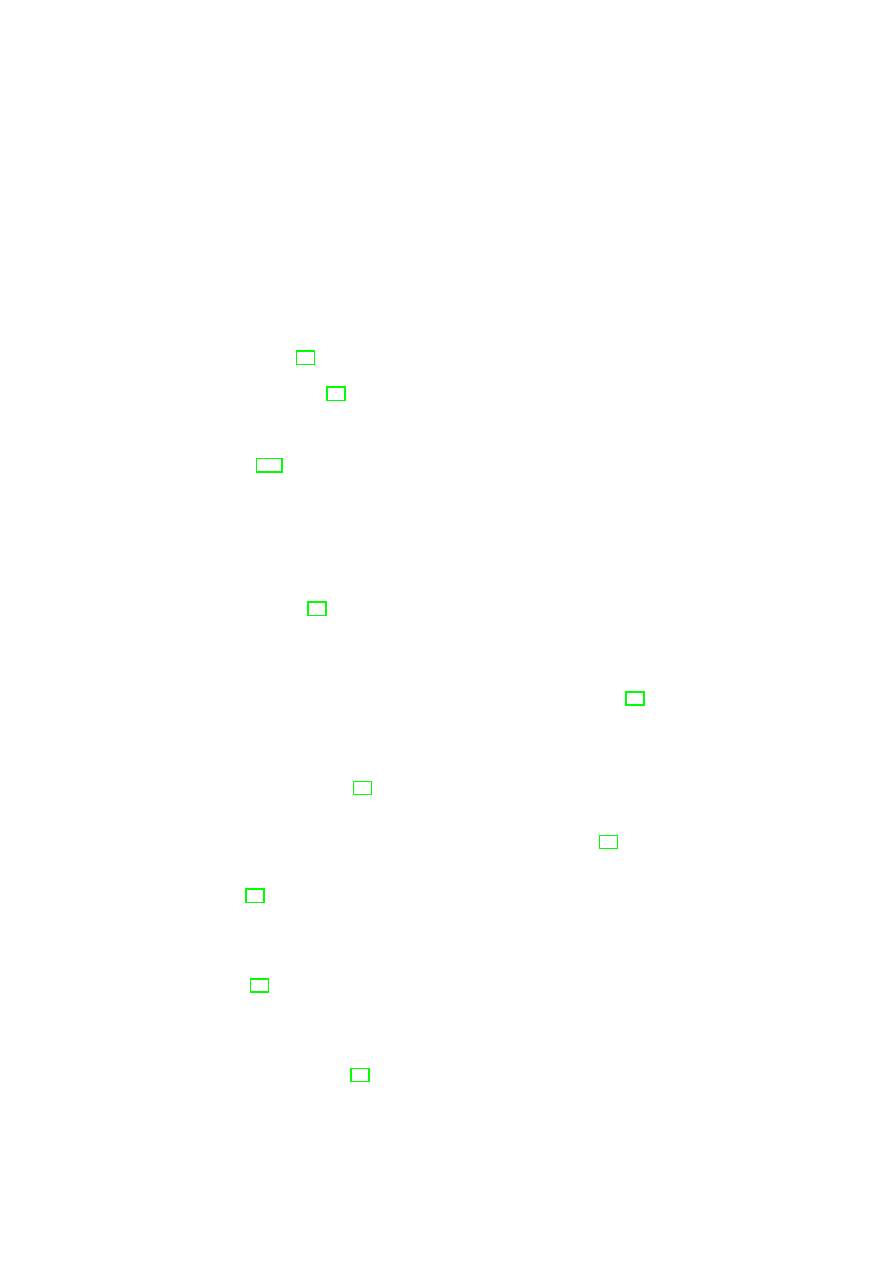

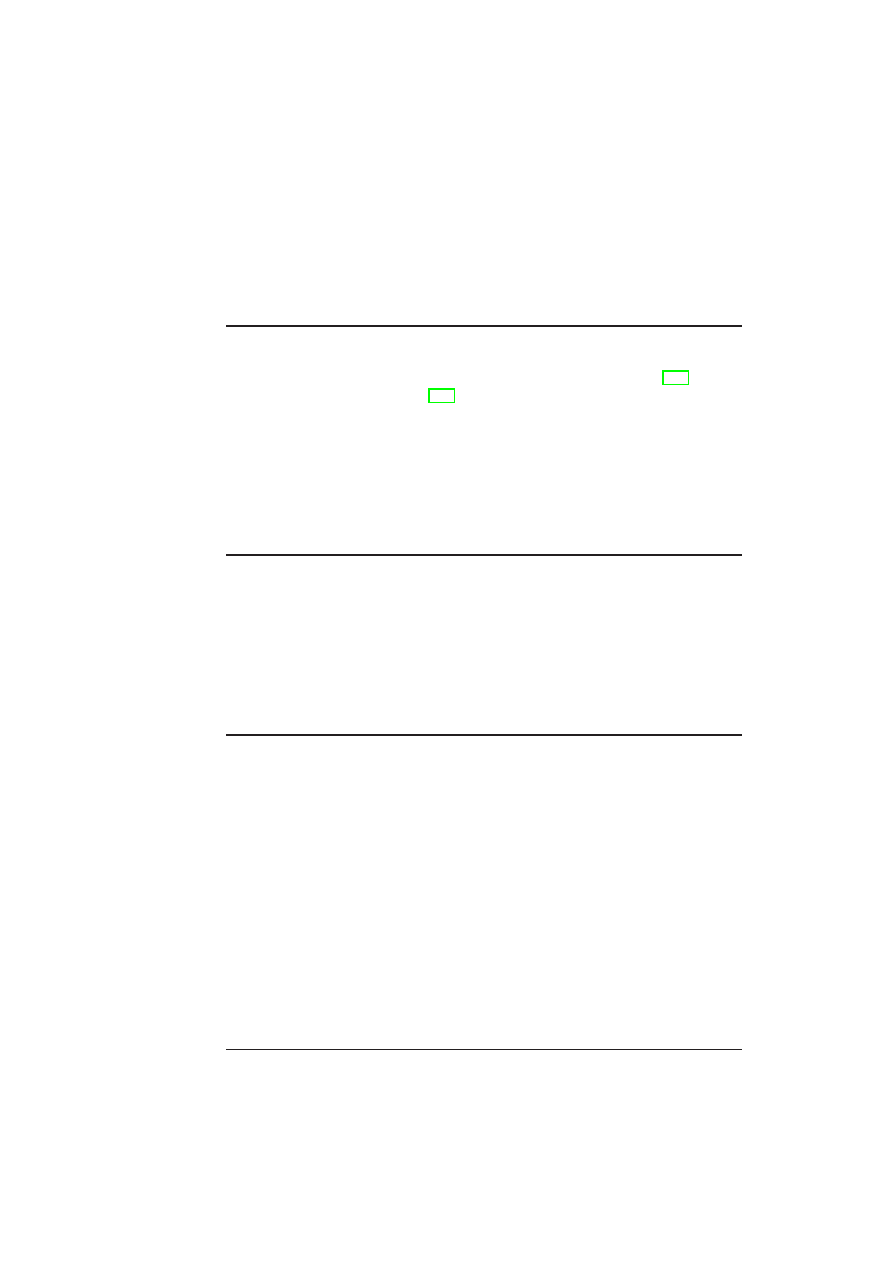

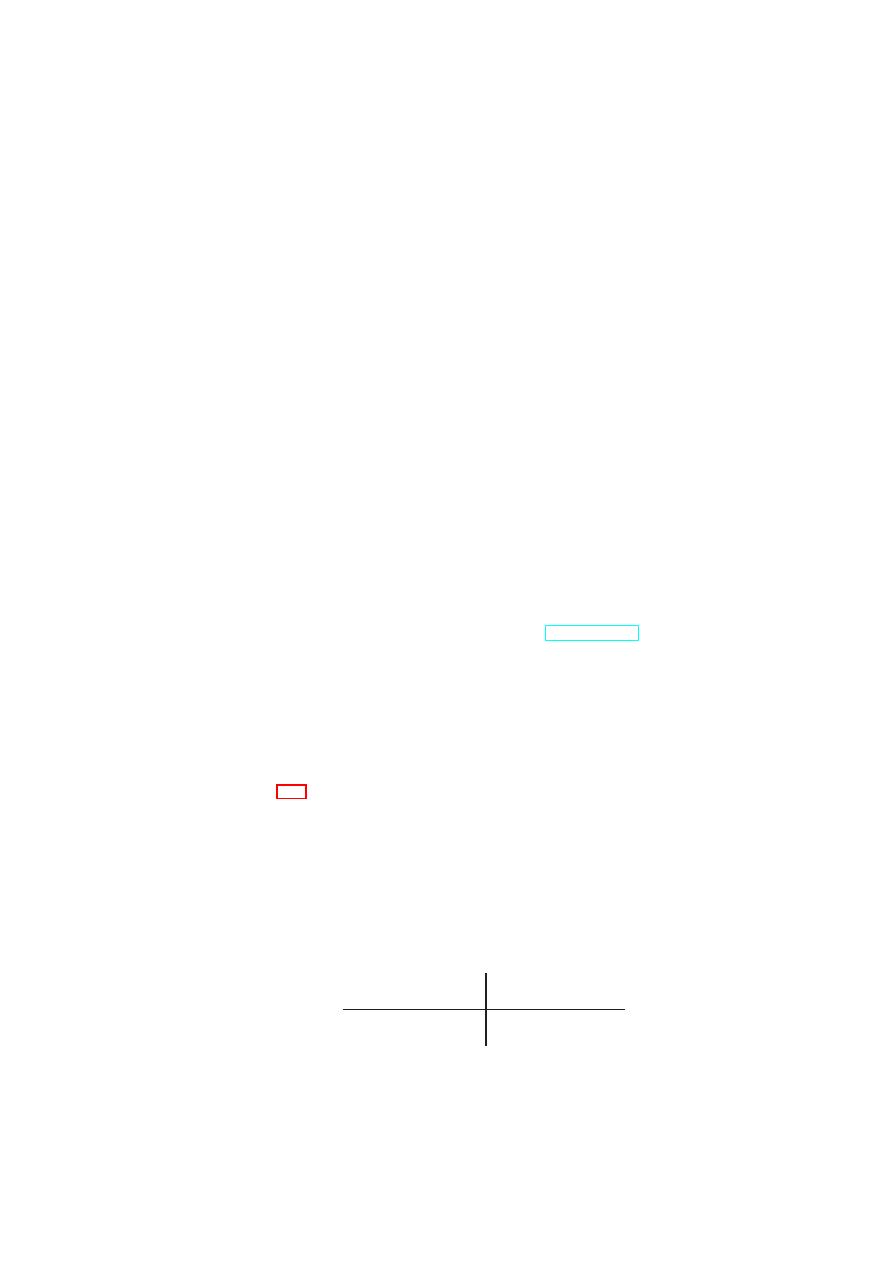

VM

VM

Eth

er

IP

Host C

Host D

Host A

VPN

WAN

WAN

vSwitch

VM

VM

VM

VM

VM

VM

VM

VM VM

VM

vSwitch

VM

VM

Tag

ge

r

vSwitch

Eth

er

IP

VLAN Switch

Host B

VM

VM

Tag

ge

r

vSwitch

VPN

Tag

ge

r

Eth

er

IP

Eth

er

IP

VPN

Eth

er

IP

VPN

vSwitch

VPN

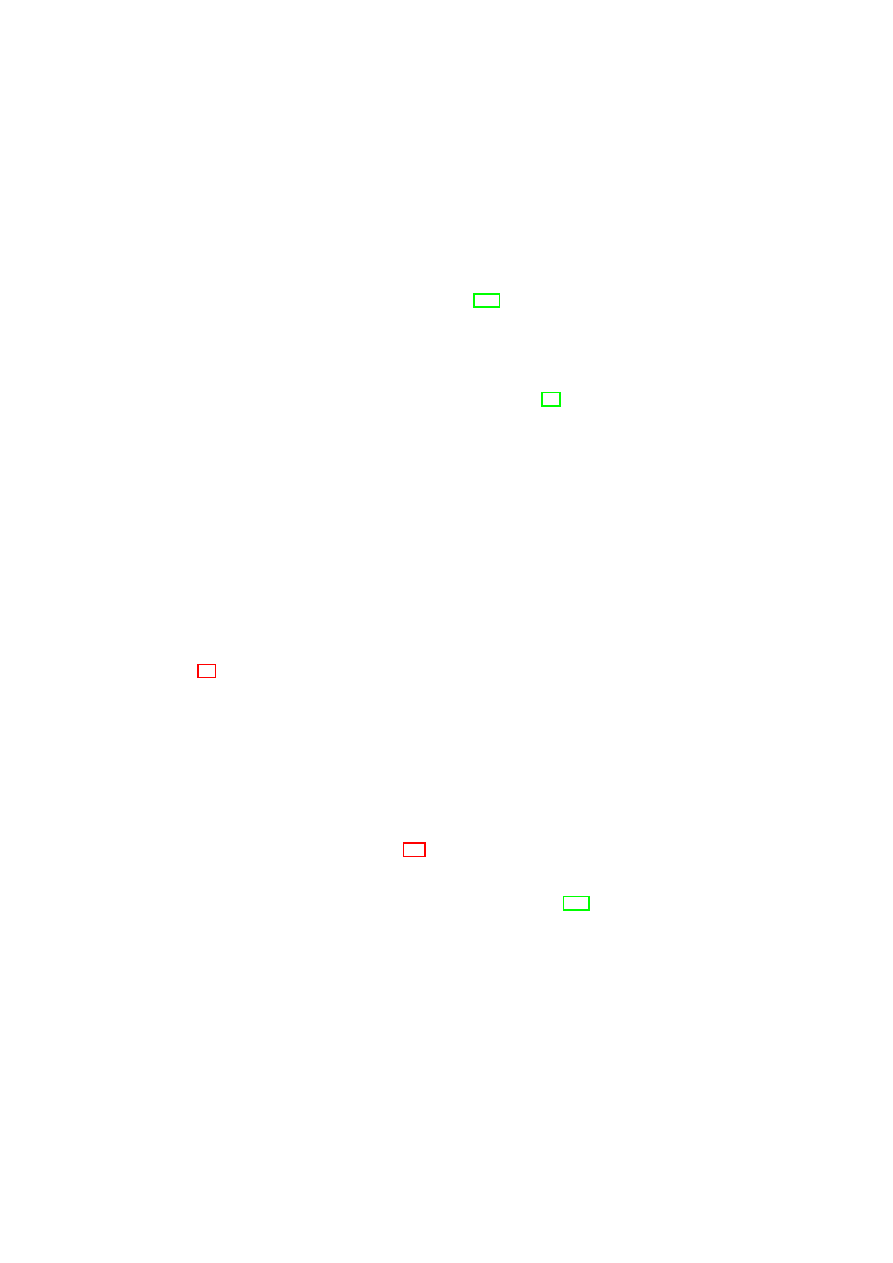

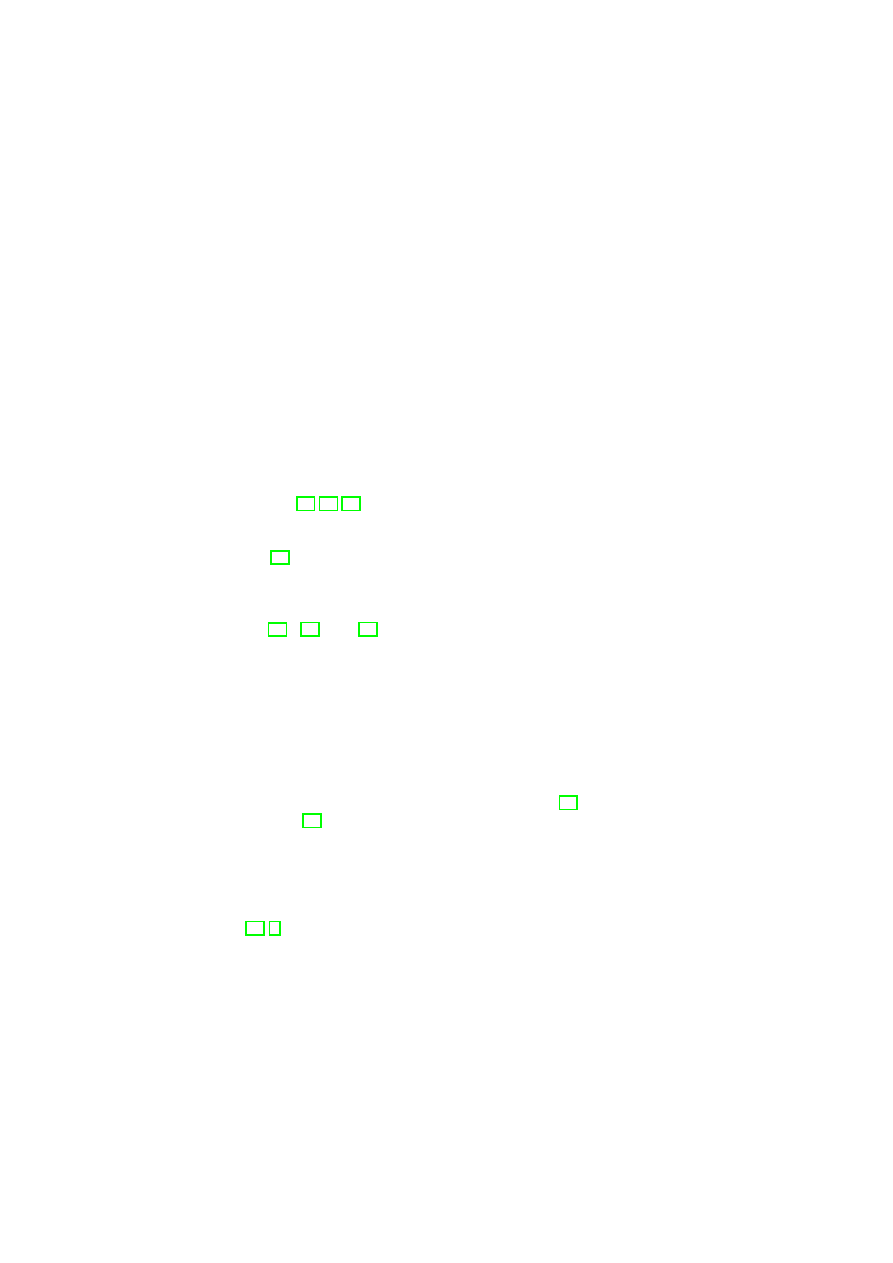

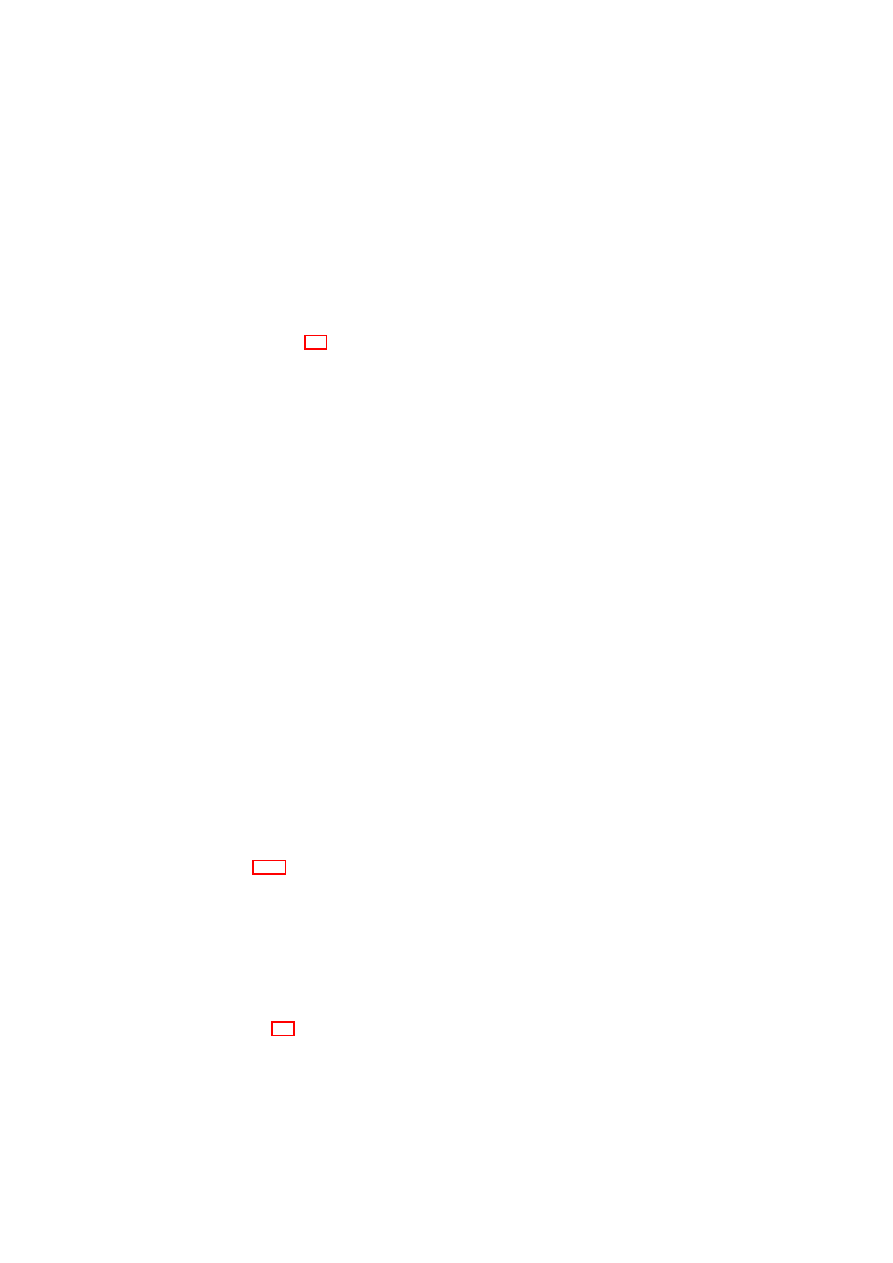

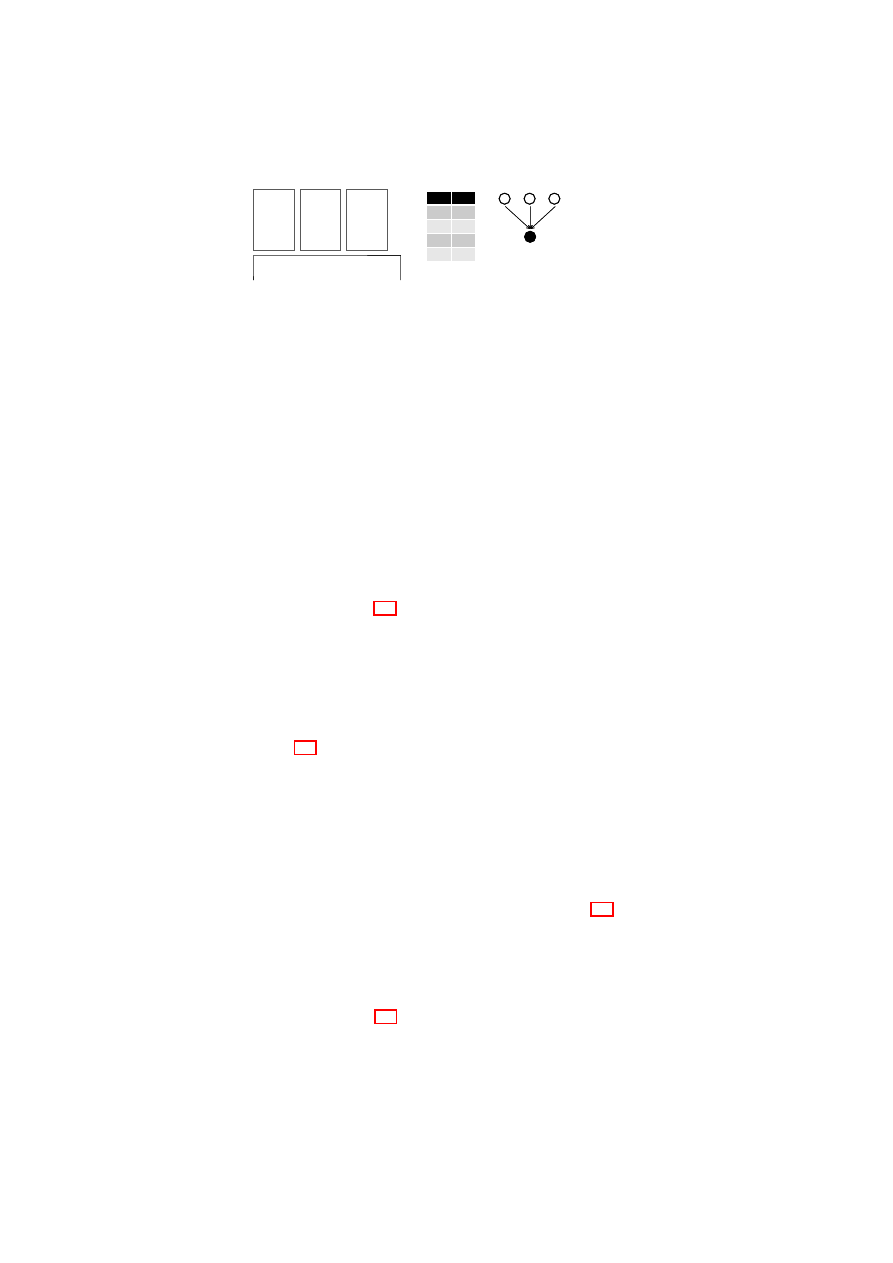

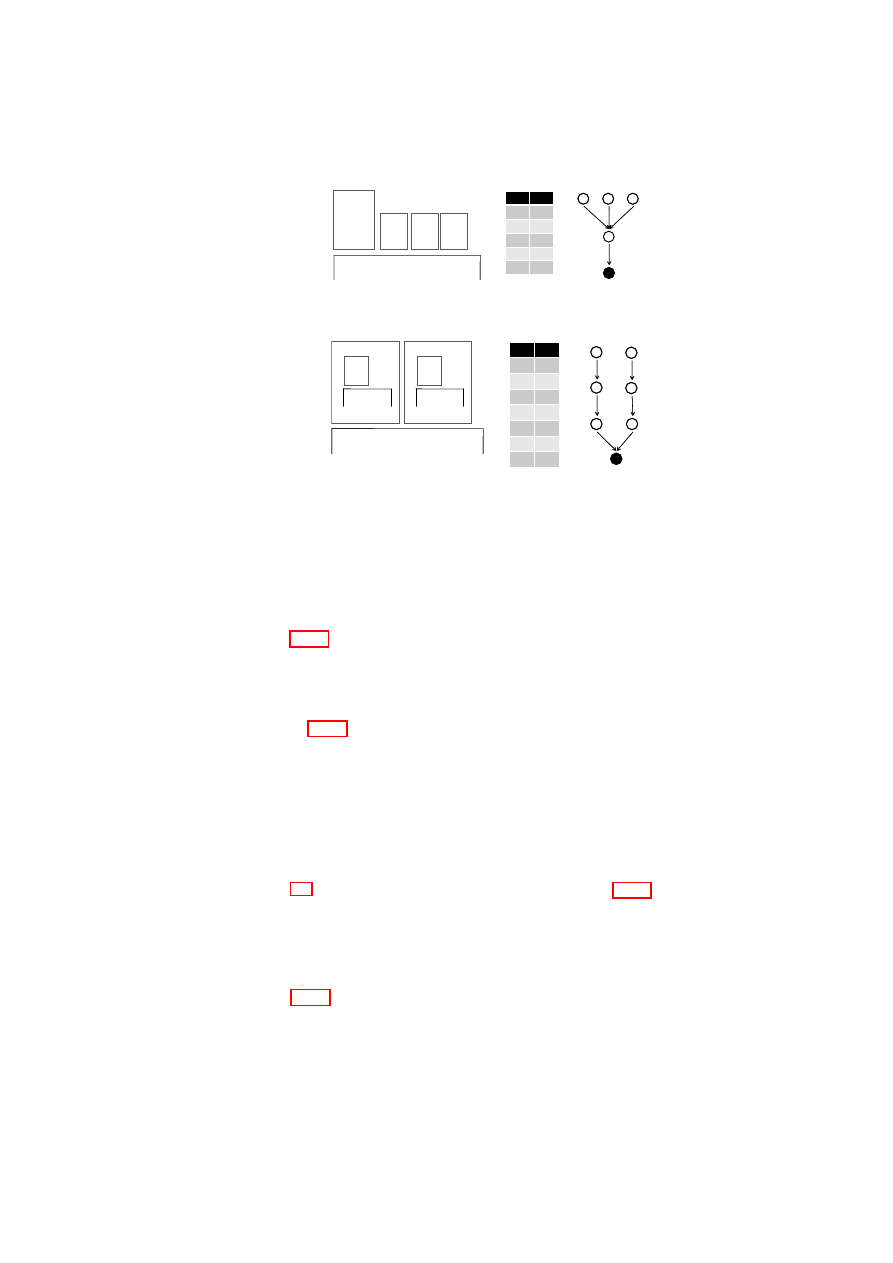

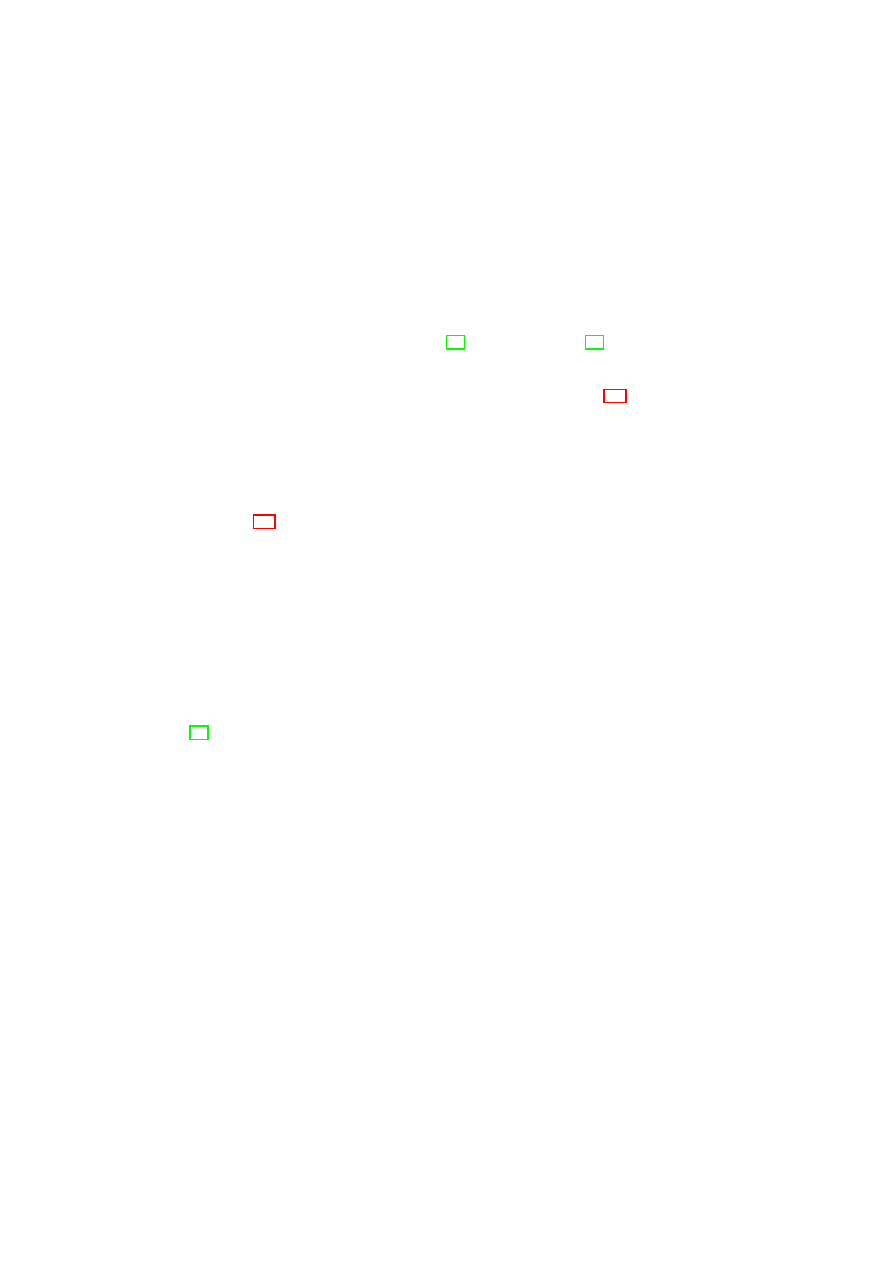

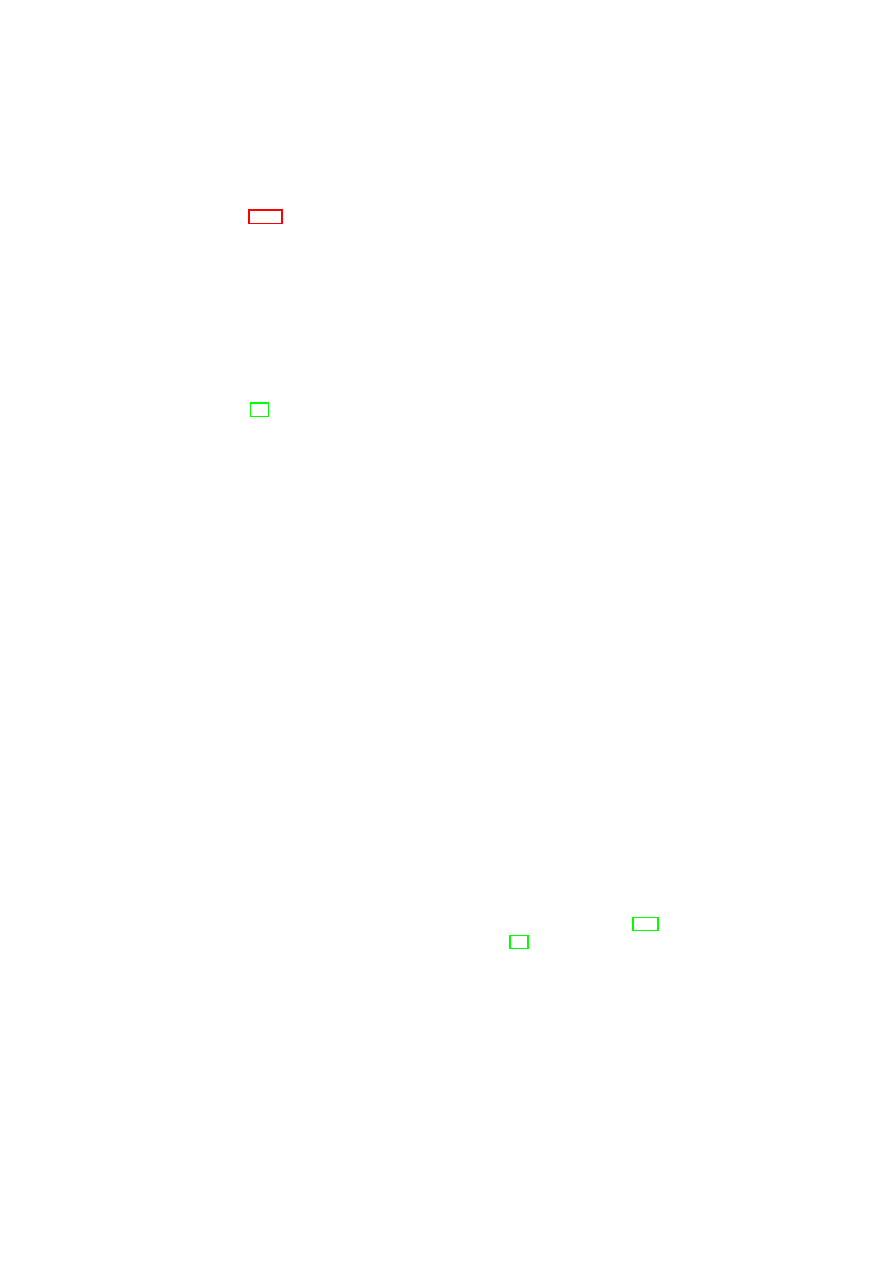

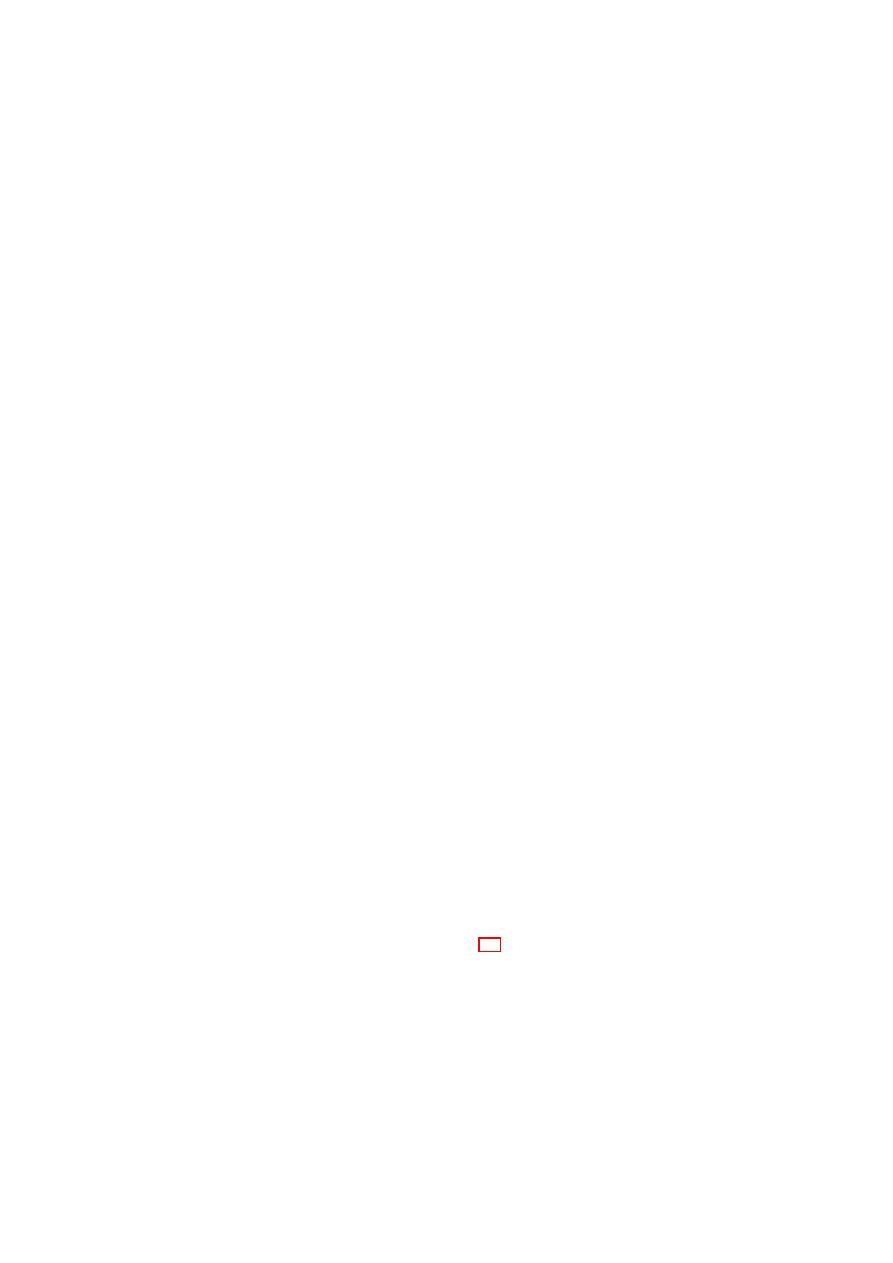

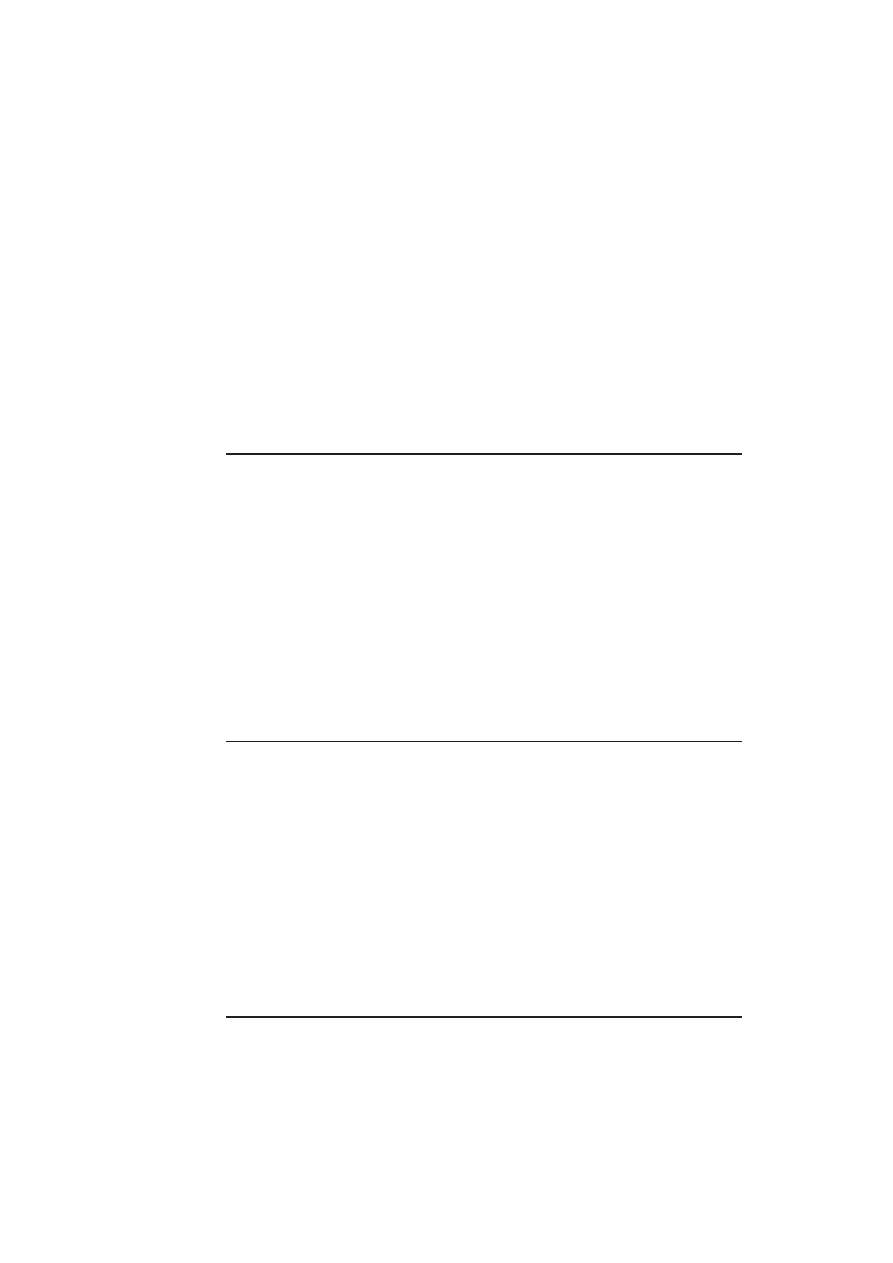

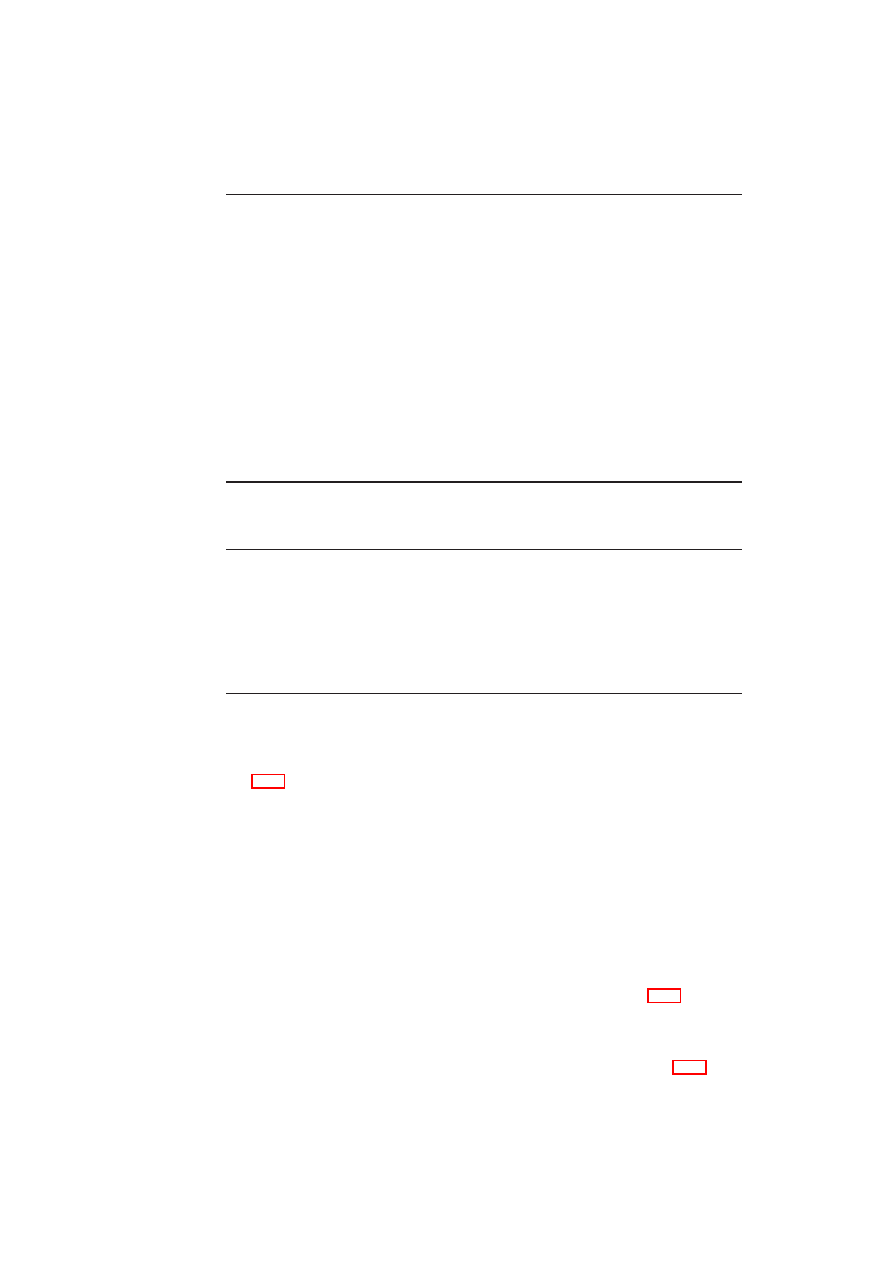

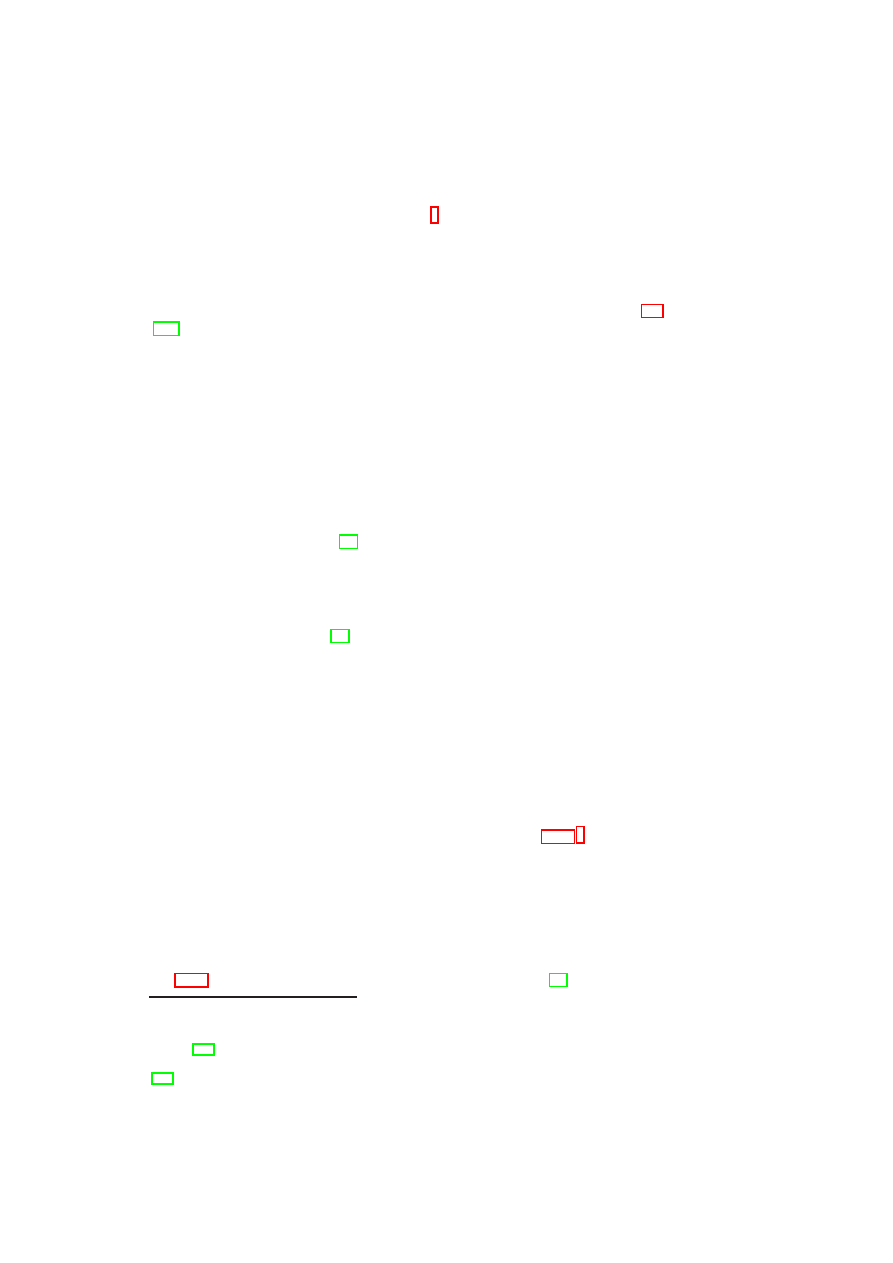

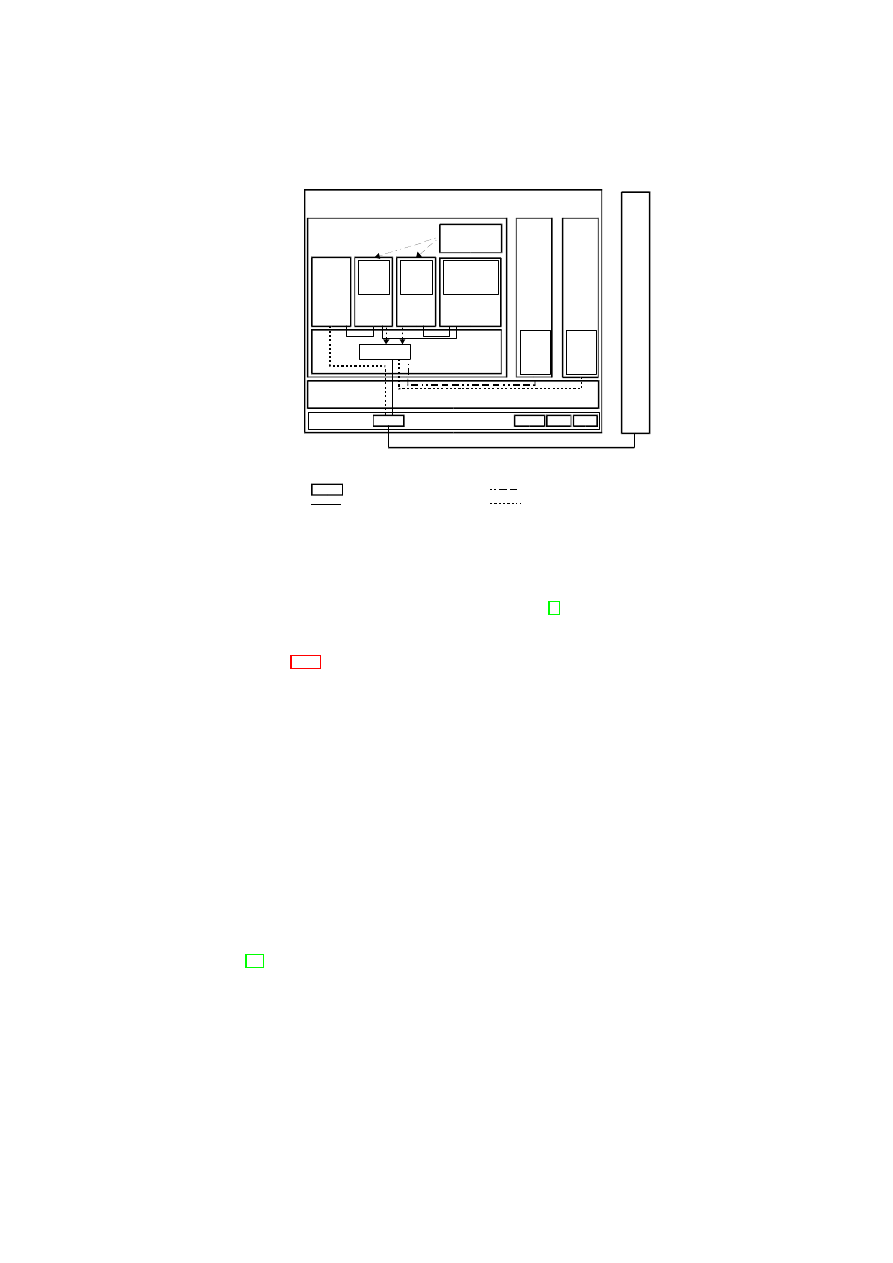

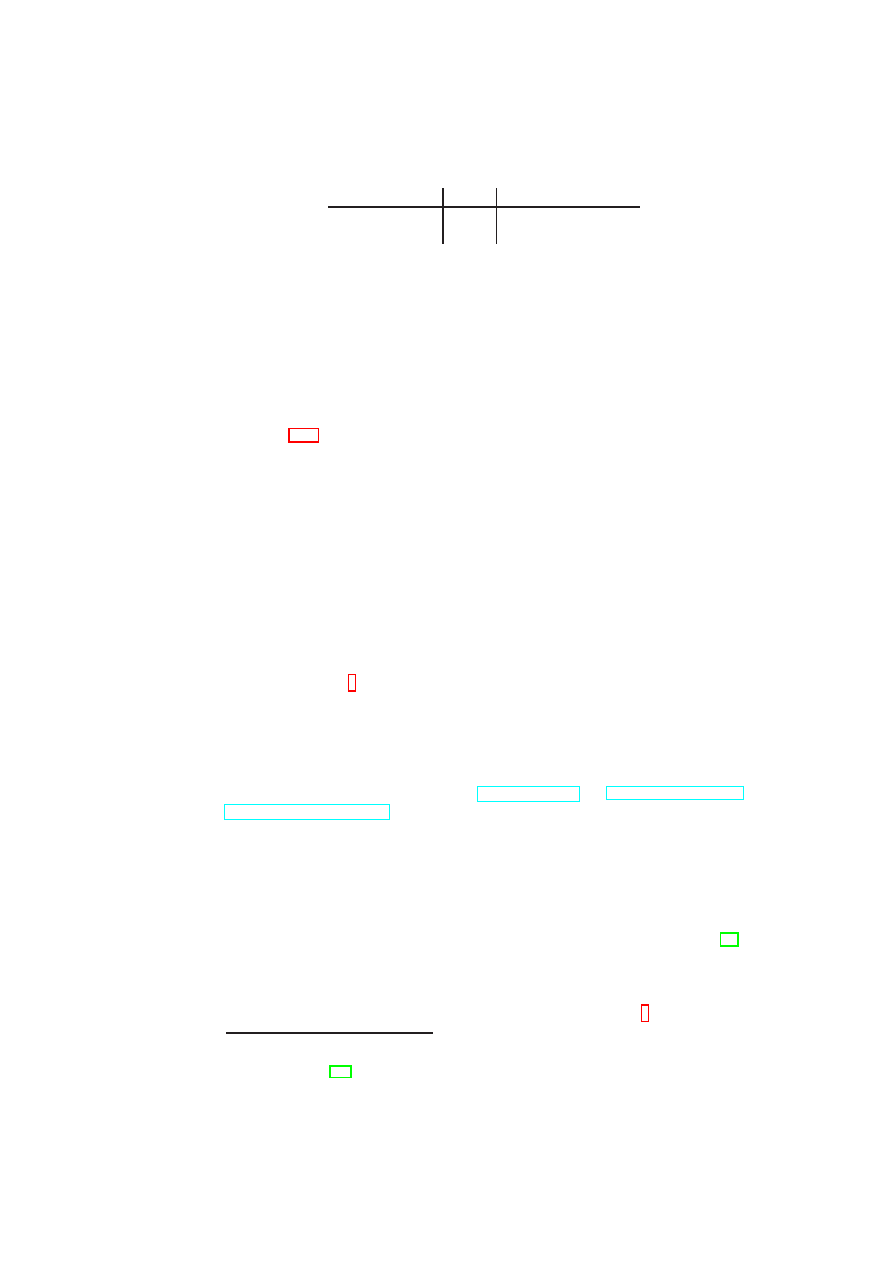

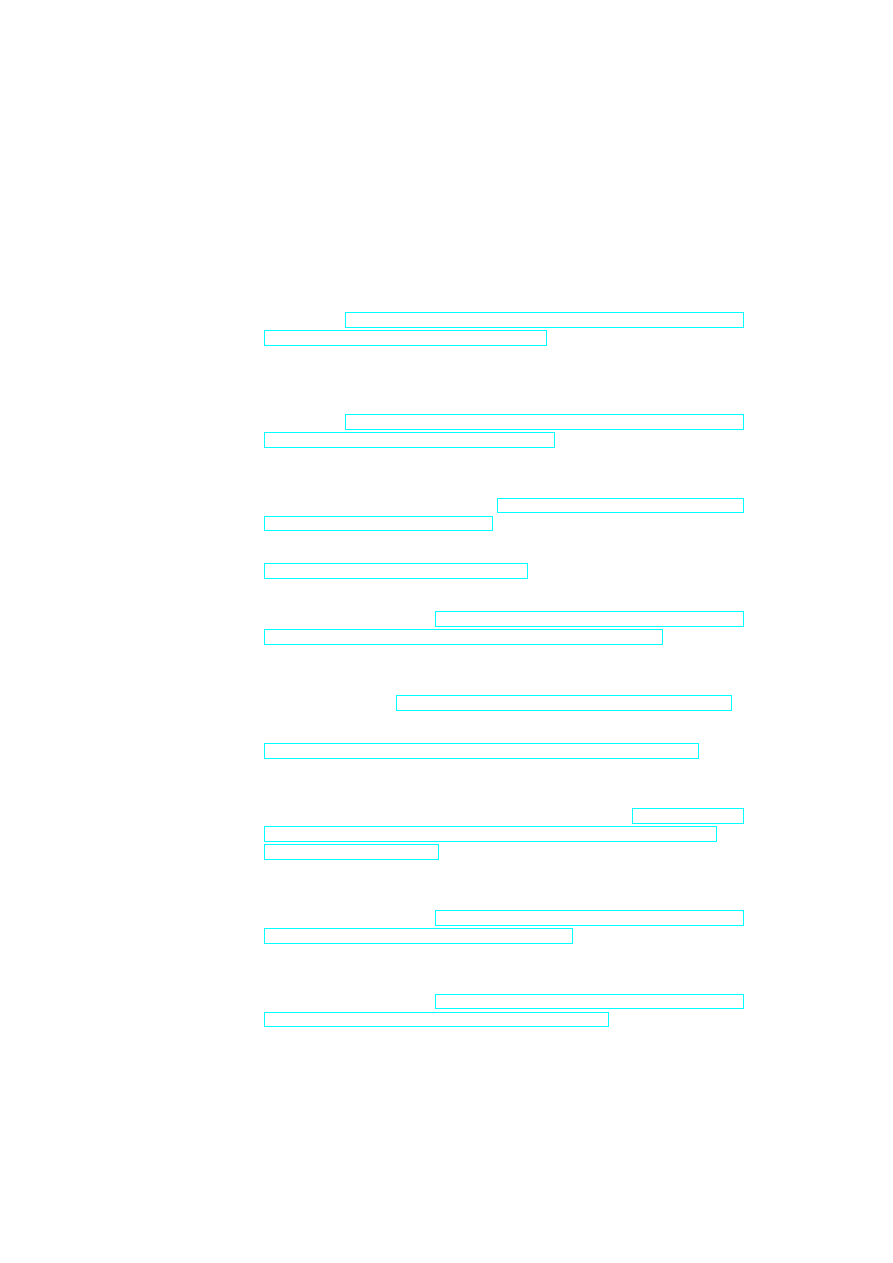

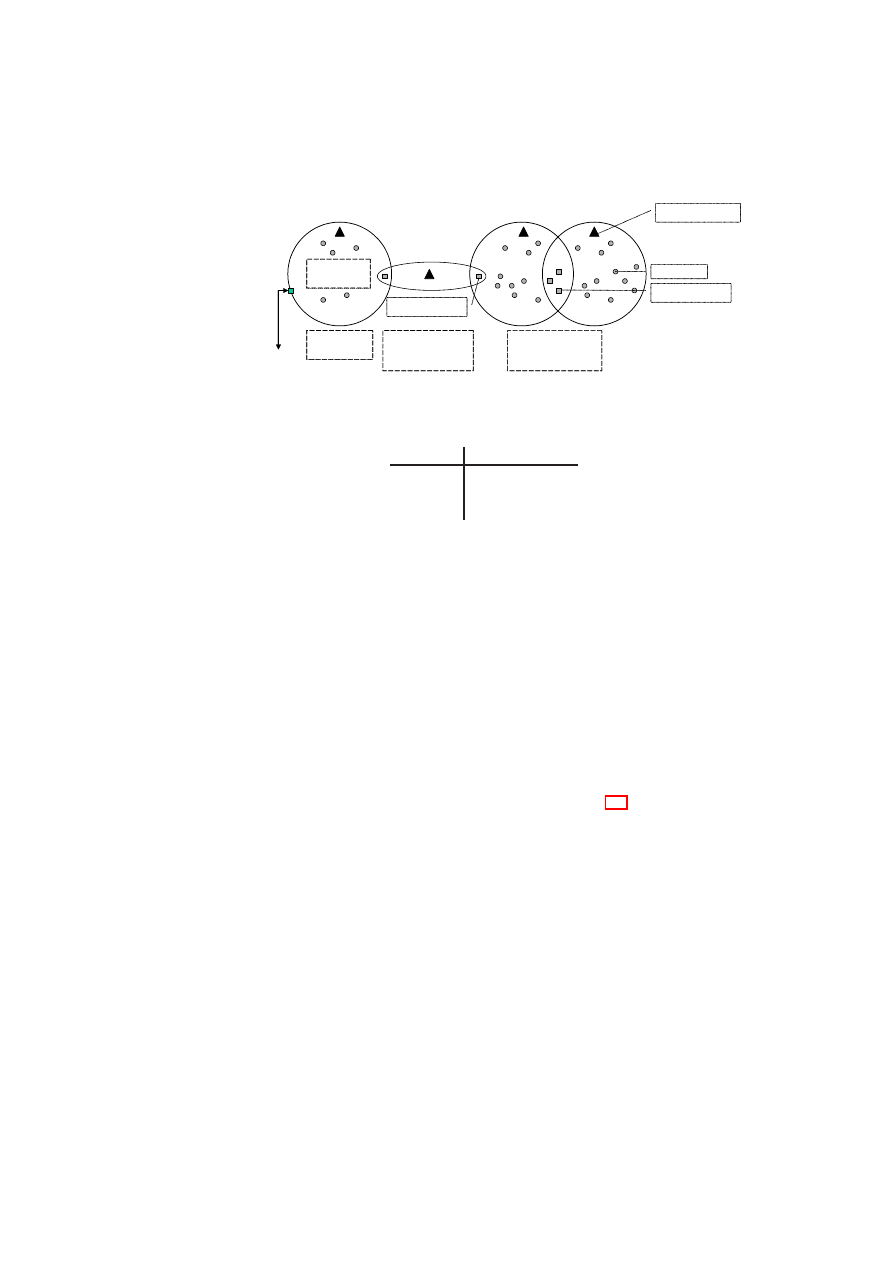

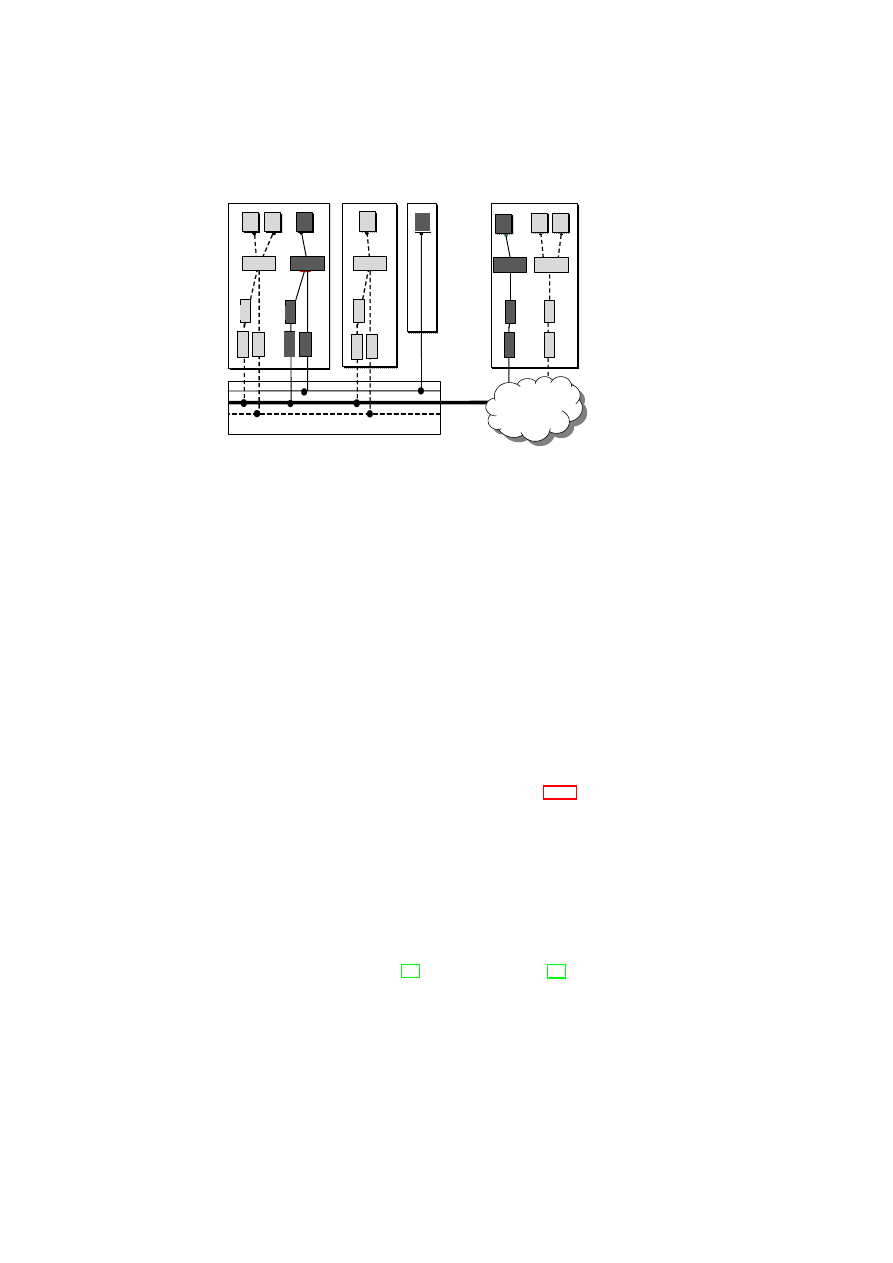

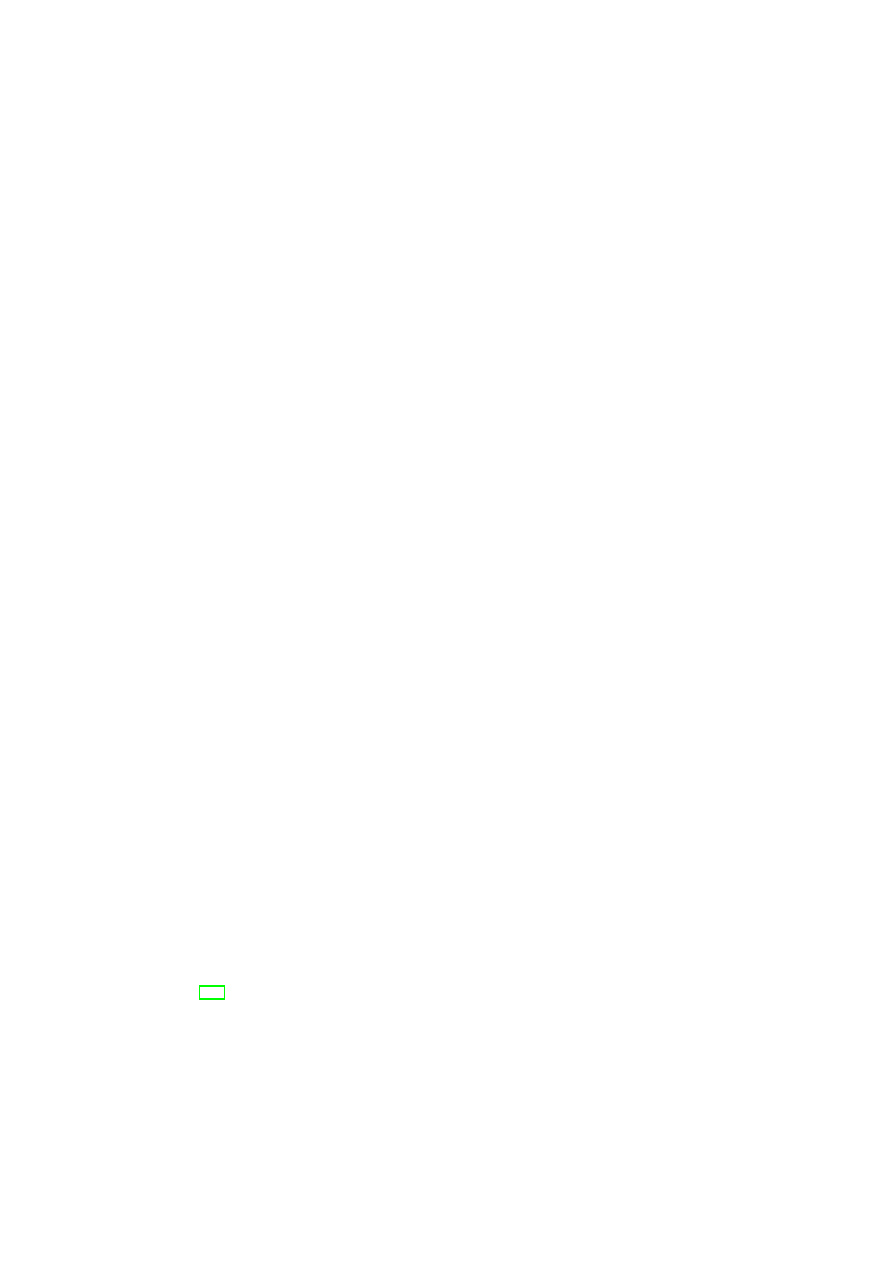

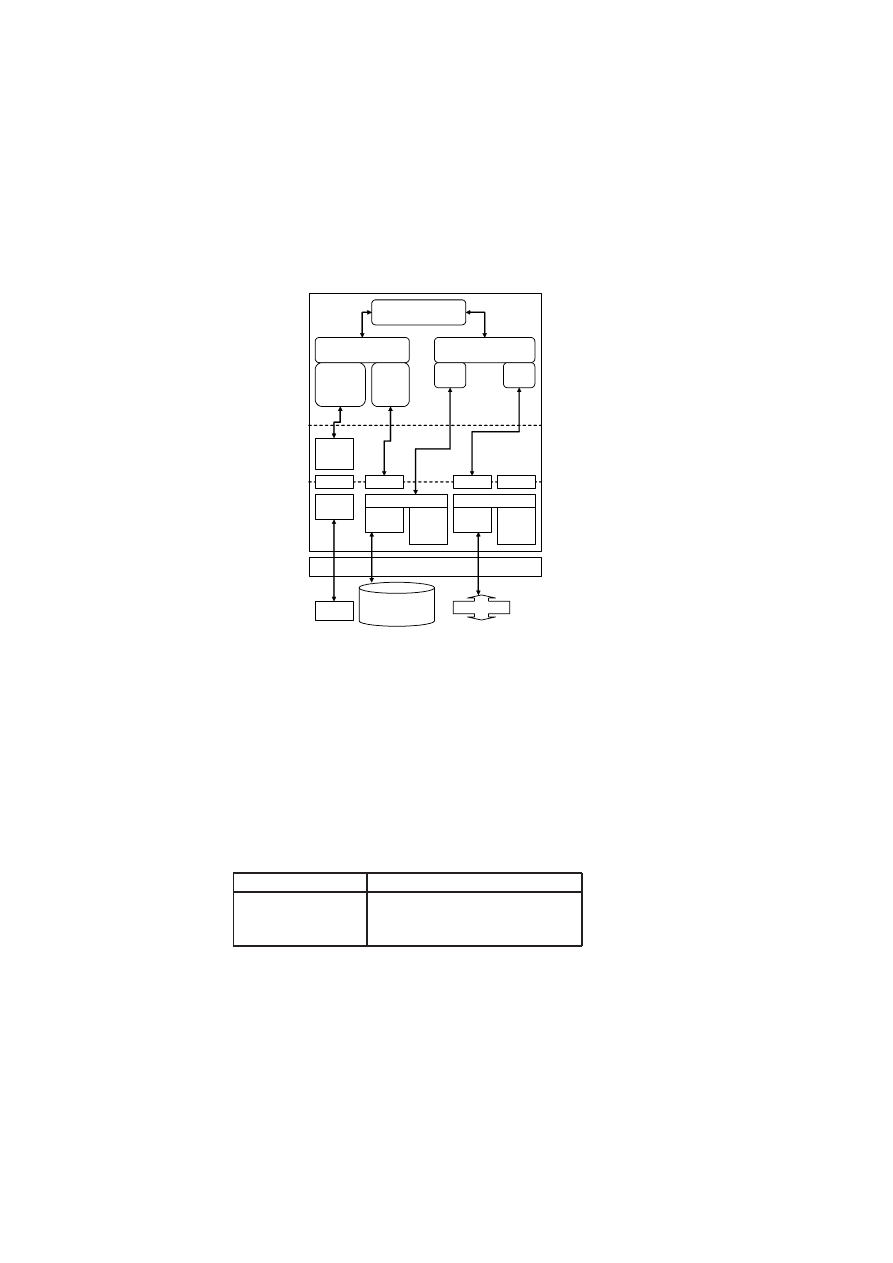

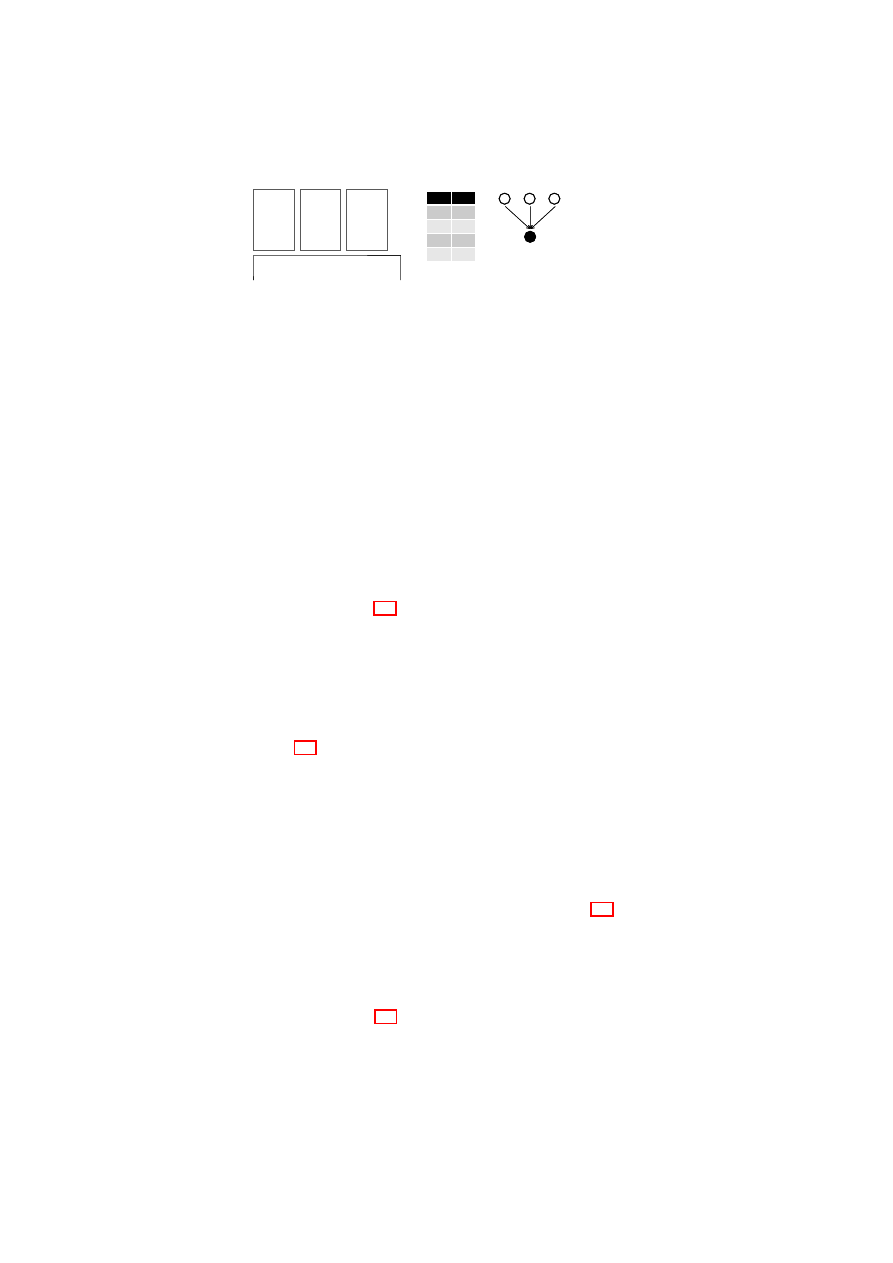

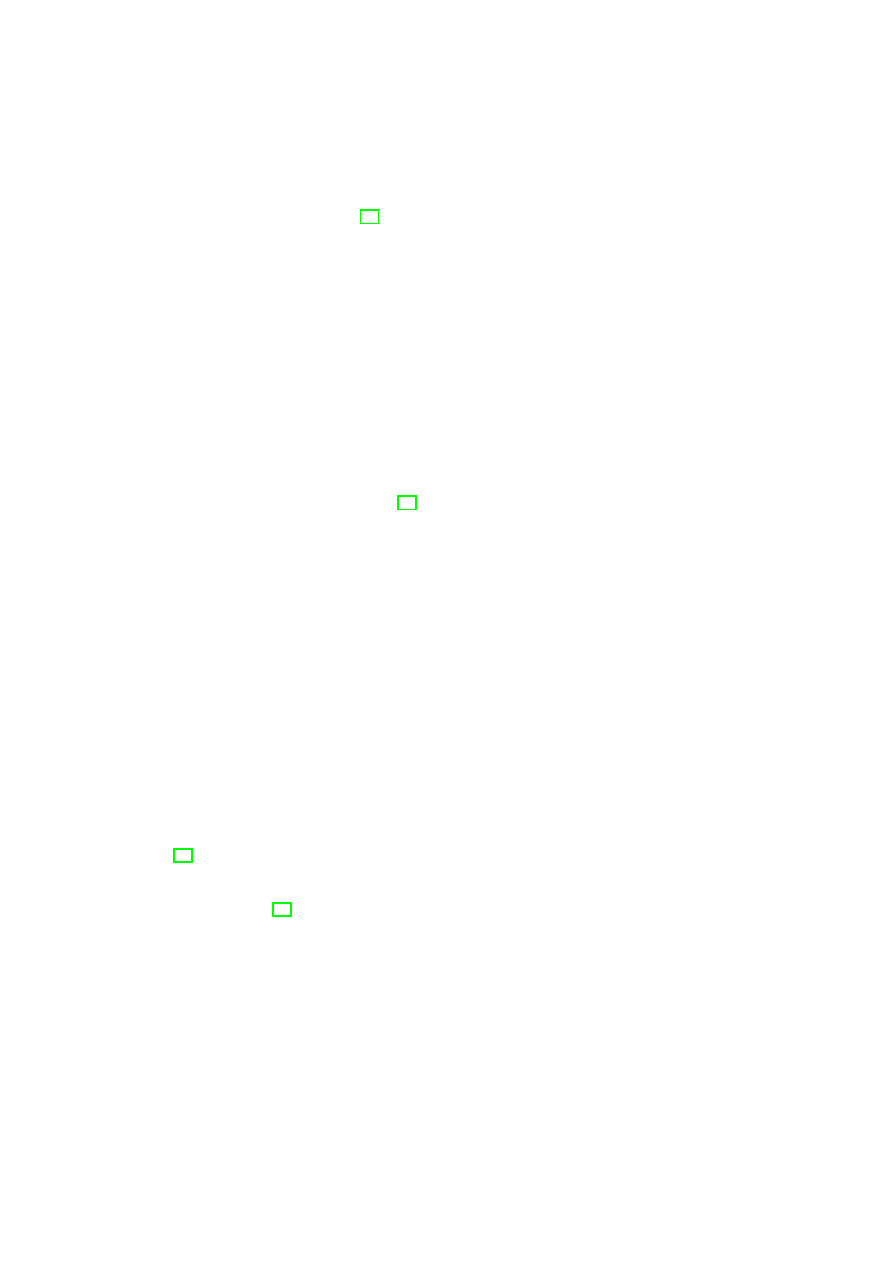

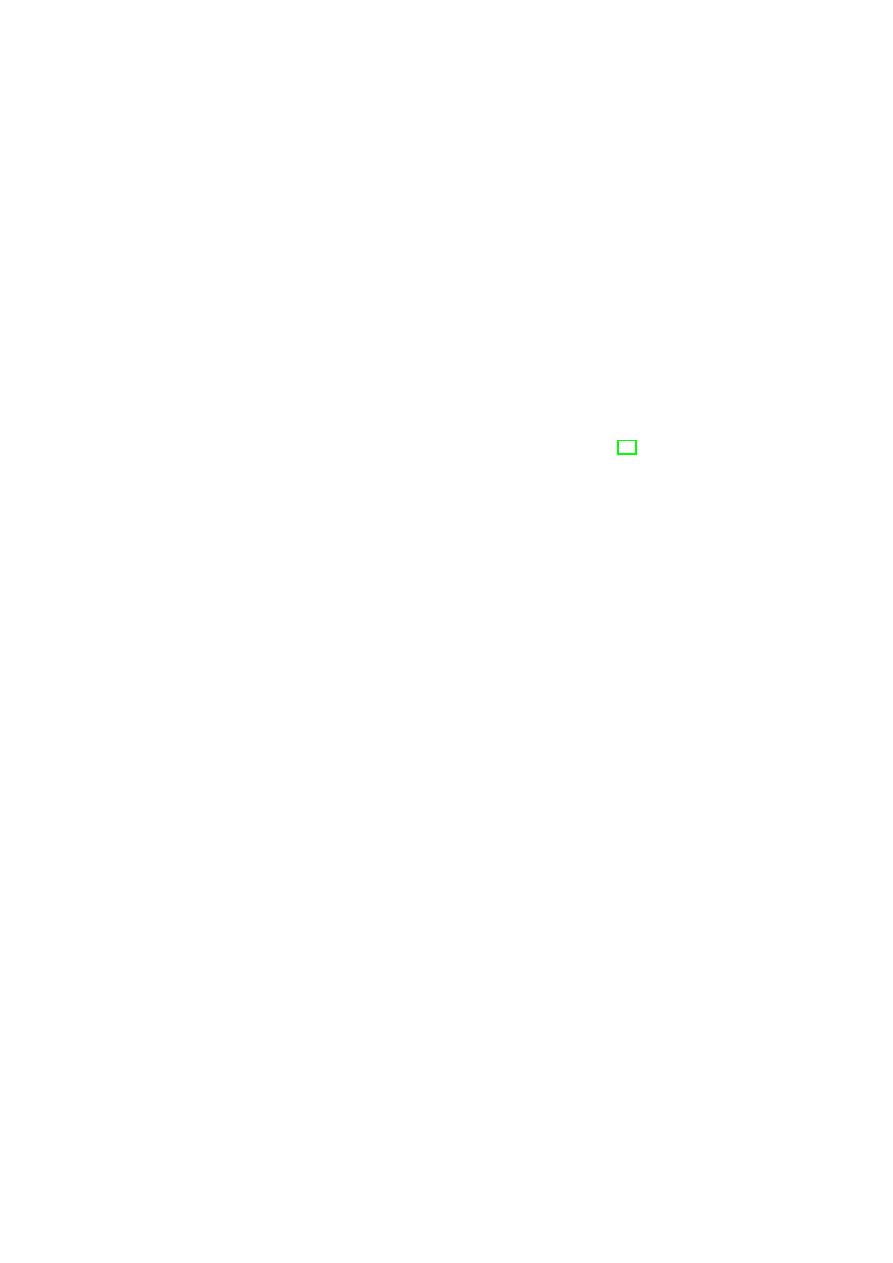

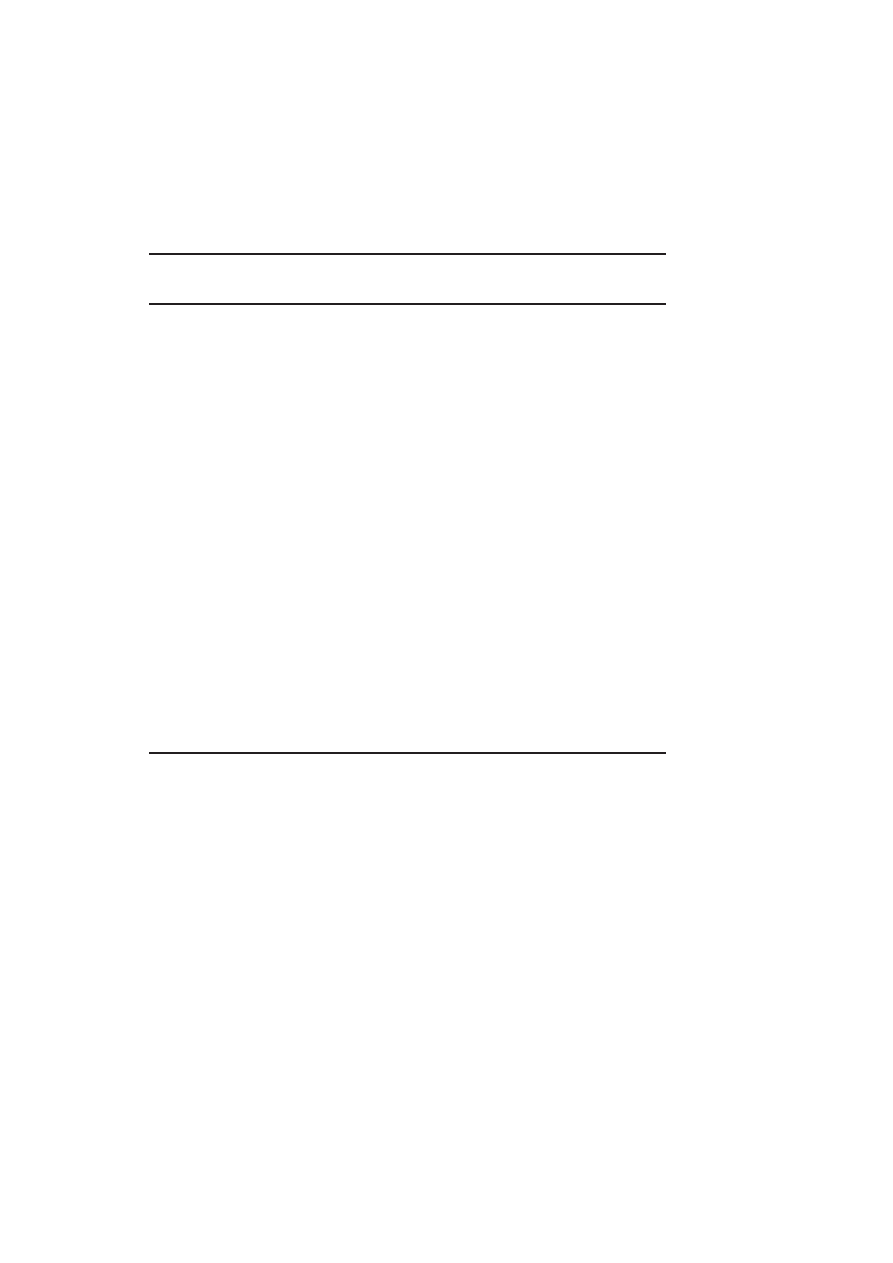

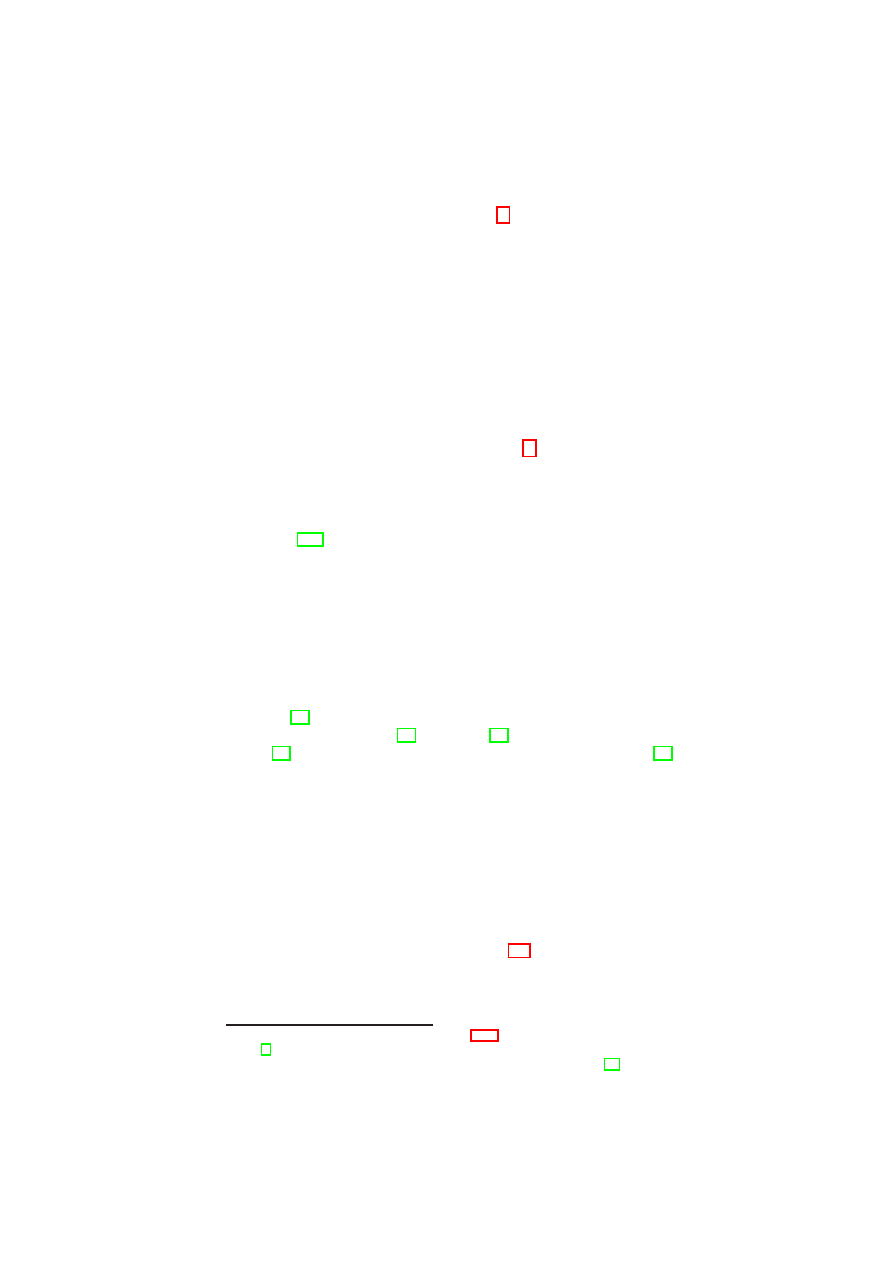

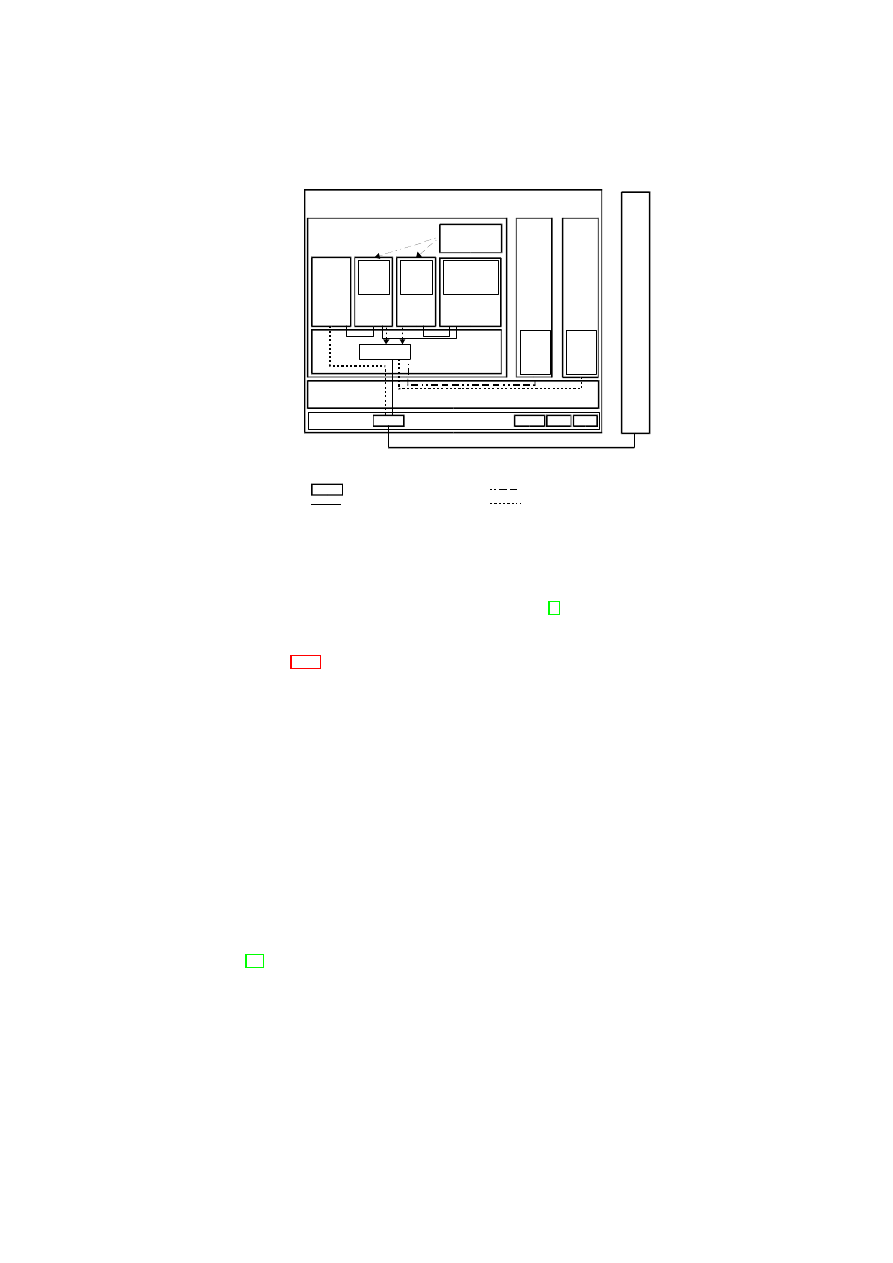

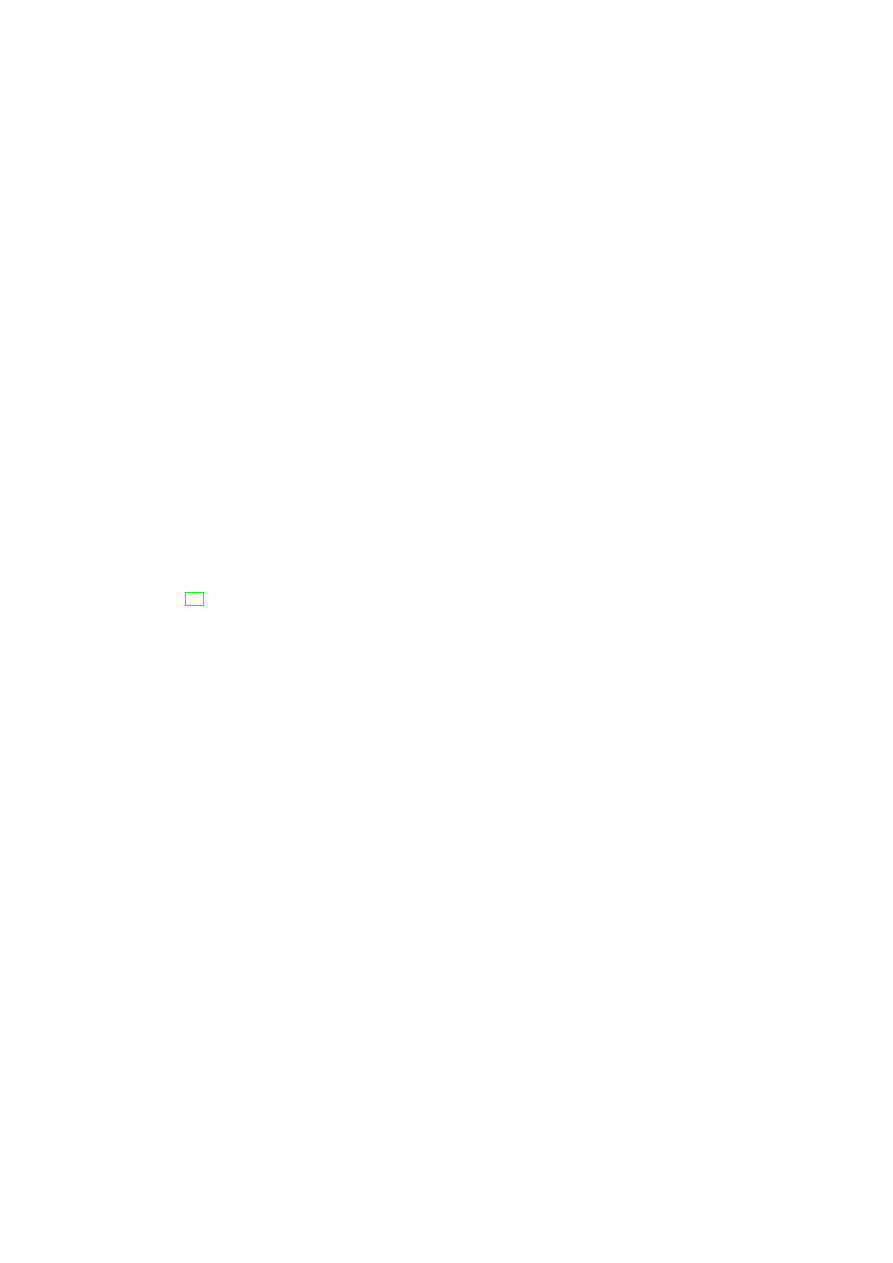

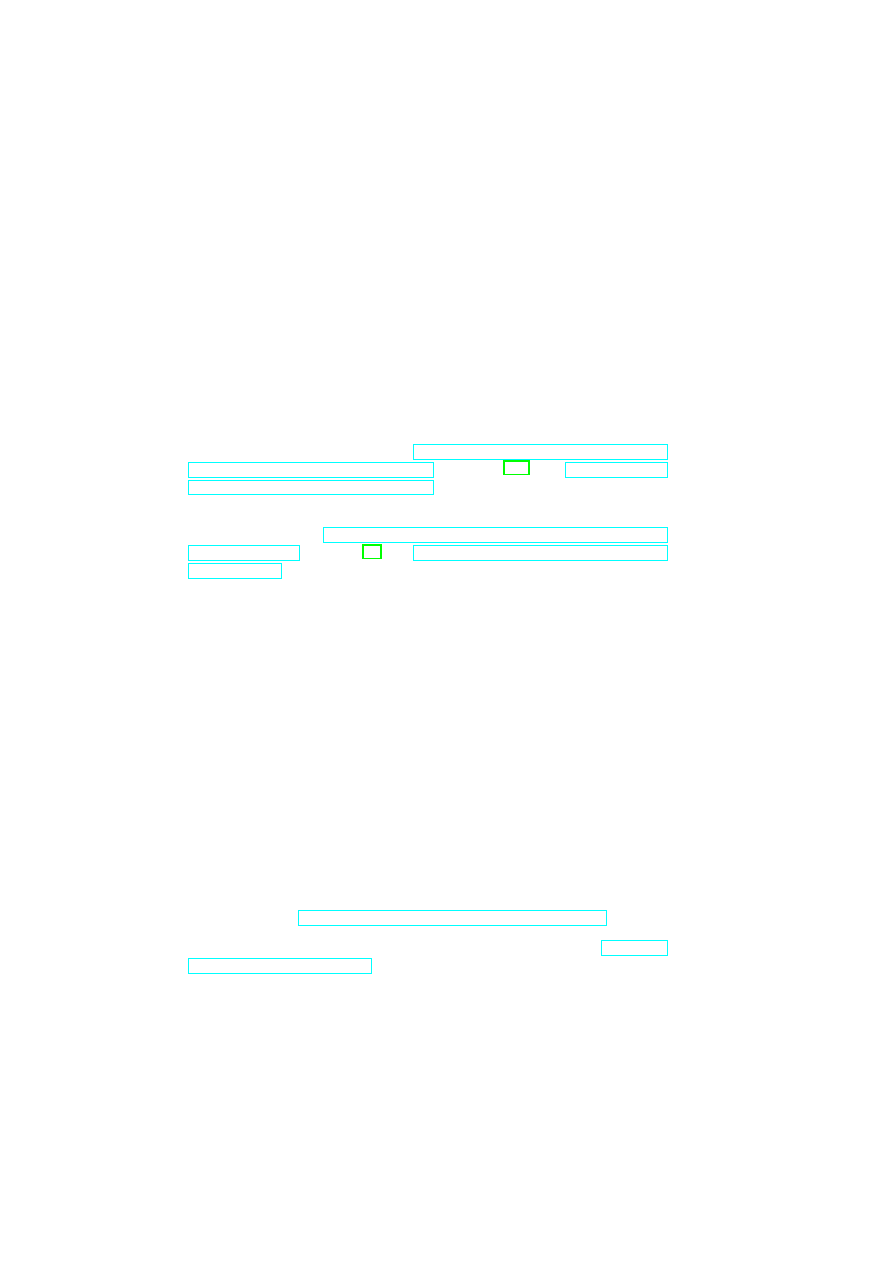

Figure 3.2: General vSwitch Architecture.

the VMs at the Ethernet level. Logically speaking, we provide a separate “virtual

infrastructure” for each TVD in which we control and limit the sharing of network re-

sources (such as routers, switches) between TVDs. This also provides the TVD owner

with the freedom to deploy a wide range of networking solutions on top of the TVD

network infrastructure. Network address allocations, transport protocols, and other ser-

vices are then fully customizable by the TVD owner and work transparently as if the

VMs were in an isolated physical network. To maintain secrecy and confidentiality of

network data (where necessary), network communication is established over encrypted

VPN tunnels. This enables the transparent use of untrusted networks between physical

hosts that contain VMs within the same TVD to provide a seamless view of the TVD

network.

In this section, we introduce the technologies we use to implement a security-

enhanced VNET infrastructure for TVD owners. The concept of virtual switching is

central to our architecture, which is then protected by existing VPN technologies that

provide data confidentiality and integrity where needed. The VNET infrastructure acts

as the local enforcer of VNET policies. As described in Section 2.3.2, these policies

are based on the high-level TVD policies and translated into network configurations by

the TVD Proxy. The Proxy then deploys the whole VNET infrastructure with respect

to the translated configuration.

3.2.1

Virtual Switching

The virtual switch (vSwitch) is the central component of the virtual networking in-

frastructure and operates similarly to a physical switch. It is responsible for network

virtualization and isolation, and enables a virtual network to span multiple physical

hosts. To do so, the vSwitch uses EtherIP [52] and VLAN tagging [49] to insert VLAN

membership information into every network packet. The vSwitch also implements the

necessary address-mapping techniques to direct packets only to those machines that

host member VMs. Virtual switches provide the primitives for implementing higher-

level security policies for networking and are configured by the higher-level TVD man-

agement layer.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 3. UNIFIED POLICY ENFORCEMENT FOR VIRTUAL DATA CENTERS25

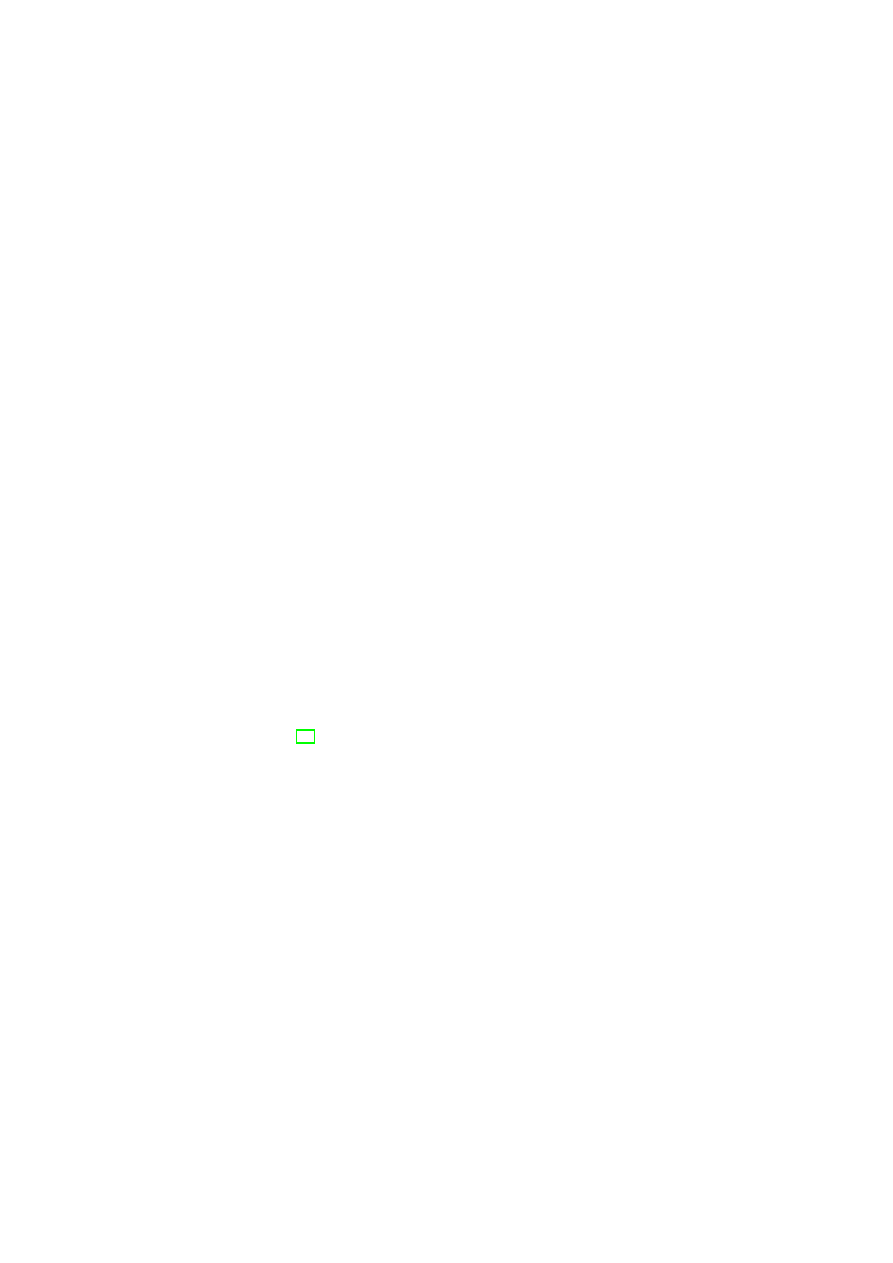

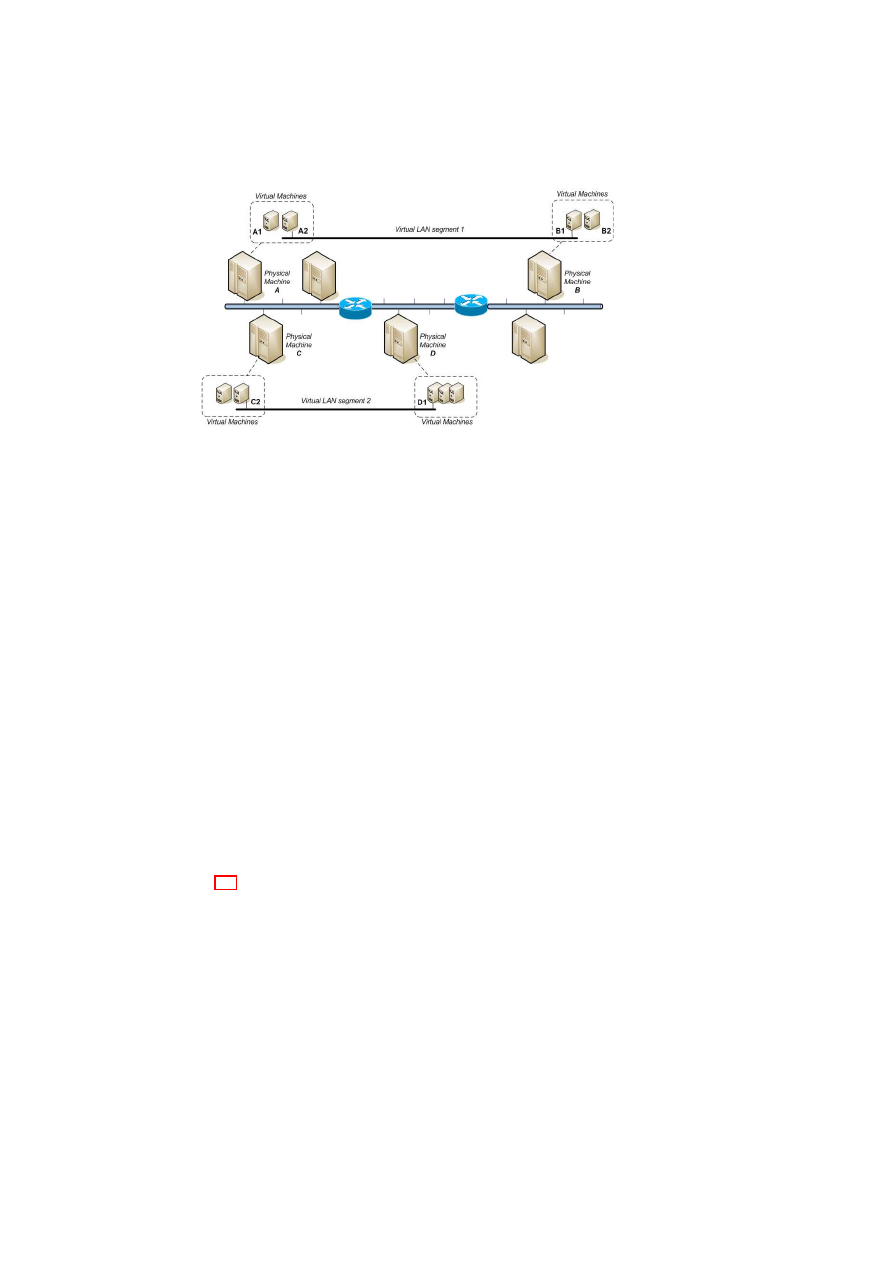

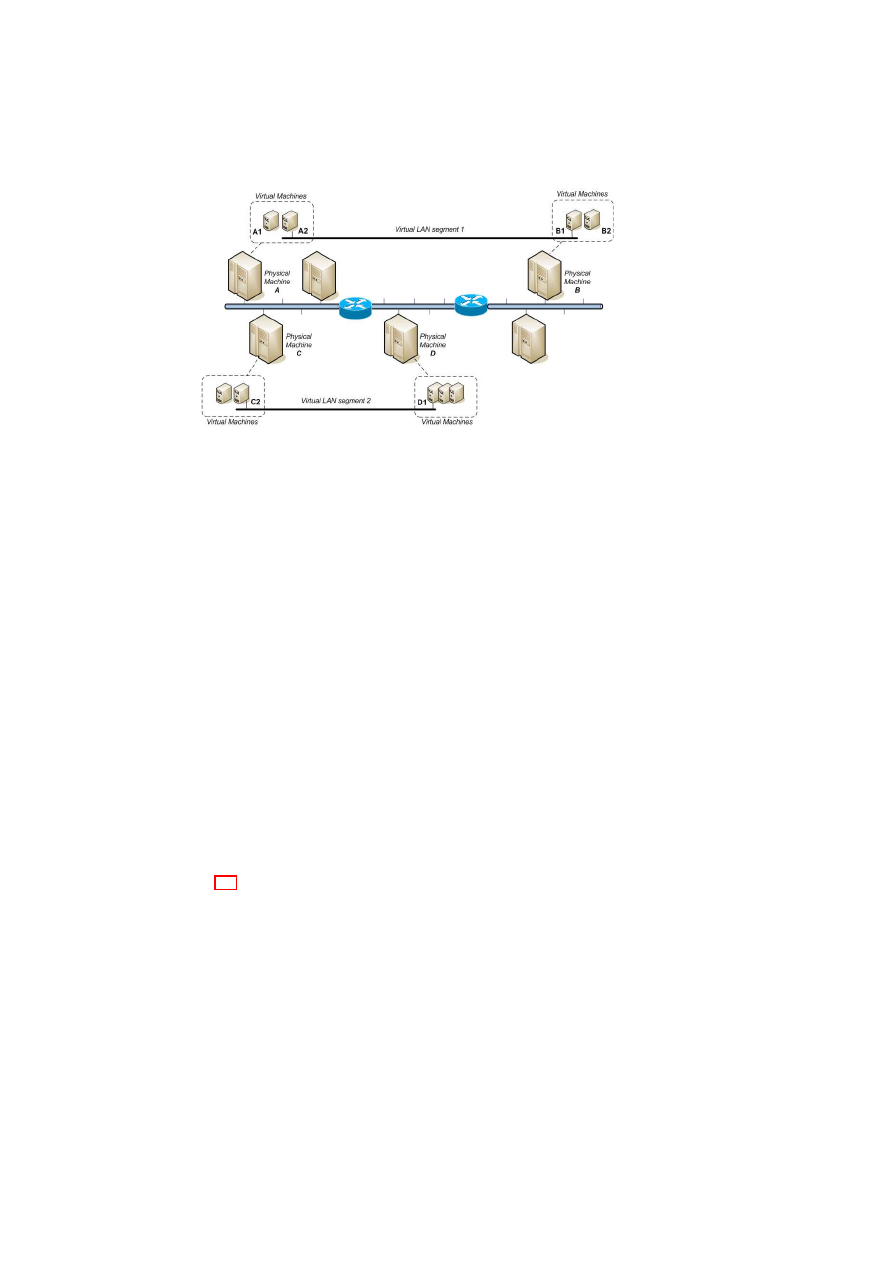

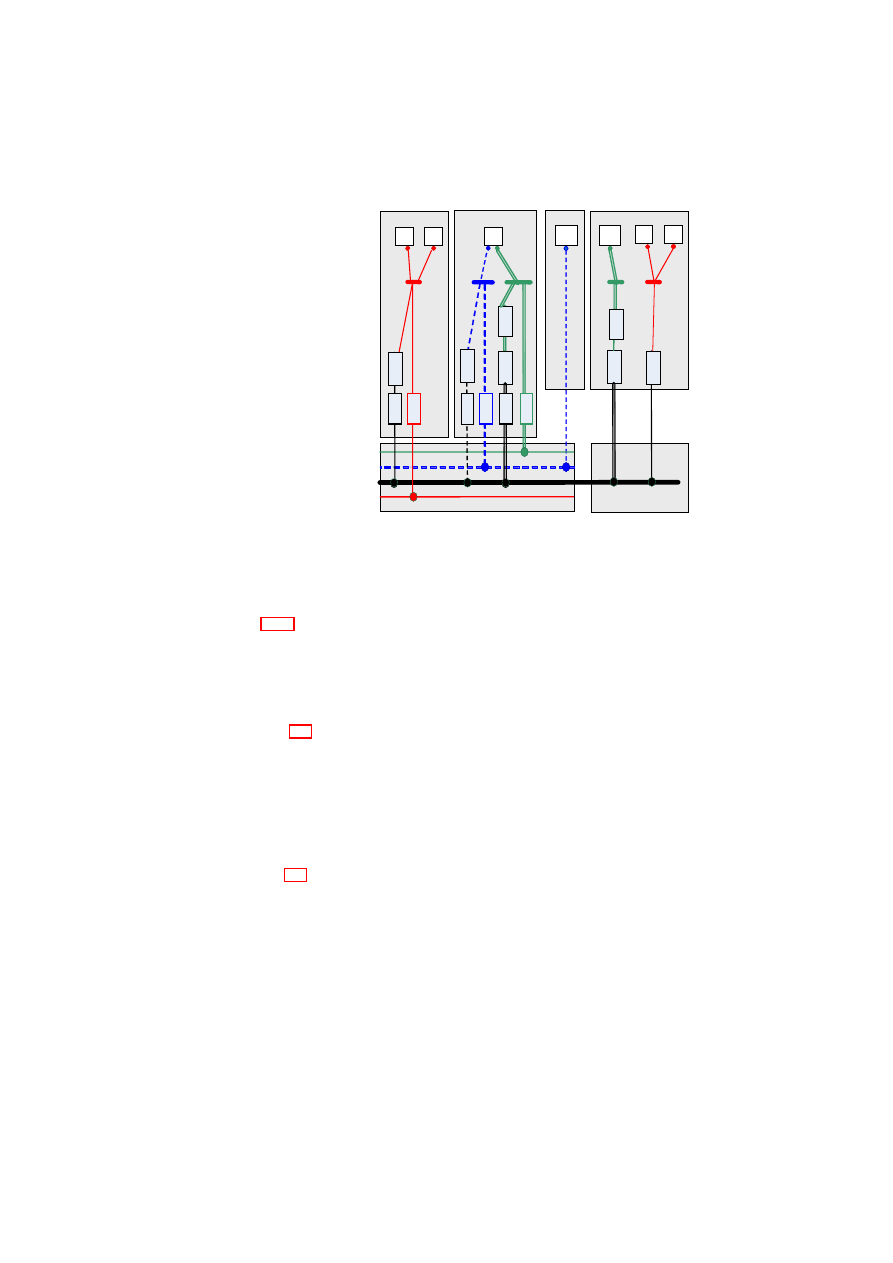

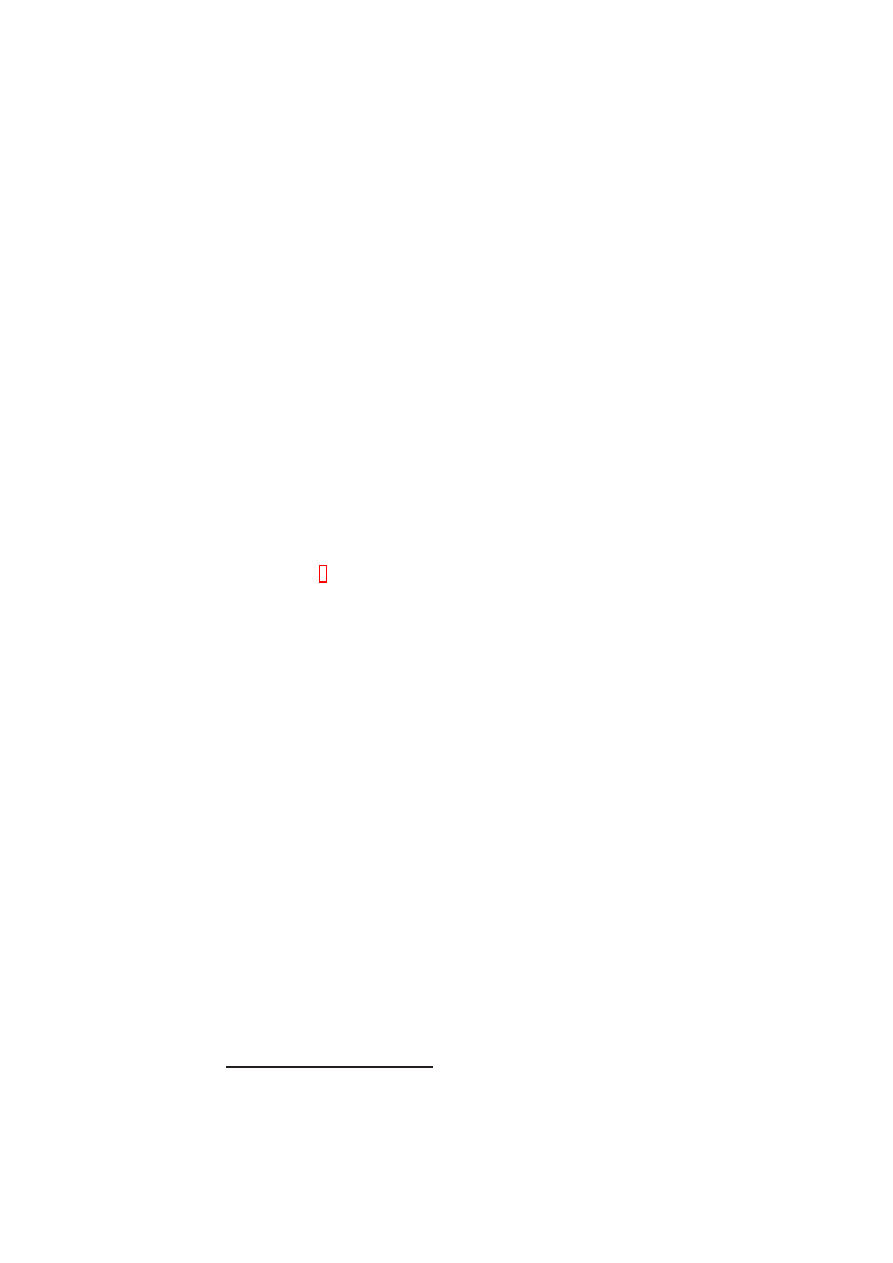

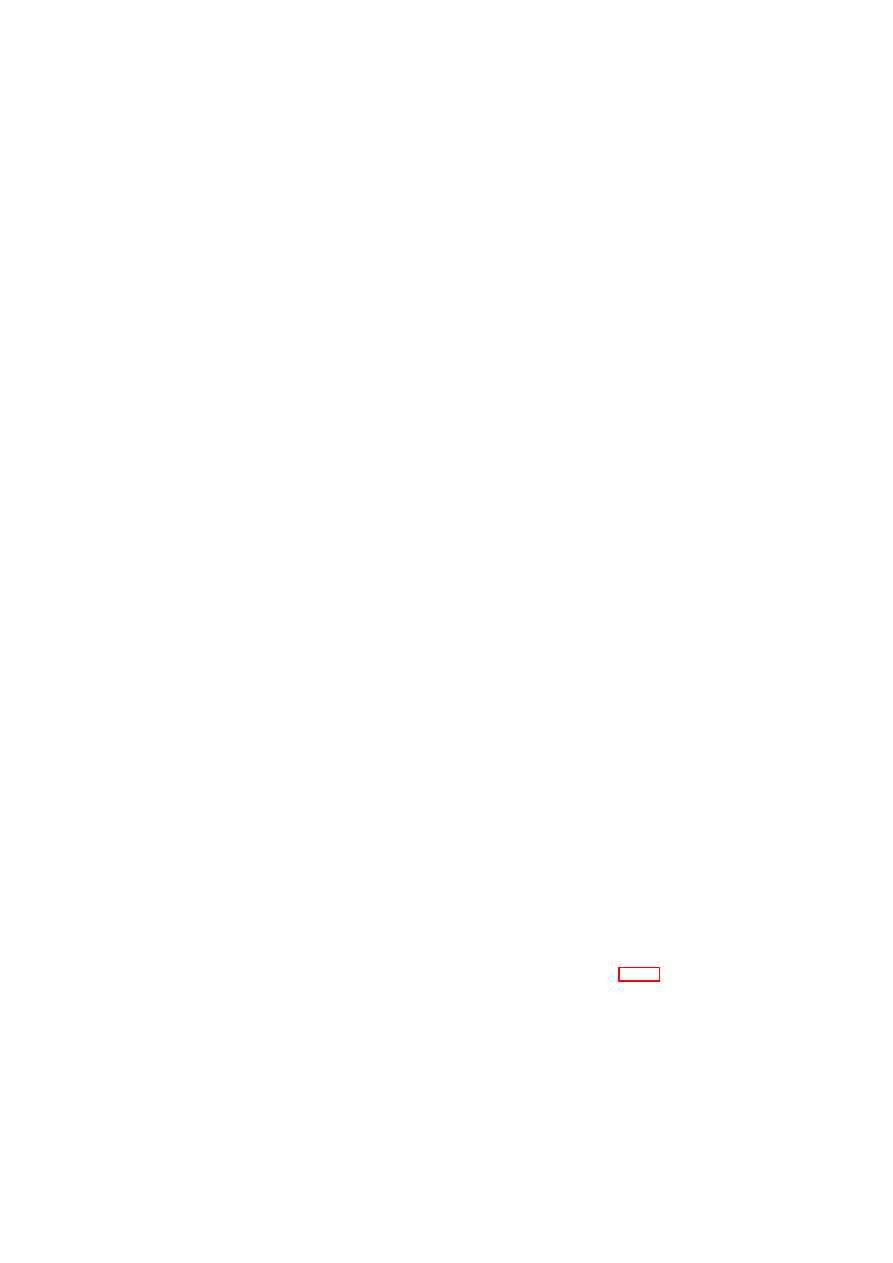

Figure 3.2 illustrates an example architecture in which physical machines host mul-

tiple VMs with different TVD memberships (the light and dark shades indicate dif-

ferent TVDs). Hosts A, B, and D are virtualized machines, whereas Host C is non-

virtualized. Furthermore, Hosts A, B, and C reside on the same LAN, and thus can

communicate directly using the trusted physical infrastructure without further protec-

tion (e.g., traffic encryption). For example, the light VMs hosted on Hosts A and B are

inter-connected using the local VLAN-enabled physical switch. In this case, the physi-

cal switch separates the TVD traffic from other traffic passing through the switch using

VLAN tags. Similarly, the dark VMs hosted on Host A and the non-virtualized Host

C are seamlessly inter-connected using the local switch. In contrast, connections that

require IP connectivity are routed over the WAN link. The WAN cloud in Figure 3.2

represents the physical network infrastructure able to deal with TVD-enabled virtual

networks; it can include LANs with devices capable of VLAN tagging and gateways

to connect the LANs to each other over (possibly insecure) WAN links. For connec-

tions that traverse untrusted medium, we employ EtherIP encapsulation to denote TVD

membership and additional security measures (such as encryption) to ensure compli-

ance with the confidentiality and integrity requirements.

3.2.2

Virtual Private Networking

In Figure 3.2, VMs hosted on Host D are connected to the other machines over a

WAN link. A practical setting in which such a connection might exist would be an

outsourced remote resource connected to the local data center through the Internet. As

an example, lightly shaded VMs on Host D connect to the lone VM on Host B over

this untrusted link. In this setting, we use a combination of EtherIP encapsulation and

VPN technology to ensure the confidentiality and integrity of the communication. To

do so, we use point-to-point VPN tunnels with OpenVPN that are configured via the

TVD Proxy from the TVD policies. This enables reconfiguration of the topology and

the involved VPNs within a TVD from a single administration point, the TVD Master.

TVD policies distributed from the TVD Master to the TVD Proxy also include the

secret key for the VPN along with other VPN-specific settings. On a physical host, the

VPN’s endpoint is represented as a local virtual network interface (vif) that is plugged

into the appropriate vSwitch controlled by the TVD Proxy. The vSwitch then decides

whether to tunnel the communication between VMs, and if so, uses the VPN module

to establish the tunnel and access the VPN secret for traffic encryption and decryption.

3.3

Virtual Storage Infrastructure

We focus on a simplified security management of virtualized storage. Broadly speak-

ing, storage virtualization abstracts away the physical storage resource(s). It is desir-

able to allow a storage resource to be shared by multiple host computers, and also to

provide a single storage device abstraction to a computer irrespective of the underly-

ing physical storage, which may be a single hard disk, a set of hard disks, a Storage

Area Network (SAN), etc. To satisfy both requirements, storage virtualization is typ-

ically done at two levels. The first level of virtualization involves aggregating all the

(potentially heterogeneous) physical storage devices into one or more virtual storage

pools. The aggregation allows more centralized and convenient data management. The

second level of virtualization concerns the unified granularity (i.e., blocks or files) at

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

26

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

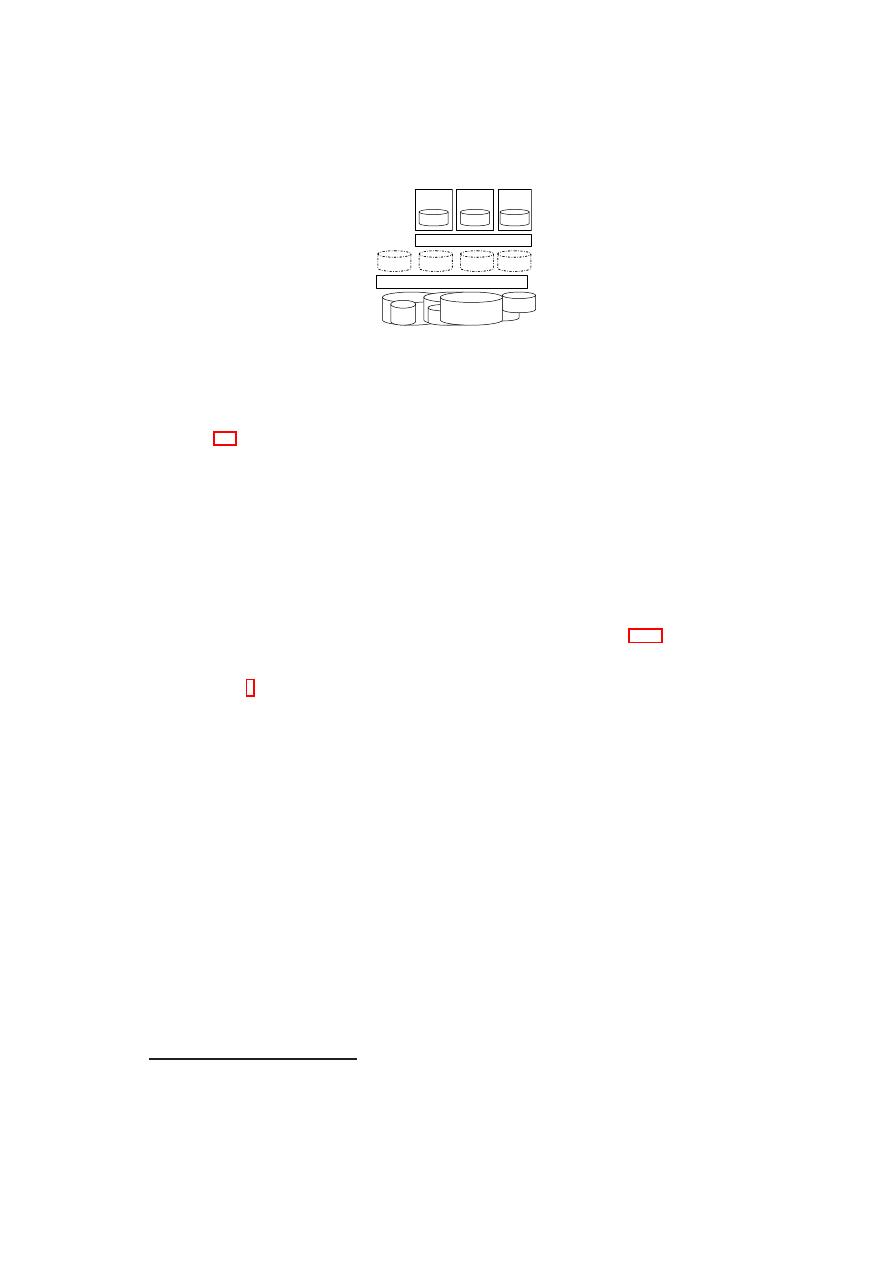

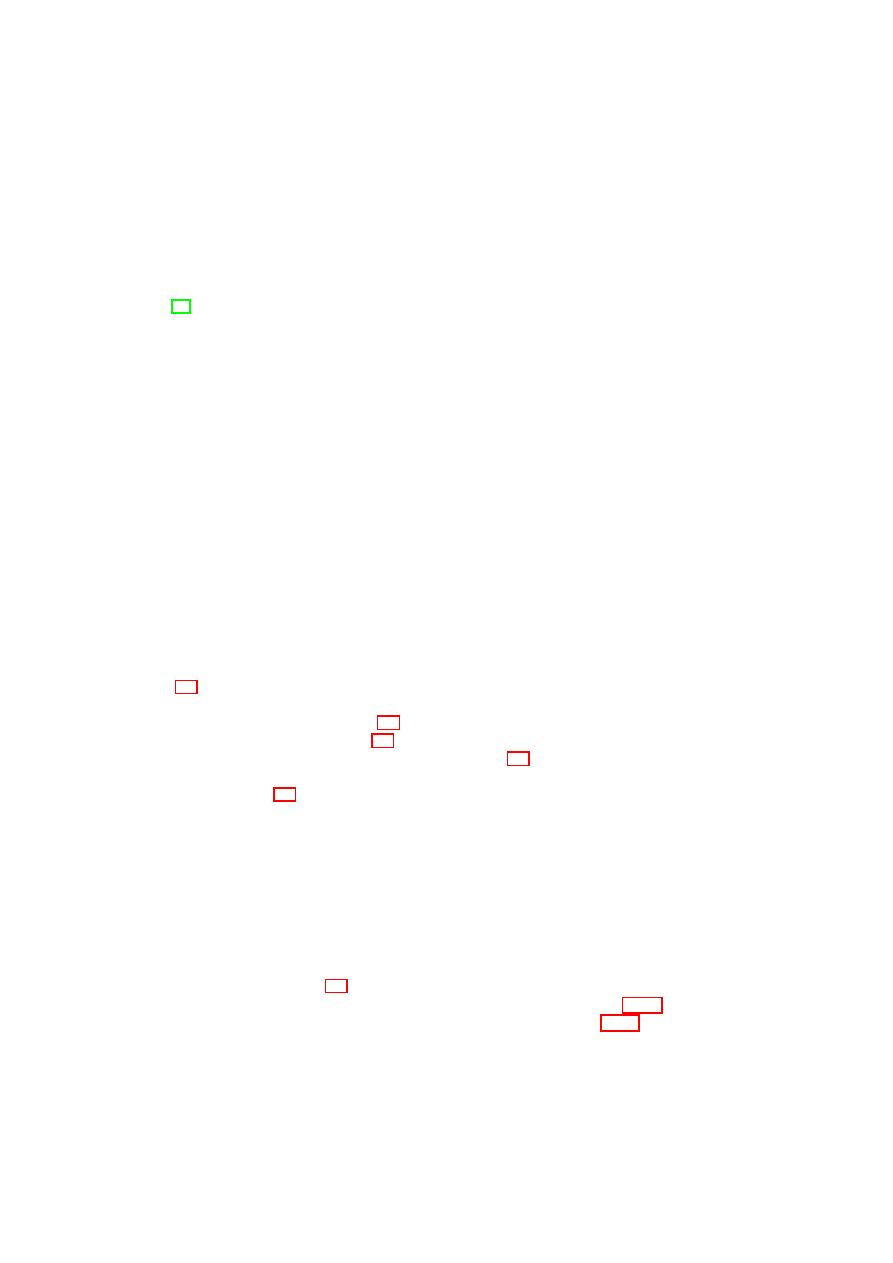

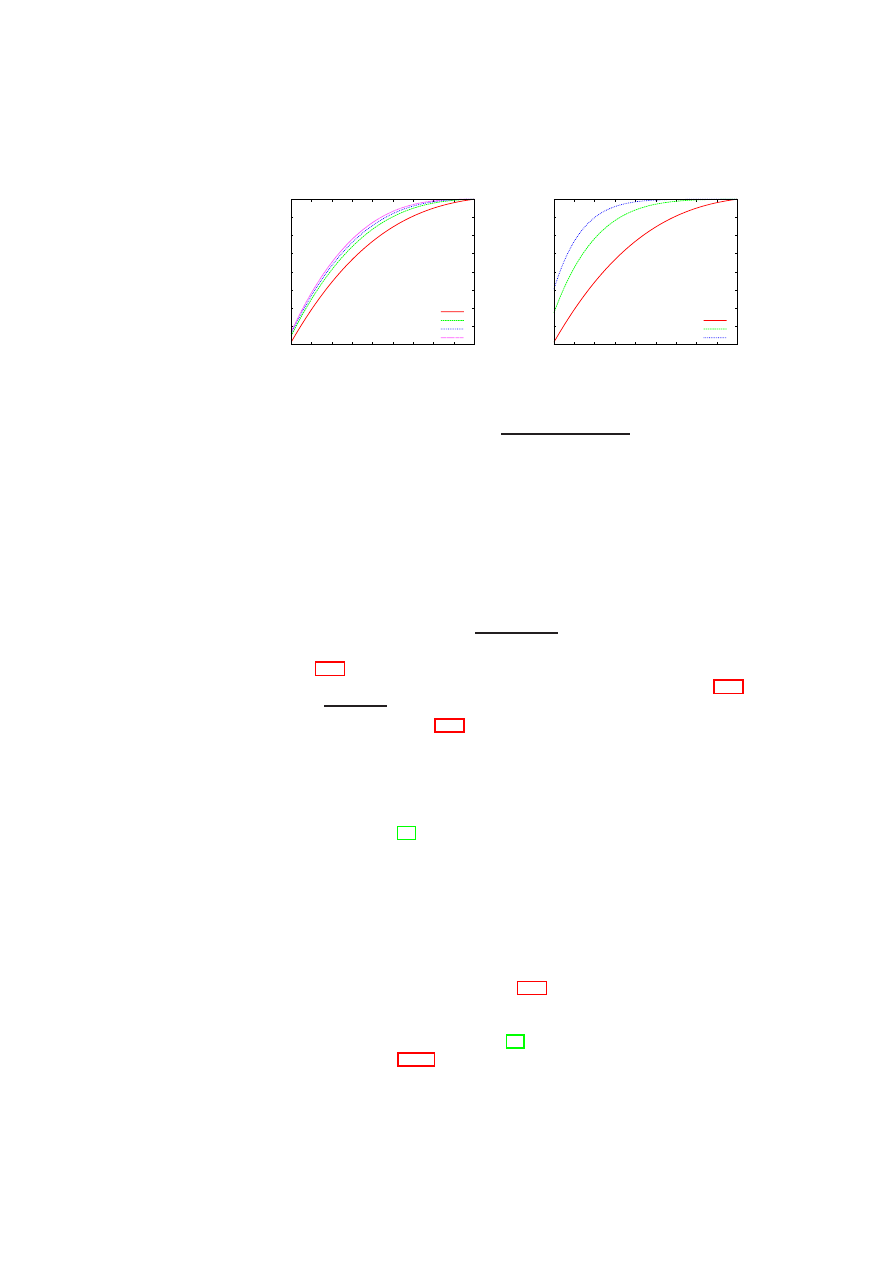

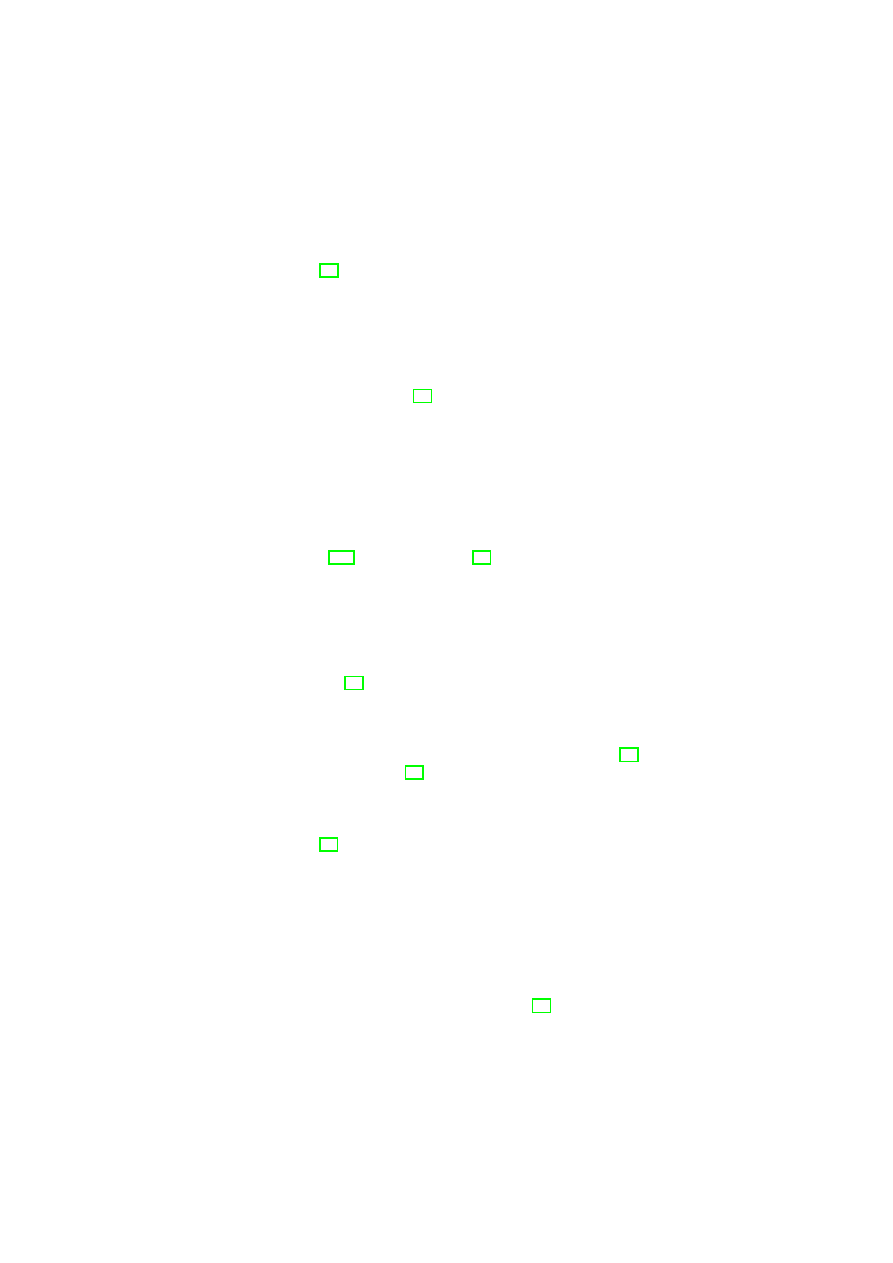

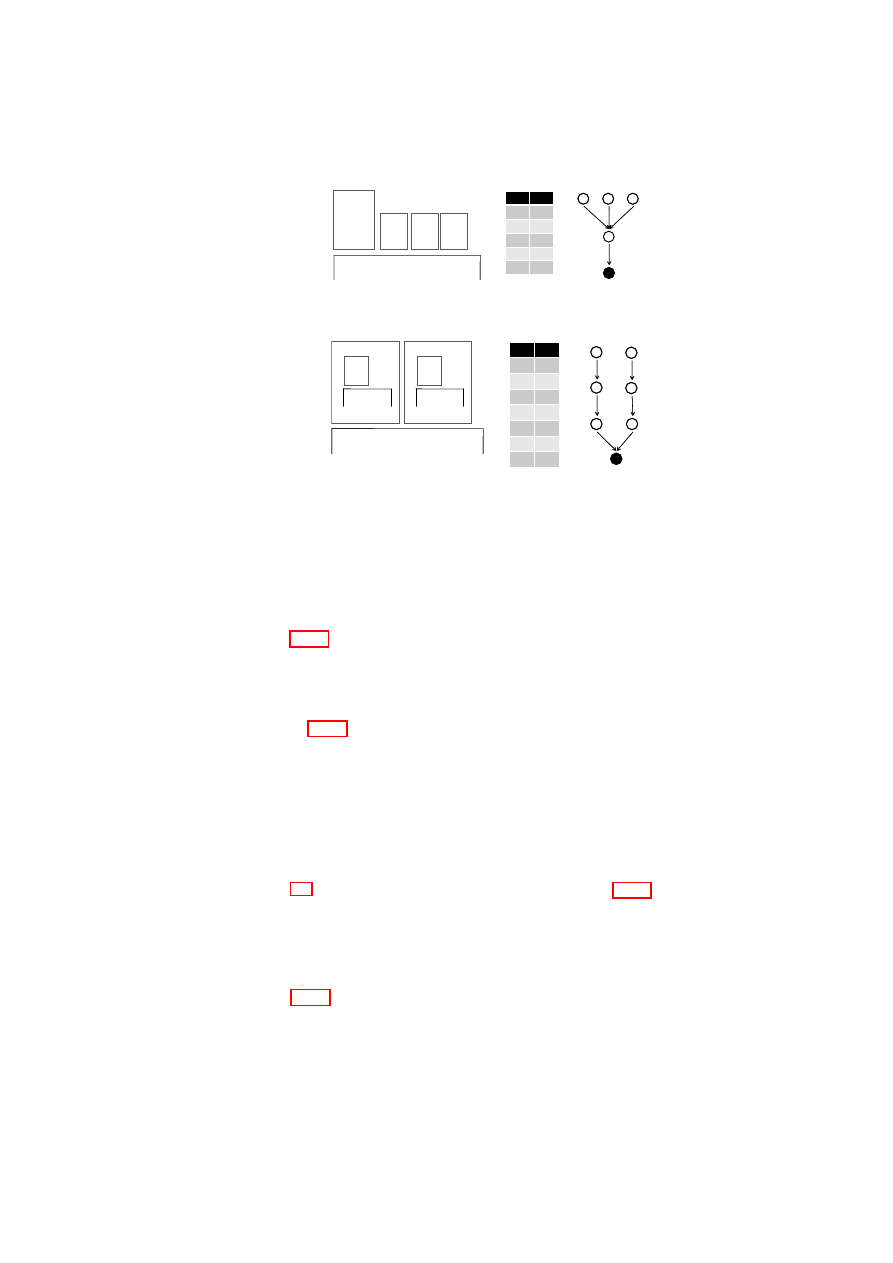

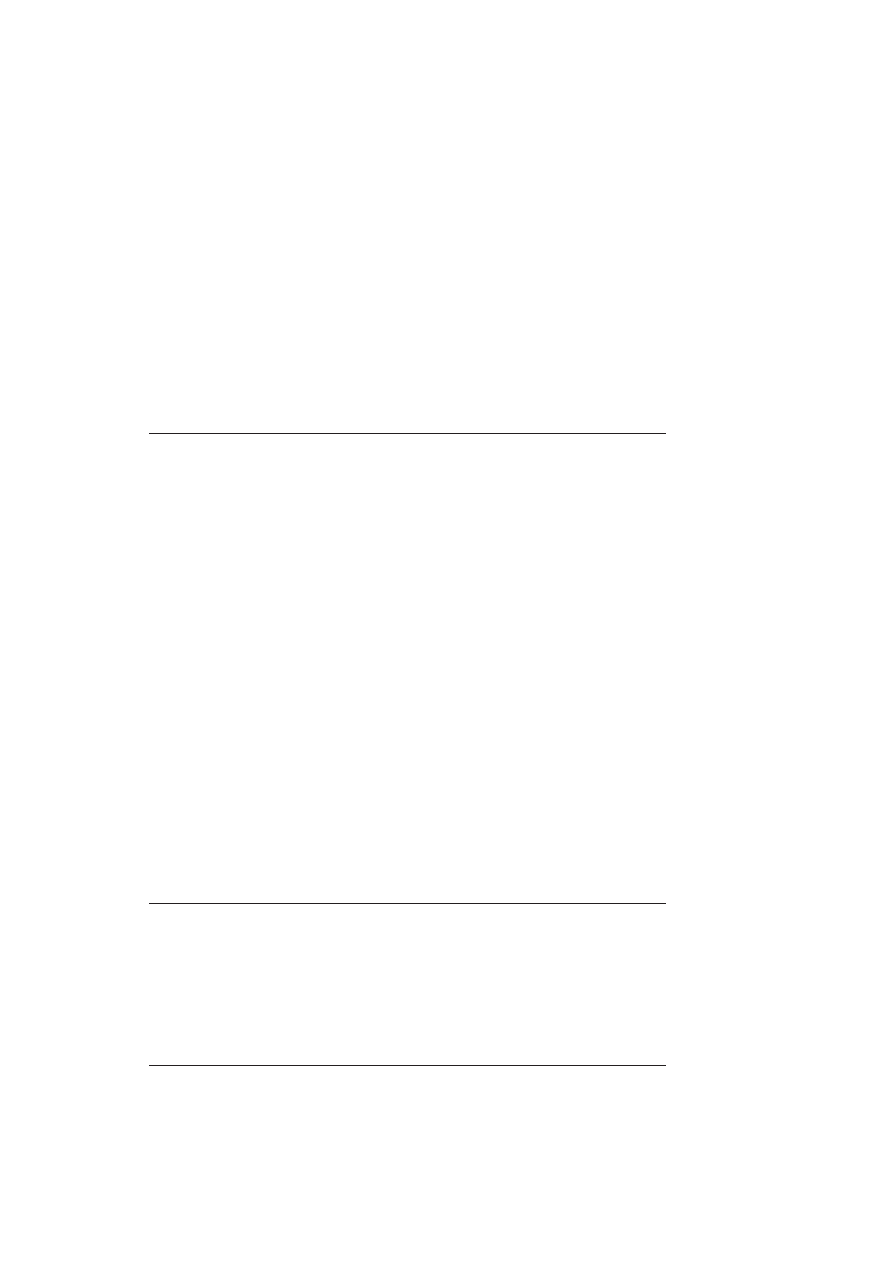

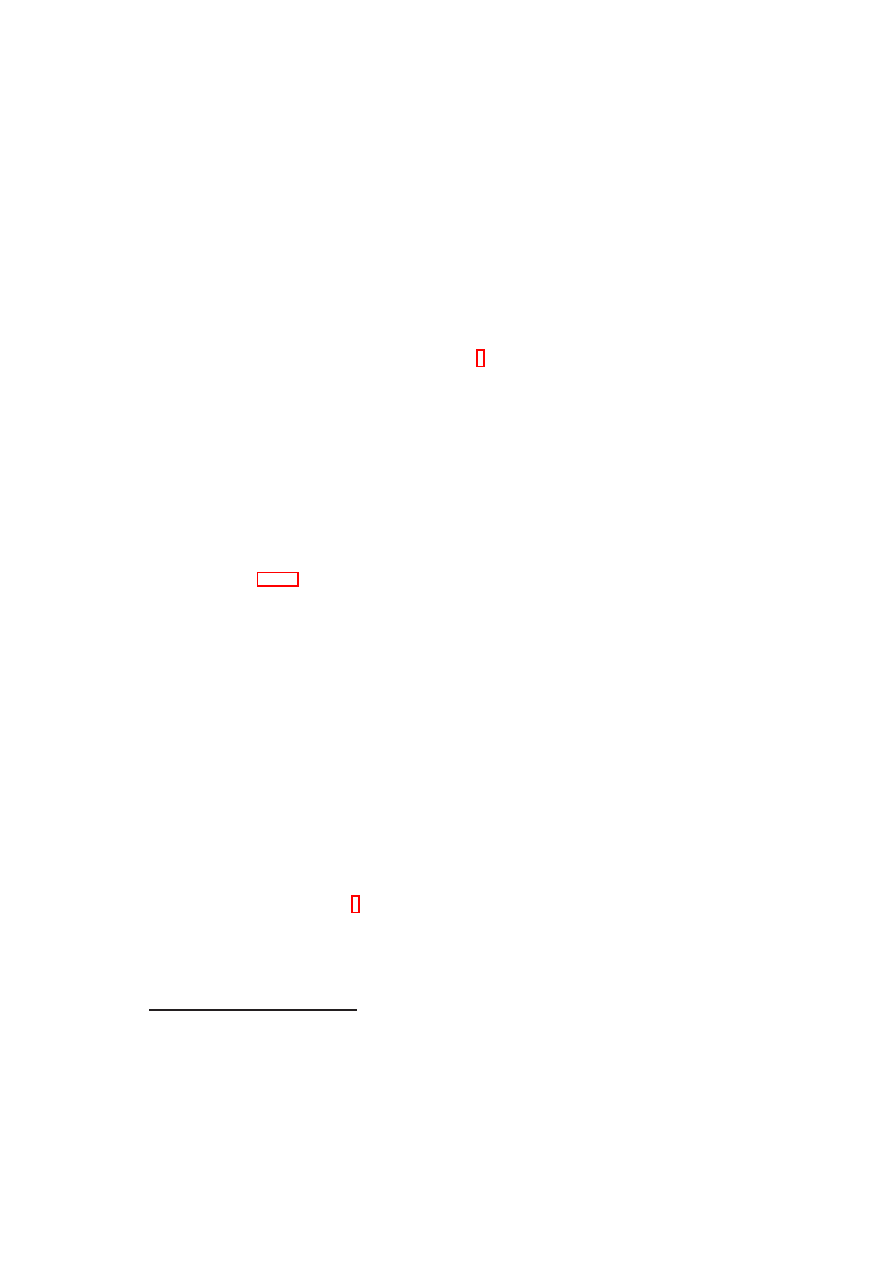

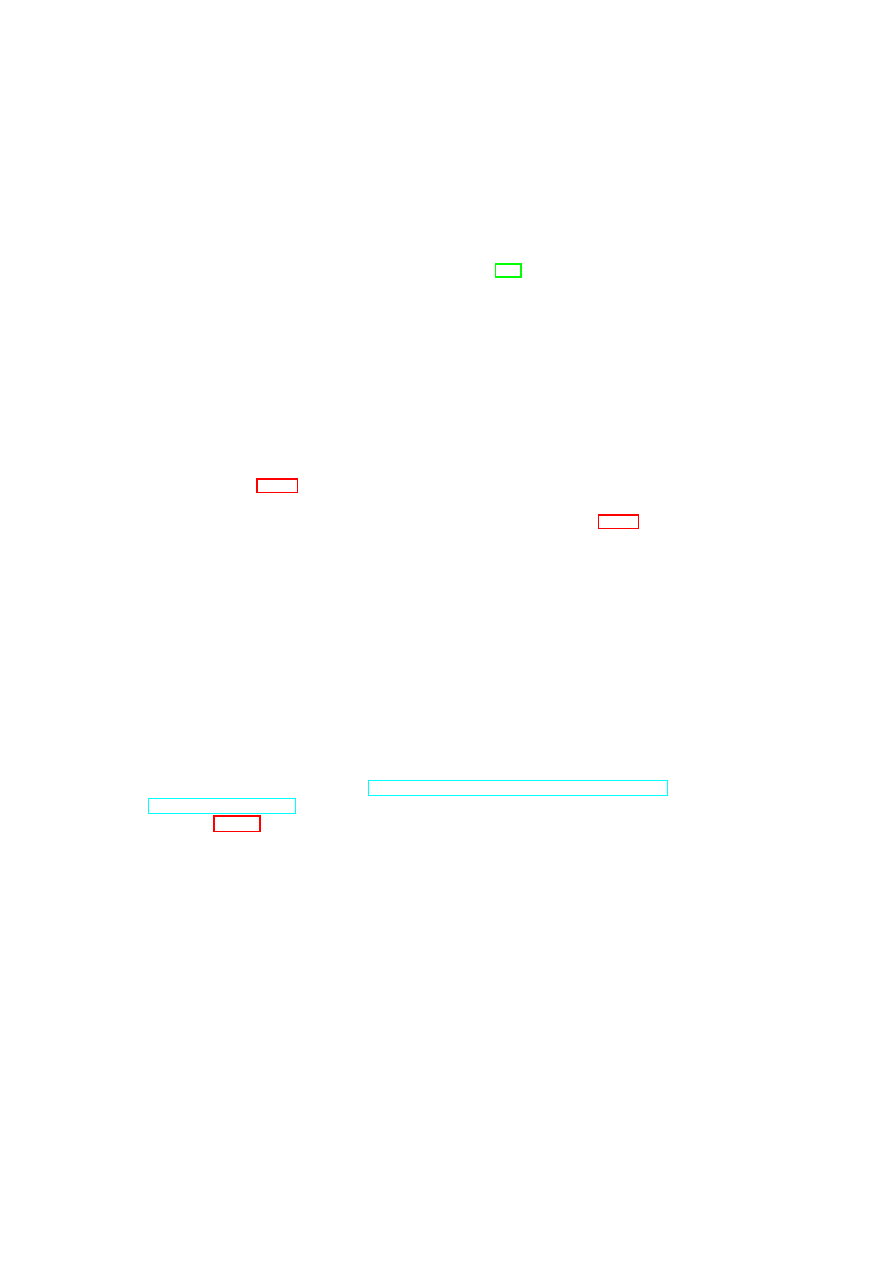

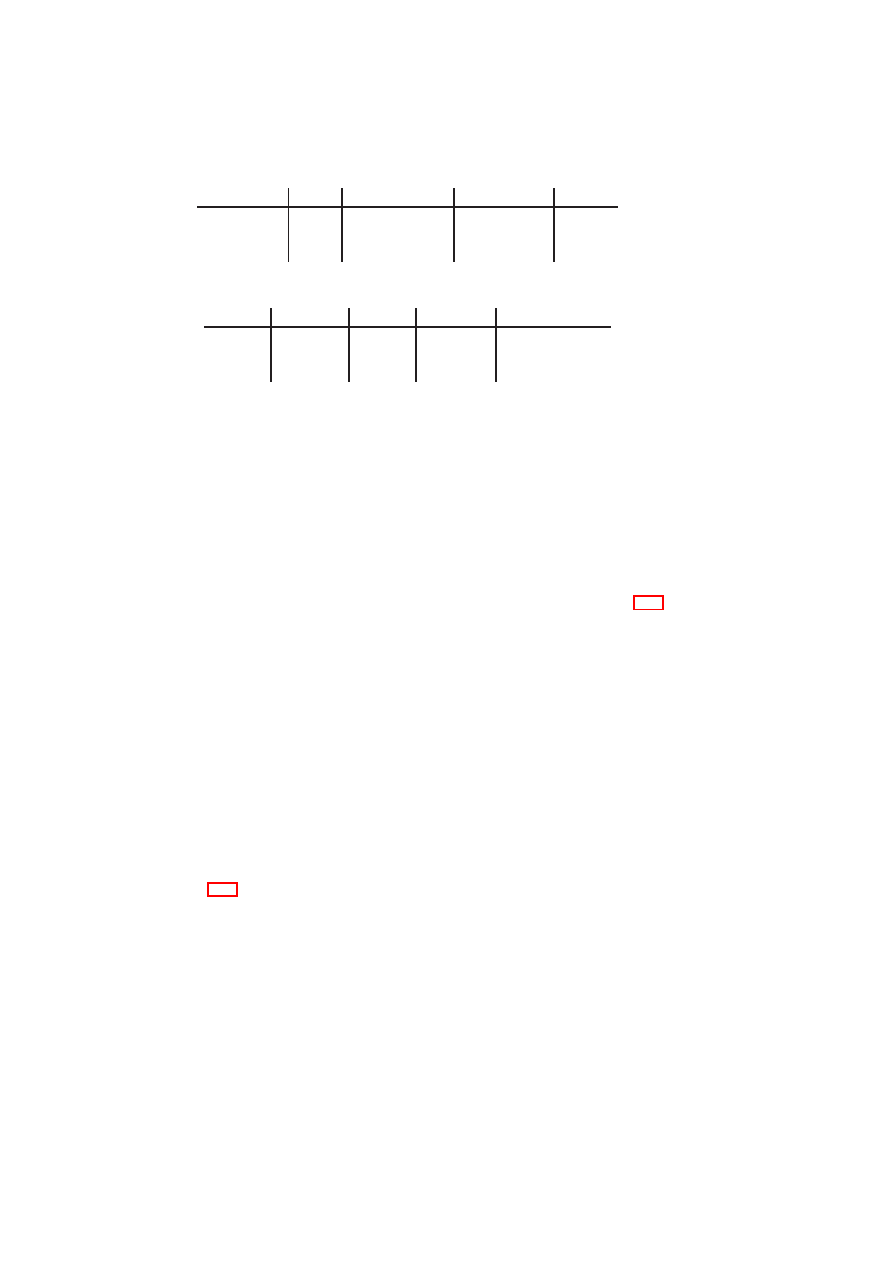

Consolidation

Access Control & Encryption

TVD A

TVD B

TVD C

Heterogeneous

Physical Storage

Per-TVD

Raw Storage

Per-TVD

Virtual Storage

TVD A

TVD B

TVD C

Metadata

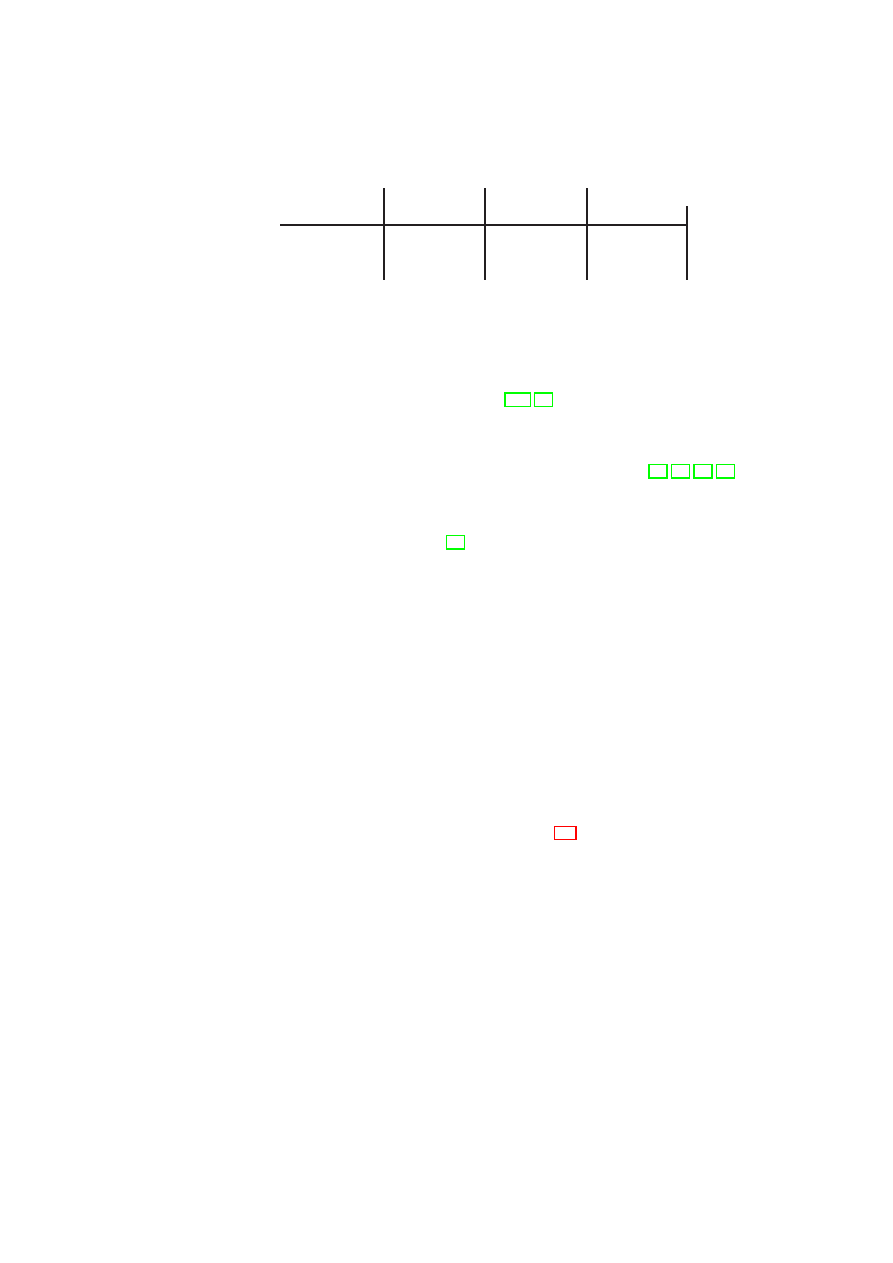

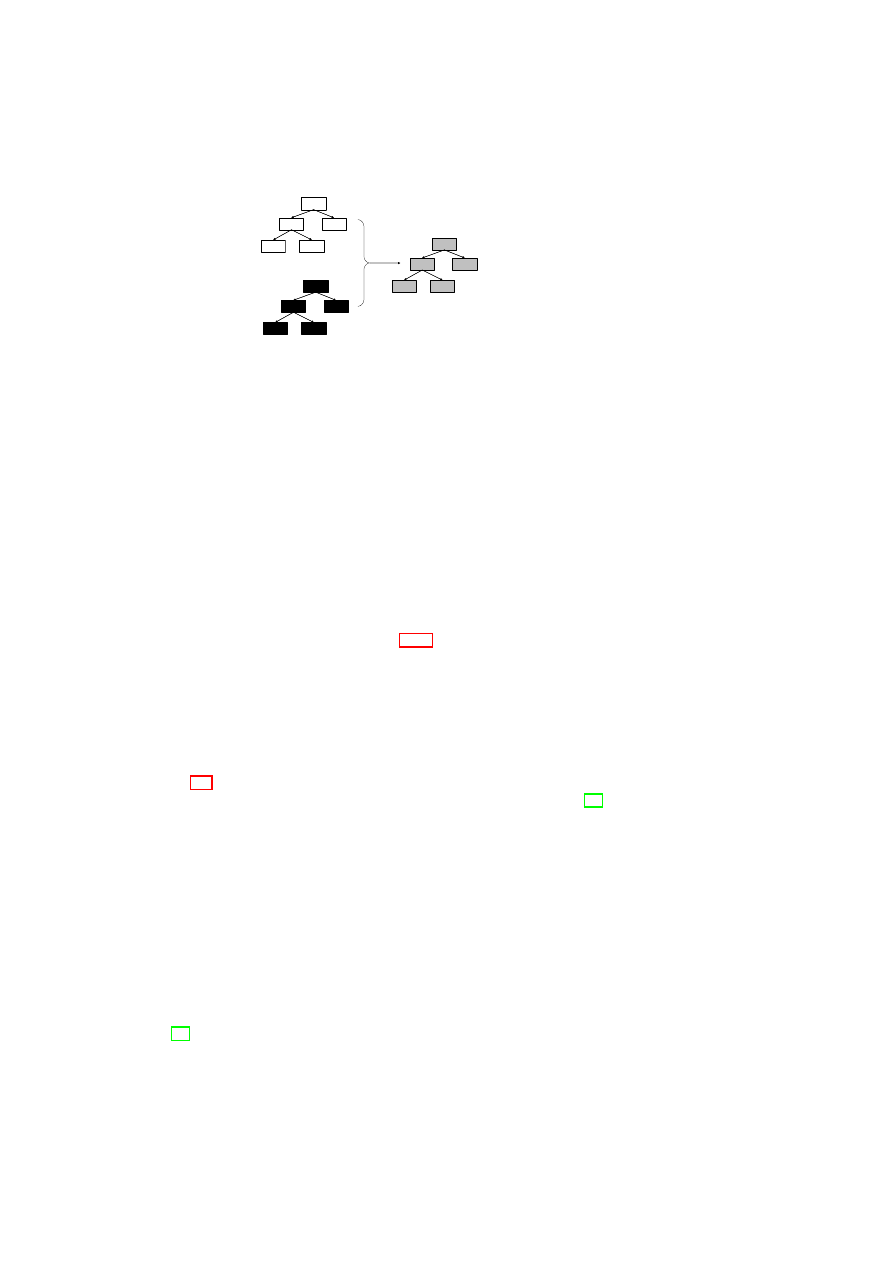

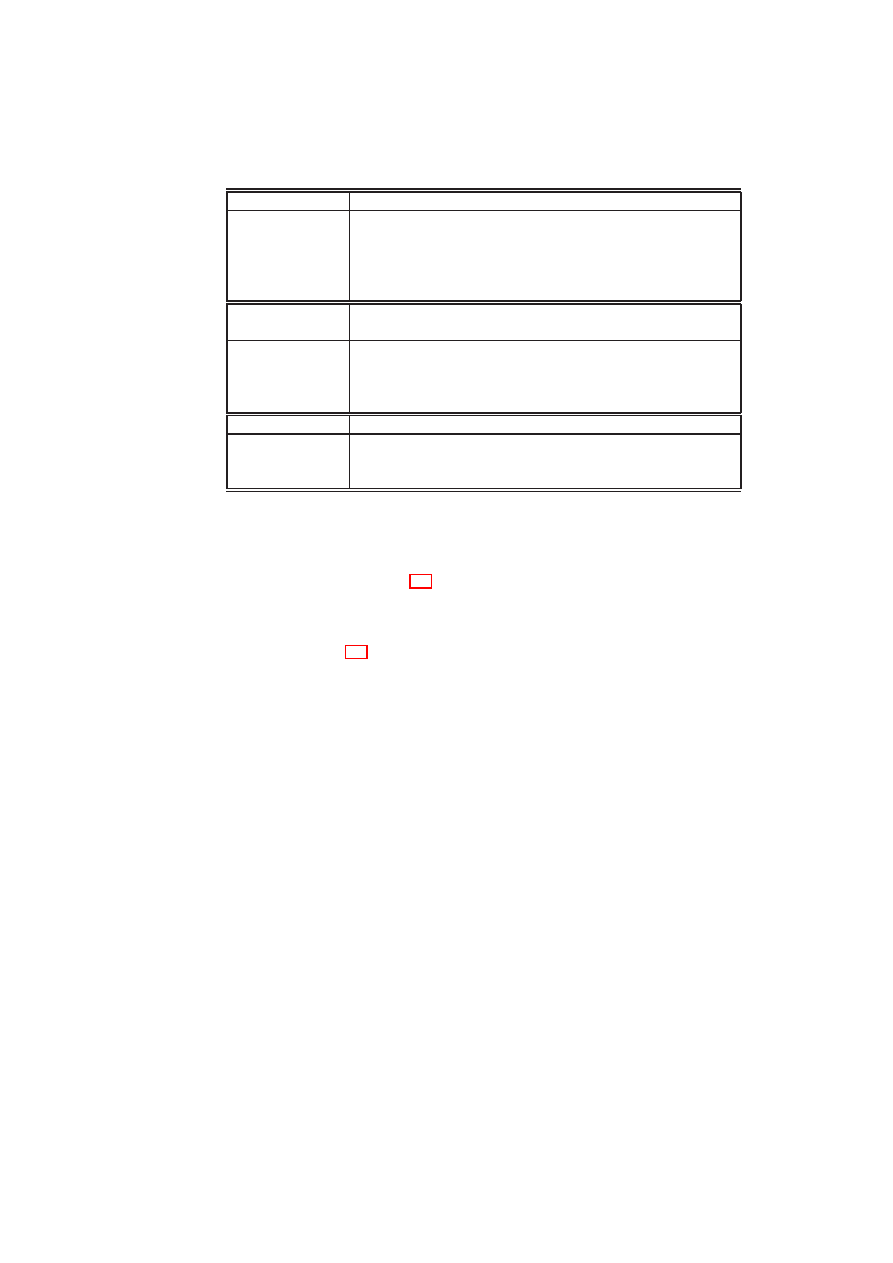

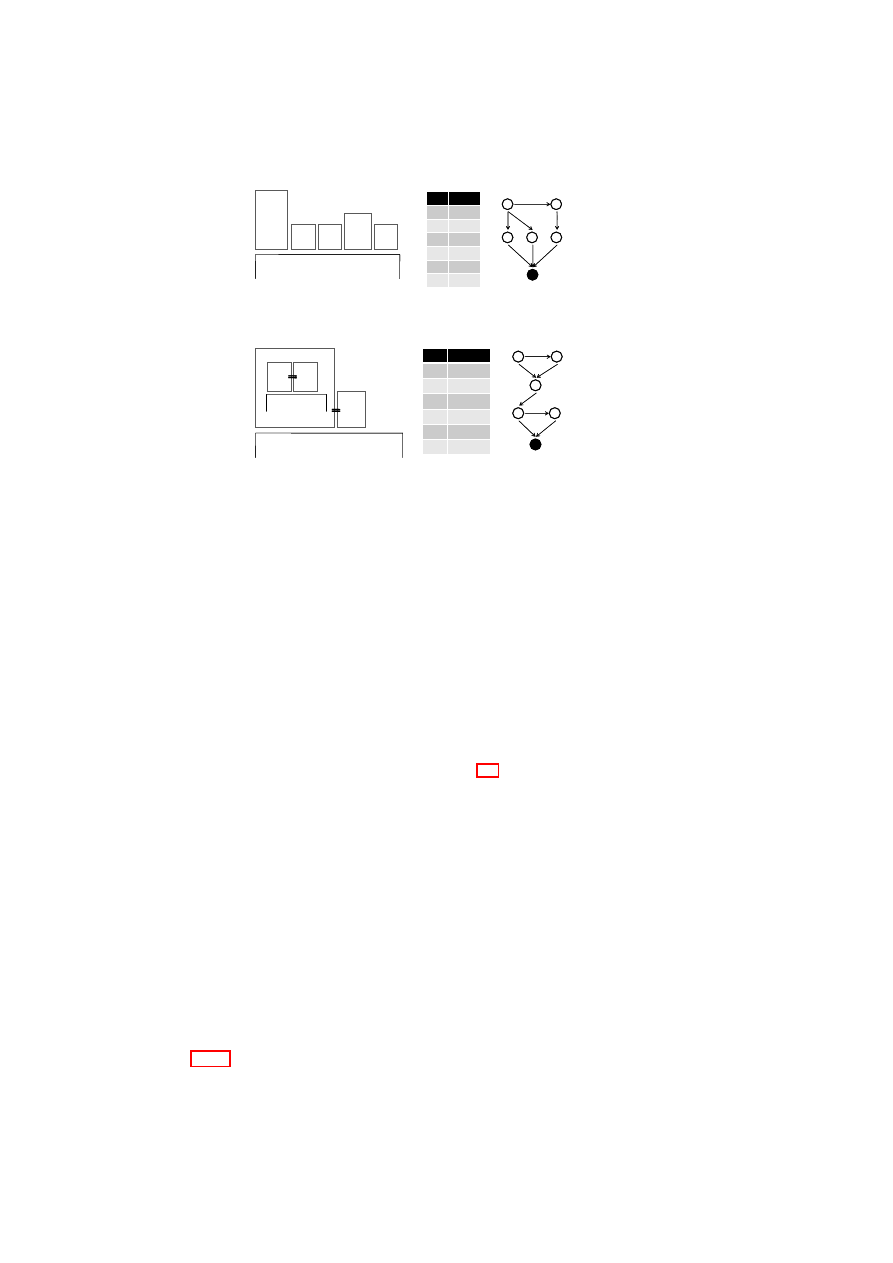

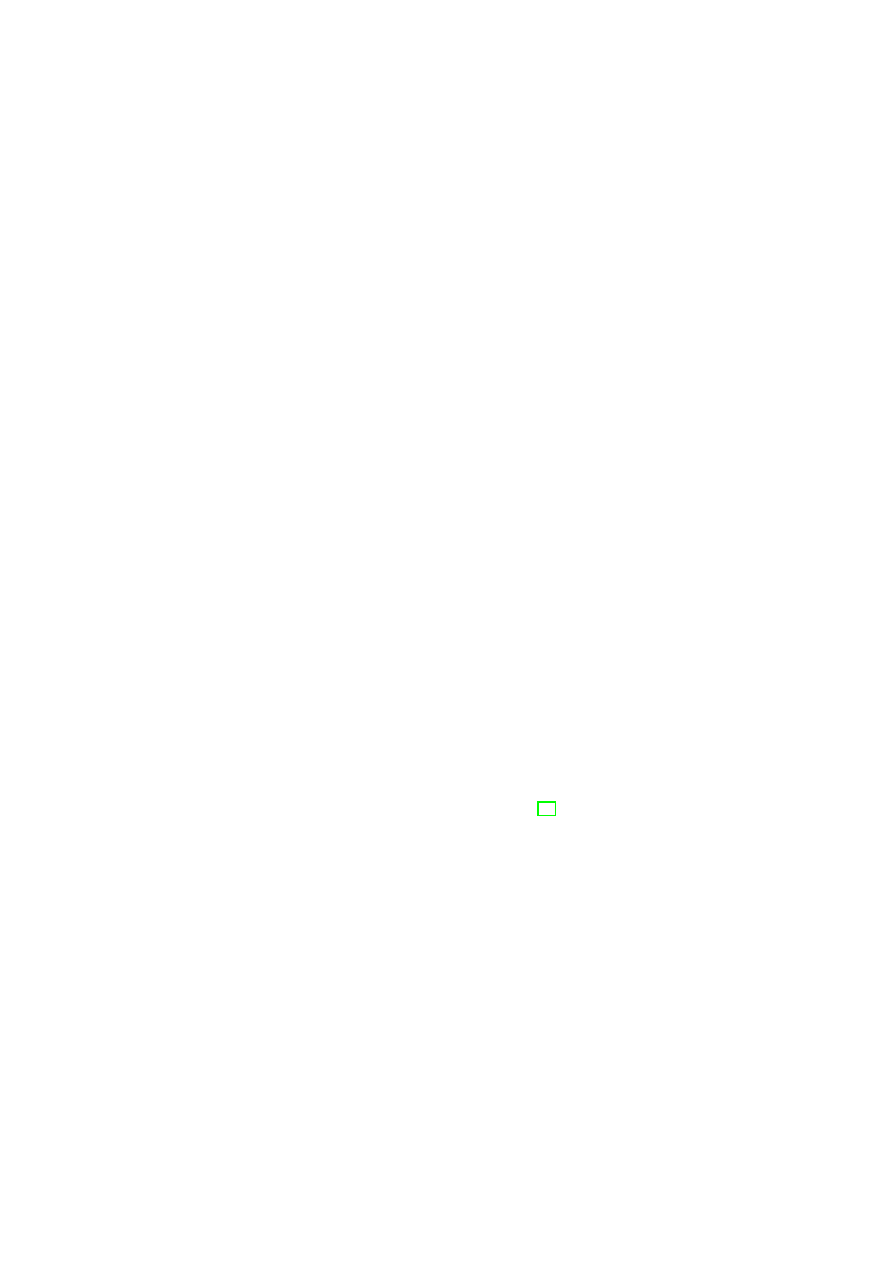

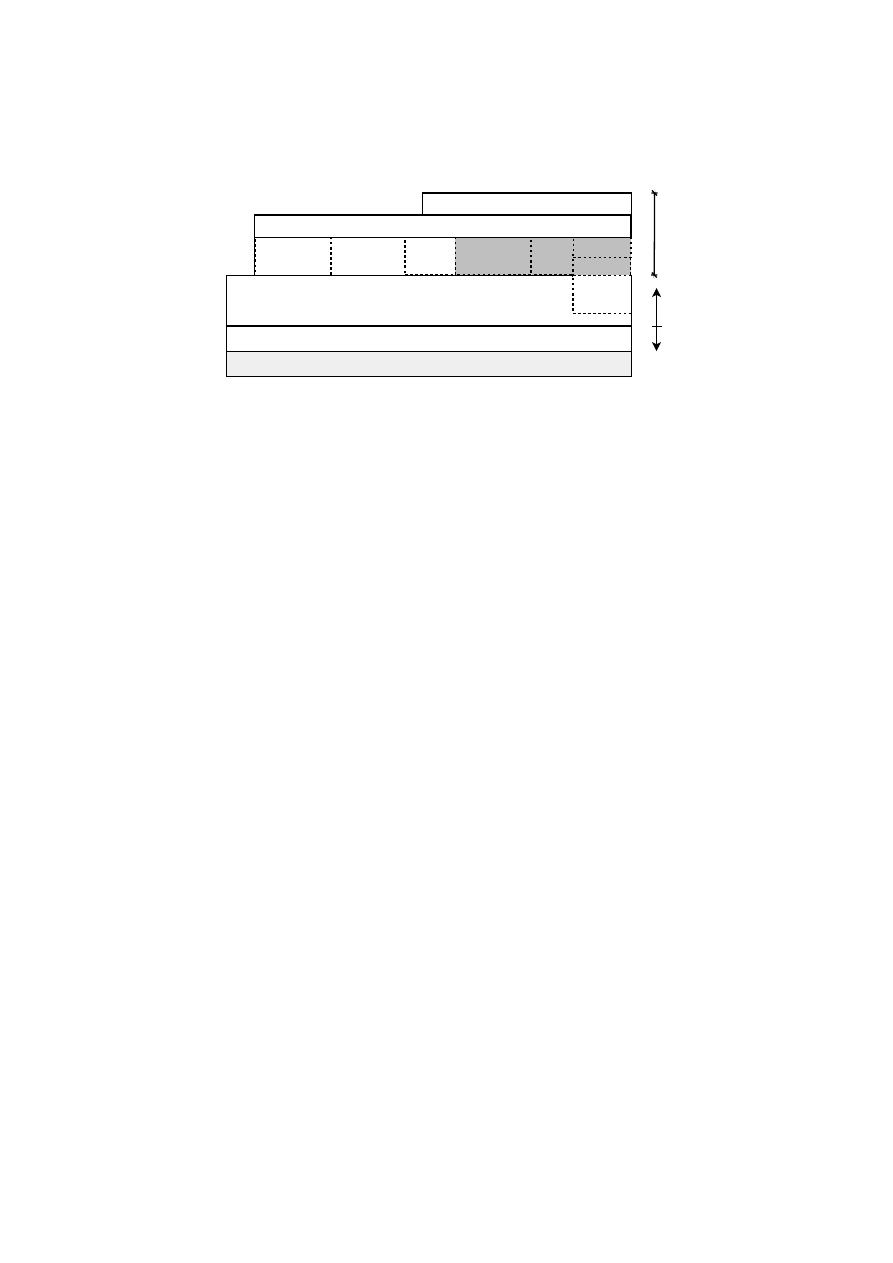

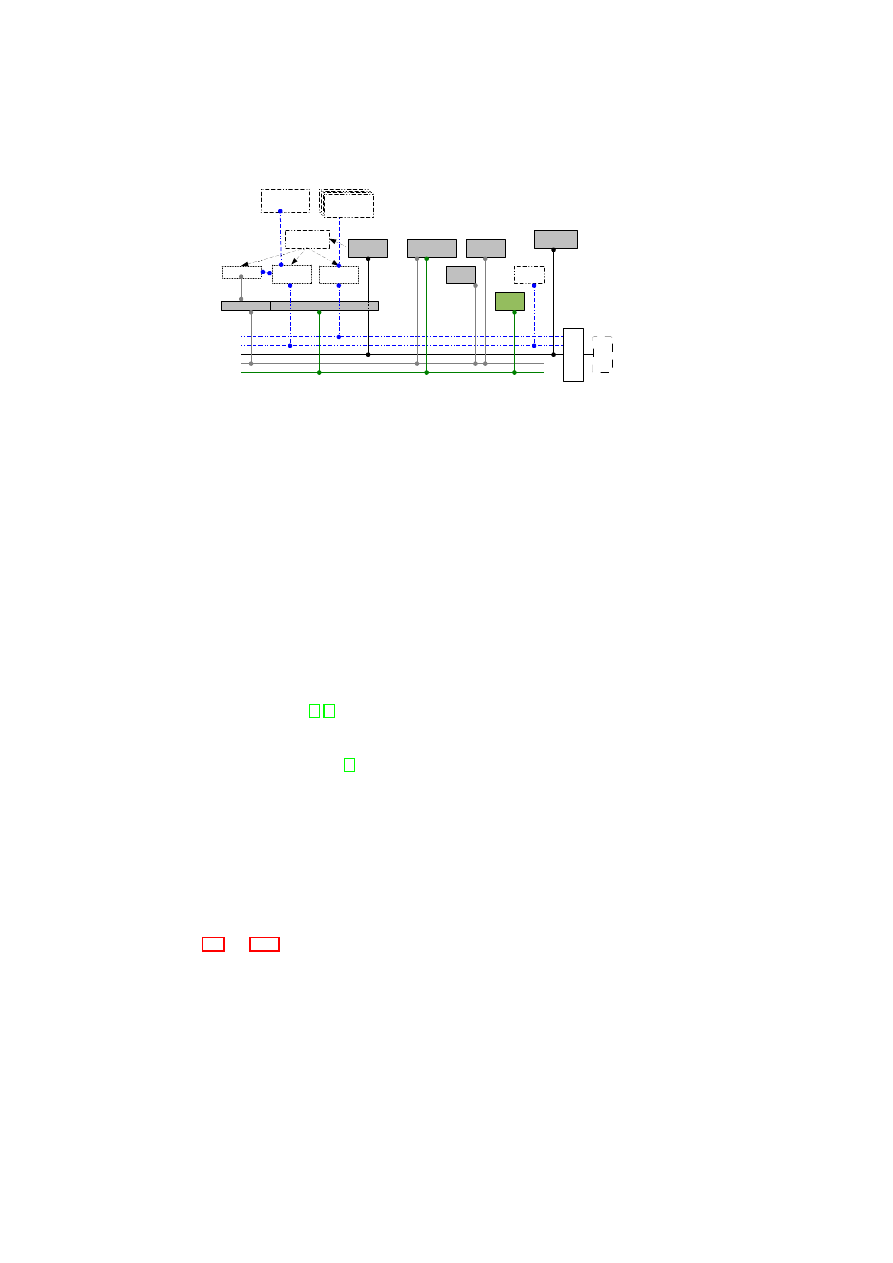

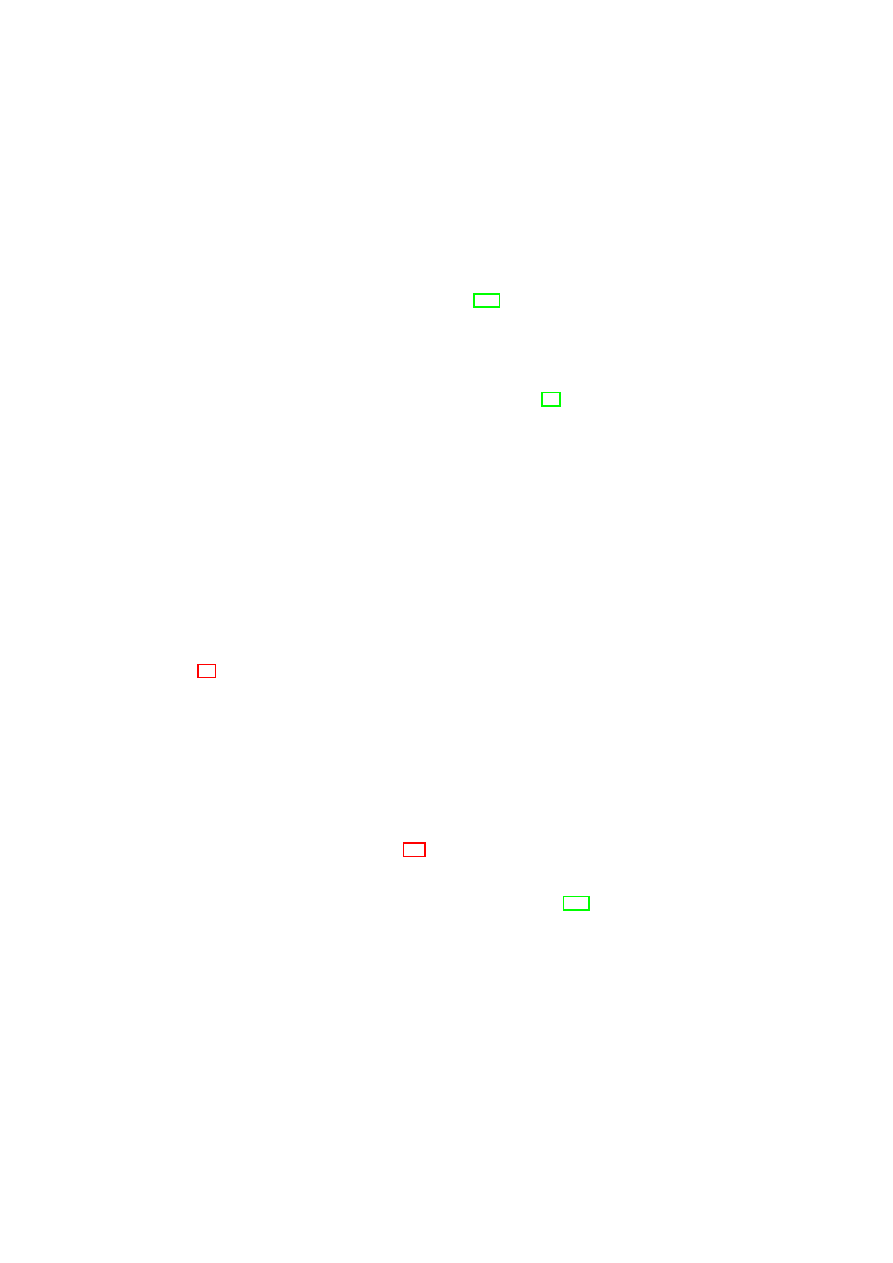

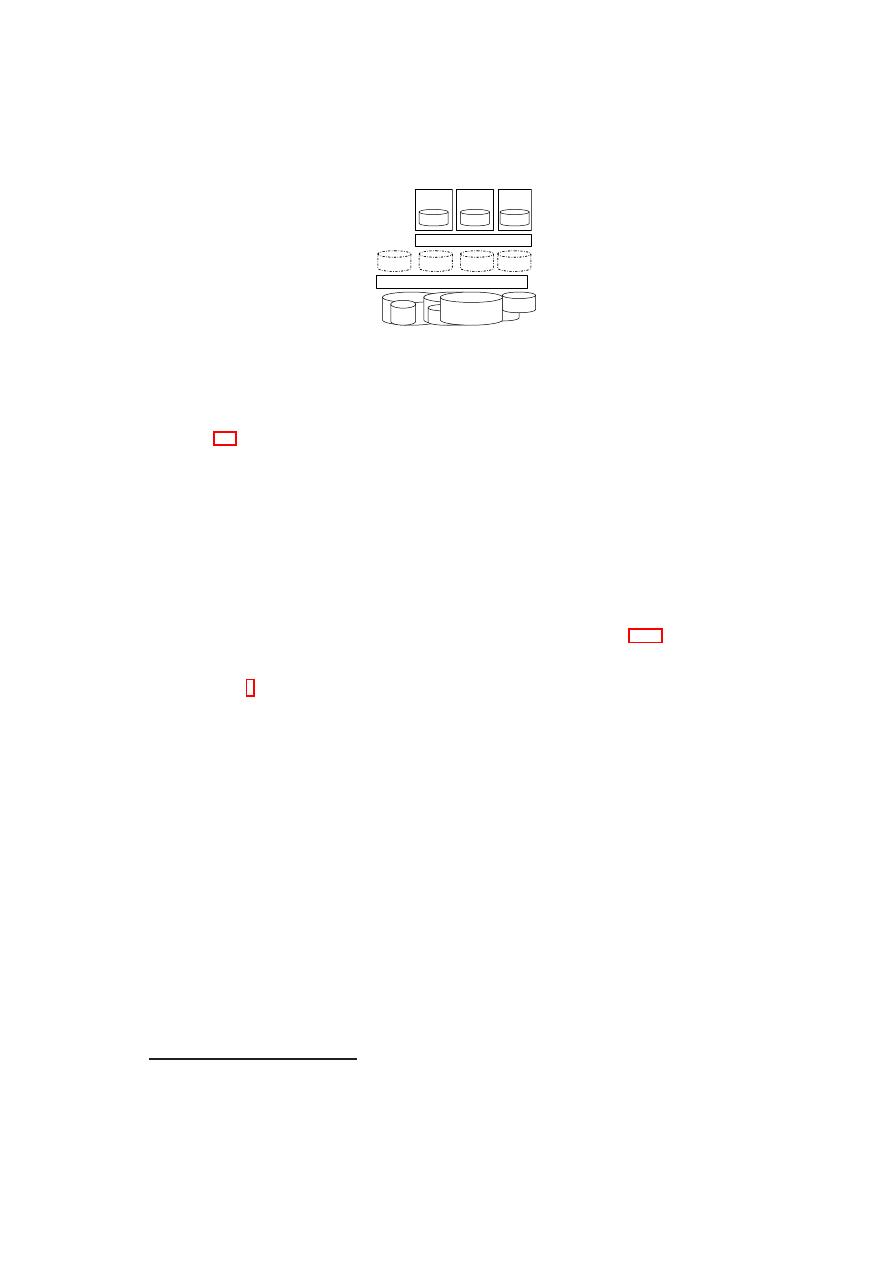

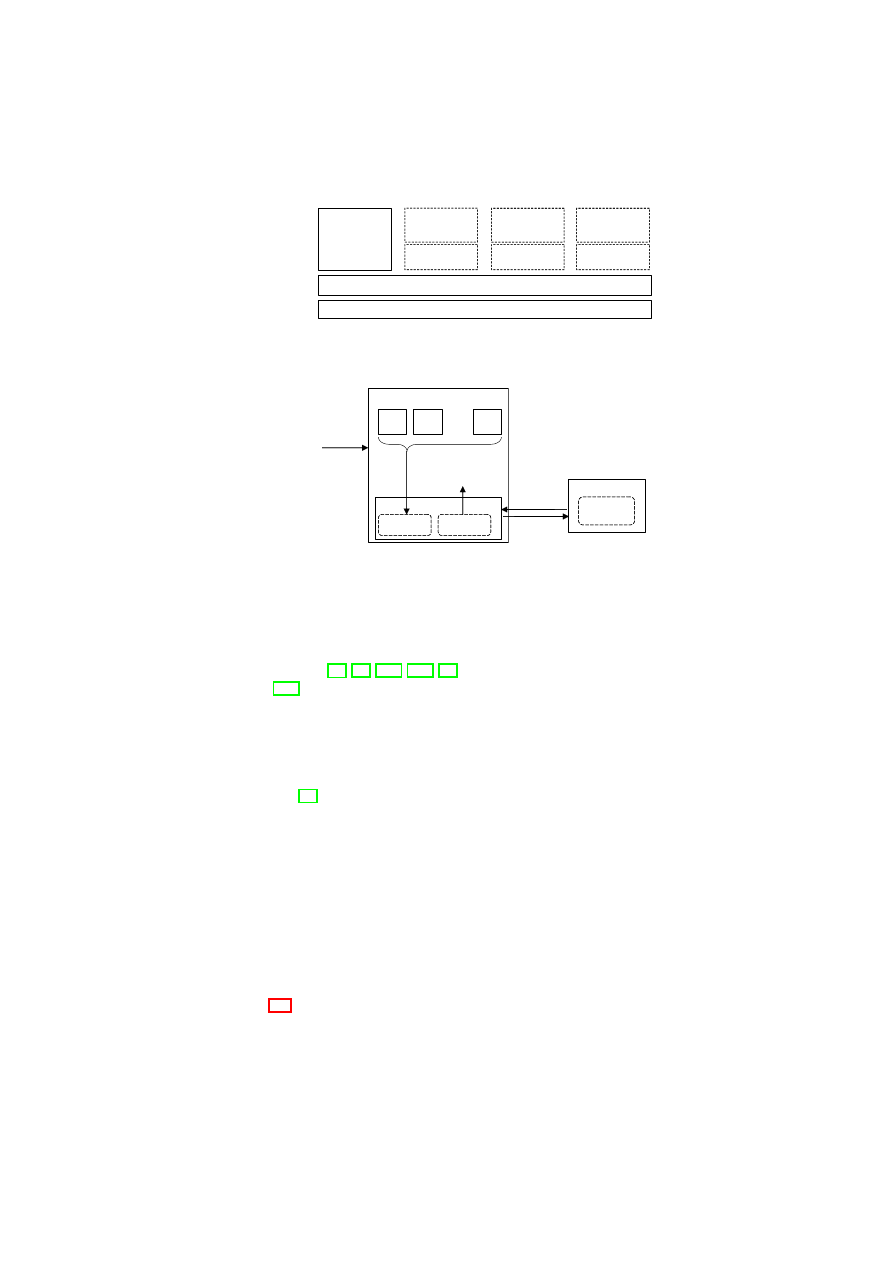

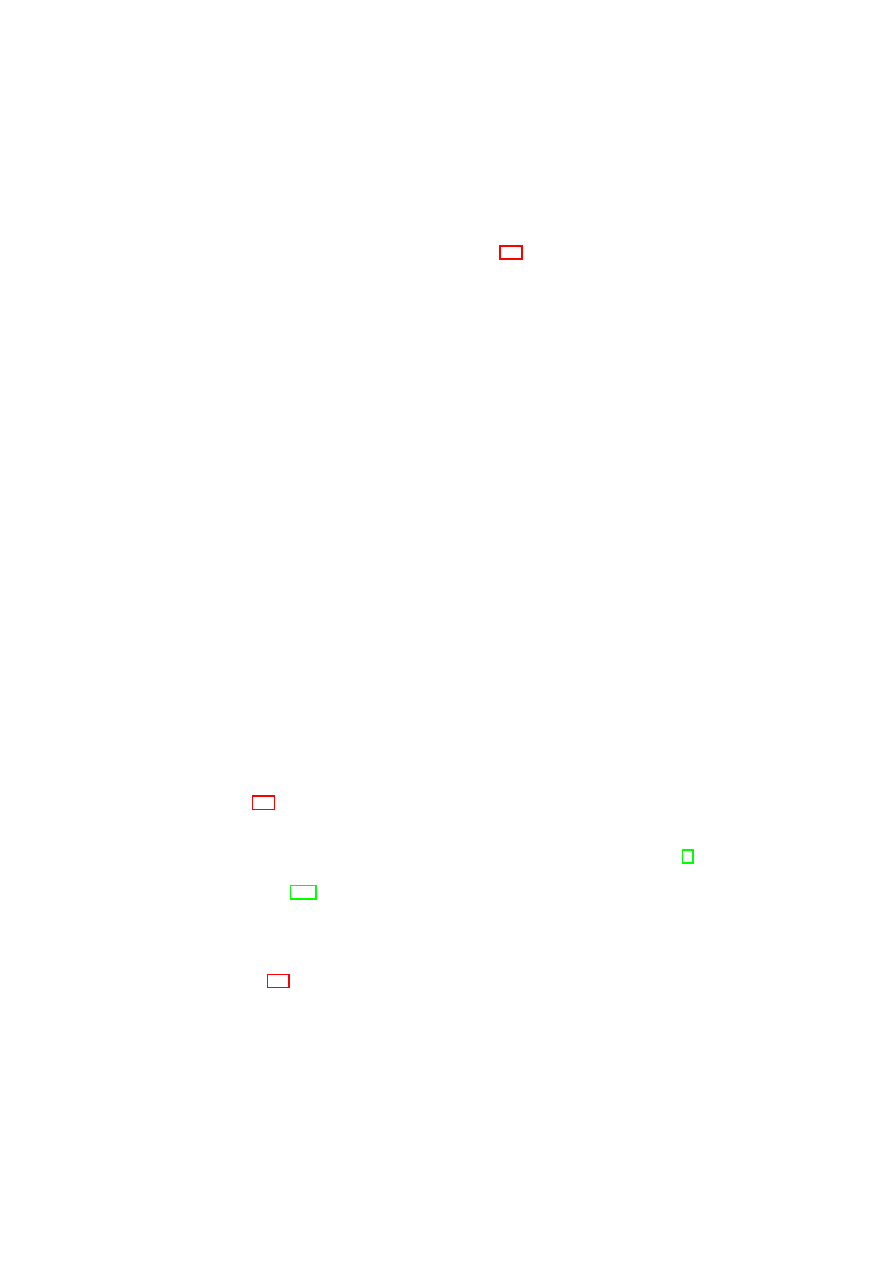

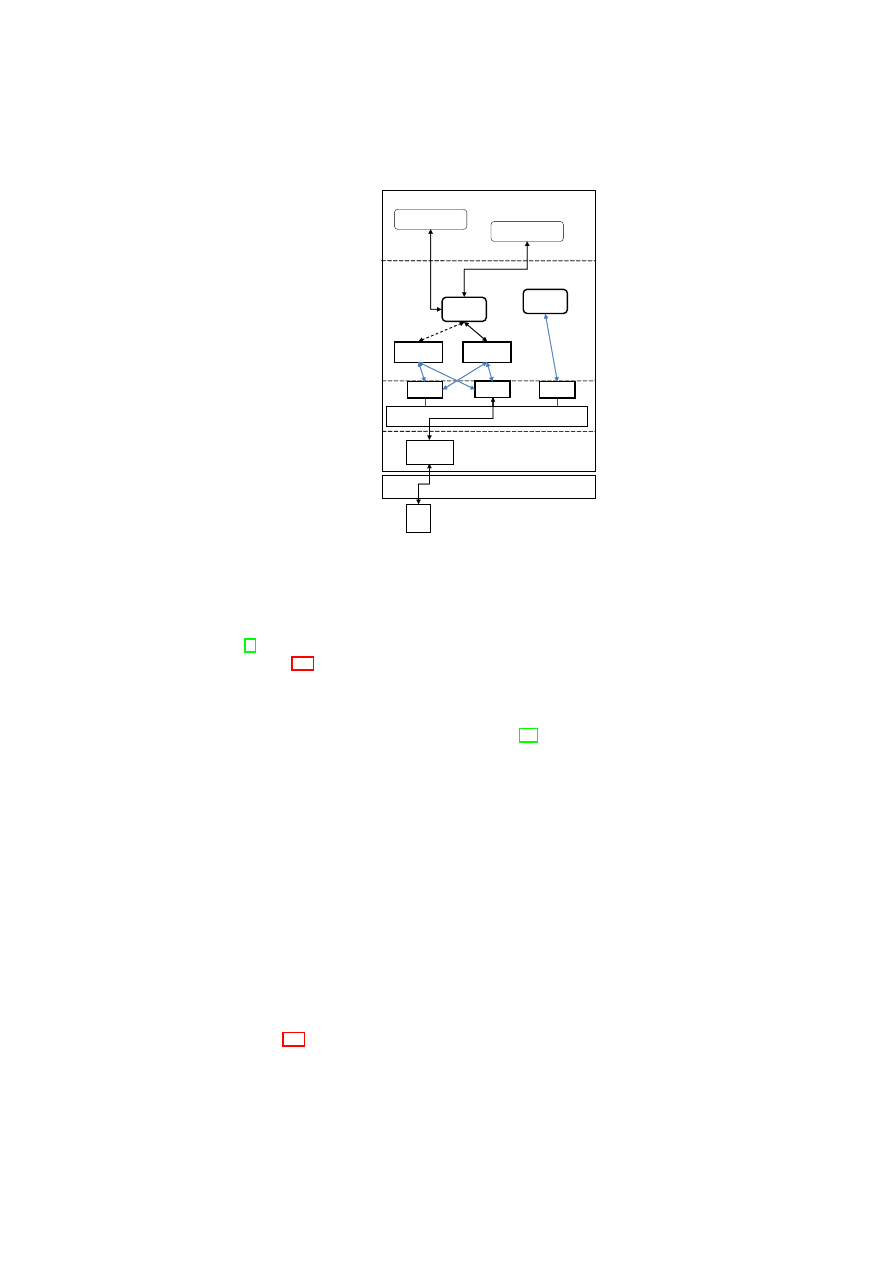

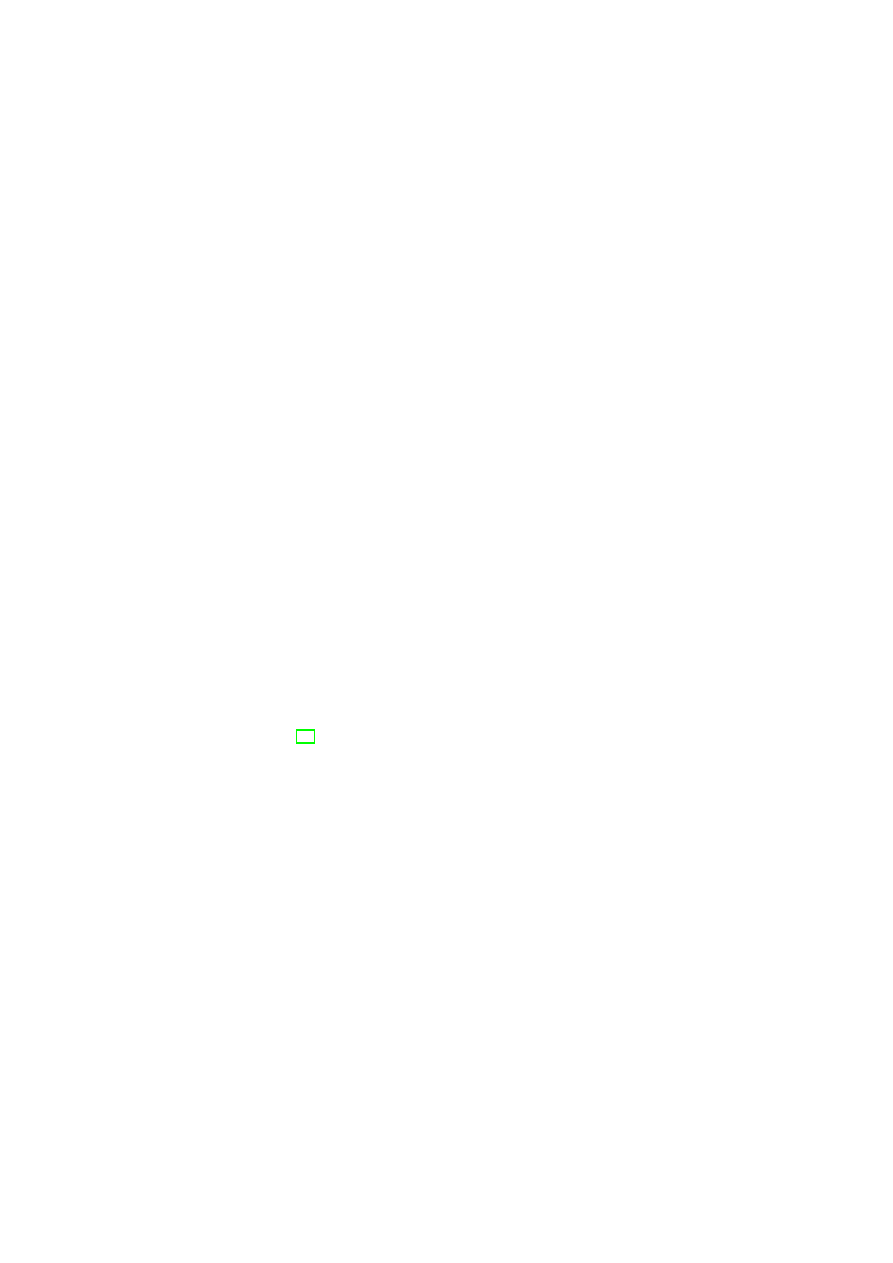

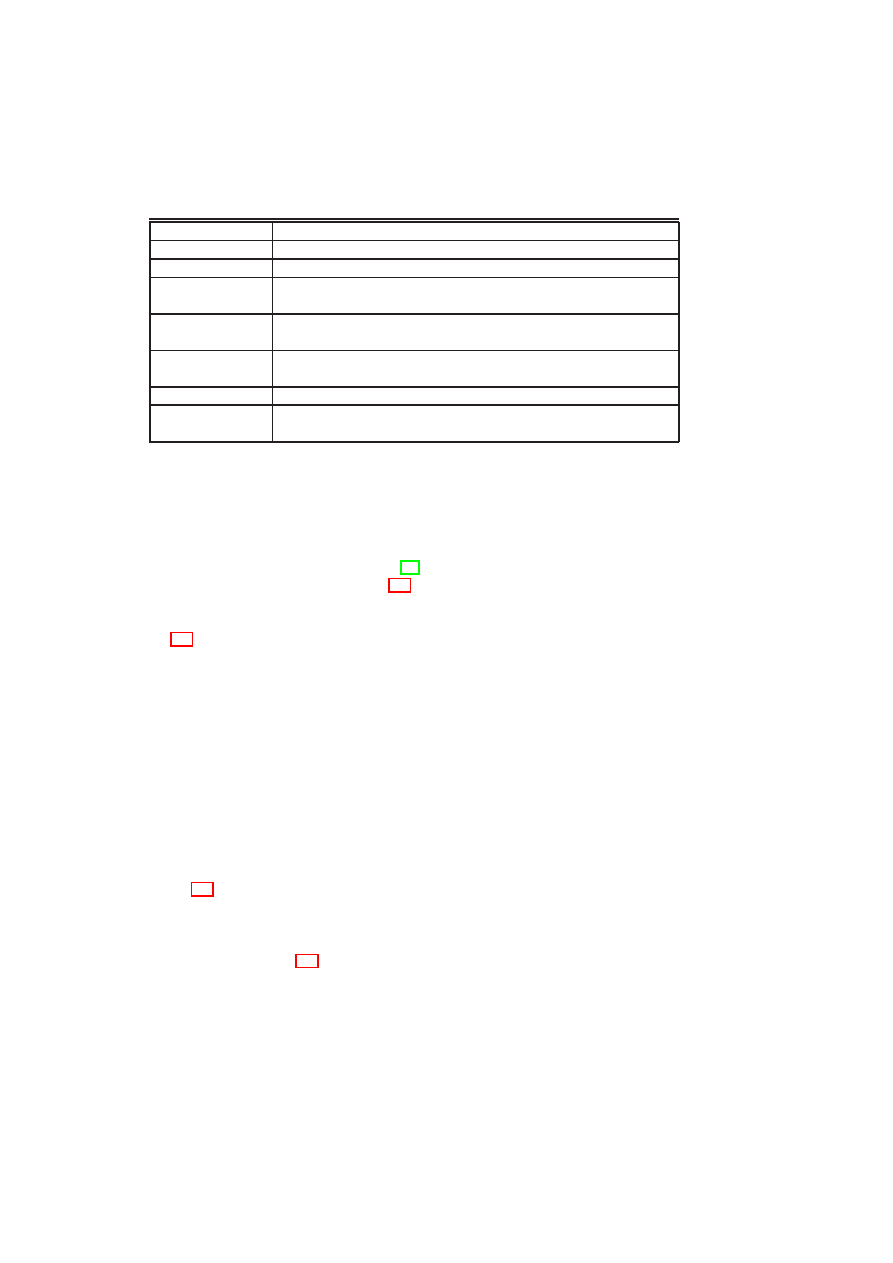

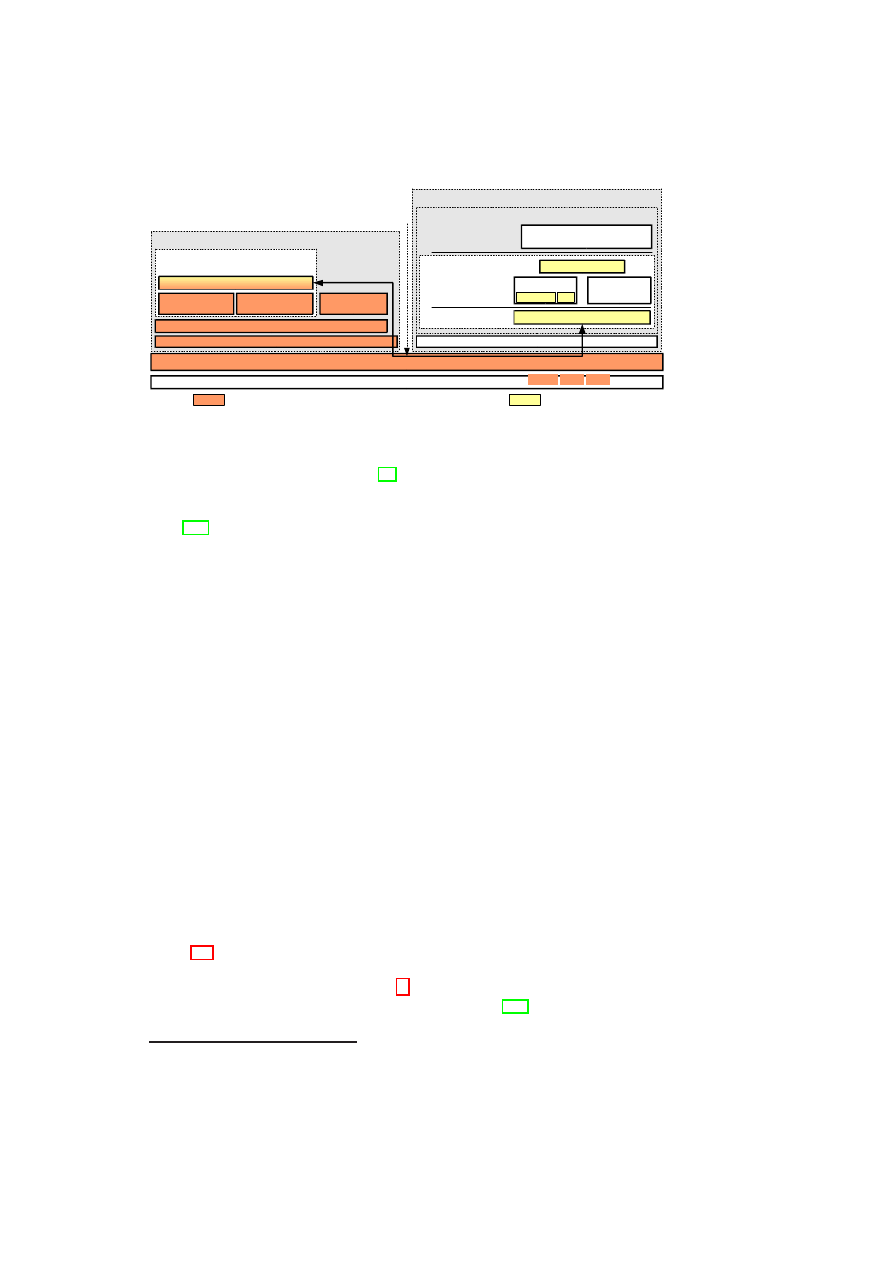

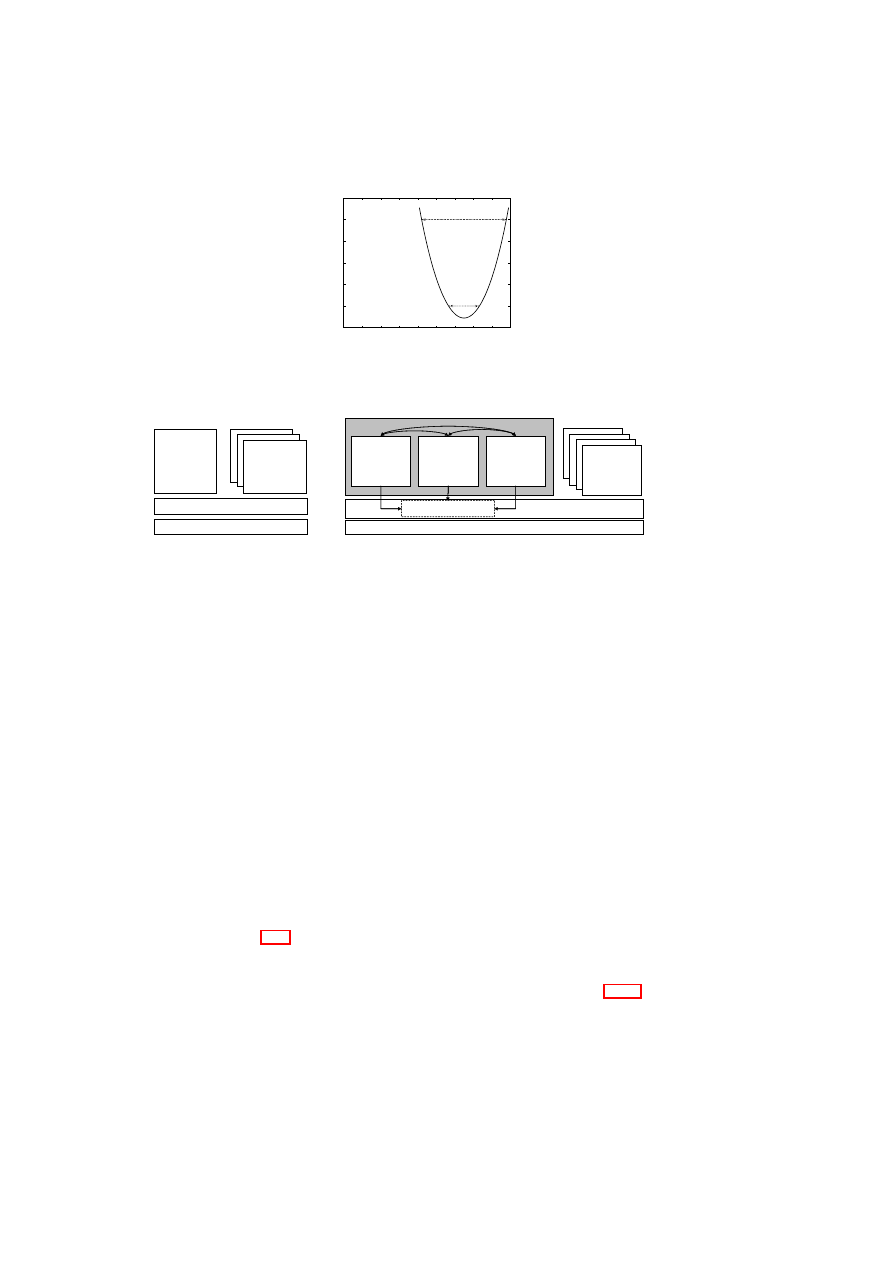

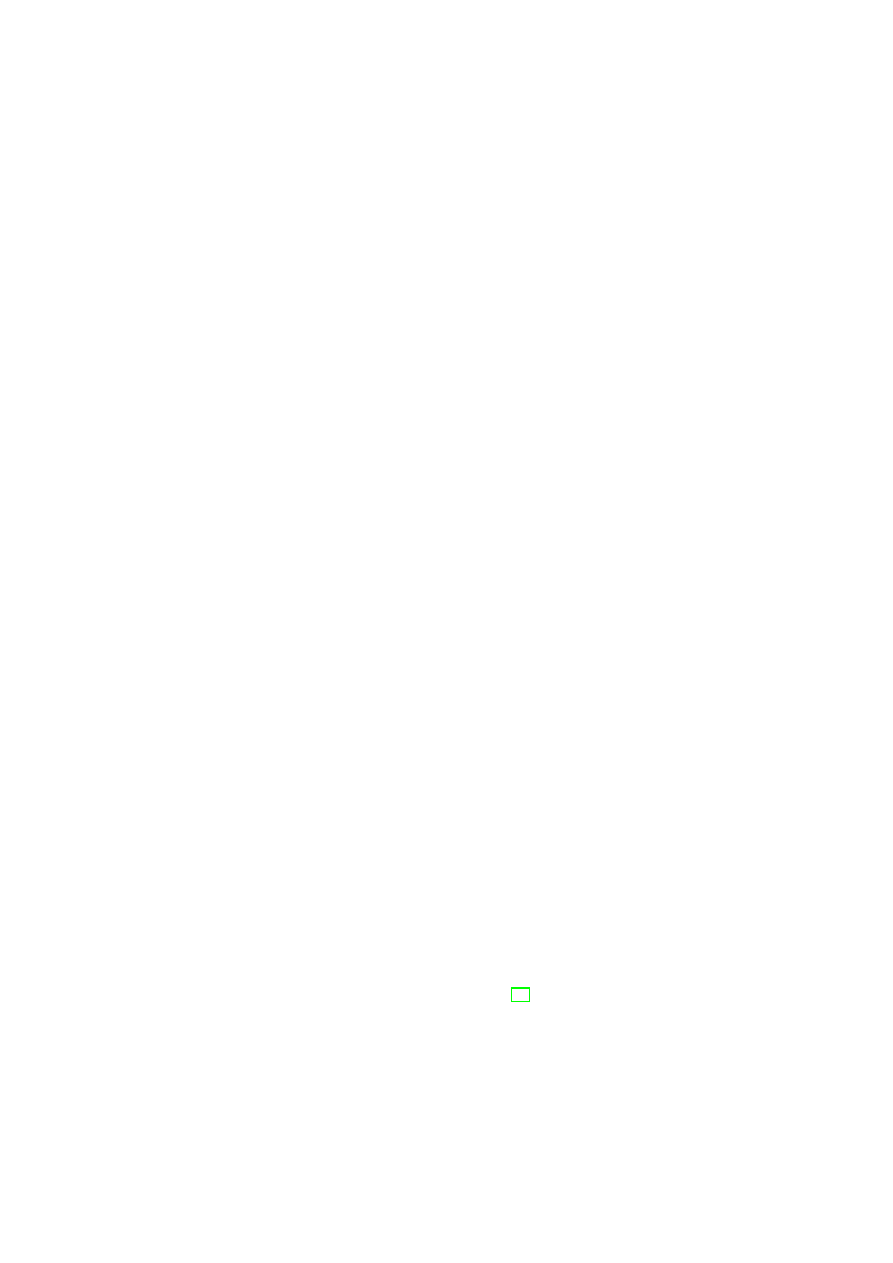

Figure 3.3: Security Enforcement for Virtualized Storage.

which data in each pool is presented to the higher-level entities (operating systems,

applications, or VMs).

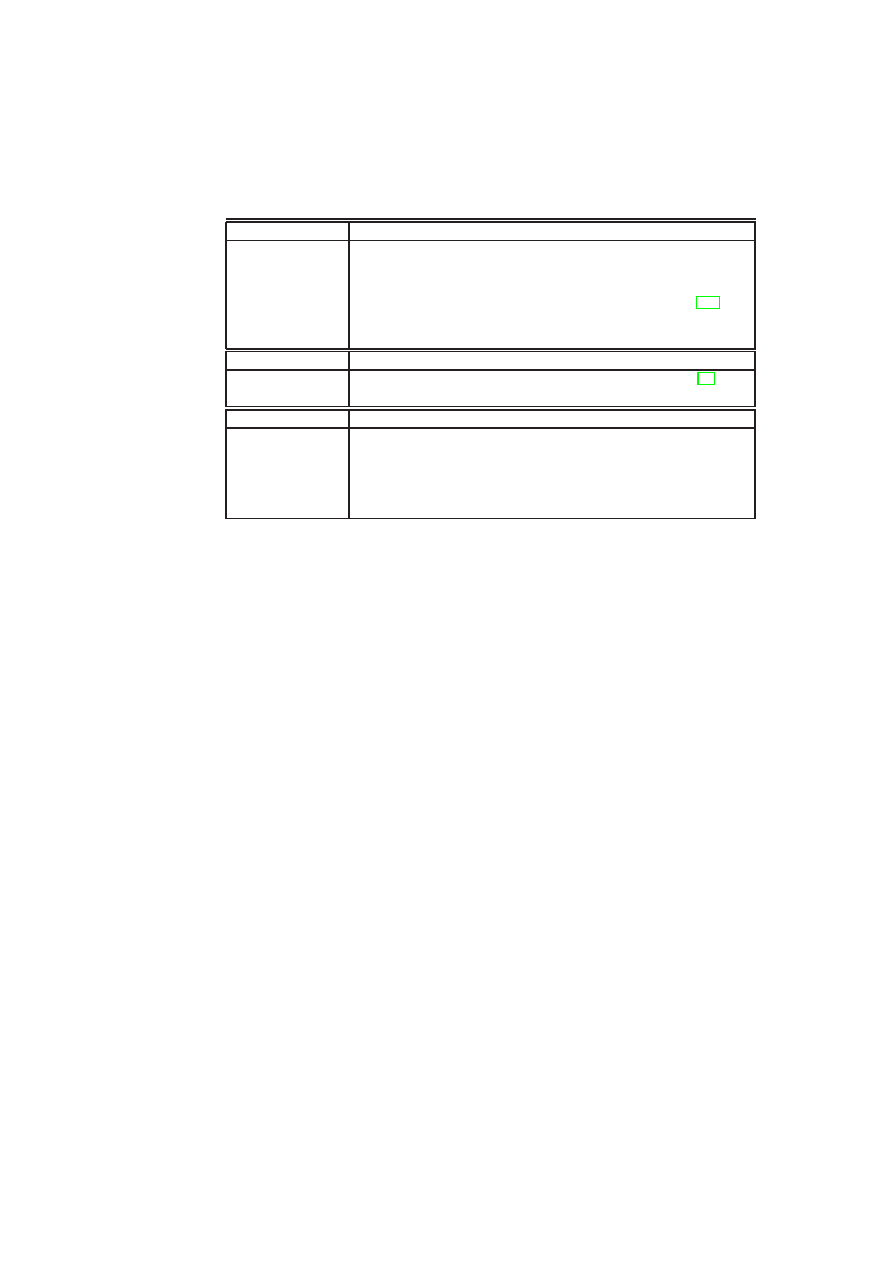

Figure 3.3 shows our storage security enforcement architecture, in which existing

heterogeneous physical storage devices are consolidated into a joint pool. This vir-

tual storage pool is then subdivided into raw storage for each TVD. Each raw storage

volume has an owner TVD that determines its policy (indicated by the labels TVD

A, TVD B, and TVD C at the per-TVD raw storage layer in the figure). In addition,

when a volume shall be shared among multiple TVDs, there is also a set of member

TVDs associated with it. The access control and encryption layer helps enforce the

storage-sharing policy defined by the owner TVD, e.g., enforcing read, write, create,

and update access permissions for the member TVDs. This layer is a logical layer that

in reality consists of the virtual storage managers (part of the security services) located

on each physical platform. The virtual storage manager on each physical platform is

responsible for enforcing the owner TVD’s storage security policies (see Section 2.3.3)

on these volumes. If a certain intra-TVD security policy requires confidentiality and

does not declare the medium as trusted, the disk is encrypted using a key belonging to

the owner TVD.

1

If conditions for (re-)mounting a disk have been defined, the disk is

also encrypted and the key is sealed against the TCB while including these conditions

into the unsealing instructions. The policy and meta-data are held on a separate raw

volume that is only accessible by the data center infrastructure.

An administrator of a domain may request that a disk be mounted to a particular

VM in a particular mode (read/write). In Xen, the disk is usually mounted in the

management machine Domain-0 as a back-end device and then accessed by a guest

VM via a front-end device. The virtual storage manager on the platform validates the

mount request against the policies of both the TVD the VM is part of and the owner

TVD for the disk. Once mounted, appropriate read-write permissions are granted based

on the flow control policy for the two TVDs, e.g., read access is granted only if the

policies specified in the disk policy matrix allow the VM’s TVD such an access to the

disk belonging to the owner TVD.

3.4

TVD Admission Control

When a VM is about to join a TVD, different properties will be verified by the local

TVD Proxy to ensure that policies of all the TVDs that the VM is currently a member

of as well as of the TVD that it wants to join are not violated. If the verification is

successful, then the VM will be connected to that TVD. The TVD admission control

1

For efficiency reasons, we currently do not provide integrity protection.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 3. UNIFIED POLICY ENFORCEMENT FOR VIRTUAL DATA CENTERS27

protocol is the procedure by which the VM gets connected to the TVD. In the case of

a VM joining multiple TVDs, the admission control protocol is executed for each of

those TVDs. We now describe the steps of the protocol.

We assume that the computing platform that executes the VM provides mechanisms

that allow remote parties to convince themselves about its trustworthiness. Example

mechanisms include trusted (authenticated) boot and the remote attestation protocol

(see Section 4.2) based on TPM technology.

TVD Proxy Initialization Phase:

To allow a VM to join a TVD, the platform host-

ing the VM needs access to the TVD policy, and upon successful admission, to TVD

secrets, such as the VPN key. For this purpose, TVD Proxy services are started on the

platform for each TVD whose VMs may be hosted. The TVD Proxy can be started at

boot time of the underlying hypervisor, by a system service (TVD Proxy Factory), or

by the VM itself, as long as the TVD Proxy is strongly isolated from the VM.

Pre-Admission Phase:

When a VM wants to join a TVD that is going to be hosted

on the platform for the first time, the TVD Master has to establish a trust relationship

with the platform running the VM, specifically with the TVD Proxy. We call this

step the pre-admission phase. It involves the establishment of a trusted channel (see

Section 4.3) between the TVD Master and the TVD Proxy (or the TVD Proxy Factory).

The trusted channel allows the TVD Master to verify the integrity of the TVD Proxy

(Factory) and the underlying platform. After the trusted channel has been established

and the correct configuration of the Proxy has been verified, the TVD Master can send

the TVD policies and credentials (such as a VPN key) to the TVD Proxy.

Admission Control Phase:

The Compartment Manager (part of the platform secu-

rity services shown in Figure 3.1) is responsible for starting new VMs. The Compart-

ment Manager loads the VM configuration and enforces the security directives with

the help of the Integrity Manager (also part of the platform security services shown in

Figure 3.1). The security directives may include gathering the VM state information,

such as the VM configuration, kernel, and disk(s) that are going to be attached to the

VM.

If the VM configuration states that the VM should join one or more TVDs, then the

Compartment Manager interacts with the corresponding TVD Proxy(ies) and invokes

TPM functions to attest the state of the VM. The TVD Proxy verifies certain properties

before allowing the VM to join the TVD. More concretely, the TVD Proxy has to

ensure that

•

the VM fulfills the integrity requirements of the TVD;

•

the information flow policies of all TVDs the VM will be a member of will not

be violated;

•

the VM enforces specific information flow rules between TVDs if such rules are

required by the TVD policy, and that

•

the underlying platform (e.g., the hypervisor and attached devices) fulfills the

security requirements of the TVD.

Platform verification involves matching the security requirements with the platform’s

capabilities and mechanisms instantiated on top of these capabilities. For example,

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

28

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

suppose that data confidentiality is a TVD requirement. Then, if hard disks or network

connections are not trusted, additional mechanisms, such as block encryption or VPN

(respectively), need to be instantiated to satisfy the requirement.

TVD Join Phase:

If the VM and the provided infrastructure fulfill all TVD require-

ments, a new network stack is created and configured as described in Section 3.2. Once

the Compartment Manager has started the VM, it sends an attach request to the corre-

sponding TVD vSwitch. Once the VM is connected to the vSwitch, it is a member of

the TVD.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

Chapter 4

Background and Related Work

In order to put our work in context we survey key concepts that underlie our approach.

Section 4.1 presents the TVD concept, which can be thought of as a virtualization

of today’s security zones while making security requirements explicit. Section 4.2

describes Trusted Computing concepts. The core of this concept is a security hardware

device called Trusted Platform Module that guarantees certain security functionalities

in spite of attacks. We finally survey related work on trusted channels in Section 4.3

and on secure virtual networking in Section 4.4.

4.1

Overview of Trusted Virtual Domains

Bussani et al. [16] introduced the concept of TVDs. A Trusted Virtual Domain con-

sists of a set of distributed Virtual Processing Elements (VPEs), storage for the VPEs,

and a communication medium interconnecting the VPEs [16, 62, 45]. The TVD pro-

vides a policy and containment boundary around those VPEs. VPEs within each TVD

can usually exchange information freely and securely with each other. At the same

time, they are sufficiently isolated from outside VPEs, including those belonging to

other TVDs. Here, isolation loosely refers to the requirement that a dishonest VPE in

one TVD cannot exchange information (e.g., by sending messages or by sharing stor-

age) with a dishonest VPE in another TVD, unless the inter-TVD policies explicitly

allow such an exchange. There is a TVD infrastructure (for each TVD) that provides a

unified level of security to member VPEs, while restricting the interaction with VPEs

outside the TVD to pre-specified, well-defined means only. Unified security within a

virtual domain is obtained by defining and enforcing membership requirements that the

VPEs have to satisfy before being admitted to the TVD and for retaining membership.

Each TVD defines rules regarding information exchange with the outside world, e.g.,

restrictions regarding in-bound and out-bound network traffic.

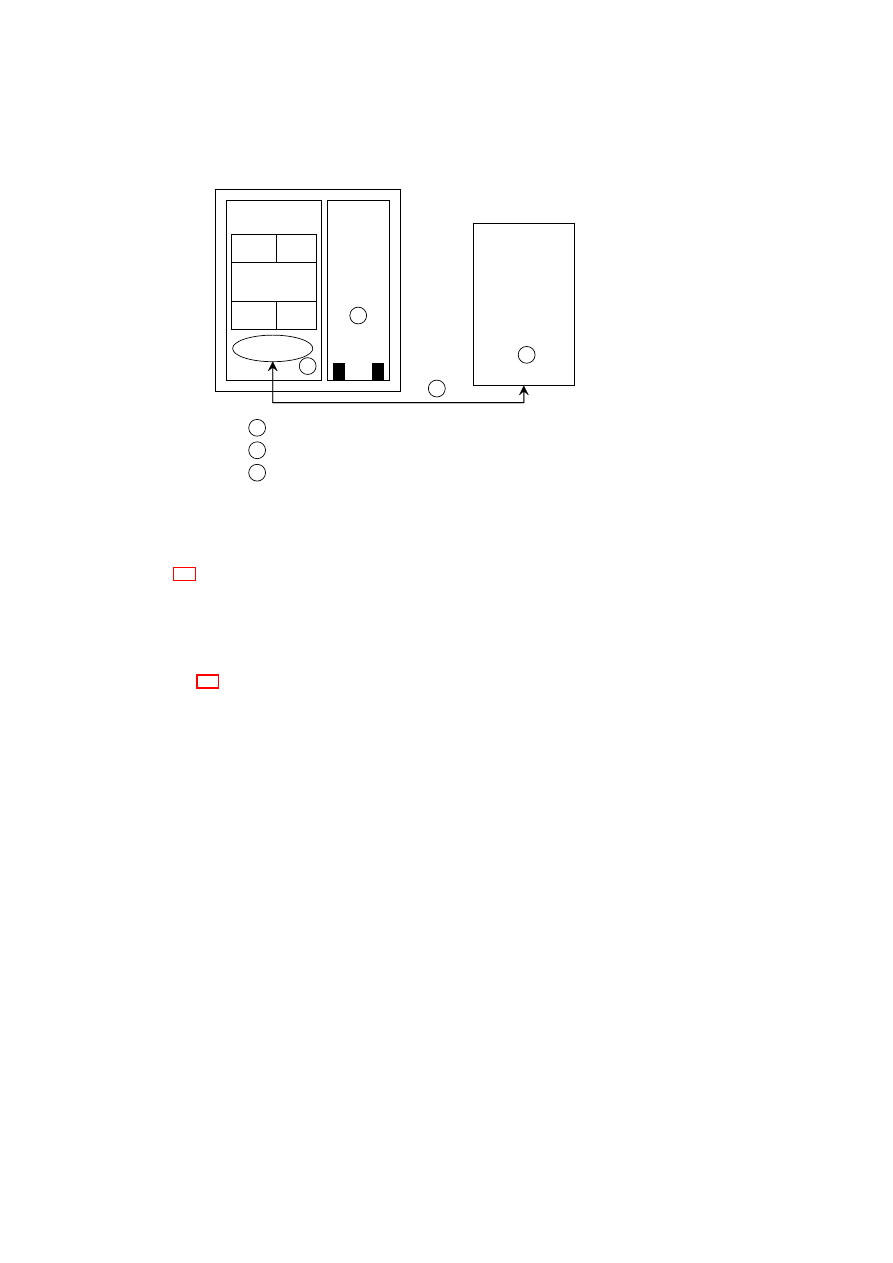

Figure 1.1 shows customer VMs as VPEs belonging to

TVD

1

spanning two plat-

forms (contained in the dashed boxes). The Master (

TVD1 Master

) and Proxy compo-

nents (

Proxy1

on each platform) are part of the TVD infrastructure, which we describe

in detail in Section 3.1. The TVD Master is the orchestrator of the TVD deployment

and configuration. There is one TVD Proxy for each platform hosting VMs belonging

to that TVD. If the platform hosts VMs belonging to multiple TVDs, then there are

multiple TVD proxies on that platform, one per TVD. The TVD Proxy on a platform

is configured by the TVD Master and can be thought of as the local TVD policy en-

29

30

OpenTC D05.6 – Final Report of OpenTC Workpackage 5

forcer. VMs belonging to the same TVD can usually exchange information freely with

each other unless restricted by VM-level policies. For example, traffic originating from

VM

A

1

or

VM

A

2

on Host A is routed to

VM

Bi

(

i

= 1

,

· · ·

,

4

) on Host B without any

restrictions. Information exchange among TVDs can be allowed; however, it is subject

to the network and storage policies stated by each TVD Master and locally enforced by

each TVD Proxy.

4.2

Trusted Computing – The TCG Approach

It is important to have reliable mechanisms for a system to reason and verify the trust-

worthiness (i.e., compliance with a certain security policy) of a peer endpoint (local

or remote). A recent industrial initiative towards realizing such a mechanism was put

forward by the Trusted Computing Group (TCG) [113], a consortium of a large number

of IT enterprises that proposes a new generation of computing platforms that employs

both supplemental hardware and software (see, e.g., [81, 100]). The TCG

1

has pub-

lished several specifications on various concepts of trusted infrastructures [121].

The Trusted Platform Module

The core component the TCG specifies is the Trusted

Platform Module (TPM). Currently, the widespread implementation of the TPM is a

small tamper-evident chip

2

that implements multiple roots-of-trust [122, 120], e.g., the

root-of-trust for reporting and the root-of-trust for storage. Each root-of-trust enables

parties, both local and remote, to place trust on a TPM-equipped platform that the lat-

ter will behave as expected for the intended purpose. By definition, the parties trust

each root-of-trust, and therefore it is essential that the roots-of-trust always behave as

expected. Given that requirement, a hardware root-of-trust – especially one that is com-

pletely protected from software attacks and tamper-evident against physical attacks, as

required by the TPM specification – is assumed to provide a better protection than

software-only solutions.

Attestation and Integrity Verification

The Trusted Computing features we leverage

in this paper are protection of keys, secure recording of integrity measurements, attes-

tation, and sealing. Integrity verification mechanisms enable a remote party to verify

whether system components conform to certain security policies. Measurement of a

component involves computing the SHA-1 hash of the binary code of that component.

In particular, each software component in the Trusted Computing Base (TCB) is first

measured and then its measurement recorded before control is passed to it. The hash

values are then appended to a hash chain, which is kept in special protected registers

called Platform Configuration Registers (PCRs), thus acting as accumulators for mea-

surements. Recording a measurement means appending it to the hash chain by PCR

extend operation

3

. The sequence of measured values are also stored in a measurement

log

4

, external to the TPM.

1

TCG’s claimed role is to develop, define, and promote open and vendor-neutral industry specifications

for Trusted Computing, including hardware building blocks and software interface specifications across mul-

tiple platforms and operating environments.

2

Many vendors already ship their platforms with TPMs (mainly laptop PCs and servers).

3

Extending of PCR values is performed as follows:

PCR

i

+1

:= SHA1(

PCR

i

|

I

)

, with the old register

value

PCR

i

, the new register value

PCR

i

+1

, and the input

I

(e.g. a SHA-1 hash value).

4

Since each PCR holds only the digest of (part of) the chain of trust, keeping the list of all measured

values is required if afterwards, during the attestation process, a remote party wants to identify each measured

component.

OpenTC Document D05.6/V01 – Final R7628/2009/01/15/OpenTC Public (PU)

CHAPTER 4. BACKGROUND AND RELATED WORK

31

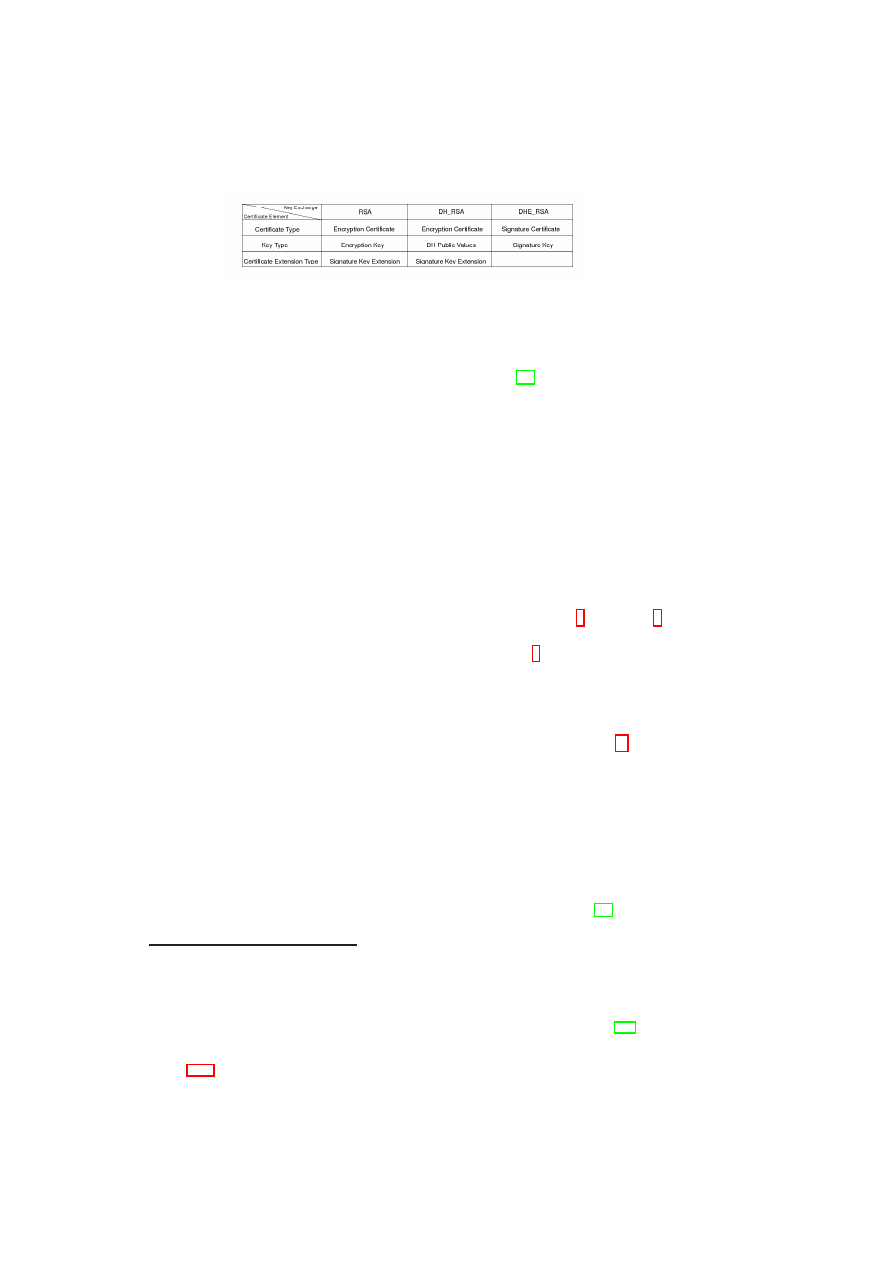

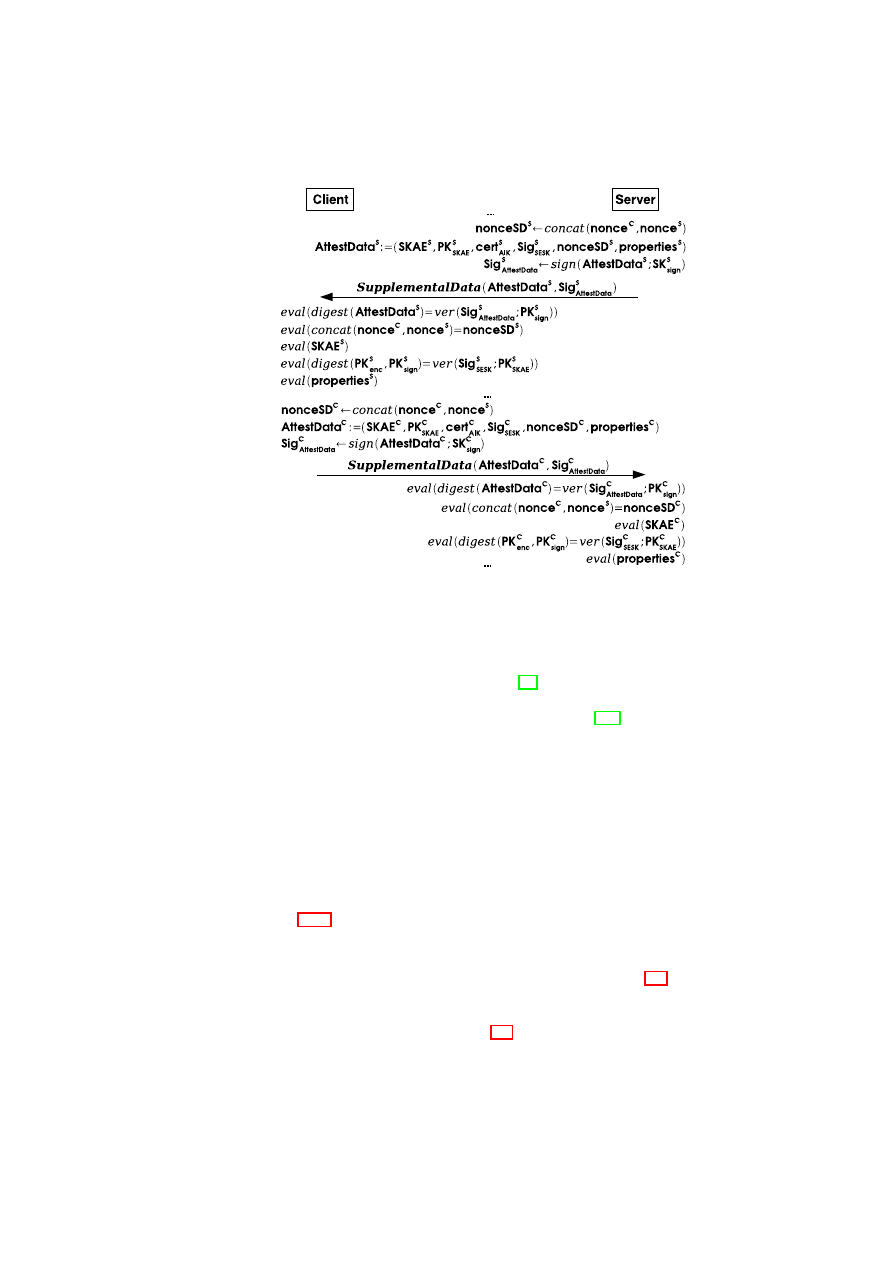

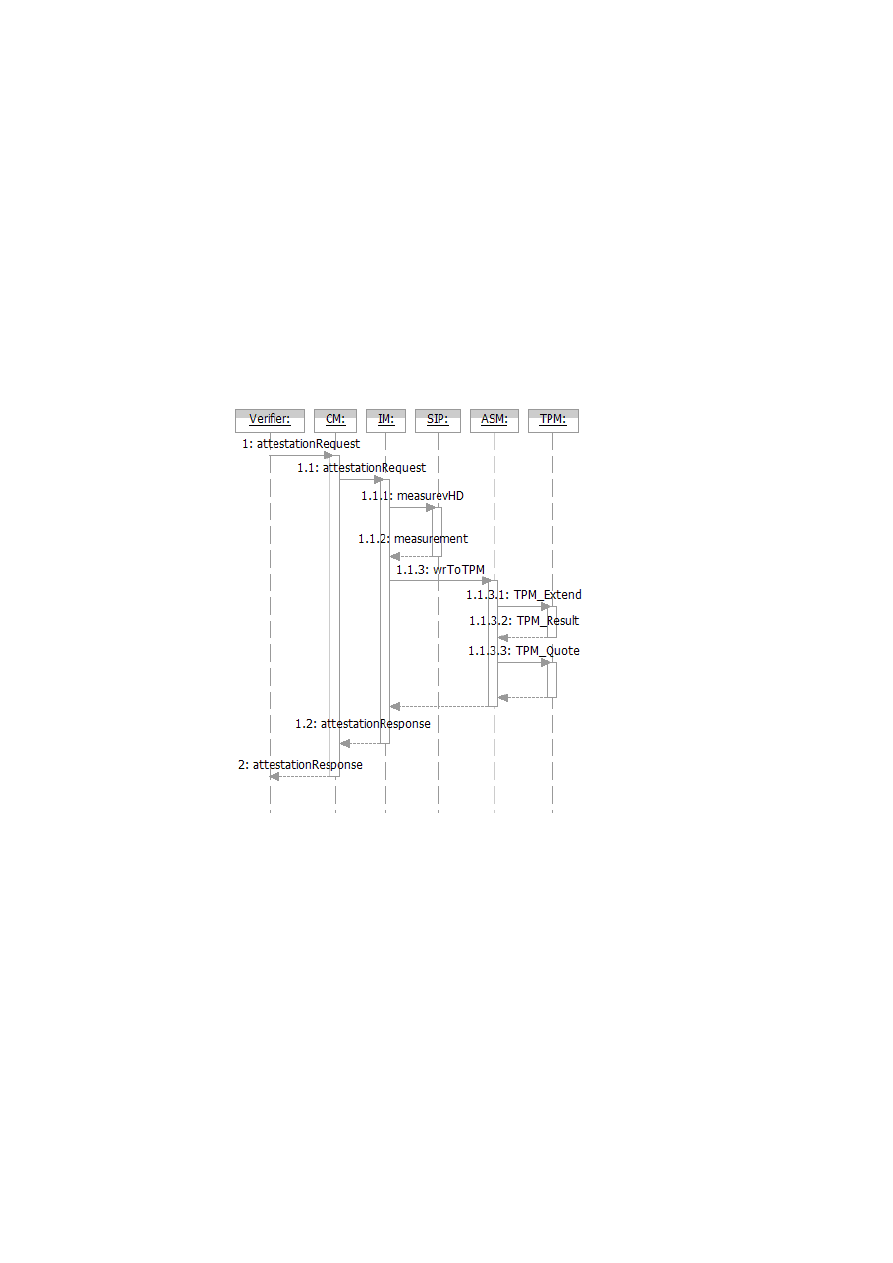

Attestation refers to the challenge-response-style cryptographic protocol for a re-