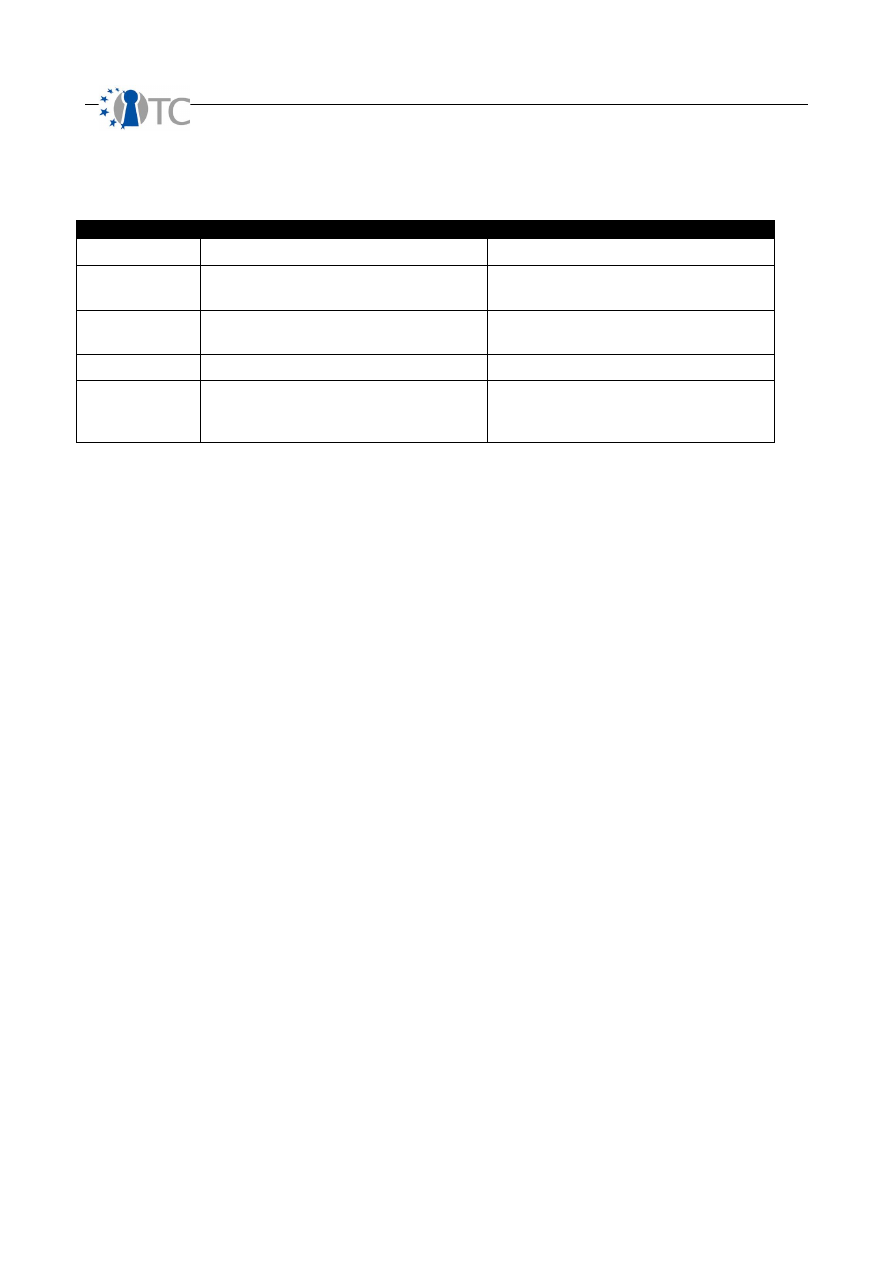

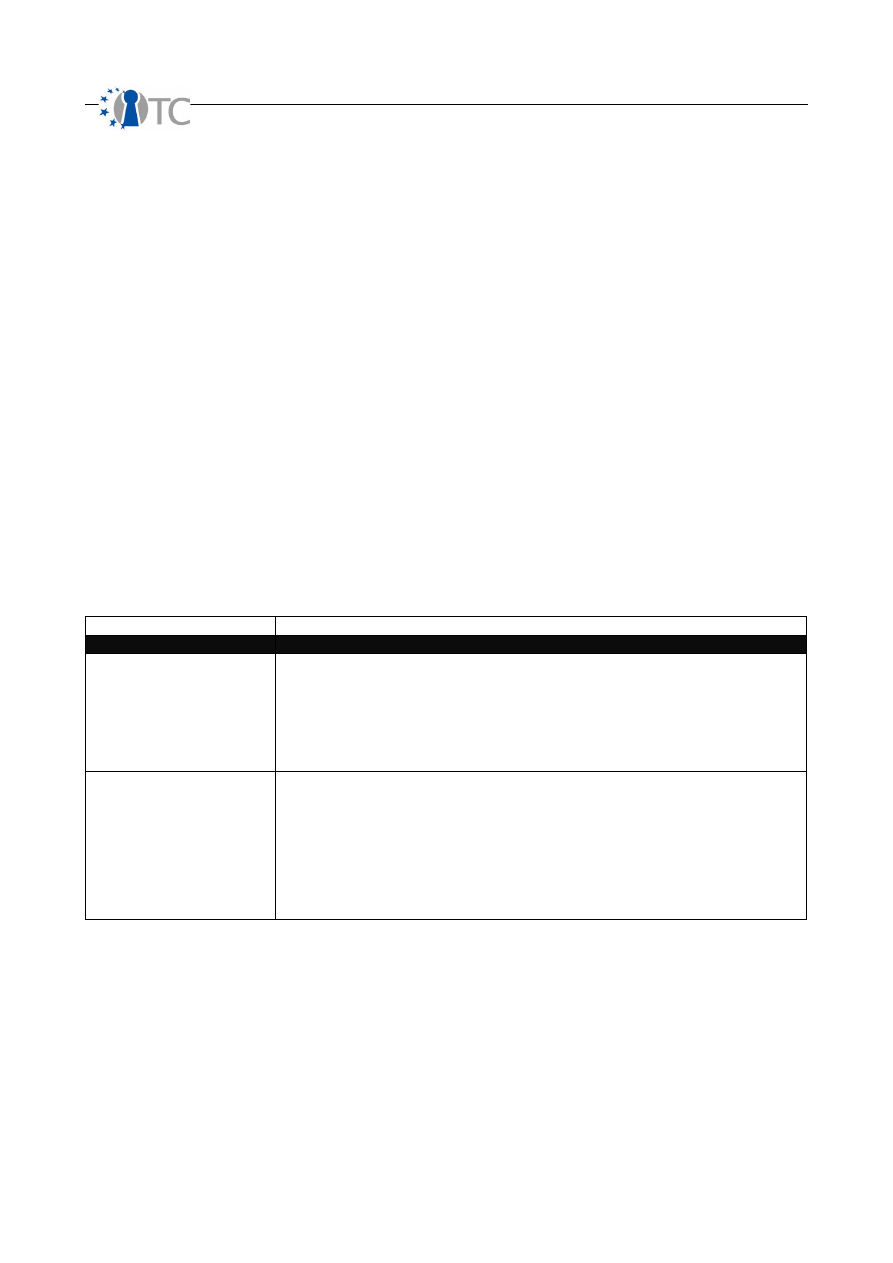

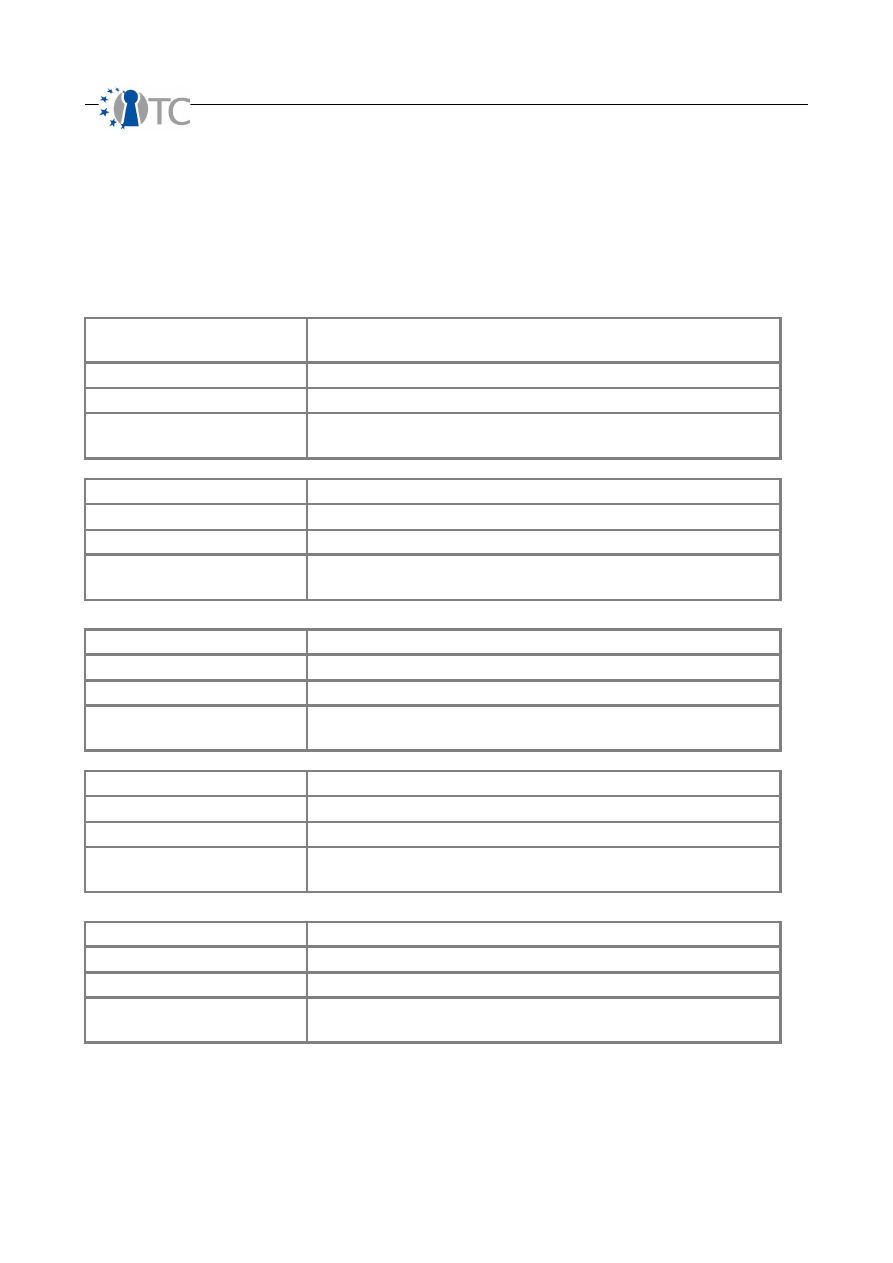

D07.2 V&V Report #2: Methodology definition,

analyses results and certification

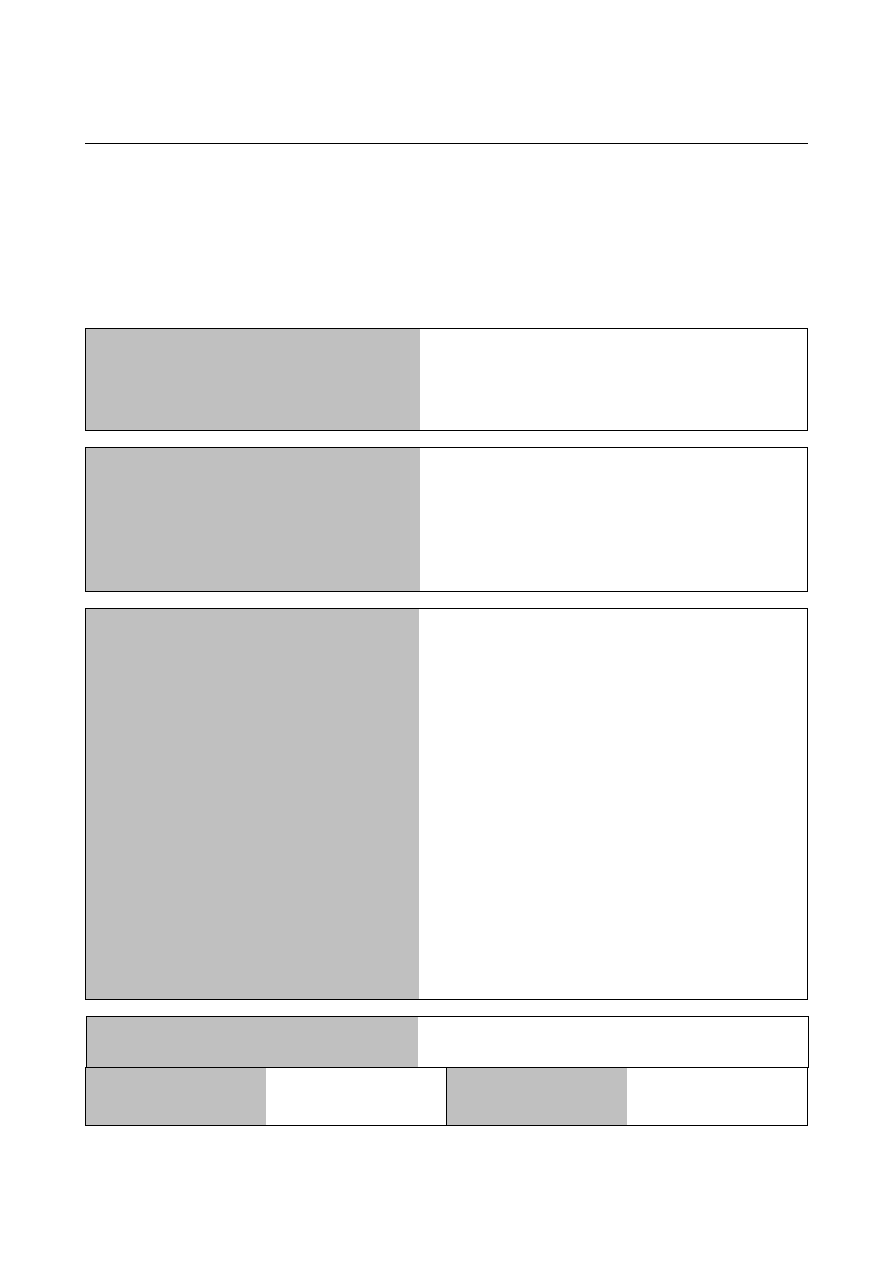

Project number

IST-027635

Project acronym

Open_TC

Project title

Open Trusted Computing

Deliverable type

Report

Deliverable reference number

IST-027635/D07.02/1.2

Deliverable title

D07.2 V&V Report #2: Methodology

definition, analyses results and certification

WP contributing to the deliverable

WP07

Due date

Oct 2007 - M24

Actual submission date

4 December 2007

Responsible Organisation

CEA

Authors

Pascal Cuoq, Roman Drahtmüller, Ivan

Evgeniev, Vesselin Gueorguiev,

Pete Herzog,

Zoltan Hornak, Virgile Prevosto, Armand

Puccetti (eds.), Gergely Toth.

Abstract

This deliverable is an intermediate report on

the V&V activities undertaken in WP07. This

document present research and application

results. These deal with 1) the testing and

analysis of the WP07 targets, 2) tools for the

analysis of C and C++, 3) trust and security

metrics and methodology improvements,

and 4) certifiability of the XEN target.

Keywords

V&V, analysis, certification, testing, XEN

Dissemination level

Public

Revision

1.2

Instrument

IP

Start date of the

project

1

st

November 2005

Thematic Priority

IST

Duration

42 months

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

If you need further information, please visit our website

www.opentc.net

or contact

the coordinator:

Technikon Forschungs-und Planungsgesellschaft mbH

Richard-Wagner-Strasse 7, 9500 Villach, AUSTRIA

Tel.+43 4242 23355 –0

Fax. +43 4242 23355 –77

Email

coordination@opentc.net

The information in this document is provided “as is”, and no guarantee

or warranty is given that the information is fit for any particular purpose.

The user thereof uses the information at its sole risk and liability.

OpenTC Deliverable 07.02

2/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

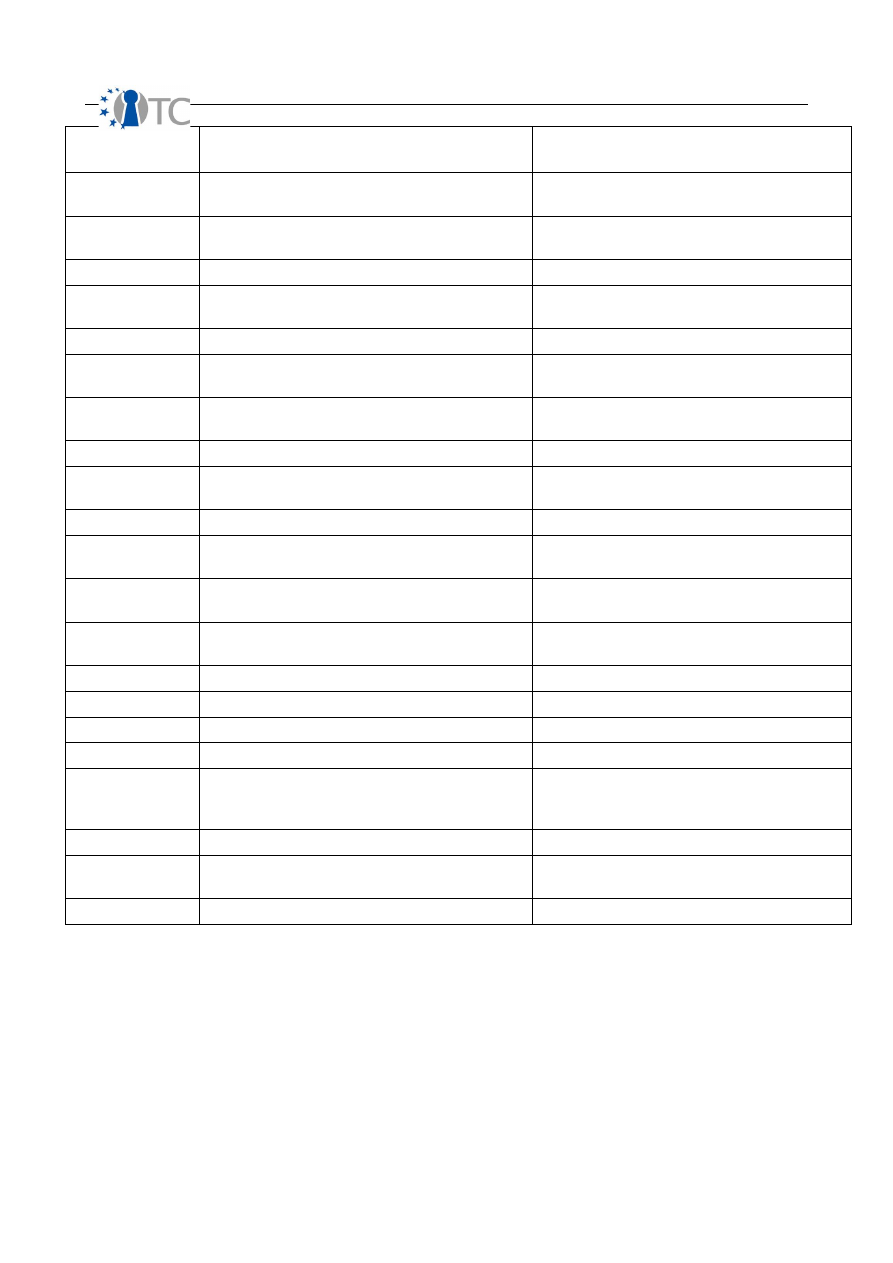

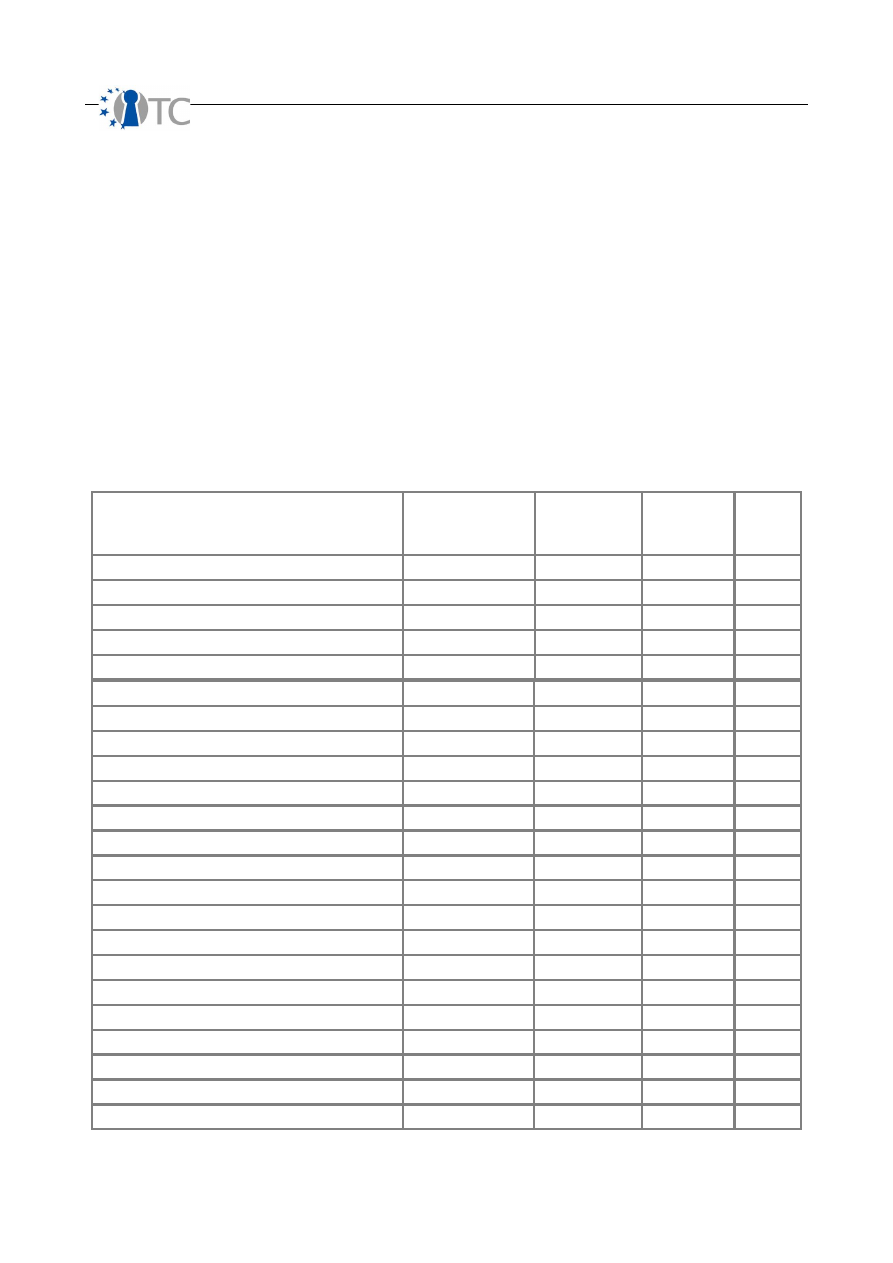

Table of Contents

1 Summary.................................................................................................................... 6

2 Introduction ............................................................................................................... 7

2.1 Outline................................................................................................................... 7

2.2 Targets analyses.................................................................................................... 8

2.3 Structure of this report.......................................................................................... 8

3 Development of security and trust metrics.............................................................. 10

3.1 Overview.............................................................................................................. 10

3.2 Technical background.......................................................................................... 10

3.3 Security Metrics................................................................................................... 13

3.4 Trust metrics........................................................................................................ 25

3.5 Complexity........................................................................................................... 28

3.6 Testing Methodology Improvements.................................................................... 33

3.7 On-going work and future directions.................................................................... 34

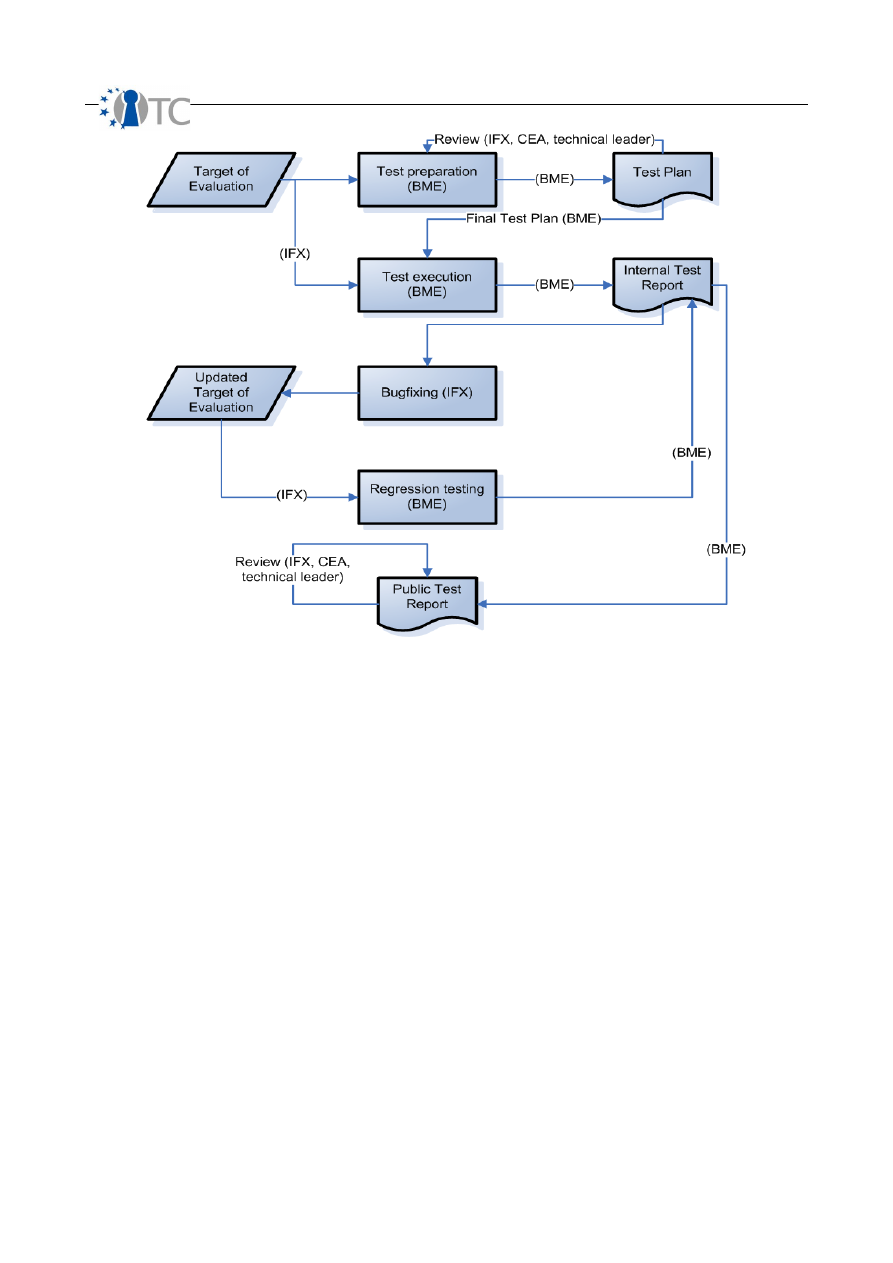

4 Dynamic analysis of targets..................................................................................... 35

4.1 Overview.............................................................................................................. 35

4.2 Technical background........................................................................................... 36

4.3 Testing the IFX TSS............................................................................................... 39

4.3.2.1 Test summary................................................................................................. 45

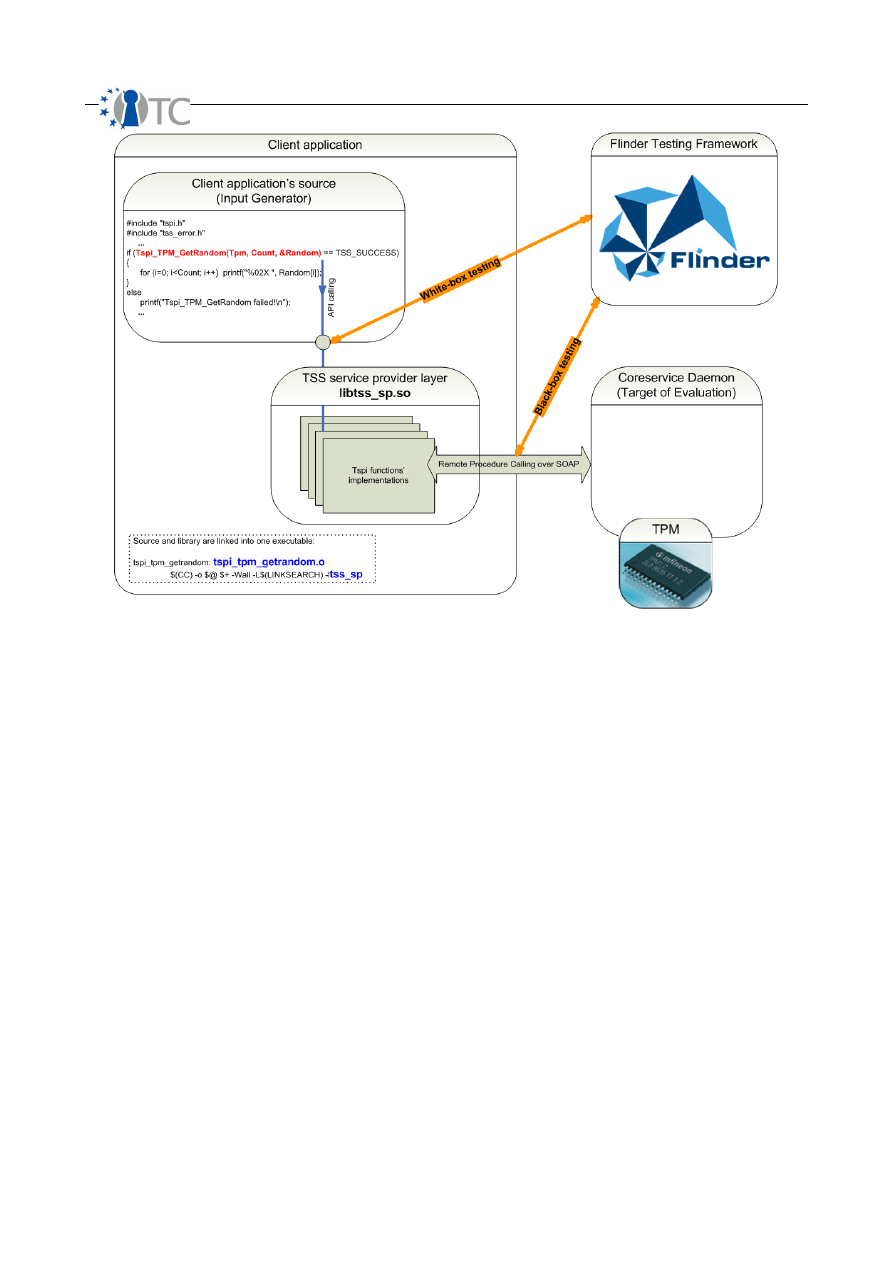

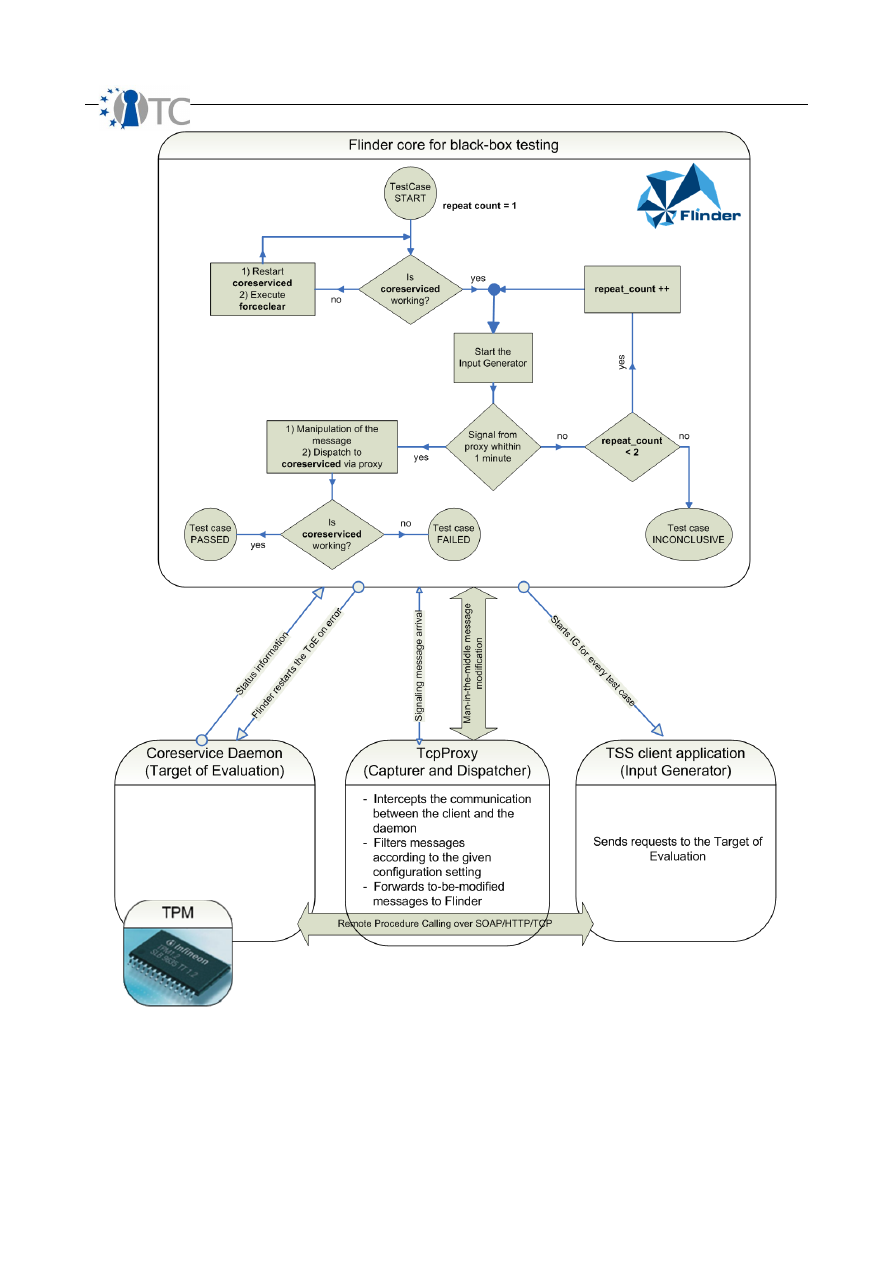

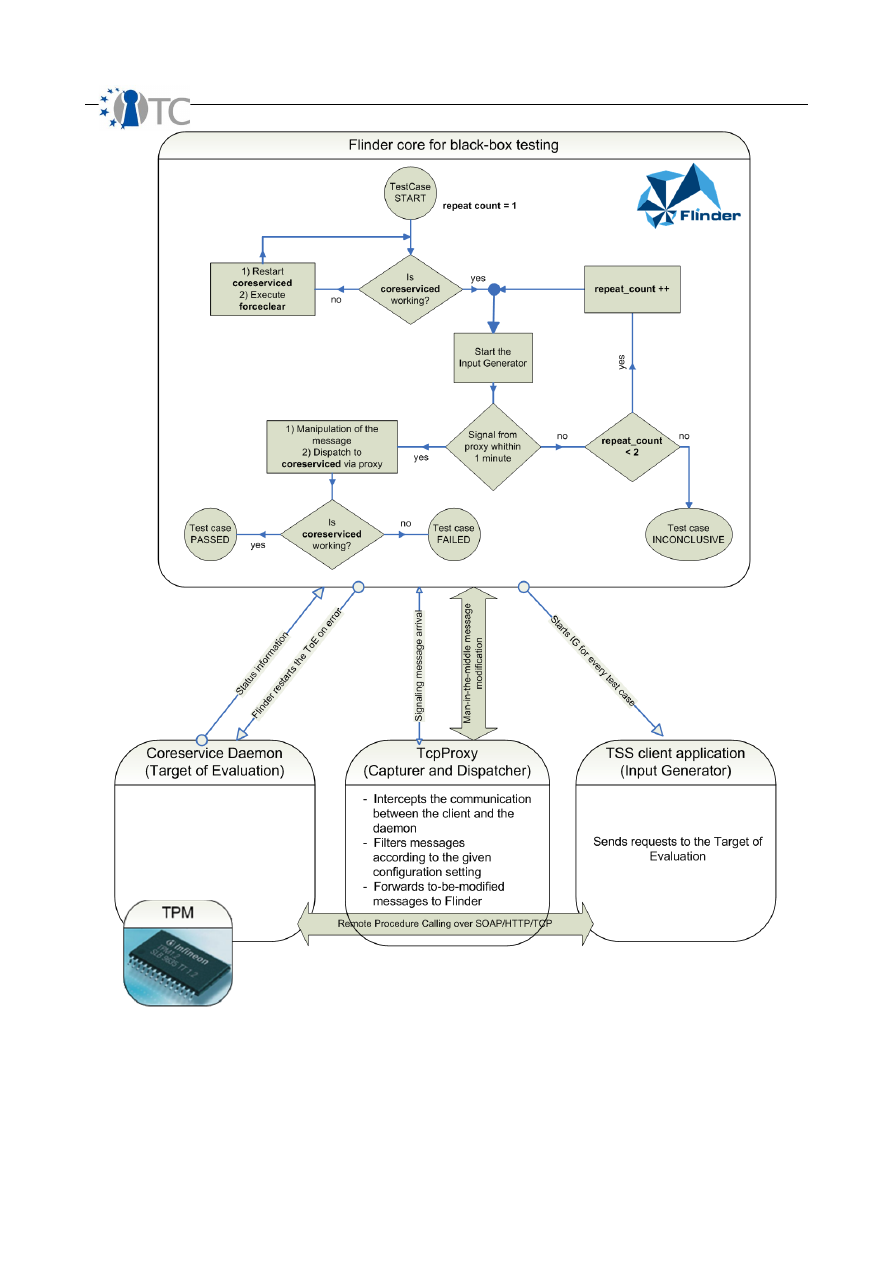

4.3.2.2 Black-box SOAP testing................................................................................... 50

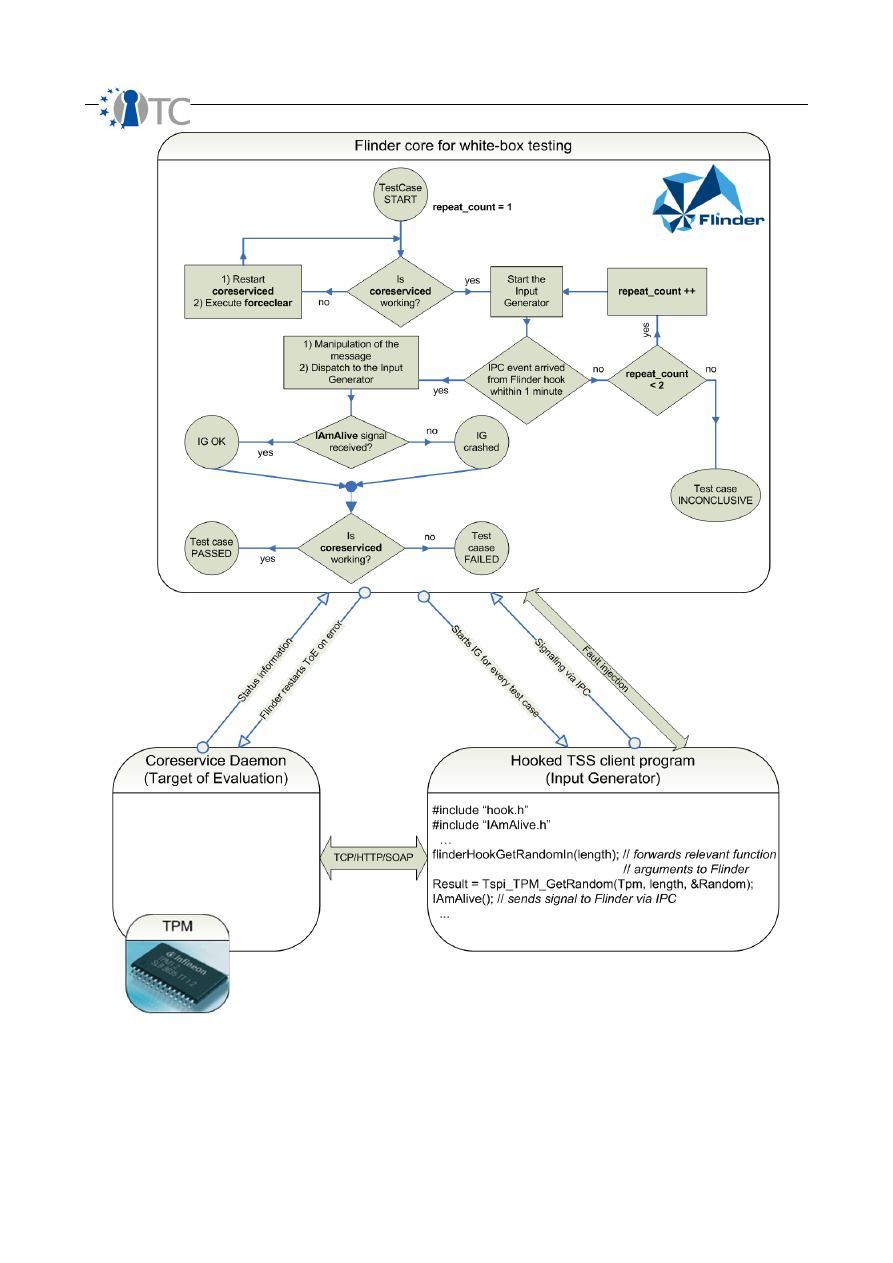

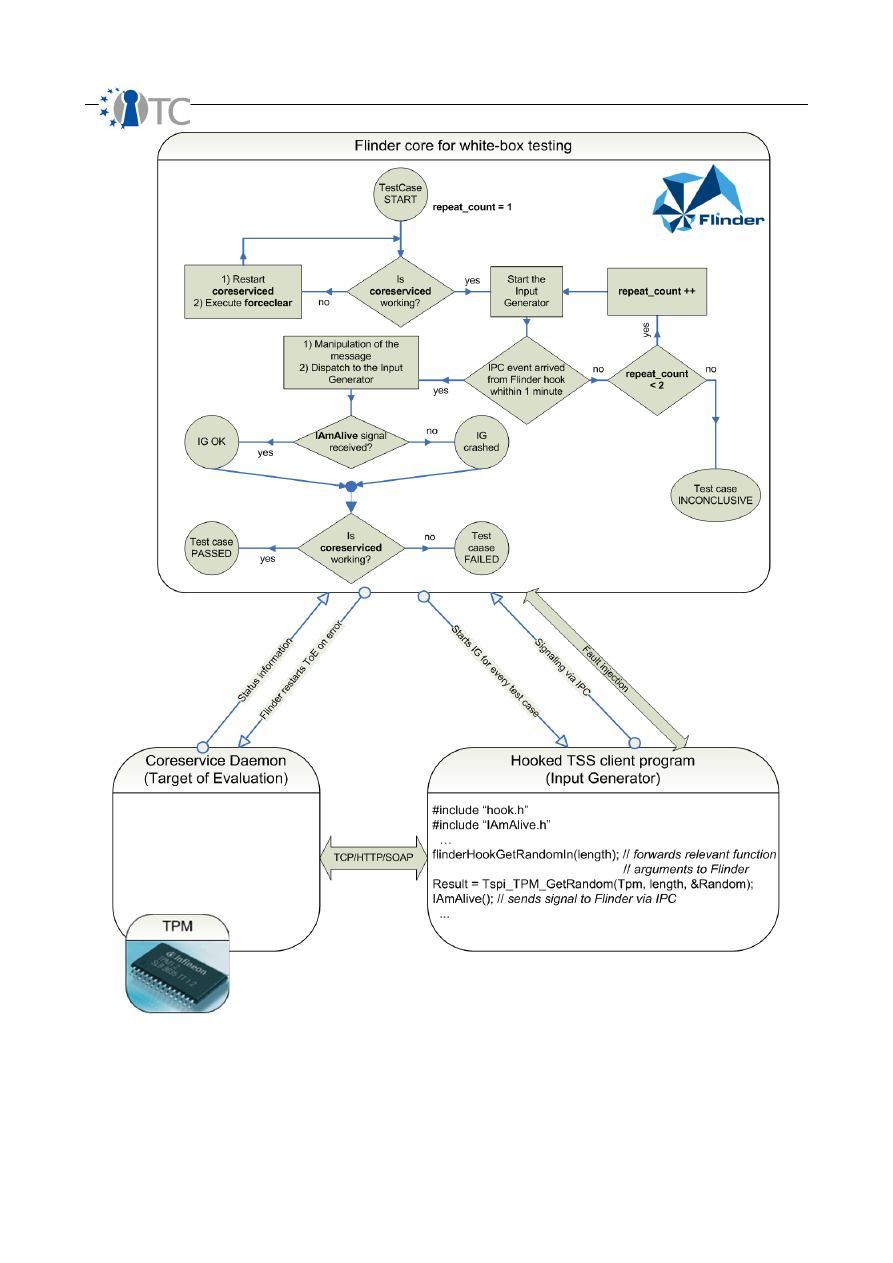

4.3.2.3 White-box testing............................................................................................ 53

4.4 Testing of XEN...................................................................................................... 58

4.5 On-going work and future directions..................................................................... 59

5 Static analysis of targets using AI............................................................................. 60

5.1 Overview.............................................................................................................. 60

5.2 Enhancements and support of Frama-C............................................................... 61

5.3 Research on the static analysis of C++ code...................................................... 64

5.4 Static Analysis of XEN using Coverity.................................................................. 72

5.5 Static Analysis of XEN using Frama-C.................................................................. 80

5.6 On-going work and future directions.................................................................... 87

6 Feasibility study: Xen and Common Criteria EAL5 evaluation.................................. 89

6.1 Overview.............................................................................................................. 89

6.2 Availability of documentation............................................................................... 90

6.3 Xen architecture and immediate implications.......................................................90

6.4 Common Criteria components.............................................................................. 92

6.5 Security Target properties.................................................................................... 95

6.6 Conclusion, discussion.......................................................................................... 97

6.7 Abbreviations........................................................................................................ 99

7 References.............................................................................................................. 100

OpenTC Deliverable 07.02

3/100

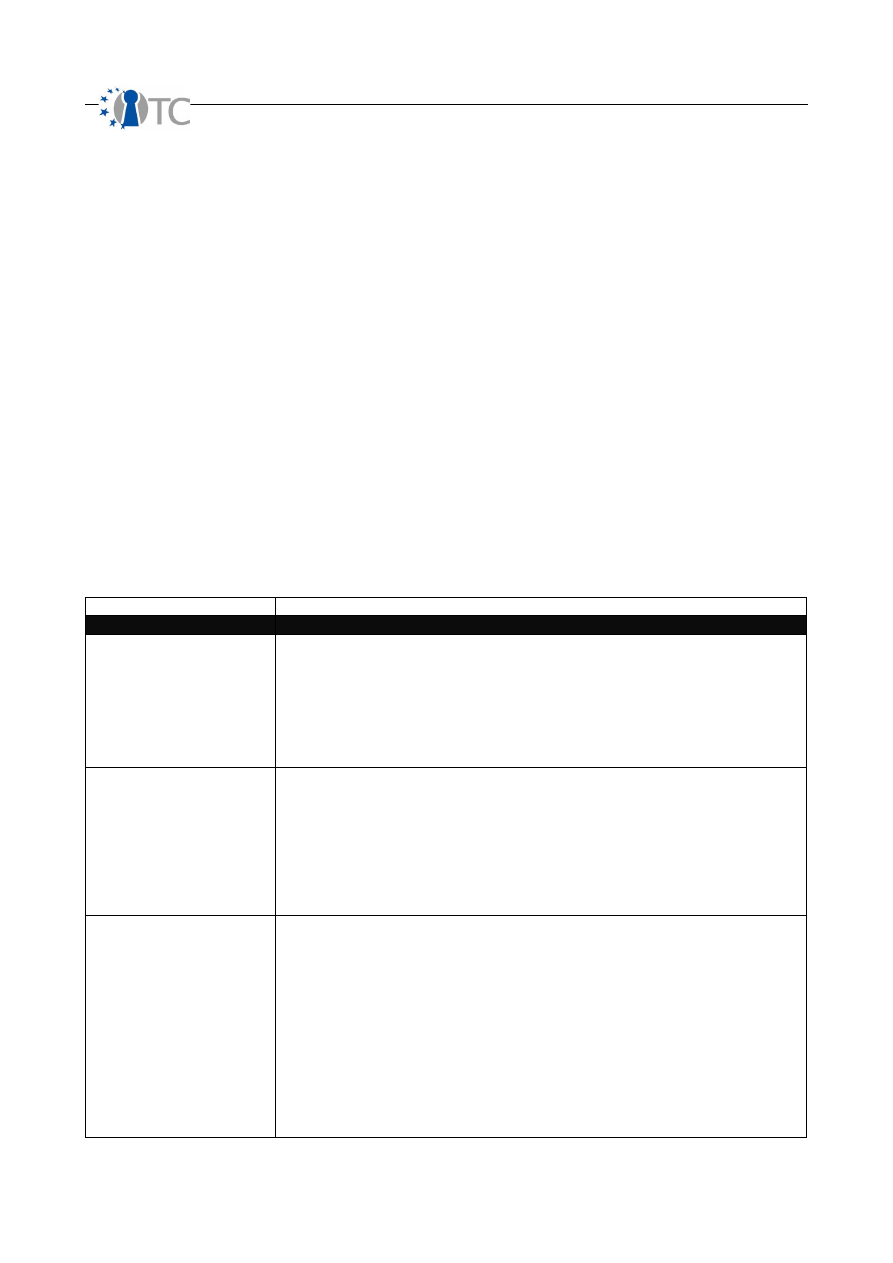

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

List of figures

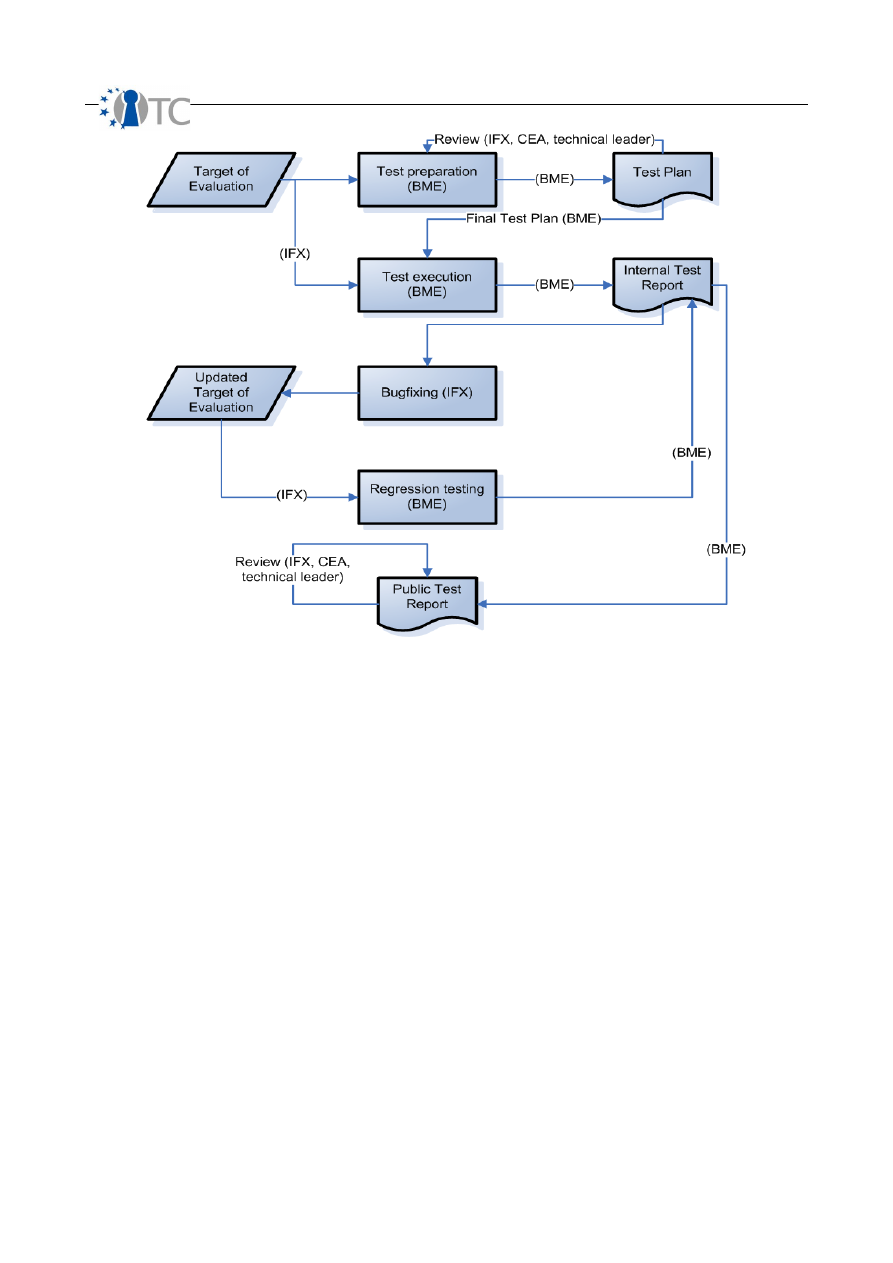

Figure 1: Test process overview................................................................................... 37

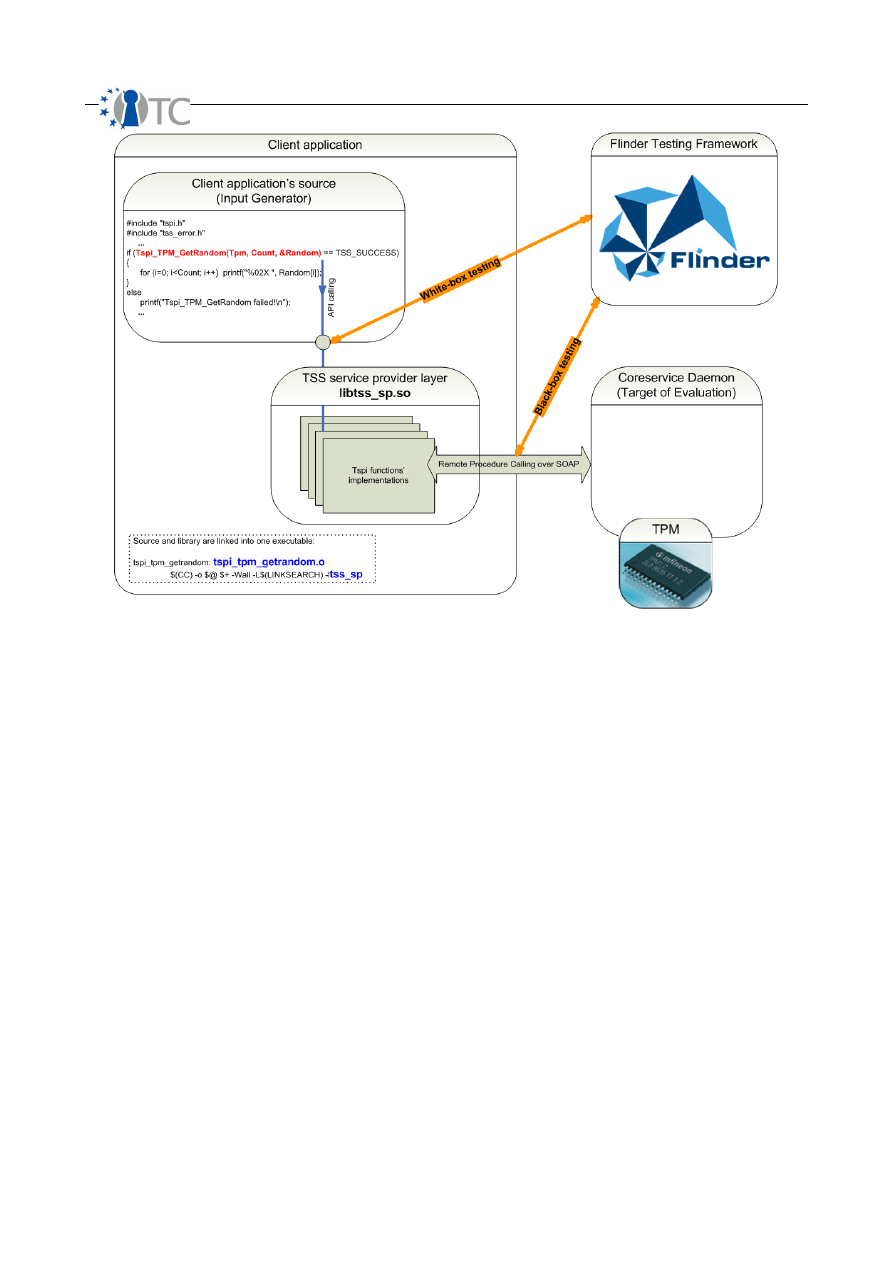

Figure 2: Code snippet before instrumentation............................................................ 41

Figure 3: Modified code................................................................................................ 41

Figure 4: Hooking cycle................................................................................................ 42

Figure 5: SOAP transport level hooking........................................................................ 44

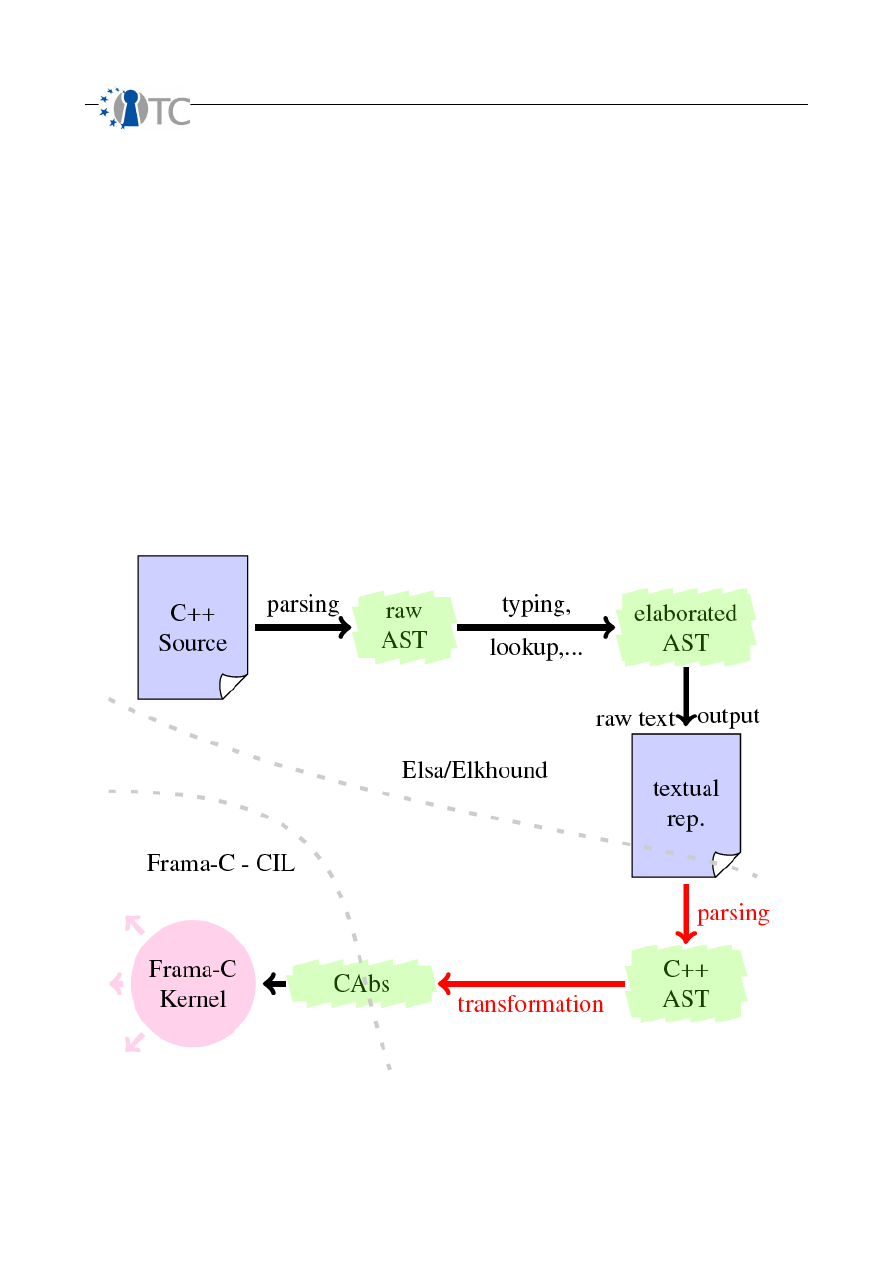

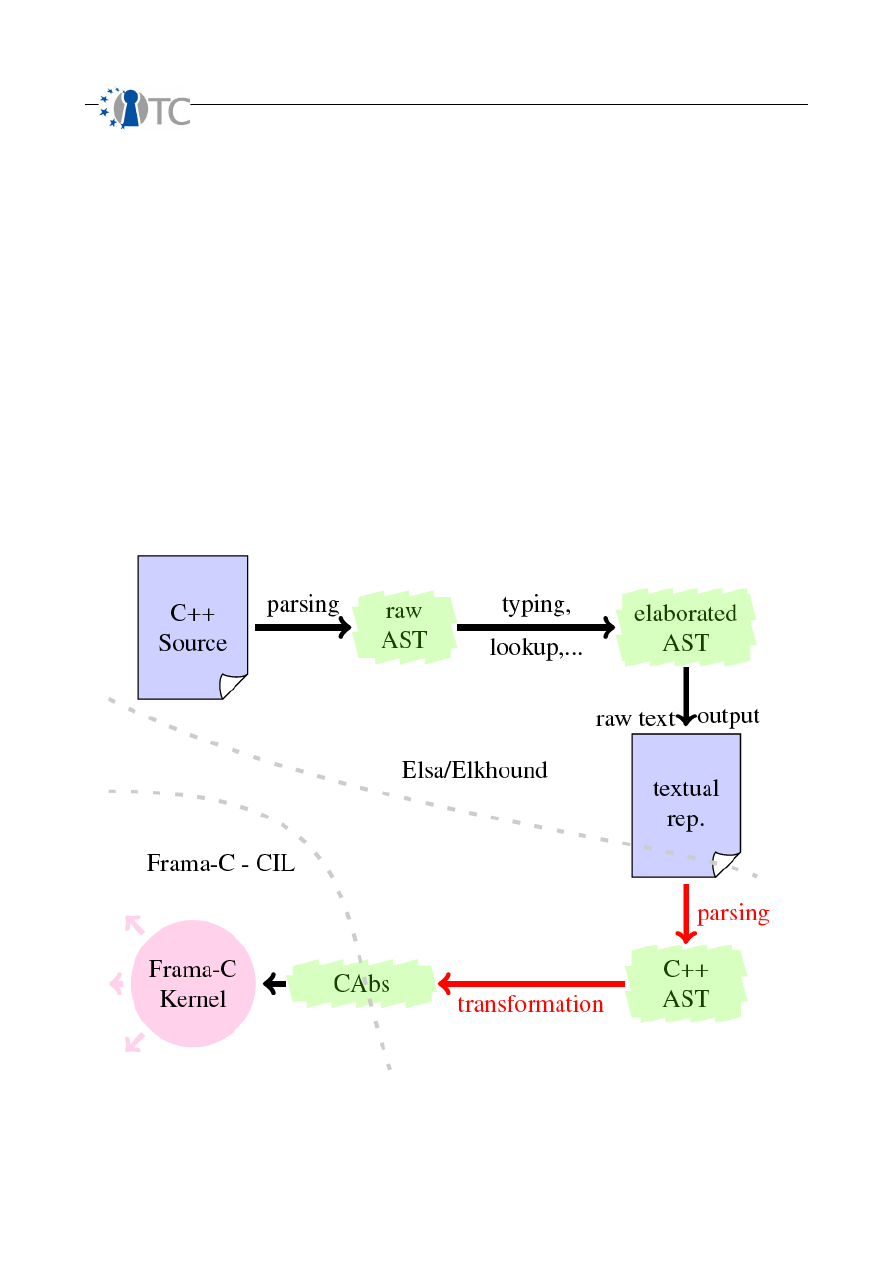

Figure 6: Processing a C++ file in Frama-C.................................................................. 67

Figure 7: Correspondence between the implementation and the ghost model............ 72

OpenTC Deliverable 07.02

4/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

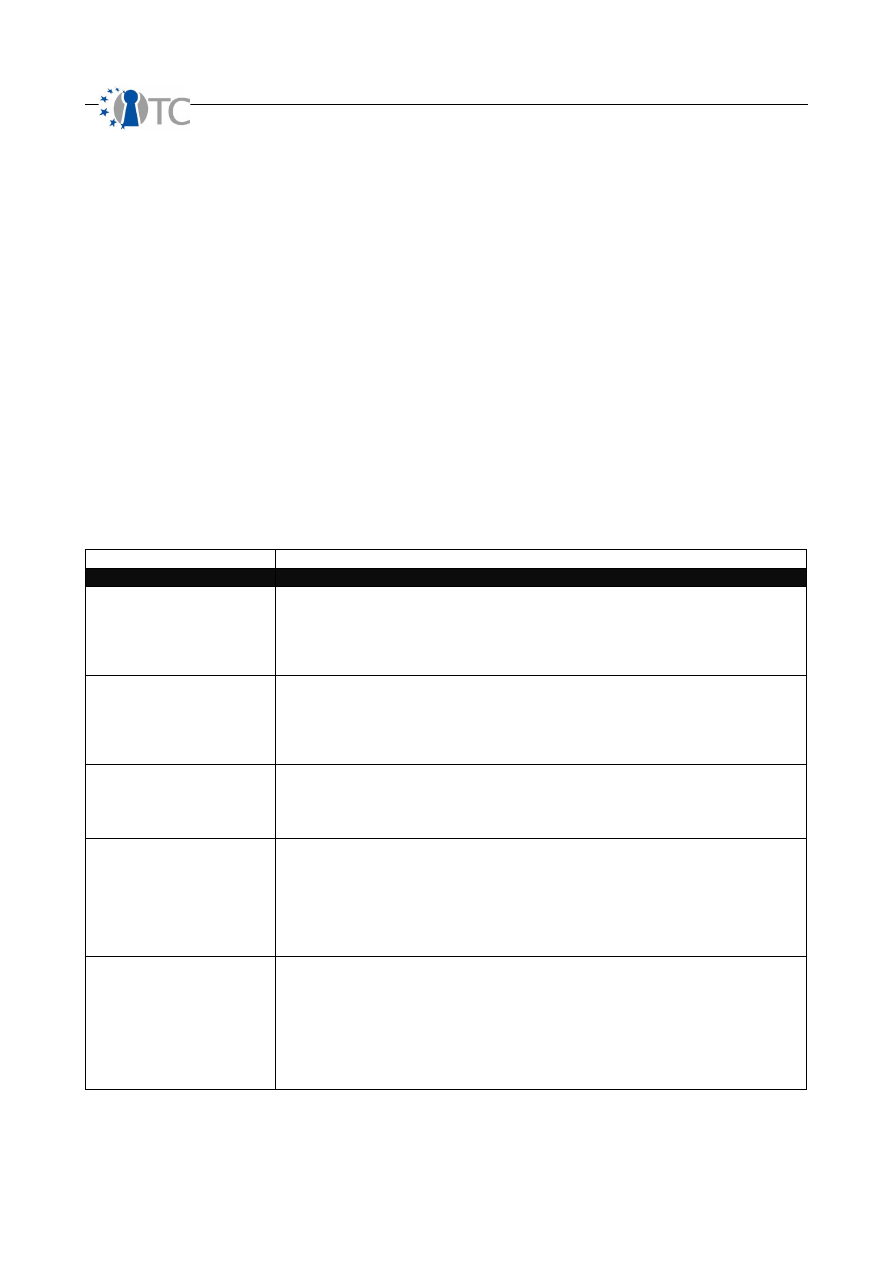

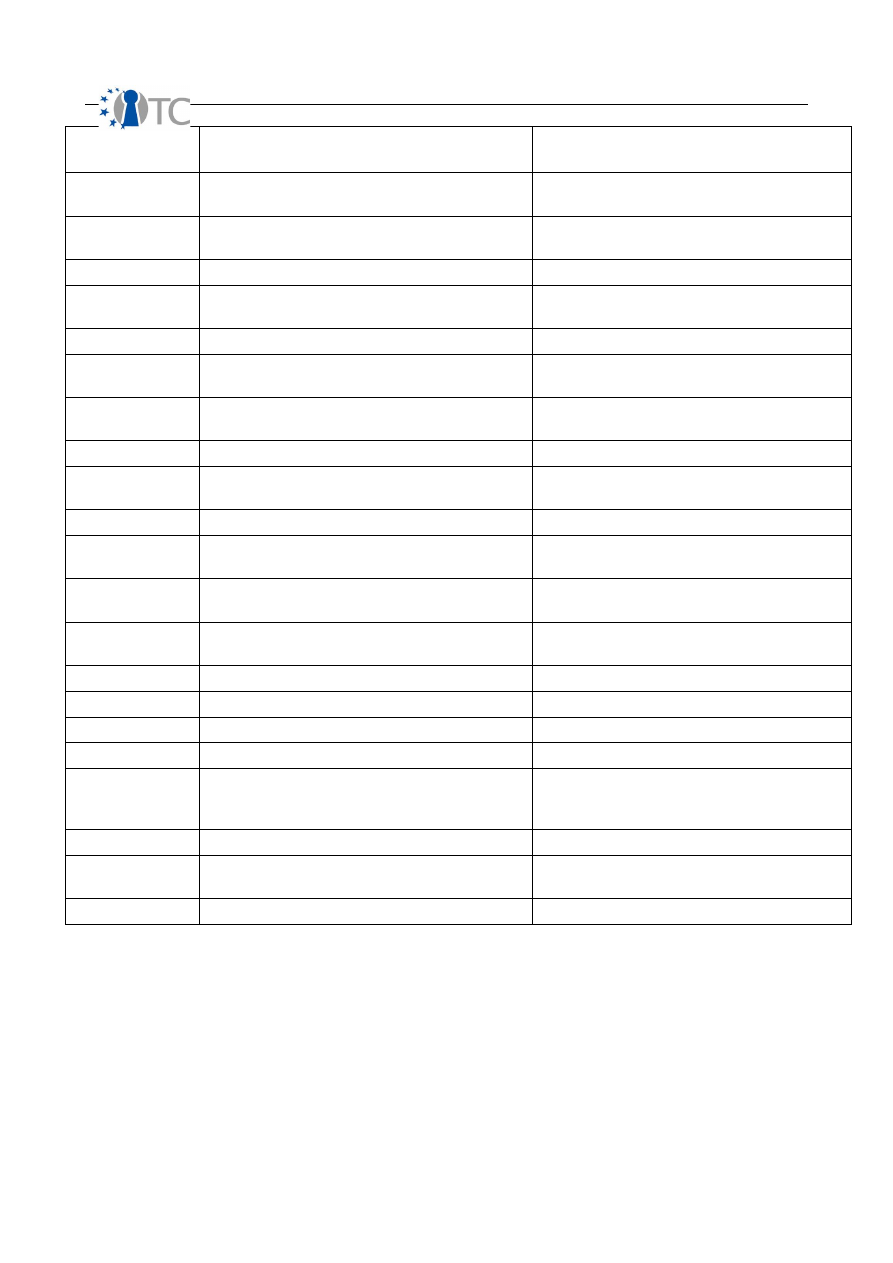

Index of Tables

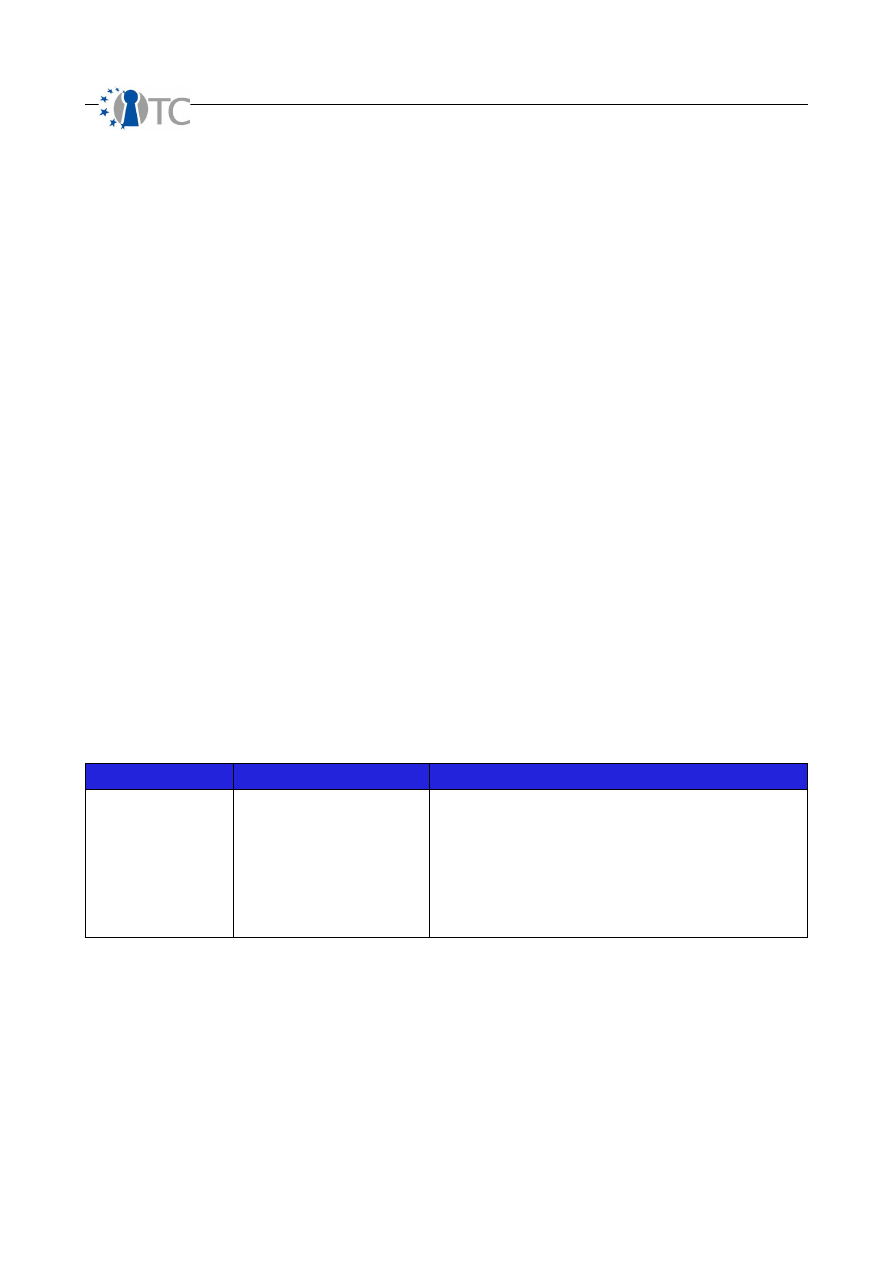

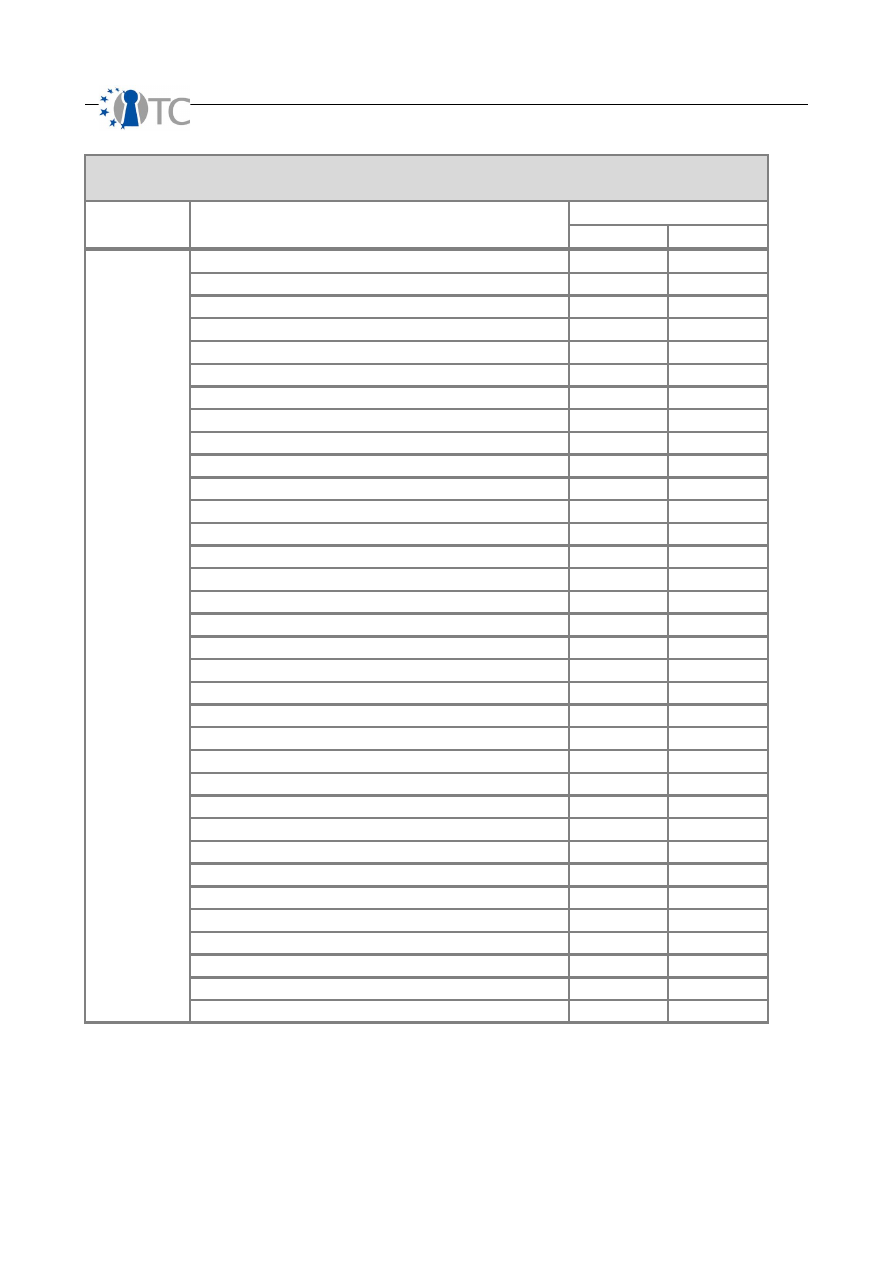

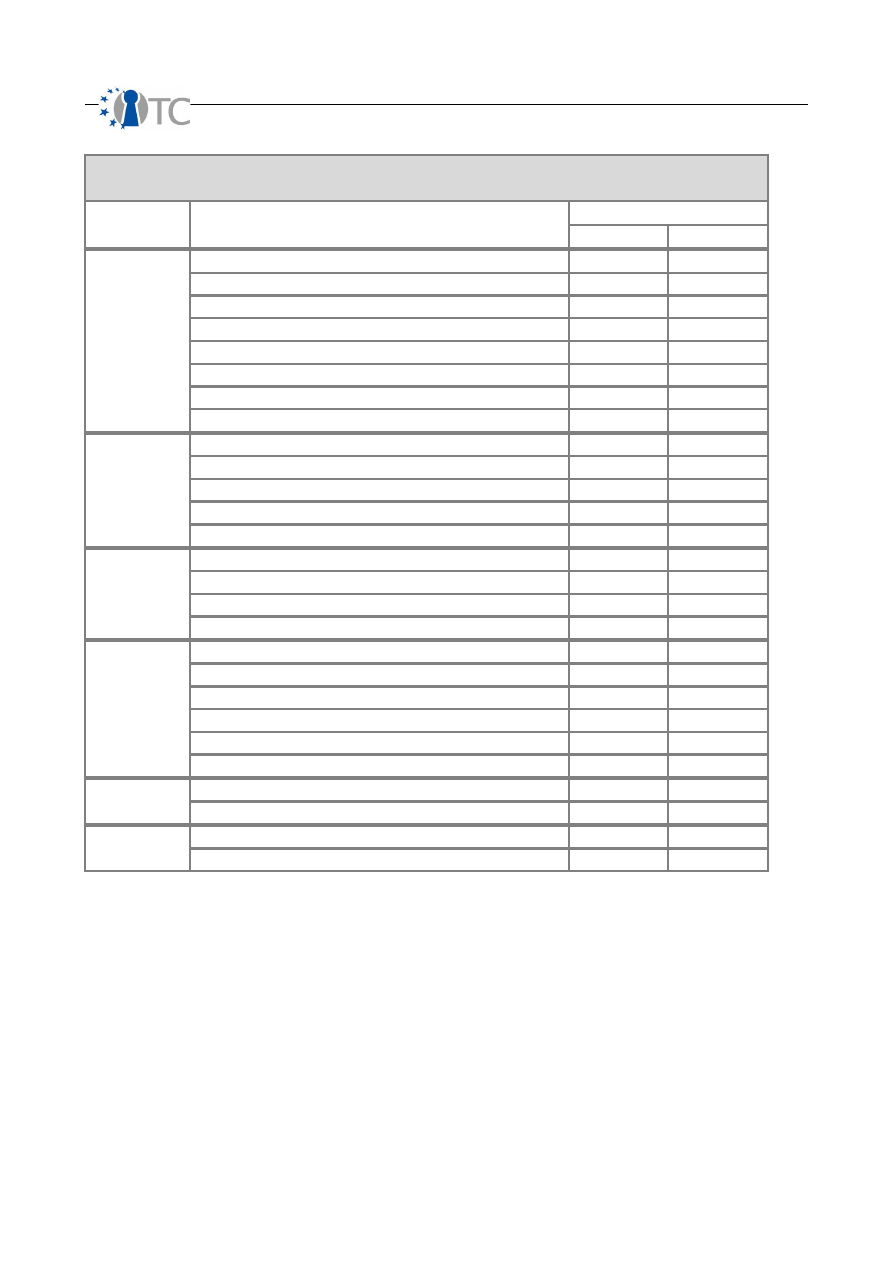

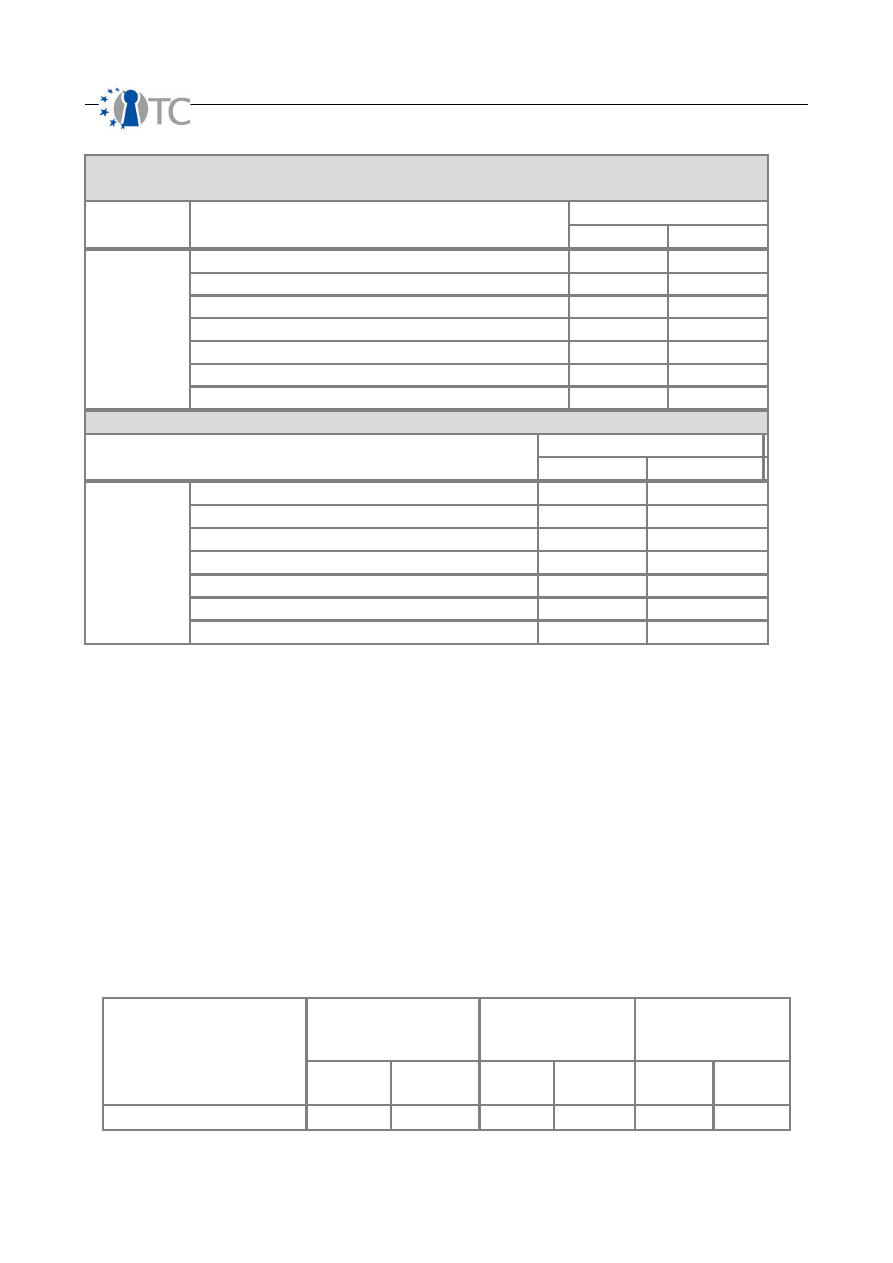

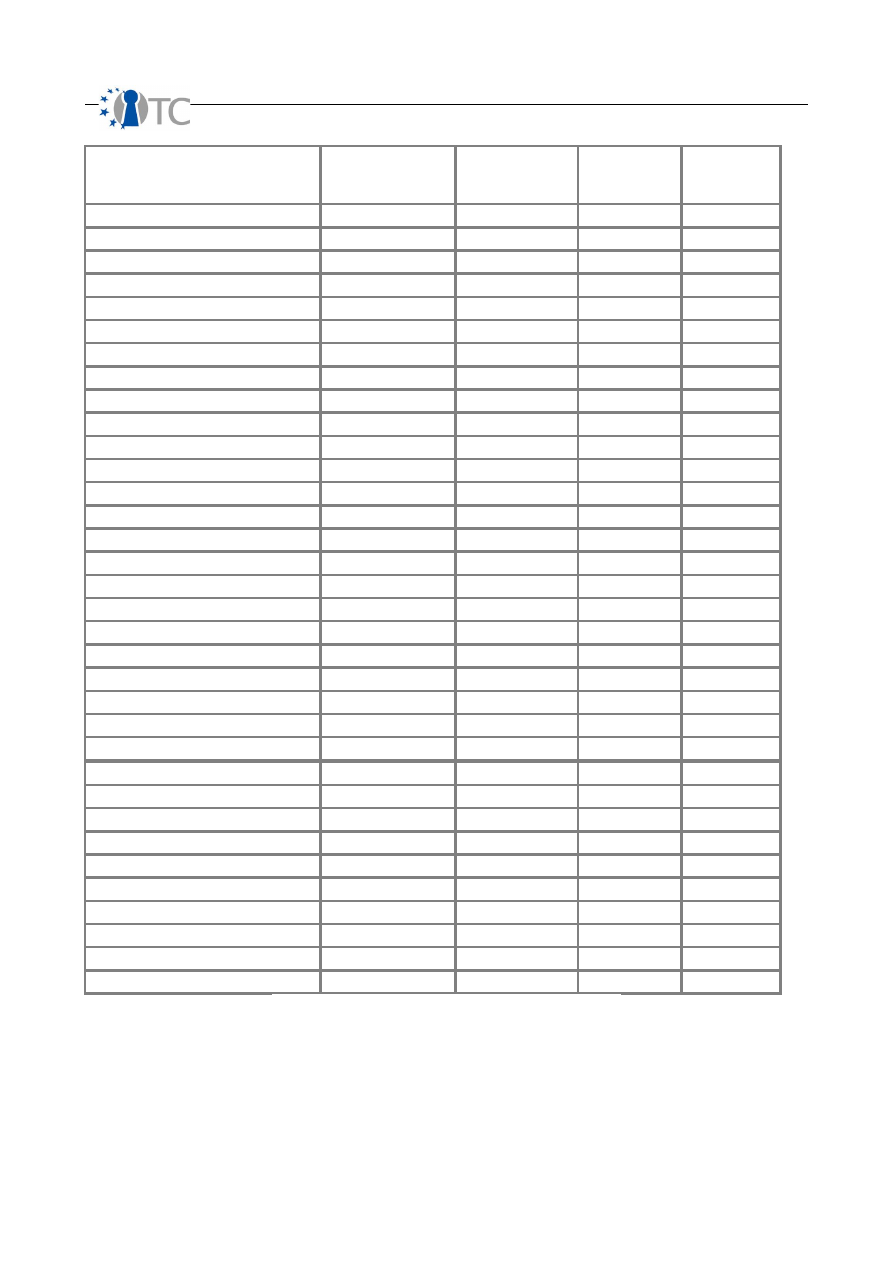

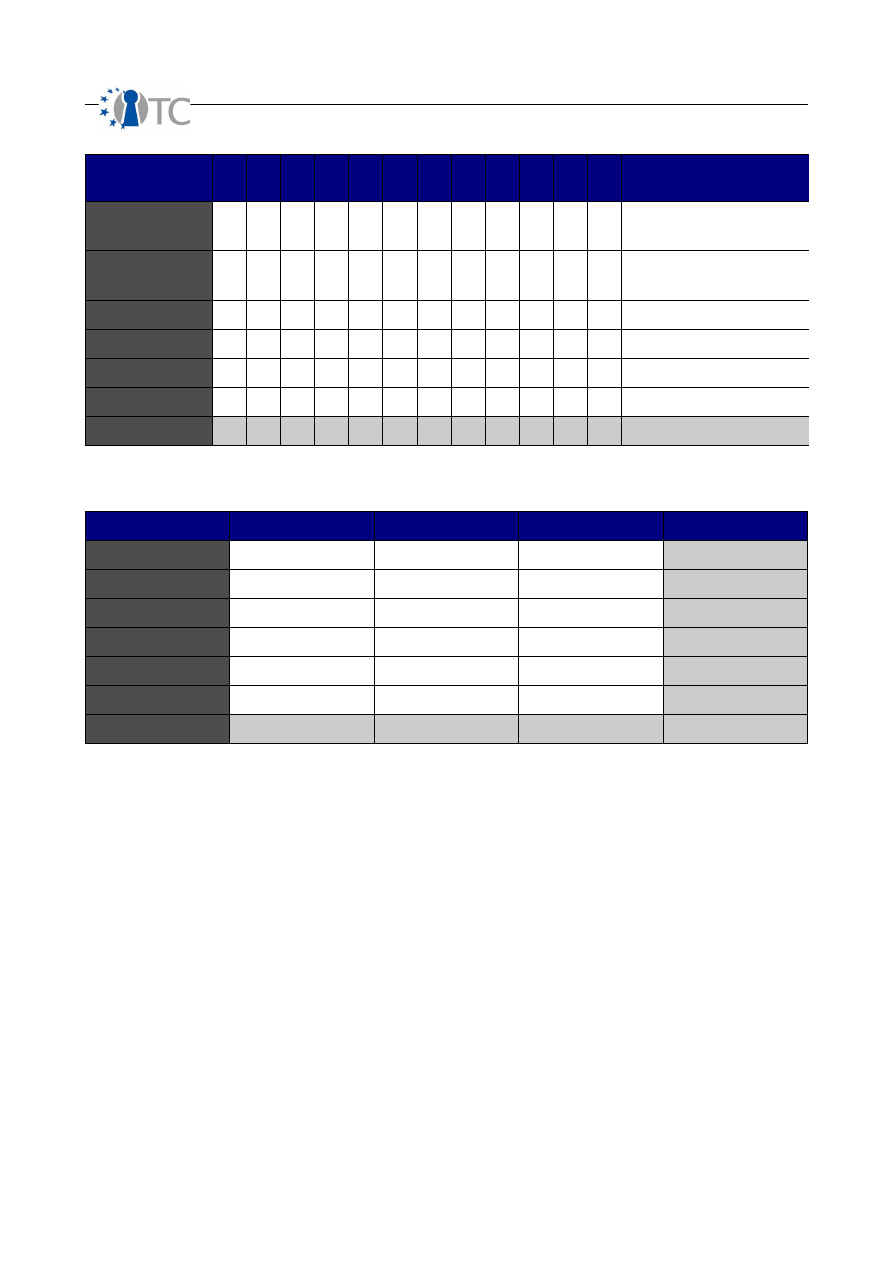

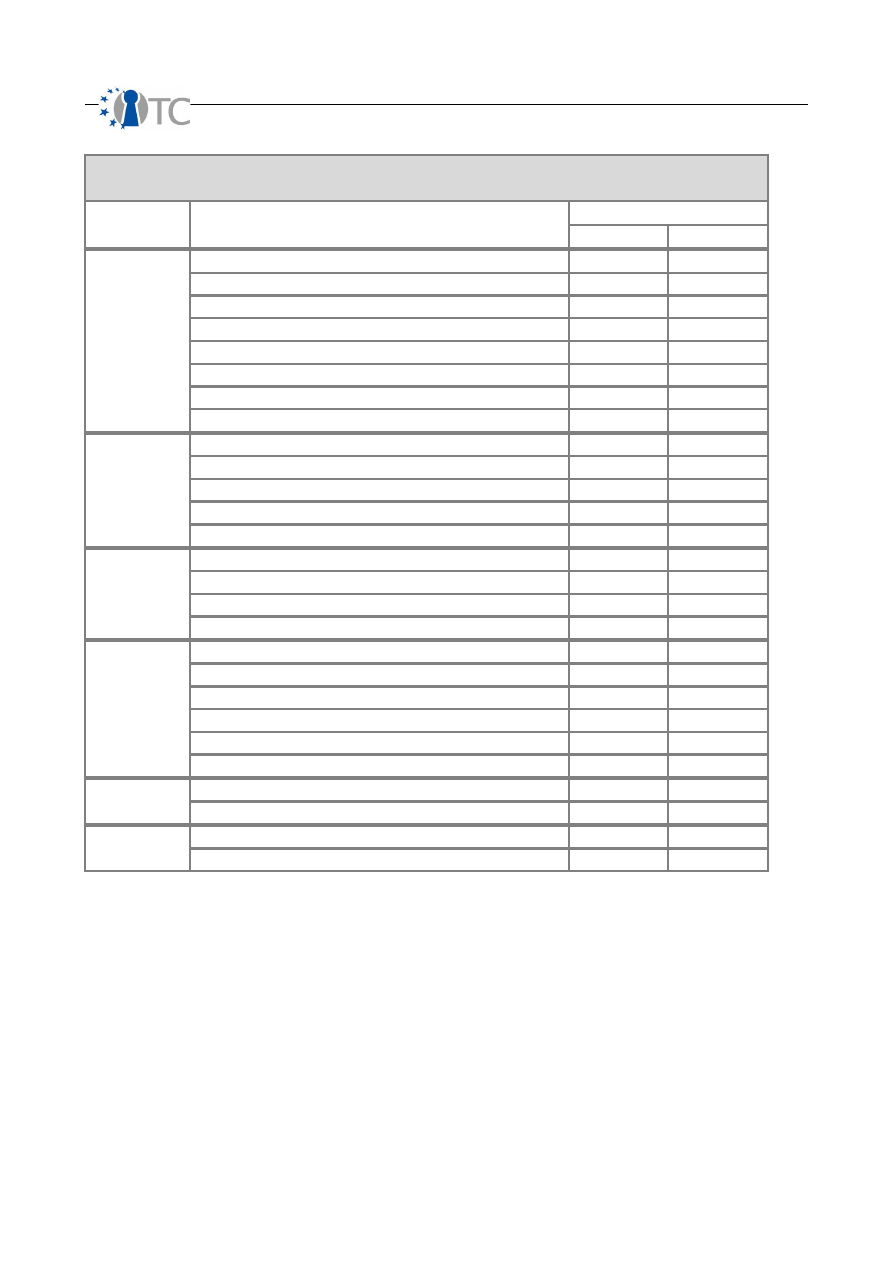

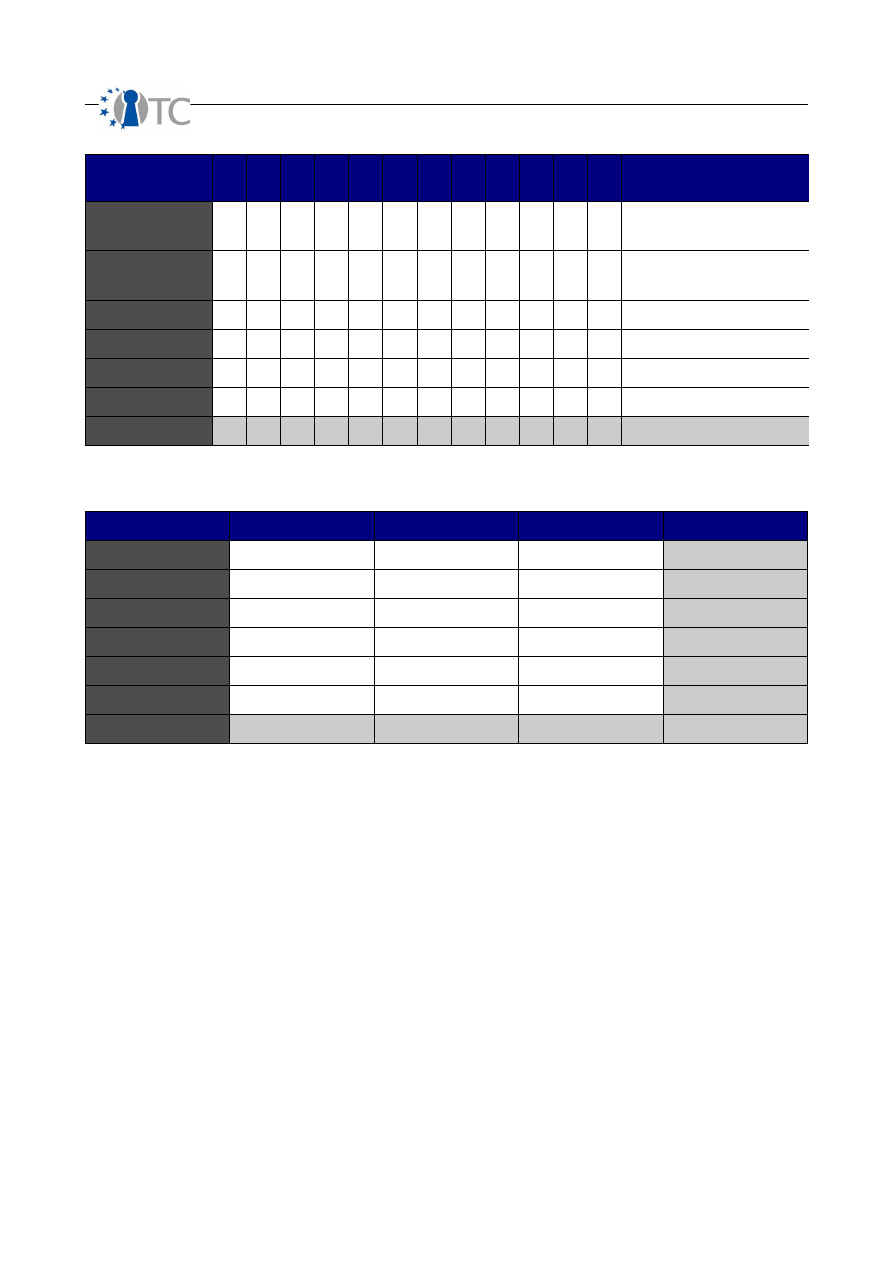

Table 1: Terminology.................................................................................................... 14

Table 2: Calculating OPSEC.......................................................................................... 15

Table 3: Calculating Controls........................................................................................ 17

Table 4: Calculating Security Limitations......................................................................22

Table 5: Calculating Actual Security............................................................................. 24

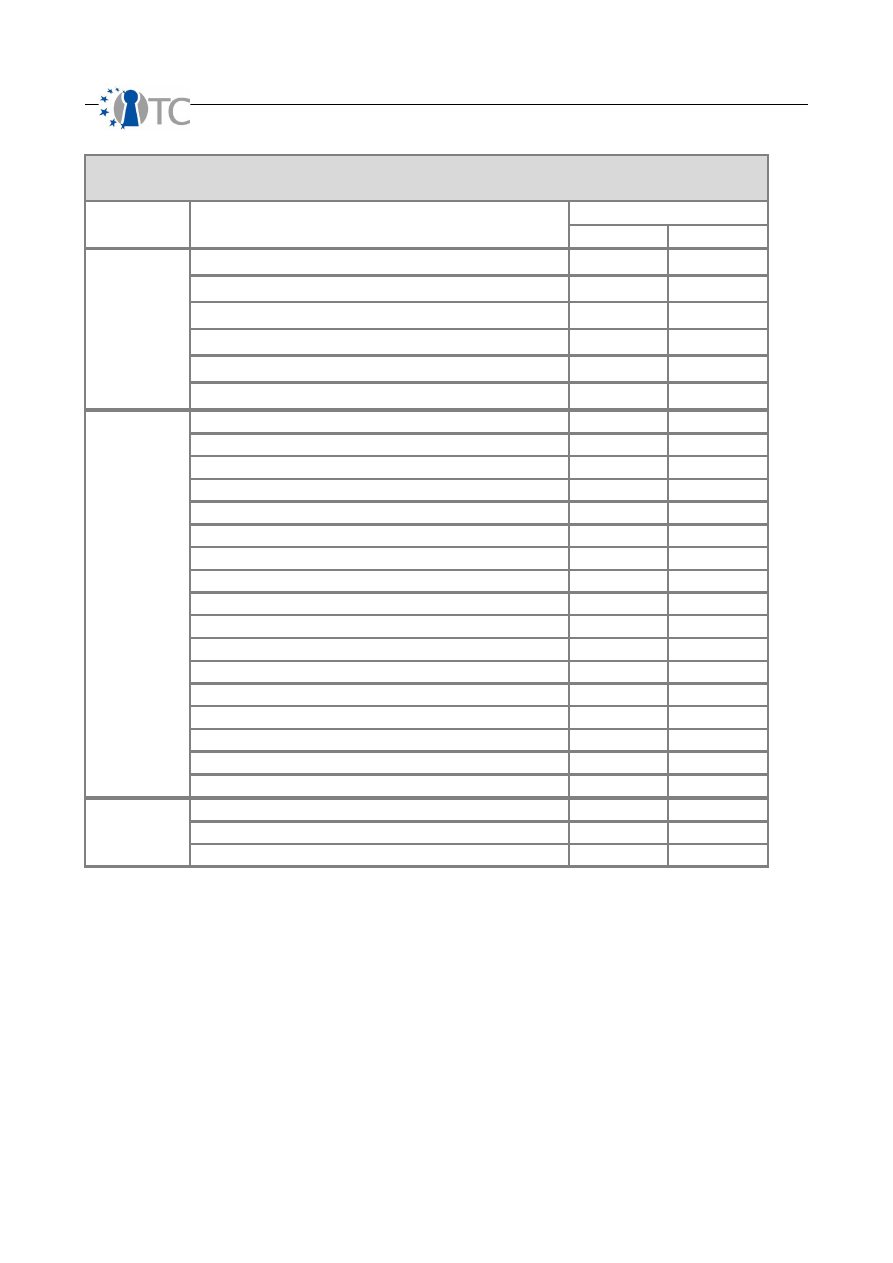

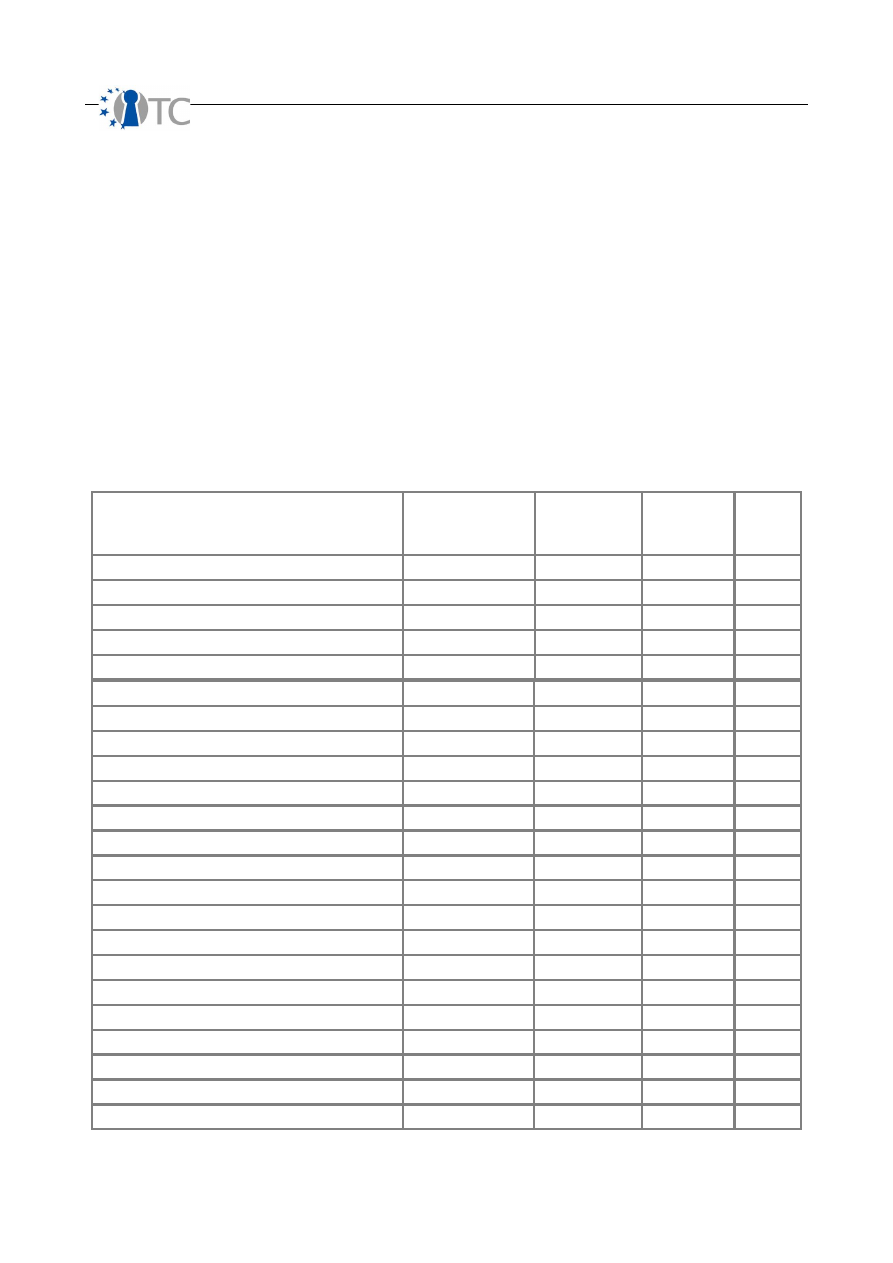

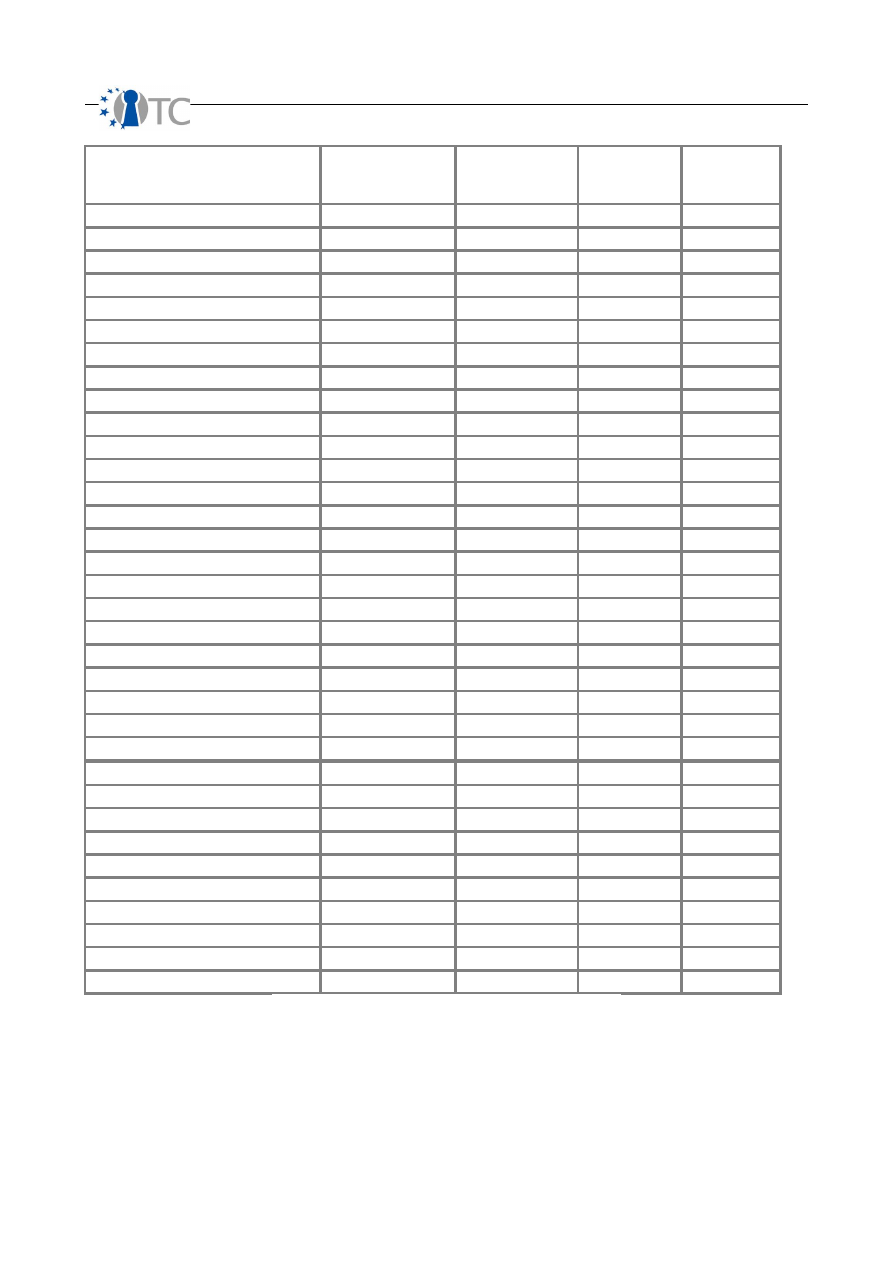

Table 6: Statistics on test categories............................................................................ 50

Table 7: SOAP message testing summary.................................................................... 51

Table 8: White-box testing summary............................................................................ 54

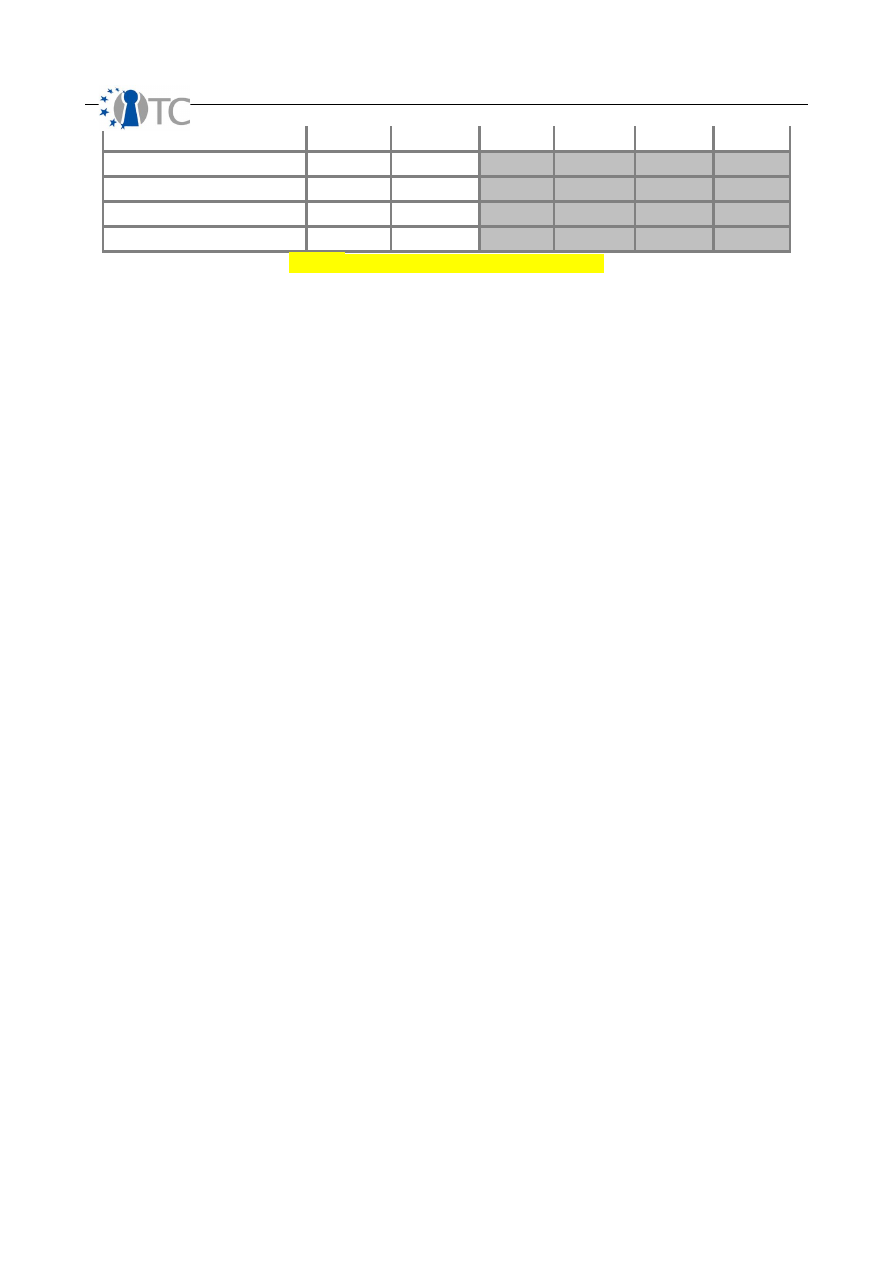

Table 9: Functions returning pointers........................................................................... 75

Table 10: Functions returning numerical values........................................................... 75

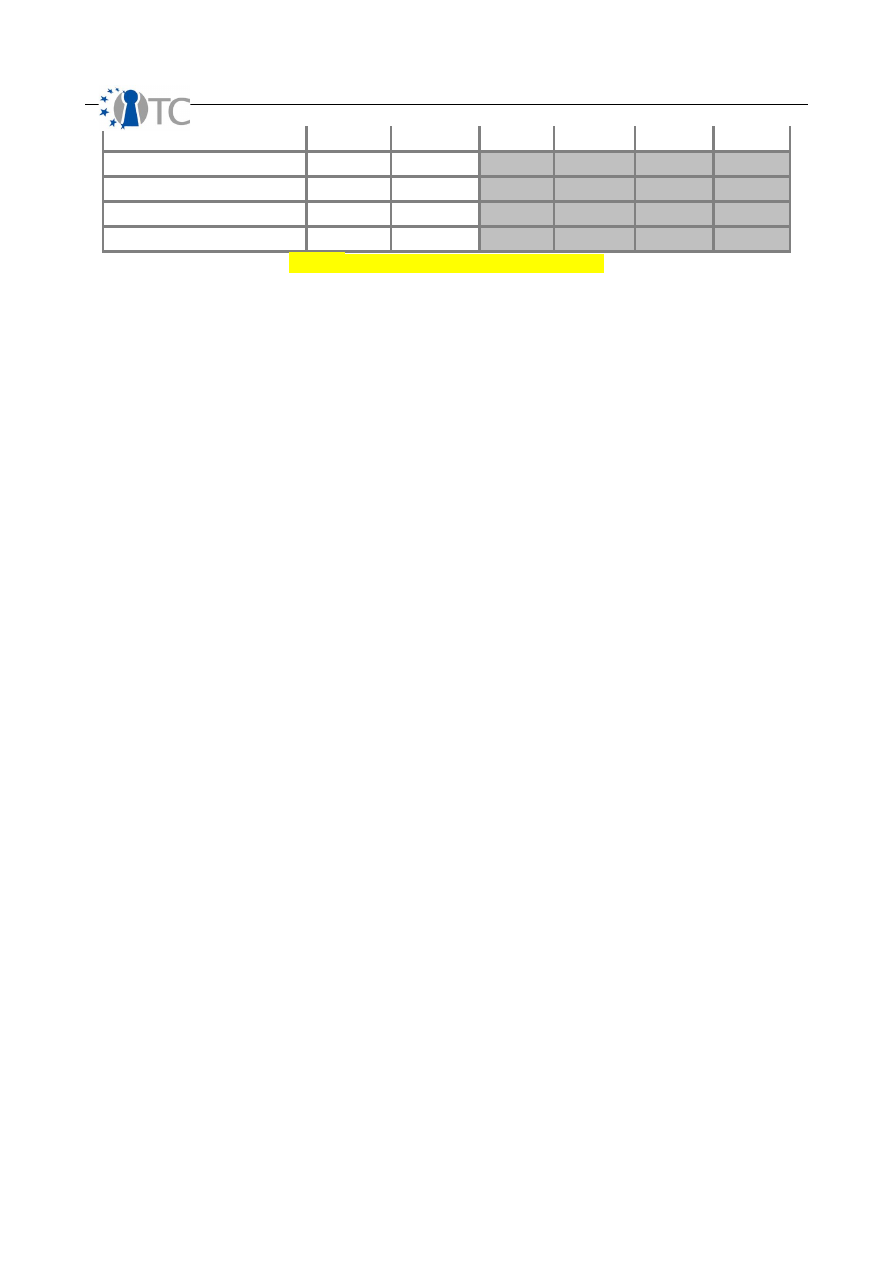

Table 11: Categories of potential bugs......................................................................... 82

Table 12: Bugs per categories...................................................................................... 87

Table 13: Bugs statistics............................................................................................... 87

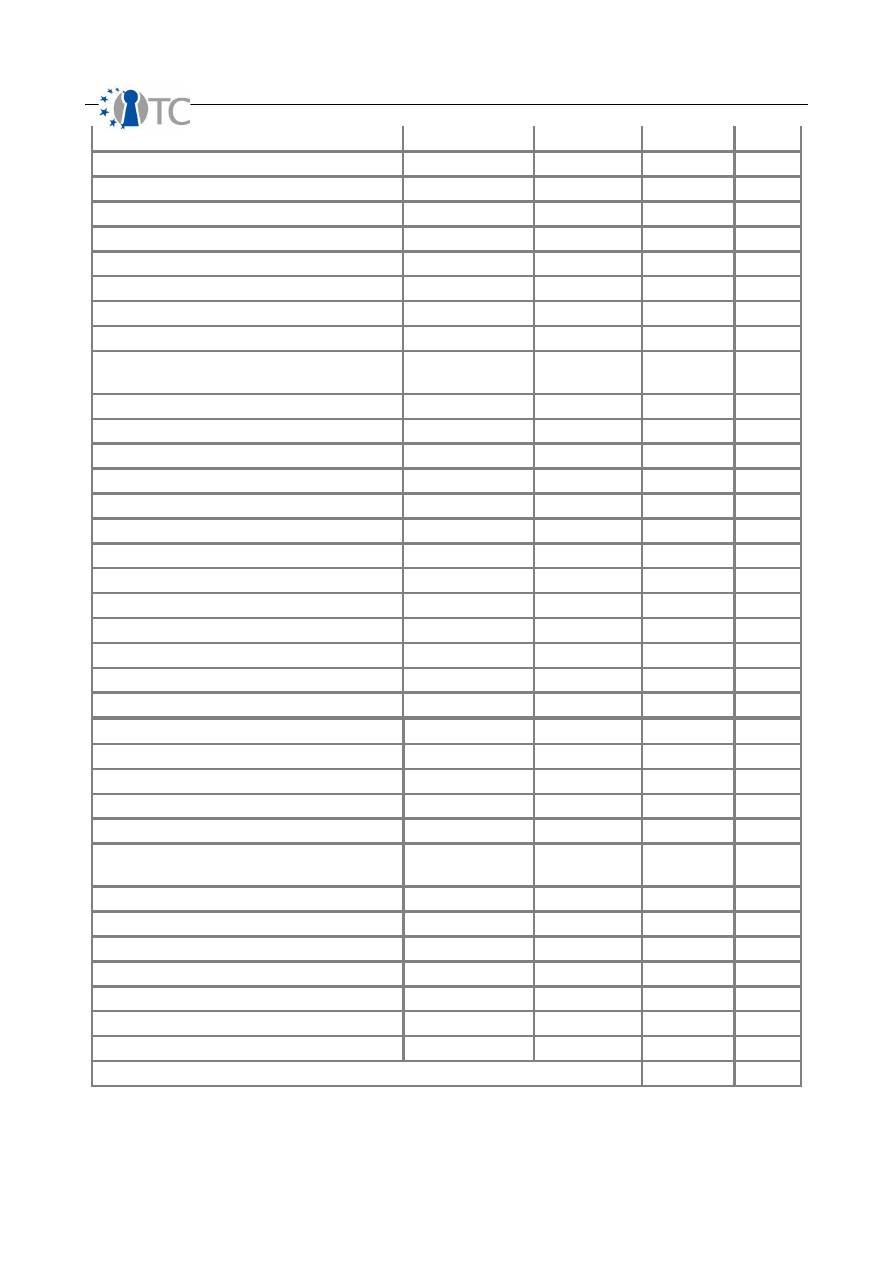

Table 14: Differences between EAL4 and EAL5............................................................ 92

OpenTC Deliverable 07.02

5/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

1 Summary

OpenTC sets out to develop trusted and secure computing systems based on Trusted

Computing hardware and Open Source Software. This deliverable is the main output of

WP07 for the second yearly period, i.e. from November 2006 to October 2007. It

describes the main results of that period as well as work in progress of all partners of

WP07, i.e. of BME, CEA, ISECOM, SUSE and TUS. These results stem from various

research directions, and are directly related to the OS developments and their building

blocks. The main results are the development of testing and verification tools, their

application to OS components and the definition of an Open Source Security testing

Methodology.

In this report we only present the research and development results for that period,

but do not address any project management issues, for which the reader is invited to

open the activities report.

OpenTC Deliverable 07.02

6/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

2 Introduction

2.1 Outline

The initial and still actual main objectives of this WP is to evaluate the reliability and

security of the OS code issued by WP04 (that is a combination of a trusted XEN/L4

virtualisation layer and the Linux kernel) by means of extensive testing and static

analysis, guided by an proper methodology. The aim is to quantify the quality and

safety of this OS code, provide feedback to the developers of this code, and analyze

the possibility to certify (parts of) it at levels EAL5+.

Indeed, operating systems form a particular class of applications in terms of

development process and code that need particular adaptations in terms of

methodology, methods and tools. Starting from state of the art V&V techniques, we

studied how to analyze and test the OS code with a maximum of precision.

WP07 has done significant progress toward the objectives set initially. All support

tasks are focussed on the main objectives above and decomposed it into simpler

objectives. This has been done in several ways.

BME has improved the testing methodology by the addition of trust and security

metrics. Research has been done on how these metrics are applied to applications,

especially the WP07 targets. Also the complexity of the targets became a subject of

investigations, between several partners, aiming at understanding why the

hypervisors are quite difficult targets in terms of V&V.

BME has tested intensively the TSS, by running 135 000 test cases, that revealed

8

weaknesses and 1 remotely exploitable buffer overflow

. All of these have been

corrected and non-regression tests have confirmed this. Plans for testing the XEN core

hypercalls are made.

WP07 has analysed statically the XEN core, especially focussing on five hypercalls

designated by CUCL as the most critical. The Coverity Prevent tool has been used by

TUS and has produced over

300 potential bugs on these hypercalls and a total

of 1900 warnings on XEN versions 3.0.3, 3.0.4 and 3.1

. The Frama-C prototype

has also been applied by CEA to the same hypercalls of

XEN 3.0.3 and has

produced 170 potential errors amongst which 17 true errors.

These lists of

potential bugs are being filtered and pruned, to keep only real errors.

WP07 has also developed several tools: the Frama-C tool has been improved by CEA to

improve the precision and efficiency of the analyses and correct bugs and weaknesses

discovered along its usage. Another tool is being developed by TUS to analyse the

severity of potential bugs and perform slicings of the code to find out what portions of

code are influenced by given errors or variables.

ISECOM has developed the security testing methodology for security testing within the

OpenTC security testing activities as well as defined types of test activities and report

tables. After the definitions have been set during the first project year, WP07 has

studied how to quantify Trust and Security in a measurable manner. WP07 has also

OpenTC Deliverable 07.02

7/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

designed and implemented a security complexity measurement tool, SCARE, for static

C source code. WP07 has also studied means for teaching Trust as in rules applicable

to computer heuristics.

Certification is done on a given version of some product upon request. CC certification

has already been done on some OS, especially when they are safety or mission critical

(for instance, RTOS). But when dealing with open-source software, certification is

much harder. SUSE has investigated remaining CC criteria left after D07.1 and has

concluded about the impossibility to certify the entire XEN hypervisor due to the non-

observance of CC design criteria.

In co-operation with ISECOM and CEA, HPLB participated in investigation on additional

quantitative metrics for the OSSTMM and in the introductory training for this

methodology. HPLB co-defined the functional coverage and test set for the automated

black- and white box testing of XEN source code and modules. In co-operation with the

WP07 leader CEA and WP04 partner CUCL, HPLB contributed to a classification results

of automated testing to improve their the further development of the XEN code base.

WP07 has also followed closely the OS developments done by the WP04 and WP06

partners, to understand the nature of the developments and ensure that the WP07

support activities remain helpful to these developments.

2.2 Targets analyses

The WP07 activities provide support to the development activities of WP05 and WP06

and therefore has investigated which targets are important to address and support. It

was considered since the beginning of the project, that stable components are to be

addressed first, followed by components developed along OpenTC. It was also

considered that components are to be considered from the bottom layers (close to the

hardware) to the upper layer (central OS components). Hardware components, such as

the TPM or CPU are out of the scope of this project, as we deal with software items

only.

During the first year we have considered that the virtualization layers, namely XEN

and L4/Fiasco, are quite stable and merit that we V&V them. Below these, we find

BIOSes and boot loaders, that are critical components too, but that are not always

open source. We will address the bootloader OSLO during year 3. On top of the central

security TPM component, OpenTC has developed in WP03 the TSS, which has been

another target for V&V.

Year 2 has therefore concentrated on XEN and the TSS, and year 3 will consider parts

of the other items mentioned above.

2.3 Structure of this report

This report is structured along the technical research areas, presenting them in details

and giving the reader an insight into the techniques, and also presenting the main

results. Some detailed results, particularly those related to static analysis, are moved

to appendixes.

Whenever possible, each research task will be described using the following same

model:

•

Overview o

f the task, description of its aims and relationship with the original

OpenTC Deliverable 07.02

8/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

plans of WP07 and its SWP. This introduces the task and binds it to the first

workplan (see annex 1 of the OpenTC contract).

•

Technical background

: this contains basic technical elements for the reader

to understand the results. Indeed, some tasks are quite new, and some

material is given for the reader to understand where the progress lies.

•

A detailed description of the

research done

: this is the core part, highlighting

the main technical results.

•

On-going work

, to give some perspectives on what will be done during the

next project period and what research directions will be taken in that time

frame.

OpenTC Deliverable 07.02

9/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

3 Development of security and trust metrics

3.1 Overview

In the creation of a trusted system, one must trust the components of the system,

trust the operation of those components in an interactive scenario with each other and

with the user, and trust the integrity of the components alone or together operating

within a specific environment. No methodology has previously existed which could

allow this. No metrics have existed to describe this or allow for one system to be

compared to another or for operation within a particular environment.

Within OpenTC, ISECOM has been studying and defining the tests and metrics required

to accomplish this task. The first year, ISECOM devoted research to the completion of

a full security audit and unbiased metrics to facilitate the scientific, operational

security testing of OpenTC components as well as define which components must be

tested. The second year has focused on research towards defining Trust more

completely, trust tests, integrity tests, trust metrics, and security complexity metrics

of static source code.

As of this moment, ISECOM has published the penultimate draft of the Open Source

Security Testing Methodology Manual (OSSTMM) 3 which comprises of the full

requirements for completing an operational security audit and creating unbiased

metrics, the Source Code Analysis Risk Evaluation (SCARE) metric and tool for

calculating operational security complexity (aka “how complicated is it to secure this

software and how volatile is it?”) for the C programming language as well as the

means to apply it to others, tested three versions of the XEN source code, and

published a draft on defining and measuring Trust.

However, what we have completed is a small portion of what we have done. For this

year ISECOM has researched the following:

●

We ran various studies and seminars regarding the security testing metrics and

defined it mathematically to further its application.

●

We have researched an better Trust definition, the elements that form Trust and

a metric to represent it as an unambiguous amount.

●

We have mapped test types as required to run against the TC system to

measure its level of trust and security.

●

We have defined a process for measuring security complexity metrics in source

code, and have applied it to the C programming language.

●

A tool has been written to perform the tasks of the security complexity metric

and is being tested.

●

The progress of security complexity in XEN by measuring 3 versions of the

source code under SCARE.

●

The progress of security complexity in the Linux Kernel and how to accurately

measure it under SCARE.

3.2 Technical background

“Security Testing” is an umbrella term to encompass all forms and styles of security

tests from the intrusion to the hands-on audit. The application of the methodology

from this manual will not deter from the chosen type of testing.

OpenTC Deliverable 07.02

10/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Practical implementation of this methodology requires defining individual testing

practices to meet the requirements defined here. This means that even when

following this methodology, the application of it, the technique, will reflect the type of

test one has chosen to do. However, regardless if the test type is blind, double blind,

gray box, double gray box, tandem, or reversal, the test must be indicative of the

target's ability to operate adequately.

Why test operations? Unfortunately, not everything works as configured. Not

everyone behaves as trained. Therefore the truth of configuration and training is in

the resulting operations.

This security testing methodology is designed on the principle of verifying the security

of operations. While it may not always test processes and policy directly, a successful

test of operations will allow for analysis of both direct and indirect data to study the

gap between operations and processes. This will show the size of the rift between

what management expects of operations from the processes they developed and what

is really happening. More simply put, the auditor's goal is to answer: how do current

operations work and how do they work differently from how management thinks they

work?

The security testing process is a discrete event test of a dynamic, stochastic system.

The target is a system, a collection of interacting and co-dependent processes, which

is also influenced by the stochastic environment it exists in. Being stochastic means

the behaviour of events in a system cannot be determined because the next

environmental state can only be partially but not fully determined by the previous

state. The system contains a finite, possibly extremely large, number of variables and

each change in variable presents an event and a change in state. Since the

environment is stochastic, there is an element of randomness and there is no means

for predetermining with certainty how all the variables will affect the system state. A

discrete test examines these states within the dynamic system at particular time

intervals. Monitoring operations in a continuous manner, as opposed to a discrete

one, would provide far too much information to analyse. Nor may it even be possible.

Even continuous tests however, require tracking each state in reference to time in

order to be analysed correctly.

A point of note is the extensive research available on change control for processes to

limit the amount of indeterminable events in a stochastic system. The auditor will

often attempt to exceed the constraints of change control and present “what if”

scenarios which the change control implementers may not have considered. A

thorough understanding of change control is essential for any auditor.

An operational security test therefore requires a thorough understanding of the testing

process, choosing the correct the type of test, recognizing the test channels and

vectors, defining the scope according to the correct index, and applying the

methodology properly.

Strangely, nowhere besides in security testing is the echo process considered the

defacto test. Like yelling into a cavernous area and awaiting the response, the echo

process requires agitating and then monitoring emanations from the target for

indicators of a particular state (secure or insecure, vulnerable or protected, on or off,

left or right). The echo process is of the cause and effect type of verification. The

OpenTC Deliverable 07.02

11/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

auditor makes the cause and analyzes the effect from the target. It is strange that

this is the primary means of testing something as critical as security because although

it makes for a very fast test, it is also highly prone to errors, some of which may be

devastating to the target. Consider that in a security test using the echo process it

means that should the target not respond then it is secure. Following that logic, a

target need only be not interactive to give the appearance of security.

If hospitals used the echo process to determine the health of an individual, it would

rarely help people but at least the waiting room time would be very short. Hospitals

however, like most other scientific industries, apply the Four Point Process which

includes a function of the echo process called the “interaction” as just one of the four

tests where the other three are: the “inquest” of reading emanations from the patient

(such as pulse, blood pressure, and brain waves), the “intervention” of changing and

stressing operating conditions (changing the patient's homeostasis, behavior, routine,

or comfort level), and the “induction” of examining the environment as to how it

affected the target (analyzing what the patient has interacted with: touched, eaten,

drank, breathed in, etc.). However in security testing, the majority of tests are of the

echo process alone. There is so much information lost in such one-dimensional testing

we should be thankful that the healthcare industry has evolved past just the “Does it

hurt if I do this?” manner of diagnosis.

The security test process in this methodology does not recommend the echo process

alone for reliable results. While the echo process may be used for certain, particular

tests where the error margin is small and the increased efficiency allows for time to be

moved to other time-intensive techniques, it is not recommended for tests outside of a

deterministic environment. The auditor must choose carefully when and under what

conditions to apply the echo process.

While many testing processes exist, the Four Point Process for security testing is

designed for optimum efficiency, accuracy, and thoroughness to assure test validity

and minimize errors in uncontrolled and stochatic environments. It is optimized for

real-world test scenarios outside of the lab. And while it also uses agitation, it differs

from the echo process in that it allows for determining more than one cause per effect

and more than one effect per cause.

The Four Points

1.

Induction

: establishing principle truths about the target from environmental

laws and facts.

2.

Inquest

: investigating target emanations.

3.

Interaction:

like

echo tests, standard and non-standard interactions with the

target to trigger responses.

4.

Intervention

: changing resource interactions with the target or between

targets.

Point 1, the Induction Phase

The auditor determines factual principles regarding the target from the environment

where the target resides. As the target will be influenced by its environment, its

behavior will be determinable within this influence. Where the target is not influenced

by its environment but should exists an anomaly to be understood.

Point 2, the Inquest Phase

OpenTC Deliverable 07.02

12/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

The auditor investigates the emanations from the target and any tracks or indicators

of those emanations. A system or process will generally leave a signature of its

existence through interactions with its environment.

Point 3, the Interaction Phase

The auditor will inquirey or agitate the target to trigger responses for analysis.

Point 4, the Intervention Phase

The auditor will intervene with the resources the target requires from its environment

or from its interactions with other targets to understand the extremes under which it

can continue operating adequately.

An audit according to this methodology will require that the full 4 Point Process

security tests are completed thoroughly. It will not be possible to follow the full

methodology with just the Interaction tests.

3.3 Security Metrics

The completion of a thorough security audit has the advantage of providing accurate

metrics on the state of security. The less thorough the audit means a less accurate

overall metric. Alternately, lesser skilled auditors and lesser experienced analysts will

also adversely affect the quality of the metric. Therefore, a successful metric of

security requires an audit which can be described as testing (measuring) from the

appropriate vectors required while accounting for inaccuracies and misrepresentations

in the test data and skills or experience of the security professionals performing the

audit. Faults in these requirements will result in lower quality measurements and false

security determinations.

This methodology refers to metrics as

Risk Assessment Values

(RAVs). While not a

risk assessment in itself, an audit with this methodology and the RAVs will provide the

factual basis for a more accurate and more complete risk assessment.

Overview

Appropriate security metrics require overcoming the bias of common metrics where

measurements are generally based on opinions. By not measuring the typical

qualitative assessment we can begin to factually quantify security. The further we can

remove the emotional element from the security test, the more accurately the metrics

will represent the situation.

Applying Risk Assessment Values

This methodology will define and quantify three areas within the scope which together

create the big picture defined as Actual Security as its relevance to the current and

real state of security. The big picture approach is to calculate separately as a

condensed value, each of the areas: Operations, Controls, and Limitations. The 3

values are combined and further condensed to form the fourth value, Actual Security,

to provide the big picture overview and a final metric for comparisons. Since the RAV

is relevant security information condensed it is extremely scalable. This allows for

comparable values between two or more scopes regardless of the number of targets,

vector, test type, or index where the index is the method of how individual targets are

OpenTC Deliverable 07.02

13/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

calculated. This means that with RAVs the security of a single target can be

realistically compared with 10,000 targets.

One important rule to applying these metrics is that Actual Security can only be

calculated per scope. A change in channel, vector, or index is a new scope and a new

calculation for Actual Security. However, multiple scopes can be calculated together

to create one Actual Security that represents a fuller vision of operational security.

For example, the audit will be made of internet-facing servers from both the internet

side and from within the perimeter network which they reside. That is 2 vectors. The

first vector is indexed by IP address and contains 50 targets. The second vector is

indexed by MAC address and is 100 targets. Once each audit is completed and metrics

are counted for each of the 3 areas, they can be combined into one calculation of 150

targets and the sums of each area. This will give a final Actual Security metric which

is much more complete for that perimeter network then either would be alone.

The use of the RAVs requires understanding this specific terminology and current

security research. This terminology provides a specific means to describe quantified

security. Without such exact definitions it is not possible to convey the meaning

without referring to the process of obtaining the numbers.

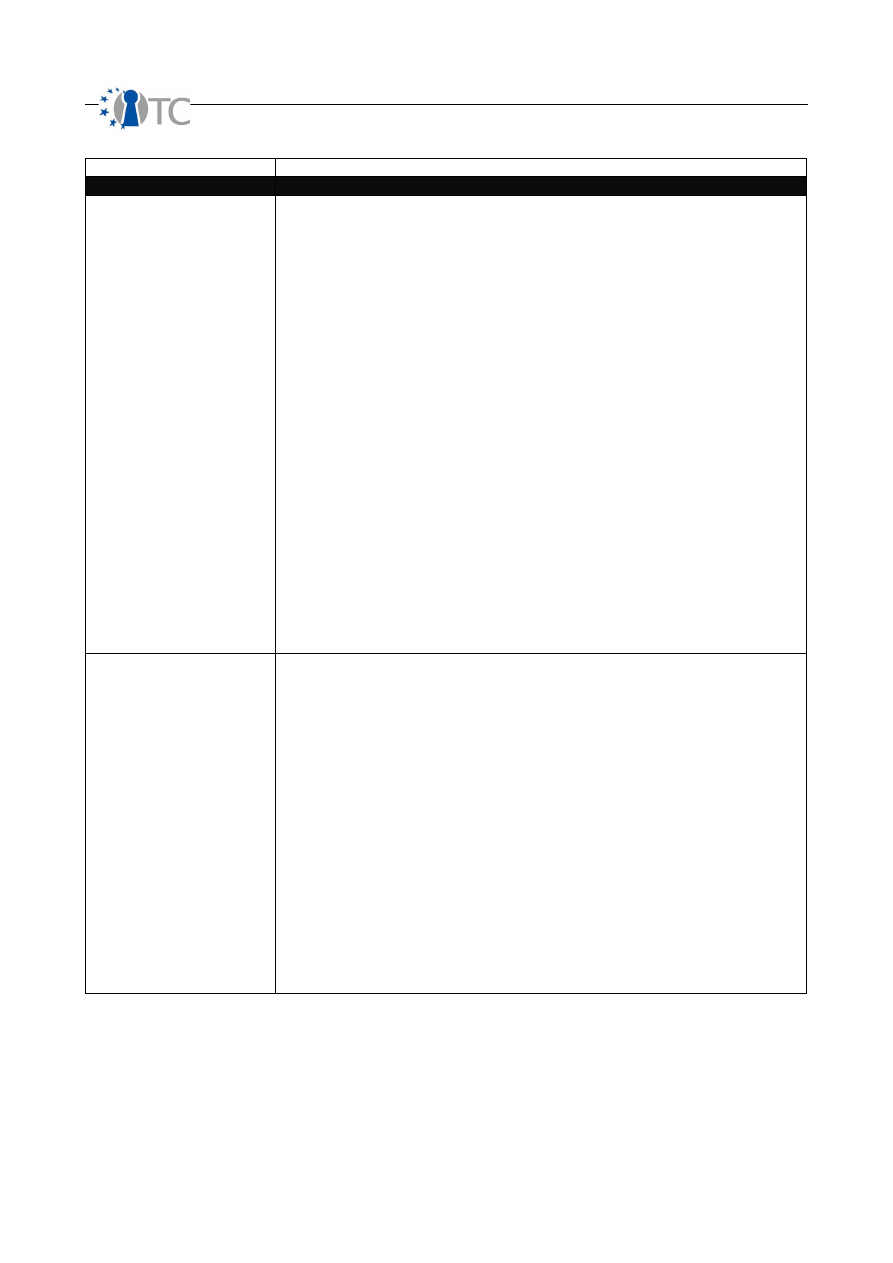

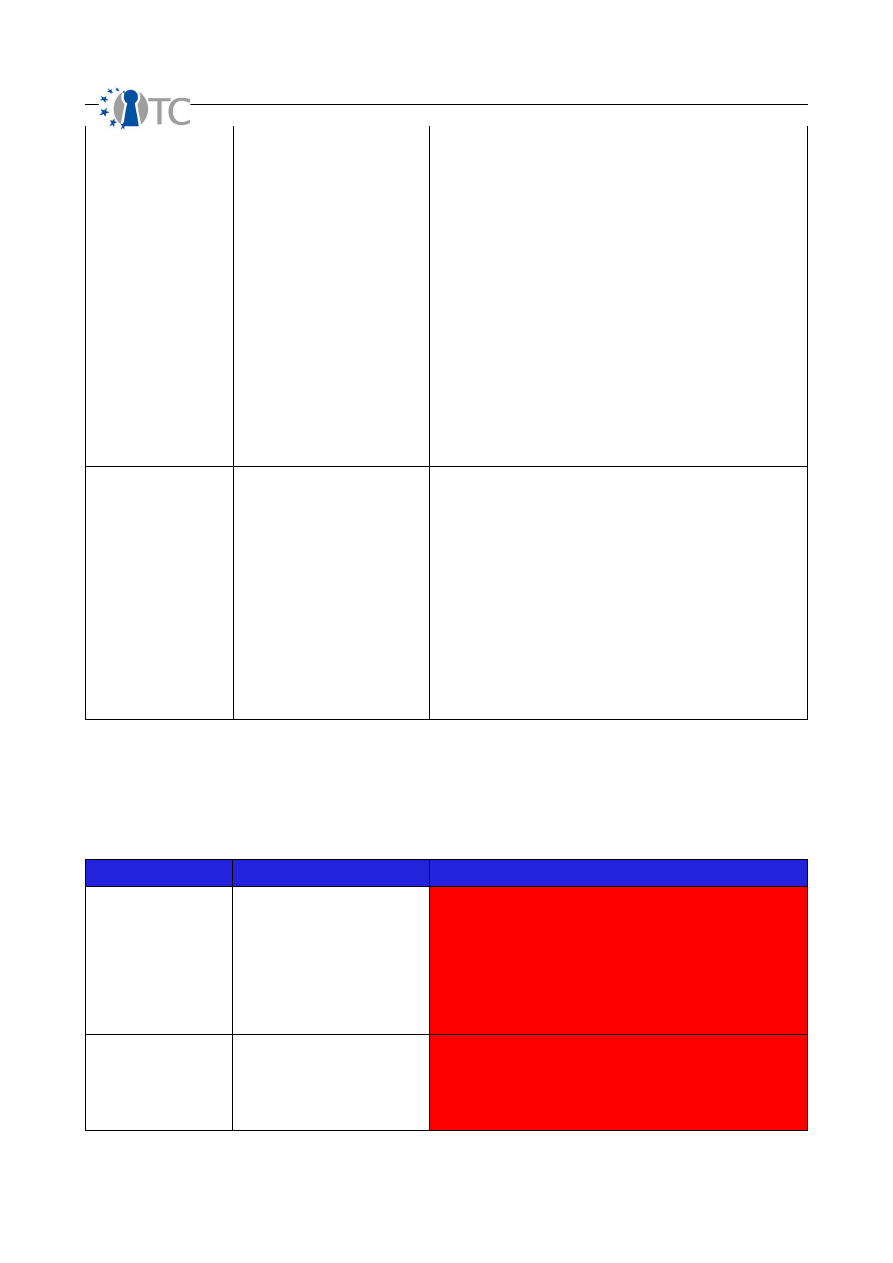

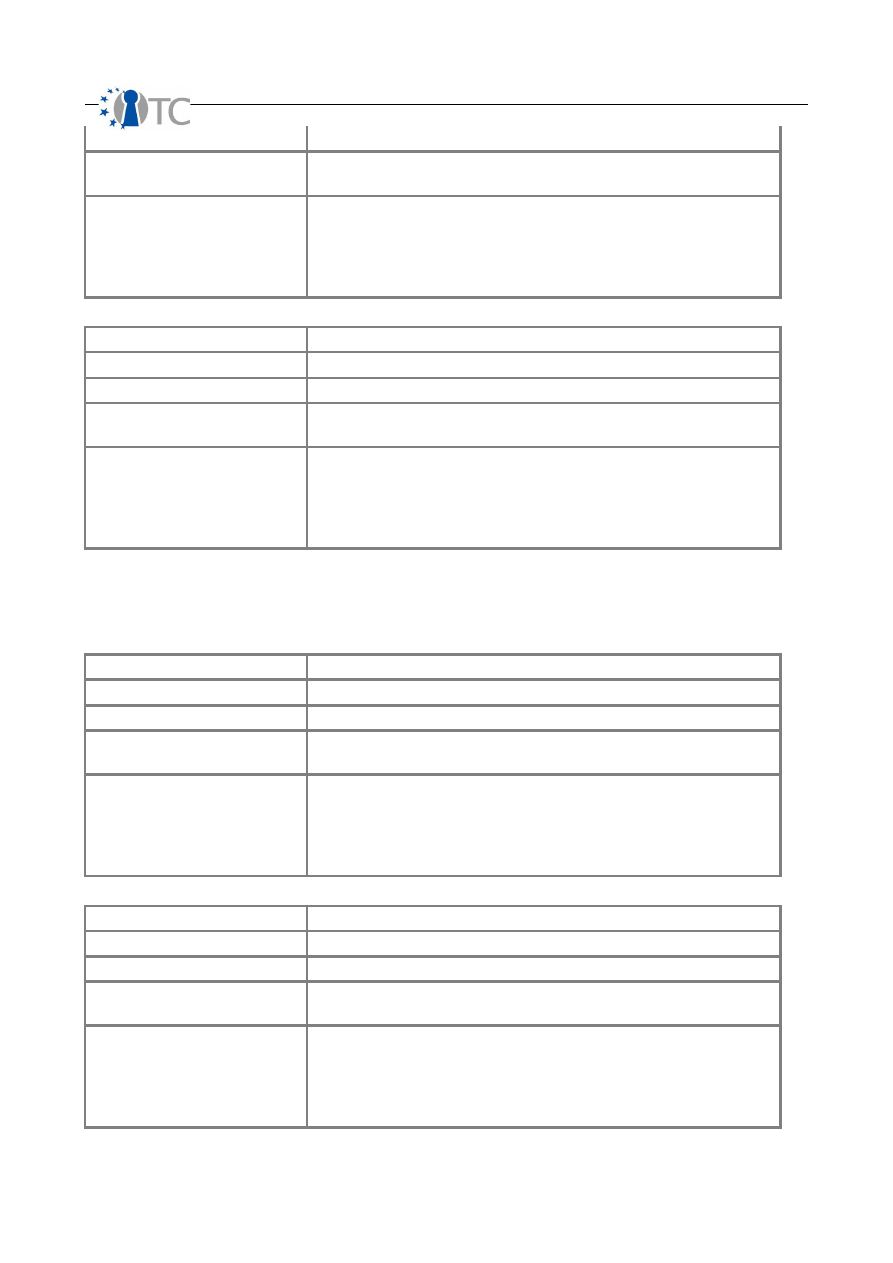

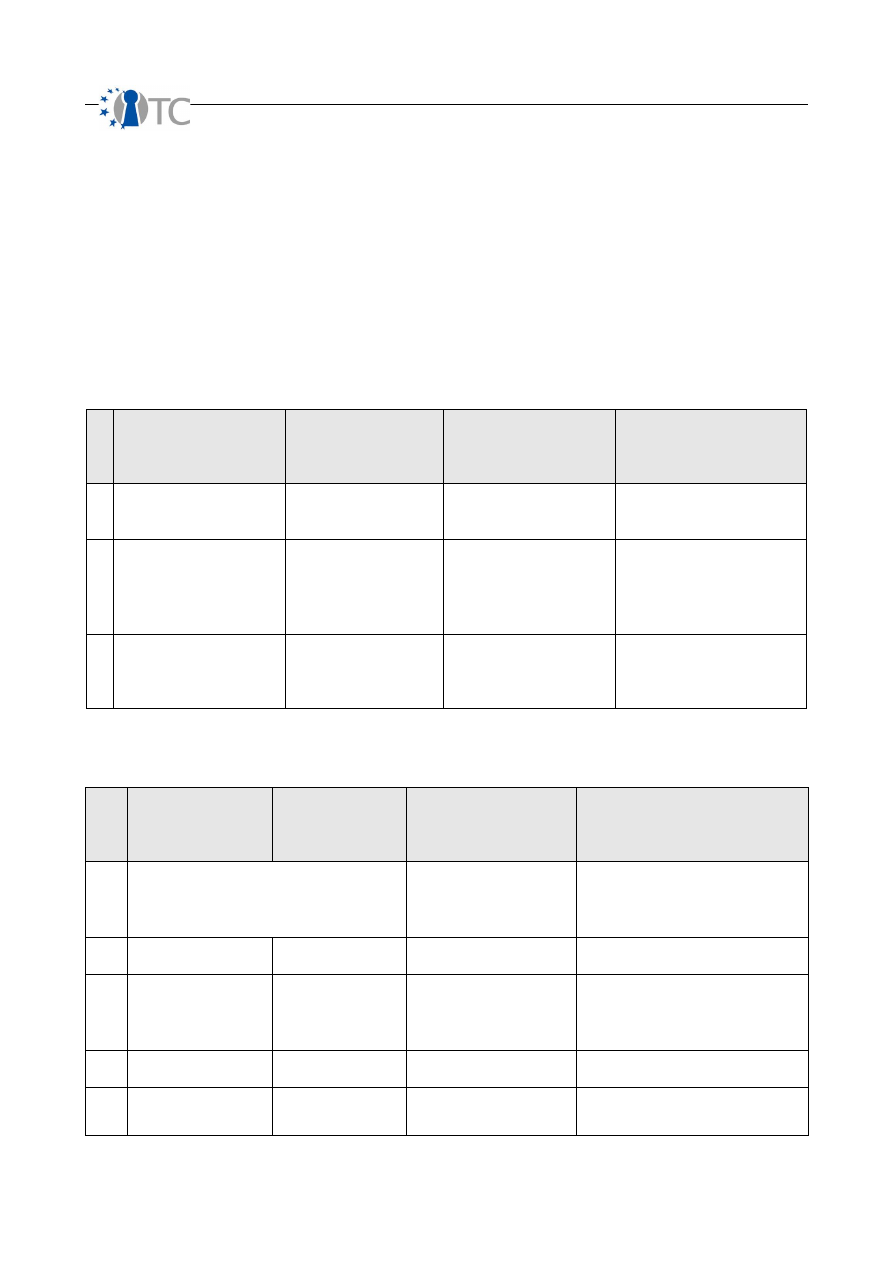

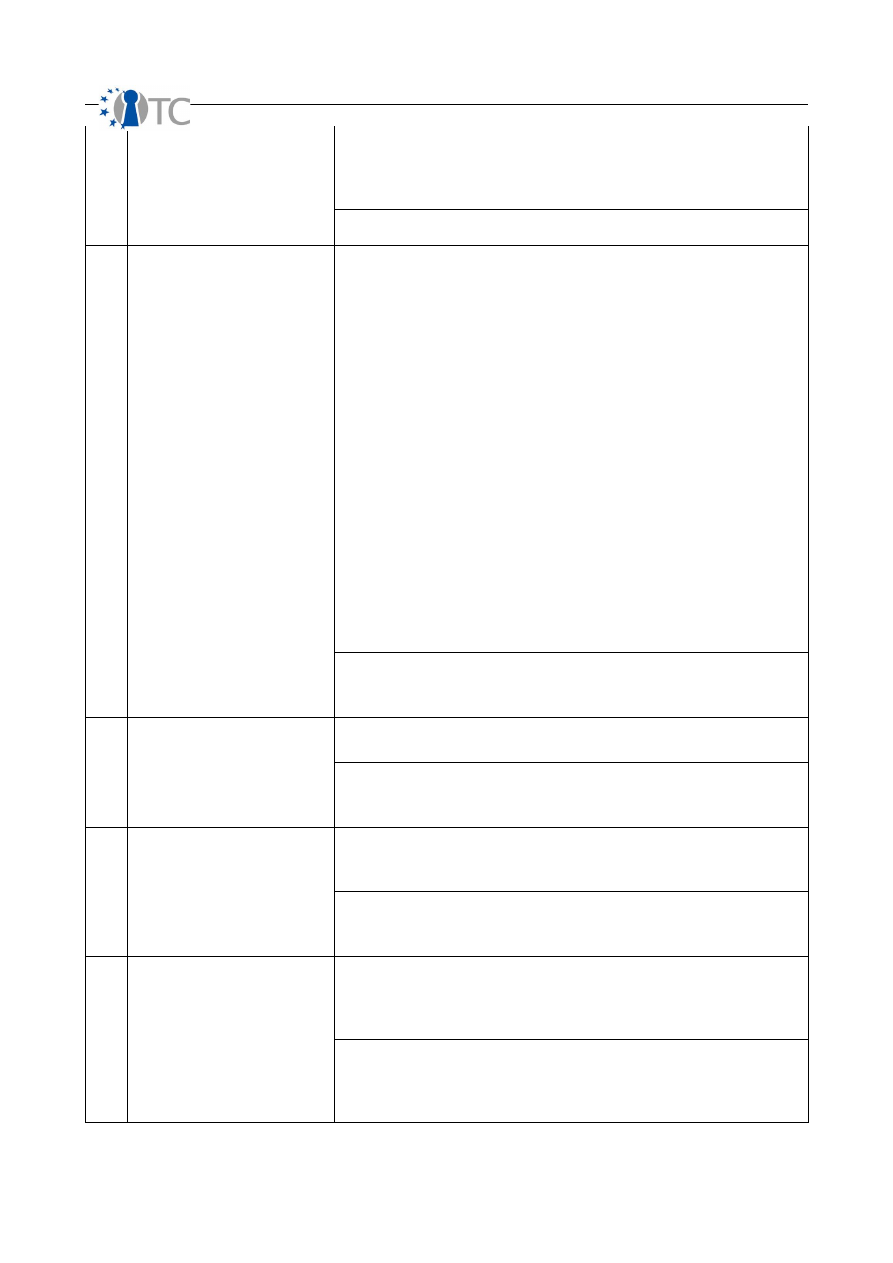

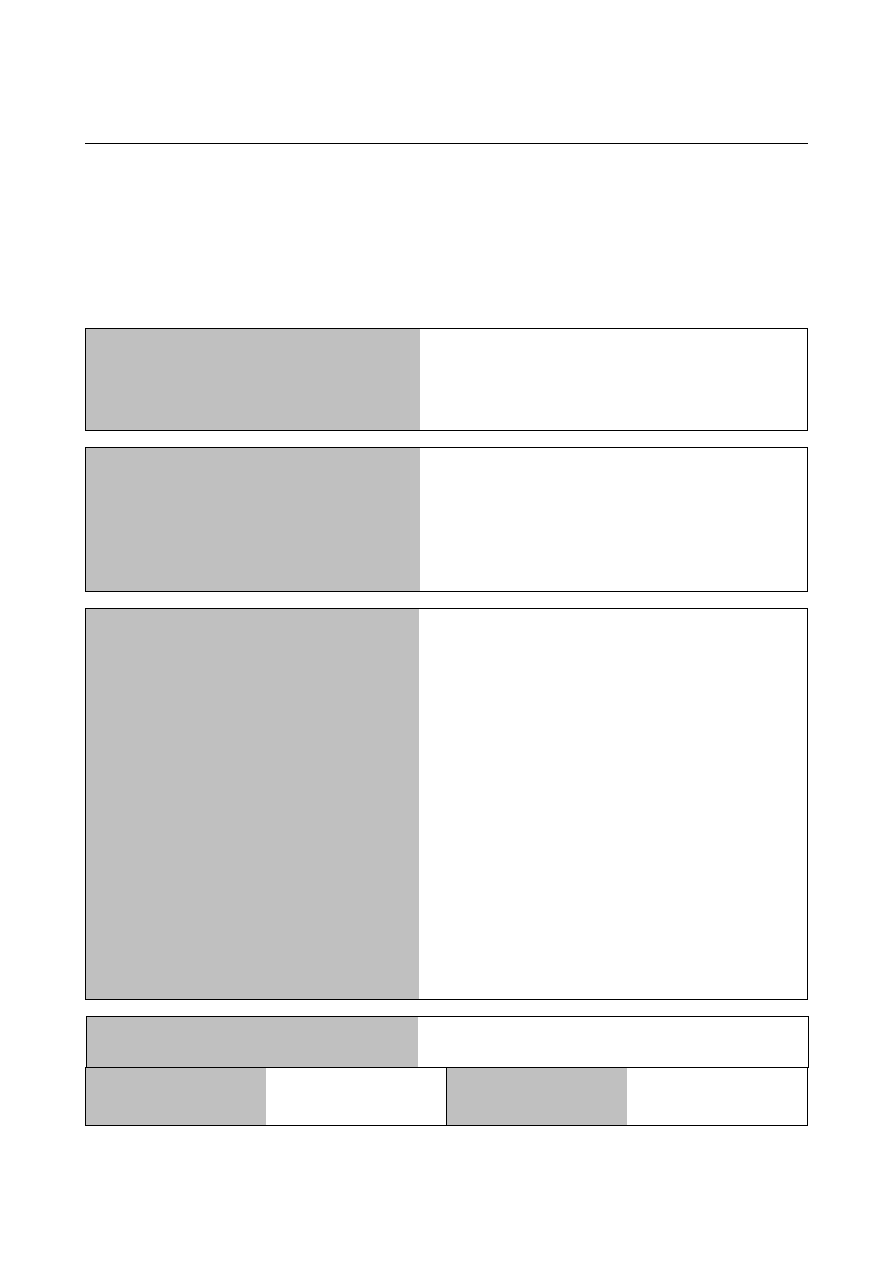

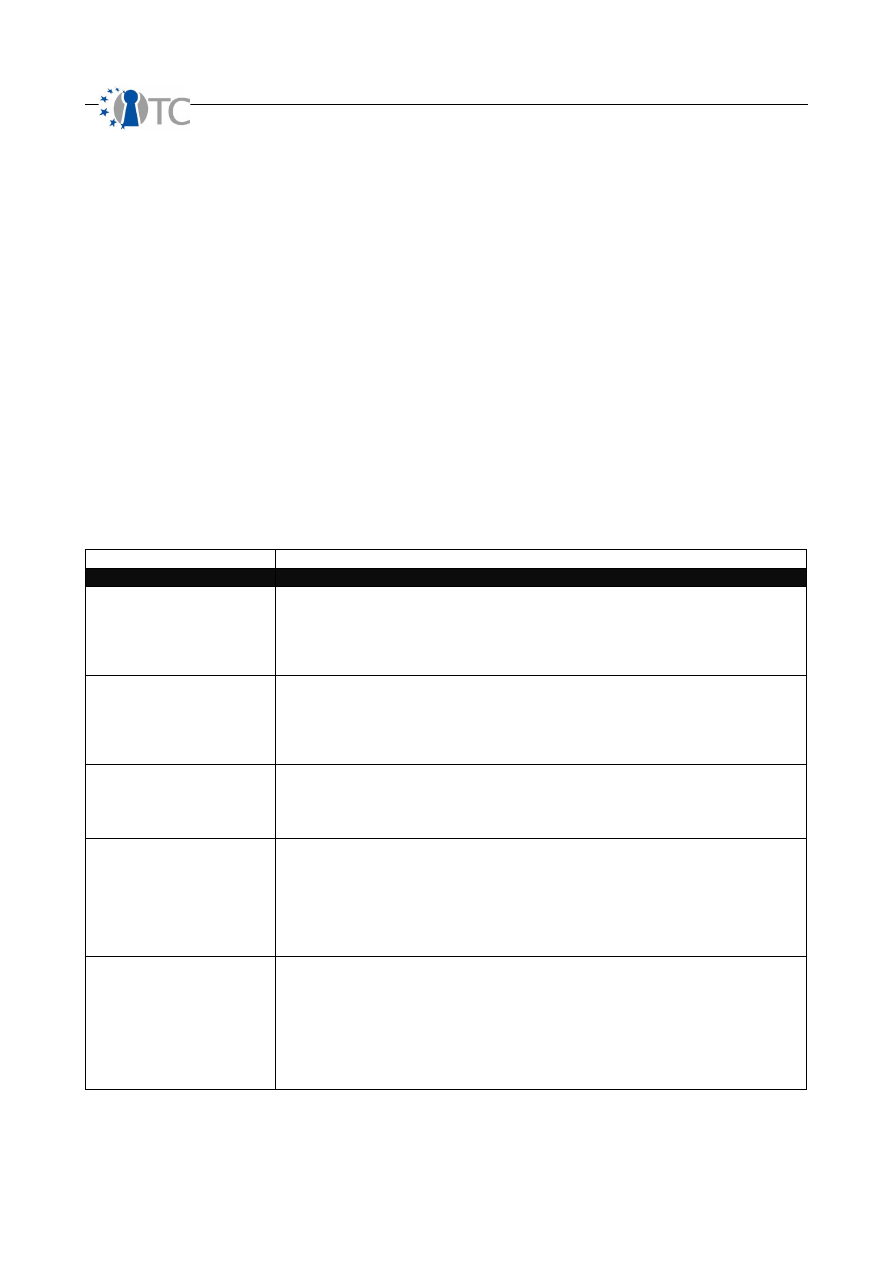

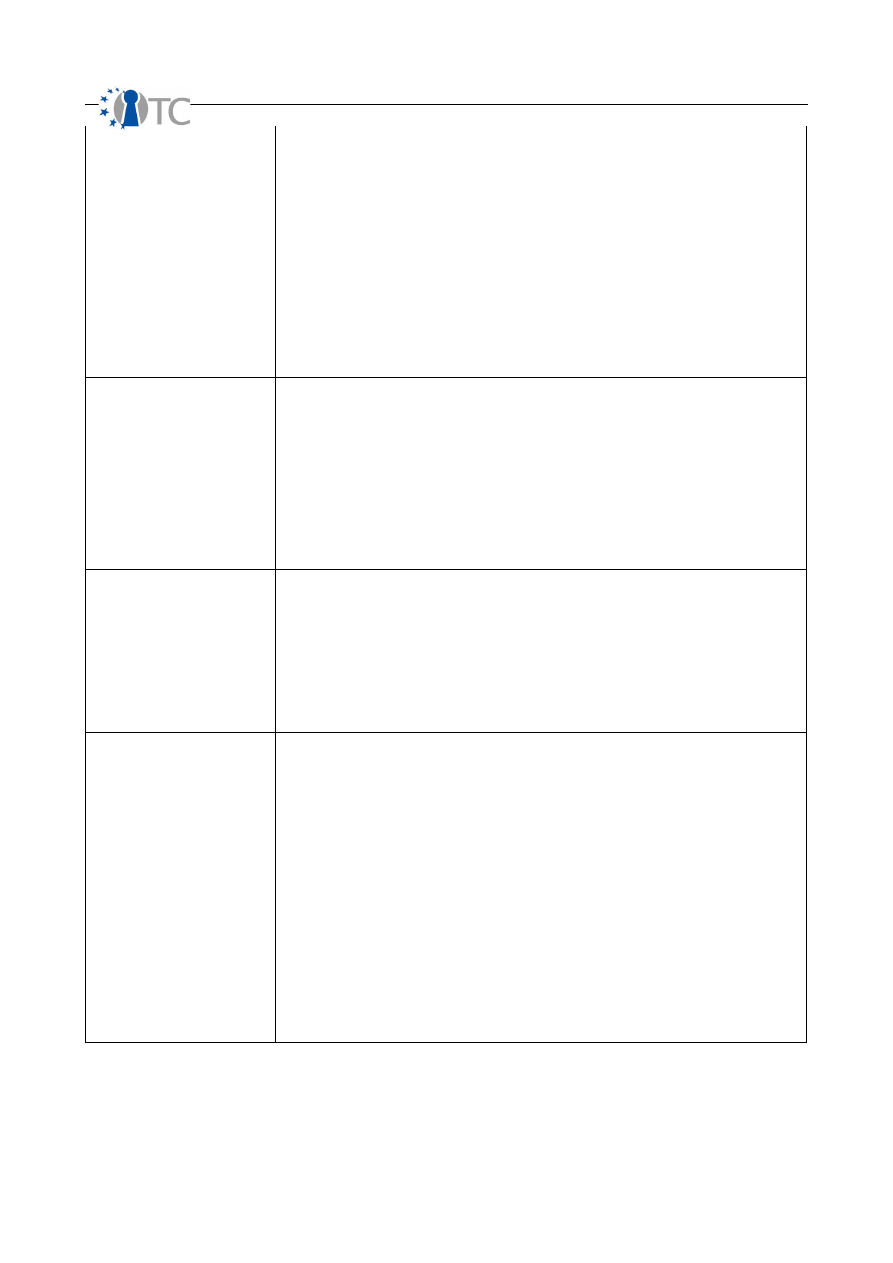

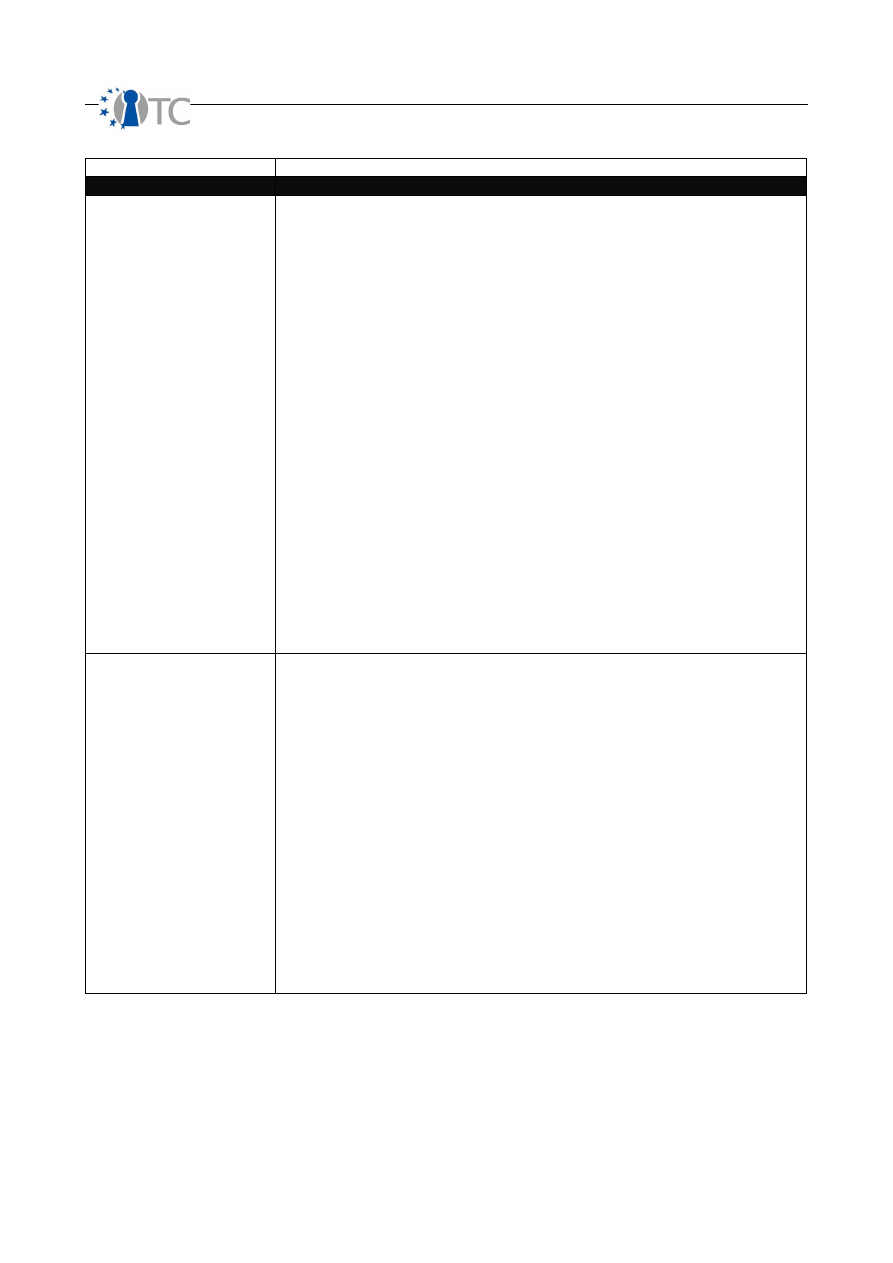

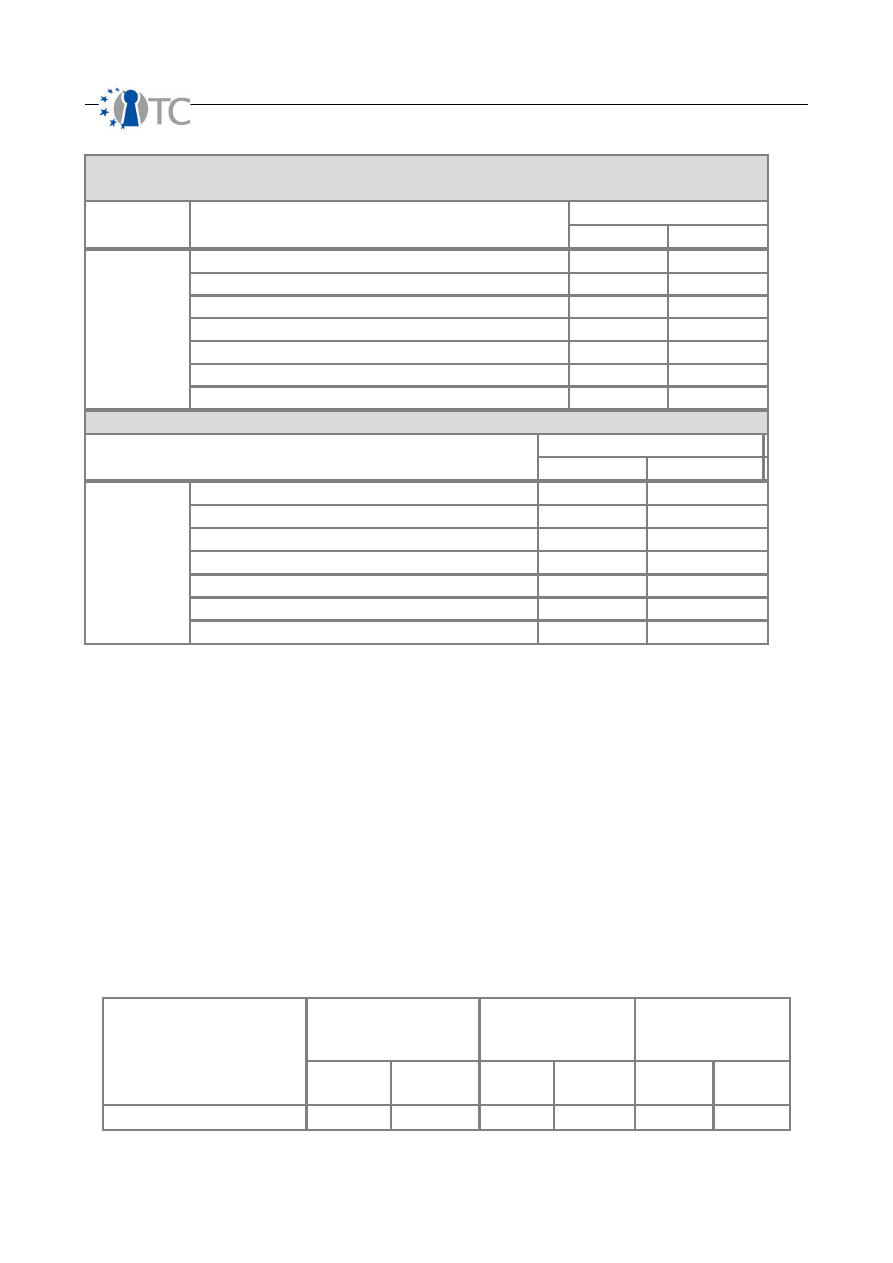

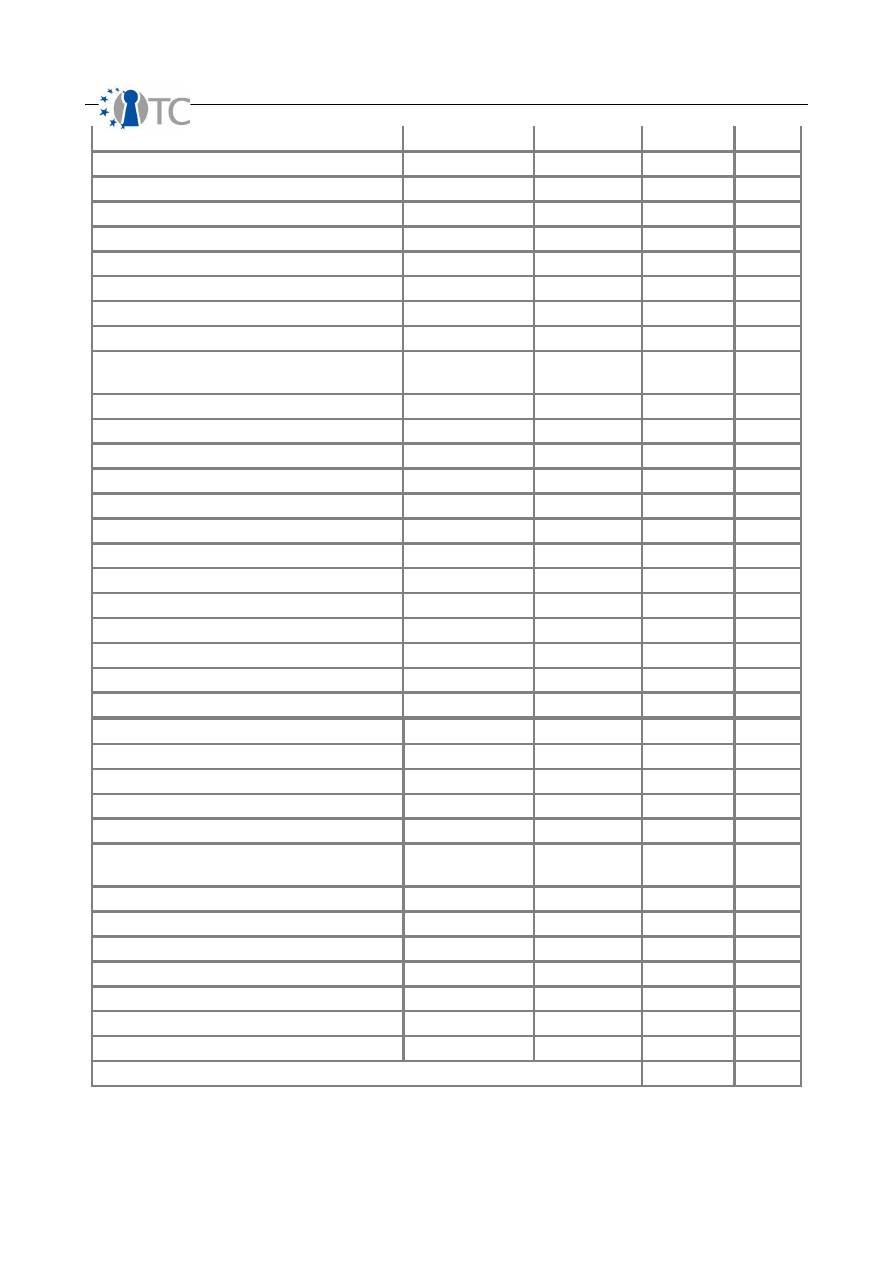

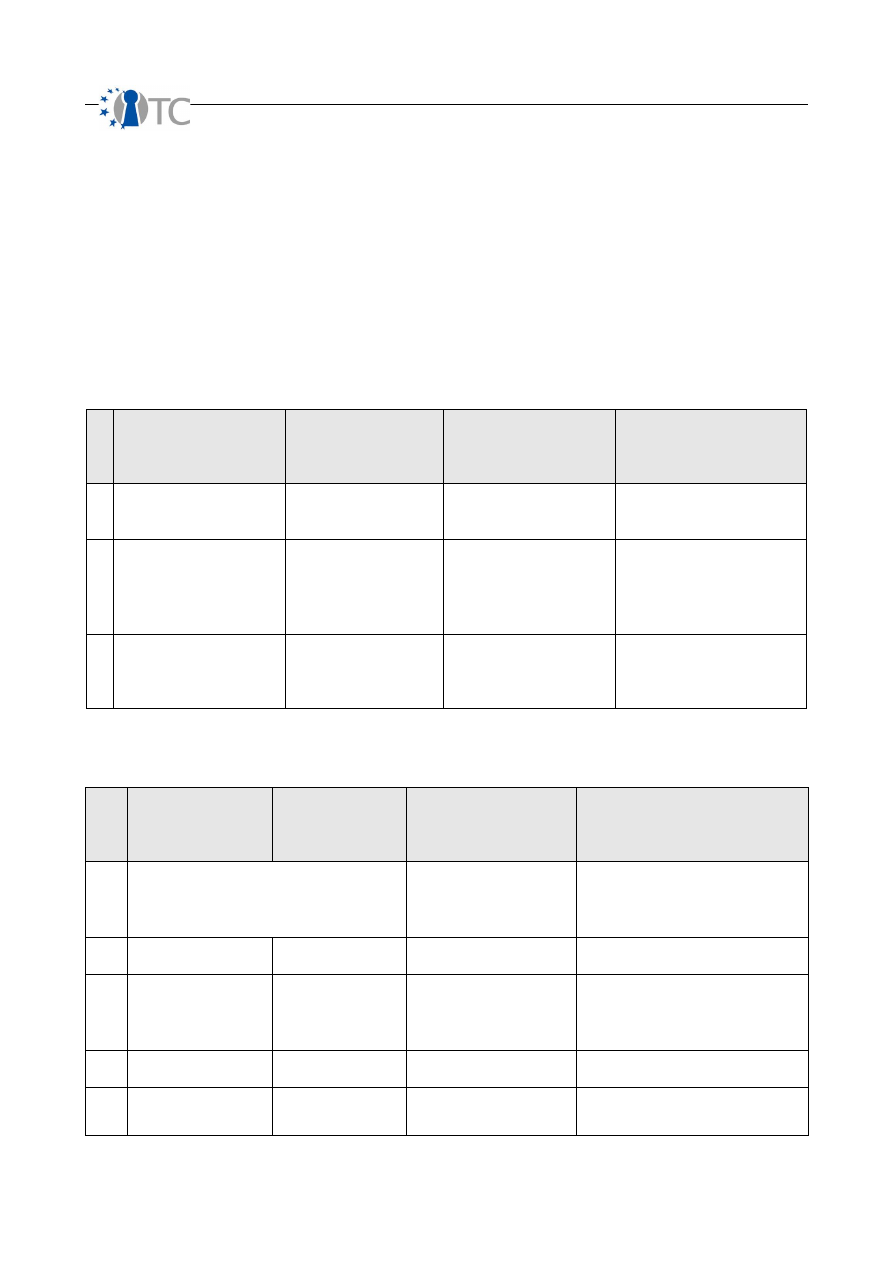

Table 1: Terminology

Term

Definition

Security

A form of protection where a physical separation is created between the assets and the

threat. In order to be secure, either the asset is physically removed from the threat or

the threat is physically removed from the asset. This includes elimination of either the

asset or the threat. This manual covers security from an operational perspective which is

verifying security measures in an operating or live environment.

Safety

A form of protection where the threat or its effects are controlled. In order to be safe,

the threat must be identified and the controls must be in place to assure the threat itself

or the effects of the threat are minimized to an acceptable level by the asset owner or

manager. This manual covers safety as “controls” which is the means to mitigate risk in

an operational or live environment.

Operations

The lack of security one must have to be interactive, useful, public, open, or available.

For example, limiting how a person buys goods or services from a store over a particular

channel, such as 1 door for going in and out, is a method of security within the store's

operations. Operations are defined by visibility, trusts, and accesses.

Controls

Impact and loss reduction controls. The assurance that the physical and information

assets as well as the channels themselves are protected from various types of invalid

interactions as defined by the channel. For example, insuring the store in the case of fire

is a control that does not prevent the inventory from getting damaged or stolen but will

pay out equivalent value for the loss. There are 10 controls. The first five controls are

Class A which control interactions. The five class B controls are relevant to controlling

procedures.

Limitations

This is the current state of perceived and known limits for channels, operations, and

controls as verified within the audit. For example, an old lock that is rusted and

crumbling used to secure the gates of the store at closing time has an imposed security

limitation where it is at a fraction of the protection strength necessary to delay or

withstand an attack. Determining that it is old and weak through visual verification in

this case is referred to as an identified limitation. Determining it is old and weak by

breaking it using 100 kg of force when a successful deterrent requires 1000 kg of force

shows a verified limitation.

OpenTC Deliverable 07.02

14/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Operational Security

Operational Security also known as the scope’s Porosity is the first of the three RAV

factors that should be determined. It is initially measured as the sum of the scope’s

visibility, access and trust (

sum

OpSec

).

When we want to calculate the Risk Assessment Value it is however necessary to

determine the Operational Security base value,

base

OpSec

. The Operational Security

base value is given by the equation

base

OpSec

(

)

(

)

(

)

2

100

1

10

log

×

+

×

=

sum

OpSec

.

To measure the security of operations (OPSEC) requires the measurements of

visibility, trust, and access from the scope. The number of targets in the scope that

can be determined to exist by direct interaction, indirect interaction, or passive

emanations is its

visibility

. As visibility is determined, its value represents the

number of targets in the scope. Trust is any non-authenticated interaction to any of

the targets. Access is the number of interaction points with each target. The sum of

all three is the OPSEC Delta, which is the total number of openings within operations

and represents the total amount of operational security decreased within the target.

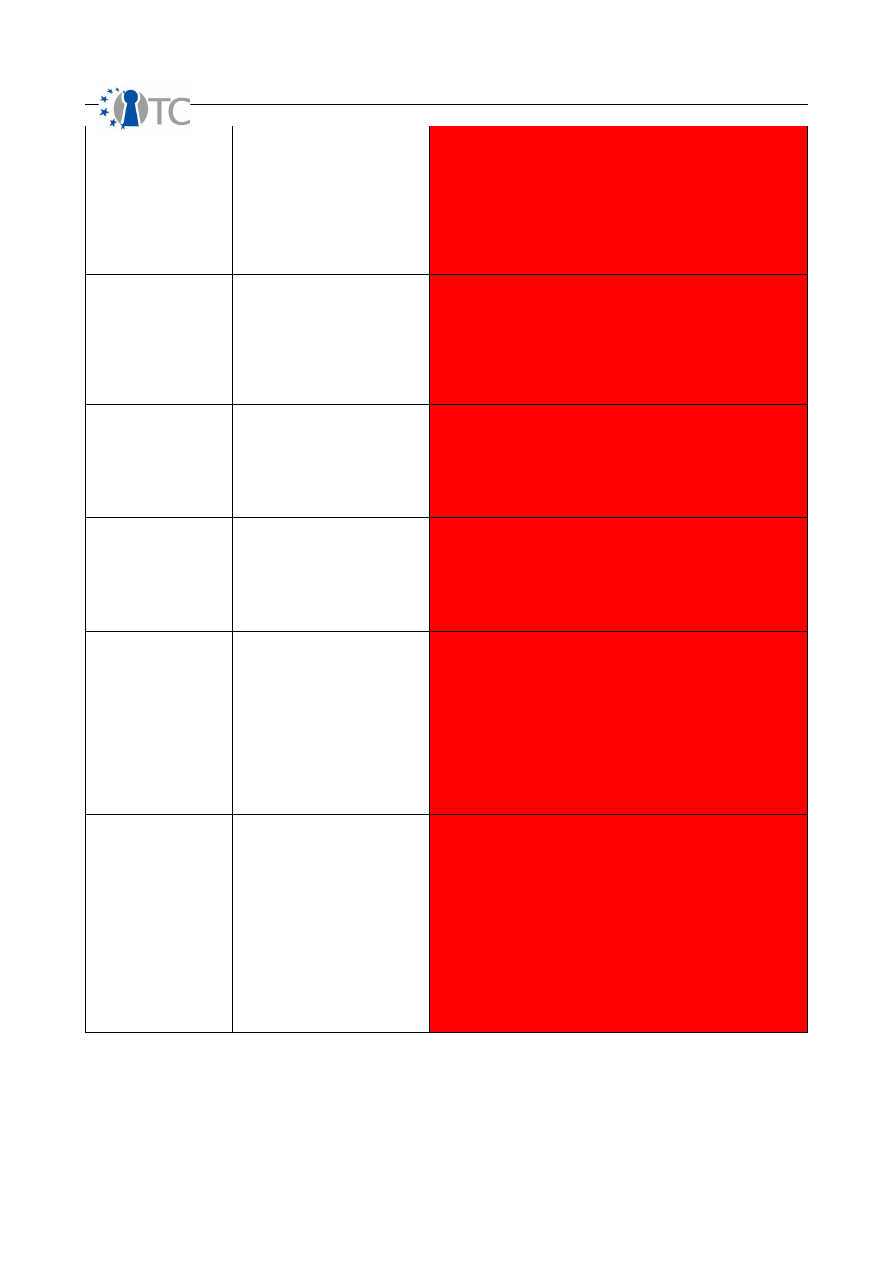

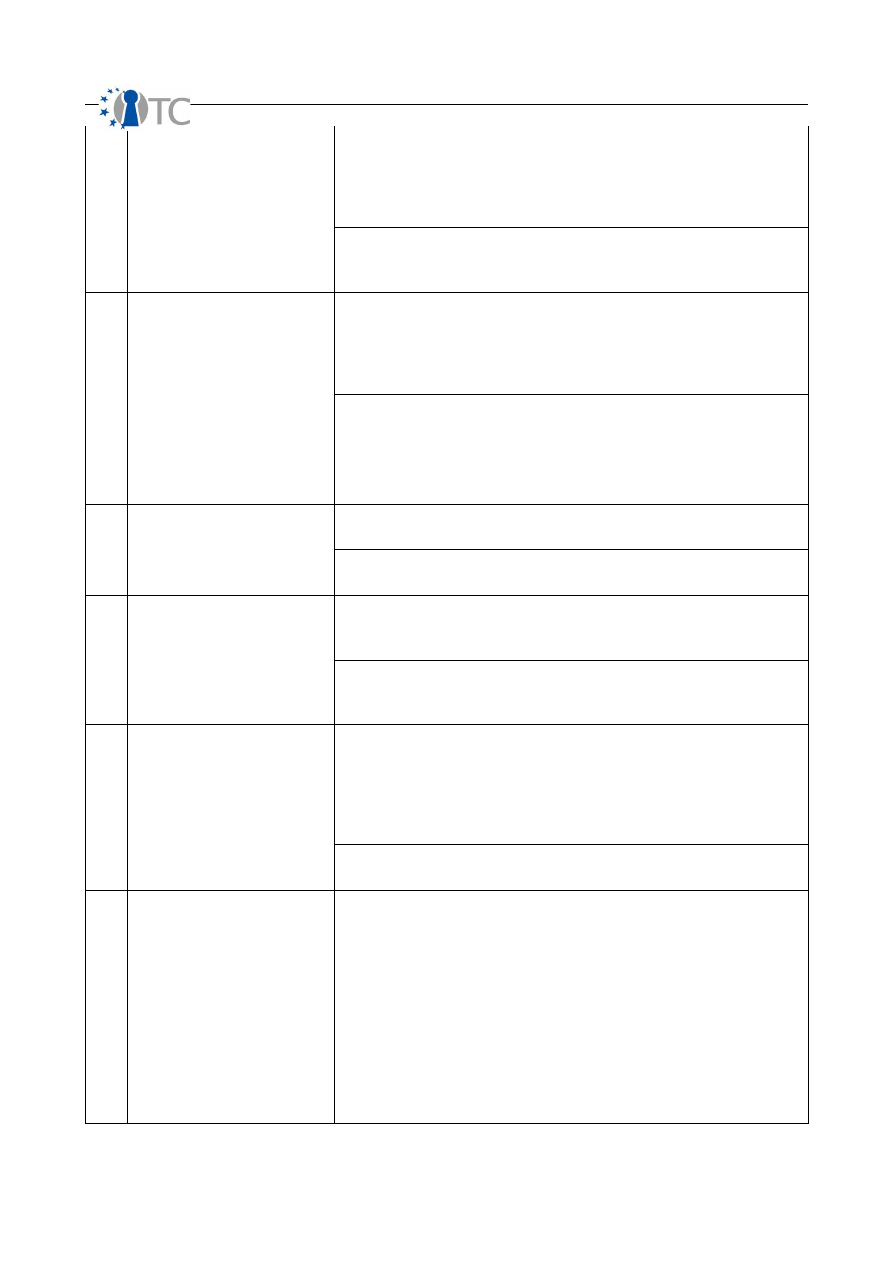

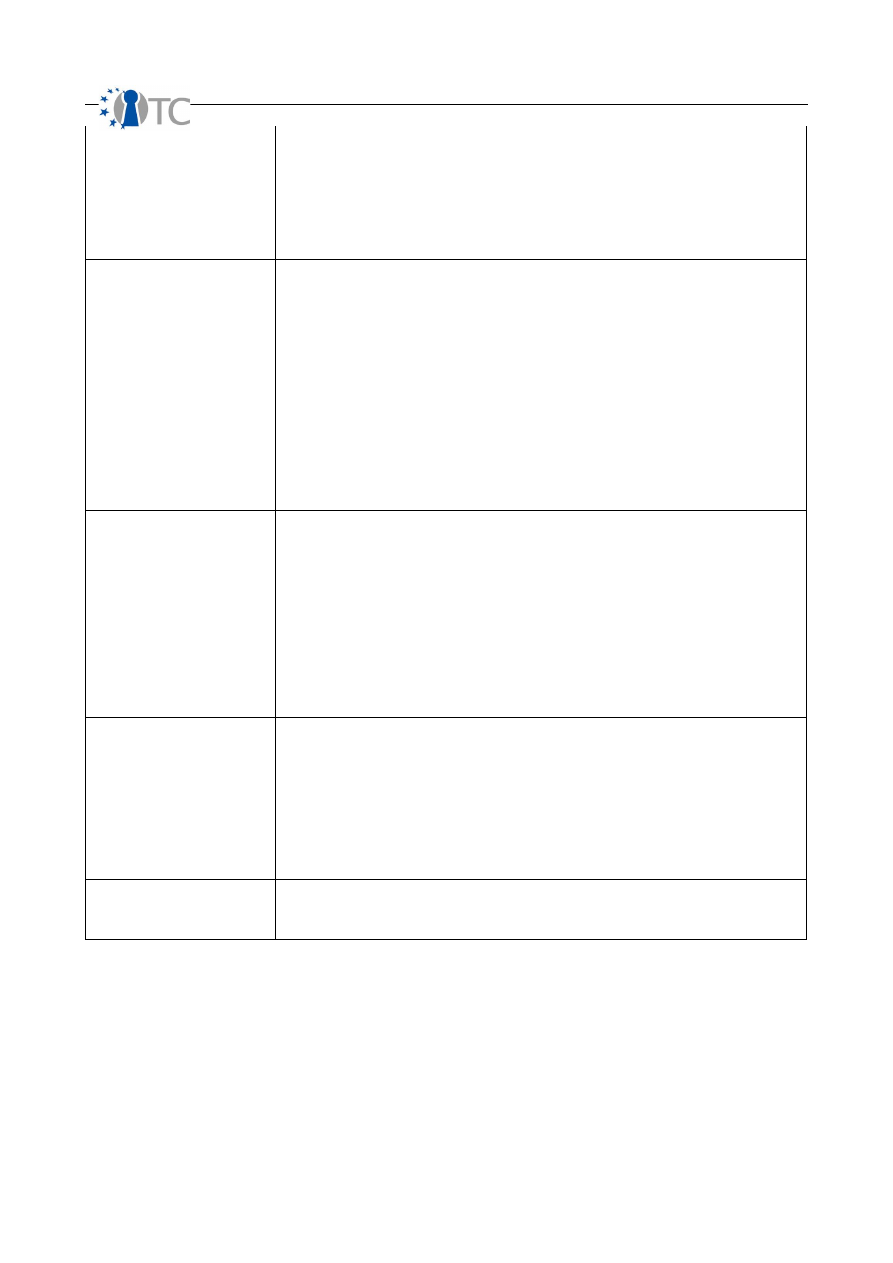

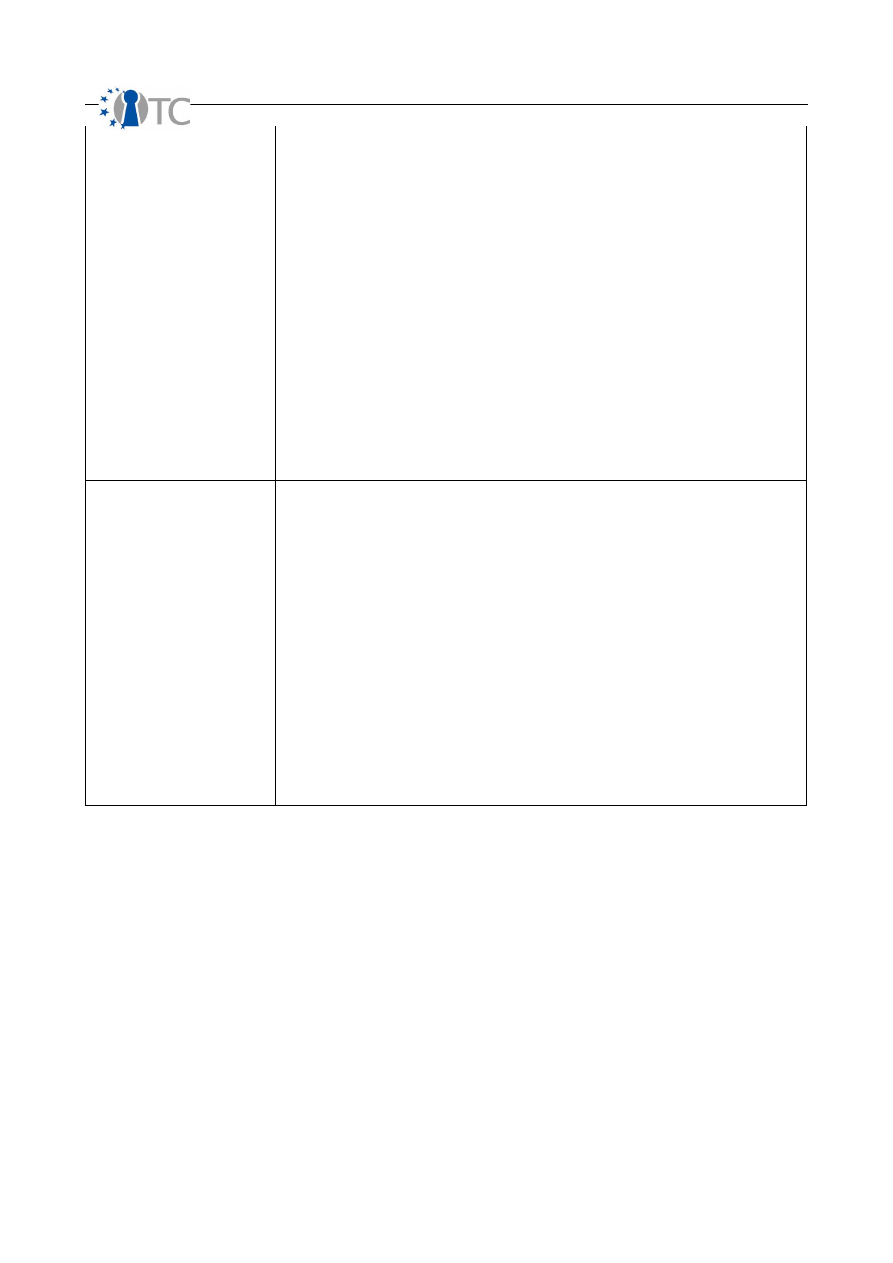

Table 2: Calculating OPSEC

OPSEC Categories

Descriptions

Visibility

The number of targets in the scope according to the scope. Count all targets by index

only once and maintain the index consistently for all targets. It is generally unrealistic to

have more targets visible then are targets in the defined scope however it may be

possible due to vector bleeds where a target which is normally not visible from one

vector is visible due to a misconfiguration or anomaly.

A HUMSEC audit employs 50 people however only 38 of them are interactive from the

test vector and channel. This would make a visibility of 38.

Trust

Count only each target allowing for unauthenticated interaction according to the

scope.

A HUMSEC audit may reveal that the help desk employees grant password resets for all

calls coming from internal phones without requesting identifying or authorizing

information. Within this context, each help desk employee who does this is counted as a

Trust for this scope. However, the same cannot be held true for external calls as in that

different scope, the one with the external to internal vector, these same help desk

employees are not counted as trusts.

Access

This is different from visibility where one is determining the number of existing targets.

Here the auditor must count each Access per unique interaction point per unique

probe.

In a PHYSSEC audit, a building with 2 doors and 5 windows which all open has an Access

of 7. If all the doors and windows are sealed then it is an Access of 0 as these are not

points where one can gain entry.

For a COMSEC audit of data networks, the auditor counts each port response as an

Access regardless how many different ways the auditor can probe that port. However,

if a service is not hosted at that port (daemon or an application) then all replies come

from the IP Stack. Therefore a server that responds with a SYN/ACK and service

interactivity to 1 of the TCP ports scanned and with a RST to the rest is not said to have

an access count of 65536 (including port 0) since 66535 of the ports respond with the

same response of RST which is from the kernel. To simplify, count uniquely only ports with

OpenTC Deliverable 07.02

15/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

service responses and IP Stack responses only when the probe initiates service

interactivity. A good example of a service activity over the IP Stack is an ICMP echo

response (PING reply).

With HUMSEC audits, this is much more simplified. A person who responds to a query

counts as an access with all types of queries (all the different questions you may ask or

statements made count as the same type of response on the same channel). Therefore

a person can only be an Access of 1 per channel and vector. Only a person who

completely ignores the request by not acknowledging the channel is not counted.

OPSEC Delta

Visibility + Trust + Access

The negative change in OPSEC protection.

Controls

The next step in calculating the RAV is to define the Loss Controls; the security

mechanisms put in place to protect the operations. First the sum of the Loss Controls,

sum

LC

, must be determined by adding together the 10 Loss Control categories. Now,

the Controls base value can be calculated as

base

LC

(

)

(

)

(

)

2

10

1

10

log

×

+

×

=

sum

LC

.

The

sum

LC

is multiplied by 10 here as opposed to 100 in the Operational Security

equation to account for the fact that all 10 Loss Controls are necessary to fully protect

1 visibility, access or trust.

Missing Controls

Given that the combination of the 10 Loss Controls combined balance the value of 1

OpSec loss (visibility, access, trust) it is necessary to determine the amount of Missing

Controls,

sum

MC

, in order to assess the value of the Security Limitations. This must be

done individually for each of the 10 Loss Control categories. For example, to

determine the Missing Controls for Authentication (

Auth

MC

) we must subtract the sum

of Authentication Controls (

sum

Auth

) of the scope from the

sum

OpSec

. The Missing

Controls can never be less than zero however.

The equation for determining the Missing Controls for Authentication (

Auth

MC

) is given

by

Auth

MC

=

sum

OpSec

-

sum

Auth

.

If

sum

OpSec

-

0

≤

sum

Auth

then

0

≈

Auth

MC

.

The resulting Missing Control totals for each of the 10 Loss Controls must then be

added to arrive at the total Missing Control value

(

sum

MC

).

Controls are the 10 loss protection categories in two categories, Class A (interactive)

and Class B (process). The Class A categories are authentication, indemnification,

subjugation, continuity, and resilience. The Class B categories are non-repudiation,

confidentiality, privacy, integrity, and alarm.

OpenTC Deliverable 07.02

16/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Class A

●

Authentication is the control of interaction requiring having both credentials and

authorization where identification is required for obtaining both.

●

Indemnification is the control over the value of assets by law and/or insurance

to recoup the real and current value of the loss.

●

Subjugation is the locally sourced control over the protection and restrictions of

interactions by the asset responsible.

●

Continuity is the control over processes to maintain access to assets in the

events of corruption or failure.

●

Resilience is the control over security mechanisms to provide protection to

assets in the event of corruption or failure.

Class B

●

Non-repudiation prevents the source from denying its role in any interactivity

regardless whether or not access was obtained.

●

Confidentiality is the control for assuring an asset displayed or exchanged

between parties can be known outside of those parties.

●

Privacy is the control for the method of how an asset displayed or exchanged

between parties can be known outside of those parties.

●

Integrity is the control of methods and assets from undisclosed changes.

●

Alarm is the control of notification that OPSEC or any controls have failed, been

compromised, or circumvented.

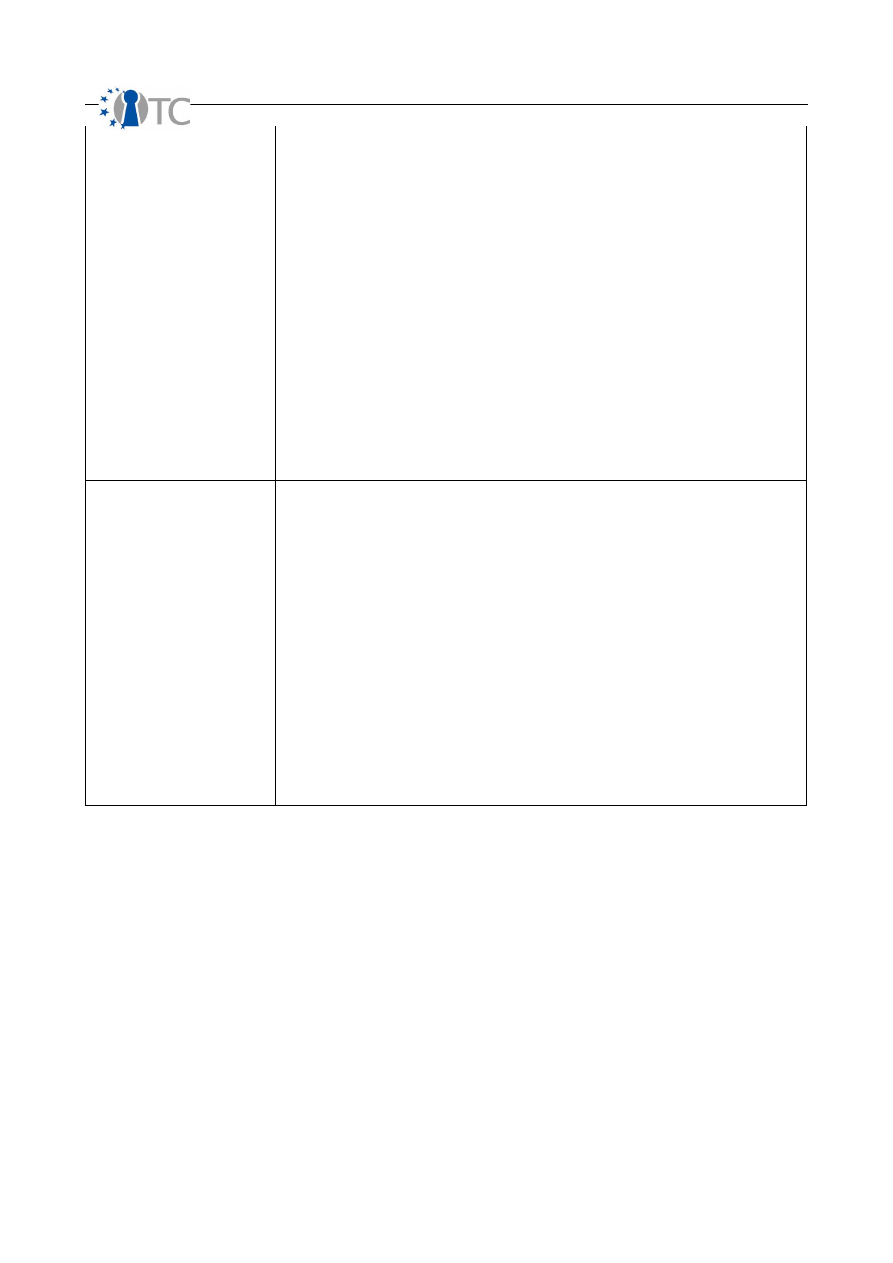

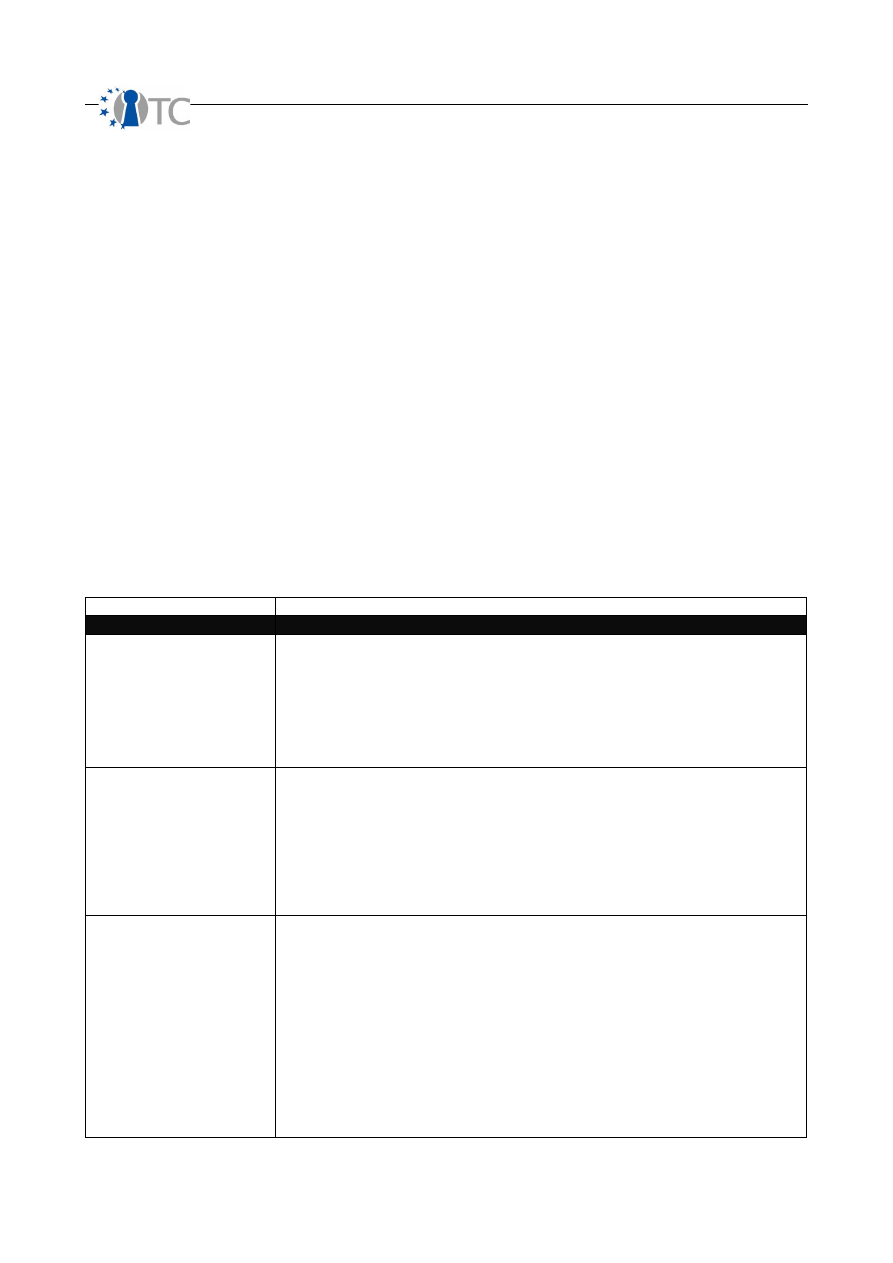

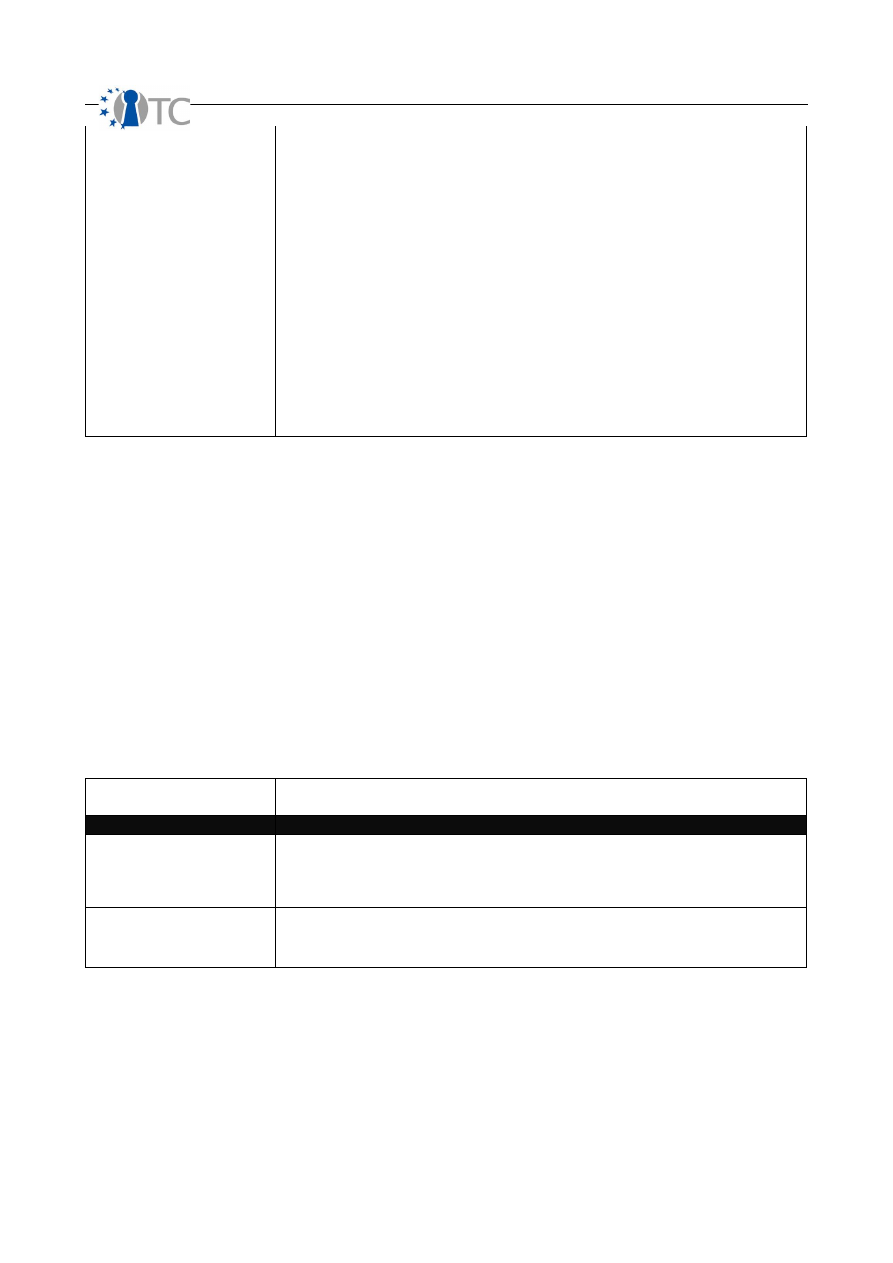

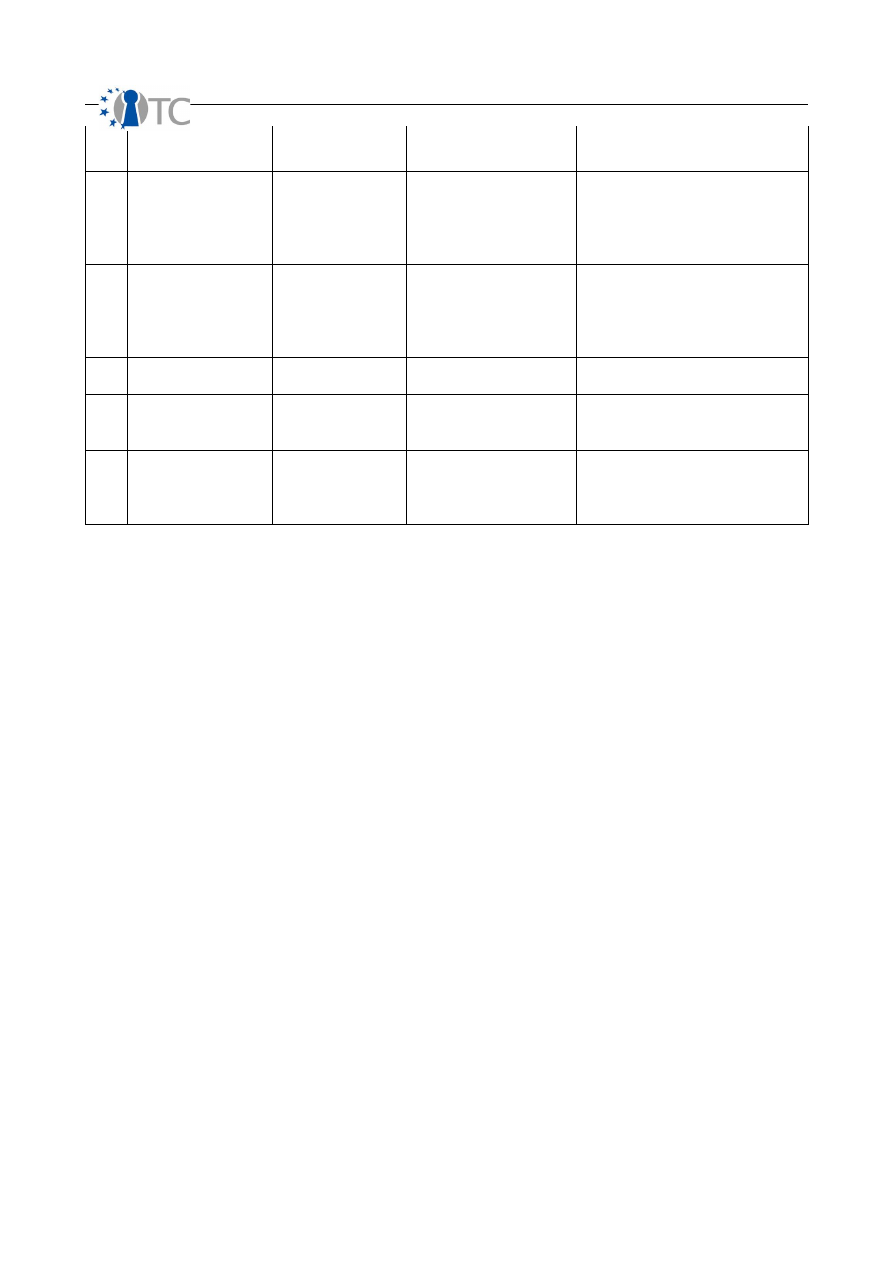

Table 3: Calculating Controls

Controls Categories

Descriptions

Authentication

Count each instance of authentication required to gain access. This requires that

authorization and identification make up the process for the proper use of the

authentication mechanism.

In a PHYSSEC audit, if both a special ID card and a thumb print scan is required to gain

access then add two for authentication. However if access just requires one or the

other then only count one.

Indemnification

Count each instance of methods used to exact liability and insure compensation for all

assets within the scope.

A basic PHYSSEC example is a warning sign threatening to prosecute trespassers.

Another common example is property insurance. In a scope of 200 computers, a

blanket insurance policy against theft applies to all 200 and therefore is a count of 200.

However, do not confuse the method with the flaw in the method. A threat to prosecute

without the ability or will to prosecute is still an indemnification method however with a

limitation.

OpenTC Deliverable 07.02

17/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Subjugation

Count each instance for access or trust in the scope which strictly does not allow for

controls to follow user discretion or originate outside of itself. This is different from being a

security limitation in the target since it applies to the design or implementation of

controls.

In a COMSEC data networks audit, if a login can be made in HTTP as well as HTTPS but

requires the user to make that distinction then it fails to count toward Subjugation.

However, if the implementation requires the secured mode by default such as a PKI-

based internal messaging system then it does meet the requirement of the Subjugation

control for that scope.

More simply, in HUMSEC, a non-repudiation process where the person must sign a

register and provide an identification number to receive a document is under

Subjugation controls when the provider of the document records the identification

number rather than having the receiver do so to eliminate the recording of a false

number with a false name.

Continuity

Count each instance for access or trust in the scope which assures that no interruption in

interaction over the channel and vector can be caused even under situations of total

failure. Continuity is the umbrella term for characteristics such as survivability, load

balancing, and redundancy.

In a PHYSSEC audit, it is discovered that if an entry way into a store becomes blocked no

alternate entry way is possible and customers cannot enter therefore the access does

not have Continuity.

In a COMSEC data networks audit, if a web server service fails from high-load then an

alternate web server provides redundancy so no interactions are lost. This access does

have Continuity.

Resilience

Count each instance for access or trust in the scope that does not fail open and without

protection or provide new accesses upon a security failure. In common language, it is

said to “fail securely”.

In a PHYSSEC audit, from 2 guards controlling access to a door if one is removed in any

way, then the door cannot be opened by the remaining guard then it has Resilience.

In a COMSEC data networks audit, if a web service requiring a login or password loses

communication with its authentication database, then all access should be denied

rather than permitted to have Resilience.

Non-repudiation

Count each instance for the access or trust that provides a non-repudiation mechanism

for each interaction to provide assurance that the particular interaction did occur at a

particular time between the identified parties. Non-repudiation depends upon

identification and authorization to be properly established for it to be properly applied

without limitations.

In a PHYSSEC audit, the Non-repudiation control exists if the entrance to a building

requires a camera with a biometric face scan to gain entry and each time it is used, the

time of entry is recorded with the ID. However, if a key-card is used instead, the Non-

repudiation control, requires a synchronized, time-coded camera to assure the record

of the card-users identity to avoid being a flawed implementation. If the door is tried

without the key card, not having the synchronized camera monitoring the door would

mean that not all interactions with the entryway have the Non-repudiation control and

therefore does not count for this control.

In a COMSEC data networks audit, there may be multiple log files for non-repudiation. A

port scan has interactions at the IP Stack and go into one log while interaction with the

web service would log to another file. However, as the web service may not log the

interactions from the POST method, the control is still counted however so is the security

limitation.

OpenTC Deliverable 07.02

18/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Confidentiality

Count each instance for access or trust in the scope that provides the means to

maintain the content of interactions undisclosed between the interacting parties.

A typical tool for Confidentiality is encryption. Additionally, obfuscation of the content

of an interaction is also a type of confidentiality albeit a flawed one.

In HUMSEC, however, a method of Confidentiality may include whispering or using hand

signals.

Privacy

Count each instance for access or trust in the scope that provides the means to

maintain the method of interactions undisclosed between the interacting parties. While

“being private” is a common expression, the phrase is a bad example of what privacy is

as a loss control because it includes elements of confidentiality. As a loss control, when

something is done “in private” it means that only “the doing” is private but the content

of the interaction may not be.

A typical tool for Privacy is opaquing the interaction, having the interaction take place

outside of the Visibility of third parties. Confusion of the means of interaction as

obfuscation is another method of applying the Privacy control.

In HUMSEC, a method of Privacy may be simply taking the interaction into a closed

room away from other people. In movies, we see techniques to create the Privacy

control such as setting two of the same suitcases set side by side, some type of incident

to create confusion takes place and the two people switch the suitcases in seemingly

plain view.

Integrity

Count each instance for access or trust in the scope which can assure that the

interaction process and access to assets has finality and cannot be corrupted, hanged,

continued, redirected, or reversed without it being known to the parties involved.

Integrity is a change control process.

In COMSEC data networks, encryption or a file hash can provide the Integrity control

over the change of the file in transit.

In HUMSEC, segregation of duties and other corruption-reduction mechanism provide

Integrity control. Assuring integrity in personnel requires that two or more people are

required for a single process to assure oversight of that process. This includes that no

master access to the whole process exists. This can be no person with full access and no

master key to all doors.

Alarm

Count each instance for access or trust which has a record or makes a notification

when unauthorized and unintended porosity increases for the vector or restrictions and

controls are compromised or corrupted.

In COMSEC data networks, count each server and service which a network-based

intrusion detection system monitors. Or count each service that maintains a monitored

log of interaction. Access logs count even if they are not used to send a notification

alert immediately unless they are never monitored. However, logs which are not

designed to be used for such notifications, such as a counter of packets sent and

received, does not classify as an alarm as there is too little data stored for such use.

Controls Delta

Sum (all controls) *.1

The positive change over OPSEC protection. The 10 loss controls combined balance the

value of 1 OPSEC loss (access, visibility, or trust).

Security Limitations

The state of security in regard to known flaws and protection restrictions within the

scope are calculated as Limitations. To give appropriate values to each limitation

type, they must be categorized and classified. While any classification name or

number can be used, this methodology attempts to name them according to their

effects on OPSEC and Controls and does not regard them in a hierarchical format of

severity. Five classifications are designated to represent all types of limitations.

OpenTC Deliverable 07.02

19/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

1. Vulnerability is a flaw or error that: (a) denies access to assets for authorized

people or processes, (b) allows for privileged access to assets to unauthorized

people or processes, or (c) allows unauthorized people or processes to hide

assets or themselves within the scope.

2. Weakness is a flaw or error that disrupts, reduces, abuses, or nullifies

specifically the effects of the interactivity controls authentication,

indemnification, resistance, subjugation, and continuity.

3. Concern is a flaw or error that disrupts, reduces, abuses, or nullifies the effects

of the flow or execution of process controls non-repudiation, confidentiality,

privacy, integrity, and alarm.

4. Exposure is an unjustifiable action, flaw, or error that provides direct or indirect

visibility of targets or assets within the chosen scope channel of the security

presence.

5. Anomaly is any unidentifiable or unknown element which cannot be accounted

for in normal operatio

ns.

The concept that limitations are only limitations if they have no justification in

business or otherwise is false. A limitation is a limitation if it behaves in one of the

limiting factors as described here. A justification for a limitation is a risk decision and

one that is either met with a control of some kind even if that control is merely

acceptance. Risk decisions that accept the limitations as they are often come down

to: the damage a limitation can do does not justify the cost to fix or control the

limitation, the limitation must be so according to legislation, regulations, or policy, or a

conclusion that the threat does not exist or is likely for the particular limitation. Risk

justifications do not enter in the RAV metrics and all limitations should be counted as

discovered regardless if best practice, common practice, or legal practice denotes it as

not an acceptable risk. For the metric to be a true representation of the operational

security of the scope, for the ability of future risk assessments to be performed with

the metric as a basis, and for proper controls to be used to offset even those risks

deemed necessary for legislative reasons, the auditor must report the operational

security state as it is.

Another concept that must be taken into consideration is one of managing flaws and

errors in an audit. An audit will often uncover more than one flaw per target. The

auditor is to report the flaws per target and not the weak targets. These flaws may be

in the protection measures and controls themselves diminishing actual security. Each

flaw is to be rated as to what occurs when the flaw is invoked even if that must be

theoretical or of limited execution to restrict actual damages. Theoretical

categorization, where operation could not take place, is a slippery slope and should

really only be limited in the case of a medium to high risk of actual damages or where

recovery from damage is difficult or requires a long time period. When categorizing

the flaws, each flaw should be examined and calculated in specific terms of operation

at its most basic components. However, the auditor should be sure never to report a

“flaw within a flaw” where the flaws share the same component and same operational

effect.

The Security Limitations are individually weighted. The weighting of the

Vulnerabilities, Weaknesses and Concerns are based on a relationship between the

Porosity or

sum

OpSec

and the Loss Controls.

OpenTC Deliverable 07.02

20/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

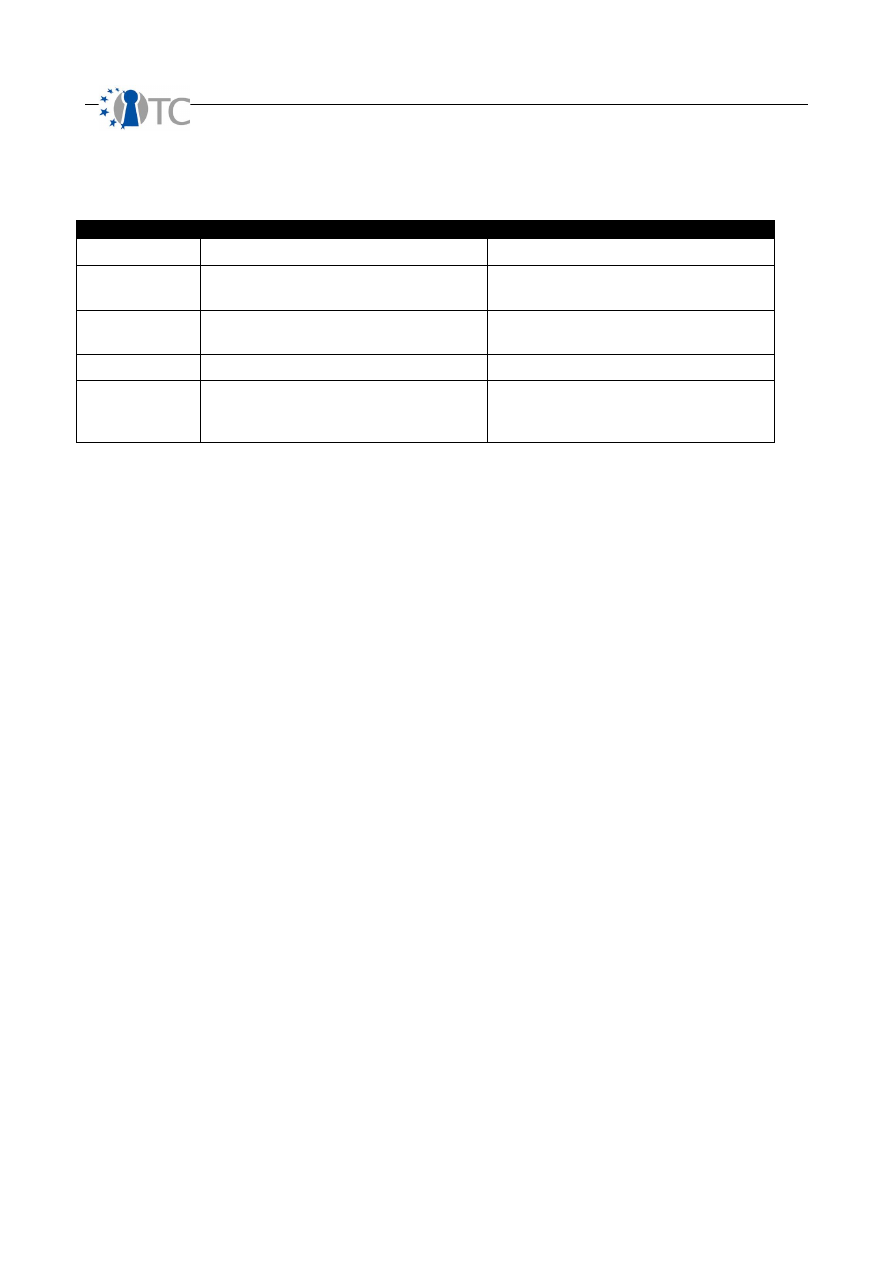

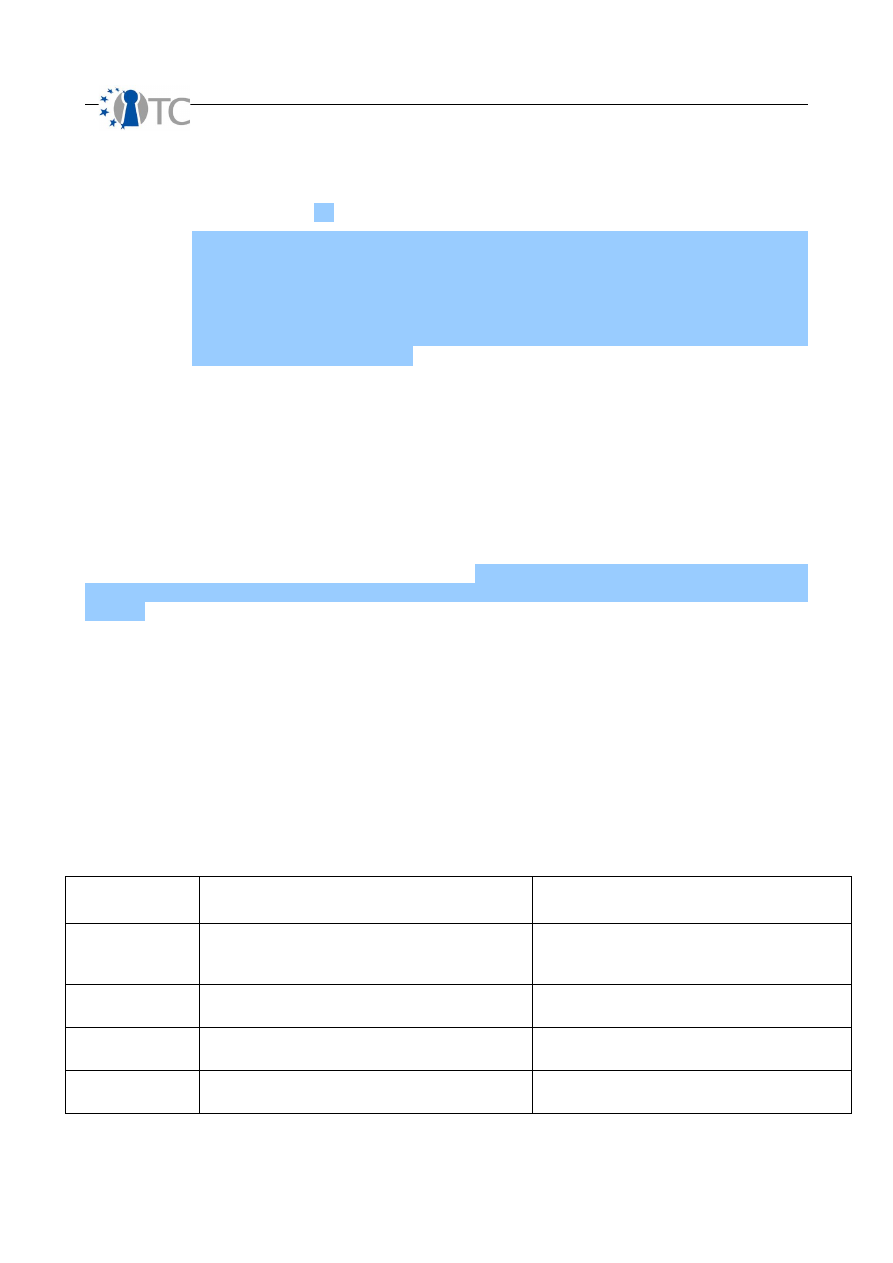

The following value table is used to calculate the

sum

SecLim

variable, as

an intermediate step between the Security Limitation inputs and the

base

SecLim

variable, which is the Security Limitations basic input for the RAV equation.

Input

Weighted Value

Variables

Vulnerability

(

)

(

)

1

10

log

+

+

sum

sum

MC

OpSec

sum

MC

: sum of Missing Controls

Weakness

(

)

(

)

1

10

log

+

+

A

sum

MC

OpSec

A

MC

: sum of Missing Controls in

Control Class A

Concern

(

)

(

)

1

10

log

+

+

B

sum

MC

OpSec

B

MC

: sum of Missing Controls in

Control Class B

Exposure

( )

(

)

1

10

log

+

V

V

: sum of Visibility

Anomaly

(

)

(

)

1

10

log

+

+

MCa

V

V

: sum of Visibility

A

MC

: sum of Missing Controls in

Control Class A

Security Limitations Base

sum

SecLim

is then calculated as the aggregated total of each input multiplied by its

corresponding weighted value as defined in the table above. The Security Limitations

base equation is given as:

base

SecLim

(

)

(

)

(

)

2

100

1

10

log

×

+

×

=

sum

SecLim

OpenTC Deliverable 07.02

21/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

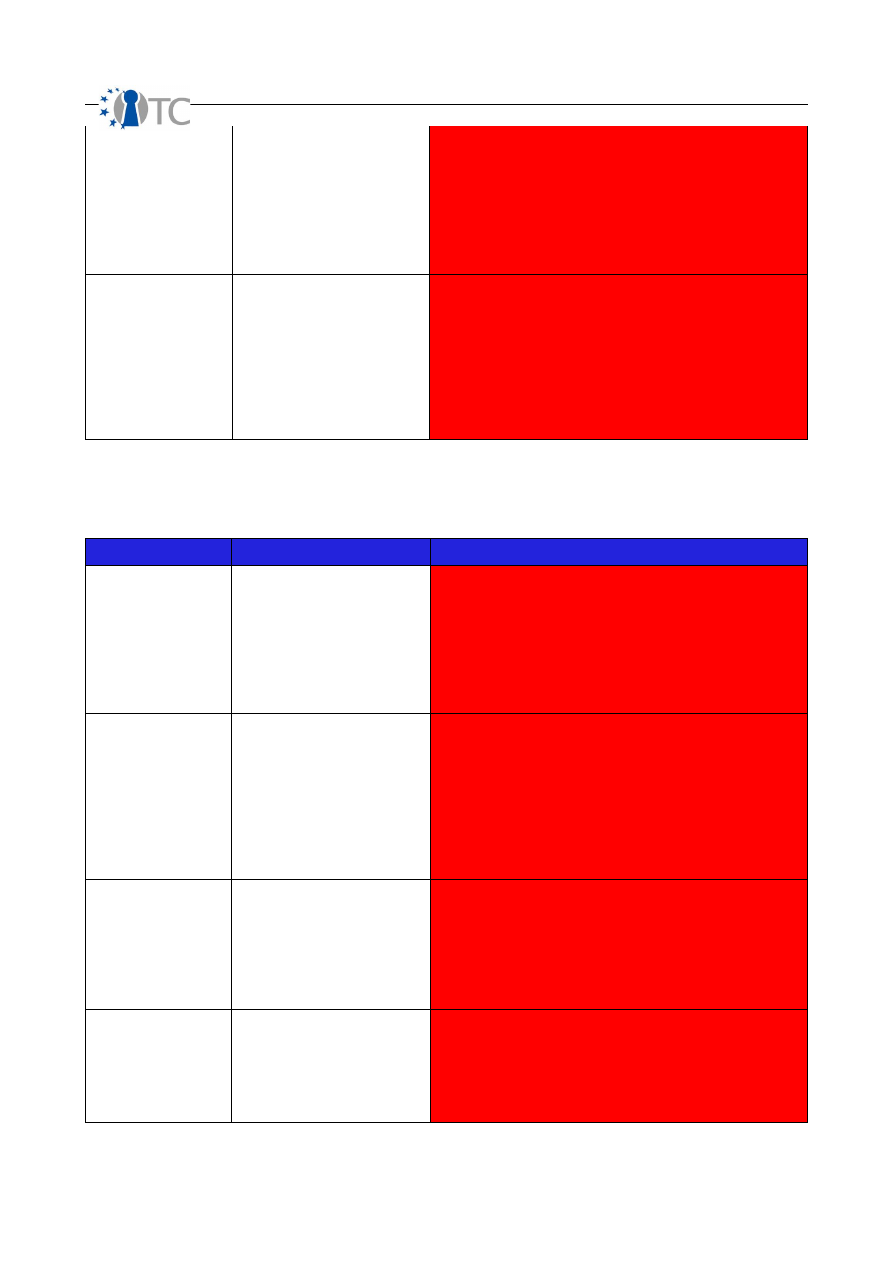

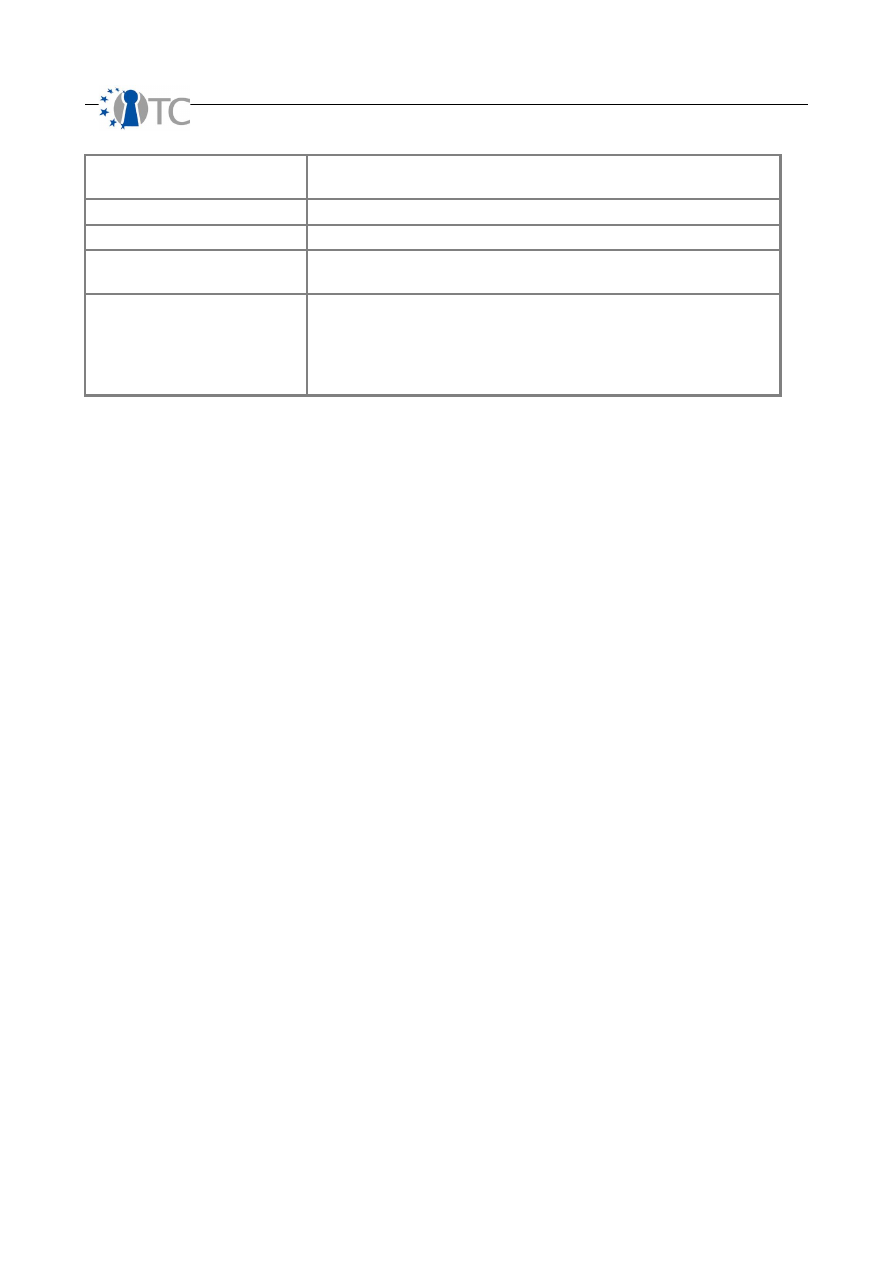

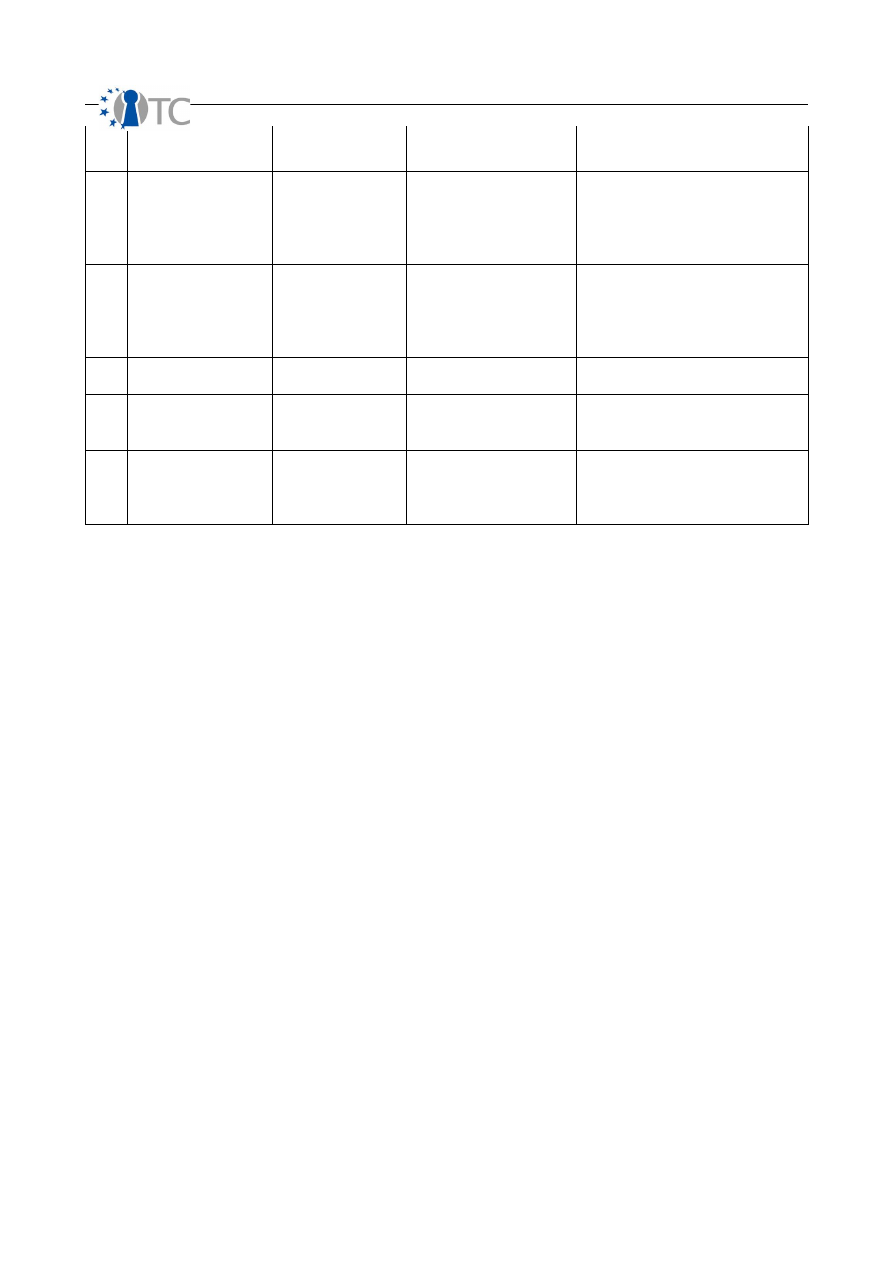

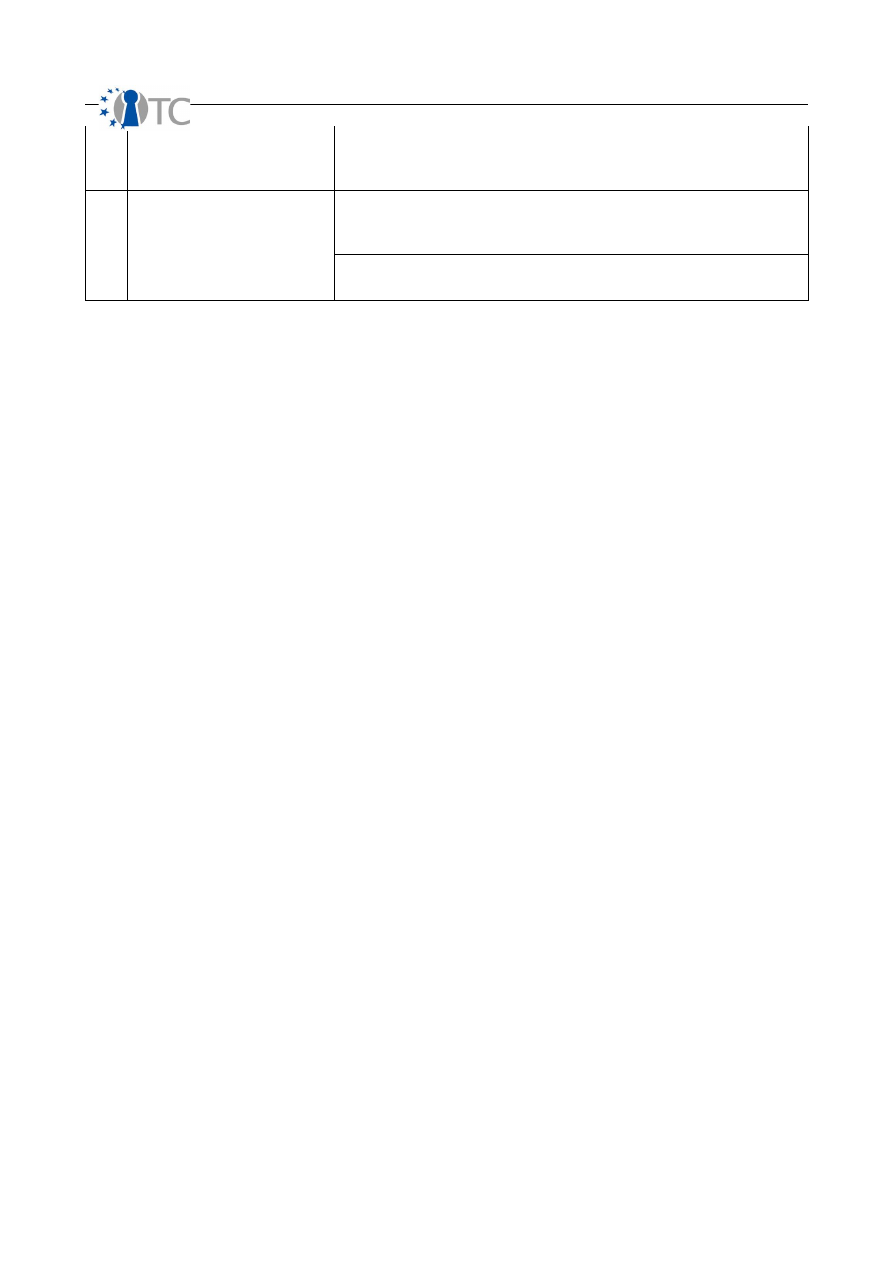

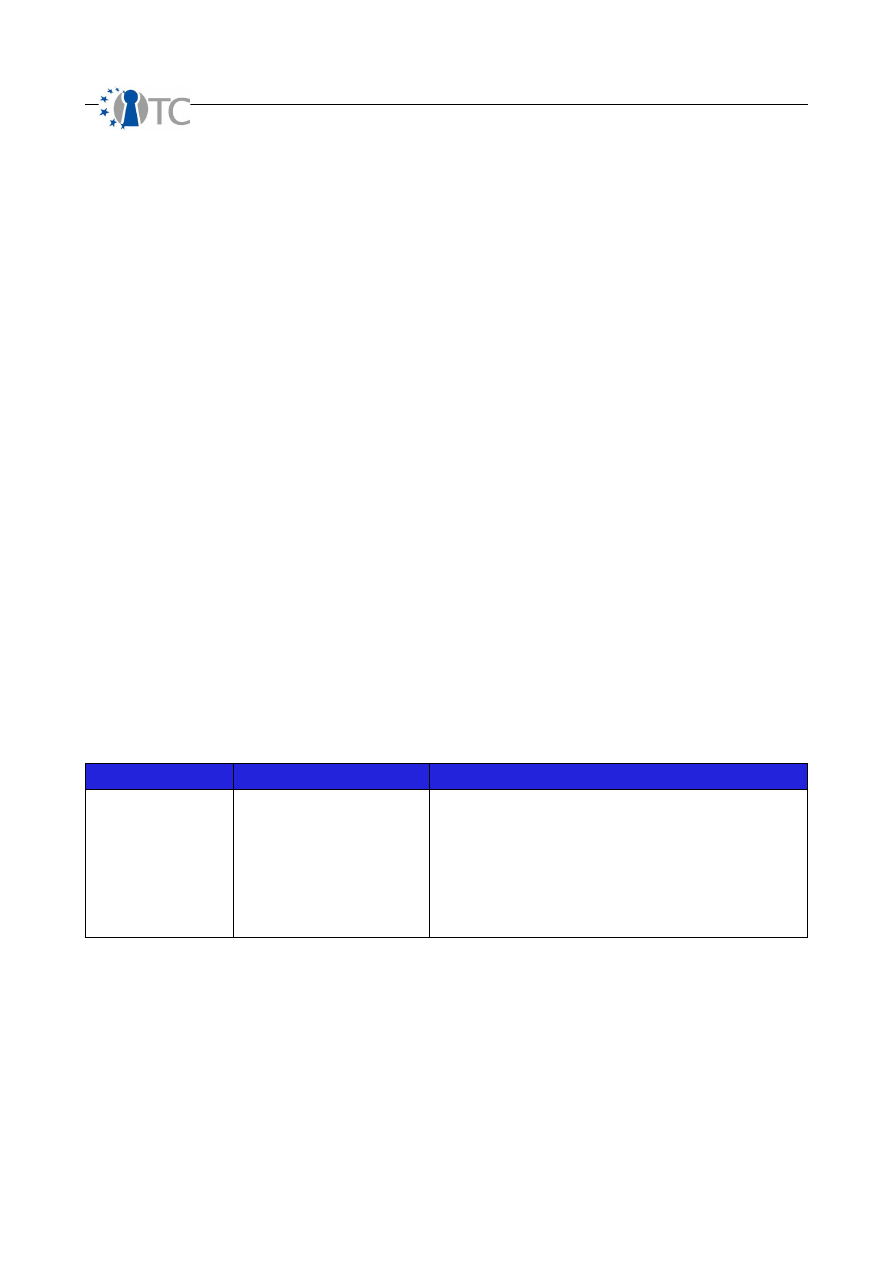

Table 4: Calculating Security Limitations

Limitations Categories

Auditing and Examples

Vulnerability

Count separately each flaw or error that that defies protections whereby a person or

process can access, deny access to others, or hide itself or assets within the scope.

In PHYSSEC, a vulnerability can be such things as a simple glass door, a metal gate

corroded by the weather, a door that can be sealed by wedging coins into the gap

between it and its frame, electronic equipment outdoors not sealed from pests such as

ants or mice, a bootable cd-rom drive on a PC, or a process that allows an employee to

take a trashcan large enough to hide or transport assets out of the scope.

In HUMSEC, a vulnerability can be a cultural bias that does not allow an employee to

question others who do not look like they belong there or a lack of training which leaves

a new secretary to give out business information classified for internal use only to a

caller.

In COMSEC data security, a vulnerability can be such things as a flaw in software that

allows an attacker to overwrite memory space to gain access, a computation flaw that

allows an attacker to lock the CPU into 100% usage, or an operating system that allows

enough data to be copied onto the disk until it itself can't operate anymore.

In COMSEC telecommunications, a vulnerability can be a flaw in the pay phone system

that allows sounds through the receiver mimic coin drops, a telephone box that allows

anyone to access anyone else's phone line, a voice mail system that provides messages

from any phone anywhere, or a FAX machine that can be polled remotely to resend the

last thing in memory to the caller's number.

In SPECSEC, a vulnerability can be hardware which can be overloaded and burnt out

by higher powered versions of the same frequency or a near frequency, a standard

receiver without special configuration which can access the data in the signal, a

receiver which can be forced to accept a third-party signal in place of the intended

one, or a wireless access point dropping connections from a nearby microwave oven.

Weakness

Count each flaw or error in the controls for interactivity: authentication, indemnification,

resistance, subjugation, and continuity.

In PHYSSEC, a weakness can be such things as a door lock that opens when a card is

wedged between it and the door frame, a back-up generator with no fuel, or insurance

that doesn't cover flood damage in a flood zone.

In HUMSEC, a weakness can be a process failure of a second guard to take the post of

the guard who runs after an intruder or a cultural climate within a company for allowing

friends into posted restricted spaces.

In COMSEC data security, a weakness can be such things as login that allows unlimited

attempts or a web farm with round-robin DNS for load balancing although each system

has also a unique name for direct linking.

In COMSEC telecommunications, a weakness can be a flaw in the PBX that has still the

default administration passwords or a modem bank for remote access dial-in which

does not log the caller numbers, time, and duration.

In SPECSEC, a weakness can be a wireless access point authenticating users based on

MAC addresses or a RFID security tag that no longer receives signals and therefore fails

“open” after receiving a signal from a high power source.

OpenTC Deliverable 07.02

22/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

Concern

Count each flaw or error in process controls: non-repudiation, confidentiality, privacy,

integrity, and alarm.

In PHYSSEC, a concern can be such things as a door lock mechanism whose operation

controls and key types are public, a back-up generator with no power meter or fuel

gage, an equipment process that does not require the employee to sign-out materials

when received, or a fire alarm not loud enough to be heard by machine workers with

ear plugs.

In HUMSEC, a concern can be a process failure of a guard who maintains the same

schedule and routine or a cultural climate within a company that allows employees to

use public meeting rooms for internal business.

In COMSEC data security, a concern can be the use of locally generated web server

certificates for HTTPS or log files which record only the transaction participants and not

the correct date and time of the transaction.

In COMSEC telecommunications, a concern can be the use of a FAX machine for

sending private information or a voice mail system that uses touch tones for entering a

PIN or password.

In SPECSEC, a concern can be a wireless access point using weak data encryption or an

infrared door opener that cannot read th sender in the rain.

Exposure

Count each unjustifiable action, flaw, or error that provides direct or indirect visibility of

targets or assets within the chosen scope channel of the security presence.

In PHYSSEC, an exposure can be such things as a window which allows one to view

assets and processes or an available power meter that shows how much energy a

building uses and its fluctuation over time.

In HUMSEC, an exposure can be a guard who allows all visitors to view the sign-in sheet

with all the other visitors listed on it or a company operator who informs callers that a

particular person is out sick or on vacation.

In COMSEC data security, an exposure can be a descriptive and valid banner about a

service (disinformation banners are not exposures) or a ICMP echo reply from a host.

In COMSEC telecommunications, an exposure can be an automated company

directory sorted by alphabet allowing anyone to cycle through all persons and numbers

or a FAX machine that stores the last dialed numbers.

In SPECSEC, an exposure can be a signal that disrupts other machinery announcing its

activity or an infrared device whose operation is visible by standard video cameras with

night capability.

OpenTC Deliverable 07.02

23/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

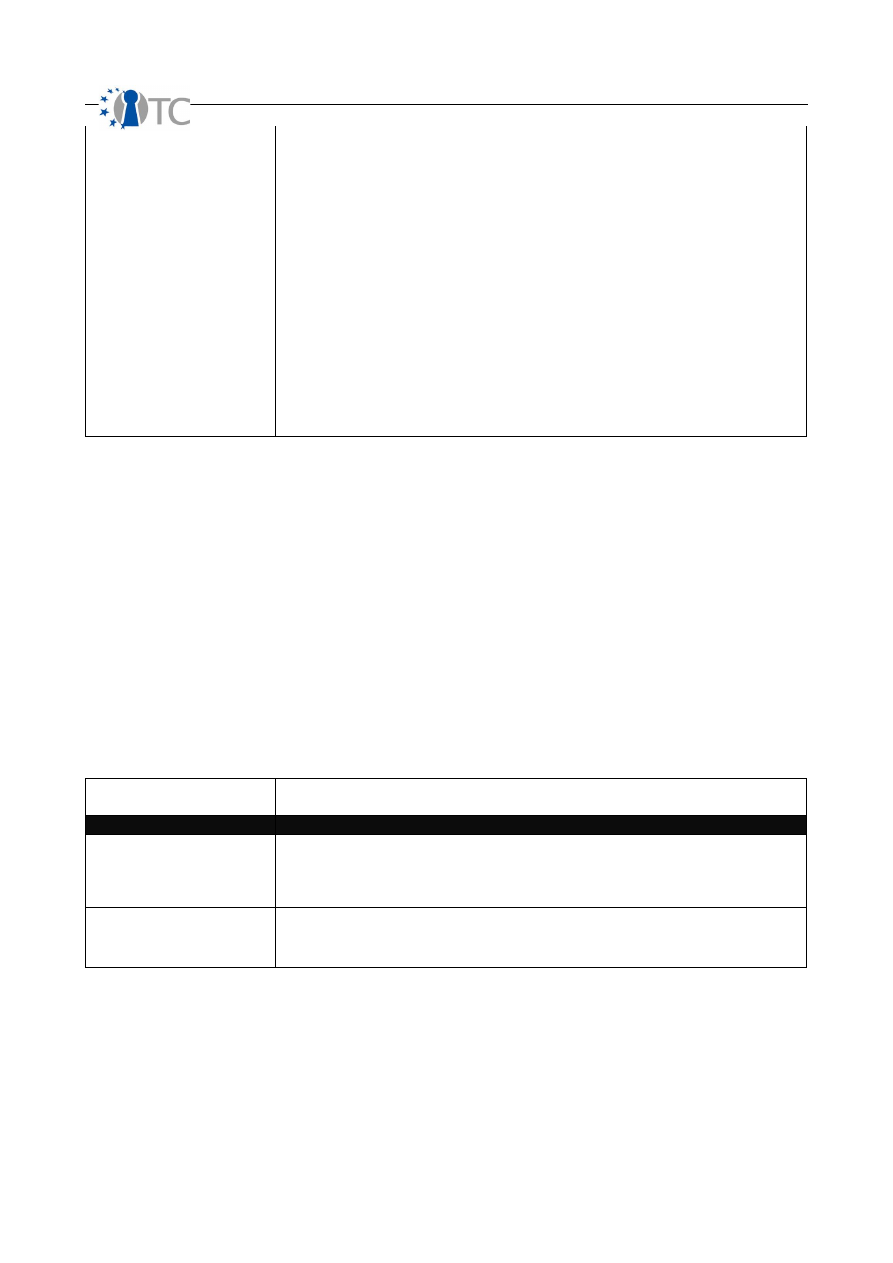

Anomaly

Count each unidentifiable or unknown element which cannot be accounted for in

normal operations, generally when the source or destination of the element cannot be

understood. An anomaly may be an earl sign of a security problem. Since unknowns

are elements which cannot be controlled for, a proper audit requires noting any and all

anomalies.

In PHYSSEC, an anomaly can be dead birds discovered on the roof a building around

communications equipment.

In HUMSEC, an anomaly can be questions a guard asks which may seem irrelevant to

either the job or standard small talk.

In COMSEC data security, an anomaly can be correct responses to a probe from a

different IP address than was probed or expected.

In COMSEC telecommunications, an anomaly can be a modem response from a

number that has no modem.

In SPECSEC, an anomaly can be a powerful and probably local signal that appears

once momentarily but not long enough to locate the source.

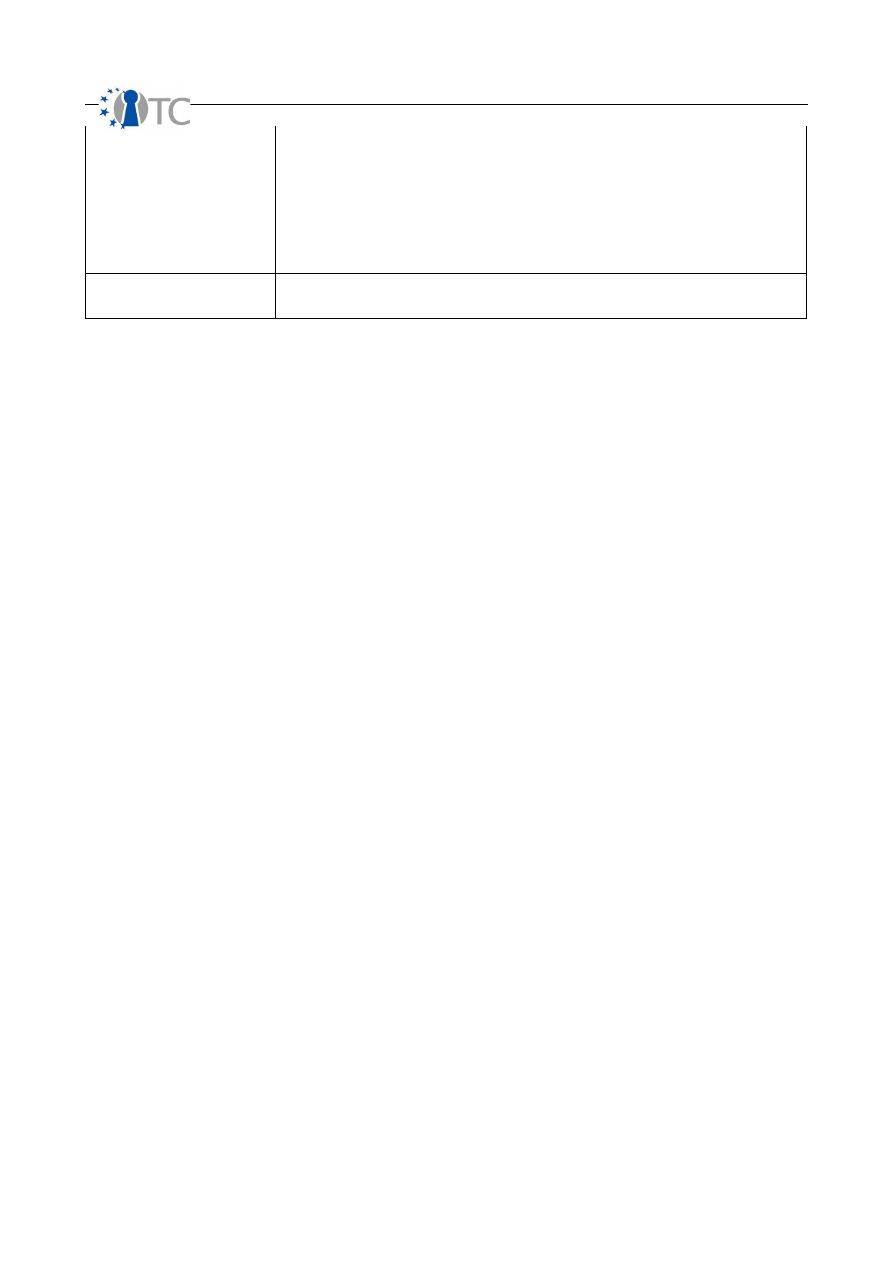

Actual Security

To measure the current state of operations with applied controls and discovered

limitations, a final calculation is required to define Actual Security. As implied by its

name this is the whole security value which combines the three values of operational

security, controls, and limitations to show the actual state of security.

The purpose of Actual Security is to condense the three combined values into a simple

metric value percentile that can be used to rate operational security effectiveness and

provide a method of comparison, scoring, and rating. This big picture approach is

effective because it does not simply show how one is prepared for threats but how

effective one's preparations are against threats.

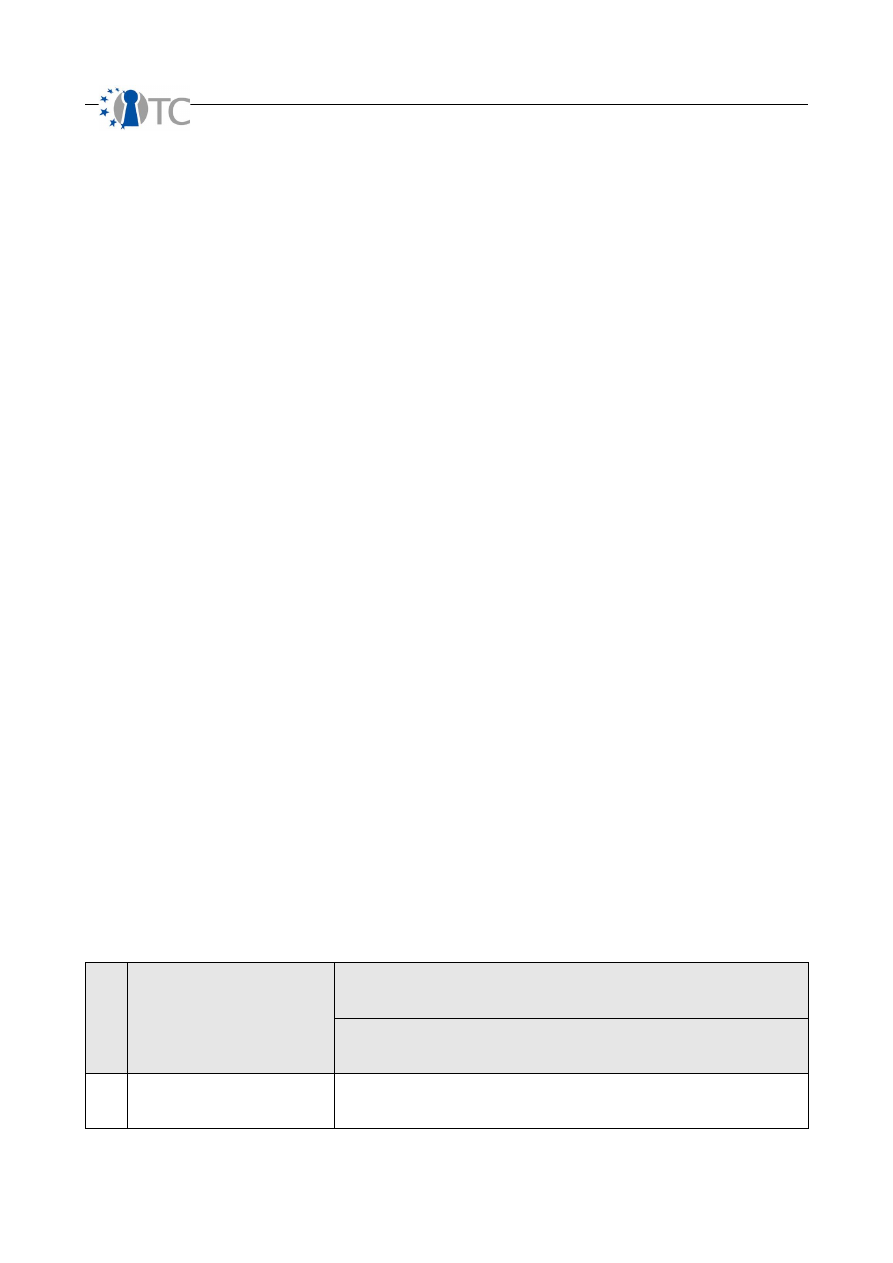

Table 5: Calculating Actual Security

Actual Security

Categories

Descriptions

Actual Delta

The actual security delta is the sum of Op Sec Delta and Loss Controls Delta and

subtracting the Security Limitations Delta. The Actual Delta is useful for comparing

products and solutions by previously estimating the change (delta) the product or

solution would make in the scope.

Actual Security (Total)

Actual security is the true (actual) state of security provided as a hash of all three

sections and represented in a percentage where 100% represents a balance of controls

for interaction points to assets with no limitations.

The Actual Delta is useful for comparing products and solutions by previously

estimating the change (delta) the product or solution would make in the scope. We

can find the Actual Security Delta,

∆

ActSec

, with the formula:

base

base

base

ActSec

OpSec

LC

ActSec

−

−

=

∆

.

OpenTC Deliverable 07.02

24/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

To measure the current state of operations with applied controls and discovered

limitations, a final calculation is required to define Actual Security. As implied by its

name this is the whole security value which combines the three values of operational

security, controls, and limitations to show the actual state of security.

Actual Security

(total),

ActSec

, is the true state of security provided as a hash of all

three sections and represented in a percentage where 100% represents a balance of

controls for interaction points to assets with no limitations. The final RAV equation for

Actual Security is given as:

(

)

) (

)

(

(

)

)

(

((

) )

) )

(

(

01

.

100

01

.

100

100

×

×

+

−

−

×

×

−

+

−

=

base

base

base

base

base

base

ActSec

LC

OpSec

LC

OpSec

OpSec

ActSec

3.4 Trust metrics

AVIT, the Applied Verification for Integrity and Trust methodology will be both a sequence

of proper and thorough testing as well as a guide for the application of trust. A

methodology is useful reproducibility is a key component to a task. An open methodology

is necessary when the means of reproducing the results must be transparent. This

methodology, AVIT, the Applied Verification of Integrity and Trust, is a core component of

the OpenTC project because the test subject deals with privacy issues therefore the

methods of assuring the privacy issues are well tested requires a transparent, reproducible

test method. And a strong methodology of operation tests to standardize on should be

able to provide meaningful, unbiased metrics.

AVIT follows the work which has been developed for the OSSTMM (Open Source Security

Testing Methodology Manual) which is an open, security testing standard. The OSSTMM

provides the flexible security testing methodology which can be conformed under AVIT to

provide a security test of the full range of OpenTC components, the Linux OS on which it

resides, and the networked environment from multiple vectors. Thereby it will allow

OpenTC to quantify the integrity and ultimately the trust value of the OpenTC system.

Furthermore, it will provide a means and a gage by which the public can understand trust.

The basis to measuring Trust is to relate it to integrity, that thing that tells us something is

still “all right”. Integrity is a subset of security. It is a loss control and when applied will

determine if a change has occurred, intended or not. Integrity tests will need to be made

with the security tests to determine that the system as a whole is mostly free of holes (no

porosity) and that this condition does not change with the introduction of the various

OpenTC components. A high integrity score should validate that a strong chain of trust is

in place meaning that the point of origin and manufacture for each component in the

system, either software or hardware, can be identified, verified, and assured that no

change has taken place upon the intended, displayed, and non-malicious state of the

device.

This means in AVIT in order to show a trust level we need to prove its level of operational

integrity. And to prove it's level of integrity we need to determine its level of porosity, the

lack of security as a protection mechanism. Therefore the methodology provided by AVIT

will consist of:

OpenTC Deliverable 07.02

25/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

•

Four Point Process: the process of a thorough security test of operations,

•

Error Types: the process of recognizing causes of errors in operational tests,

•

Security Testing Methodology: the sequence of operational security tests,

•

Security Metrics: the means to calculate security,

•

Integrity Testing Methodology: the sequence of trust tests,

•

Trust Metrics: the means to calculate trust levels based on integrity and security

tests

The difficulty of this research and subsequent tests and metrics is in the nature of trust

and integrity to be closely tied to security but is also not affected by it should inaccuracies

or a high level of porosity be determined within it. Partly this has to do with human

psychology and the human irrationality when it comes to trust which is a known element

already profitably exploited in advertising, gambling, and politics. Therefore prior studies

in psychology, marketing, game theory, economics, and political science are evaluated

concurrently with the published articles within the project focus of Trusted Computing.

Current research has shown trust is obtainable quantitatively through the manipulation of

several elements known as the Trust Rules. The Trust Rules are:

1. Scope: the superset which contains the target (number of items/people/processes

to be trusted) and all of the components of those targets. This defines the scope of

trust and all inclusive parts of what needs to be trusted. This is like defining how

and where you see a tree where tree is the term for roots, branches, trunk, sap,

leaves, and all the other parts that make up a tree.

2. Symmetry of trust: the vector (direction) of the trust. It may be one way

(asymmetrical) and defined as to which way the trust must travel or both ways

(symmetrical).

3. Transparency: the level of visibility of all parts of the scope and the operating

environment of the target.

4. Control: the amount and direction of influence over the scope by the operator(s)

(also known as subjugation).

5. Historical consistency: the use of time as a measure of integrity by examining prior

operations and behaviours of the target.

6. Integrity: the amount and timely notice of change within the target.

7. Offsets of sufficient assurance: the comparison of the amount that which the value

placed within the target to the value of compensation to the operator or

punishment to the target should the trust fail.

8. Value of reward: the amount of gain for which trust in the target is sufficient for the

risk.

9. Chain of trust: the verification of the origins and influences over the target prior to

its current state. The further back to the origins of the target, the greater the

likelihood malicious players or foul play can be determined.

10. Adequacy of security, controls, and limitations: the amount and effectiveness of

protection levels can tell the actual state of the target's integrity.

A major part of the Trust Rules requires a documented and verifiable process chain

starting at the distributors and moving backwards in the process to the manufacturers,

coders, and architects. This part of the integrity test alone provides a huge challenge to

Open Source software developers as such a process is rarely followed properly. However,

without it, there can be only a very short chain of trust and the inability to fully verify

integrity.

Interestingly, security, is only a small part within those rules. Rational decision making

where it pertains to trust often does not include security because it is often mistakenly

confused for feelings of risk and can therefore be often satisfied by rule no. 8. This is

OpenTC Deliverable 07.02

26/100

D07.2 V&V Report #2: Methodology definition, analyses results and certification 1.2

notable when we compare trust with security. The need to gain security acceptance,

where acceptance is the same goal to achieve as in trust, they follow a pyramid notably

similar to the advertising pyramid which categorizes a linear acceptance of a message as

such:

1. Awareness --> 2. Comprehension --> 3. Conviction --> 4. Desire --> 5. Action

1. A need for security is communicated in the media or through sales channels which

generates awareness, often promoting fear, uncertainty, or doubt (Awareness).

2. The need for security is taught or mandated through regulations and legislation

(Comprehension).

3. Incentives and punishments are used to convince the need to apply the appropriate

security measures (Conviction).

4. Security becomes desired often as a result of a problem or an unfavorable risk

assessment (Desire).

5. Security is seen as necessary and applied with the motivation to maintain security

levels (Action).

However, in a trust model, there is no pyramid. It is not linear. There is no building

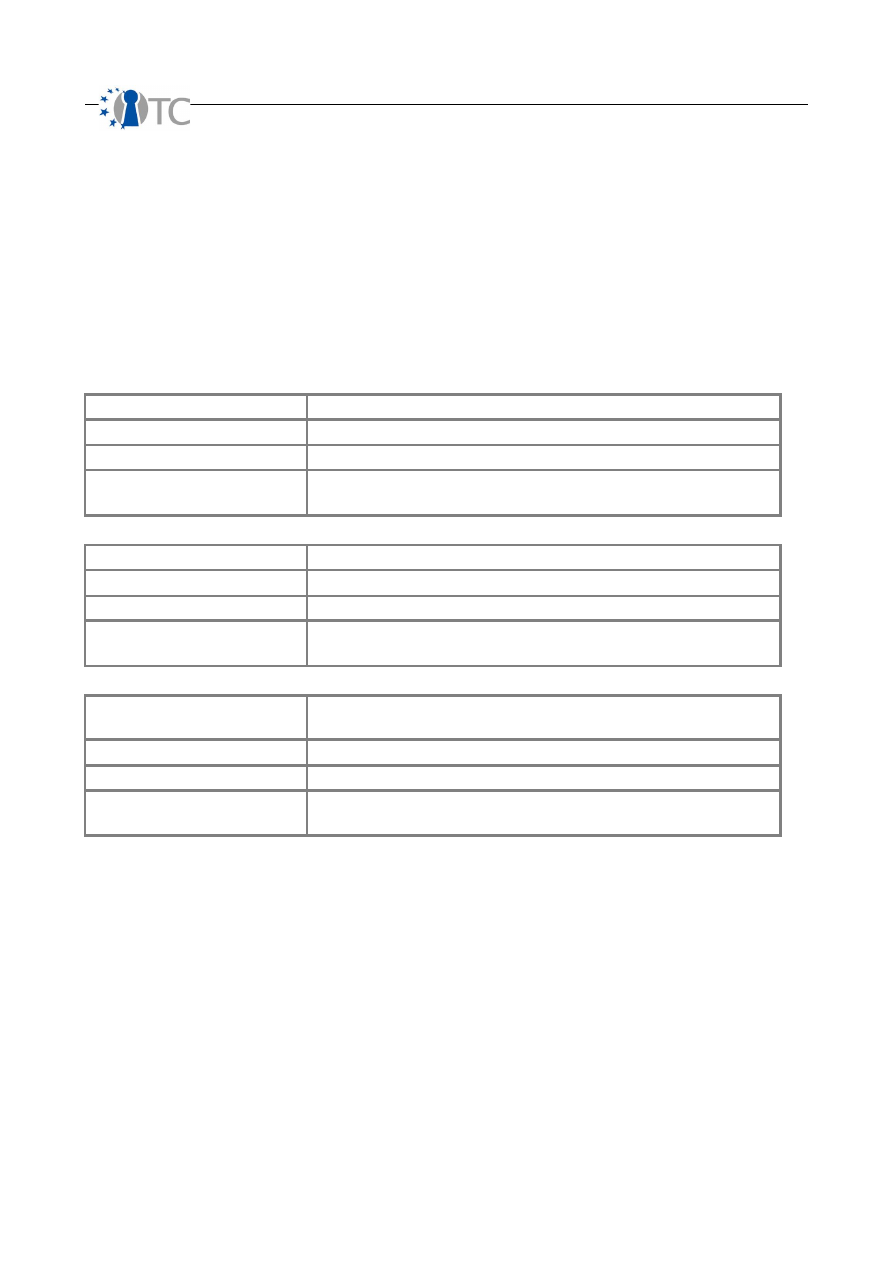

towards trust in a particular order or even an effort value system where it can be